Model Forecasting of Hydrogen Yield and Lower Heating Value in Waste Mahua Wood Gasification with Machine Learning

Abstract

Biomass is an excellent source of green energy with numerous benefits such as abundant availability, net-zero carbon emissions, and renewability. However, conventional biomass combustion is polluting and inefficient. Biomass gasification overcomes these challenges and provides a sustainable way to supply a greener fuel in the form of producer gas, which can be used as a gaseous fuel in compression ignition engines operating in dual-fuel mode. Biomass gasification is a complex, nonlinear process that depends strongly on the ambient environment, the type and composition of the biomass, and the gasification medium. This makes modeling such systems difficult and time-consuming. Modern machine learning (ML) techniques offer a convenient, data-driven approach to modeling and forecasting such systems from experimental data. In the present study, two modern and highly efficient ML techniques, random forest (RF) and AdaBoost, were employed for this purpose, and their outcomes were compared with those of a baseline method, linear regression. RF forecast the hydrogen yield with an R2 of 0.978 during model training and 0.976 during model testing. AdaBoost was close behind, with R2 of 0.948 during training and 0.842 during testing. The mean squared error of the RF model was as low as 0.17 and 0.181 during training and testing, respectively. For the lower heating value (LHV) model, RF achieved an R2 of 0.977 during training and 0.971 during testing, compared with 0.93 and 0.814, respectively, for AdaBoost. Both ML techniques performed markedly better than linear regression, and RF was the best of the three.

1. Introduction

There is a global shortage of economical, low-pollution energy supply. At the same time, the world is suffering from incessant rain, global warming, and flash floods caused by changing weather patterns. The use of fossil fuels in transportation and industry is held responsible for these phenomena [1, 2]. Biomass energy is a valuable and eco-friendly form of energy derived from organic materials such as plant and animal waste, agricultural crops, and dedicated energy crops [3, 4]. This sustainable energy source captures solar energy through photosynthesis and converts it into useful products including electricity, heat, and biofuels. As a carbon-neutral alternative to fossil fuels, biomass plays a vital role in mitigating climate change [5, 6].

Biomass is a crucial element in driving progress toward the Sustainable Development Goals (SDGs). Biomass helps provide affordable, reliable, and sustainable energy for all (SDG 7) [7, 8]. It also helps reduce the ill effects of climate change (SDG 13) while encouraging sustainable land use (SDG 15). Biomass offers a sustainable way to mitigate greenhouse gas (GHG) emissions and decrease our reliance on fossil fuels [9, 10]. The efficient utilization of biomass contributes to the global agenda of achieving net-zero emissions targets. The production and use of bioenergy support local communities, enhance energy security, and promote economic growth [11, 12]. By integrating biomass into sustainable energy systems and land management approaches, society can make significant strides toward a fairer, more adaptable, and environmentally responsible future, in line with the SDGs [13, 14].

Burning or otherwise converting biomass emits carbon dioxide, but this is offset by the carbon dioxide absorbed by the plants during their growth, resulting in a closed carbon cycle. Biomass energy systems help reduce waste and provide an environmentally beneficial way of dealing with organic residues [15, 16]. Furthermore, the diversity of biomass feedstocks enables a flexible and decentralized energy production strategy, making biomass an essential component of a renewable and diverse energy portfolio [17, 18]. As technology improves, innovations in biomass energy continue to raise efficiency, minimize environmental impacts, and widen the range of its uses, confirming its role in the worldwide transition to more environmentally conscious and sustainable energy alternatives [19, 20].

Biomass gasification is the thermochemical conversion of biomass into producer gas. It takes place in a specially designed reactor, the biomass gasifier, in which the supply of the gasification medium (air or steam) can be controlled [21, 22]. The producer gas leaving the gasifier is hot and laden with particles; hence, it must be cooled and cleaned before being supplied to an engine. The cleaned producer gas can then be used as a gaseous fuel in a diesel engine in either single-fuel or dual-fuel (DF) mode [23, 24].

Several researchers have reported work on the gasification of waste biomass in downdraft and other types of gasifiers, as listed in Table 1.

| Biomass | Type of gasification | Analytical/prognostic | Main outcomes | Source |
|---|---|---|---|---|
| Timber, garden, and paper biomass waste | Downdraft air gasifier | Analytical | | Safarian et al. [25] |
| Pine biomass pellets and RDF char | Bubbling fluidized bed gasifier | Analytical | The yield of PG varied from 1.5 to 2.5 m3/kg | Nobre et al. [26] |
| Woody biomass and food waste | Fixed bed tubular reactor | Analytical | A peak LHV of 11.03 MJ m−3 was achieved during gasification | Nagy and Dobo [27] |
| Biomass and municipal solid waste (MSW) | Fixed bed reactor | Analytical | It was observed that equivalence ratio and higher temperature | Cai et al. [28] |
| String bean stalk, potato stalk, and tomato stalk biomass | Steam gasification | Prognostic study | C content and CO value showed a linear response to syngas LHV, with R2 values of 0.533 and 0.864, respectively | Li et al. [29] |
| Waste biomass, polypropylene, and polyethylene terephthalate | Cogasification | Analytical | Addition of plastic waste increased the LHV of syngas to 5.78 MJ m−3 | Montoya et al. [30] |
| Waste wood, carton, tire, plastic, and weed | Cogasification in downdraft gasifier | Analytical | A peak yield of 92.1% wt. could be achieved | Salavati et al. [31] |

Fazil et al. [32] employed refuse-derived fuel (RDF) with a high concentration of paper waste as the feedstock in a downdraft gasifier. Cogasification of RDF with sawdust was studied as a way to lower the total ash content of the blend. When the RDF proportion in the feedstock is large, cogasification shows a synergy between RDF and sawdust. During cogasification, a peak lower heating value of 4.65 MJ/Nm3 was attained, and the tar output decreased from 13.6 to 8.8 g/Nm3 as the RDF share in the feed rose from 25% to 75% (w/w). Awais et al. [33] investigated cogasification in a 24 kWe downdraft gasifier, using a 50:50 blend of wood chips and maize cobs and a 50:50 blend of sugarcane bagasse and coconut shells. The performance of the gasification process was assessed at varying biomass ratios, with emphasis on gasification efficiency, reactor temperature profiles, and the impact of the equivalence ratio on tar levels and syngas composition. The experiments were conducted at 650–950°C with equivalence ratios between 0.15 and 0.30. The study found that the sugarcane bagasse and coconut shell blend was an effective feedstock mixture for gasification, and that the suitability of the syngas for power generation depends on tar formation, syngas flow rate, and gasification efficiency in a downdraft gasifier.

While numerous experimental studies have been reported in this domain, modeling studies show that gasification is a complex process. Modern ML techniques, together with enhanced computational power, are capable of handling this task [34]. Machine learning is a subfield of artificial intelligence in which algorithms allow computers to learn from data without being explicitly programmed. Machine learning approaches can be useful in modeling and forecasting studies of biomass gasification in a downdraft gasifier [6, 35]. These algorithms can analyze the large datasets generated by the gasification process and discover patterns and correlations between the various input parameters and output responses [36]. Machine learning models can then generate predictions or classifications based on previous data, assisting in optimizing gasification process parameters, forecasting gas composition, and improving overall efficiency. Because machine learning models can be retrained as fresh data become available, they are a valuable tool for optimizing and understanding complicated biomass gasification systems [37, 38, 39].

The application of conventional ML techniques such as artificial neural networks (ANN) and fuzzy methods is quite common in several fields of engineering, including biomass gasification [40, 41, 42]. Kargbo et al. [43] employed an ANN to optimize the operation of a two-stage downdraft gasifier. In this study, an ANN model was constructed and validated with experimental data to forecast and optimize the gasification process, saving development and testing time and costs. With R2 > 0.99, the model reliably forecast the gas composition and yield, tracking the fluctuations in the output. The trained network was then used to optimize the two-stage gasification operating parameters for high carbon conversion, high hydrogen output, and low carbon dioxide in nitrogen and carbon dioxide environments. Cerinski et al. [44] developed a dynamic model for predicting the biomass gasification process. They investigated the use of nonlinear autoregressive networks with exogenous inputs (NARX) to forecast the gasification process when only a limited quantity of experimental data is available, including an open-loop NARX network for online prediction of syngas composition in a downdraft gasifier at various data recording frequencies. The results revealed that reducing the data collection frequency increased the prediction error.

The literature review reveals numerous published reports on experimental biomass gasification, as well as studies using conventional ML methods. However, the field of ML is evolving rapidly, and more efficient and highly precise ML techniques are now available. Hence, the present study applies modern ensemble ML approaches, namely random forest (RF) and adaptive boosting (AdaBoost). The model outcomes were compared with a baseline method, linear regression (LR), using a set of statistical metrics. Furthermore, graphical methods, namely violin plots and Taylor’s diagrams, were used for model comparison.

2. Materials and Methods

2.1. Test Downdraft Gasifier

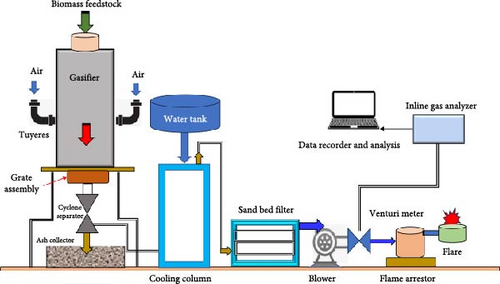

A fixed bed downdraft gasifier was employed in the study. It consists of a cylindrical reactor with a fixed bed. A shakable, adjustable grate assembly holds the biomass and allows effective ash removal at intervals. Air is supplied through horizontal tuyeres fitted on the gasifier body. The lower part of the reactor acts as the combustion zone, allowing the controlled entry of additional air or oxygen. To withstand the high temperatures created during gasification, the fixed bed is typically made of heavy-gauge steel plates. The biomass is fed from the top and moves downward during the process. A gas filter comprising three sand-bed stages, located downstream of the grate assembly, was used for gas cleaning. A twin-column cooling tower cools the producer gas. An inline gas analyzer is used to determine the hydrogen content of the resulting producer gas (PG), and the PG composition was also used to estimate its lower heating value (LHV). The arrangement of the test setup is depicted in Figure 1.
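The text states only that the LHV was estimated from the measured PG composition. A common way to do this is the linear mixing rule, in which the gas LHV is the volume-fraction-weighted sum of the LHVs of the combustible components (H2, CO, CH4). The sketch below assumes that rule, typical literature component LHVs in kJ/Nm3, and a hypothetical gas composition; none of these values are taken from the study.

```python
# Approximate volumetric lower heating values of the combustible producer-gas
# components at normal conditions, in kJ/Nm3. These are typical literature values
# assumed for illustration; the study does not state which values it used.
COMPONENT_LHV_KJ_PER_NM3 = {"H2": 10_790.0, "CO": 12_630.0, "CH4": 35_800.0}


def producer_gas_lhv(mole_fractions: dict[str, float]) -> float:
    """Estimate producer-gas LHV (kJ/Nm3) with the linear mixing rule.

    LHV_gas = sum_i x_i * LHV_i, where x_i is the volume (mole) fraction of
    component i. Non-combustible species such as N2 and CO2 contribute zero.
    """
    total = sum(mole_fractions.values())
    if abs(total - 1.0) > 0.01:
        raise ValueError(f"volume fractions should sum to 1, got {total:.3f}")
    return sum(
        x * COMPONENT_LHV_KJ_PER_NM3.get(gas, 0.0)
        for gas, x in mole_fractions.items()
    )


# Hypothetical dry producer-gas composition (volume fractions), for illustration only.
sample_gas = {"H2": 0.18, "CO": 0.20, "CH4": 0.02, "CO2": 0.10, "N2": 0.50}
print(round(producer_gas_lhv(sample_gas), 1))  # 5184.2
```

With these assumed inputs the estimate is about 5.2 MJ/Nm3; inert N2 and CO2 only dilute the gas, which is why the mixing rule gives them zero weight.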

2.2. Biomass Feedstock

Waste mahua wood, derived from the mahua tree (Madhuca longifolia), is a promising biomass feedstock for gasification. Mahua wood, which is widespread in tropical countries, particularly India, is noted for its high energy content and its availability as a byproduct of industries such as mahua seed oil production [45, 46]. In gasification, waste mahua wood is thermochemically converted into a combustible gas mixture. Waste mahua wood has several favorable properties as a biomass fuel. First, its abundance as a byproduct ensures a long-term, readily available supply for gasification, which contributes to waste utilization and environmental sustainability. Second, its high energy content makes it an excellent option for efficient gasification [45, 47, 48].

2.3. Machine Learning for Model Prediction

The present study uses two advanced ML techniques, AdaBoost and RF, for model prediction of LHV and hydrogen yield, with LR as the baseline. The equivalence ratio (ER), gas flow rate (GFR), and residence time (RT) were employed as control factors, and the data for modeling were gathered over a range of settings of these factors.

2.3.1. AdaBoost

In ML-based regression, the aim is to predict continuous numerical values from input characteristics. While conventional regression approaches provide reasonable predictions, their predictive performance drops for nonlinear and complex data [49]. In such cases, ensemble-based approaches offer an efficient way to improve forecast accuracy and robustness. AdaBoost is one such efficient and robust technique, belonging to the family of adaptive ensemble learning approaches. It improves the performance of weak learners, also known as “base estimators,” by assigning variable weights to the samples in the dataset according to how accurately they were predicted. The AdaBoost regressor trains a sequence of base estimators iteratively, with each succeeding estimator concentrating on the errors of its predecessors. Through this iterative improvement, the AdaBoost regressor learns the complex correlations inherent in the data, resulting in greater predictive accuracy [50, 51].

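The fundamentals of AdaBoost regression can be described as follows [52, 53, 54, 55]. Let $M$ be the number of base estimators, $\{(x_i, y_i)\}_{i=1}^{N}$ the $N$ training samples, $h_m(x)$ the $m$th base estimator, and $\alpha_m$ its associated weight.

(1) Initialize the sample weights:

$$w_i^{(1)} = \frac{1}{N}, \qquad i = 1, 2, \ldots, N \tag{1}$$

(2) For each iteration $m = 1, 2, 3, \ldots, M$:

(i) Train a base estimator $h_m(x)$ on the dataset weighted by $w_i^{(m)}$.

(ii) Estimate the weighted error $\epsilon_m$ of the $m$th estimator from the normalized loss $L_i^{(m)} \in [0, 1]$ of each sample:

$$L_i^{(m)} = \frac{\lvert y_i - h_m(x_i) \rvert}{\max_{j} \lvert y_j - h_m(x_j) \rvert}, \qquad \epsilon_m = \sum_{i=1}^{N} w_i^{(m)} L_i^{(m)} \tag{2}$$

(iii) Estimate the importance weight of the $m$th estimator:

$$\alpha_m = \ln\left(\frac{1 - \epsilon_m}{\epsilon_m}\right) \tag{3}$$

(iv) Update the sample weights, so that poorly predicted samples gain relative weight:

$$w_i^{(m+1)} = \frac{w_i^{(m)} \exp\left[-\alpha_m \left(1 - L_i^{(m)}\right)\right]}{Z_m} \tag{4}$$

where $Z_m$ is the normalization factor that ensures the updated weights sum to 1:

$$Z_m = \sum_{i=1}^{N} w_i^{(m)} \exp\left[-\alpha_m \left(1 - L_i^{(m)}\right)\right] \tag{5}$$

(3) The final prediction is the $\alpha$-weighted median of the base-estimator outputs:

$$F(x) = \inf\left\{ y : \sum_{m:\, h_m(x) \le y} \alpha_m \ge \frac{1}{2} \sum_{m=1}^{M} \alpha_m \right\} \tag{6}$$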

This forms the basis of the AdaBoost regressor: each base estimator focuses on the errors of the preceding estimators, and their predictions are combined with adaptive weights to form the final prediction. The success of AdaBoost in several fields is attributed to its ability to handle large datasets robustly, even when the data are complex and nonlinear [54, 55].
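The steps above can be made concrete with a short, dependency-free sketch. It assumes the AdaBoost.R2 variant (linear loss, importance weight ln((1 − ε)/ε), weighted-median output) and uses one-split regression stumps as base estimators, fitted directly with the sample weights rather than by resampling. The study does not state which base estimator or library it used, so the function names and defaults here are illustrative only.

```python
import math


def _weighted_mean(rows, y, w):
    return sum(w[i] * y[i] for i in rows) / sum(w[i] for i in rows)


def fit_weighted_stump(X, y, w):
    """Fit a one-split regression stump minimising weighted squared error.

    Returns (feature index, threshold, left value, right value).
    """
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X})[:-1]:  # exclude max so both sides are non-empty
            left = [i for i, row in enumerate(X) if row[j] <= t]
            right = [i for i, row in enumerate(X) if row[j] > t]
            lv, rv = _weighted_mean(left, y, w), _weighted_mean(right, y, w)
            sse = sum(w[i] * (y[i] - lv) ** 2 for i in left) + sum(
                w[i] * (y[i] - rv) ** 2 for i in right
            )
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    if best is None:  # every feature is constant: fall back to a constant predictor
        mean = _weighted_mean(range(len(y)), y, w)
        return 0, math.inf, mean, mean
    return best[1:]


def predict_stump(stump, x):
    j, t, left_value, right_value = stump
    return left_value if x[j] <= t else right_value


def fit_adaboost_r2(X, y, n_estimators=20):
    n = len(y)
    w = [1.0 / n] * n                                  # step (1): uniform sample weights
    stumps, alphas = [], []
    for _ in range(n_estimators):                      # step (2)
        stump = fit_weighted_stump(X, y, w)            # (i) train on the weighted data
        errors = [abs(yi - predict_stump(stump, xi)) for xi, yi in zip(X, y)]
        max_error = max(errors)
        if max_error == 0.0:                           # perfect fit: keep it and stop early
            stumps.append(stump)
            alphas.append(1.0)
            break
        loss = [e / max_error for e in errors]         # linear loss in [0, 1]
        eps = sum(wi * li for wi, li in zip(w, loss))  # (ii) weighted error
        if eps >= 0.5:                                 # AdaBoost.R2 stopping rule
            if not stumps:
                stumps.append(stump)
                alphas.append(1.0)
            break
        alpha = math.log((1.0 - eps) / eps)            # (iii) importance weight
        w = [wi * math.exp(-alpha * (1.0 - li)) for wi, li in zip(w, loss)]  # (iv)
        z = sum(w)                                     # normalisation factor Z_m
        w = [wi / z for wi in w]
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas


def predict_adaboost_r2(stumps, alphas, x):
    """Step (3): alpha-weighted median of the base-estimator predictions."""
    ranked = sorted(zip((predict_stump(s, x) for s in stumps), alphas))
    half, cumulative = 0.5 * sum(alphas), 0.0
    for prediction, alpha in ranked:
        cumulative += alpha
        if cumulative >= half:
            return prediction
    return ranked[-1][0]
```

In practice a library implementation would normally be used instead, for example scikit-learn's `AdaBoostRegressor`, which defaults to the linear loss and depth-3 regression trees; the hand-written version only makes each step visible.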

2.3.2. RF

RF, the second ML technique employed in the present study for regression, also belongs to the ensemble learning family and has emerged as a robust ML technique. RF builds an ensemble of individual decision trees (DTs), each trained on a bootstrap sample of the data, and a random subset of features is considered at each split during training. This strategy increases the diversity among the trees, producing an ensemble model that excels in generalization, precision, and robustness [56, 57]. By combining the forecasts of many trees, RF reduces the risk of overfitting, a common problem with single DTs. In light of this, RF excels at handling high-dimensional data and complex variable relationships [58, 59].

It can be summarized as follows [52, 53, 57, 58]:

Let N denote the number of samples in a given dataset and D the number of input features, so that X is the N × D input feature matrix and y is the N × 1 vector of the target variable. RF-based regression creates a set of DTs T1, T2, T3, …, TM, where M is the number of trees in the forest. Each tree Tm is trained on a bootstrapped sample and uses a randomly selected subset of features at each node.

The forecast of the mth DT for an input feature vector x is denoted Tm(x). Each tree predicts the target variable through binary splits of the feature space; the split at each node is chosen from the randomly selected feature subset so as to minimize the variance of the target variable within the resulting child nodes. The RF regression model then predicts the target variable robustly as the mean of the predictions of all trees:

$$\hat{y}(x) = \frac{1}{M} \sum_{m=1}^{M} T_m(x)$$

This averaging helps even when the data are nonlinear and noisy [60, 61].
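A minimal random forest regressor following the description above (a bootstrap sample per tree, a random feature subset at each node, variance-reducing binary splits, and the mean of the tree outputs) might look like this. The number of trees, the depth limit, and the default of roughly one third of the features per split are assumptions for illustration, not settings reported in the study.

```python
import random
from statistics import fmean


def _sse(y, rows):
    """Sum of squared deviations of y[rows] from their mean."""
    m = fmean(y[i] for i in rows)
    return sum((y[i] - m) ** 2 for i in rows)


def build_tree(X, y, rows, max_features, depth, max_depth, rng):
    """Grow a regression tree; each split is searched over a random feature subset."""
    targets = [y[i] for i in rows]
    if depth >= max_depth or len(rows) < 2 or len(set(targets)) == 1:
        return fmean(targets)                          # leaf: mean of its targets
    best = None
    for j in rng.sample(range(len(X[0])), max_features):
        for t in sorted({X[i][j] for i in rows})[:-1]:
            left = [i for i in rows if X[i][j] <= t]
            right = [i for i in rows if X[i][j] > t]
            score = _sse(y, left) + _sse(y, right)     # lower = larger variance reduction
            if best is None or score < best[0]:
                best = (score, j, t, left, right)
    if best is None:                                   # no valid split in this subset
        return fmean(targets)
    _, j, t, left, right = best
    return (j, t,
            build_tree(X, y, left, max_features, depth + 1, max_depth, rng),
            build_tree(X, y, right, max_features, depth + 1, max_depth, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):                     # internal node: (feature, threshold, left, right)
        j, t, left, right = node
        node = left if x[j] <= t else right
    return node


def fit_random_forest(X, y, n_trees=50, max_depth=6, max_features=None, seed=0):
    rng = random.Random(seed)
    n, d = len(y), len(X[0])
    k = max_features or max(1, d // 3)                 # assumed default: about one third of the features
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.randrange(n) for _ in range(n)]   # sample rows with replacement
        forest.append(build_tree(X, y, bootstrap, k, 0, max_depth, rng))
    return forest


def predict_forest(forest, x):
    """RF regression output: the mean of the individual tree predictions."""
    return fmean(predict_tree(tree, x) for tree in forest)
```

With only three control factors (ER, RT, GFR), the one-third heuristic gives a single candidate feature per split; the study does not state whether it used this or all features at every split.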

2.3.3. LR

LR is a fundamental ML technique used to forecast numerical values from the supplied data. It assumes a linear relationship between the input characteristics and the variable of interest. By learning the coefficients that best fit the data, the model can generate forecasts for new inputs. LR is used extensively for forecasting, trend analysis, and correlation discovery. It is also an effective way to create a baseline prediction model when multiple models are being tested, since it makes it easier to evaluate the additional predictive power achieved by more complex ML algorithms. LR performs well when the data follow a linear trend [62, 63]. On the other hand, it has limitations when dealing with complex and nonlinear data patterns, and in such circumstances more complex models, such as DTs or ANN, may perform better. The contrast between the simplicity of LR and these more advanced models highlights the tradeoff between model complexity and forecast precision.
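As a baseline, LR fits y ≈ β0 + β1x1 + … + βDxD by ordinary least squares. The sketch below solves the normal equations (AᵀA)β = Aᵀy with Gauss–Jordan elimination in plain Python; it is an illustration under the assumption of an intercept plus one coefficient per control factor, not the study's code.

```python
def fit_linear_regression(X, y):
    """Ordinary least squares with an intercept, via the normal equations."""
    A = [[1.0, *row] for row in X]                        # design matrix [1 | X]
    p = len(A[0])
    ata = [[sum(a[r] * a[c] for a in A) for c in range(p)] for r in range(p)]
    aty = [sum(a[r] * yi for a, yi in zip(A, y)) for r in range(p)]
    M = [ata[r] + [aty[r]] for r in range(p)]             # augmented matrix [A^T A | A^T y]
    for col in range(p):                                  # Gauss-Jordan with partial pivoting
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: inputs may be collinear")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(p):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [M[r][p] / M[r][r] for r in range(p)]          # [beta0, beta1, ..., betaD]


def predict_linear(beta, x):
    """Evaluate beta0 + sum_k beta_k * x_k for one input vector."""
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))
```

For ill-conditioned inputs, an SVD-based solver such as `numpy.linalg.lstsq` is numerically safer than forming the normal equations explicitly.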

2.4. Model Evaluation

In the present study, several approaches were employed to evaluate the prediction models so that a robust comparison could be made. First, numerical metrics such as the coefficient of determination (R2), mean squared error (MSE), mean absolute error (MAE), explained variance, mean absolute percentage error (MAPE), and root mean squared error (RMSE) were employed [64, 65]. Second, a twin-pronged graphical approach of Taylor’s diagrams and violin plots was used. Taylor’s diagrams are an efficient, easy-to-use graphical tool for estimating the efficacy of ML models, specifically when several ML-based models are compared. The proximity of each model’s point to the reference point of the observed data, along with the correlation coefficient and the RMSE ratio, provides information about the models’ reliability and accuracy [66, 67]. Violin plots combine the characteristics of kernel density plots and box plots, showing the distribution of the predictions for each model category. For each model, the quartile and median lines offer a summary comparison of the central tendency and dispersion [68].
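The numerical metrics listed above, and the three statistics a Taylor’s diagram plots (standard deviation ratio, correlation coefficient, and centered RMS difference), can be computed as follows. This is a plain-Python sketch assuming population (1/N) statistics; MAPE is undefined when an observed value is zero.

```python
import math
from statistics import fmean, pstdev


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE, MAPE (%), R2 and explained variance for one model."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mse = fmean(r * r for r in residuals)
    mean_true = fmean(y_true)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    ss_res = sum(r * r for r in residuals)               # residual sum of squares
    mean_res = fmean(residuals)
    var_true = ss_tot / len(y_true)
    var_res = fmean((r - mean_res) ** 2 for r in residuals)
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": fmean(abs(r) for r in residuals),
        "MAPE_%": 100.0 * fmean(abs(r / t) for r, t in zip(residuals, y_true)),  # requires y_true != 0
        "R2": 1.0 - ss_res / ss_tot,
        "ExplainedVariance": 1.0 - var_res / var_true,   # insensitive to a constant bias
    }


def taylor_statistics(y_obs, y_pred):
    """Statistics plotted on a Taylor diagram, using population (1/N) moments."""
    s_obs, s_pred = pstdev(y_obs), pstdev(y_pred)
    m_obs, m_pred = fmean(y_obs), fmean(y_pred)
    cov = fmean((o - m_obs) * (p - m_pred) for o, p in zip(y_obs, y_pred))
    centered_rmsd = math.sqrt(
        fmean(((p - m_pred) - (o - m_obs)) ** 2 for o, p in zip(y_obs, y_pred))
    )
    return {
        "std_ratio": s_pred / s_obs,
        "correlation": cov / (s_obs * s_pred),
        "centered_rmsd": centered_rmsd,
    }
```

A prediction that is offset by a constant shows why R2 and explained variance can differ: explained variance ignores a systematic bias, whereas R2 penalizes it. The Taylor statistics satisfy centered RMSD² = σ_pred² + σ_obs² − 2·σ_pred·σ_obs·R, which is what allows all three to be shown on a single polar diagram.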

3. Results and Discussion

The data were collected from extensive gasification tests on waste mahua wood. Different settings of ER, RT, and GFR were used, and the response variables, namely hydrogen yield and LHV, were recorded. These data were then used for model development: 70% of the experimental data were used to train the models, and the remaining 30% were used to test them.
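The 70/30 partition could be reproduced along these lines. The study does not say whether the split was random or stratified; this sketch assumes a simple seeded random shuffle, and the function name and seed are hypothetical.

```python
import random


def split_train_test(rows, train_fraction=0.7, seed=42):
    """Shuffle a copy of the rows and return (train, test) partitions."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)          # fixed seed keeps the split reproducible
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]
```

Each row would hold one experimental run (ER, RT, GFR, hydrogen yield, LHV); fixing the seed makes the reported test metrics reproducible.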

3.1. Data Analysis

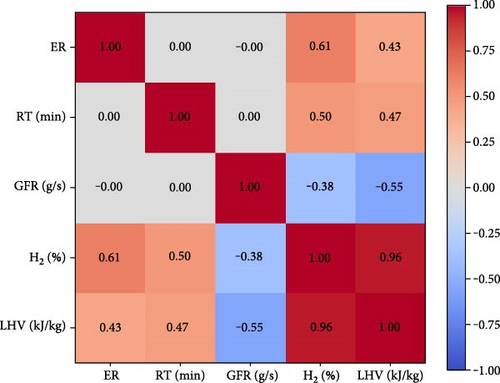

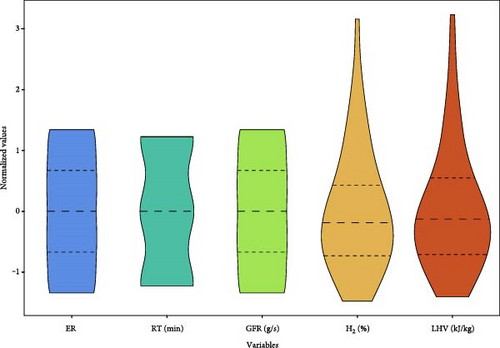

The correlation heatmap (Figure 2(a)), descriptive statistics, and violin plots (Figure 2(b)) were used to analyze the data and evaluate the correlations among the data columns. A correlation of 0.61 was observed between ER and hydrogen yield, indicating a fairly strong positive association between the two. The correlation between RT and hydrogen yield was 0.5, somewhat lower than that between ER and hydrogen yield. On the other hand, the correlation between GFR and hydrogen yield was negative (−0.38), indicating that a higher gas flow out of the gasifier is associated with lower hydrogen yield.

Regarding the correlations between LHV and the control factors, the correlation between ER and LHV was 0.43 and that between RT and LHV was 0.47, indicating comparable positive associations. GFR and LHV showed a negative correlation of −0.55, indicating that a higher GFR is associated with a lower LHV of the outgoing PG. The violin plots depict the distribution and dispersion of the data for the different parameters.
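The entries of a correlation heatmap such as Figure 2(a) are correlation coefficients between pairs of data columns. A sketch, assuming Pearson (rather than rank) correlation and columns held as equal-length lists; the column names in the test are placeholders.

```python
from statistics import fmean, pstdev


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length columns."""
    ma, mb = fmean(a), fmean(b)
    cov = fmean((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / (pstdev(a) * pstdev(b))


def correlation_matrix(columns):
    """Map each (name_i, name_j) pair to its Pearson coefficient, as in a heatmap."""
    names = list(columns)
    return {(p, q): pearson(columns[p], columns[q]) for p in names for q in names}
```

Applied to columns named ER, RT, GFR, H2, and LHV, the off-diagonal entries correspond to the coefficients discussed above; such coefficients describe linear association only and do not by themselves establish cause and effect.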

3.2. Hydrogen Yield Models

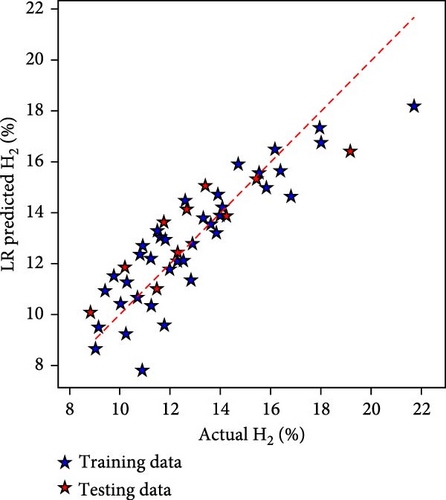

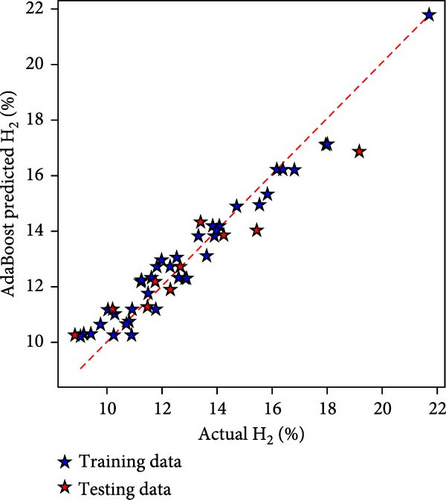

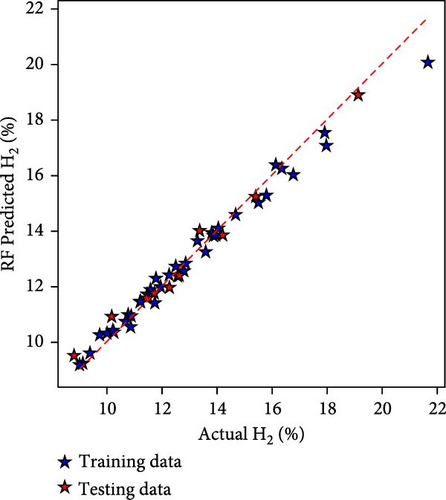

The hydrogen content of the producer gas is a key quality parameter because it directly affects the quality and heating value of the PG; hence, it was selected for model prediction. Figure 3 depicts the performance of the three ML techniques employed in the present study as plots of actual vs. predicted values: Figure 3(a) shows the LR-based model, Figure 3(b) the AdaBoost-based model, and Figure 3(c) the RF-based hydrogen yield model. The closer the data points lie to the diagonal line, the more accurate the model. The RF-based hydrogen yield model clearly outperforms the other two models.

The models were also evaluated with statistical metrics, listed in Table 2. During the training phase, RF had the lowest MSE of 0.17, followed by AdaBoost with 0.405 and LR with 1.717. On the R2 metric, RF surpassed AdaBoost and LR, with a score of 0.978 vs. 0.948 and 0.779, respectively. The MAE likewise demonstrates RF’s superior performance, with a value of 0.29 compared to 0.527 for AdaBoost and 1.012 for LR. These indicators reflect the models’ capacity to produce accurate predictions, with RF consistently outperforming the other models in both precision and error.

| Model | Phase | Metric | LR | AdaBoost | RF |
|---|---|---|---|---|---|
| H2 model | Training | Mean squared error | 1.717 | 0.405 | 0.17 |
| | | R2 | 0.779 | 0.948 | 0.978 |
| | | Mean absolute error | 1.012 | 0.527 | 0.29 |
| | | Explained variance | 0.779 | 0.95 | 0.979 |
| | Testing | Mean squared error | 2.106 | 1.193 | 0.181 |
| | | R2 | 0.721 | 0.842 | 0.976 |
| | | Mean absolute error | 1.183 | 0.857 | 0.357 |
| | | Explained variance | 0.751 | 0.844 | 0.976 |
| LHV model | Training | Mean squared error | 100,933 | 24,612.55 | 8,297.74 |
| | | R2 | 0.713 | 0.93 | 0.977 |
| | | Mean absolute error | 243.715 | 134.01 | 70.07 |
| | | Explained variance | 0.7131 | 0.937 | 0.977 |
| | Testing | Mean squared error | 110,086.2 | 71,800.02 | 11,350.04 |
| | | R2 | 0.715 | 0.814 | 0.971 |
| | | Mean absolute error | 259.03 | 198.6 | 93 |
| | | Explained variance | 0.718 | 0.815 | 0.972 |

During model testing on the held-out 30% of the data, RF again outperformed both LR and AdaBoost in terms of MSE, with a value of 0.181; AdaBoost came second with an MSE of 1.193, and LR third with 2.106. R2, which measures goodness of fit, is highest for the RF model at 0.976, ahead of AdaBoost (0.842) and LR (0.721). RF also has the lowest MAE, 0.357, compared with 0.857 for AdaBoost and 1.183 for LR. The explained variance follows the same trend, with RF scoring 0.976, higher than both AdaBoost (0.844) and LR (0.751). Together, these results show that RF is the best predictor of hydrogen yield on unseen data in this modeling case.

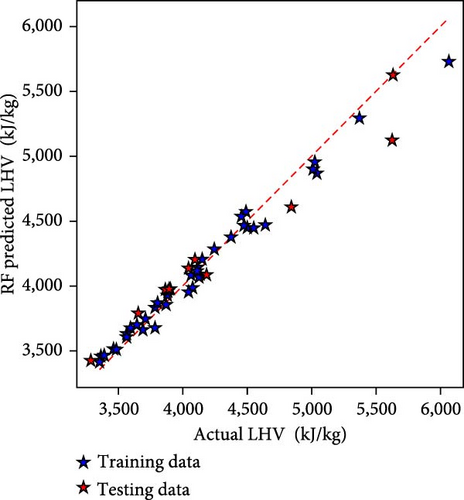

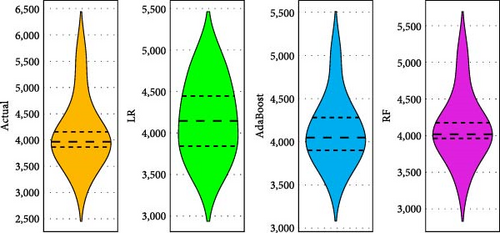

3.3. LHV Models

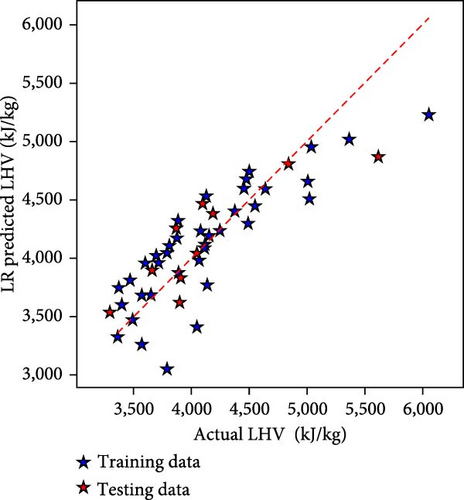

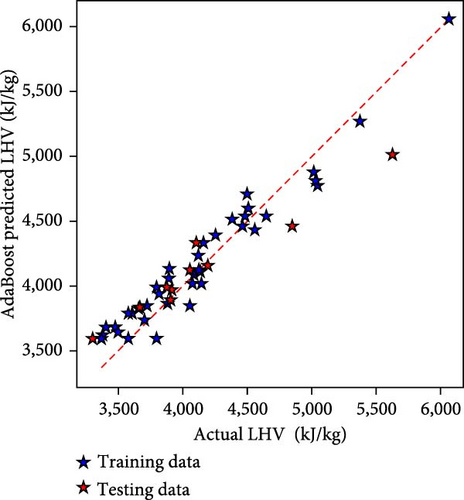

In this study, LHV denotes the lower heating value of the producer gas. Figure 4 depicts the performance of the three ML techniques in predicting LHV: Figure 4(a) shows the LR-based model, Figure 4(b) the AdaBoost-based model, and Figure 4(c) the RF-based LHV model. As before, the closer the data points lie to the diagonal line, the more accurate the model. The RF-based LHV model outperforms the other two, with most of its data points lying close to the diagonal line.

The LHV prediction models developed with LR, RF, and AdaBoost were then evaluated with statistical metrics, listed in Table 2. During the training phase, the MSE values differ substantially, with RF having the lowest error at 8,297.74, followed by AdaBoost at 24,612.55 and LR at 100,933. With an R2 of 0.977, RF outperforms AdaBoost (0.93) and LR (0.713) in goodness of fit. MAE highlights RF’s precision further, with the lowest value of 70.07, followed by AdaBoost at 134.01 and LR at 243.715. These patterns are mirrored in the explained variance, with RF leading at 0.977, followed by AdaBoost at 0.937 and LR at 0.713. Jointly, these criteria identify RF as the most accurate model in this scenario.

In the testing phase, RF again has the lowest MSE, 11,350.04, compared with 71,800.02 for AdaBoost and 110,086.2 for LR. RF also has the highest R2, 0.971, ahead of AdaBoost (0.814) and LR (0.715), indicating the best fit. Furthermore, RF has the lowest MAE, 93, compared with 198.6 for AdaBoost and 259.03 for LR. The explained variance of 0.972 for RF, against 0.815 for AdaBoost and 0.718 for LR, confirms its better performance. These metrics highlight the efficacy of the RF-based model in achieving higher accuracy and better model fit.

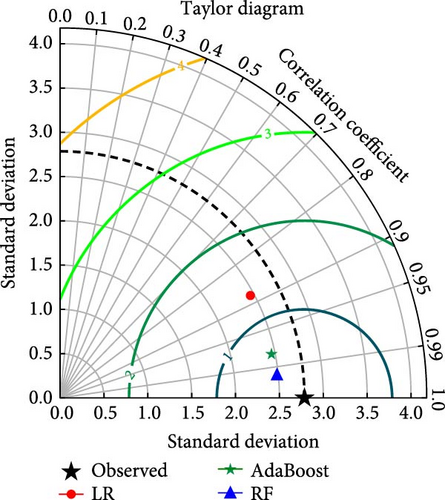

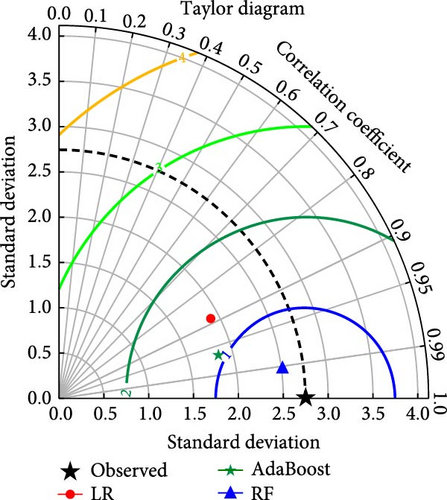

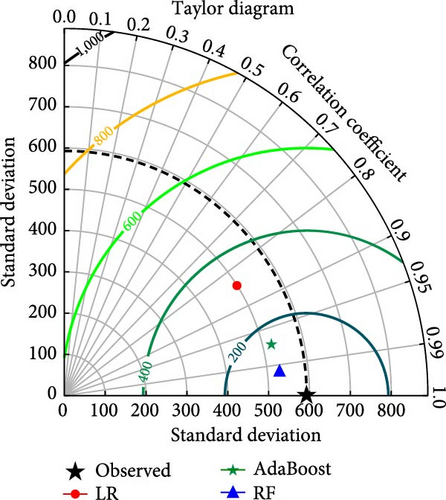

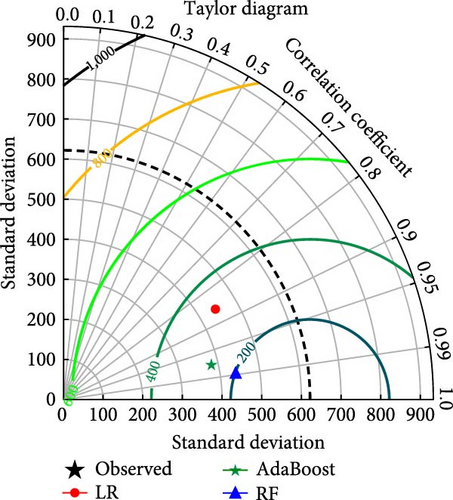

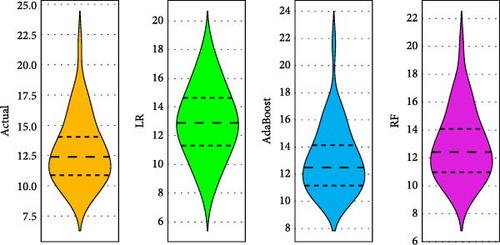

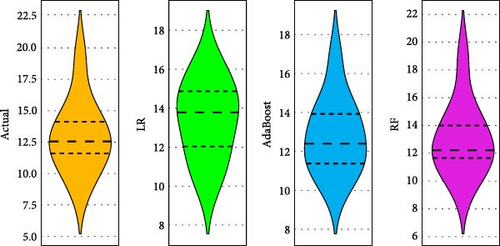

3.4. Model Comparison

The models developed with LR, RF, and AdaBoost were also compared using Taylor’s diagrams and violin plots. For the hydrogen yield models, Figure 5(a) depicts the Taylor’s diagram for model training and Figure 5(b) that for model testing. Similarly, Figures 6(a) and 6(b) depict the Taylor’s diagrams for the LHV models during training and testing, respectively. The Taylor’s diagrams also show the superiority of the RF-based models for both hydrogen yield and LHV. The models were further compared with violin plots, since this approach makes it easy to identify the superior model: a model whose violin plot nearly mirrors that of the actual data is considered superior, because the distribution of its predictions closely matches that of the observations. Figures 7(a) and 7(b) depict the violin plots for the hydrogen yield models during training and testing, respectively, and Figures 8(a) and 8(b) those for the LHV models. Here too, the violin plots of the RF-based models are shaped almost identically to those of the actual data, indicating excellent performance and low error.
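Each violin in this comparison is essentially a kernel density estimate drawn together with quartile and median markers. The sketch below computes both for one set of values, assuming a Gaussian kernel and a Scott-style bandwidth h = σ·n^(−1/5); plotting libraries choose their own defaults, so the shapes will not match the figures exactly.

```python
import math
from statistics import pstdev, quantiles


def violin_summary(values, grid_points=50):
    """Quartiles plus a Gaussian kernel density estimate for one violin."""
    q1, median, q3 = quantiles(values, n=4)          # box-plot part of the violin
    n = len(values)
    h = pstdev(values) * n ** (-1 / 5)               # Scott-style bandwidth (assumed)
    lo, hi = min(values) - 3 * h, max(values) + 3 * h
    step = (hi - lo) / (grid_points - 1)
    grid = [lo + k * step for k in range(grid_points)]
    scale = 1.0 / (n * h * math.sqrt(2 * math.pi))
    density = [                                      # sum of Gaussian kernels at each grid point
        scale * sum(math.exp(-0.5 * ((g - v) / h) ** 2) for v in values)
        for g in grid
    ]
    return {"q1": q1, "median": median, "q3": q3,
            "grid": grid, "density": density, "step": step}
```

Running it once on the observed values and once on each model's predictions, then comparing the quartiles and density curves, gives a numerical counterpart to the visual "mirror image" check.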

4. Conclusions

(1) During training of the hydrogen yield model, RF delivered the lowest MSE, 0.17, outperforming AdaBoost (0.4048) and LR (1.717).

(2) During training of the LHV model, RF delivered the lowest MSE, 8,297.74, outperforming AdaBoost (24,612.55) and LR (100,933), reaffirming its accuracy in forecasting LHV.

(3) RF maintained its lead in hydrogen yield model testing, with the lowest MSE of 0.181, ahead of AdaBoost (1.193) and LR (2.106).

(4) Taylor’s diagrams and violin plots consistently demonstrated RF’s superiority, validating its ability to forecast hydrogen yield and LHV accurately in both training and testing.

ML techniques such as RF and AdaBoost are useful tools for simulating biomass gasification, but their efficacy depends on data quality. Another issue is that the applicability of the prediction models to other biomass types is limited by differences in biomass properties. On the other hand, ML-based strategies may enhance process efficiency by accurately predicting key response variables from the control factors. Robust prognostic models for biomass gasification may help energy-sector stakeholders make informed decisions, such as assessing the viability of biomass gasification for a project and identifying technical improvements.

Abbreviations

- AdaBoost: Adaptive boosting
- ANN: Artificial neural networks
- BTE: Brake thermal efficiency
- CO: Carbon monoxide
- CO2: Carbon dioxide
- DF: Dual-fuel
- ER: Equivalence ratio
- GFR: Gas flow rate
- GHG: Greenhouse gases
- H2: Hydrogen
- kWe: Kilowatts electrical
- LHV: Lower heating value
- LR: Linear regression
- MAE: Mean absolute error
- MAPE: Mean absolute percentage error
- ML: Machine learning
- MSE: Mean squared error
- NARX: Nonlinear autoregressive with exogenous inputs
- PG: Producer gas
- R2: Coefficient of determination
- RDF: Refuse-derived fuel
- RF: Random forest
- RMSE: Root mean squared error
- RT: Residence time
- SDGs: Sustainable Development Goals
- w/w: Weight/weight

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Authors’ Contributions

Mansoor Alruqi is responsible for the conceptualization, methodology, data curation, and writing, original draft. P. Sharma is responsible for the writing, review and editing, resources, and supervision. Prabhu Paramasivam is responsible for the writing, original draft, and writing, review and editing. H. A. Hanafi is responsible for the methodology, writing, review and editing, and supervision.

Acknowledgments

The author (Mansoor Alruqi) would like to thank the Deanship of Scientific Research at Shaqra University for supporting this work.

Open Research

Data Availability

Data available on request.