Investigating the Effects of Hyperparameters in Quantum-Enhanced Deep Reinforcement Learning

Abstract

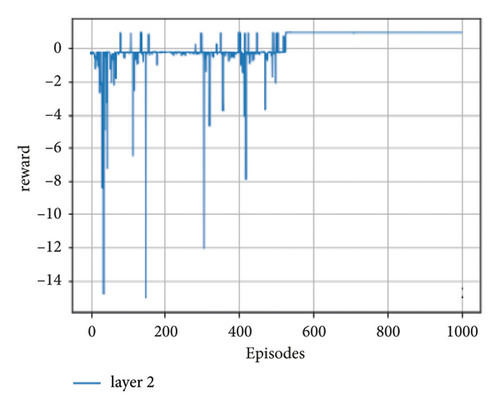

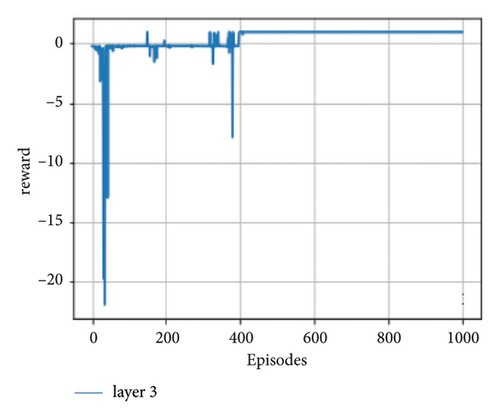

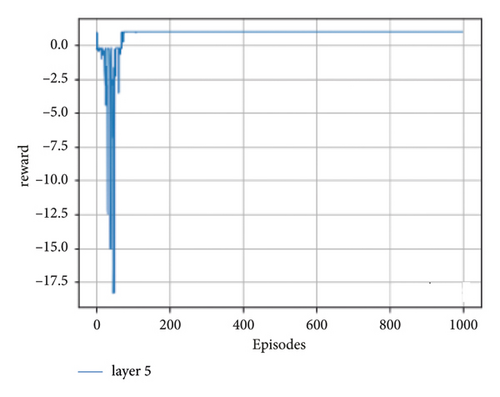

Quantum machine learning exploits quantum mechanical concepts, such as the superposition of states, to make decisions. In this work, we use these quantum advantages to enhance deep reinforcement learning (DRL). Our primary goal is to investigate a way of representing and solving the frozen lake problem using PennyLane, Xanadu's quantum machine learning library. This paper discusses how to enhance classical deep reinforcement learning algorithms with quantum computing so that quantum agents obtain the maximum reward after a fixed number of epochs, and it examines the effect of the number of variational quantum layers on the trainability of the enhanced framework. We observe that as the number of layers increases, the ability of the quantum agent to converge to the optimal state also increases. We trained the agent with 2, 3, and 5 variational quantum layers. The agent with 2 layers converges to a total reward of 0.95 after 526 training episodes, the agent with 3 layers converges to a total reward of 0.95 after 397 training episodes, and the agent with 5 layers converges to a total reward of 0.95 after 72 training episodes. Hence, the agent with more variational layers exploits more and converges to the optimal state before the other agents. We also analyze our work under different learning-rate hyperparameters. We recorded every learning epoch to show the outcomes of the enhanced DRL algorithm with learning rates (alpha values) of 0.1, 0.2, 0.3, and 0.4. From these results, we conclude that the greater the learning rate in quantum deep reinforcement learning, the fewer time steps the agent takes to move from the start point to the goal state.

1. Introduction

Quantum machine learning (QML) is a subfield of quantum information processing research whose main goal is to develop QML algorithms that learn from data and improve on existing classical machine learning methods [1]. Quantum computers process data in the form of qubits rather than bits and have their own architecture, so classical machine learning methods cannot be applied to them directly. A quantum machine learning algorithm runs on a quantum computer, which exploits the laws of quantum mechanics (quantum theory) to process data and predict outcomes. To investigate whether quantum computing can bring an advantage to such problems, this study develops quantum circuits for DRL and analyzes the performance of quantum agents with different learning rates and different numbers of quantum layers. The study uses PennyLane, provided by Xanadu, to build the circuits and solves the frozen lake problem, an OpenAI Gym benchmark. We analyze how well the quantum agent learns to select the best action under its policy in order to obtain the maximum reward, and then report its learning performance. The action values required for this are obtained by calculating quantum expectation values (expected energy values).

The main idea of quantum machine learning is to use the essential features of quantum computing, such as quantum entanglement, quantum superposition, and quantum parallelism, to improve the performance of classical machine learning algorithms [2–5]. Although they use the quantum mechanical concepts of entanglement and superposition, most quantum machine learning algorithms (QMLA) borrow the basic concepts and architectures of classical machine learning algorithms [6]. Other researchers use hybrid classical-quantum architectures and have shown that such hybrid systems can overcome limitations of noisy intermediate-scale quantum (NISQ) computers [7, 8]. For example, Dunjko et al. [9] proposed a quantum approximate algorithm in which classical solution parameters to a problem are used as input and as the starting point for larger problems, as in variational quantum eigensolvers. Recently, an experiment by Parades et al. [10] showed that quantum machine learning gives interesting results in applications where classical machine learning techniques are limited by inadequate training data and high-dimensional features. They proposed a hybrid classical-quantum transfer-learning model, simulated with PennyLane, to classify face images with COVID-19 masks, and reported an accuracy of 99.05% in identifying the correct protective masks. Lloyd et al. [11] also proposed quantum-inspired k-means and nearest-centroid algorithms based on quantum distance approximation, assuming quantum access to the classical data. In this quantum-enhanced clustering algorithm, one iteration over N vectors in a D-dimensional feature space, with k clusters and a distance-approximation error ε, takes time O(kN log D/ε).
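To give a feel for the scaling quoted above, the sketch below compares it with the O(kND) distance cost of one classical Lloyd's k-means iteration. Both are asymptotic expressions, so constants, state-preparation cost, and the cost of quantum data access are ignored, and the problem size is made up purely for illustration.

```python
import math


def classical_kmeans_iteration_cost(k, n, d):
    """Distance work for one Lloyd's k-means iteration: O(k * N * D)."""
    return k * n * d


def quantum_kmeans_iteration_cost(k, n, d, eps):
    """Per-iteration scaling O(k * N * log(D) / eps) quoted for the quantum-enhanced variant."""
    return k * n * math.log2(d) / eps


# Illustrative, made-up problem size: 10 clusters, 1e6 vectors, 1e5 features, eps = 0.01
k, n, d, eps = 10, 1_000_000, 100_000, 0.01
classical = classical_kmeans_iteration_cost(k, n, d)
quantum = quantum_kmeans_iteration_cost(k, n, d, eps)
print(f"classical ~ {classical:.2e}, quantum ~ {quantum:.2e}, ratio ~ {classical / quantum:.1f}")
```

The advantage comes from replacing the linear factor D with log D; a small ε (high accuracy) eats into that gain through the 1/ε factor.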
In general, several works report strong performance of quantum-enhanced machine learning models on problems with large feature spaces, which can be difficult for classical machine learning algorithms.

2. Related Works

By its nature, whether enhanced or not, deep reinforcement learning (DRL) is a type of machine learning in which an agent interacts with an environment. In many real-world decision-making situations, there is no data on the best course of action. In such scenarios, the model must interact with its surroundings to gather information and learn how to complete a task from its own experience. This learning method is called reinforcement learning (RL). For example, a video game character might learn a successful strategy by repeatedly playing the game, examining the outcomes, and improving its future play. In current studies of quantum-enhanced RL [12, 13], variational quantum circuits (VQC) replace the deep neural network (DNN) that existing DRL trains to represent its policy. At each step of each episode, a quantum agent with given state information decides its action from the policy of the variational quantum circuit, and a classical optimizer such as RMSprop or Adam updates the parameters. Action selection is based on the expectation value of the quantum measurement.

Although quantum deep reinforcement learning (QDRL) with parameterized quantum circuits (PQC) is a relatively new field, some researchers, such as Lockwood and Si [12], have studied different encoding methods, such as directional encoding and scaled-up encoding, and combined the parameterized circuit with quantum pooling operations to solve reinforcement learning problems. This encoding technique is cost-efficient but requires a large number of quantum gates, which is beyond the capabilities of current quantum simulators.

The method of Hu and Hu [14] uses common gates, but with a small number of qubits; how it behaves as the number of qubits increases needs further investigation. Here, the aim is to improve the intelligence of quantum agents that interact with the environment and learn from it to reach a stated goal. Numerous works have made proposals in this direction over the last few years [9, 15–18]. Some of them enhance the reward by quantizing an agent that interacts with a classical environment through Grover search. Others prove a quantum speedup when both the agent and the environment are quantized.

Table 1 gives a bird's-eye view of quantum, hybrid classical-quantum, and classical deep reinforcement learning. The learning algorithm can be enhanced by quantizing the agent, the environment, or both. Quantum computers are currently available for research mainly at large companies such as IBM, D-Wave, and Google [9, 19]. Because such resources are not readily available, we develop a hybrid approach and run the learning algorithms on current classical computers, following methods such as those of [15, 20, 21].

| Ways of enhancing DRL | Agent | Environment | Information representation | Learning algorithm | Computational resource | Application area |
|---|---|---|---|---|---|---|
| Classical DRL | Classical | Classical | Bits | DNN, GBDT | Resource available | Broad application area |
| Hybrid C-Q DRL | Classical | Quantum | Bits and qubits | DNN + VQC | Limited resource | Area enhanced with partial QC advantage |
| Hybrid C-Q DRL | Quantum | Classical | Bits and qubits | DNN + VQC | Limited resource | Area enhanced with partial QC advantage |
| Pure quantum DRL | Quantum | Quantum | Qubits | Quantum algorithm | Limited resource | All areas which need QC advantage |

For the "quantum agent, classical environment" scenario, Dunjko et al. [9] suggested a Grover-iteration-based algorithm for unstructured search and investigated the potential benefit of quantum computing when a quantized agent interacts with a classical environment; the study shows a quantum speedup over classical computation. The other scenario of quantum-enhanced deep reinforcement learning is the "quantum agent, quantum environment" setting. Lamata [22] and Albarran et al. [23] work on this scenario, considering interactions between a quantum agent and a quantum environment to investigate possible implementations as system complexity increases. A further possibility is the "classical agent, quantum environment" scenario, in which a classical agent interacts with a quantum environment. The study by Daoyi Dong et al. [24] investigates how to map conventional reinforcement learning into quantum reinforcement learning, focusing on linking previous states with future quantum states. According to that study, with different learning parameters, applying superposition and quantum entanglement yields a quadratic quantum speedup compared with classical temporal difference (TD) learning.

Other researchers [9, 15–18] study the applicability of quantum computing to classical reinforcement learning. According to these studies, variational quantum circuits can replace the deep neural networks trained as policies in existing classical DRL. The agent interacts with the environment according to the policy of the quantum circuit: in every episode, the agent decides its action from this policy, and a classical optimizer such as Adam updates the parameters [19]. This paper follows the same scenario with a different circuit design and a different environment.

3. Methods

3.1. Deep Reinforcement Learning

Reinforcement learning (RL) differs from supervised and unsupervised machine learning, which respectively learn from inputs paired with their corresponding outputs and search for structure hidden in collections of unlabeled examples. One might think of reinforcement learning as a kind of unsupervised learning because it does not rely on examples of correct behavior, but RL tries to maximize a reward signal rather than find hidden structure in a dataset. Uncovering structure in an agent's experience can be useful in reinforcement learning, but by itself it does not solve the problem of maximizing the reward signal.

All RL agents have explicit goals: they choose actions that affect their environments, and the agent is rewarded based on those actions. Moreover, it is usually assumed that, from the start, the agent must act despite significant uncertainty about the environment it faces. When RL involves planning, it must address the interplay between planning and real-time action selection, as well as how models of the environment are acquired and improved. To recap, the aim of RL is to solve sequential decision-making problems in discrete and continuous state spaces and to take actions that maximize the expected reward. The agent follows a policy to make a series of decisions by taking actions in an environment. The quality of a policy is evaluated by the costs or rewards accumulated over episodes. Based on the evaluation of the previous policy, the agent improves the next policy so that it is more likely to choose actions with greater estimated rewards. At every step, the agent uses trial and error to improve the policy until it reaches the optimal policy. This sequential decision problem is formalized as a Markov decision process (MDP). MDPs are a classical formalization of sequential decision-making, in which actions affect not only the immediate rewards but also the subsequent states and future rewards.

- (i) Action-value function

Under policy π, the agent expects a return for choosing action a in state s of the environment. The value of taking action a in state s under policy π, denoted qπ(s, a), is the expected return when starting from state s, taking action a, and thereafter following policy π:

$$q_\pi(s, a) = \mathbb{E}_\pi\left[G_t \mid s_t = s, a_t = a\right] = \mathbb{E}_\pi\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k} \;\middle|\; s_t = s, a_t = a\right]$$

where r_t is the reward received after taking action a_t in state s_t and γ ∈ [0, 1] is the discount factor.

- (ii) State-value function

The value of state s under policy π, denoted vπ(s), is the expected return when the agent starts in state s and follows policy π thereafter:

$$v_\pi(s) = \mathbb{E}_\pi\left[G_t \mid s_t = s\right] = \mathbb{E}_\pi\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k} \;\middle|\; s_t = s\right]$$

- (iii) Optimal action-value function

There is always at least one policy that is better than or equal to all other policies. Such a policy is called an optimal policy (denoted π∗), and its action values, the optimal action values (denoted q∗), are greater than or equal to the action values of every other policy:

$$q_*(s, a) = \max_\pi q_\pi(s, a)$$

This function q∗(s, a) gives the expected return for taking action a in state s and thereafter following the optimal policy π∗.
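The quantity inside these expectations is the discounted return G_t = Σ_k γ^k r_{t+k}. A minimal sketch that computes it for one finished episode is shown below, using γ = 0.99 from Table 6; the reward sequence is a hypothetical 6-step path that earns 0 per step and +1 on reaching the goal. Averaging such returns over many episodes that start from state s gives a Monte Carlo estimate of vπ(s).

```python
def discounted_return(rewards, gamma=0.99):
    """G_t = sum_k gamma**k * r_{t+k}, accumulated backwards over a finite episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical episode: five zero-reward moves, then +1 at the goal
episode_rewards = [0, 0, 0, 0, 0, 1]
print(discounted_return(episode_rewards))  # approximately 0.99 ** 5
```

Working backwards avoids computing powers of γ explicitly: each step folds the future return into the current reward.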

3.1.1. Q-Learning: Off-Policy Temporal Difference Control

Q-learning is defined by the following update rule, taken from [25]:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha\left[r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t)\right]$$

where α is the learning rate and γ is the discount factor. This Bellman-style update simplifies the analysis of the algorithm and enabled early convergence proofs. However, because it is implemented with the tabular method, the algorithm is practical only for problems with a small number of states, and it becomes difficult to use when the state and action spaces are large. This limitation motivates deep Q-learning [26].
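The tabular update Q(s_t, a_t) ← Q(s_t, a_t) + α[r_t + γ max_a Q(s_{t+1}, a) − Q(s_t, a_t)] fits in a few lines. The sketch below is a generic illustration, not the paper's code; it shows that α sets the fraction of the TD error applied at each step, which is one way to read the learning-rate (alpha) comparison reported later. The state and action indices are placeholders.

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s_next, actions, alpha=0.1, gamma=0.99, terminal=False):
    """One tabular Q-learning step; returns the TD error that was applied."""
    best_next = 0.0 if terminal else max(q[(s_next, b)] for b in actions)
    td_error = r + gamma * best_next - q[(s, a)]
    q[(s, a)] += alpha * td_error  # alpha scales how far Q(s, a) moves toward the TD target
    return td_error


# Q-table for 16 states x 4 actions; unseen (state, action) pairs default to 0.0
q_table = defaultdict(float)
# Hypothetical transition: from state 14, action 3 (a placeholder index) reaches the goal state 15
q_learning_update(q_table, s=14, a=3, r=1.0, s_next=15, actions=range(4), alpha=0.1, terminal=True)
print(q_table[(14, 3)])  # 0.1: one step of size alpha toward the target 1.0
```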

3.1.2. The Deep Q-Learning

According to Huang [26], Q-learning is a reinforcement learning algorithm that solves the Markov decision problem in the classical way. However, Q-learning is restricted to problems with discrete states: it converges to the optimal states by obtaining an optimal policy only when the state and action spaces are small. For reinforcement learning problems with larger state and action spaces, representing the action-value function with Q-learning becomes difficult. For this reason, researchers use function approximators such as neural networks (NN) to represent the action-value function. Researchers such as [27–29] have used NNs to represent the Q-value function and applied them to various reinforcement learning tasks, such as playing video games. Enhanced Q-learning and deep Q-networks (DQN) are employed to learn the optimal policy for problems with large state and action spaces.

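The Q-network is trained by minimizing the loss

$$L(\theta_j) = \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim M}\left[\left(r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta^-) - Q(s_t, a_t; \theta_j)\right)^2\right]$$

Here, θ⁻ is the parameter of the target network and θ_j is the parameter of the Q-network. At predefined time steps, the target network parameter θ⁻ is updated by copying the Q-network parameter θ_j. s_{t+1} is the state of the environment after the agent plays action a_t in state s_t. The loss is computed over a minibatch sampled from the replay memory M.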
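To make the roles of θ_j and θ⁻ concrete, the sketch below computes the minibatch targets, the mean squared error between those targets and Q(s_t, a_t; θ_j), and the periodic hard copy of θ_j into θ⁻. The two "networks" are hypothetical lookup tables so that the arithmetic is visible; in the quantum framework they would be variational circuits. Terminal transitions use the reward alone as the target, as is usual in deep Q-learning.

```python
import copy


def dqn_targets(batch, target_q, gamma=0.99):
    """y_b = r_b if s_{b+1} is terminal, else r_b + gamma * max_a' Q(s_{b+1}, a'; theta^-)."""
    return [r if done else r + gamma * max(target_q(s_next))
            for _, _, r, s_next, done in batch]


def dqn_loss(batch, online_q, target_q, gamma=0.99):
    """Mean squared error between the targets y_b and the predictions Q(s_b, a_b; theta_j)."""
    targets = dqn_targets(batch, target_q, gamma)
    errors = [(y - online_q(s)[a]) ** 2 for y, (s, a, _, _, _) in zip(targets, batch)]
    return sum(errors) / len(errors)


def sync_target(online_params):
    """Hard update theta^- <- theta_j; a deep copy so later updates to theta_j do not leak."""
    return copy.deepcopy(online_params)


# Hypothetical lookup tables standing in for the Q-network and target network (16 states x 4 actions)
online_params = {s: [0.0] * 4 for s in range(16)}
target_params = sync_target(online_params)


def online_q(s):
    return online_params[s]


def target_q(s):
    return target_params[s]


# Transitions (s, a, r, s_next, done); action index 3 is an arbitrary placeholder
batch = [(14, 3, 1.0, 15, True), (13, 3, 0.0, 14, False)]
print(dqn_targets(batch, target_q), dqn_loss(batch, online_q, target_q))
```

Keeping θ⁻ frozen between synchronizations means the targets do not shift every time θ_j is updated, which is the usual motivation for a separate target network.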

3.2. Frozen Lake Environment

An episode ends when one of the following occurs:

- (1) The agent reaches the goal G.
- (2) The agent reaches the maximum of 3000 time steps.
- (3) The agent falls into a hole (red subgrid).

The agent receives a reward of +1 for successfully reaching the goal and −0.1 for stepping into a hole.

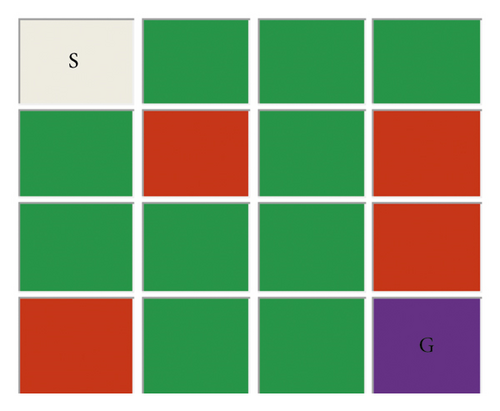

As shown in Figure 1, in the frozen lake environment (extended from Hu and Hu [14]), the quantum agent is expected to move from the start location (S) in the top-left corner of the grid to the goal location (G) in the bottom-right corner. Not all of the lake is frozen, so there may be holes (red) along the path. The quantum agent must learn to avoid walking into these holes; otherwise, it receives a large negative reward and the episode ends. To realize this, we assign a small negative reward to each wrong move. The frozen lake environment in Figure 1 has 16 states (4 holes + 12 frozen cells) and 4 actions (MoveTop, MoveDown, MoveLeft, and MoveRight). Without applying trainable input data weights, we encode each point on the grid as one of the computational basis states of a 4-qubit quantum system (|0000〉 … |1111〉).
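A minimal, dependency-free version of this environment is sketched below. It is an assumption-laden stand-in rather than the authors' setup: the hole positions of Figure 1 are not available here, so the sketch uses OpenAI Gym's default 4×4 FrozenLake map (which also has 4 holes and 12 frozen cells); moves are deterministic, whereas Gym's version is slippery by default; the small per-move penalty mentioned above is omitted because its value is not given; and `step` returns a plain (state, reward, done) tuple instead of Gym's API. The +1 and −0.1 rewards and the 3000-step cap come from the text.

```python
# Hypothetical map: OpenAI Gym's default 4x4 FrozenLake layout (4 holes, 12 frozen cells).
LAKE = ["SFFF",
        "FHFH",
        "FFFH",
        "HFFG"]
# Action indices follow the order in the text: MoveTop, MoveDown, MoveLeft, MoveRight (assumed).
MOVES = {0: (-1, 0), 1: (1, 0), 2: (0, -1), 3: (0, 1)}


class FrozenLake4x4:
    """Deterministic frozen lake; an episode ends at the goal, in a hole, or at max_steps."""

    def __init__(self, goal_reward=1.0, hole_reward=-0.1, max_steps=3000):
        self.goal_reward = goal_reward
        self.hole_reward = hole_reward
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        self.state, self.steps = 0, 0  # S is cell 0, the top-left corner
        return self.state

    def step(self, action):
        row, col = divmod(self.state, 4)
        d_row, d_col = MOVES[action]
        # Moves off the edge of the grid leave the agent where it is.
        row = min(max(row + d_row, 0), 3)
        col = min(max(col + d_col, 0), 3)
        self.state, self.steps = row * 4 + col, self.steps + 1
        cell = LAKE[row][col]
        if cell == "G":
            return self.state, self.goal_reward, True
        if cell == "H":
            return self.state, self.hole_reward, True
        return self.state, 0.0, self.steps >= self.max_steps


env = FrozenLake4x4()
env.reset()
for action in [1, 1, 3, 1, 3, 3]:  # Down, Down, Right, Down, Right, Right
    state, reward, done = env.step(action)
print(state, reward, done, env.steps)  # 15 1.0 True 6
```

Any path from S to G on a 4×4 grid needs at least three downward and three rightward moves, so 6 steps is the minimum, which matches the 6 time steps reported in Table 7 for the converged agents.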

Table 2 summarizes related research in quantum reinforcement learning, which shows that classical reinforcement learning can be enhanced with quantum computing; the literature reports that the enhanced models performed better than the classical ones. Over the last five years, research on quantum computing has grown significantly alongside the rapid growth of data, and promising quantum computing technologies are expected to change today's computing world. The field has attracted researchers at companies such as Google [34], IBM [35], and Microsoft [36], which are racing to develop quantum computers and have recognized the applicability of quantum computing to classical machine learning algorithms [37].

| Related work | OpenAI Gym environment | Performance | Algorithm used by the researchers |
|---|---|---|---|
| Yueh Hsiao et al. [30] | Acrobot and LunarLander | Converged faster | Proximal policy optimization (PPO) |
| Lockwood and Si [31] | CartPole-v1 and Acrobot | Not defined | Policy gradient with baseline |
| Lan [32] | Pendulum | Faster convergence | Soft actor-critic |
| Yun et al. [33] | CartPole-v0 | Not defined | Q-learning |
| Hu and Hu [14] | 2 × 3 frozen lake | Not defined | Deep actor-critic |

3.3. Variational Quantum Circuits

A parameterized quantum circuit (PQC) is a quantum circuit with tunable parameters that is used to accomplish mathematical tasks such as classification, approximation, and optimization [38]. To perform such tasks, the quantum circuit model operates in four simple steps.

3.3.1. Quantum State Preparation

This step encodes the input information into qubit quantum states, which the quantum circuit then processes.

3.3.2. Applying Quantum Entanglement

This step entangles the qubit states with controlled quantum gates, such as the CNOT gate, and rotates the qubits with parameterized quantum rotation gates. This block can be repeated in multiple layers, each with its own parameters, to improve the performance of the quantum circuit.

3.3.3. Quantum State Measurement

This step measures the processed qubit states and decodes the results into appropriate classical output information.

3.3.4. Parameter Optimization

This last step is carried out outside the quantum circuit (QC), on a classical computer. A conventional classical optimizer, such as Adam, updates the quantum circuit parameters to optimize the objective function of the algorithm. The updated circuit with the new parameters then repeats the computation from the first step with the next state as input. Like classical NNs, parameterized quantum circuits can approximate continuous functions, and they have therefore been widely applied in QML research [39–42].
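The classical optimization step needs gradients of measured expectation values with respect to the circuit parameters. One common way to obtain them, which PennyLane supports through the `diff_method="parameter-shift"` option of a QNode, is the parameter-shift rule; the text does not say which gradient method was used, so this is an assumed technique. The sketch uses a one-qubit circuit RX(θ)|0〉, whose ⟨Z⟩ = cos θ is known in closed form, and plain gradient descent in place of Adam to keep it short.

```python
import math


def expval_z_after_rx(theta):
    """<Z> for RX(theta)|0> = cos(theta/2)|0> - i sin(theta/2)|1>, which equals cos(theta)."""
    p0 = math.cos(theta / 2) ** 2
    p1 = math.sin(theta / 2) ** 2
    return p0 - p1


def parameter_shift_grad(f, theta, shift=math.pi / 2):
    """Parameter-shift rule for Pauli-rotation gates: df/dtheta = [f(theta+s) - f(theta-s)] / 2."""
    return (f(theta + shift) - f(theta - shift)) / 2


# Classical optimization loop: minimize the cost C(theta) = <Z> with plain gradient descent.
theta, learning_rate = 0.3, 0.5
for _ in range(50):
    theta -= learning_rate * parameter_shift_grad(expval_z_after_rx, theta)
print(theta, expval_z_after_rx(theta))  # theta approaches pi, <Z> approaches -1
```

Unlike a finite-difference estimate, the two shifted evaluations give the exact derivative for this gate family, which is why the rule is attractive when the circuit can only be evaluated, not differentiated symbolically.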

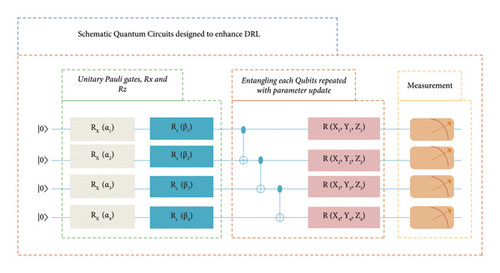

In the prototype circuit shown in Figure 2, the agent is initially in the state |0000〉. Taking this encoded state as input, parameterized Pauli rotation gates rotate each qubit about the x-axis and the z-axis. The entangling layer then entangles the qubits with one another. Finally, the measurement layer measures the expectation value of each qubit, which depends on the probabilities of measuring particular states and determines how much each state contributes to the cost function.
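The circuit prototype described above can be sketched without any quantum SDK by simulating the 16-amplitude state vector directly. This is a hypothetical reconstruction, not the authors' code: the exact gates of Figure 2 are not reproduced here, so the sketch assumes one RX and one RZ rotation per qubit per layer (following the x-axis and z-axis rotations in the text), a ring of CNOT gates as the entangling layer, and one ⟨Z⟩ expectation value per qubit read out as the Q value of one of the four actions. In PennyLane, the same circuit would typically be written with `qml.BasisState`, `qml.RX`, `qml.RZ`, `qml.CNOT`, and `qml.expval(qml.PauliZ(wire))`.

```python
import cmath
import math
import random

N_QUBITS = 4  # one qubit per bit of the 4x4 grid index


def basis_state(index):
    """State vector of |index> (16 amplitudes); wire 0 is the most significant bit."""
    psi = [0j] * (2 ** N_QUBITS)
    psi[index] = 1 + 0j
    return psi


def apply_single(psi, gate, wire):
    """Apply the 2x2 matrix ((a, b), (c, d)), given as (a, b, c, d), to one wire."""
    a, b, c, d = gate
    mask = 1 << (N_QUBITS - 1 - wire)
    out = psi[:]
    for i in range(len(psi)):
        if not i & mask:  # i has this wire in |0>; j is its partner with the wire in |1>
            j = i | mask
            out[i] = a * psi[i] + b * psi[j]
            out[j] = c * psi[i] + d * psi[j]
    return out


def rx(theta):
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, -1j * s, -1j * s, c)


def rz(theta):
    return (cmath.exp(-0.5j * theta), 0, 0, cmath.exp(0.5j * theta))


def apply_cnot(psi, control, target):
    """Flip the target bit on every basis state whose control bit is 1."""
    c_mask = 1 << (N_QUBITS - 1 - control)
    t_mask = 1 << (N_QUBITS - 1 - target)
    out = psi[:]
    for i in range(len(psi)):
        if i & c_mask:
            out[i] = psi[i ^ t_mask]
    return out


def expval_z(psi, wire):
    """<Z> on one wire: P(wire = 0) - P(wire = 1)."""
    mask = 1 << (N_QUBITS - 1 - wire)
    return sum((-1 if i & mask else 1) * abs(amp) ** 2 for i, amp in enumerate(psi))


def q_values(state_index, weights):
    """Basis-encode the state, apply each variational layer, and return one <Z> per wire."""
    psi = basis_state(state_index)
    for layer in weights:  # layer[wire] = (x_angle, z_angle)
        for wire, (x_angle, z_angle) in enumerate(layer):
            psi = apply_single(psi, rx(x_angle), wire)
            psi = apply_single(psi, rz(z_angle), wire)
        for wire in range(N_QUBITS):  # ring of CNOTs as the entangling layer
            psi = apply_cnot(psi, wire, (wire + 1) % N_QUBITS)
    return [expval_z(psi, w) for w in range(N_QUBITS)]


# Usage: 2 variational layers with random initial angles, agent at the start cell (state 0)
random.seed(0)
weights = [[(random.uniform(0, 2 * math.pi), random.uniform(0, 2 * math.pi))
            for _ in range(N_QUBITS)] for _ in range(2)]
values = q_values(0, weights)
greedy_action = max(range(N_QUBITS), key=lambda a: values[a])
```

Changing the outer length of `weights` to 3 or 5 gives the deeper circuits compared in the experiments; each extra layer adds 8 trainable angles in this particular sketch.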

3.4. Hybrid Classical-Quantum Deep Reinforcement Learning

The proposed hybrid framework with the designed VQC is viable on existing noisy intermediate-scale quantum (NISQ) machine learning platforms. PennyLane, provided by Xanadu, contains quantum machine learning libraries for simulating various quantum-enhanced machine learning tasks, and the developed framework addresses the VQC depth challenge by being hybridized with iterative parameter optimization (IPO) on a classical computer. Classical optimization takes place outside the quantum circuit and provides the loss function and gradient calculations used to update the parameters.

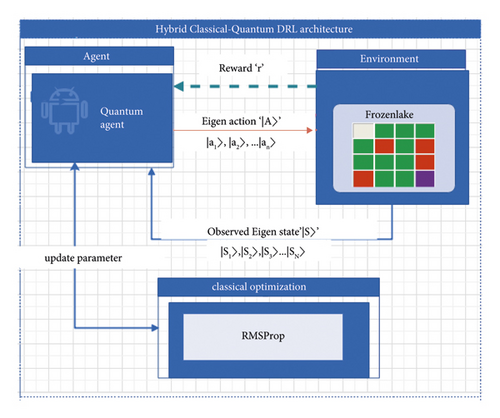

As shown in Figure 3, the agent chooses an ε-greedy action from the current state, executes it in the frozen lake environment, receives a reward, and moves to the next state. This observation is stored as a training sample in the experience replay memory, which keeps all previous observations as training data. We then draw a random batch of samples from this training data, so that the batch contains a mix of older and more recent samples. This batch is fed into both the quantum Q network and the target quantum network. The quantum Q network takes the current state and action from each data sample and predicts the Q value for that specific action; this is the predicted quantum Q value.
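The replay and action-selection steps just described can be sketched with the standard library. The class and function names are hypothetical, and the memory capacity is a placeholder because the paper does not list the replay-memory size N; the batch size of 5 comes from Table 6.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity buffer of (s, a, r, s_next, done) transitions; the oldest are dropped first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        """Uniform random minibatch, so old and recent transitions are mixed."""
        return random.sample(list(self.buffer), min(batch_size, len(self.buffer)))

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon):
    """With probability epsilon explore uniformly; otherwise exploit argmax_a Q(s, a)."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


random.seed(1)
memory = ReplayMemory(capacity=1000)  # placeholder capacity; N is not given in the paper
for s in range(8):
    action = epsilon_greedy([0.0, 0.1, 0.2, 0.3], epsilon=1.0)
    memory.push(s, action, 0.0, s + 1, False)
minibatch = memory.sample(5)  # batch size 5, as in Table 6
```

Sampling uniformly from the whole buffer breaks the correlation between consecutive transitions, which is the main reason experience replay stabilizes Q-learning with function approximators.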

The target quantum network takes the next state from each data sample and predicts the best Q value over all actions that can be taken from that state; this is the target quantum Q value. The predicted quantum Q value, the target Q value, and the observed reward from the data sample are used to calculate the loss that trains the quantum Q network. In this way, we extend standard deep reinforcement learning with a VQC that approximates the action-value function. Finally, we examine the policy reward under different hyperparameters of deep reinforcement learning to investigate the performance of PennyLane-based quantum deep Q-learning. For the test environment, we selected the standard OpenAI Gym [43] frozen lake environment.

Algorithm 1: Quantum-enhanced deep Q-learning (adapted from Huang [26] and Chen et al. [13]).

- Initialize replay memory M to capacity N
- Initialize the action-value quantum circuit Q with arbitrary parameters θ, and the target parameters θ⁻ ← θ
- For episode e = 1, 2, 3, …, E do
  - Initialize state s_1 from the state set S and encode it into a quantum state using basis encoding
  - For time step t = 1, 2, 3, …, T do
    - With probability ε, select a random action a_t
    - Otherwise, select a_t = argmax_a Q(s_t, a; θ) from the output of the quantum circuit
    - Execute action a_t and observe the reward r_t and the next state s_{t+1}
    - Store the transition (s_t, a_t, r_t, s_{t+1}) in replay memory M
    - Sample a random minibatch of transitions (s_b, a_b, r_b, s_{b+1}) from M
    - Set y_b = r_b if s_{b+1} is terminal; otherwise, y_b = r_b + γ max_{a′} Q(s_{b+1}, a′; θ⁻)
    - Perform a gradient descent step on (y_b − Q(s_b, a_b; θ))² with respect to θ
    - At predefined intervals, update θ⁻ ← θ
  - End for
- End for

3.4.1. Action Selection in Quantum Enhanced Deep Q-Network

Measuring the circuit repeatedly and averaging the outcomes produces an average value called the quantum expectation value. The agent uses these expectation values to select its action.

As a concrete example, suppose the quantum agent measures the expectation values of the state |1〉 ⊗ |0〉 ⊗ |0〉 ⊗ |0〉 (see Table 3), and each qubit is measured 500 times.

| Qubit state | \|1〉 | \|0〉 | \|0〉 | \|0〉 |
|---|---|---|---|---|
| Total number of repeated measurements | 500 | 500 | 500 | 500 |
| Number of measurements giving 1 | 330 | 400 | 350 | 190 |
| Number of measurements giving 0 | 170 | 100 | 150 | 310 |
| Probability of 1, P(1) | 0.66 | 0.8 | 0.7 | 0.38 |
| Probability of 0, P(0) | 0.34 | 0.2 | 0.3 | 0.62 |
| Expectation value | 0.66 | 0.8 | 0.7 | 0.62 |

Furthermore, the measurements on the n qubits can be executed concurrently, in parallel.
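The P(1) and P(0) rows of Table 3 follow directly from the counts. The sketch below reproduces them and also computes ⟨Z⟩ = P(0) − P(1), the Pauli-Z expectation value that a call such as PennyLane's `qml.expval(qml.PauliZ(wire))` estimates. Note that ⟨Z⟩ is a different quantity from the "Expectation value" row of Table 3, which lists, for each qubit, the larger of P(1) and P(0).

```python
def estimate_from_counts(ones, shots):
    """Return (P(1), P(0), <Z>) estimated from `ones` outcomes of 1 in `shots` repetitions."""
    p1 = ones / shots
    p0 = 1 - p1
    return p1, p0, p0 - p1  # Pauli-Z eigenvalues: +1 for |0>, -1 for |1>


# Counts of outcome 1 for the four qubits in Table 3 (500 shots each)
for wire, ones in enumerate([330, 400, 350, 190]):
    p1, p0, z = estimate_from_counts(ones, 500)
    print(f"qubit {wire}: P(1)={p1:.2f}  P(0)={p0:.2f}  <Z>={z:+.2f}")
```

Because the estimates come from a finite number of shots, each probability carries sampling error of roughly sqrt(p(1 − p)/shots), about 0.02 here.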

3.5. State Encoding

In parameterized quantum circuits (PQC), classical data must first be encoded, that is, converted into quantum states, before it can be processed. Many types of quantum encoding are used in quantum machine learning (QML) applications (see Schuld and Petruccione [46] for more information), including amplitude encoding, as used by Antipov et al. [47], computational basis encoding, angle encoding, and directional encoding. Encoding classical states into quantum states is an active research area, and different encoding methods can provide different quantum advantages for machine learning and natural language processing. Some encoding methods cannot yet be realized on real quantum computer hardware because they require large quantum circuits with complex gate interactions.

3.5.1. Computational Basis Encoding for Frozen Lake Environment

Here, we model the frozen lake environment with a four-qubit system, which has 2^4 = 16 possible basis states. Single-qubit Pauli rotation operations are used to encode the classical bits into these 16 quantum states.

| Decimal number | Classical binary number | Quantum basis state |
|---|---|---|
| 0 | 0000 | \|0〉 ⊗ \|0〉 ⊗ \|0〉 ⊗ \|0〉 |
| 1 | 0001 | \|0〉 ⊗ \|0〉 ⊗ \|0〉 ⊗ \|1〉 |
| 2 | 0010 | \|0〉 ⊗ \|0〉 ⊗ \|1〉 ⊗ \|0〉 |
| … | … | … |
| 13 | 1101 | \|1〉 ⊗ \|1〉 ⊗ \|0〉 ⊗ \|1〉 |
| 14 | 1110 | \|1〉 ⊗ \|1〉 ⊗ \|1〉 ⊗ \|0〉 |
| 15 | 1111 | \|1〉 ⊗ \|1〉 ⊗ \|1〉 ⊗ \|1〉 |

| State (decimal) | Quantum basis state | X-gate rotation angles αi (i = 1, 2, 3, 4) | Y-gate rotation angles βi (i = 1, 2, 3, 4) |
|---|---|---|---|
| 0 | \|0〉 ⊗ \|0〉 ⊗ \|0〉 ⊗ \|0〉 | (0, 0, 0, 0) | (0, 0, 0, 0) |
| 1 | \|0〉 ⊗ \|0〉 ⊗ \|0〉 ⊗ \|1〉 | (0, 0, 0, π) | (0, 0, 0, π) |
| … | … | … | … |
| 14 | \|1〉 ⊗ \|1〉 ⊗ \|1〉 ⊗ \|0〉 | (π, π, π, 0) | (π, π, π, 0) |
| 15 | \|1〉 ⊗ \|1〉 ⊗ \|1〉 ⊗ \|1〉 | (π, π, π, π) | (π, π, π, π) |

The three parameters (Xi, Yi, and Zi) of the general single-qubit unitary rotation operator are the trainable parameters that are optimized.
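The αi column of Table 5 can be generated from a state index as follows: each bit equal to 1 becomes a rotation angle of π, which turns |0〉 into |1〉 up to a global phase, and each 0 bit becomes an angle of 0. The sketch assumes RX rotations and checks the result through the measurement probability sin²(α/2); PennyLane also offers `qml.BasisState` for preparing a computational basis state directly. The function names are hypothetical.

```python
import math


def state_to_bits(index, n_qubits=4):
    """Binary digits of the grid index, most significant qubit first (as in Table 4)."""
    return [(index >> (n_qubits - 1 - q)) & 1 for q in range(n_qubits)]


def encoding_angles(index, n_qubits=4):
    """Rotation angle per qubit: pi flips |0> to |1> (up to a phase); 0 leaves it unchanged."""
    return [math.pi * bit for bit in state_to_bits(index, n_qubits)]


def prob_one_after_rx(angle):
    """P(measure 1) after RX(angle)|0>, which is sin^2(angle / 2)."""
    return math.sin(angle / 2) ** 2


for idx in (0, 1, 14, 15):
    print(idx, state_to_bits(idx), [round(prob_one_after_rx(a)) for a in encoding_angles(idx)])
```

Because every angle is 0 or π, the encoded state is exactly one computational basis state, so the measured bits reproduce the binary column of Table 4 with certainty.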

4. Experimental Setup and Results

4.1. Experimental Setup

The quantum-enhanced deep reinforcement learning (QDRL) framework is developed in the Python programming language. All programming, experimental visualization, and development in this study were done in Python 3; we used Python 3.7.8 to access the PennyLane platform. We used Anaconda to manage packages and control the environment, and Jupyter Notebook as the development environment for writing and running the Python code. Matplotlib, which is commonly used together with NumPy, was used for plotting; it provides functions for line plots, pie charts, scatter plots, histograms, and others. Finally, we discuss the overall outcome of the study with experiment-based interpretations. All hyperparameters used in this study are listed in Table 6.

| Hyperparameter | Value assigned |
|---|---|
| Number of qubits | 4 |
| Number of layers | 2, 3, 5 |
| Epsilon | 1 |
| Batch size | 5 |
| Maximum time steps | 3000 |
| Maximum episodes | 1000 |
| Gamma | 0.99 |
| Alpha | 0.1, 0.2, 0.3, 0.4 |
| Learning rate | 0.01 |
| eps | 1e−08 |

4.2. Experimental Result

We use the frozen lake environment to evaluate the performance of DRL algorithms on quantum circuits. Previous work, such as Hu and Hu [14], used a grid-based environment similar to the frozen lake but with a smaller (2 × 3) grid. In this study, we use the standard 4 × 4 frozen lake grid and increase the number of qubits to 4, which gives 2^4 = 16 basis states. We simulate the designed circuits numerically with PennyLane [44]. The numerical simulator lets us explore the prospects of using quantum computing to solve DRL tasks and the optimality of the solutions. Extending the earlier work of Hu and Hu [14], we present algorithms that improve on its results. We apply the learning techniques to the OpenAI Gym [43] frozen lake environment, which has more states and uses more layers than the previous work [14]. To verify the trainability of the framework, we run 1000 episodes of the standard frozen lake experiment. In the training curves, the horizontal axis represents the episode of the enhanced learning process, and the vertical axis represents the reward returned to the agent for its actions in the environment. The experiment examines the trainability of the enhanced DRL and the effect of the number of layers on the enhanced DRL framework. According to the experiment, the quantum-enhanced DRL is trainable in all cases, but the time it takes to converge to the optimal value differs for different numbers of quantum layers.
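The abstract reports that the agents with 2, 3, and 5 layers converge to a reward of 0.95 after episodes 526, 397, and 72, respectively, but the exact convergence criterion is not stated. The sketch below shows one hypothetical way to extract such a number from a per-episode reward log: the first episode that starts a run of `window` consecutive episodes at the target reward. Both the window length and the tolerance are assumptions, and the toy log is illustrative, not the paper's data.

```python
def convergence_episode(rewards, target=0.95, window=50, tol=1e-9):
    """Index of the first episode that starts a run of `window` episodes all at >= target.

    Returns None when no such run exists in the log.
    """
    run = 0
    for episode, reward in enumerate(rewards):
        run = run + 1 if reward >= target - tol else 0
        if run == window:
            return episode - window + 1
    return None


# Toy log (not the paper's data): 10 failing episodes, then the reward settles at 0.95.
toy_rewards = [-0.1] * 10 + [0.95] * 60
print(convergence_episode(toy_rewards))  # 10
```

Requiring a sustained run, rather than the first episode that hits 0.95, keeps a single lucky episode from being counted as convergence.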

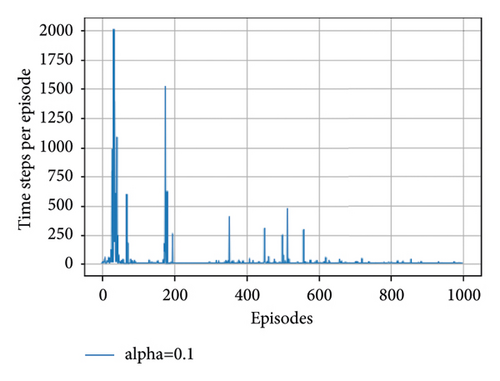

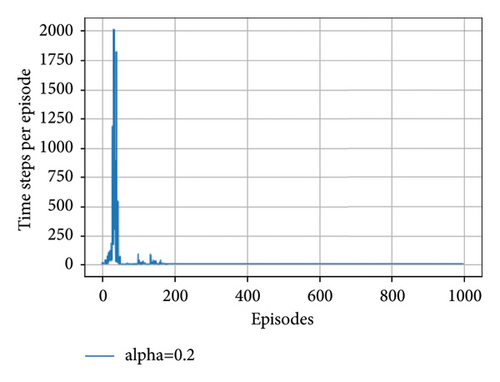

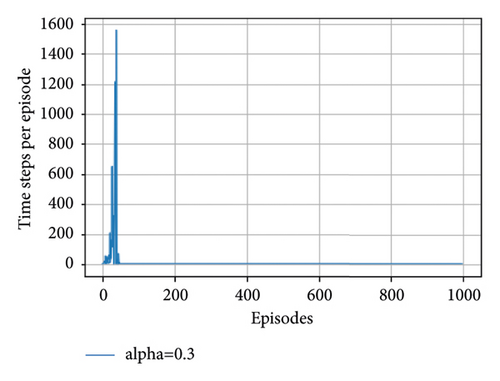

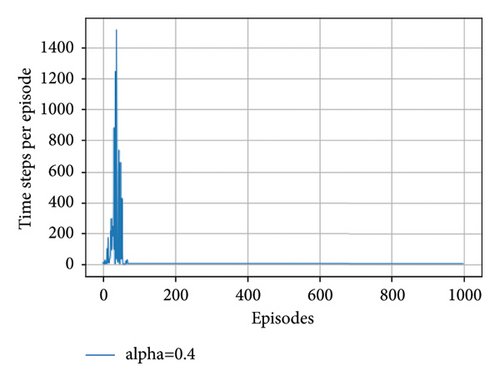

Regarding performance under different learning rates (alpha), the agent trained with α = 0.1 reaches a maximum of 2012 time steps. When the alpha value is 0.1 or smaller, the agent explores much more but learns very slowly, so training converges to the optimal state very slowly.

Figure 5 details the learning results of the enhanced framework. We recorded every learning epoch to verify the outcomes of the enhanced DRL algorithm with learning rates (alpha values) of 0.1, 0.2, 0.3, and 0.4 (Figures 5(a)–5(d), respectively). From these results, we conclude that the greater the learning rate in quantum deep reinforcement learning, the fewer time steps the agent takes to move from the start point to the goal state. QDRL shows advantages with a learning rate (alpha) of 0.4. We attribute these advantages to the quantum representation, which uses the superposition principle of quantum mechanics and carries out updates via quantum parallelism. Quantum parallelism is expected to become more useful in the near future, once quantum devices can be used instead of simulations on conventional computers.

Table 7 shows that when the agent obtains the reward of 0.95, it takes 6 time steps, which is the number of time steps the agent needs once it has converged to the optimal state. For learning rates from 0.2 to 0.4, the number of time steps over the last 200 episodes is the same (6), and the reward converges to the optimal value of 0.95.

| Metric | Alpha | Episode 799 | Episode 849 | Episode 899 | Episode 949 | Episode 999 |
|---|---|---|---|---|---|---|
| Reward | 0.1 | −0.24 | −0.24 | −0.26 | 0.95 | 0.95 |
| Reward | 0.2 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Reward | 0.3 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Reward | 0.4 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Timesteps | 0.1 | 8 | 8 | 7 | 6 | 6 |
| Timesteps | 0.2 | 6 | 6 | 6 | 6 | 6 |
| Timesteps | 0.3 | 6 | 6 | 6 | 6 | 6 |
| Timesteps | 0.4 | 6 | 6 | 6 | 6 | 6 |
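The six-step episode length in the table can be sanity-checked on the default 4 × 4 FrozenLake map from OpenAI Gym. The sketch below assumes deterministic moves (the non-slippery variant) and a reward of −0.01 per non-terminal move plus +1 on reaching the goal. This reward scheme is an assumption, not stated in this section; it is chosen because it is one scheme under which a six-move episode returns exactly 0.95. The function names are hypothetical.

```python
from collections import deque

# Default 4x4 FrozenLake map (S start, F frozen, H hole, G goal)
LAKE = ["SFFF",
        "FHFH",
        "FFFH",
        "HFFG"]

def shortest_path_length(lake):
    """Breadth-first search over non-hole cells; minimum number of moves from S to G."""
    rows, cols = len(lake), len(lake[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if lake[r][c] == "S")
    frontier, seen = deque([(start, 0)]), {start}
    while frontier:
        (r, c), dist = frontier.popleft()
        if lake[r][c] == "G":
            return dist
        for dr, dc in ((0, -1), (1, 0), (0, 1), (-1, 0)):  # left, down, right, up
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in seen and lake[nr][nc] != "H"):
                seen.add((nr, nc))
                frontier.append(((nr, nc), dist + 1))
    return None  # goal unreachable

def episode_return(n_moves, goal_reward=1.0, step_penalty=-0.01):
    """Assumed reward shaping: step_penalty per non-terminal move, goal_reward on the last."""
    return goal_reward + step_penalty * (n_moves - 1)

moves = shortest_path_length(LAKE)
print(moves, round(episode_return(moves), 2))
```

It prints 6 and 0.95: the start and goal cells are 3 rows and 3 columns apart, so no path can be shorter than 6 moves, and a hole-free path of that length exists.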

5. Conclusions

Although the main focus of this study is enhancing DRL with quantum machine learning and analyzing the learning performance of the resulting framework, we have also studied the background of this technology, its applicability, and the need to apply it to current machine learning models. This can be viewed from two sides: the theoretical side and the practical side. On the theoretical side, we found that the motivation behind this new machine learning paradigm is to obtain improved learning performance. The study also presents the state of the art of quantum science in the area of AI and encourages the enhancement of classical ML technology. In particular, quantum computations are represented differently from classical computations, and various features of quantum computation are still evolving.

Machine learning is one of the fields currently influenced by the theory of quantum computation. Various demonstrations have confirmed that quantum mechanical phenomena such as superposition, entanglement, and quantum interference can improve current machine learning approaches, including supervised, unsupervised, and reinforcement learning, by providing sustained speedups in data processing. In this study, we also analyzed the need for classical data and the methods of encoding it into quantum states so that it can be processed on cloud-based quantum machine learning resources. Before quantum processing units can access and process the data, it must be encoded into a quantum state, and the appropriate encoding varies with the specific problem to be solved.

Overall, we found that, because existing quantum devices are small and intermediate scale and offer only a small number of qubits, researchers need encoding techniques that can handle problems within these limited resources. Circuit complexity must also be considered: the number of quantum circuits, and the number of parallel quantum operations required to realize the encoding, should be kept to a minimum. The best-known quantum data encoding is computational basis encoding, which maps classical data to a quantum basis state. Quantum phenomena such as superposition and entanglement can then process all encoded states in parallel. Finally, we confirmed that computational basis encoding is not efficient for quantum deep reinforcement learning (QDRL) problems that need a huge number of qubits, but it is efficient for tasks that can be solved with a smaller number of qubits. The standard DRL task can be enhanced with a small number of qubits, because the trainability of the framework can be investigated on the standard frozen lake, whose state space is a 4 × 4 grid.
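The resource argument above can be made concrete with a little arithmetic. The sketch counts the qubits needed to basis-encode a discrete state index (as for the 4 × 4 frozen lake) versus a hypothetical continuous observation with 4 features discretized to 8 bits each, and estimates the memory a classical state-vector simulator would need at 16 bytes per complex amplitude. The 4-feature, 8-bit task and the function names are illustrative assumptions.

```python
import math

def qubits_for_discrete_states(n_states):
    """Basis-encode a state index in binary: ceil(log2 N) qubits."""
    return max(1, math.ceil(math.log2(n_states)))

def qubits_for_discretised_features(n_features, bits_per_feature):
    """Basis-encode each continuous feature as a b-bit integer: one qubit per bit."""
    return n_features * bits_per_feature

def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for 2**n complex128 amplitudes in a classical simulator."""
    return (2 ** n_qubits) * bytes_per_amplitude

frozen_lake = qubits_for_discrete_states(16)        # 4 x 4 grid
continuous = qubits_for_discretised_features(4, 8)  # hypothetical 4 features at 8 bits
for name, n in (("frozen lake", frozen_lake), ("4 features x 8 bits", continuous)):
    print(f"{name}: {n} qubits, {statevector_bytes(n):,} bytes to simulate")
```

Here the frozen lake needs 4 qubits (256 bytes of amplitudes), while the discretized 4-feature state needs 32 qubits (64 GiB), which illustrates why basis encoding suits tasks that need few qubits.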

On the practical side, the experimental outcomes validate the feasibility of the enhanced deep Q-learning framework and verify its learning performance for agents with different numbers of quantum layers and different learning rates. The experiments show that both the number of layers and the learning rate affect the learning performance of an agent. Once quantum-enhanced deep reinforcement learning runs on a real quantum computer, it can be used efficiently to improve quantum robot learning for important tasks. Overall, we have verified the applicability of quantum computation to machine learning, specifically to deep reinforcement learning, and we expect further exciting results once this enhanced deep Q-learning runs on fully quantum computers in the near future.

6. Recommendations

Quantum-enhanced machine learning in general, and quantum-enhanced deep reinforcement learning in particular, are new quantum-computing-inspired learning frameworks with both theoretical and experimental gaps. Most of the experimental gaps can only be closed once more quantum resources are available than today. Companies such as IBM, Google, and Xanadu are addressing this problem by providing cloud-based resources. Although these resources are sufficient for some simple problems, they are not adequate for complex problems such as Atari games and the game of Go. Every component of reinforcement learning, including state representation (preparation and encoding), policy evaluation and policy iteration, action selection, and optimization, needs theoretical and experimental study before it can be enhanced with quantum computing technology. For state representation, we applied basis encoding to encode classical states into quantum states, and we recommend evaluating the framework's performance with other encoding techniques such as amplitude and angle encoding. This paper mostly discussed deep reinforcement learning problems with discrete state spaces; we further recommend extending the enhanced framework efficiently to DRL problems with continuous state spaces.
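As a starting point for the recommended alternatives, the sketch below builds, in pure Python, the state vectors produced by angle encoding and amplitude encoding of a classical feature vector. The function names and the example observation are hypothetical; on hardware or in PennyLane these states would be prepared by gates (PennyLane provides `AngleEmbedding` and `AmplitudeEmbedding` templates for this purpose).

```python
import math

def kron(u, v):
    """Tensor product of two state vectors (u's qubits become the more significant ones)."""
    return [a * b for a in u for b in v]

def angle_encode(features):
    """Angle encoding: x -> RY(x)|0> = cos(x/2)|0> + sin(x/2)|1>, one qubit per feature."""
    state = [1.0]
    for x in features:
        state = kron(state, [math.cos(x / 2), math.sin(x / 2)])
    return state

def amplitude_encode(features):
    """Amplitude encoding: zero-pad to a power of two and L2-normalise; log2(N) qubits."""
    size = 1
    while size < len(features):
        size *= 2
    padded = list(features) + [0.0] * (size - len(features))
    norm = math.sqrt(sum(x * x for x in padded))
    if norm == 0:
        raise ValueError("cannot amplitude-encode the zero vector")
    return [x / norm for x in padded]

obs = [0.1, -0.4, 0.7]  # hypothetical continuous observation
print(len(angle_encode(obs)), len(amplitude_encode(obs)))  # amplitudes in each state
```

Angle encoding uses one qubit per feature, whereas amplitude encoding packs N values into ⌈log₂ N⌉ qubits at the cost of a more involved state-preparation circuit, a trade-off that matters for the continuous-state extension recommended above.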

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

The research was funded by Bule Hora University, Informatics College.

Open Research

Data Availability

The data used to support the findings of the study can be obtained from the corresponding author upon request.