Adaptive Robust Control for Uncertain Systems via Data-Driven Learning

Abstract

Although the robust control problem has been studied in an offline manner, solving it online remains difficult, especially for uncertain systems. In this paper, a novel approach based on online data-driven learning is proposed to address the robust control problem for uncertain systems. To this end, the robust control problem of the uncertain system is first transformed into an optimal control problem of the nominal system by selecting an appropriate value function that accounts for the uncertainties, regulation, and control. Then, a data-driven learning framework is constructed, where the Kronecker product and vectorization operations are used to reformulate the derived algebraic Riccati equation (ARE). To obtain the solution of this ARE, an adaptive learning law is designed, which helps to guarantee the convergence of the estimated solution. The closed-loop system stability and convergence are proved. Finally, simulations are given to illustrate the effectiveness of the method.

1. Introduction

Most existing control techniques have been developed under the assumption that the controlled plant has no dynamical uncertainties. In practical control systems, however, external disturbances and/or model uncertainties are common, and the system lifetime can be affected by them. These uncertainties must be taken into account in the controller design so that the closed-loop system responds well even in their presence. We say a controller is robust if it works even though the practical system deviates from its nominal model. This gives rise to the robust control design problem, which has been widely studied during the past decades [1, 2]. Recent research [1, 3] shows that the robust control problem can be addressed by applying the optimal control approach to the nominal system. Nevertheless, the online solution of the derived optimal control problem is not handled in [1].

Many approaches to optimal control have been presented recently [4, 5]. For linear systems, the optimal control problem reduces to the linear quadratic regulator (LQR) problem, from which the optimal control law can be obtained. Dynamic programming (DP) has long been used to study the optimal control problem [6]; however, DP has an obvious disadvantage: as the dimensions of the system state and control input increase, the amount of computation and storage grows dramatically, which is known as the “curse of dimensionality.” To overcome this problem, neural networks (NNs) are used to approximate the solution of the optimal control problem [7], which has led to recent research on adaptive/approximate dynamic programming (ADP). With ADP, the otherwise intractable optimal control problem can be tackled, and the solution of the optimal cost function can be obtained online [8].

Recently, robust control design based on the adaptive critic idea has gradually become one of the research hotspots in the field of ADP. Many methods have been proposed, which are collectively referred to as robust adaptive critic control. A basic approach is to transform the problem so as to establish a close relationship between robustness and optimality [9]. In this literature, the closed-loop system is generally shown to be uniformly ultimately bounded (UUB). These results show that the ADP method is suitable for the robust control design of complex systems in uncertain environments. Since many previous ADP results do not focus on the robust performance of the controller, the emergence of robust adaptive critic control greatly expands the application scope of ADP methods. Moreover, given their common ground in dealing with system uncertainties, self-learning optimization methods that combine ADP with sliding mode control provide a new research direction for robust adaptive critic control [10]. In addition, the robust ADP method is another important achievement in this field. It is worth mentioning that the application of robust ADP methods in power systems has attracted special attention [11], leading to a higher application value in industrial systems.

Based on the above facts, we develop a robust control design for uncertain systems using an online data-driven learning method. For this purpose, the robust control problem of the uncertain system is first transformed into an optimal control problem of the nominal system with an appropriate cost function. Then, a data-driven technique is developed, where the Kronecker product and vectorization operations are used to reformulate the derived ARE. To solve this ARE, a novel adaptive law is designed, with which the solution of the ARE can be approximated online. Simulations are given to indicate the validity of the developed method.

The main contributions of this paper are as follows:

(1) To address the robust control problem, we transform the robust control problem of uncertain systems into an optimal control problem of the nominal system. This provides an approach to solving the robust control problem.

(2) The Kronecker product and vectorization operations are used to reformulate the derived ARE, which rewrites the original ARE in a linear parametric form. This gives a new pathway to solving the ARE online.

(3) A newly developed adaptation algorithm driven by the parameter estimation errors is used to learn the unknown parameters online. The convergence of the estimated parameters to their true values can be guaranteed.

This paper is organized as follows. In Section 2, we introduce the robust control problem and transform it into an optimal control problem. In Section 3, we design an ADP-based data-driven learning method to solve the derived ARE online, where the Kronecker product and vectorization operations are used. Section 4 gives simulation results to illustrate the effectiveness of the proposed method. Conclusions are stated in Section 5.

2. Preliminaries and Problem Formulation

It should also be noted that the upper bound F of the uncertainties ω(d) is included in the cost function (4) to account for their effects. The following lemma summarizes the equivalence between the robust control of system (1) or (2) and the optimal control of system (3) with cost function (4).

Lemma 1 (see [9]). If the solution to the optimal control problem of the nominal system (3) with cost function (4) exists, then it is a solution to the robust control problem for system (1) or (2).

Lemma 1 exploits the relationship between robust control and optimal control and thus provides a new way to address the robust control problem.
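To make the equivalence in Lemma 1 concrete, the following Python sketch works through a small numerical case. It assumes the standard matched-uncertainty setting, which is not spelled out in this section: the uncertain system is ẋ = A(d)x + Bu with A(d) − A(d0) = Bω(d) and ω(d)ᵀω(d) ≤ F, and the nominal cost integrand is xᵀFx + xᵀx + uᵀu. The matrices, the parameter range, and the helper `solve_are` are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def solve_are(A, B, Q, R):
    """Solve A'P + PA + Q - P B R^-1 B' P = 0 via the stable invariant
    subspace of the Hamiltonian matrix (hypothetical helper, NumPy only)."""
    n = A.shape[0]
    Rinv = np.linalg.inv(R)
    H = np.block([[A, -B @ Rinv @ B.T], [-Q, -A.T]])
    eigvals, eigvecs = np.linalg.eig(H)
    stable = eigvecs[:, eigvals.real < 0]      # the n stable eigenvectors
    X1, X2 = stable[:n, :], stable[n:, :]
    P = np.real(X2 @ np.linalg.inv(X1))
    return (P + P.T) / 2                       # symmetrize against round-off

# Illustrative uncertain system: A(d) = A0 + B w(d), with w(d) = [d, d].
A0 = np.array([[0.0, 1.0], [1.0, 0.0]])        # nominal matrix A(d0), d0 = 0
B = np.array([[0.0], [1.0]])
d_max = 10.0                                   # assumed bound |d| <= d_max

def A_of(d):
    return A0 + B @ np.array([[d, d]])

# Upper bound F >= w(d)' w(d) for every admissible d.
F = d_max**2 * np.array([[1.0, 1.0], [1.0, 1.0]])
Q = F + np.eye(2)                              # uncertainty + regulation terms
R = np.eye(1)                                  # control term

P = solve_are(A0, B, Q, R)
K = np.linalg.inv(R) @ B.T @ P                 # nominal optimal gain, u = -K x

# The nominal optimal gain should stabilize every admissible A(d).
worst_real_part = max(
    np.linalg.eigvals(A_of(d) - B @ K).real.max()
    for d in np.linspace(-d_max, d_max, 41)
)
are_residual = np.abs(A0.T @ P + P @ A0 + Q - P @ B @ K).max()
```

In this sketch the gain is computed from the nominal matrix A0 only, yet the closed-loop eigenvalues stay in the left half-plane over the whole assumed range of d, which is the behaviour Lemma 1 describes.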

3. Online Solution to Robust Control via Data-Driven Learning

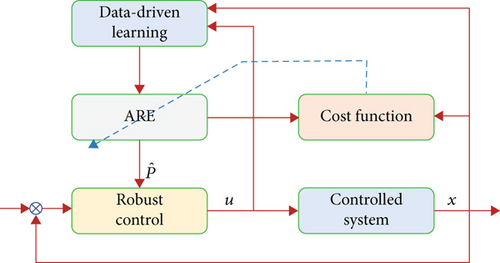

This section proposes a data-driven learning method to solve the robust control problem; the schematic of the proposed control method is given in Figure 1.

where ϑ = [2(x ⊗ Ax), −vec(R) ⊗ (x ⊗ x)] and ϕ = (x ⊗ x)vec(Qᵀ).
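The term 2(x ⊗ Ax) in ϑ is consistent with a standard identity: for a symmetric matrix P, the quadratic form xᵀ(AᵀP + PA)x is linear in vec(P) and equals 2(x ⊗ Ax)ᵀvec(P). The Python sketch below checks this numerically on random data; the dimension, the random matrices, and the helper `vec` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
A = rng.standard_normal((n, n))
x = rng.standard_normal(n)
S = rng.standard_normal((n, n))
P = S + S.T                      # arbitrary symmetric matrix

def vec(M):
    """Column-stacking vectorization."""
    return M.reshape(-1, order="F")

# Quadratic forms written directly ...
quad_P = x @ P @ x
quad_lyap = x @ (A.T @ P + P @ A) @ x

# ... and as linear functions of vec(P) via Kronecker products.
kron_P = np.kron(x, x) @ vec(P)
kron_lyap = 2 * np.kron(x, A @ x) @ vec(P)
```

Because the unknown matrix appears only through vec(P), terms of this kind can be collected into a regressor that multiplies an unknown parameter vector, which is what makes a linear parametric form possible.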

3.1. Online Solution of Robust Control

For the adaptive law (16), the auxiliary vector M in (14), which is built from ϑ and the other measurable signals using (15), contains information on the parameter estimation error. Thus, M can be used to drive the parameter estimation. Consequently, the parameter estimate is updated using the estimation error extracted from the measurable system states x. This adaptive algorithm therefore clearly differs from the gradient descent algorithms used elsewhere in the ADP literature.

Based on this fact, we obtain the following lemma.

Lemma 2 (see [13, 14]). Assume that the variable ϑ provided in (12) is persistently excited (PE). Then the matrix Y given in (12) is positive definite, which means that λmin(Y) > σ > 0 for some positive constant σ.

Lemma 2 establishes the positive definiteness of the matrix Y when ϑ is PE. The convergence of the proposed adaptive learning law (16) can then be summarized as follows.
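The role of the PE condition can be illustrated numerically. The Python sketch below assumes that Y is generated by a stable first-order filter of ϑϑᵀ with gain ℓ, a common construction that is an assumption here rather than a quotation of (12), and compares the smallest eigenvalue of Y for a PE regressor and for a regressor whose components are linearly dependent. Both regressors and the helper `filtered_gram` are hypothetical.

```python
import numpy as np

def filtered_gram(regressor, ell=1.0, dt=1e-3, n_steps=10_000):
    """Forward-Euler integration of dY/dt = -ell*Y + theta theta^T
    (assumed filter form), starting from Y(0) = 0."""
    Y = np.zeros((2, 2))
    for k in range(n_steps):
        th = regressor(k * dt)
        Y = Y + dt * (-ell * Y + np.outer(th, th))
    return Y

def pe_regressor(t):
    # Two independent sinusoidal components: persistently excited.
    return np.array([np.sin(t), np.cos(t)])

def non_pe_regressor(t):
    # Second component is a multiple of the first: not persistently excited.
    return np.array([np.sin(t), 2.0 * np.sin(t)])

lam_pe = np.linalg.eigvalsh(filtered_gram(pe_regressor)).min()
lam_non_pe = np.linalg.eigvalsh(filtered_gram(non_pe_regressor)).min()
```

With the PE regressor the smallest eigenvalue of Y stays bounded away from zero, whereas with the dependent regressor Y has rank one and its smallest eigenvalue is numerically zero, so no bound σ > 0 exists.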

Theorem 3. Consider (11) with the adaptive learning law (16). If the variable ϑ provided in (11) satisfies the PE condition, then the estimation error converges to the origin.

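Proof. Choose a Lyapunov function of the parameter estimation error. Under the PE condition, Lemma 2 gives λmin(Y) > σ > 0, so the time derivative of this function along (16) is negative definite, and the estimation error converges to the origin.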

The step-by-step implementation of the proposed learning algorithm is given as follows.

Algorithm 1: Step-by-step implementation of the online robust control solution for uncertain systems.

1) (Initialization): set the initial parameter estimate and the gains κ, ℓ for the adaptive learning law (16)

2) (Measurement): measure the system input/output data and construct the regressors ϕ, ϑ in (10) and (11)

3) (Online adaptation): compute Y, N, and M, and update the unknown parameter with (16) to obtain the control u

4) (Apply control): apply the derived output-feedback control u to the system
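The adaptation steps above can be sketched in Python on a toy identification problem. Several things are assumed for illustration and are not taken from (14)–(16): the linear parametric model ϕ = ϑᵀΘ, the filters Ẏ = −ℓY + ϑϑᵀ and Ṅ = −ℓN + ϑϕ, the auxiliary vector M = N − YΘ̂, and the update law dΘ̂/dt = κM. The regressor, the true parameter, and the gain values are hypothetical, and step 4 is omitted because no plant is simulated.

```python
import numpy as np

theta_true = np.array([1.5, -0.7])      # unknown parameters (illustrative)
theta_hat = np.zeros(2)                 # step 1: initial estimate
kappa, ell = 10.0, 1.0                  # step 1: learning gain and filter gain
dt, n_steps = 1e-3, 10_000              # forward-Euler step and horizon (10 s)

Y = np.zeros((2, 2))                    # filtered version of vartheta vartheta^T
N = np.zeros(2)                         # filtered version of vartheta * phi
for k in range(n_steps):
    t = k * dt
    # Step 2: measure data and build the regressors
    # (assumed model: phi = vartheta^T theta_true).
    vartheta = np.array([np.sin(t), np.cos(t)])
    phi = vartheta @ theta_true
    # Step 3: auxiliary vector M carries the estimation error, since
    # N = Y theta_true implies M = Y (theta_true - theta_hat).
    M = N - Y @ theta_hat
    theta_hat = theta_hat + dt * kappa * M
    # Advance the filters for the next step.
    Y = Y + dt * (-ell * Y + np.outer(vartheta, vartheta))
    N = N + dt * (-ell * N + vartheta * phi)

final_error = np.linalg.norm(theta_true - theta_hat)
```

In this sketch the update is driven by M, which is proportional to the parameter error itself rather than to the gradient of an instantaneous residual; with a PE regressor the estimate converges exponentially to the true parameter.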

Remark 4. The adaptive learning law (16) designed above is driven by the estimation error. To this end, the control input u and system states x are used, which clearly differs from the existing results [15]. In particular, the two operations vec(⋅) and ⊗ are applied to the derived ARE, which helps to realize online learning. Consequently, faster convergence can be achieved compared with previously designed adaptive laws based on the gradient method.

Remark 5. Some ADP methods have been applied successfully to the robust control problem. However, most existing ADP techniques focus on the H-infinity control problem. In the robust control problem considered in this paper, the uncertain parameter d enters the system matrix as A(d), so the system can be regarded as containing unmodeled dynamics. To obtain the bound of the uncertain term, the difference A(d) − A(d0) must be evaluated, and this bound is used in the cost function (4). If the system dynamics were completely unknown, the uncertainty bound could not be used in the cost function as intended. Hence, the system matrix must be known in this paper; future work will try to solve the output-feedback robust control problem under completely unknown dynamics.

3.2. Stability Analysis

To complete the stability analysis, we make the following assumption.

Assumption 6. The system matrices are bounded, i.e., ‖A‖ ≤ bA and ‖B‖ ≤ bB for constants bA, bB > 0, and the estimated matrix P̂ is bounded, i.e., ‖P̂‖ ≤ bP for a constant bP > 0.

In fact, the above assumption is not stringent in practical systems and has been widely used in many results [13, 14, 16].

The main result can now be stated as follows.

Theorem 7. Consider the system (3) with the adaptive learning law (16). If the variable ϑ is PE, then the parameter estimation error converges to zero, and the derived control converges to the optimal control, i.e., ‖u − u∗‖⟶0.

Proof. Consider a Lyapunov function as

From (17), we have

Then, the corresponding derivative can be obtained from systems (3) and (19) as

Thus, based on (21) and (22), we have

Then, the parameters Γ1 and K1 can be chosen to fulfil the following conditions

Therefore, we can rewrite (23) as

Thus, J⟶0 as t⟶∞ by the Lyapunov theorem, and hence the estimation error converges to zero. Consequently, the error between u and u∗ can be obtained as

This implies that the applied control u converges to the optimal control u∗, i.e., ‖u − u∗‖⟶0. This completes the proof.☐
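As a sketch of why convergence of the estimate carries over to the control, assume that both controls take the standard LQR state-feedback form, u = −R⁻¹BᵀP̂x and u∗ = −R⁻¹BᵀP∗x. This form is an assumption here and is not quoted from the derivation. With the bound bB from Assumption 6 and a bounded state x, the control error is then bounded by the matrix estimation error:

```latex
u - u^{*} = -R^{-1} B^{\top} \bigl(\hat{P} - P^{*}\bigr) x,
\qquad
\|u - u^{*}\| \le \|R^{-1}\| \, b_B \, \|\hat{P} - P^{*}\| \, \|x\| \longrightarrow 0
\quad \text{as } \hat{P} \to P^{*}.
```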

4. Simulation

4.1. Example 1: Second-Order System

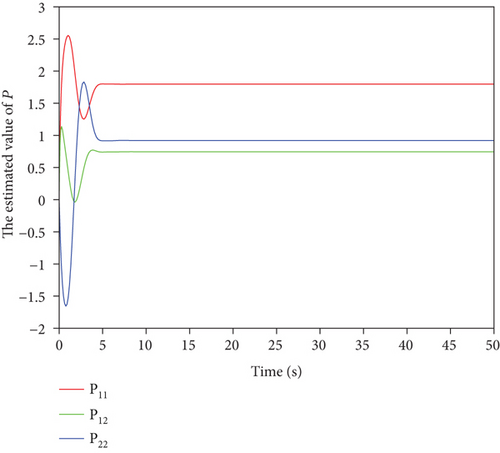

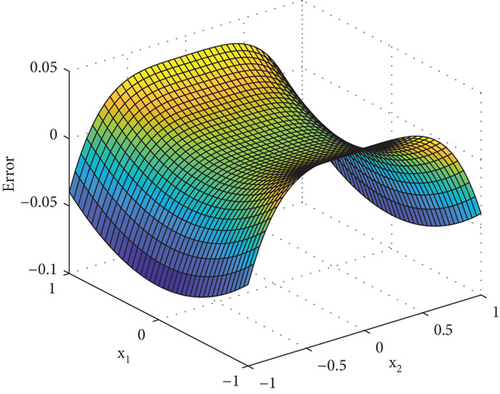

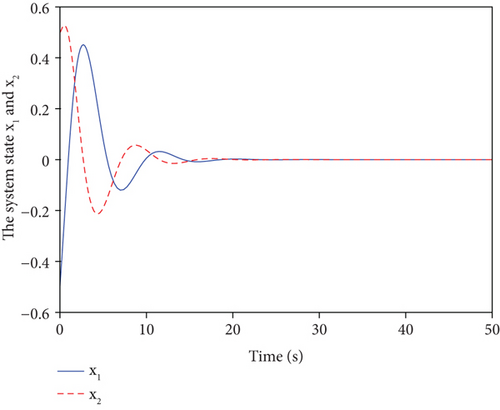

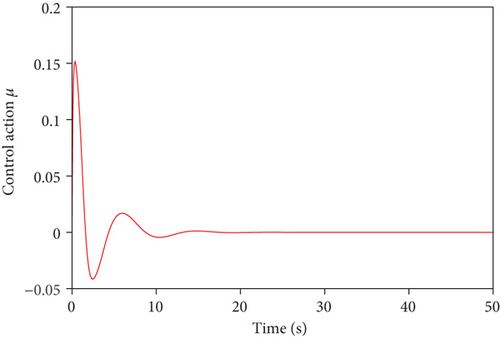

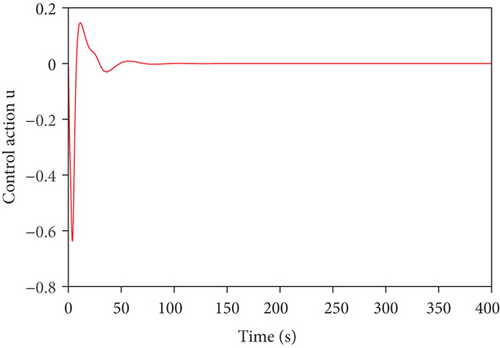

Figure 2 shows the estimate of the matrix P obtained with the online adaptive learning law (16); compared with the ideal solution in (31), the estimated solution converges to the optimal solution P∗. This is also seen in Figure 3, which shows the norm of the estimation error. This good convergence contributes to the rapid convergence of the system states, as seen in Figure 4, where the system states are bounded and smooth. Since the estimate converges quickly to P∗, the system response is also fast. The corresponding control input, given in Figure 5, is bounded.

4.2. Example 2: Power System Application

Here, TG = 5 (Hz/MW) is the time of the governor, Tt = 10 (s) denotes the time of the turbine model, Tg = 10 (Hz/MW) is the time of the generator model, Fr = 0.5 (s) indicates the feedback regulation constant, Kt = 1 (s) is the gain constant of the turbine model, and Kg = 1 (s) is the gain constant of the generator model.

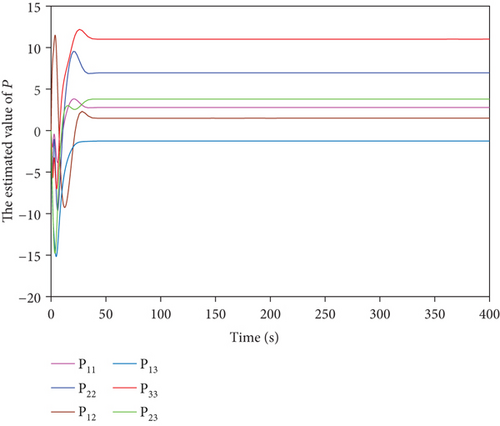

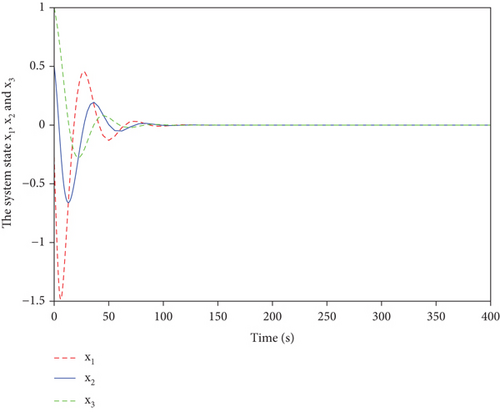

Figure 6 shows the convergence of the estimated matrix P; compared with the offline solution given in (33), the estimated solution converges to the optimal solution P∗, which in turn shapes the system state response. Figure 7 gives the system state response, which is smooth and bounded. The system control input is given in Figure 8.

5. Conclusion

In this paper, an online data-driven ADP method is proposed to solve the robust control problem for continuous-time systems with uncertainties. The robust control problem is first transformed into an optimal control problem. A new online ADP scheme is then introduced to obtain the solution of the ARE using the vectorization operator and the Kronecker product. The closed-loop system stability and the convergence of the robust control solution are analyzed. Simulation results are presented to validate the effectiveness of the proposed algorithm. It is worth noting that the results hold under the matched uncertainty condition. In future work, we will extend the proposed idea to the robust tracking control problem and carry out practical experimental validation on the existing test rigs in our lab.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Acknowledgments

This work was supported by the Shandong Provincial Natural Science Foundation (grant no. ZR2019BEE066) and Applied Basic Research Project of Qingdao (grant no. 19-6-2-68-cg).

Open Research

Data Availability

Data were curated by the authors and are available upon request.