Feature Selection and Training Multilayer Perceptron Neural Networks Using Grasshopper Optimization Algorithm for Design Optimal Classifier of Big Data Sonar

Abstract

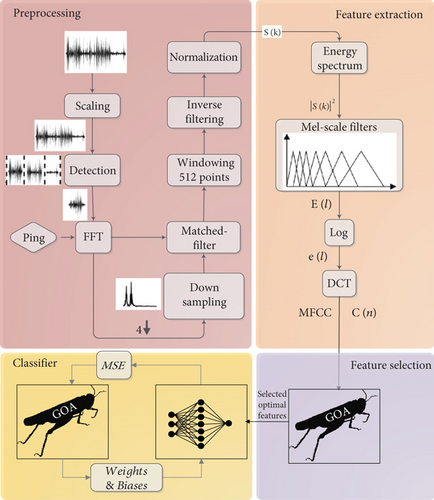

The complexity and high dimensionality of big data sonar, together with the unavoidable presence of unwanted signals such as noise, clutter, and reverberation in the sonar propagation environment, have made the classification of big data sonar one of the most interesting and practical topics for researchers in this field. This paper proposes using the Grasshopper Optimization Algorithm (GOA) both to train a Multilayer Perceptron Artificial Neural Network (MLP-NN) and to select the optimal features of big data sonar; the resulting classifier is called GMLP-GOA. The GMLP-GOA hybrid classifier first extracts features from experimental sonar data using Mel-frequency cepstral coefficients (MFCC). Next, the optimal features are selected using GOA. Finally, an MLP-NN trained with GOA classifies the big data sonar. To evaluate GMLP-GOA, it is compared with the MLP-GOA, MLP-GWO, MLP-PSO, MLP-ACO, and MLP-GSA classifiers in terms of classification rate, convergence rate, ability to avoid local optima, and processing time. The results indicate that GMLP-GOA achieved a classification rate of 98.12% with a processing time of 3.14 s.

1. Introduction

Nowadays, big data analysis and classification are highly valuable [1, 2]. As the volume of data grows, the need for accurate analysis and classification grows with it [3, 4]. The more precise the analysis, the more reliable the resulting decisions, and better decisions mean greater practicality and lower cost. Sonar data is regarded as one type of big data [5, 6].

Because of the complex physical characteristics of sonar targets, classifying real targets and rejecting false targets has become a critical practical area for researchers and practitioners [7, 8]. Owing to the complexity and heterogeneity of sound propagation in seawater, many parameters must be extracted to categorize and distinguish sonar targets. As more features are extracted, the dimensionality of the feature vectors, and hence of the data, grows accordingly.

There are two distinct approaches to classifying high-dimensional data [9]. The first is the deterministic approach [10]. Because this approach is reliable, it generally finds the best solution; however, it runs into difficulties as the data dimensions grow, because its space and time complexity grow as well [11]. Furthermore, it is not applicable to data classified as big data [12, 13]. The second is the stochastic approach [14]. Stochastic methods yield a near-optimal solution [15] and have lower space and time complexity than deterministic methods [16, 17]. Artificial Neural Networks (ANNs) are among the most effective stochastic methods used in real-world big data applications.

Neural networks have the ability to learn [18]. Learning is fundamental to all neural networks and can be divided into two groups: supervised learning [19] and unsupervised learning [20, 21]. Most applications use multilayer artificial neural networks, either optimized [22] or standard [10, 23]. They are commonly trained with the backpropagation algorithm, a supervised learning method. Backpropagation is gradient-based and suffers from problems such as slow convergence [24] and entrapment in local minima [25, 26]. These drawbacks make it unreliable for practical applications.

The ultimate goal of training a neural network is to find the best set of edge weights and node biases, that is, the set that yields the smallest error on the training and test samples [27, 28]. Reference [29] shows that metaheuristic optimization methods can replace gradient-based learning algorithms: their stochastic nature helps them avoid being trapped in local optima, increases the convergence rate, and reduces classification error.

Metaheuristic methods recently used to train neural networks include the genetic algorithm (GA) [30], simulated annealing (SA) [31], biogeography-based optimization (BBO) [32], the Magnetic Optimization Algorithm (MOA) [33], the Artificial Bee Colony algorithm (ABC) [34], the Gray Wolf Optimizer (GWO) [35], the Social Spider Algorithm (SSA) [36, 37], and the hybrid of Particle Swarm Optimization and the Gravitational Search Algorithm (PSOGSA) [7]. GA and SA reduce the chance of getting stuck in local optima, but their convergence rate is low, which leads to weak performance when fast processing is required. ABC performs well on small, low-dimensional problems, but its training time grows sharply as the problem dimension increases. MOA performs poorly and has low accuracy on nonlinear data. BBO requires lengthy computations. Despite its simplicity and fast convergence, GWO tends to become trapped in local optima and is therefore not ideal for global optimization problems. SSA's weaknesses are its many tuning parameters and high complexity. PSOGSA combines PSO and GSA, which increases its space and time complexity.

A property that metaheuristic algorithms share with other search algorithms is the division of the search process into two phases: exploration and exploitation [38–40]. In the exploration phase, the algorithm tries to identify the most promising regions of the search space [15, 41]; to investigate the whole search space properly, the population undergoes abrupt changes. The exploitation phase occurs when the algorithm converges toward a promising solution; at this stage, the population undergoes only small changes.

Because of the random nature of evolutionary algorithms, in most cases there is no clearly defined boundary between these two phases [18, 42], and an improper balance between them causes the algorithm to become trapped in local optima. This problem becomes more severe for high-dimensional data. Controlling the transition between the two phases reduces the probability of becoming trapped in local optima. As shown in reference [43], GOA can properly identify the boundary between the exploration and exploitation phases [44, 45], and therefore converges toward more reliable solutions.


The No Free Lunch (NFL) theorem [46, 47] is also relevant here. It proves logically that no metaheuristic method can solve all optimization problems: a metaheuristic may perform well and reliably on one set of problems while performing poorly on another [48, 49]. The NFL theorem keeps this field active and motivates the development of new metaheuristic methods every year [50]. In view of this theorem, the issues described above, and GOA's capacity to cope with big data, GOA can be used to train Multilayer Perceptron Neural Networks (MLP-NN) and, subsequently, to classify sonar data.

Any system that performs data classification consists of three main parts: data acquisition, feature extraction, and classifier design. The novelty of this article lies in the feature extraction stage, specifically in feature selection. In general, not all extracted features are useful; some may contain irrelevant or redundant information. Feature selection is the process of identifying useful features and removing irrelevant and redundant ones. Its goal is to obtain a subset of features that solves the problem well with minimal performance degradation. The NFL theorem, together with GOA's ability to find the boundary between the exploration and exploitation phases of the search space, is a strong motivation to investigate GOA for feature selection. Therefore, in this paper, GOA is used not only as the training algorithm of the neural network but also to select the optimal features (GMLP-GOA).

The main steps of this work are as follows:

- (i) Obtaining and collecting experimental data sets
- (ii) Feature extraction using the MFCC method
- (iii) Feature selection using GOA
- (iv) Designing an optimal GMLP-GOA hybrid classifier and classifying big data sonar
- (v) Data classification using MLPs trained with five population-based metaheuristic algorithms

The rest of this paper is organized as follows. Section 2 introduces the MLP-NN. Section 3 explains the GOA. Section 4 describes how GOA is applied as a training algorithm for MLP-NNs. Section 5 presents the dataset and feature selection. Section 6 presents the experimental results and discussion, and Section 7 concludes the paper.

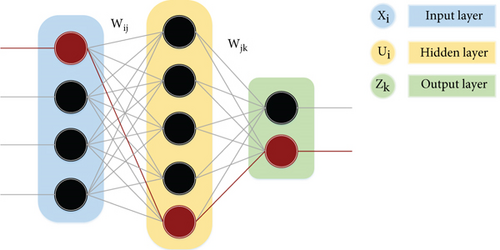

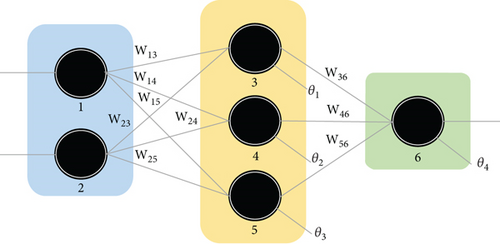

2. Multilayer Perceptron Neural Network

After the outputs of the hidden nodes are calculated, the final outputs can be defined as follows.

Here, Wjk is the weight of the edge that connects the j-th node of the hidden layer to the k-th node of the output layer, and the bias term is the bias of the k-th output node. The most important parameters of an MLP-NN are therefore the edge weights and the node biases: as the relations above show, they determine the final output. Training an MLP-NN consists of finding the weights and biases that produce the best outputs for the given training inputs.
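The output equations themselves are not reproduced in this text. As a minimal sketch, assuming the sigmoid-activated, single-hidden-layer formulation common in metaheuristic-trained MLP studies (hidden sum s_j = Σ_i W_ij x_i − θ_j and output sum o_k = Σ_j W_jk S_j − θ'_k, each passed through a sigmoid), the forward pass looks like this. The function and argument names are hypothetical.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Logistic activation 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(x: List[float],
                w_ih: List[List[float]], theta_h: List[float],
                w_ho: List[List[float]], theta_o: List[float]) -> List[float]:
    """Forward pass of a one-hidden-layer MLP (assumed formulation).

    w_ih[i][j]: weight from input i to hidden node j; theta_h[j]: hidden bias.
    w_ho[j][k]: weight from hidden node j to output node k (Wjk); theta_o[k]: output bias.
    Biases are subtracted from the weighted sums.
    """
    n_hidden, n_out = len(theta_h), len(theta_o)
    # Hidden layer: weighted sum of the inputs minus the bias, then sigmoid
    hidden = [sigmoid(sum(x[i] * w_ih[i][j] for i in range(len(x))) - theta_h[j])
              for j in range(n_hidden)]
    # Output layer: weighted sum of the hidden outputs minus the bias, then sigmoid
    return [sigmoid(sum(hidden[j] * w_ho[j][k] for j in range(n_hidden)) - theta_o[k])
            for k in range(n_out)]


if __name__ == "__main__":
    # With all weights and biases at zero, every node outputs sigmoid(0) = 0.5
    print(mlp_forward([1.0, 2.0], [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0], [[0.0], [0.0]], [0.0]))
```

Training then amounts to searching over all entries of `w_ih`, `theta_h`, `w_ho`, and `theta_o` at once.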

3. Grasshopper Optimization Algorithm

Grasshoppers are insects. They are considered pests because of the damage they cause to agricultural crops [55–57]. Although grasshoppers are usually seen individually in nature, they can join some of the largest swarms of any animal on the planet, and these swarms sometimes threaten farmers. A distinctive feature of grasshoppers is their swarming behavior, which is seen in both the nymph and adult stages. Millions of nymphs jump and move like rolling cylinders, eating almost all vegetation in their path. Slow movement and small steps are the main characteristics of the swarm in the nymph stage, whereas abrupt, long-range movement characterizes the adult swarm. Another important feature of the swarm is its search for food sources [58]. Inspired by this behavior, GOA divides the search process into two phases: exploration and exploitation.

Equation (13) shows that the position of a grasshopper is determined by its current location, the position of the best solution (the target), and the positions of all grasshoppers in the swarm. Note that the first component of this equation considers the current location of the grasshopper relative to the positions of the other grasshoppers; that is, the positions of all grasshoppers are taken into account to place the search agents around the target. This differs from particle swarm optimization (PSO), in which each particle has two vectors: a position vector and a velocity vector.

In GOA, by contrast, each search agent is represented by a single vector. Another significant difference is that PSO updates a particle's position based on its current position, its own best position, and the best position found by the swarm, whereas GOA updates a search agent's position based on its current position, the best solution found so far, and the positions of all grasshoppers in the swarm. Hence, in PSO the other particles do not directly participate in updating a particle's position, whereas GOA requires all search agents to participate in determining each agent's next position.

The parameter c is used twice in equation (13), for the following reasons. The outer c (on the left) is very similar to the inertia weight (w) of PSO: it reduces the movements of the grasshoppers around the target. In other words, this parameter balances the exploration and exploitation of the whole swarm. The inner c reduces the attraction zone, comfort zone, and repulsion zone between grasshoppers. Within equation (13), the term c(ubd − lbd)/2 linearly shrinks the space that the grasshoppers should explore and exploit, and the term s(|xj − xi|)(xj − xi)/dij indicates whether a grasshopper should be attracted toward the target (exploitation) or repelled from it (exploration).
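Equation (13) is referred to throughout but is not reproduced in this text. For reference, the position update of the standard GOA formulation, whose terms match the description above, is usually written as follows; that the paper's equation (13) takes exactly this form is an assumption. Here N is the number of grasshoppers, ubd and lbd are the upper and lower bounds in the d-th dimension, \hat{T}_d is the d-th component of the best solution found so far (the target), dij is the distance between grasshoppers i and j, and s is the social-force function with attraction intensity f and attractive length scale l.

```latex
X_i^d = c\left(\sum_{\substack{j=1\\ j\neq i}}^{N} c\,\frac{ub_d - lb_d}{2}\,
        s\!\left(\left|x_j^d - x_i^d\right|\right)\frac{x_j - x_i}{d_{ij}}\right) + \hat{T}_d,
\qquad
s(r) = f\,e^{-r/l} - e^{-r},
\qquad
d_{ij} = \left|x_j - x_i\right|
```

The leftmost c is the outer c and the one inside the sum is the inner c discussed above.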

The inner c reduces the attraction and repulsion forces between grasshoppers as the number of iterations increases, whereas the outer c reduces the search coverage around the target as the number of iterations increases. In summary, the first term of equation (13) sums over the positions of the other grasshoppers and models their natural interaction, and the second term models their tendency to move toward the food source. The parameter c also models the deceleration of grasshoppers as they approach the food source and consume it. To make the behavior more random, both terms of equation (13) can be multiplied by random values; individual terms can also be multiplied by random values to model random behavior in the interaction between grasshoppers or in their tendency toward the food source.

The mathematical model presented here is capable of both exploring and exploiting the search space. However, a mechanism is needed to move the search agents from exploration to exploitation. In nature, grasshoppers first search for food locally, because they have no wings as nymphs; as adults, they fly freely and explore much larger regions. In stochastic optimization, by contrast, exploration comes first, to find the promising regions of the search space. Once these regions are found, exploitation forces the search agents to find an accurate local approximation of the optimal solution.

The preceding discussion shows that the proposed mathematical model drives the grasshoppers toward the target as the number of iterations increases. In a real search space, however, there is no known target, because the global optimum is not known in advance. Therefore, a target must be assigned to the swarm at each step of the optimization. GOA assumes that the fittest grasshopper (the best solution vector found so far) is the target. This allows the algorithm to store the best solution found in each iteration and to steer the swarm toward it, in the hope of finding a better and more accurate target that serves as the best approximation of the true global optimum of the search space.
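The social-force term s(r) = f·e^(−r/l) − e^(−r) of the standard GOA formulation is what separates repulsion (s < 0, pushing grasshoppers apart, which favors exploration) from attraction (s > 0, pulling them together, which favors exploitation). This short sketch assumes the commonly used values f = 0.5 and l = 1.5 (not stated in this text). It computes the comfort distance where the force vanishes and shows that the force is nearly zero for large distances, which is why distances are normalized into a small interval before s is applied.

```python
import math

F_ATTRACTION = 0.5  # f: intensity of attraction (assumed standard value)
L_SCALE = 1.5       # l: attractive length scale (assumed standard value)


def social_force(r: float, f: float = F_ATTRACTION, l: float = L_SCALE) -> float:
    """s(r) = f*exp(-r/l) - exp(-r): negative means repulsion, positive means attraction."""
    return f * math.exp(-r / l) - math.exp(-r)


def comfort_distance(f: float = F_ATTRACTION, l: float = L_SCALE) -> float:
    """Distance where s(r) = 0: f*exp(-r/l) = exp(-r)  =>  r = ln(1/f) / (1 - 1/l)."""
    return math.log(1.0 / f) / (1.0 - 1.0 / l)


if __name__ == "__main__":
    r0 = comfort_distance()
    print(f"comfort distance: {r0:.3f}")  # 2.079
    for r in (1.0, r0, 4.0, 10.0):
        print(f"s({r:.3f}) = {social_force(r):+.4f}")
```

Below the comfort distance the force is repulsive, above it the force is weakly attractive and decays toward zero.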

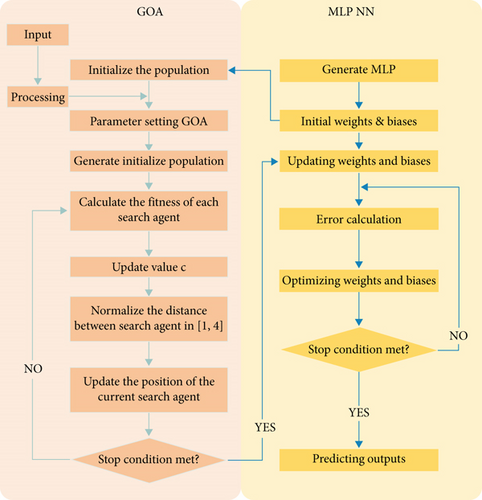

Figure 2 shows the flowchart of GOA as used for neural network training. GOA begins by generating a random initial population. The search agents update their positions according to equation (13), and the best solution found so far is updated in each iteration. In addition, the coefficient c is computed using equation (14), and the distances between grasshoppers are normalized to the interval [1, 4]. The position updates are repeated until the termination criterion is met. Finally, the position and objective value of the best solution are returned as the best approximation of the global optimum.
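The loop described above can be sketched as follows. This is a minimal, unoptimized illustration of a basic continuous GOA, not the authors' implementation. It assumes the usual linear schedule c = cmax − t(cmax − cmin)/T for equation (14), uses the cmax = 1 and cmin = 0.00001 values from Table 3, maps distances into [1, 4] by a simple linear rescaling (an assumption; implementations differ), and uses a toy sphere function in place of the MLP training error.

```python
import math
import random
from typing import Callable, List, Tuple


def s_func(r: float, f: float = 0.5, l: float = 1.5) -> float:
    """Social force: negative values repel, positive values attract."""
    return f * math.exp(-r / l) - math.exp(-r)


def goa(objective: Callable[[List[float]], float], dim: int, lb: float, ub: float,
        n_agents: int = 30, max_iter: int = 100,
        c_max: float = 1.0, c_min: float = 0.00001,
        seed: int = 0) -> Tuple[List[float], float]:
    """Minimize `objective` over [lb, ub]^dim with a basic continuous GOA."""
    rng = random.Random(seed)
    # 1. Random initial population
    pos = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    fit = [objective(p) for p in pos]
    best = min(range(n_agents), key=fit.__getitem__)
    target, target_fit = pos[best][:], fit[best]

    for t in range(1, max_iter + 1):
        # 2. Linearly decreasing c (assumed form of equation (14))
        c = c_max - t * (c_max - c_min) / max_iter
        dist = [[math.dist(a, b) for b in pos] for a in pos]
        d_max = max(max(row) for row in dist) or 1.0
        new_pos = []
        for i in range(n_agents):
            social = [0.0] * dim
            for j in range(n_agents):
                if j == i or dist[i][j] == 0.0:
                    continue
                r = 1.0 + 3.0 * dist[i][j] / d_max      # distance mapped into [1, 4]
                force = c * (ub - lb) / 2.0 * s_func(r)  # inner c
                for d in range(dim):
                    social[d] += force * (pos[j][d] - pos[i][d]) / dist[i][j]
            # 3. Outer c scales the social term; the target attracts the swarm; clamp to bounds
            new_pos.append([min(max(c * social[d] + target[d], lb), ub) for d in range(dim)])
        pos = new_pos
        fit = [objective(p) for p in pos]
        # 4. Keep the best solution found so far as the target
        for i in range(n_agents):
            if fit[i] < target_fit:
                target, target_fit = pos[i][:], fit[i]
    return target, target_fit


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    print(goa(sphere, dim=2, lb=-5.0, ub=5.0, max_iter=50))
```

For MLP training, `objective` would be the network error for a decoded weight-and-bias vector, and `dim` the number of weights and biases.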

4. Training a Multilayer Neural Network Using the Grasshopper Algorithm

In general, there are three ways to train an MLP-NN using evolutionary algorithms. The first is to use an evolutionary algorithm to find the optimal combination of edge weights and node biases. The second is to use it to find the optimal architecture of the MLP-NN for a given problem, and the third is to use it to tune the learning rate and momentum of a gradient-based learning algorithm. In this research, GOA is applied to the MLP-NN using the first approach. To do so, the edge weights and node biases must be represented in a form that the algorithm can optimize.

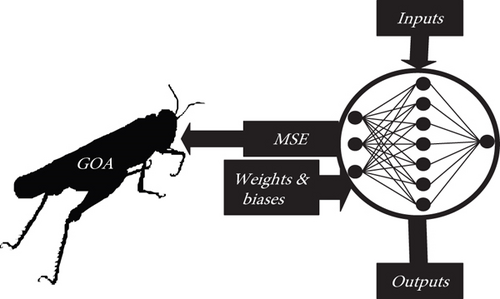

Three methods are generally used to represent the edge weights and node biases: vector, matrix, and binary. In these methods, each candidate solution is encoded as a vector, a matrix, or a string of binary bits, respectively. Each method has advantages and disadvantages that make it suitable in certain situations. Figure 3 shows how a neural network is trained using GOA.

In the vector method, converting the weights and biases into a vector is straightforward, but retrieving them (decoding) is more complex; as a result, this method is often used for simple neural networks. In the matrix method, decoding is simpler than encoding in complex networks, which makes this approach particularly well suited to learning algorithms for general neural networks. The binary method requires the variables to be represented in binary form; as the network structure becomes more complex, the length of each element grows, and encoding and decoding become very difficult.
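The text does not state which representation the paper uses. As an illustrative sketch assuming the vector method, a flat candidate vector produced by GOA can be decoded into the weights and biases of an n-h-o MLP, and the mean squared error (MSE) over the training samples (squared errors summed over the outputs, averaged over the samples) can serve as the fitness to minimize. The layout order (input-to-hidden weights, hidden biases, hidden-to-output weights, output biases) and all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def vector_length(n: int, h: int, o: int) -> int:
    """Number of weights and biases in an n-h-o MLP."""
    return n * h + h + h * o + o


def decode(vec: Sequence[float], n: int, h: int, o: int) -> Tuple[Matrix, List[float], Matrix, List[float]]:
    """Split a flat vector into (w_ih, theta_h, w_ho, theta_o) in a fixed order."""
    assert len(vec) == vector_length(n, h, o)
    it = iter(vec)
    w_ih = [[next(it) for _ in range(h)] for _ in range(n)]
    theta_h = [next(it) for _ in range(h)]
    w_ho = [[next(it) for _ in range(o)] for _ in range(h)]
    theta_o = [next(it) for _ in range(o)]
    return w_ih, theta_h, w_ho, theta_o


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mse_fitness(vec: Sequence[float], X: Sequence[Sequence[float]],
                Y: Sequence[Sequence[float]], n: int, h: int, o: int) -> float:
    """MSE of the decoded MLP over samples X with (e.g. one-hot) targets Y."""
    w_ih, theta_h, w_ho, theta_o = decode(vec, n, h, o)
    total = 0.0
    for x, y in zip(X, Y):
        hid = [sigmoid(sum(x[i] * w_ih[i][j] for i in range(n)) - theta_h[j]) for j in range(h)]
        out = [sigmoid(sum(hid[j] * w_ho[j][k] for j in range(h)) - theta_o[k]) for k in range(o)]
        total += sum((out[k] - y[k]) ** 2 for k in range(o))
    return total / len(X)
```

Encoding is trivial here (concatenate everything); the fixed slicing order in `decode` is the part that must stay consistent across the whole search.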

5. Data Set

This section uses one of the most challenging real-world engineering problems to demonstrate GOA's capability: the classification of sonar data, a major challenge for engineers and scientists working in this field.

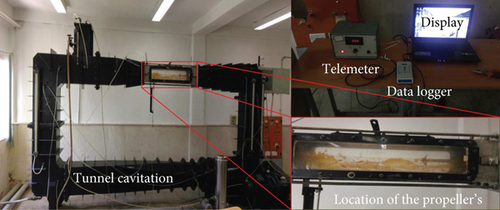

5.1. Scenario Test Design and Experimental Data Formation

Since our goal is to obtain a reliable and realistic set of high-dimensional sonar data, a real experiment was designed and carried out. The experiment was conducted in a cavitation tunnel (model NA-10, made in England). In the first phase, three types of impellers were produced, in classes A, B, and C. The Class A impeller has three blades and is used to reproduce the sound of boats and small passenger ships. The Class B impeller has four blades and is used to reproduce the sound of container ships, ocean liners, and small oil tankers. The Class C impeller has five blades and is used to reproduce the sound of aircraft carriers and large oil tankers. The impellers were tested at different speeds to simulate different operating conditions. During these experiments, the acoustic noise of the impellers was recorded on a computer using a B&K 8103 hydrophone and a UDAQ_Lite data logger.

In all experiments, the atmospheric pressure was 100 kPa, and the pressure inside the tunnel was set according to the depth at which the impeller is placed for the corresponding vessel class. The water flow velocity inside the tunnel was 4 m/s. One hydrophone was mounted 10 cm from the propeller, and the other 50 cm from the first hydrophone.

The noise of the designed impellers was measured in four steps. In the first step, after the water flow slowed down, the noise was received by the hydrophones and recorded by the Data-Logger and MATLAB. In the second step, the motor was run without the impeller wheel, and the motor noise was recorded in several stages to obtain a reasonable estimate of this noise. In the third step, the impeller was rotated at different speeds (depending on the type of vessel model) to obtain the impeller rotation noise for the different vessel classes. In the fourth step, the water circulation pump and the bubble discharge pump of the tunnel were turned on, the impeller motor was activated, and the sound was collected on the computer by the Data-Logger and MATLAB. At all stages, the raw data were stored on the computer, without amplification, for later use.

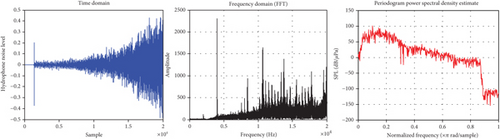

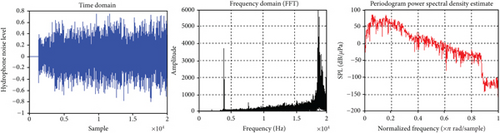

5.1.1. Drawing Noise Curves for the Model Propellers

According to the standard reference [30, 31], the power is expressed in dB relative to the underwater acoustic reference pressure of 1 μPa. Figure 6 shows, for the different impeller classes, the noise signals at the hydrophone, their Fourier transforms, and their dB power spectra, respectively.
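As a hedged illustration of expressing a recorded hydrophone signal in dB relative to 1 μPa, the sound pressure level is 20·log10(p_rms / p_ref) with p_ref = 1 μPa. The sketch assumes the samples have already been converted to pascals using the hydrophone sensitivity; that calibration step and the function name are assumptions not described in the text.

```python
import math
from typing import Sequence

P_REF_PA = 1e-6  # underwater acoustic reference pressure: 1 micropascal


def spl_db_re_1upa(samples_pa: Sequence[float]) -> float:
    """Sound pressure level in dB re 1 uPa from pressure samples in pascals."""
    if not samples_pa:
        raise ValueError("empty signal")
    p_rms = math.sqrt(sum(p * p for p in samples_pa) / len(samples_pa))
    return 20.0 * math.log10(p_rms / P_REF_PA)


if __name__ == "__main__":
    # A signal with an RMS pressure of 1 Pa corresponds to 120 dB re 1 uPa
    print(round(spl_db_re_1upa([1.0, -1.0, 1.0, -1.0]), 6))  # 120.0
```

The factor 20 (rather than 10) applies because p is a pressure amplitude, not a power; applying the same formula per frequency bin of the Fourier transform gives a dB spectrum.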

In total, 500 samples with different propellers and rotation speeds were obtained.

5.2. Feature Extraction

Figure 7 shows a block diagram of the procedures involved in the classification steps.

In this step, 140 features are extracted. With 500 samples, the data set is therefore 500 × 140 in size, where 140 is the number of input nodes (n) of the neural network; the hidden layer has 281 neurons. Because the data set is this large, deterministic approaches have high time complexity, and stochastic methods are considered the most suitable choice for this kind of problem.
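The sizes above can be checked with a short calculation. Note that 281 = 2 × 140 + 1, which matches the 2n + 1 hidden-node rule often used in metaheuristic-trained MLP studies; that this rule is the authors' reason is an assumption. Assuming three output nodes (one per impeller class A, B, and C; the text does not state the output size), the number of weights and biases that GOA must optimize is computed below.

```python
def hidden_nodes(n_inputs: int) -> int:
    """2n + 1 hidden-node rule (assumed; 2 * 140 + 1 = 281 as in the text)."""
    return 2 * n_inputs + 1


def search_dimension(n: int, h: int, o: int) -> int:
    """Weights (n*h + h*o) plus biases (h + o) of an n-h-o MLP."""
    return n * h + h * o + h + o


if __name__ == "__main__":
    n = 140                # features per sample
    h = hidden_nodes(n)    # 281
    o = 3                  # hypothetical: one output per impeller class
    print(h, search_dimension(n, h, o))  # 281 40467
```

Every feature removed by feature selection removes one input node and its 281 outgoing weights (and, under the 2n + 1 rule, shrinks the hidden layer too), which is one reason a reduced feature set can also shorten training.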

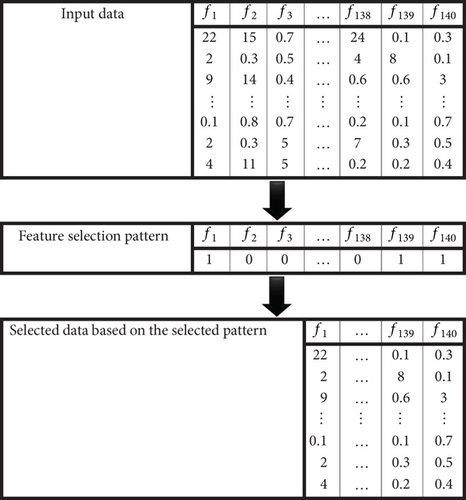

5.3. Feature Selection

As discussed in the previous subsection, the feature matrix is 500 × 140. Not all extracted features are useful; some may contain irrelevant or redundant information. As shown in Table 1, the feature matrix admits 2^140 possible feature selection states. A binary version of GOA is used to select the optimal features.

| Feature vector state | f1 | f2 | f3 | ⋯ | f138 | f139 | f140 |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | ⋯ | 0 | 0 | 0 |
| 2 | 1 | 0 | 0 | ⋯ | 0 | 0 | 0 |
| 3 | 1 | 1 | 0 | ⋯ | 0 | 0 | 0 |
| 4 | 1 | 1 | 1 | ⋯ | 0 | 0 | 0 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 2^140 − 2 | 1 | 1 | 1 | ⋯ | 1 | 0 | 0 |
| 2^140 − 1 | 1 | 1 | 1 | ⋯ | 1 | 1 | 0 |
| 2^140 | 1 | 1 | 1 | ⋯ | 1 | 1 | 1 |
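To see why exhaustive search over the states in Table 1 is infeasible, the count can be computed exactly. The evaluation speed below is a hypothetical figure chosen only to give a sense of scale.

```python
n_features = 140
n_states = 2 ** n_features          # every on/off pattern, as enumerated in Table 1
print(f"{n_states:.3e}")            # 1.394e+42

evals_per_second = 1e9              # hypothetical evaluation speed
seconds_per_year = 365.25 * 24 * 3600
years = n_states / evals_per_second / seconds_per_year
print(f"{years:.1e} years")         # about 4.4e+25 years
```

Even at a billion evaluations per second, each of which would in practice require training a classifier, enumeration is out of reach; hence the use of a population-based search.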

The initial population size is assumed to be 209. Table 2 shows hypothetical values for an initial population of 209.

| Initial population | f1 | f2 | f3 | ⋯ | f138 | f139 | f140 |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 1 | 0 | ⋯ | 0 | 0 | 0 |
| 2 | 0 | 0 | 1 | ⋯ | 0 | 1 | 0 |
| 3 | 1 | 0 | 0 | ⋯ | 0 | 1 | 1 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 207 | 0 | 0 | 0 | ⋯ | 1 | 0 | 1 |
| 208 | 1 | 1 | 1 | ⋯ | 0 | 1 | 0 |
| 209 | 1 | 0 | 1 | ⋯ | 0 | 0 | 1 |

In Table 2, each row is a feature selection pattern: applying a pattern to the entire training input data keeps only the features whose bit is 1. In this paper, classification accuracy is used as the fitness function and is calculated with MLP-GOA; that is, for each pattern, the accuracy that MLP-GOA obtains on the selected features is exactly the fitness value of that pattern. With an initial population of 209, the fitness vector therefore also has length 209. Figure 8 shows how the features are selected using the best selection pattern.

When the stop condition is met (100% accuracy is reached or the maximum number of iterations is reached), the program ends, and the data restricted to the best pattern (the selected, reduced features) are classified with MLP-GOA.
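The feature-selection loop can be sketched as follows. Beyond what the text states, everything here is an assumption: a continuous GOA position is turned into a 0/1 pattern with a sigmoid transfer function (one common way to binarize a continuous metaheuristic; the paper does not describe its binary GOA), empty patterns receive fitness 0, and `accuracy_fn` is a hypothetical stand-in for training and testing MLP-GOA on the selected columns. The stop test mirrors the condition above.

```python
import math
import random
from typing import Callable, List, Sequence

Rows = List[List[float]]


def to_binary(position: Sequence[float], rng: random.Random) -> List[int]:
    """Sigmoid transfer function (assumed): bit d is 1 with probability 1/(1+e^-x_d)."""
    bits = []
    for x in position:
        x = max(-60.0, min(60.0, x))  # clamp to avoid overflow in exp
        bits.append(1 if rng.random() < 1.0 / (1.0 + math.exp(-x)) else 0)
    return bits


def select_columns(X: Sequence[Sequence[float]], mask: Sequence[int]) -> Rows:
    """Apply one selection pattern: keep the feature columns whose bit is 1."""
    return [[v for v, m in zip(row, mask) if m] for row in X]


def evaluate_population(patterns: Sequence[Sequence[int]], X, y,
                        accuracy_fn: Callable[[Rows, Sequence[int]], float]) -> List[float]:
    """Fitness vector: one accuracy (%) per pattern; its length equals the population size.

    Empty patterns get fitness 0.0 (an assumption), since nothing can be classified.
    """
    return [accuracy_fn(select_columns(X, m), y) if any(m) else 0.0 for m in patterns]


def should_stop(best_accuracy: float, iteration: int, max_iter: int) -> bool:
    """Stop at 100% accuracy or when the iteration limit is reached."""
    return best_accuracy >= 100.0 or iteration >= max_iter
```

In a full run, GOA would update the continuous positions each iteration, `to_binary` would turn them into patterns like the rows of Table 2, and `evaluate_population` would call MLP-GOA in place of `accuracy_fn`.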

6. Experimental Results and Discussion

For a fair comparison and performance evaluation of the GMLP-GOA classifier, five classifiers are used: MLP-GOA, MLP-GWO, MLP-PSO, MLP-ACO, and MLP-GSA. The chosen algorithms are all population-based. GMLP-GOA and MLP-GOA are both trained with GOA; the only difference between them is that GMLP-GOA also uses GOA for feature selection. Table 3 lists the parameters and initial values of these algorithms.

| Algorithm | Parameter | Value |
|---|---|---|
| GWO | Population size | 209 |
| | Number of gray wolves | 13 |
| PSO | Population size | 208 |
| | Cognitive constant (C1) | 1.1 |
| | Social constant (C2) | 1.1 |
| | Inertia weight (W) | 0.4 |
| ACO | Population size | 209 |
| | Initial pheromone (τ0) | 0.000001 |
| | Pheromone update constant (Q) | 20 |
| | Pheromone constant (q0) | 1.1 |
| | Global pheromone decay rate (Pg) | 0.8 |
| | Local pheromone decay rate (Pt) | 0.6 |
| | Pheromone sensitivity (α) | 2 |
| | Visibility sensitivity (β) | 6 |
| GSA | Population size | 209 |
| | Coefficient (α) | 21 |
| | Lower limit | −31 |
| | Upper limit | 31 |
| | Gravitational constant (G0) | 1 |
| | Initial velocity of the masses | [0, 1] |
| | Initial acceleration | 0 |
| | Initial mass | 0 |
| GOA | Population size | 209 |
| | Maximum value (cmax) | 1 |
| | Minimum value (cmin) | 0.00001 |

The GMLP-GOA hybrid classifier uses the optimal features selected by GOA, whereas the other classifiers use the full 500 × 140 feature matrix. The classifiers are evaluated in terms of classification rate, local minima avoidance, convergence speed, and processing time.

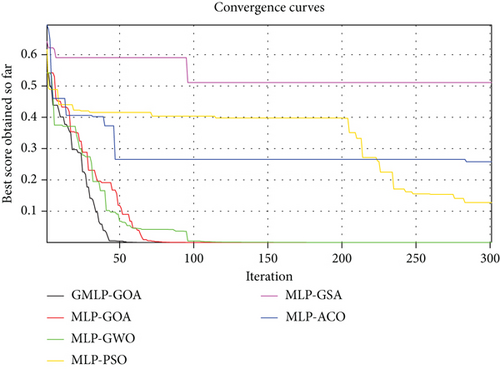

Table 4 shows, for each method over 20 runs, the classification rate, the mean and standard deviation of the smallest error (MSE), the P value, and the processing time. The classification rate indicates the recognition accuracy of the classifier, while the mean and standard deviation of the smallest error, together with the P value, indicate the algorithm's ability to avoid local optima. Figure 9 gives a comprehensive comparison of the convergence behavior and final error of the classifiers.

| Classifier | MSE (AVE ± STD) | P value | Classification rate (%) | Processing time (s) |
|---|---|---|---|---|
| GMLP-GOA | 0.1055 ± 3.4180e-01 | N/A | 98.1276 | 3.14 |
| MLP-GOA | 0.1283 ± 8.2720e-04 | N/A | 95.6667 | 6.24 |
| MLP-GWO | 0.1519 ± 0.0269 | 0.0039 | 94.3522 | 7.39 |
| MLP-GSA | 0.3149 ± 0.2965 | 6.2149e-04 | 69.6633 | 10.44 |
| MLP-ACO | 0.2527 ± 0.1744 | 7.2798e-12 | 75.3333 | 7.54 |
| MLP-PSO | 0.2011 ± 0.2076 | 0.2239e-03 | 92.8222 | 8.78 |
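A sketch of how the summary statistics in Table 4 are typically computed: the classification rate as the percentage of correctly labelled test samples, and the MSE column as the mean ± standard deviation of the best MSE over the 20 runs. The text does not say whether the sample or the population standard deviation was used; the sample version (`statistics.stdev`) is assumed, and the inputs below are toy values, not data from Table 4.

```python
import statistics
from typing import Sequence


def classification_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Percentage of samples whose predicted class equals the true class."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)


def mean_std(values: Sequence[float]) -> str:
    """Format 'mean ± std' using the sample standard deviation (an assumption)."""
    return f"{statistics.mean(values):.4f} ± {statistics.stdev(values):.4f}"


if __name__ == "__main__":
    print(classification_rate([0, 1, 2, 2], [0, 1, 2, 1]))  # 75.0
    print(mean_std([0.1, 0.2, 0.3]))                         # toy values only
```

Switching to `statistics.pstdev` would give the population standard deviation, which is slightly smaller for 20 runs.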

As shown in Figure 9, GMLP-GOA has the best convergence rate and MLP-GSA the worst among the classifiers. Table 4 shows that GMLP-GOA classified the sonar big data with 98.12% accuracy, while MLP-GSA performed worst, with a classification rate of 69.66%. GMLP-GOA also had the shortest processing time (3.14 s), whereas MLP-GSA took longer than any other classifier (10.44 s). The standard deviation and P values in Table 4 indicate that the GMLP-GOA hybrid classifier performs best at avoiding local minima. One reason for the success of GMLP-GOA is GOA's ability to detect the boundary between the exploration and exploitation phases. As shown in Figure 9, GMLP-GOA converged after 50 iterations, while MLP-GOA and MLP-GWO converged after 75 and 95 iterations, respectively. According to these results, GMLP-GOA performed successfully on sonar big data and is recommended for real-world problems.

7. Conclusion

In this paper, GOA is used both to select optimal features and to train the MLP-NN in the GMLP-GOA hybrid classifier for classifying sonar big data. For a fair comparison, five classifiers based on population-based metaheuristic algorithms were also used: MLP-GOA, MLP-GWO, MLP-PSO, MLP-ACO, and MLP-GSA. The simulation results show that GOA correctly detects the boundary between the exploration and exploitation phases; as a result, it avoids getting stuck in local optima and is able to find global optima for high-dimensional problems such as big data sonar. GMLP-GOA achieved the best performance, with a classification rate of 98.12%. The other five classifiers, MLP-GOA, MLP-GWO, MLP-PSO, MLP-ACO, and MLP-GSA, reached classification rates of 95.66%, 94.35%, 92.82%, 75.33%, and 69.66%, respectively.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Open Research

Data Availability

No data were used to support this study.