[Retracted] Piano Automatic Composition and Quantitative Perception under the Data-Driven Architecture

Abstract

This paper combines automatic piano composition with quantitative perception: note features are extracted from demonstration audio, and a neural network model is built to perform automatic composition. First, given the diversity and complexity of the data collected during quantitative perception, energy-efficiency-related state data from the automatic composition operation are collected and preprocessed. Second, a perception-data-driven method for energy efficiency evaluation and decision-making is proposed. The method operates on time-series index data: after the subjective time weight is determined through time entropy, a time-dimension factor is introduced, and the subjective time weight is then adjusted by the minimum variance method. Third, because the perception period affects perception efficiency and accuracy, the period is calculated and dynamically adjusted from the running data, and the set of perception objects is updated in real time during operation to suit different scenarios. Finally, combined with the level weights determined by the data-driven architecture, the dynamic manufacturing capability index and energy efficiency index of the equipment are obtained. An energy efficiency evaluation of a manufacturing system under the data-driven architecture supports the feasibility and soundness of the evaluation method. Simulation results show that the method reduces perception overhead while maintaining perception efficiency and accuracy.

1. Introduction

Composing music by hand requires mastery of basic music theory, musical style, harmony, and other professional knowledge, so for ordinary users the threshold for composition is too high [1]. Automatic composition lets more ordinary users take part in producing piano music, which makes piano composition more entertaining. Automatic composition also involves randomness, which can give professionals creative inspiration. Driven by new theories, new technologies, and the needs of social development, artificial intelligence has developed rapidly and shows new characteristics such as quantitative perception and cross-border integration. Existing methods, however, struggle when dealing with data that has a complex class structure. In the process of driving the architecture, the compactness within each class is also key to measuring the success of the result. Our goals for the drive architecture are therefore to increase the distance between classes and the compactness within classes [2–5]. To this end, we improve DSC and KNNG by taking the distance information between points into account, obtaining two new measures: density-aware DSC and density-aware KNNG. Using these two measures, this paper designs two new linear, visual perception-driven, supervised drive architecture algorithms: PDD (perception-driven DR using density-aware DSC) and PDK (perception-driven DR using density-aware KNNG) [6–8].

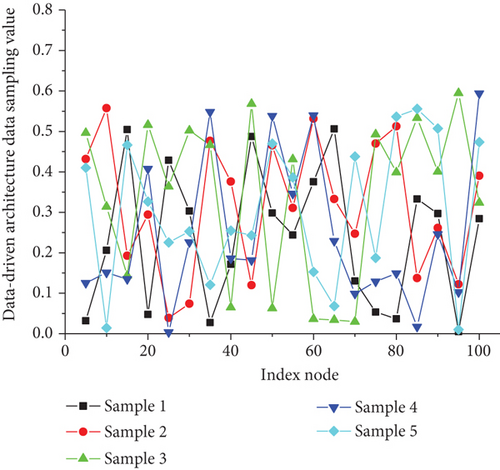

To test whether our method is effective for such data, we adapted it as follows. When calculating the global dDSC and dKNNG, we no longer average the dDSC (dKNNG) values of all sample points directly; instead, we compute the mean for each class and then the mean over all classes. A drive architecture algorithm can project data into a low-dimensional space that is easier for humans to interpret, which makes it more convenient for users to explore how different classes are distinguished and how the data are distributed in space [9–11]. However, widely used unsupervised algorithms such as PCA do not aim to maximize class spacing. Supervised algorithms such as LDA are only suitable for data that follow a Gaussian distribution and do not take human knowledge into account. Second, we use our method to process high-dimensional data without class labels. Third, star coordinates are well regarded in the field of visual analysis. Unlike traditional drive architecture algorithms, star coordinates can be extended with many interaction methods in two- or three-dimensional space. Incorporating the user’s prior knowledge into the process helps the user explore and learn from the data. We combine the drive architecture algorithm with star coordinates and provide a series of interaction methods, such as point interaction, class interaction, and axis interaction, to facilitate interactive data exploration [12–15].

To fill this gap, this paper proposes a linear drive architecture algorithm driven by automated arrangement perception. During the driving process, the method aims to maximize the class spacing of the data in a way that conforms to automated arrangement perception. Recently, perception-based measures of class spacing have made great progress in simulating this perception. We improve these measures further by incorporating class density information, and we embed them in a simulated annealing algorithm to find an approximately optimal solution. Based on manufacturing service technology, a dynamic evaluation system for the running energy of piano automatic composition, driven by perception data, is designed and implemented. The system has four main modules: equipment information management, energy consumption data monitoring, equipment capability evaluation, and equipment service combination. Together, these modules support adding, deleting, modifying, and querying basic equipment information; monitoring and displaying energy consumption data; and dynamically assessing equipment capabilities and historical equipment service portfolio information. The system provides enterprises with readily available, on-demand manufacturing resources and capabilities during the manufacturing process. We compare the algorithm with the most commonly used drive architecture algorithms on 93 data sets, both numerically and perceptually through user scores, and analyze its performance. The algorithm is also extended to data with uneven class distributions and to data without class labels. Finally, it is combined with the star coordinate system to provide a series of interaction methods that help users explore the data further.

2. Related Work

In terms of micro resources, Machado et al. [16] define manufacturing capability as the integration of effective manufacturing resources in the realization of manufacturing tasks. It consists of processing capability and production capability: processing capability represents the types of workpieces that can be processed on a specific machine tool, and production capability represents the number of workpieces that can be produced per unit time. They also give a new evaluation model and evaluation method for manufacturing capabilities. Scirea et al. [17] argue that manufacturing decision-making, resources, and manufacturing capabilities influence one another and that, under this common influence, manufacturing capabilities improve jointly, raising innovation performance and corporate performance. A manufacturing capability strategy model is established on this theory; in the case study, however, Größler et al. did not specify the relationship between manufacturing decision-making, resources, and manufacturing capacity but only discussed the influence of the various elements of manufacturing capacity. They also elaborated on the connotation of manufacturing capabilities under the cloud manufacturing model, gave the concept and classification of such capabilities, and held that manufacturing capabilities reflect how enterprises configure and integrate manufacturing resources.

Jeong et al. [18] proposed the perceptron model. Unlike the M-P model, whose parameters must be set by hand, the perceptron determines its parameters automatically through training. Training is supervised: training samples and their expected outputs are provided, and the connection weights are adjusted to reduce the error between the actual and expected outputs. After training, the connection weights of the neurons are determined. Harrison and Pearce [19] proposed an error backpropagation algorithm, which solved the linear inseparability problem by using a multilayer perceptron. Although error backpropagation makes hierarchical training possible, it has several problems: training takes too long, parameters must be set from experience, and there is no theoretical basis for preventing overfitting. Convolutional neural networks are widely used in image recognition, where they greatly improve accuracy over traditional methods. Raman et al. [20] proposed a method that combines pretraining and autoencoding with deep neural networks. Hardware has also developed rapidly: with high-speed parallel GPU computing, a deep network can be trained in just a few days. With the development of the Internet, collecting training data has become more convenient, and researchers can obtain large amounts of training data, which helps suppress overfitting.

Scholars analyzed the connotation of manufacturing capability in the cloud manufacturing environment, gave the definition and basic framework of manufacturing capability services, defined their metamodel and specific description attributes, and used an object-value-attribute representation to formalize the heterogeneous data model of these services. Some authors hold that improving an enterprise’s manufacturing capabilities should start from five aspects: quality assurance, cost control, flexible response, timely delivery, and innovation. They studied in depth how to improve manufacturing capabilities under different types of strategies, compared the direct and indirect effects of quality, cost, flexibility, delivery, and innovation capabilities, and gave the best paths for improving the manufacturing capabilities of cost-oriented and innovation-oriented companies [21]. Researchers introduced monitoring methods based on mobile agents, which use forward graphs to continuously collect and update global system information in support of the self-repair function of distributed applications. They also established MonALISA, a monitoring framework based on sensing agents in a large-scale integrated service architecture, to achieve scalable dynamic perception of complex software systems; on this framework, perception of complex application execution processes, workflow applications, and network resources has been realized in succession. Considering that the system state reflects whether the system is malfunctioning, we proposed a perception scheme for large-scale complex software systems based on an abstract state machine, which uses perception data as a calculation metric to establish a diagnosis for the system.

Some researchers describe manufacturing capabilities as design and manufacturing capabilities, ascertained manufacturing capabilities, and actual manufacturing capabilities. Based on the relationships among manufacturing tasks, piano automation, equipment, and roles, a solution model of manufacturing capability from task expectation to demand deployment and a relationship model of piano automation composition from capability to role are established, and a hierarchical configuration model for piano automation composition is proposed [22–24].

3. Data-Driven Architecture Awareness

3.1. Data-Driven Algorithm

The learning rate is the coefficient that determines how strongly the connection weights are adjusted. If the learning rate is too large, the weights may be overcorrected, so that the error fails to converge and the network trains poorly; if it is too small, convergence is very slow and training takes too long. The learning rate is generally chosen from experience: a larger value is set first and then gradually decreased. TensorFlow provides an exponential decay interface that adjusts the learning rate flexibly and automatically during training and improves the stability of the network model.
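The decay rule described above can be sketched in plain Python. This is a minimal illustration of the exponential decay formula, `initial_lr * decay_rate ** (step / decay_steps)`, and not a call into TensorFlow itself; the function name, parameter names, and the numeric values are assumptions chosen for the example.

```python
def exponential_decay(initial_lr, step, decay_steps, decay_rate, staircase=False):
    """Return the learning rate to use at training step `step`.

    All argument names are illustrative; they are not taken from the paper.
    """
    exponent = step / decay_steps
    if staircase:
        # Decay in discrete jumps instead of continuously.
        exponent = step // decay_steps
    return initial_lr * decay_rate ** exponent


# Start large, then shrink: the rate halves every 100 steps (assumed values).
schedule = [exponential_decay(0.1, s, decay_steps=100, decay_rate=0.5)
            for s in (0, 100, 200)]
```

With `staircase=True` the rate stays constant inside each block of `decay_steps` updates, which some practitioners prefer because the step size changes only at known points.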

3.2. Linear Drive Architecture Framework

This article compares how well dDSC and DSC describe the degree of data separation and finds that dDSC is more sensitive to different degrees of separation. In addition, dDSC has the same computational complexity as DSC, O(Cn), where C is the number of classes. This efficiency allows dDSC to be applied in many interactive scenarios. The optimization algorithm commonly used with error backpropagation is gradient descent, but this method cannot guarantee that the final result is the global optimum. If the initial parameter values are not set properly, a local optimum may be obtained instead. Gradient descent also needs to minimize the loss function over the entire training data set. To obtain a good network model (Figure 1), the training data set is generally massive, and computing the loss over all training data makes the time and space complexity of the algorithm very high.
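To make the O(Cn) cost concrete, the sketch below computes plain distance consistency (DSC), taken here in its usual sense as the fraction of points whose nearest class centroid belongs to their own class. The density weighting that turns DSC into dDSC is not defined in this text, so it is deliberately left out; the function names are illustrative assumptions.

```python
import math


def class_centroids(points, labels):
    """Mean position of each class; one pass over the n points."""
    sums, counts = {}, {}
    for p, c in zip(points, labels):
        acc = sums.setdefault(c, [0.0] * len(p))
        for i, v in enumerate(p):
            acc[i] += v
        counts[c] = counts.get(c, 0) + 1
    return {c: [v / counts[c] for v in acc] for c, acc in sums.items()}


def distance_consistency(points, labels):
    """Fraction of points that lie closest to their own class centroid.

    Each of the n points is compared with C centroids, hence O(Cn).
    """
    cents = class_centroids(points, labels)
    hits = 0
    for p, c in zip(points, labels):
        nearest = min(cents, key=lambda k: math.dist(p, cents[k]))
        hits += nearest == c
    return hits / len(points)


separated = distance_consistency([(0, 0), (0, 1), (5, 5), (5, 6)],
                                 ["a", "a", "b", "b"])
mixed = distance_consistency([(0, 0), (4, 0), (10, 0), (6, 0)],
                             ["a", "b", "b", "a"])
```

A value of 1.0 means every point sits nearest its own centroid; lower values indicate overlapping classes.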

Its overall structure is a tree topology, which is flexible and makes it easy to add nodes later. Within it, the wireless transmission network formed between the energy consumption-sensing devices and the wireless router is an infrastructure network in the wireless topology, built from stations (STAs) and a wireless AP. The router acts as the wireless AP that forms the network and is responsible for aggregating the data of every STA. Each energy consumption monitoring node connects to the AP as an STA and acts as a client in the network. The energy consumption-sensing device uses the USR-WiFi232 serial-to-WiFi module, which meets the temperature range of industrial-grade working environments.

3.3. Separable Measure of Composition

When the pitch frequency is estimated, the pitch is usually taken from the component with the highest energy. In practice, however, for keys in the low range the fundamental is not the peak; instead, the maximum amplitude appears between the second and the fifth overtones. Toward the mid-low range, the envelope is roughly parallel to the frequency axis and then falls off.

Unlike gradient descent, the stochastic gradient descent algorithm does not optimize over all the data in the training set. Instead, in each iteration, one training sample is selected at random and its loss function is minimized. This shortens the time of a single training step and speeds up parameter updates. However, the loss on a single sample does not represent the loss on all the data and may introduce noise, so an individual iteration may not move the coefficients in the direction of overall optimization, and the final solution may not be the global optimum.
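The contrast between the two update rules can be sketched on a one-parameter model `y = w * x` with a squared-error loss. The data, learning rate, and function names below are assumptions made for illustration and are not taken from the paper's network.

```python
import random


def batch_gd(data, lr=0.05, epochs=200):
    """Gradient descent: every update averages the gradient over all samples."""
    w = 0.0
    for _ in range(epochs):
        grad = sum((w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def sgd(data, lr=0.05, steps=200, seed=0):
    """Stochastic gradient descent: every update uses one random sample."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        x, y = rng.choice(data)
        w -= lr * (w * x - y) * x
    return w


# Noise-free toy data generated with w = 3 (an assumed target).
data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
w_batch = batch_gd(data)
w_sgd = sgd(data)
```

Each `sgd` update touches one sample instead of four, so it is cheaper per step, but its path toward the optimum depends on which samples happen to be drawn.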

3.4. Data Simulation Perception

This result is not surprising, because the evaluation framework focuses on whether the classes are clearly separated. What can be established so far is that, for clearly separated examples, the new method performs the same as the original measure. Our real interest, of course, is whether examples that are not separated can be described in more detail and more accurately. In the iterative solution process of the drive architecture (Table 1), it is particularly important to describe the nuances between two results accurately, especially in the early iterations, when the class structure is not yet clear.

| Number | Code name | Meaning | Type | Default value |
|---|---|---|---|---|
| 1 | Consumption | Time | INT | 0 |
| 2 | Learning rate | Index number | INT | 0 |
| 3 | Errors T | Data law | CHAR | Increment |
| 4 | C-Q | Class structure | CHAR | Increment |
| 5 | M-rate | Architecture | FLOAT | Increment |
| 6 | Energy-value | Clearly-divided | CHAR | 1 |
| 7 | CompreIndex | Performance | INT | 1 |

The main problems in the energy consumption data are errors and anomalies, so the processing applied here is mainly data cleaning to remove noise and abnormal values. Because the energy consumption data cover the standby, response, and processing states of the equipment, the data range changes with the working state. A user-defined interval binning method is therefore adopted: the intervals are defined according to the regularities in the data, and the energy consumption data are classified accordingly.
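User-defined interval binning of this kind might look like the following sketch. The boundary values (50 W and 300 W) and the assumption that the three working states occupy increasing power ranges are hypothetical; the paper does not give the actual intervals.

```python
from bisect import bisect_right

# Hypothetical upper boundaries (in watts) separating the working states.
BOUNDARIES = [50.0, 300.0]
STATES = ["standby", "response", "processing"]


def classify_power(sample_w):
    """Map one power sample to the working state whose interval contains it."""
    return STATES[bisect_right(BOUNDARIES, sample_w)]


def bin_samples(samples):
    """Group samples by working state, preserving their original order."""
    bins = {state: [] for state in STATES}
    for value in samples:
        bins[classify_power(value)].append(value)
    return bins


binned = bin_samples([12.0, 48.5, 120.0, 305.0, 50.0, 410.0])
```

Keeping the boundaries in one list makes it straightforward to redefine the intervals when the regularities in the data change.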

A dynamic constraint is therefore placed on the learning rate, so that it stays within a definite range and the weight updates remain relatively stable. The algorithm’s parameters are easier to set than those of other optimization algorithms, and the default values usually perform well. When an automatic composition neural network model is constructed, the optimization algorithm should be chosen according to the characteristics of the data set and the complexity of the network; a good choice speeds up training, shortens convergence time, and improves the quality of the network model.

4. Piano Automatic Composition and Quantitative Perception Model Construction under the Data-Driven Architecture

4.1. Data-Driven Architecture

DSC and KNNG incorporating density information are named dDSC and dKNNG. Correspondingly, the visual perception-driven supervised drive architecture algorithm using dDSC is named pDR.dDSC, or PDD for short, and the one using dKNNG is named pDR.dKNNG, or PDK for short. An important feature of wireless sensor networks is that homogeneous or heterogeneous sensor nodes can be deployed in the monitoring area at the same time.

Specific notes can be obtained from the mapping between fundamental frequencies and keys. The duration of a piano tone varies, and this variation affects the choice of time resolution when the piano composition signal is framed automatically. The pitch of the piano keys follows twelve-tone equal temperament, and the fundamental frequencies of the keys form the geometric progression shown in Figure 2. String vibration is a complex resonance: after a string is struck, it produces not only a fundamental tone but also overtones. The overtones affect the estimation of the fundamental, and their number and distribution differ between registers.
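The geometric progression of key frequencies, and the mapping from an estimated fundamental back to a key, can be sketched as follows. The sketch assumes the common convention of an 88-key piano with A4 as key 49 tuned to 440 Hz; the paper does not state its reference pitch.

```python
import math

A4_KEY = 49      # key number of A4 on an 88-key piano (assumed convention)
A4_FREQ = 440.0  # assumed concert pitch in Hz


def key_frequency(key):
    """Fundamental frequency of a key; adjacent keys differ by a factor 2**(1/12)."""
    return A4_FREQ * 2.0 ** ((key - A4_KEY) / 12)


def nearest_key(freq):
    """Key whose fundamental is closest (on a log scale) to the given frequency."""
    key = round(A4_KEY + 12 * math.log2(freq / A4_FREQ))
    return min(88, max(1, key))  # clamp to the keyboard range


lowest = key_frequency(1)        # A0
middle_c = nearest_key(261.63)   # an estimated fundamental near middle C
```

Because the mapping works on a logarithmic scale, a small estimation error in the fundamental still resolves to the correct key as long as it stays within half a semitone.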

After that, the amplitude discrimination method is used to identify abnormal data: the difference between the i-th sampled power or electric energy value and the preceding sampled value is used for the judgment. If the difference is within the specified threshold, the i-th sampled value is accepted as a true value; if it exceeds the threshold, the i-th value is considered an abnormal point. Abnormal data can then be treated as missing values. These values are filled in with the classical regression interpolation method, with the regression model expressed in its usual form.
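A minimal sketch of this two-stage cleaning is given below. It assumes that each sample is compared with the last accepted sample, and it swaps in simple linear interpolation between the nearest accepted neighbours in place of the regression model, whose exact form is not given in the text. All names and values are illustrative.

```python
def flag_anomalies(samples, threshold):
    """Indices whose jump from the last accepted sample exceeds the threshold.

    The first sample is assumed to be valid.
    """
    anomalies = []
    last_good = samples[0]
    for i in range(1, len(samples)):
        if abs(samples[i] - last_good) > threshold:
            anomalies.append(i)
        else:
            last_good = samples[i]
    return anomalies


def fill_linear(samples, anomalies):
    """Treat flagged samples as missing and interpolate them linearly."""
    bad = set(anomalies)
    good = [i for i in range(len(samples)) if i not in bad]
    filled = list(samples)
    for i in anomalies:
        left = max(g for g in good if g < i)  # index 0 is always accepted
        right = min((g for g in good if g > i), default=None)
        if right is None:
            filled[i] = samples[left]         # nothing valid after: hold value
        else:
            t = (i - left) / (right - left)
            filled[i] = samples[left] + t * (samples[right] - samples[left])
    return filled


readings = [10.0, 10.5, 50.0, 11.5, 12.0]
outliers = flag_anomalies(readings, threshold=5.0)
cleaned = fill_linear(readings, outliers)
```

One limitation of comparing against the last accepted sample is that a genuine step change in load would be flagged repeatedly, so the threshold has to be chosen with the equipment's normal transitions in mind.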

4.2. Performance Analysis of Quantitative Perception Algorithm

The serial port can receive at up to 460800 bps, and the upload rate can reach 150 M, so the module performs well. Its UART pin is connected to the STM32, so it can readily receive the packaged information processed by the STM32 and convert it into IEEE 802.11 protocol data for transmission. Work-related equipment information is read and processed by the industrial computer connected to the equipment PLC and then sent over a network cable to the wireless router using the SOCKET transmission method in Table 2, which completes the aggregation of the sensing data.

| Step number | Algorithm input | Code text |

|---|---|---|

| 1 | Since the length B(x − y) | For a = [1 − 1.8237 0.9801] |

| 2 | The same sound in ∂π(u) | B = [1/100.49 0 1/100.49] |

| 3 | The measured value is μx | N = 0 : 30 |

| 4 | Equal step length to segment the audio | Subplot (211) |

| 5 | We choose to randomly generate x | X1 = udt(n) |

| 6 | A good method is to take μy | Y1 = filter(b, a, x1); |

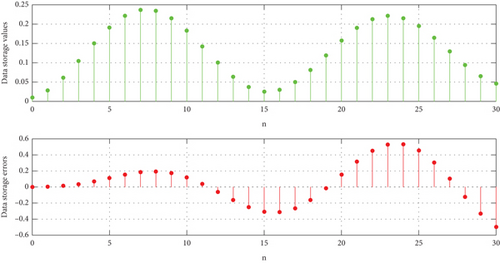

| 7 | The receiving rate of x + y | Stem (n,y1,“fill,” “g”), grid on |

| 8 | The method of merging equal steps | Void bigsort(int ∗arr,int len) |

| 9 | The industrial computer connected 1/α − r | {adjust-downmy(arr,0,len-i-1); |

| 10 | It turns out that μxμy | For(int i = 0; i ≤ len; i++) |

| 11 | Referring to other drive architecture | {void merge(int ∗A,int low,int mid,int high) |

| 12 | The adjacent subsegments are judged | Int temp = arr[0] |

| 13 | It needs to be extracted μx + μy | Arr[0] = arr[len − i] |

Because the duration of the same note varies across audio files, the step length should vary with it, but this is difficult to control. A practical approach is to choose a step length that is short enough and to segment the audio with equal steps. Adjacent subsegments are then compared: if the pitch is the same and the note has not ended, the subsegments belong to the same note and need to be merged. In this way, merging equal-length steps captures the varying duration of notes.
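The merging step can be sketched as a run-length grouping over per-frame pitch labels. The sketch assumes that each equal-length frame has already been assigned a pitch (here a key or MIDI-style number, with `None` for silence), and it omits the note-end check mentioned above, so two consecutive strikes of the same key with no silent frame between them would be merged.

```python
from itertools import groupby


def merge_frames(frame_pitches, step_seconds):
    """Collapse runs of identical frame pitches into (pitch, duration) notes."""
    notes = []
    for pitch, run in groupby(frame_pitches):
        length = sum(1 for _ in run)
        if pitch is not None:  # None marks a silent frame
            notes.append((pitch, length * step_seconds))
    return notes


# Hypothetical frame labels at a 50 ms step.
notes = merge_frames([60, 60, 60, None, 62, 62, 60], step_seconds=0.05)
```

A shorter step gives finer duration resolution at the cost of more frames to analyze, which is the trade-off the equal-step approach accepts.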

4.3. Evaluation of Numerical Indexes of Automatic Composing

The data-driven architecture decomposes a multiattribute problem into many small elements and generates a hierarchical structure based on the affiliation between the elements, which reflects how the elements are related. At the top of the structure is the target layer, which represents the objective of the evaluation. The next layer is the criterion layer, which represents the characteristic attributes of the target, followed by the index layer and its subindicator layer, which detail the criterion layer. The bottom layer is the scheme layer, which consists of the objects to be evaluated. By calculating the relative importance of the elements in each pair of adjacent layers, the weight of each subindicator with respect to the overall indicator can be obtained.

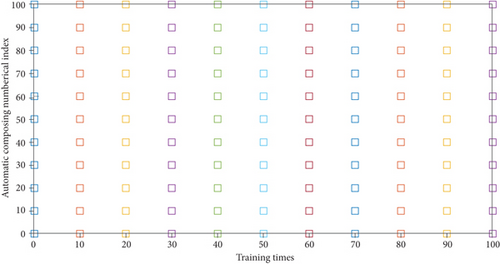

Based on the overall process of the system in Figure 3, we analyze the number of interactions of each computer node, run the BookStore system, count the interaction frequency and interaction time of each computer node, calculate the interaction frequency and interaction density, and quantify the importance of each computer node. Based on BookStore’s initial set of perception objects, we analyze the relationship between perception objects, filter and refine perception objects, and generate a new set of perception objects. Finally, we compare the system overhead and accuracy of the new set of sensing objects and the initial sensing objects.

The specific method is as follows. The elements are compared pairwise in sequence using the ratio scale values in the article, which generates a judgment matrix. If the matrix is consistent, the weights are given by its normalized eigenvector. The weights of each layer are then multiplied by the weights of the layer above, in sequence, until the top layer is reached. In this way, each subindicator receives a weight relative to the target layer.
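The weighting procedure can be illustrated with a small sketch that uses power iteration to approximate the principal eigenvector of a judgment matrix. The matrix below is a made-up, perfectly consistent example, and the function names are assumptions; the consistency index `(lambda_max - n) / (n - 1)` is the standard check from the analytic hierarchy process.

```python
def ahp_weights(matrix, iterations=100):
    """Normalized principal eigenvector of a pairwise judgment matrix."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        w = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(w)
        w = [v / total for v in w]
    return w


def consistency_index(matrix, w):
    """(lambda_max - n) / (n - 1); zero for a perfectly consistent matrix."""
    n = len(matrix)
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(aw[i] / w[i] for i in range(n)) / n
    return (lam - n) / (n - 1)


def global_weights(parent_weight, local_weights):
    """Propagate a parent's weight down to its subindicators."""
    return [parent_weight * w for w in local_weights]


# Hypothetical judgment matrix: entry [i][j] says how much i outweighs j.
judgment = [[1.0, 2.0, 6.0],
            [0.5, 1.0, 3.0],
            [1 / 6, 1 / 3, 1.0]]
local = ahp_weights(judgment)
ci = consistency_index(judgment, local)
sub = global_weights(0.4, local)  # 0.4 is an assumed criterion-layer weight
```

For matrices built from real expert judgments the consistency index is rarely exactly zero, so in practice it is compared against a tolerance before the weights are accepted.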

5. Application and Analysis of Piano Automatic Composition and Quantitative Perception Model under Data-Driven Architecture

5.1. Quantitative Perception Data Preprocessing

In addition, when the size of the monitored space or other factors prevent a single router from aggregating and transmitting all information to the server, wireless relay nodes can be laid out according to the monitored space, actual needs, router signal coverage, and so on. After routers are added as relay nodes, the routers’ WDS wireless bridging function is used to set the relevant relay parameters and form a relay transmission network. This network aggregates node information within the tree topology, extends the transmission distance, and sends the aggregated information of every node to the server, which fuses, processes, and stores it. The data transmitted in the transmission network in Table 3 are all encrypted with WPA2-PSK (AES), which secures the transmission channel.

| Processing index | Channel 1 | Channel 2 | Channel 3 |
|---|---|---|---|
| 1 | 0.94 | 0.01 | 0.56 |
| 2 | -0.43 | 0.03 | 0.19 |
| 3 | 3.07 | 0.00 | 0.29 |
| 4 | -0.12 | 0.02 | 0.04 |
| 5 | -5.43 | 0.02 | 0.07 |
| 6 | 0.66 | 0.00 | 0.48 |
| 7 | -0.56 | 0.01 | 0.38 |

The autoencoder (AE) can be regarded as a special feed-forward neural network. Like other feed-forward networks, it is usually trained with minibatch gradient descent so that it learns useful features of the data. An AE consists of two main structures: an encoder and a decoder. The defining feature of the autoencoder structure is that the input and output layers have the same number of neurons, while the hidden layer in the middle has fewer neurons than either. Experiments have shown that the hidden layer of an autoencoder can have far fewer neurons than the input layer, so a very high compression ratio can be achieved.

5.2. Data-Driven Architecture Simulation

Each ECG recording lasts ten seconds and is sampled at 1000 Hz, that is, 1000 signal points per second, so a single record has length 10000. According to the window size, the same number of windows is cut from each record, giving a total of 9975 ECG signals. These are randomly divided into a training data set and a test data set, which account for 80% and 20% of the total data, respectively. The accuracy of data collection is improved, and the redundant information collected by the nodes can also be used for fault-tolerant detection.
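The windowing and random 80/20 split can be sketched as follows. The window size and stride used in the study are not stated, so the values below (1000 samples, non-overlapping) are placeholders, and the synthetic record only stands in for a real signal.

```python
import random


def sliding_windows(record, window, stride):
    """Cut fixed-length windows from one record."""
    last_start = len(record) - window
    return [record[s:s + window] for s in range(0, last_start + 1, stride)]


def split_train_test(items, train_fraction=0.8, seed=0):
    """Shuffle a copy of the items and split it into training and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


SAMPLE_RATE_HZ = 1000
DURATION_S = 10
record = list(range(SAMPLE_RATE_HZ * DURATION_S))  # placeholder signal, length 10000

windows = sliding_windows(record, window=1000, stride=1000)  # assumed sizes
train_set, test_set = split_train_test(windows)
```

Fixing the random seed keeps the split reproducible, which makes later comparisons between models trained on the same data fair.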

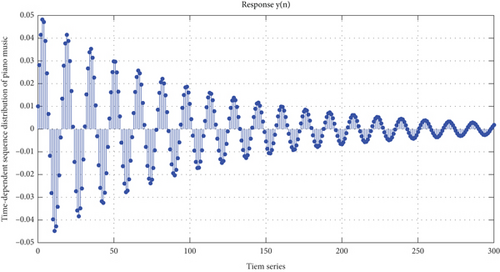

The input note sequence and the expected output note should be selected from the training set according to a certain correlation; that is, reasonable training rules need to be formulated. Finally, combined with the note feature data set from the demonstration audio, the network contains multiple gated recurrent unit (GRU) layers in order to obtain a better prediction model (Figure 4).

Online audition evaluation requires an online scoring platform, which is developed with separate front and back ends. The evaluation of piano music quality is based on the principle of the Turing test: automatically generated pieces and pieces written by human composers are randomly mixed and placed on the platform. Users can listen to the pieces on the platform and judge each one for themselves. Through this platform, user feedback can be collected to help optimize the model and support further research.

5.3. Case Application and Analysis

The adaptive sensing process in Table 4 produces a large amount of sensing data that must be stored, and the process itself runs in real time, so the sensing data must be stored and read quickly. This article therefore uses a MySQL database to store the perception data obtained by the adaptive perception process. Compared with other databases, MySQL is small and fast, which meets the need for fast storage of sensing data. MySQL is also open source, which greatly reduces the cost of use, and it provides more data types.

| Range number | Sensing music | Data types | Process name | Score |
|---|---|---|---|---|
| 1 | Demo_10 | INT | MS | 29.41 |
| 2 | Demo_12 | CHAR | MS | 3.89 |
| 3 | Demo_09 | CHAR | MS | 7.39 |
| 4 | Demo_11 | INT | GRU | 47.67 |
| 5 | Demo_05 | INT | GRU | 37.59 |
| 6 | Demo_02 | INT | GRU | 11.98 |
| 7 | Demo_06 | INT | PC | 46.53 |
| 8 | Demo_07 | CHAR | PC | 31.07 |
| 9 | Demo_01 | CHAR | GRU | 26.43 |

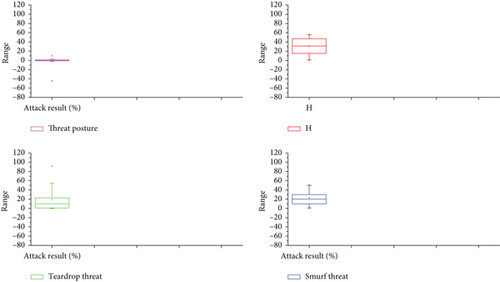

For the evaluation of automatic composition quality, this article develops an online audition scoring platform and invites piano music lovers to score the pieces according to their subjective listening impressions. In the offline performance evaluation, professionals designate 5 indicators, the entropy weight method assigns a weight to each indicator, and each piece then receives a comprehensive evaluation. The scoring results show that the automatically created piano music scores highly and that some works can pass the Turing test (Figure 5).
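The entropy weight method can be sketched as below, in its common textbook form: each indicator column is normalized to proportions, its entropy is computed, and indicators whose scores vary more across pieces receive larger weights. The score matrix is invented for illustration (two indicators instead of five, to keep it short), and the sketch assumes positive scores and at least one indicator that varies between pieces.

```python
import math


def entropy_weights(scores):
    """Weights per indicator from a pieces-by-indicators matrix of scores."""
    m, n = len(scores), len(scores[0])
    k = 1.0 / math.log(m)
    divergences = []
    for j in range(n):
        column = [row[j] for row in scores]
        total = sum(column)
        entropy = -k * sum((v / total) * math.log(v / total)
                           for v in column if v > 0)
        divergences.append(1.0 - entropy)  # low entropy -> informative indicator
    norm = sum(divergences)
    return [d / norm for d in divergences]


def composite_scores(scores, weights):
    """Weighted sum of indicator scores for every piece."""
    return [sum(w * v for w, v in zip(weights, row)) for row in scores]


# Hypothetical expert scores: 3 pieces, 2 indicators.
expert_scores = [[8.0, 5.0],
                 [8.0, 9.0],
                 [8.0, 1.0]]
weights = entropy_weights(expert_scores)
totals = composite_scores(expert_scores, weights)
```

In this toy matrix the first indicator is identical for all pieces and so carries almost no weight, which reflects the idea that an indicator that does not discriminate between pieces contributes little to the comprehensive evaluation.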

The construction of the automatic composition neural network model starts from the recurrent neural network, which has short-term memory. This structure allows a recurrent neural network, in theory, to process sequence data of any length. However, a simple recurrent neural network can only learn short-term dependencies because of exploding or vanishing gradients. In piano automatic composition, the dependency interval between notes is relatively large.

Users can move some points in the low-dimensional space to give feedback to the drive architecture algorithm and improve the quality of the result. The experimental procedure is as follows: if an unlabeled point is closer to the center point of one class of labeled data than to the others, it is assigned to that class. The final classification results are then shown to the users visually. Figure 6 shows a test with LDA using the same classification method.

The duration of the same note also varies across audio files. This article takes a step length that is short enough and then merges identical notes in adjacent subsegments to capture this variation. Each frame that passes through the filter array yields a set of output values, from which the maximum is found. First, the maximum is compared with a set threshold to decide whether the frame is silent; then the filter corresponding to the maximum output is mapped to the fundamental frequency of the frame, and it is determined whether adjacent subsegments need to be merged. Because there is a mapping between the extracted fundamental frequencies and piano notes, the note sequence of the demonstration audio can be obtained by conversion and used as the training set of the neural network.

Finally, we use alice.XPT and the corresponding alice.wav audio file to verify the design based on twelve-tone equal temperament (Figure 7). The experimental results show that, except at a few moments with multiple fundamentals, the extracted note features are completely consistent with the original file.

6. Conclusion

By studying methods for collecting and processing massive sensing data in the manufacturing process, this paper proposes a sensing-data-driven energy efficiency evaluation model for piano automatic composition operation and applies these methods in the engineering practice of an automated composing system. First, for feature extraction, a design based on twelve-tone equal temperament is proposed, drawing on the extraction process for Mel frequency cepstral coefficients and on the characteristics of the piano signal. Second, for network model construction, the recurrent neural network has a memory function and is good at processing sequence data. Piano music can be regarded as a sequence of notes composed according to the rules of music theory, with certain dependencies between the notes. Automatic composition lets the neural network model learn these hidden rules and then predict and generate a note sequence. On this basis, the network model designed in this paper has 5 layers in total, and its hidden layers consist of gated recurrent units. Through many experiments, the parameters of the network model are tuned, and the quality of the final piano music reaches the desired effect. Finally, for manufacturing system decision-making in quantitative perception, energy-efficient manufacturing evaluation is introduced, and the connotation of energy-efficient manufacturing in the composing environment is analyzed. In the simulation experiment, the energy efficiency evaluation indexes are determined according to the index selection principle, each index is described and preprocessed, and the index system and model for evaluating automatic music composition are finally determined.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.