Feature Extraction and Classification of Music Content Based on Deep Learning

Abstract

To study the use of deep learning for extracting and classifying the content of music samples, this work proposes an algorithm for recognizing and classifying music genres based on a deep belief network (DBN), extends it to the recognition and classification of traditional Chinese musical instruments, and tests its performance after training through experiments on real data. The experimental results are as follows. The improved DBN algorithm achieves the highest accuracy for music genre recognition and classification, reaching 75.8%, which is higher than the traditional methods compared. When the improved DBN identifies and classifies traditional Chinese musical instruments through a Softmax layer, the accuracy reaches 99.2%. When DBN is combined with Softmax and only a small fraction of the training samples are labeled for network fine-tuning, the accuracy still exceeds 90%, which reduces the labeling workload in the early stage. This study addresses the problems of heavy workload and low accuracy in music content recognition, classification, and feature extraction.

1. Introduction

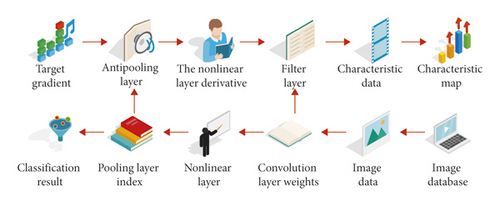

Music information retrieval (MIR) has evolved to meet the needs of a growing number of users [1]. It includes many subtasks: (1) Music genre recognition and classification: for an unknown song or piece of music, identify its musical information and determine its genre. For example, George divides music into ten genres based on content [2]. (2) Musical instrument recognition and classification: identify the types of instruments in an unknown music fragment and determine their specific names. (3) Composer recognition and classification: determine which composer an unknown music fragment belongs to. (4) Singer recognition and classification: identify and classify the singer of an unknown song. (5) Emotion recognition and classification: identify and classify the type of emotion expressed by a song or an instrumental piece. Among these, music genre recognition and classification is particularly important for music retrieval. Many users are interested only in a particular type of music, and a genre recognition and classification system sorts music by style [3–5] so that music can be recommended according to users' interests, which makes it convenient for them to quickly retrieve and efficiently manage the music they like. Figure 1 shows the deep learning approach to music content feature visualization and model evaluation.

2. Literature Review

Nasr et al. proposed a music genre recognition and classification method based on traditional acoustic features; the recognition rate over 10 music genres was 61% [6]. Zhang et al. analyzed how the choice of features affects music genre recognition and classification, using three classifiers: support vector machine (SVM), multilayer support vector machine, and linear discriminant analysis (LDA). They found that the feature set combining short-time Fourier transform features (FFT), Mel frequency cepstral coefficients (MFCC), pitch features, and beat features gave the highest recognition and classification accuracy [7]. Yang et al. proposed a method for recognizing and classifying music genres based on wavelet theory, in which statistics of the wavelet coefficients are computed as features. They compared the SVM, GMM, LDA, and KNN classifiers and found that SVM gave the best results [8]. Zhao et al. proposed an unsupervised dimensionality reduction method (NMPCA) for third-order tensors; their results show that NMPCA produces more discriminative features than the multilinear subspace analysis methods widely used for recognition and classification [9]. For music genre recognition and classification, Sun proposed a high-order temporal feature that combines third-order statistics of the spectrum to build segment-level features. However, the dimension of the feature vector grows and the computation becomes more complex [10]. Although research on music genres began later in China, it has made some progress.
A forward selection algorithm was used to choose among basic features such as root mean square energy, zero-crossing rate, strongest beat, strength of the strongest beat, spectral centroid, spectral flux, spectral variability, cepstral coefficients, and linear prediction coefficients, and a method for recognizing and classifying multiple music genres combined with music labels was proposed. The system reached an accuracy of almost 87% on the task of recognizing and classifying 8 music genres [11].

This article proposes an algorithm for recognizing and classifying music genres based on a deep belief network. Only basic features of the music signal, such as MPC, FFT, and MFCC, need to be fed into the network, which then learns from and analyzes the input feature data automatically. This yields more suitable abstract features that reflect the nature of different kinds of music, greatly reduces the complexity of feature extraction, and makes music genre recognition and classification more effective and more intelligent.

3. Research Methods

3.1. Deep Learning

Since the birth of machine learning, its development can be divided, according to the hierarchical structure of the model, into two stages: shallow learning and deep learning. Shallow models generally contain only one or two layers of nonlinear transformation [12, 13]. A deep learning model is a deep neural network with many hidden layers. With multiple hidden layers, the network can perform multilayer nonlinear learning on the input data and, with adequate training, can approximate complex functions arbitrarily well and learn more complex and more important features [14, 15]. The comparison between shallow learning and deep learning is shown in Table 1.

| Model category | Shallow learning | Deep learning |
|---|---|---|
| Number of layers | 1–2 layers | More than 3 layers |
| Model expression ability | Limited | Powerful |
| Theory | Mature theoretical basis | Difficult to analyze theoretically |
| Training difficulty | Easy | Complex |
| Data demand | Small | Large |
| Feature extraction method | Manual feature extraction | Learned autonomously by the network |
| Applicable occasions | Tasks requiring simple features | Tasks requiring highly abstract features: image processing, natural language processing, music recognition, etc. |

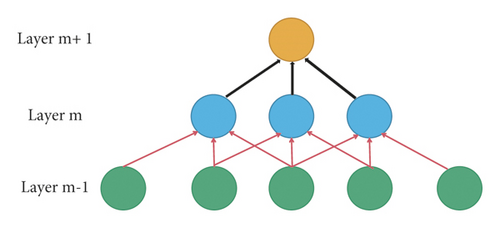

As research on deep learning has deepened, researchers have proposed many effective deep learning architectures. The appropriate network structure is chosen according to the purpose, which may be synthesis, generation, recognition, or classification. Deep learning structures can be divided into generative deep structures, discriminative deep structures, and hybrid deep structures [16]. The convolutional neural network (CNN) is a very important deep learning model. Its structure is closer to that of biological neural networks and includes two special layer types: the convolution layer and the subsampling layer. It is a partially connected neural network. To exploit local spatial correlation in each layer, a CNN enforces local connections between neurons in adjacent layers [17]. In other words, the input of each neuron in hidden layer m comes from a local group of units in layer m − 1; see Figure 2.

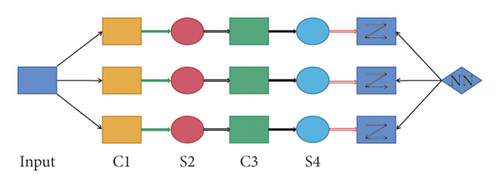

A CNN is a multilayer neural network in which each layer contains multiple two-dimensional planes and each plane contains multiple neurons. Its basic structure is shown in Figure 3.

As seen in Figure 3, C1 and C3 are convolution layers, and S2 and S4 are subsampling layers. C1 to S4 are the four hidden layers of the network. Each layer contains three groups of planes, and each plane contains multiple neurons [18, 19].
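To make local connectivity and subsampling concrete, the following is a minimal one-dimensional sketch, not the network used in this paper. The function names `conv1d_valid` and `subsample_mean`, the kernel weights, and the input values are illustrative assumptions; the convolution is written in the correlation form commonly used in CNNs.

```python
def conv1d_valid(signal, kernel):
    """Convolution layer: each output unit sees only len(kernel) adjacent inputs."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def subsample_mean(values, size=2):
    """Subsampling layer: average non-overlapping groups of `size` units."""
    return [
        sum(values[i:i + size]) / size
        for i in range(0, len(values) - size + 1, size)
    ]


layer_m_minus_1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # made-up activations
kernel = [0.5, 0.0, -0.5]                          # hypothetical shared weights

layer_m = conv1d_valid(layer_m_minus_1, kernel)    # 4 units, 3 local inputs each
pooled = subsample_mean(layer_m)                   # 2 units after subsampling
```

Because the same three weights are reused at every position, the layer has far fewer parameters than a fully connected layer of the same size.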

3.2. Music Feature Extraction

Music features characterize the essential attributes of music while discarding redundant information, and they are expressed as feature vectors with computable values. Timbre is reflected mainly by short-time features; tone and rhythm are characterized by long-time features, which can also be obtained by combining short-time features [20]. Therefore, the experiments in this paper mainly extract short-time features as the original features of the music signal. Short-time features can be divided into the following three categories.

3.2.1. Time Domain Characteristics of Music

(1) Short-time energy

Short-time energy describes how the amplitude of a music signal changes over time, and this amplitude varies significantly. Because the energy of a music segment differs sharply from that of a silent segment, this feature is often used to identify silent segments and thus to determine the beginning, transitions, and end of a piece of music [21].

If x(n) denotes the value of the nth sample of a piece of music, its short-time energy is

$$E_n = \sum_{m=n-N+1}^{n} \left[ x(m)\, w(n-m) \right]^2 \tag{1}$$

where w(n − m) is the window function and N is the window length.

(2) Short-time average zero-crossing rate

The short-time average zero-crossing rate is the number of times the waveform of the music signal crosses zero within each frame. The higher the zero-crossing rate, the more high-frequency content the frame contains. Like short-time energy, this feature is mainly used to detect silent frames. It is calculated as

$$Z_n = \frac{1}{2} \sum_{m=n-N+1}^{n} \left| \operatorname{sgn}[x(m)] - \operatorname{sgn}[x(m-1)] \right| w(n-m) \tag{2}$$

where w(n − m) is the window function and sgn[x] is the sign function:

$$\operatorname{sgn}[x] = \begin{cases} 1, & x \ge 0 \\ -1, & x < 0 \end{cases} \tag{3}$$
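The two time-domain features can be sketched for a single frame as follows. This is a minimal illustration that assumes a rectangular window by default and the common convention sgn[x] = 1 for x ≥ 0 and −1 otherwise; the function names and the test frames are hypothetical.

```python
def sgn(x):
    """Sign function: 1 for x >= 0, -1 otherwise (assumed convention)."""
    return 1 if x >= 0 else -1


def frame_energy(frame, window=None):
    """Short-time energy of one frame: sum of squared windowed samples."""
    if window is None:
        window = [1.0] * len(frame)        # rectangular window (assumption)
    return sum((x * w) ** 2 for x, w in zip(frame, window))


def frame_zero_crossings(frame):
    """Half the summed sign changes, i.e. the number of zero crossings."""
    return 0.5 * sum(
        abs(sgn(frame[m]) - sgn(frame[m - 1])) for m in range(1, len(frame))
    )


loud = [1.0, -1.0, 1.0, -1.0]      # made-up frame that alternates in sign
silent = [0.0, 0.0, 0.0, 0.0]      # made-up silent frame

loud_energy, loud_zc = frame_energy(loud), frame_zero_crossings(loud)
silent_energy, silent_zc = frame_energy(silent), frame_zero_crossings(silent)
```

A silent frame yields zero energy and no crossings, which is why both features are useful for locating the start and end of a piece.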

3.2.2. Frequency Domain Characteristics of Music

The music signal is transformed from the time domain to the frequency domain, and the frequency-domain signal is then analyzed and processed. The resulting features are called the frequency domain features of music. In the following, F(ω) denotes the Fourier transform of one frame of the music signal, and l0 and h0 denote the minimum and maximum frequencies of the music signal in the frequency domain, respectively; this notation is common in frequency-domain music signal processing.

(1) Spectral centroid

The spectral centroid measures the center of mass of the spectrum of a music signal. The larger the spectral centroid, the greater the high-frequency content of the signal. It is calculated as

$$C = \frac{\int_{l_0}^{h_0} \omega \left| F(\omega) \right|^2 d\omega}{\int_{l_0}^{h_0} \left| F(\omega) \right|^2 d\omega} \tag{4}$$

(2) Spectral energy

Spectral energy represents the frequency-domain energy of one frame of the music signal and is calculated as

$$E = \int_{l_0}^{h_0} \left| F(\omega) \right|^2 d\omega \tag{5}$$
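A discrete version of these two frequency-domain features can be sketched as follows. The sketch assumes a power-weighted centroid, replaces the integrals with sums over DFT bins from 0 Hz to half the sampling rate, and uses a deliberately simple O(n²) DFT; the function names, sampling rate, and test tone are illustrative assumptions.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes |F(k)| of the non-negative frequency bins (naive DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_energy(mags):
    """Sum of squared magnitudes over the analysed band."""
    return sum(m * m for m in mags)


def spectral_centroid(mags, sample_rate, n_fft):
    """Power-weighted mean frequency in Hz; returns 0.0 for a silent frame."""
    power = [m * m for m in mags]
    total = sum(power)
    if total == 0:
        return 0.0
    freqs = [k * sample_rate / n_fft for k in range(len(mags))]
    return sum(f * p for f, p in zip(freqs, power)) / total


n_fft, sample_rate = 32, 3200                       # made-up values
tone = [math.sin(2 * math.pi * 4 * t / n_fft) for t in range(n_fft)]
mags = dft_magnitudes(tone)
centroid = spectral_centroid(mags, sample_rate, n_fft)   # close to 400 Hz
energy = spectral_energy(mags)
```

For a pure tone that falls exactly on a DFT bin, the centroid coincides with the tone frequency, which gives a quick sanity check of the implementation.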

3.2.3. Cepstrum Domain Characteristics of Music

(1) Linear prediction cepstral coefficient (LPCC)

Linear prediction coding (LPC) was first used in speech signal processing to estimate the fundamental frequency, formants, and so on. Later, researchers found that some characteristics of music signals are also reflected by LPCC, so this feature is widely used to analyze music signals as well [22]. It combines the two principles of linear prediction and the cepstrum. Its all-pole system function is

$$H(z) = \frac{1}{1 - \sum_{k=1}^{p} a_k z^{-k}} \tag{6}$$

where p is the prediction order and a_k are the prediction coefficients.

If the impulse response of this system is h(n) and its cepstrum is ĥ(n), the cepstrum is obtained from

$$\hat{H}(z) = \ln H(z) = \sum_{n=1}^{\infty} \hat{h}(n)\, z^{-n} \tag{7}$$

(2) Mel frequency cepstral coefficient (MFCC)

MFCC is a cepstral feature computed in the Mel frequency domain. The human ear perceives frequency nonlinearly, and the Mel scale maps this nonlinear perception onto a linear scale, which makes it more convenient to model how the ear perceives music. The relationship between the Mel frequency f_mel and the linear frequency f (in Hz) is

$$f_{\mathrm{mel}} = 2595 \log_{10}\left(1 + \frac{f}{700}\right) \tag{8}$$

The features described above are only a few of the many music features that can be extracted manually. The features needed for music genre classification and for traditional Chinese musical instrument recognition may differ depending on the task.
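The two cepstral-domain computations can be sketched as follows. The sketch assumes the all-pole model H(z) = 1 / (1 − Σ a_k z^{-k}), for which the cepstrum follows the standard recursion ĥ(n) = a_n + Σ_{k=1}^{n−1} (1 − k/n) a_k ĥ(n − k), and the widely used Mel mapping f_mel = 2595 log10(1 + f/700). The function names and the example coefficient are hypothetical.

```python
import math


def lpc_to_cepstrum(a, n_ceps):
    """LPCC from LPC coefficients a[k-1] = a_k via the standard recursion."""
    p = len(a)
    c = [0.0] * (n_ceps + 1)                   # c[0] is unused
    for n in range(1, n_ceps + 1):
        acc = a[n - 1] if n <= p else 0.0      # a_n is zero beyond order p
        for k in range(1, min(n - 1, p) + 1):
            acc += (1.0 - k / n) * a[k - 1] * c[n - k]
        c[n] = acc
    return c[1:]


def hz_to_mel(f_hz):
    """Linear frequency in Hz to Mel frequency."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(f_mel):
    """Inverse mapping, Mel frequency back to Hz."""
    return 700.0 * (10.0 ** (f_mel / 2595.0) - 1.0)


# First-order example: ln(1 / (1 - a z^-1)) = sum_n (a^n / n) z^-n
lpcc = lpc_to_cepstrum([0.5], 3)
mel_1k = hz_to_mel(1000.0)      # close to 1000 Mel by construction of the scale
```

The first-order case has a closed form, so it is a convenient check that the recursion is implemented with the intended sign convention.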

3.3. Music Genre Recognition and Classification Based on Deep Belief Networks

The task of music genre recognition and classification is mainly divided into three steps: music signal preprocessing, music feature extraction, and music genre discrimination.

3.3.1. Signal Preprocessing

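(1) Pre-emphasis

Pre-emphasis is generally performed with a first-order digital filter before the feature parameters are extracted. The transfer function of the filter is

$$H(z) = 1 - \mu z^{-1} \tag{9}$$

where μ is the pre-emphasis coefficient, with a value close to 1. The pre-emphasized signal is

$$y(n) = x(n) - \mu\, x(n-1) \tag{10}$$

(2) Framing

Before features are extracted from a music signal, the signal must first be divided into frames, that is, into short segments with stable statistical characteristics. Each segment is called a frame. For a signal of L samples, a frame length of N samples, and a frame shift of S samples, the theoretical number of frames is

$$f_n = \left\lfloor \frac{L - N}{S} \right\rfloor + 1 \tag{11}$$

(3) Windowing

After all genre music clips have been framed, a window is applied to each frame in order to improve the continuity between frames, reduce edge effects, and reduce spectral leakage.

Three commonly used window functions are the rectangular window, the Hanning window, and the Hamming window. For a window of length M, they are defined in (12)–(14):

$$w(n) = 1, \quad 0 \le n \le M-1 \tag{12}$$

$$w(n) = 0.5\left[1 - \cos\left(\frac{2\pi n}{M-1}\right)\right], \quad 0 \le n \le M-1 \tag{13}$$

$$w(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{M-1}\right), \quad 0 \le n \le M-1 \tag{14}$$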

A comparison of the performance of the three window functions is shown in Table 2.

| Window type | Main lobe width (2π/M) | First side lobe attenuation (dB) |
|---|---|---|
| Rectangular window | 2 | 13 |
| Hanning window | 4 | 32 |
| Hamming window | 4 | 43 |
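The three preprocessing steps can be sketched together as follows. This is a minimal illustration under stated assumptions: a pre-emphasis coefficient of 0.97 (a common choice that the paper does not specify), the first sample passed through unchanged, a Hamming window, and made-up frame length and frame shift values. All function names are hypothetical.

```python
import math


def pre_emphasize(x, mu=0.97):
    """First-order high-pass filter y(n) = x(n) - mu * x(n-1)."""
    return [x[0]] + [x[n] - mu * x[n - 1] for n in range(1, len(x))]


def num_frames(n_samples, frame_len, hop):
    """Number of complete frames that fit into the signal."""
    if n_samples < frame_len:
        return 0
    return (n_samples - frame_len) // hop + 1


def hamming(m):
    """Hamming window of length m (m > 1)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (m - 1)) for n in range(m)]


def frame_and_window(x, frame_len, hop):
    """Split x into overlapping frames and apply a Hamming window to each."""
    w = hamming(frame_len)
    return [
        [x[start + n] * w[n] for n in range(frame_len)]
        for start in range(0, len(x) - frame_len + 1, hop)
    ]


signal = [float(n % 5) for n in range(10)]          # made-up samples
emphasized = pre_emphasize(signal)
frames = frame_and_window(emphasized, frame_len=4, hop=2)
```

A frame shift smaller than the frame length makes adjacent frames overlap, which is what keeps the frame-to-frame feature trajectory smooth.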

3.3.2. Music Feature Extraction

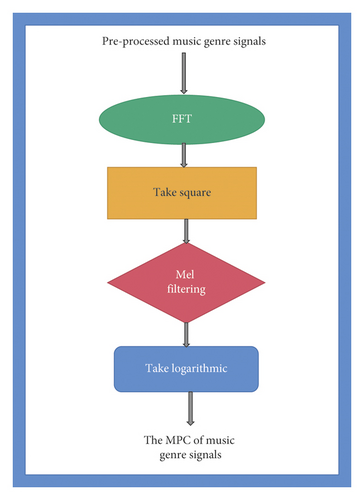

In this paper, basic features such as the Mel-phon coefficient (MPC) are first extracted manually, and a deep belief network is then used to learn more important features of the different music genres. The process of extracting the MPC feature parameters of a music genre signal is shown in Figure 4.

3.3.3. Music Genre Discrimination

A deep belief network (DBN) is a deep neural network formed by stacking several restricted Boltzmann machines (RBMs). In this work, an algorithm for recognizing and classifying music genres based on a deep belief network is studied experimentally and improved.
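The stacking of RBMs can be illustrated with a small greedy layer-wise pretraining sketch. It is not the improved algorithm of this paper: it assumes Bernoulli (binary) units, inputs scaled to [0, 1], one step of contrastive divergence (CD-1), per-sample updates, and made-up learning rate and layer sizes. The class and function names are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Bernoulli restricted Boltzmann machine trained with CD-1."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible      # visible biases
        self.c = [0.0] * n_hidden       # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b))]

    def cd1_update(self, v0, lr):
        h0 = self.hidden_probs(v0)
        h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
        v1 = self.visible_probs(h0_sample)      # one-step reconstruction
        h1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            for j in range(len(h0)):
                self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(len(h0)):
            self.c[j] += lr * (h0[j] - h1[j])


def pretrain_dbn(data, hidden_sizes, epochs=10, lr=0.1, seed=0):
    """Train one RBM per hidden layer; each RBM's output feeds the next."""
    rng = random.Random(seed)
    rbms, layer_input = [], data
    for n_hidden in hidden_sizes:
        rbm = RBM(len(layer_input[0]), n_hidden, rng)
        for _ in range(epochs):
            for v in layer_input:
                rbm.cd1_update(v, lr)
        rbms.append(rbm)
        layer_input = [rbm.hidden_probs(v) for v in layer_input]
    return rbms
```

Pretraining uses no labels. In a full DBN classifier, the pretrained weights would then initialize a feed-forward network that is fine-tuned with labeled samples, a step this sketch omits.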

3.4. Recognition of Traditional Chinese Musical Instruments Based on Deep Belief Networks

Compared with traditional methods, the DBN-based music genre recognition and classification method achieves better results while reducing the workload of manually extracting complex music features. Taking traditional Chinese musical instruments as an example, this section proposes an algorithm for recognizing and classifying them based on a deep belief network. The music library of traditional Chinese musical instruments used in the experiment consists of six categories: guzheng, pipa, erhu, dizi (bamboo flute), hulusi, and suona (see Table 3).

| Label | Traditional Chinese musical instrument | Training set music clips | Validation set music clips | Test set music clips |
|---|---|---|---|---|
| 1 | Guzheng | 60 | 20 | 20 |
| 2 | Pipa | 60 | 20 | 20 |
| 3 | Erhu | 60 | 20 | 20 |
| 4 | Dizi | 60 | 20 | 20 |
| 5 | Hulusi | 60 | 20 | 20 |
| 6 | Suona | 60 | 20 | 20 |

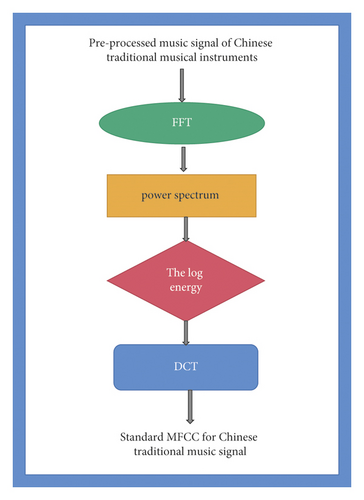

After the music database of traditional Chinese musical instruments is established, preprocessing is essentially the same as for the music genre task above and includes pre-emphasis, framing, and windowing. The original feature parameters of traditional Chinese musical instruments have a great impact on recognition and classification performance. In this experiment, a different feature, the Mel frequency cepstral coefficient (MFCC), is extracted, because the key factor distinguishing instrument categories is timbre, and MFCC has been shown to characterize the timbre of music. The specific flow chart is shown in Figure 5.

In this experiment, the MFCC feature vector is used as the input feature of the network, and the deep belief network serves as the deep neural network that extracts abstract features. The extracted abstract features are then passed to the output layer, a Softmax layer, which predicts the instrument class.
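The role of the Softmax output layer can be sketched as follows. The six scores are made-up activations standing in for the output-layer inputs, and the function names are hypothetical; the labels 1 to 6 follow the numbering in Table 3.

```python
import math


def softmax(scores):
    """Convert raw output-layer scores into class probabilities."""
    m = max(scores)                               # subtract max for stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(scores):
    """Return the 1-based label of the most probable class."""
    probs = softmax(scores)
    return probs.index(max(probs)) + 1


scores = [0.1, 2.0, 0.3, -1.0, 0.0, 0.5]          # made-up scores for 6 classes
probs = softmax(scores)
label = predict_label(scores)                     # label 2 is pipa in Table 3
```

Subtracting the maximum score before exponentiating leaves the probabilities unchanged but avoids overflow for large scores.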

4. Result Analysis

4.1. Comparison of Traditional Methods and Deep Belief Networks for Music Genre Classification

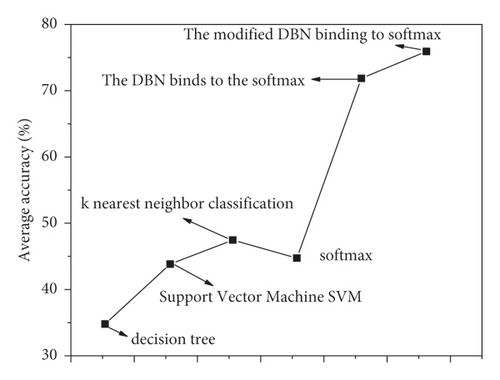

The model is trained on the training set samples, the trained model is then used to predict the genres of the test set samples, and the predicted genre labels are finally compared with the actual genre labels to obtain the average recognition and classification accuracy. The classification algorithm based on DBN combined with Softmax and the traditional classification methods were each trained, validated, and tested on 10 music genres; the average accuracies are shown in Figure 6.

As shown in Figure 6, the recognition accuracy of the traditional classification methods is very low. The lowest, the decision tree, reaches only 34.3%, and the highest, the support vector machine (SVM), reaches only 46.8%. After further feature learning with a deep belief network, music genre recognition and classification improves significantly. The original deep belief network algorithm reaches 71.4%, and the improved deep belief network algorithm reaches 75.8%, an increase of more than 4 percentage points.
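The accuracy measure used in these comparisons is the share of test samples whose predicted label matches the actual label. A minimal sketch follows; the function name and the five example labels are made up for illustration, while the two percentages in the last line are the DBN accuracies reported above.

```python
def accuracy(predicted, actual):
    """Percentage of samples whose predicted label equals the actual label."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return 100.0 * correct / len(actual)


predicted = ["rock", "jazz", "pop", "jazz", "rock"]    # made-up predictions
actual = ["rock", "jazz", "jazz", "jazz", "pop"]       # made-up ground truth
example_accuracy = accuracy(predicted, actual)         # 3 of 5 correct

# Gain of the improved DBN over the original DBN, in percentage points.
gain = round(75.8 - 71.4, 1)
```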

4.2. Recognition and Classification Algorithm for Traditional Chinese Musical Instruments Based on the Deep Belief Network

In this experiment, to recognize and classify traditional Chinese musical instruments, the MFCC feature vector was selected as the network input. The deep belief network was used as the deep neural network to extract abstract features, and the abstract features obtained were then used to predict the instrument class.

4.2.1. Parameter Selection for the Deep Belief Network Model

Table 4 shows the prediction accuracy for traditional Chinese musical instruments when the deep belief network contains only one hidden layer and the number of hidden nodes is varied.

| Number of hidden layer nodes | 39 | 78 | 117 | 156 | 195 | 234 | 273 | 312 | 351 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 80.2 | 90.4 | 97.9 | 97.7 | 98.3 | 98.2 | 98.0 | 98.2 | 98.1 |

As shown in Table 4, when the deep belief network contains only one hidden layer, accuracy essentially converges once the number of nodes reaches 117 and stays at about 98% as more nodes are added. Table 5 compares the prediction accuracy of a DBN with two hidden layers for different numbers of nodes in the second hidden layer, with the number of nodes in the first hidden layer fixed at 117.

| Number of nodes in the second hidden layer | 39 | 78 | 117 | 156 | 195 | 234 | 273 | 312 | 351 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 98.9 | 99.0 | 98.9 | 99.0 | 98.9 | 99.0 | 99.1 | 99.2 | 99.2 |

As can be seen in Table 5, changing the number of nodes in the second hidden layer has little effect on accuracy, which stays at about 99%. Table 6 compares the prediction accuracy of a DBN with three hidden layers for different numbers of nodes in the third hidden layer, with 117 nodes in the first hidden layer and 78 nodes in the second.

| Number of nodes in the third hidden layer | 39 | 78 | 117 | 156 | 195 | 234 | 273 | 312 | 351 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 99.2 | 99.1 | 99.0 | 99.2 | 99.1 | 99.1 | 99.1 | 99.2 | 99.0 |

As can be seen from Table 6, changing the number of nodes in the third hidden layer also has little effect on prediction accuracy, which stays at around 99.1%. Comparing the three tables above shows that the first hidden layer has the greatest influence on the prediction result and on network performance. Based on the results in Tables 4–6, the network configuration used in this experiment is shown in Table 7.

| Parameter name | Parameter value |
|---|---|
| Batch size | 50 |
| Pretraining learning rate | 0.001 |
| Fine-tuning learning rate | 0.1 |
| Number of pretraining epochs per layer | 10 |
| Number of fine-tuning epochs | 100 |
| Contrastive divergence steps | 1 |
| Number of input layer nodes | 39 |
| Number of output layer nodes | 6 |
| Number of nodes in the first hidden layer | 117 |
| Number of nodes in the second hidden layer | 78 |
| Number of nodes in the third hidden layer | 39 |
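The layer sizes in Table 7 determine the size of the network. The following sketch writes the configuration as a plain Python dictionary and counts the trainable parameters, assuming fully connected layers with one bias per non-input node; the key names and the function name are hypothetical.

```python
# Hyperparameters from Table 7; the key names are illustrative assumptions.
DBN_CONFIG = {
    "batch_size": 50,
    "pretrain_learning_rate": 0.001,
    "finetune_learning_rate": 0.1,
    "pretrain_epochs_per_layer": 10,
    "finetune_epochs": 100,
    "cd_steps": 1,
    "layer_sizes": [39, 117, 78, 39, 6],   # input, three hidden, output
}


def count_parameters(layer_sizes):
    """Return (weights, biases) for fully connected consecutive layers."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights, biases


n_weights, n_biases = count_parameters(DBN_CONFIG["layer_sizes"])
```

Under these assumptions the network has 16,965 weights and 240 biases, which is small by deep learning standards and consistent with the modest size of the instrument data set.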

4.2.2. Average Accuracy of the Deep Belief Network for Traditional Chinese Musical Instrument Classification

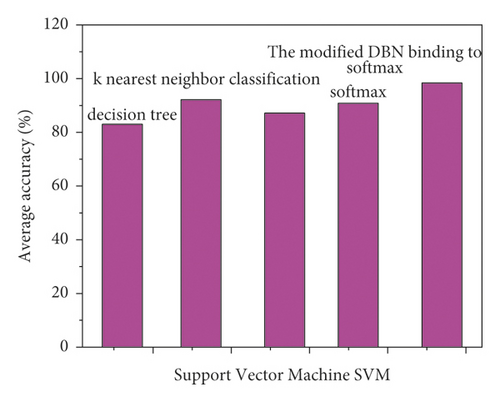

The model is trained on the training set of instrument samples and validated on the validation set, and it is then used to predict the instrument samples of the test set. Finally, the predicted instrument labels are compared with the actual instrument labels to obtain the average recognition and classification accuracy. The DBN-based classification algorithm and the traditional classifiers were each trained, validated, and tested on the six types of traditional Chinese musical instruments; the average accuracies are shown in Figure 7.

As shown in Figure 7, feeding MFCC features directly into traditional classifiers gives relatively poor results: the decision tree has the lowest average accuracy, 83.9%, and the k-nearest neighbor (KNN) classifier has the highest, 92.7%. When abstract features are first learned by the deep belief network and the instruments are then classified through the Softmax layer, the result is the best, with an accuracy of 99.2%, which is 6.5 percentage points higher than KNN. The experimental results show that samples of traditional Chinese musical instruments are recognized and classified better after further learning by the deep belief network.

4.2.3. Recognition and Classification Accuracy for Individual Musical Instruments Using Different Classifiers, Including the Deep Belief Network

Mixed samples of the six types of traditional Chinese musical instruments are classified with different methods in order to determine the recognition accuracy for each instrument.

After the 39-dimensional MFCC features of each traditional Chinese musical instrument sample are fed directly into the decision tree, the recognition accuracy for each instrument is obtained. As shown in Figure 8, the average accuracy of this classifier on traditional Chinese instruments is over 80%; the recognition rate is lowest for the erhu, at only 77%, and highest for the hulusi and suona, both at 89%.

Comparing the recognition accuracy of the six classification methods shows that different methods perform differently on the various traditional Chinese musical instruments, which may be related to how well each classifier learns the inherent characteristics of the instruments. With the traditional recognition and classification methods, the recognition rates of the erhu and dizi are low. After the instrument samples are further learned by the deep belief network, the recognition of these two instruments improves significantly, which demonstrates the advantage of the deep belief network in recognizing and classifying traditional Chinese musical instruments. This approach can reveal more important features of these instruments.

4.2.4. Recognition and Classification Accuracy of the Deep Belief Network with Different Proportions of Labeled Training Samples

The experiments in the previous two sections fine-tune the network with all of the labeled training data, whereas the pretraining of the deep belief network is unsupervised. Here, 1/6, 1/3, 1/2, 2/3, and 5/6 of the training set samples were labeled, respectively, to form five different training sets. The test set remains unchanged, and five experiments are carried out. The recognition accuracy for traditional Chinese musical instruments obtained by the DBN combined with Softmax is shown in Table 8.

| Proportion of labeled samples in all training samples | DBN combined with Softmax (%) |
|---|---|
| 1/6 | 91.4 |
| 1/3 | 95.2 |
| 1/2 | 96.5 |
| 2/3 | 97.9 |
| 5/6 | 98.3 |

Table 8 shows the effect of varying the proportion of labeled samples used for network fine-tuning in the training set of traditional Chinese musical instruments. With the total number of training samples unchanged, the accuracy of instrument recognition improves as the number of labeled samples used for fine-tuning increases. However, even when only a small fraction of the training samples is labeled, the accuracy of the algorithm still exceeds 90%. Therefore, only a small number of samples need to be labeled when a large number of traditional Chinese musical instrument samples are recognized and classified by a deep belief network.
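One way to construct the partially labeled training sets is sketched below. The paper does not state how the labeled clips were chosen, so the sketch assumes the labeled subset is drawn at random and evenly from each instrument class; the 60 training clips per class come from Table 3, and the function name and seed are hypothetical.

```python
import random
from fractions import Fraction

CLIPS_PER_CLASS = 60      # training clips per instrument (Table 3)
N_CLASSES = 6


def labeled_subset(fraction, seed=0):
    """Pick which training clips keep their labels, the same share per class."""
    rng = random.Random(seed)
    n_keep = int(Fraction(fraction) * CLIPS_PER_CLASS)
    return {
        label: sorted(rng.sample(range(CLIPS_PER_CLASS), n_keep))
        for label in range(1, N_CLASSES + 1)
    }


# Number of labeled clips for each proportion used in Table 8.
labeled_counts = {
    f: sum(len(v) for v in labeled_subset(f).values())
    for f in ["1/6", "1/3", "1/2", "2/3", "5/6"]
}
```

All 360 training clips would still be used for unsupervised pretraining; only the clips selected here would contribute labels during fine-tuning.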

5. Conclusion

(1) The new music features learned by DBN are better than the traditional MPC and MFCC features for recognizing and classifying traditional Chinese musical instruments. Deep belief networks are able to learn features from audio signals on their own. The experimental results confirm that deep belief networks are valuable for recognizing and classifying music genres and musical instruments.

(2) The accuracy of music genre recognition and classification is lower than that of traditional Chinese musical instrument recognition. In this experiment, the best genre recognition and classification accuracy is 75.8%. The music in the traditional Chinese musical instrument library is instrumental music, and each clip contains only one of six instruments. It is therefore relatively easy to extract the original music features and to learn new features in the subsequent DBN, so the recognition and classification accuracy is high, reaching 99.2%.

(3) When a deep belief network is used to recognize and classify traditional Chinese musical instruments, only a small number of instrument samples need to be labeled, and the accuracy remains high. This is because pretraining, the core process of DBN training, is unsupervised; only the supervised fine-tuning of the network requires labeled samples. In the experiment, varying the proportion of labeled samples in the training set of traditional Chinese musical instruments shows that the recognition and classification accuracy exceeds 90% for every proportion tested, which further demonstrates the advantage of using a deep belief network for this task.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

The research was supported by Guangxi Arts University and Sehan University.

Open Research

Data Availability

The labeled data set used to support the findings of this study is available from the corresponding author upon request.