Pell Collocation Method for Solving the Nonlinear Time–Fractional Partial Integro–Differential Equation with a Weakly Singular Kernel

Abstract

This article is concerned with the numerical solution of the nonlinear time–fractional partial integro–differential equation. To this end, we use operational matrices based on Pell polynomials to approximate the Caputo fractional derivative, the nonlinear term, and the integro–differential terms. Collocation points then transform the problem into a system of nonlinear equations, which can be solved with the fsolve command in Matlab. The stability and convergence of the method are studied. Five numerical examples are included to demonstrate the accuracy of the proposed strategy.

1. Introduction

Fractional partial differential equations (FPDEs) have become an important research topic because of their wide applications in many branches of science, such as medicine [1, 2], control theory [3–5], engineering [6, 7], viscoelasticity [8], mathematical physics [9], geo–hydrology [10], signal processing [11], stochastic models [12], electrical engineering [13], and financial economics [14]. Since analytical solutions of FPDEs are rarely available, numerical methods are indispensable. Many numerical methods have been proposed for FPDEs, such as finite difference methods [15, 16], spectral methods [17–21], homotopy methods [22, 23], and finite element methods [24, 25]. Nonlinear FPDEs in particular have been studied extensively with numerical methods. Dehghan et al. used the homotopy analysis method to construct a scheme for the fractional KdV equation [26]. Nikan et al. proposed a meshless technique for the nonlinear fractional fourth–order diffusion equation [27]. Safari and Azarsa introduced a meshless method based on Müntz polynomials for linear and nonlinear space fractional partial differential equations [28]. Yaslan applied the Legendre collocation method to nonlinear fractional partial differential equations [29].

Spectral approaches are extremely effective for solving differential equations. In these methods, the solution of the differential equation is sought as a series of basis polynomials. The most common spectral methods are the Galerkin, tau, and collocation approximations [30, 31]. For example, Samiee et al. [20] designed a Petrov–Galerkin spectral method for distributed–order PDEs, Agarwal et al. [32] suggested a spectral collocation approach for variable–order fractional integro–differential equations, and the authors of [33] used the polynomial–sinc collocation method to solve distributed–order fractional differential equations. Abbaszadeh et al. proposed a Crank–Nicolson Galerkin spectral method for distributed–order weakly singular integro–partial differential equations [34].

where 0 < α, β < 1, g(ξ, η) ∈ C([0, L] × [0, T]), and signify the fractional operator. This problem arises in the modeling of heat conduction in materials with memory, in population dynamics [35], and in nuclear reaction theory [36].

To the best of the authors' knowledge, little work has been done on problem (1)–(3). Guo et al. [37] proposed a numerical technique for solving (1)–(3), and, in the case α = 1, Zheng et al. [35] described three semi–implicit compact finite difference schemes for this problem. This motivates us to propose a new numerical scheme for problem (1)–(3). Finite difference schemes are the simplest methods for solving such equations; however, their mathematical analysis is difficult for nonlinear time–fractional partial integro–differential equations (TFPIDEs). Polynomial spectral techniques are effective tools for solving PDEs, and many families of polynomials have been used to build spectral methods (see [38–41]). Pell polynomials have integer coefficients, and the number of their terms grows slowly with the degree; this leads to less CPU time and fewer computational errors. Because of these two properties, we employ Pell polynomials in this work.
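To illustrate the two properties mentioned above, the sketch below builds Pell polynomials from the standard recurrence P_0(x) = 0, P_1(x) = 1, P_n(x) = 2x P_{n−1}(x) + P_{n−2}(x) and counts their nonzero terms. This indexing convention is an assumption; the paper's own normalization of the basis is not reproduced here, and the function names are hypothetical.

```python
def pell_coefficients(n_max):
    """Monomial coefficients of P_0, ..., P_{n_max}, lowest degree first.

    Uses the recurrence P_0 = 0, P_1 = 1, P_n(x) = 2x P_{n-1}(x) + P_{n-2}(x),
    so every coefficient stays an integer.
    """
    coeffs = [[0], [1]]
    for n in range(2, n_max + 1):
        # 2x * P_{n-1}: shift up one degree and double each coefficient
        new = [0] + [2 * c for c in coeffs[n - 1]]
        # + P_{n-2}: add the lower-degree polynomial term by term
        for k, c in enumerate(coeffs[n - 2]):
            new[k] += c
        coeffs.append(new)
    return coeffs[: n_max + 1]


def nonzero_terms(poly):
    """Number of monomials with a nonzero coefficient."""
    return sum(1 for c in poly if c != 0)


if __name__ == "__main__":
    for n, poly in enumerate(pell_coefficients(8)):
        print(f"P_{n}: coefficients {poly}, nonzero terms {nonzero_terms(poly)}")
```

For example, P_5(x) = 16x^4 + 12x^2 + 1 has degree 4 but only three terms; in general P_n (n ≥ 1) has ⌊(n + 1)/2⌋ nonzero terms.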

In this paper, we focus on a spectral collocation method that uses two–variable Pell polynomials as basis functions for solving the main problem numerically. With the help of operational matrices of these polynomials, the problem is transformed into a system of nonlinear equations. We present an error analysis and provide several test problems to illustrate the efficacy of the method.
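To make the two–variable expansion concrete, the sketch below assumes a tensor-product form u(ξ, η) ≈ Σ_{i=0}^{K} Σ_{j=0}^{J} u_ij P_{i+1}(ξ) P_{j+1}(η) = Ψ_K(ξ)^T U Ψ_J(η), where the index shift gives P_{i+1} degree i. This is an assumption about how the two–variable Pell basis is built. The sketch recovers the coefficient matrix U of a polynomial test function from its values on the grid of midpoint collocation points of Section 3 (assuming i = 0, …, K and j = 0, …, J) and checks that the expansion reproduces the function elsewhere. It omits the operational matrices and the nonlinear solve; all names are hypothetical.

```python
import numpy as np


def pell_basis(x, n):
    """Columns P_1(x), ..., P_n(x) (degrees 0, ..., n-1) via the Pell recurrence."""
    x = np.asarray(x, dtype=float)
    cols = [np.zeros_like(x), np.ones_like(x)]  # P_0, P_1
    for _ in range(2, n + 1):
        cols.append(2.0 * x * cols[-1] + cols[-2])
    return np.stack(cols[1 : n + 1], axis=-1)


def midpoint_nodes(n):
    """Nodes (2i + 1) / (2n + 2), i = 0, ..., n (assumed index range)."""
    i = np.arange(n + 1)
    return (2 * i + 1) / (2 * n + 2)


K, J = 3, 2
xi, eta = midpoint_nodes(K), midpoint_nodes(J)
A = pell_basis(xi, K + 1)   # A[i, m] = P_{m+1}(xi_i)
B = pell_basis(eta, J + 1)  # B[j, n] = P_{n+1}(eta_j)


def u_exact(x, t):
    """Polynomial test function of degree 3 in x and 2 in t (representable exactly)."""
    return x ** 3 * t ** 2 + 3.0 * x * t + 1.0


F = u_exact(xi[:, None], eta[None, :])             # samples on the (K+1) x (J+1) grid
U = np.linalg.solve(A, np.linalg.solve(B, F.T).T)  # solves A U B^T = F for U


def u_approx(x, t):
    """Evaluate Psi_K(x)^T U Psi_J(t) at a single point (x, t)."""
    return pell_basis(x, K + 1) @ U @ pell_basis(t, J + 1)


if __name__ == "__main__":
    print(u_approx(0.37, 0.81), u_exact(0.37, 0.81))
```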

The rest of the article is organized as follows. Section 2 recalls some basic definitions. In Section 3, we propose a polynomial spectral technique for solving the main problem. Section 4 investigates the error analysis. Section 5 reports the numerical experiments, and Section 6 presents the conclusions.

2. Definitions

Definition 1 (see [15]). The Riemann–Liouville integral of a function on (0, L) × (0, T) is defined as follows:

Definition 2 (see [15]). The Riemann–Liouville derivative of a function on (0, L) × (0, T) is defined as follows:

Definition 3 (see [15]). The Caputo derivative of a function on (0, L) × (0, T) is defined as follows:
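The displayed formulas of Definitions 1–3 are not available in this version. For reference, the standard forms for 0 < α < 1 (the range used in this problem), taken with respect to the time variable η, are given below. The operator notation is our own and may differ from that of [15]. The last line is the power rule on which polynomial operational matrices for the Caputo derivative are commonly built.

```latex
\begin{aligned}
{}_{0}I_{\eta}^{\alpha}u(\xi,\eta) &= \frac{1}{\Gamma(\alpha)}\int_{0}^{\eta}(\eta-s)^{\alpha-1}\,u(\xi,s)\,ds,\\
{}^{RL}_{0}D_{\eta}^{\alpha}u(\xi,\eta) &= \frac{1}{\Gamma(1-\alpha)}\,\frac{\partial}{\partial\eta}\int_{0}^{\eta}(\eta-s)^{-\alpha}\,u(\xi,s)\,ds,\\
{}^{C}_{0}D_{\eta}^{\alpha}u(\xi,\eta) &= \frac{1}{\Gamma(1-\alpha)}\int_{0}^{\eta}(\eta-s)^{-\alpha}\,\frac{\partial u(\xi,s)}{\partial s}\,ds,\\
{}^{C}_{0}D_{\eta}^{\alpha}\,\eta^{k} &=
\begin{cases}
0, & k = 0,\\[4pt]
\dfrac{\Gamma(k+1)}{\Gamma(k+1-\alpha)}\,\eta^{k-\alpha}, & k = 1, 2, \dots
\end{cases}
\end{aligned}
```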

3. Analysis of the Numerical Method

In this section, we derive several operational matrices based on Pell polynomials; these matrices are the building blocks of the proposed technique.
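Because Pell polynomials are ordinary polynomials, the Caputo derivative of each basis function follows from the power rule D^α η^k = Γ(k+1)/Γ(k+1−α) η^{k−α} for k ≥ 1, and the derivative of a constant is zero. The sketch below applies this rule term by term to P_n (standard recurrence P_0 = 0, P_1 = 1 assumed). It cross-checks the result against the integral definition using SciPy's `quad`, whose `weight="alg"` option handles the (η − s)^{−α} endpoint singularity. This is only an illustration. The paper's operational matrices may instead project η^{k−α} back onto the Pell basis, and the function names are hypothetical.

```python
import math

from scipy.integrate import quad


def pell_coefficients(n_max):
    """Monomial coefficients (lowest degree first) of P_0, ..., P_{n_max}."""
    coeffs = [[0], [1]]
    for n in range(2, n_max + 1):
        new = [0] + [2 * c for c in coeffs[n - 1]]  # 2x * P_{n-1}
        for k, c in enumerate(coeffs[n - 2]):       # + P_{n-2}
            new[k] += c
        coeffs.append(new)
    return coeffs


def caputo_pell(n, alpha, t):
    """Caputo derivative of order alpha (0 < alpha < 1) of P_n at t > 0.

    Applies D^alpha t**k = Gamma(k+1) / Gamma(k+1-alpha) * t**(k-alpha) (k >= 1)
    term by term; the constant term is annihilated.
    """
    coeffs = pell_coefficients(n)[n]
    return sum(
        c * math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)
        for k, c in enumerate(coeffs)
        if k >= 1
    )


def caputo_quad(dfun, alpha, t):
    """Caputo derivative from its integral definition, given the first derivative dfun."""
    # weight="alg" with wvar=(0, -alpha) integrates dfun(s) * (t - s)**(-alpha) over [0, t]
    value, _ = quad(dfun, 0.0, t, weight="alg", wvar=(0.0, -alpha))
    return value / math.gamma(1.0 - alpha)


if __name__ == "__main__":
    alpha, t = 0.5, 0.7
    # P_4(t) = 8 t^3 + 4 t, so P_4'(t) = 24 t^2 + 4
    print(caputo_pell(4, alpha, t), caputo_quad(lambda s: 24.0 * s ** 2 + 4.0, alpha, t))
```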

where ξi = (2i + 1)/(2K + 2) and ηj = (2j + 1)/(2J + 2).

The unknown coefficient matrix is determined by solving this nonlinear system; we used the fsolve command in Matlab for this purpose.
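The following is a minimal sketch of the solve step under a deliberately simplified model. It treats the one-dimensional problem D^α y + y² = f(η), y(0) = 0 on [0, 1] with exact solution y = η², not the TFPIDE (1)–(3). The unknown Pell coefficients are fixed by collocation at the midpoint nodes (2j + 1)/(2J + 2) together with the initial condition. The nonlinear system is then passed to SciPy's `scipy.optimize.fsolve`, used here in place of Matlab's fsolve (the two use different algorithms). The model equation, basis indexing, and all names are illustrative assumptions.

```python
import math

import numpy as np
from scipy.optimize import fsolve

ALPHA = 0.5  # fractional order of the toy problem (illustrative)
N = 4        # number of Pell basis functions P_1, ..., P_N (degrees 0, ..., N-1)


def pell_coefficients(n_max):
    """Monomial coefficients (lowest degree first) of P_0, ..., P_{n_max}."""
    coeffs = [[0], [1]]
    for n in range(2, n_max + 1):
        new = [0] + [2 * c for c in coeffs[n - 1]]  # 2x * P_{n-1}
        for k, c in enumerate(coeffs[n - 2]):       # + P_{n-2}
            new[k] += c
        coeffs.append(new)
    return coeffs


COEFFS = pell_coefficients(N)


def basis(t):
    """Row vector [P_1(t), ..., P_N(t)]; np.polyval wants highest degree first."""
    return np.array([np.polyval(COEFFS[n][::-1], t) for n in range(1, N + 1)], dtype=float)


def caputo_basis(t):
    """Row vector of Caputo derivatives D^ALPHA P_n(t), n = 1..N, via the power rule."""
    return np.array([
        sum(c * math.gamma(k + 1) / math.gamma(k + 1 - ALPHA) * t ** (k - ALPHA)
            for k, c in enumerate(COEFFS[n]) if k >= 1)
        for n in range(1, N + 1)
    ], dtype=float)


def f(t):
    """Right-hand side chosen so that y(t) = t**2 solves D^ALPHA y + y**2 = f, y(0) = 0."""
    return 2.0 * t ** (2 - ALPHA) / math.gamma(3 - ALPHA) + t ** 4


J = N - 2                                         # J + 1 collocation nodes + 1 initial condition = N equations
nodes = (2 * np.arange(J + 1) + 1) / (2 * J + 2)  # midpoint nodes (2j + 1) / (2J + 2)
PHI = np.array([basis(t) for t in nodes])
DPHI = np.array([caputo_basis(t) for t in nodes])
RHS = np.array([f(t) for t in nodes])
PHI0 = basis(0.0)


def residual(c):
    """Collocation residuals followed by the initial condition y(0) = 0."""
    y = PHI @ c
    return np.append(DPHI @ c + y ** 2 - RHS, PHI0 @ c)


coeffs = fsolve(residual, np.zeros(N))

if __name__ == "__main__":
    grid = np.linspace(0.0, 1.0, 11)
    y_num = np.array([basis(s) @ coeffs for s in grid])
    print("max error:", np.max(np.abs(y_num - grid ** 2)))
```

Because y = η² lies in the span of P_1, …, P_4 and the Caputo derivative of each basis function is evaluated exactly, the recovered coefficients should match η² = (P_3 − P_1)/4 up to the solver tolerance.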

4. Convergence

Theorem 4. Let and , , and be the best approximations of , , and in the spaces G × Q, Gξ × Q, and Gξξ × Q, respectively. The following inequalities are true.

Proof. Using the Taylor expansion [45], we have

Similarly, other inequalities can also be proved.
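Theorem 4 concerns best approximations in spaces spanned by Pell polynomials. As an informal illustration, not a check of the stated inequalities, the sketch below fits the smooth test function e^ξ by discrete least squares in span{P_1, …, P_{K+1}} on a uniform grid of [0, 1] and reports the maximum error as K grows. The test function, the grid, and the helper names are assumptions.

```python
import numpy as np


def pell_basis(x, n):
    """Matrix whose columns are P_1(x), ..., P_n(x) (degrees 0, ..., n-1)."""
    x = np.asarray(x, dtype=float)
    cols = [np.zeros_like(x), np.ones_like(x)]  # P_0, P_1
    for _ in range(2, n + 1):
        cols.append(2.0 * x * cols[-1] + cols[-2])
    return np.stack(cols[1 : n + 1], axis=-1)


def ls_error(func, K, m=1001):
    """Max error of the discrete least-squares fit of func in span{P_1, ..., P_{K+1}}."""
    x = np.linspace(0.0, 1.0, m)
    A = pell_basis(x, K + 1)
    coef, *_ = np.linalg.lstsq(A, func(x), rcond=None)
    return np.max(np.abs(A @ coef - func(x)))


errors = [ls_error(np.exp, K) for K in range(1, 7)]

if __name__ == "__main__":
    for K, err in zip(range(1, 7), errors):
        print(f"K = {K}: max error {err:.3e}")
```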

Theorem 5. Let be the exact solution and be the approximate solution obtained from equations (45). Then, one has

Proof. We have

So that

Now, we prove that the presented numerical method is convergent.

Theorem 6. Let S_KJ(ξ, η) be the perturbation term and let be the approximate solution to the main problem obtained by the proposed approach. Then the perturbation term tends to zero as K, J ⟶ ∞.

Proof. Thanks to (9), we deduce that

Lemma 7 (see [46]). Let I_u(z) denote the modified Bessel function of the first kind of order u. The following identity holds:

Lemma 8 (see [46]). The modified Bessel function of the first kind I_u(z) satisfies the following inequality:
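For readers who want to experiment with Lemmas 7 and 8, the sketch below evaluates I_u(z) from its power series I_u(z) = Σ_{k≥0} (z/2)^{2k+u} / (k! Γ(k+u+1)) and compares it with `scipy.special.iv`. It also checks the elementary bound I_u(z) ≤ (z/2)^u e^z / Γ(u+1) for u ≥ 0 and z ≥ 0. This bound follows from the series, because Γ(k+u+1) ≥ k! Γ(u+1) when u ≥ 0 and I_0(z) ≤ e^z. We do not claim that this is the exact inequality stated in Lemma 8; the function names are hypothetical.

```python
import math

from scipy.special import iv


def bessel_i_series(u, z, terms=60):
    """I_u(z) = sum_k (z/2)^(2k+u) / (k! Gamma(k+u+1)), truncated after `terms` terms."""
    return sum(
        (z / 2.0) ** (2 * k + u) / (math.factorial(k) * math.gamma(k + u + 1))
        for k in range(terms)
    )


def simple_bound(u, z):
    """(z/2)^u e^z / Gamma(u+1): an upper bound for I_u(z) when u >= 0 and z >= 0."""
    return (z / 2.0) ** u * math.exp(z) / math.gamma(u + 1)


if __name__ == "__main__":
    for u, z in [(0.0, 1.0), (1.5, 2.5), (3.0, 9.0)]:
        print(u, z, bessel_i_series(u, z), iv(u, z), simple_bound(u, z))
```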

Theorem 9. Suppose , ,i ≥ 0, where L is a positive constant and . Then

Proof. From (73), Lemma 7, and Lemma 8, we have

This establishes the first part of the theorem.

Consequently, by the comparison test, the series is absolutely convergent.

5. Numerical Experiments

Example 1. Consider the following nonlinear time–fractional partial integro–differential equation on [0, 1] × [0, 1] with the exact solution

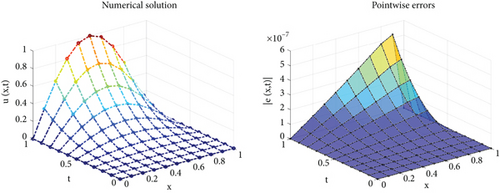

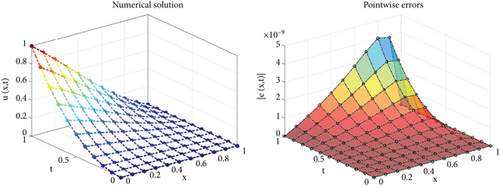

We solved this problem with the polynomial spectral scheme presented in this paper, using fsolve in Matlab to solve the nonlinear system of equations (45). Table 1 shows the absolute errors for α = 0.5 and various values of β; they do not exceed about 2.6e-05, which indicates that the proposed strategy is effective. We also plot the numerical solution and the absolute error surfaces in Figure 1. The error norms and CPU times are reported in Table 2. Together, Tables 1 and 2 and Figure 1 show that the numerical method provides acceptable results.

Example 2. Consider the following equation

| (ξi, ηi) | α = 0.5, β = 0.1, K = 11, J = 4 | α = 0.5, β = 0.3, K = 11, J = 4 | α = 0.5, β = 0.7, K = 9, J = 4 | α = 0.5, β = 0.9, K = 9, J = 4 |
|---|---|---|---|---|
| (0.1, 0.1) | 5.2665e-11 | 7.5026e-11 | 9.4157e-10 | 1.5935e-09 |
| (0.2, 0.2) | 8.8713e-10 | 7.0142e-10 | 1.7084e-09 | 4.5505e-09 |
| (0.3, 0.3) | 4.6062e-09 | 3.6713e-09 | 5.8173e-08 | 1.3764e-09 |
| (0.4, 0.4) | 1.4766e-08 | 1.2353e-08 | 3.6620e-07 | 1.2902e-07 |
| (0.5, 0.5) | 3.6426e-08 | 3.1853e-08 | 1.2736e-06 | 6.7104e-07 |
| (0.6, 0.6) | 7.6243e-08 | 6.9345e-08 | 3.4065e-06 | 2.2596e-06 |
| (0.7, 0.7) | 1.4258e-07 | 1.3432e-07 | 7.7630e-06 | 6.0393e-06 |
| (0.8, 0.8) | 2.4555e-07 | 2.3833e-07 | 1.5689e-05 | 1.3673e-05 |
| (0.9, 0.9) | 3.8165e-07 | 3.7801e-07 | 2.5814e-05 | 2.4145e-05 |
| (1, 1) | 7.3344e-15 | 1.1944e-16 | 1.6793e-15 | 2.1690e-14 |

| | J = 4, K = 6 | J = 4, K = 7 | J = 4, K = 8 | J = 4, K = 9 | J = 4, K = 10 |
|---|---|---|---|---|---|
| ‖e‖∞ | 1.7427e-03 | 1.7093e-03 | 3.7497e-05 | 3.7046e-05 | 5.5452e-07 |
| CPU time | 0.7787 s | 1.3037 s | 1.4066 s | 1.5607 s | 1.9541 s |

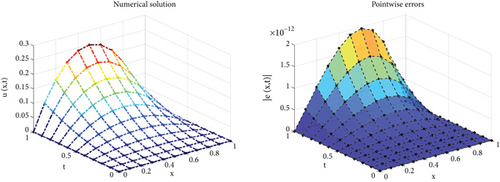

Table 3 presents the absolute errors for K = J = 3 and several values of α and β. All of these errors are below 1e-12, which shows that the method is highly accurate even though only a small number of basis functions is used. The CPU times and error norms are given in Table 4, and Figure 2 visualizes the approximate solution and the absolute errors.

Example 3. Consider the following equation on [0, 1] × [0, 1]:

| (ξi, ηi) | α = 0.5, β = 0.5 | α = 0.7, β = 0.5 | α = 0.9, β = 0.5 | α = 1, β = 0.5 |
|---|---|---|---|---|
| (0, 0) | 1.3878e-17 | 1.3878e-17 | 1.3878e-17 | 6.9389e-17 |
| (0.1, 0.1) | 2.9677e-15 | 2.4715e-15 | 2.1732e-15 | 2.4820e-15 |
| (0.2, 0.2) | 1.3601e-14 | 1.0819e-14 | 8.4386e-15 | 8.1749e-15 |
| (0.3, 0.3) | 4.2369e-14 | 3.3196e-14 | 2.4869e-14 | 2.1178e-14 |
| (0.4, 0.4) | 1.0748e-13 | 8.5140e-14 | 6.4282e-14 | 5.2874e-14 |
| (0.5, 0.5) | 2.2699e-13 | 1.8235e-13 | 1.4061e-13 | 1.1730e-13 |
| (0.6, 0.6) | 4.0491e-13 | 3.2892e-13 | 2.5840e-13 | 2.2102e-13 |
| (0.7, 0.7) | 6.1083e-13 | 5.0086e-13 | 3.9917e-13 | 3.4946e-13 |
| (0.8, 0.8) | 7.5499e-13 | 6.2282e-13 | 5.0181e-13 | 4.4802e-13 |
| (0.9, 0.9) | 6.5503e-13 | 5.4298e-13 | 4.4097e-13 | 4.0014e-13 |
| (1, 1) | 7.5493e-16 | 3.3867e-16 | 1.0991e-15 | 1.0437e-15 |

| | J = 3, K = 3 | J = 3, K = 4 | J = 3, K = 5 | J = 3, K = 6 | J = 3, K = 7 |
|---|---|---|---|---|---|
| ‖e‖∞ | 1.9604e-12 | 4.9638e-12 | 8.0466e-11 | 1.3686e-13 | 1.4568e-12 |
| CPU time | 0.7492 s | 0.9354 s | 1.1262 s | 1.2330 s | 1.2589 s |

The exact solution is .

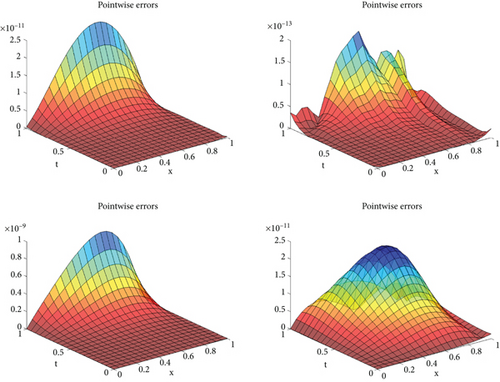

The numerical results are reported in Table 5. With K = 4, J = 5, and equal values of α and β, the absolute errors are at most about 3.2e-10, which confirms that the presented method performs well and produces accurate results. For α = β = 0.5 and different values of J and K, the error norms and CPU times are provided in Table 6. The absolute error functions for equal α and β are sketched in Figure 3. These results show that the numerical and exact solutions are almost identical.

| (ξi, ηi) | α = β = 0.1 | α = β = 0.4 | α = β = 0.6 | α = β = 0.8 |
|---|---|---|---|---|
| (0, 0) | 4.7184e-14 | 3.4972e-15 | 5.5511e-15 | 3.9191e-14 |
| (0.1, 0.1) | 3.0197e-14 | 1.8513e-17 | 9.9751e-13 | 5.3904e-14 |
| (0.2, 0.2) | 9.1656e-14 | 1.8249e-15 | 3.8079e-12 | 1.1759e-12 |
| (0.3, 0.3) | 1.9482e-13 | 2.8203e-15 | 8.9047e-12 | 3.4628e-12 |
| (0.4, 0.4) | 4.2674e-13 | 7.0777e-15 | 1.8213e-11 | 6.6157e-12 |
| (0.5, 0.5) | 9.4080e-13 | 1.0436e-14 | 3.9364e-11 | 1.0878e-11 |
| (0.6, 0.6) | 1.9982e-12 | 3.2196e-14 | 8.7362e-11 | 1.6063e-11 |
| (0.7, 0.7) | 3.9228e-12 | 4.3965e-14 | 1.7459e-10 | 1.9690e-11 |
| (0.8, 0.8) | 6.4684e-12 | 8.2379e-14 | 2.8359e-10 | 1.6850e-10 |
| (0.9, 0.9) | 7.9272e-12 | 6.1506e-14 | 3.2058e-10 | 5.0651e-12 |
| (1, 1) | 5.7618e-12 | 8.9421e-14 | 5.1744e-11 | 3.9714e-12 |

| | J = 5, K = 4 | J = 5, K = 5 | J = 5, K = 6 | J = 5, K = 7 | J = 5, K = 8 |
|---|---|---|---|---|---|
| ‖e‖∞ | 1.7474e-13 | 4.6830e-10 | 5.1812e-10 | 2.2715e-09 | 3.7225e-08 |
| CPU time | 0.6941 s | 1.4075 s | 1.4990 s | 1.7516 s | 2.1537 s |

The exact solution is .

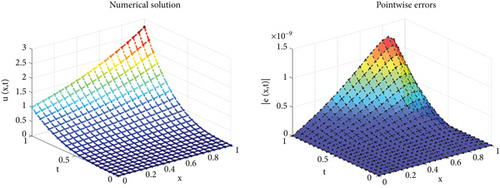

Table 7 presents the absolute errors for α = β = 0.5. Table 8 lists the error norms and CPU times for α = β = 0.5 and various values of J and K, and Table 9 reports the L2 errors. Figure 4 shows the numerical solution and the pointwise error, illustrating the behavior of both. The numerical results are in good agreement with the theoretical results.
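Tables 7–9 report maximum and L2 errors. The sketch below shows one common way of computing such norms on a uniform grid of [0, 1]². The grid size and the root-mean-square definition of the discrete L2 error are assumptions, since the exact definitions used for the tables are not stated here; the function name is hypothetical.

```python
import numpy as np


def error_norms(u_exact, u_approx, n=101):
    """Return (max-norm error, discrete L2 error) on an n x n uniform grid of [0, 1]^2.

    The discrete L2 error is taken as the root-mean-square of the pointwise
    errors; this definition is an assumption and may differ from the paper's.
    """
    x = np.linspace(0.0, 1.0, n)
    X, T = np.meshgrid(x, x, indexing="ij")
    e = np.abs(u_exact(X, T) - u_approx(X, T))
    return e.max(), np.sqrt(np.mean(e ** 2))


if __name__ == "__main__":
    exact = lambda x, t: x * t
    approx = lambda x, t: x * t * (1.0 + 1e-3)  # hypothetical approximation with 0.1% relative error
    print(error_norms(exact, approx))
```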

Example 5. Finally, we investigate the following equation on [0, 1] × [0, 1]

| (ξi, ηi) | J = 4, K = 6 | J = 4, K = 7 | J = 4, K = 8 | J = 4, K = 9 |
|---|---|---|---|---|
| (0, 0) | 3.9549e-20 | 1.9230e-22 | 6.7320e-20 | 1.1194e-18 |
| (0.1, 0.1) | 1.0519e-10 | 5.3038e-12 | 4.6608e-13 | 2.8112e-12 |
| (0.2, 0.2) | 2.7128e-09 | 1.4373e-10 | 7.5190e-12 | 8.1029e-12 |
| (0.3, 0.3) | 2.6072e-08 | 1.4112e-09 | 6.8470e-11 | 1.6060e-11 |
| (0.4, 0.4) | 1.0383e-07 | 5.6159e-09 | 2.7027e-10 | 3.5035e-11 |
| (0.5, 0.5) | 2.8045e-07 | 1.5208e-08 | 7.3186e-10 | 8.5052e-11 |
| (0.6, 0.6) | 6.1575e-07 | 3.3347e-08 | 1.6119e-09 | 1.9935e-10 |
| (0.7, 0.7) | 1.2047e-06 | 6.5140e-08 | 3.1553e-09 | 4.1556e-10 |
| (0.8, 0.8) | 2.1149e-06 | 1.1777e-07 | 5.7928e-09 | 7.3660e-10 |
| (0.9, 0.9) | 2.8168e-06 | 1.7066e-07 | 8.9641e-09 | 9.7501e-10 |
| (1, 1) | 1.3234e-14 | 2.6645e-15 | 1.0658e-14 | 3.5527e-15 |

| | J = 4, K = 5 | J = 4, K = 6 | J = 4, K = 7 | J = 4, K = 8 | J = 4, K = 9 |
|---|---|---|---|---|---|
| ‖e‖∞ | 5.4599e-05 | 3.6853e-06 | 2.2397e-07 | 1.1841e-08 | 1.2327e-09 |
| CPU time | 0.6648 s | 1.3185 s | 1.2689 s | 1.3789 s | 1.5100 s |

| J | K | ‖e‖2 |
|---|---|---|
| 4 | 5 | 3.0728e-05 |
| 4 | 6 | 2.0621e-06 |
| 4 | 7 | 1.1742e-07 |
| 4 | 8 | 6.0069e-09 |
| 4 | 9 | 7.7425e-10 |

In Table 10, the L2 error is computed for α = β = 0.5 and different values of K and J, and Table 11 reports the error norms and CPU times. Figure 5 shows the numerical solution and the absolute error; for K = 9 and J = 4, the numerical solution is close to the exact solution. Tables 10 and 11 and Figure 5 confirm the validity and efficacy of the presented method.

| J | K | ‖e‖2, α = β = 0.3 | ‖e‖2, α = β = 0.5 | ‖e‖2, α = β = 0.7 | ‖e‖2, α = β = 0.9 |
|---|---|---|---|---|---|
| 3 | 5 | 7.2576e-05 | 7.1011e-05 | 7.1448e-05 | 6.0514e-05 |
| 3 | 6 | 1.5363e-05 | 1.5000e-05 | 1.4978e-05 | 1.3806e-05 |
| 3 | 7 | 3.8656e-07 | 3.7824e-07 | 3.7609e-07 | 3.4637e-07 |
| 3 | 8 | 5.1965e-08 | 5.0757e-08 | 5.0432e-08 | 3.9225e-08 |
| 3 | 9 | 1.1091e-09 | 2.4799e-09 | 1.6532e-08 | 6.7070e-08 |

| | J = 3, K = 5 | J = 3, K = 6 | J = 3, K = 7 | J = 3, K = 8 | J = 3, K = 9 |
|---|---|---|---|---|---|
| ‖e‖∞ | 1.1228e-04 | 2.3945e-05 | 6.0186e-07 | 8.0155e-08 | 4.0315e-09 |
| CPU time | 0.5944 s | 1.0808 s | 1.1061 s | 1.1594 s | 1.2277 s |
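To quantify how fast the errors decrease as K grows, the short computation below takes the α = β = 0.5 column of the ‖e‖2 table with J = 3 (K = 5, …, 9). It computes the ratio between successive errors and the number of decimal digits gained from K = 5 to K = 9. It uses only the values reported above; the variable names are illustrative.

```python
import math

# ||e||_2 for alpha = beta = 0.5, J = 3, K = 5, ..., 9 (values copied from the table above)
K_VALUES = [5, 6, 7, 8, 9]
L2_ERRORS = [7.1011e-05, 1.5000e-05, 3.7824e-07, 5.0757e-08, 2.4799e-09]

# Factor by which the error shrinks when K increases by one
ratios = [a / b for a, b in zip(L2_ERRORS, L2_ERRORS[1:])]
# Orders of magnitude gained between the smallest and the largest K
digits_gained = math.log10(L2_ERRORS[0] / L2_ERRORS[-1])

if __name__ == "__main__":
    for k, r in zip(K_VALUES[1:], ratios):
        print(f"K = {k}: error reduced by a factor of {r:.1f}")
    print(f"{digits_gained:.2f} decimal digits gained from K = 5 to K = 9")
```

The error falls by roughly four and a half orders of magnitude over this range, although the reduction factor varies from one value of K to the next.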

6. Conclusions

This study proposed a collocation approach based on Pell polynomials for solving a nonlinear TFPIDE, with the fractional derivative taken in the Caputo sense. The solution of the equation was expanded as a series of two–variable Pell polynomials, and the numerical technique reduces the problem to an algebraic system of nonlinear equations. We proved that the method is convergent. Five test problems show that the method is effective: a small number of Pell basis polynomials suffices to obtain good accuracy, and the reported CPU times were between about 0.6 and 2.2 seconds. All of the tables and figures confirm the effectiveness of the strategy.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Open Research

Data Availability

All results were obtained by running the numerical procedure described in this paper, and the ideas can be shared with interested researchers.