Knowledge-Based Recurrent Neural Network for TCM Cerebral Palsy Diagnosis

Abstract

Cerebral palsy is one of the most prevalent neurological disorders and the most frequent cause of disability. Identifying the syndrome from a patient’s symptoms is the key to traditional Chinese medicine (TCM) treatment of cerebral palsy. Artificial intelligence (AI) is advancing quickly in several sectors, including TCM, and can considerably enhance the reliability and precision of diagnoses, widening the use of effective treatments. It is therefore necessary to build a decision-making model that aids syndrome diagnosis for cerebral palsy. While the recurrent neural network (RNN) model has the potential to capture the correlation between symptoms and syndromes from electronic medical records (EMRs), it lacks TCM knowledge. To let the model benefit from both TCM knowledge and EMRs, we depart from the ordinary training routine and begin by constructing a knowledge-based RNN (KBRNN) from the cerebral palsy knowledge graph, which supplies the domain knowledge. More specifically, we design an evolutionary algorithm for extracting knowledge from the cerebral palsy knowledge graph. Then, we embed the knowledge into tensors and inject them into the RNN. In addition, the KBRNN can benefit from labeled EMRs: we use EMRs to fine-tune the KBRNN, which improves prediction accuracy. Our study shows that knowledge injection effectively improves model performance. The KBRNN achieves 79.31% diagnostic accuracy with knowledge injection alone, and 83.12% once it is fully trained on the EMRs.

1. Introduction

Cerebral palsy is a leading cause of disability and can remain difficult to cure throughout life [1]. TCM theory plays an active role in the treatment of cerebral palsy. Symptoms are crucial in clinical diagnosis and treatment [2]. During clinical diagnosis, doctors apply TCM theories to identify the syndrome from patients’ symptoms, a process that is heavily influenced by the doctor’s previous experience. AI-assisted TCM diagnosis relies primarily on digital data obtained by modern electronic instruments, making TCM diagnosis more quantitative, objective, and standardized [3]. Thus, a computer-aided decision-making model for diagnosis is needed to offset the uncertainty introduced by human factors.

Over the past two decades, advances in sensor, detector, and transducer technologies have made it possible for AI to learn from digital information. Thus, AI-assisted TCM diagnosis has become a burgeoning field of research [4]. In earlier research, the AI approaches employed in TCM diagnosis were mostly limited to traditional machine learning algorithms and their modified forms, such as support vector machine (SVM), random forest (RF), AdaBoost, and decision tree (DT). Wang [5] used a Bayesian classifier to model the relationships between the human pulse and diagnosis. Zhang et al. [6] studied quantitative correlations between diseases and the physical appearance of the human tongue. In these conventional machine learning methods, the features are extracted by specialists with extensive TCM clinical expertise. Deep learning technology has grown rapidly in recent years. Unlike traditional machine learning methods, deep learning models comprise more complex hierarchical multilayer networks of artificial neurons that can automatically discover valuable features from the original data. Hu et al. [7] proposed a classifier that uses the Shannon energy envelope, the Hilbert transform, and deep convolutional neural networks (DCNN) for the analysis of the human pulse. Combining basic image processing and deep learning, Fu et al. [8] presented a computerized tongue coating nature diagnosis method using deep neural networks. Hou et al. [9] proposed a neural network for tongue color classification, which is more practical and accurate than the traditional approach. Although these studies attained a high level of accuracy, they considered only single-modal data and only a portion of patients’ information. Therefore, more recent studies have introduced more comprehensive data. Yang et al. [10] developed a novel deep neural network that uses multiview features of the gene data to identify the disease genes. Dai et al. [11] proposed a multimodal deep learning framework based on the four diagnostic methods of TCM. These approaches effectively compensate for the limited information in a single modality and improve the accuracy of the model.

With the rise of medical digitalization, hospital information systems have accumulated a considerable volume of EMR data, which document patients’ conditions in text form. There is increasing interest in applying machine learning techniques to decision-making models for medical diagnosis and treatment. Liang et al. [12] adopted the deep belief network (DBN) to acquire feature representations from EMRs and then combined it with an SVM for supervised learning on the labeled data. Similarly, various supervised machine learning algorithms such as random forest and logistic regression were used in [13] to build ischemic stroke classifiers. Although these ML-based methods outperform conventional techniques such as rule-based algorithms by using massive datasets, they ignore domain-specific knowledge.

The knowledge graph (KG), once known as ontology in early research, serves as an excellent way to inject domain-specific knowledge into ML models. The KG is a multirelational graph composed of entities and relationships containing a large amount of prior knowledge [14, 15]. Gong et al. [16] built on advances in graph embedding learning techniques, decomposed the medicine recommendation task into a link prediction process, and proposed a safe medicine recommendation framework. Abdelaziz et al. [17] developed a large-scale similarity-based framework that predicts drug-drug interactions through text and graph embedding algorithms. These studies fully exploit the domain knowledge in the knowledge graph, but they cannot benefit from large-scale labeled data. By analogy, an exceptional specialist should possess not just sound professional knowledge but also extensive experience.

For the TCM cerebral palsy diagnosis model to benefit from both the knowledge graph and the EMRs, we propose a two-step model called KBRNN. In the first step, we extract evidence-based diagnostic knowledge from the cerebral palsy KG by using intelligent optimization algorithms and represent this knowledge as tensors. In the second step, we inject the knowledge into an RNN by converting the tensors into the parameters of the RNN. This yields the knowledge-based RNN (KBRNN), which can then be fine-tuned on the TCM data.

The main contributions of this work are as follows:

- (1) We propose the knowledge-based RNN (KBRNN). Compared with traditional methods, the KBRNN can be enhanced by the domain knowledge in the KG. Its performance can be further enhanced by training on labeled data.

- (2) Within the proposed KBRNN, we design an evolutionary algorithm for knowledge extraction and give a way to represent the knowledge as tensors and inject them into the RNN.

- (3) The experimental results show that the diagnostic accuracy of the untrained KBRNN, which has received only knowledge injection, is 79.31%, rising to 83.12% for the fully trained KBRNN.

2. Related Work

2.1. Knowledge Graph Inference and Its Applications

The knowledge graph contains a large amount of prior knowledge [18], which can provide external information for various downstream tasks [19]. For medical tasks, Yang et al. [20] introduced link prediction for syndrome diagnosis by breaking medical records down into multiple symptoms based on the KG. Zheng et al. [21] learned relational embeddings from nodes in the KG to access medical knowledge and used them to improve the classifier’s performance through a medical knowledge attention mechanism. Zhang and Che [22] constructed a Parkinson’s disease KG and leveraged KG completion methods to predict drug candidates. Yang et al. [23] pretrained entity embeddings on a large-scale domain-specific corpus while learning the knowledge embeddings of entities via a joint TransC-TransE model. Lin et al. [24] combined the context provided by medical entity descriptions with the embeddings of medical entities and relations and user embeddings to learn patient similarities through a convolutional neural network. Lin et al. [25] utilized graph representation learning models to obtain the embedding vectors of the entities and then applied the embeddings to study patient similarities. These works used joint representations to bring the entity and word vector spaces closer. However, for KGs with large numbers of entities, dealing with entities and their relationships leads to higher time complexity.

Furthermore, some research performs inference on the KG directly, without embedding the relations and entities. El-Shafai et al. [26] provided a method that simulates syndrome differentiation through Bayes and TF-IDF on a knowledge graph to achieve automated diagnosis in TCM. Yao et al. [27] presented an ontology-based model that utilized ontology attributes for training a neural network for medicine side-effect prediction. Xie et al. [28] applied TF-IDF to the TCM KG and proposed a knowledge-based syndrome reasoning model.

2.2. Neural Network with Knowledge Enhancement

Lin et al. [29] proposed a trigger matching network, which is trained with additional annotation and whose output is used as the attention of the sequence labeler. Luo et al. [30] combined a neural network with regular expressions (REs) to improve supervised learning for natural language processing. Jiang et al. [31] proposed FA-RNN, a recurrent neural network that incorporates the benefits of both neural networks and regular expression rules. Finally, Jiang et al. [32] transformed regular expressions into neural networks to combine the two approaches for slot filling.

3. Methods

3.1. Framework Overview

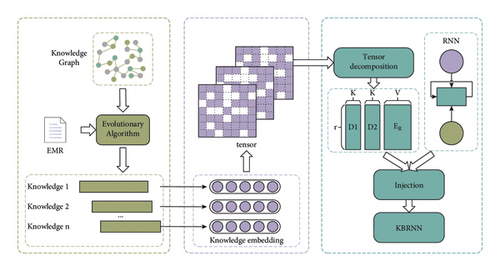

Figure 1 shows the two-step routine for constructing a KBRNN, i.e., knowledge extraction and knowledge injection. In knowledge extraction, an evolutionary algorithm is designed to extract high-scoring knowledge from the KG, and a part of the EMRs is used to score the knowledge. In knowledge injection, the knowledge embedding module converts the knowledge into a tensor. Then, the tensor decomposition module decomposes the knowledge tensor into the parameters of the RNN. This gives us the KBRNN, which incorporates domain knowledge.

3.2. Notation

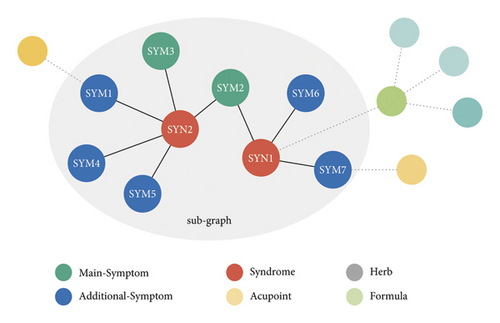

To focus on diagnosing the syndrome from the patients’ symptoms, we reconstruct a sub-KG K, shown in Figure 2, from the KG proposed in [33]. In this sub-KG K, we retain only the symptom and syndrome entities related to this research and exclude other entities such as acupoints, formulas, and herbs, which are not related to diagnosis. For ease of description, we give each symptom a unique and continuous ID starting from 0 and denote the symptom by “SYM”+ID. Similarly, we use “SYN”+ID to refer to a syndrome.

- E: a set of entities. |E| = N. There are three types of entities (main symptom, additional symptom, and syndrome), E = Emain_sym ∪ Eadd_sym ∪ Esyn.

- R: a set of relations. |R| = M.

- t: let ei, ek ∈ E and rj ∈ R; t = (ei, rj, ek) is a relationship between entities.

- E_Query(SYNi, E′): returns a set containing all the entities in E′ that are connected to SYNi.

- E_match(sentence): outputs, in order, the aliases of the entities that appear in sentence.
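The notation above can be mirrored by a small in-memory structure. The following is a minimal sketch, not the implementation used in this work: the class name `SubKG`, the relation labels, and the English symptom aliases are hypothetical, and substring search stands in for whatever entity matching is actually applied to the EMR text.

```python
from collections import defaultdict

class SubKG:
    """Toy sub-KG holding symptom and syndrome entities (illustrative only)."""

    def __init__(self):
        self.entity_type = {}              # entity ID -> "main_sym" | "add_sym" | "syn"
        self.alias = {}                    # surface form in EMR text -> entity ID
        self.neighbors = defaultdict(set)  # entity ID -> IDs of connected entities

    def add_entity(self, ent, ent_type, aliases=()):
        self.entity_type[ent] = ent_type
        for a in aliases:
            self.alias[a] = ent

    def add_triple(self, head, relation, tail):
        # E_Query only needs adjacency, so the relation label is not stored here.
        self.neighbors[head].add(tail)
        self.neighbors[tail].add(head)

    def e_query(self, syn, subset):
        """E_Query(SYN_i, E'): entities of E' that are connected to SYN_i."""
        return self.neighbors[syn] & set(subset)

    def e_match(self, sentence):
        """E_match(sentence): entity IDs ordered by first appearance in the sentence."""
        hits = []
        for a, ent in self.alias.items():
            pos = sentence.find(a)
            if pos >= 0:
                hits.append((pos, ent))
        return [ent for _, ent in sorted(hits)]

kg = SubKG()
kg.add_entity("SYN2", "syn")
kg.add_entity("SYM3", "main_sym", aliases=["limb stiffness"])   # hypothetical alias
kg.add_entity("SYM7", "add_sym", aliases=["pale tongue"])       # hypothetical alias
kg.add_triple("SYN2", "has_main_symptom", "SYM3")               # hypothetical relation
kg.add_triple("SYN2", "has_additional_symptom", "SYM7")         # hypothetical relation

main_syms = [e for e, t in kg.entity_type.items() if t == "main_sym"]
connected_main = kg.e_query("SYN2", main_syms)
matched = kg.e_match("pale tongue with limb stiffness")
```

Storing only adjacency keeps `e_query` a single set intersection; a fuller version would index triples by relation type as well.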

3.3. Extract Knowledge from KG

3.3.1. Definitions and Task Complexity

This section details the idea of treating the knowledge extraction task as an optimization problem.

The sentence sSYN2 = <SYM5, SYM3, SYM7, SYN2> labeled as SYN2 is recognized by RESYN2. However, the risk with REs is that they may lead to a wrong diagnosis. For example, RESYN2 may also recognize the sentence sSYN3 = <SYM4, SYM2, SYM7> labeled as SYN3. One seemingly feasible remedy is to enumerate the subsets of Emain_sym and Eadd_sym, splice them into candidate solutions, and filter the useful KnowlSYNi for each syndrome by verifying them against EMR sentences. But the time complexity is exponential in the number of symptoms, since there are 2^|Emain_sym| · 2^|Eadd_sym| candidate pairs of subsets. Fortunately, an exhaustive search is unnecessary: too much knowledge injection complicates the diagnosis model, as discussed further in Section 3.4.2. Thus, for a specific syndrome SYNi and a KnowlSYNi scoring function V, it is enough to find the “top-k KnowlSYNi”, i.e., the k highest-scoring KnowlSYNi among all the KnowlSYNi of that syndrome.

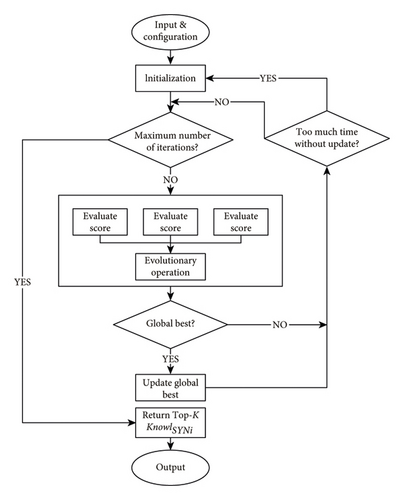

Because evolutionary algorithms perform well at finding near-optimal solutions in large solution spaces, we design an evolutionary algorithm for knowledge extraction. Figure 3 shows the main steps of the algorithm.

Our knowledge extraction method combines two well-known extensions of the standard genetic strategy. On the one hand, we repeatedly reinitialize the candidate solutions when the search reaches a state of stagnation. On the other hand, we use parallel computing during evolution. The former helps the evolutionary algorithm escape local optima, while the latter significantly improves efficiency by allowing the score of each solution to be calculated in parallel. Moreover, assigning individuals to different computational cores can be viewed as a multiple-population evolution strategy, which improves the algorithm’s robustness.

There are two problem-specific modules in our evolutionary algorithm, i.e., the generator and the evaluator. The following sections detail their implementation.
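To make the restart-on-stagnation idea concrete, here is a minimal sketch of such a loop, assuming the generator and evaluator are passed in as plain functions. The function names, the `patience` threshold, the mutation-only variation step, and the 8-bit toy problem are all illustrative assumptions; the sketch scores solutions sequentially and only marks where parallel scoring would fit.

```python
import random

def evolve(generate, evaluate, mutate, k, generations=200, patience=20, seed=0):
    """Return up to k best (solution, score) pairs, restarting on stagnation."""
    rng = random.Random(seed)
    population = generate(rng)
    archive = {}                       # solution -> score, kept across restarts
    best, stale = float("-inf"), 0
    for _ in range(generations):
        # Each solution is scored independently, so this step could be
        # distributed over worker processes.
        scored = sorted(((evaluate(s), s) for s in population),
                        key=lambda p: p[0], reverse=True)
        for score, sol in scored[:k]:
            archive[sol] = score
        archive = dict(sorted(archive.items(),
                              key=lambda p: p[1], reverse=True)[:k])
        if scored[0][0] > best:
            best, stale = scored[0][0], 0
        else:
            stale += 1
        if stale >= patience:          # stagnation: reinitialize the population
            population, best, stale = generate(rng), float("-inf"), 0
            continue
        parents = [s for _, s in scored[:max(2, len(scored) // 2)]]
        population = [mutate(rng.choice(parents), rng)
                      for _ in range(len(scored))]
    return list(archive.items())

# Toy problem (assumption): 8-bit tuples scored by their number of ones.
def toy_generate(rng, size=12, m=8):
    return [tuple(rng.randint(0, 1) for _ in range(m)) for _ in range(size)]

def toy_mutate(sol, rng):
    j = rng.randrange(len(sol))        # flip one randomly chosen bit
    return sol[:j] + (1 - sol[j],) + sol[j + 1:]

top = evolve(toy_generate, sum, toy_mutate, k=3)
```

Keeping the archive outside the population is what lets a restart discard the current individuals without losing the best solutions found so far.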

3.3.2. Generator

A candidate solution τ = <ϕ, ψ, v> consists of three parts, where m is the number of symptoms:

- (i) ϕ ∈ {0,1}^m: main symptom vector, ϕ[j] = 1 if SYMj is selected, else ϕ[j] = 0.

- (ii) ψ ∈ {0,1}^m: additional symptom vector, ψ[j] = 1 if SYMj is selected, else ψ[j] = 0.

- (iii) v ∈ ℝ: the score of the solution, calculated by the evaluator module and initialized to 0.

The generator produces a list Γ = [τ0, τ1, τ2, …, τl−1] of candidate solutions τr = <ϕr, ψr, 0> by initializing each ϕr and ψr randomly, where l is the length of Γ, l > k, and 0 ≤ r < l.
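A generator of this shape might look as follows. This is only a sketch: the function name and the uniform per-bit initialization are assumptions, since the text specifies only that ϕ and ψ are initialized randomly.

```python
import random

def generate(l, m, rng):
    """Build Γ = [τ_0, ..., τ_{l-1}], each τ_r = (ϕ_r, ψ_r, 0.0)."""
    gamma = []
    for _ in range(l):
        phi = [rng.randint(0, 1) for _ in range(m)]   # main symptom vector ϕ_r
        psi = [rng.randint(0, 1) for _ in range(m)]   # additional symptom vector ψ_r
        gamma.append((phi, psi, 0.0))                 # score v starts at 0
    return gamma

# Hypothetical sizes: l = 10 candidates over m = 6 symptoms.
gamma = generate(l=10, m=6, rng=random.Random(0))
```

A practical variant could restrict the selectable positions to the symptoms returned by E_Query for the syndrome at hand, which shrinks the search space.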

3.3.3. Evaluator

The evaluator calculates the score of each τ in Γ. We select n EMR sentences as test cases to compute the score of τ. This section details the scoring algorithm.

For a syndrome SYNi, the evaluator divides the n EMR sentences into two disjoint sets denoted by EMRtrue and EMRfalse, with |EMRtrue| = a, |EMRfalse| = b, and a + b = n. A sentence is placed in EMRtrue if and only if it is labeled as SYNi.

For each candidate solution τr, the evaluator uses the following counts:

- (i) tr ∈ ℕ: the number of sentences in EMRtrue that are recognized by τr

- (ii) fr ∈ ℕ: the number of sentences in EMRfalse that are recognized by τr

- (iii) cr ∈ ℕ: the number of symptoms in τr

The EMR sentences are encoded as two binary matrices:

- (i) TP ∈ {0,1}^{a×m}: TP[i][j] = 1 if SYMj appears in the ith sentence of EMRtrue, otherwise TP[i][j] = 0

- (ii) TF ∈ {0,1}^{b×m}: TF[i][j] = 1 if SYMj appears in the ith sentence of EMRfalse, otherwise TF[i][j] = 0
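The three counts can be read directly off TP and TF. The sketch below assumes one particular recognition rule, namely that τ recognizes a sentence when the sentence contains at least one selected main symptom and at least one selected additional symptom; that rule, the function name, and the toy matrices are illustrative assumptions, and the way the scoring function V combines the counts is deliberately left out.

```python
def count_recognized(M, phi, psi):
    """Number of rows of the 0/1 matrix M recognized by (phi, psi).

    Assumed rule: a row is recognized if it shares at least one symptom
    with phi and at least one symptom with psi.
    """
    n = 0
    for row in M:
        has_main = any(x and p for x, p in zip(row, phi))
        has_add = any(x and p for x, p in zip(row, psi))
        if has_main and has_add:
            n += 1
    return n

# Toy data with m = 4 symptoms, a = 2 true sentences, b = 2 false sentences.
TP = [[1, 0, 1, 0],
      [0, 1, 1, 0]]
TF = [[0, 0, 1, 1],
      [1, 0, 0, 0]]
phi = [1, 1, 0, 0]   # SYM0 and SYM1 selected as main symptoms
psi = [0, 0, 1, 0]   # SYM2 selected as additional symptom

t_r = count_recognized(TP, phi, psi)   # recognized sentences in EMR_true
f_r = count_recognized(TF, phi, psi)   # recognized sentences in EMR_false
c_r = sum(phi) + sum(psi)              # number of symptoms in the solution
```

With these toy values both true sentences are recognized and neither false sentence is, so a scoring function that rewards t_r and penalizes f_r and c_r would rank this candidate highly.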

3.4. Convert the Knowledge to KBRNN

With the knowledge extraction algorithm detailed in Section 3.3, we obtain the top-k KnowlSYNi for each syndrome. A KnowlSYNi can be converted to an RE as detailed by (3) and (4) in Section 3.3.1. We formally treat the syndrome diagnosis task as a text classification problem, i.e., given an EMR sentence as the input of the KBRNN, the output is the syndrome corresponding to the sentence.

In the following sections, we illustrate the implementation of the KBRNN, which is generated by injecting KnowlSYNi into an RNN.

3.4.1. Embedding the Knowledge via Finite-State Automaton

A finite-state automaton (FSA) is an abstract model of computation that changes from one state to another in response to inputs. An FSA can be used to recognize sentences. Given a sentence s = <′BOS′, sym1, sym2, sym3, …, symn, ′EOS′> and an FSA Λ, we feed the elements of s into Λ in order. Λ recognizes s if and only if the state transition sequence starts from the start state and ends in a final state.

There are two types of FSA: the nondeterministic finite automaton (NFA) and the deterministic finite automaton (DFA). “Deterministic” indicates that, given a state and an input, there is a unique transition to the next state. With Thompson’s construction algorithm [34], an RE can be converted into an NFA. Then, the NFA can be converted to a unique DFA with a minimum number of states, called the m-DFA, by the power set construction algorithm and the DFA minimization algorithm.

An m-DFA is described by the following components:

- Q: a nonempty, finite set of states. Let |Q| = K.

- Σ: a nonempty, finite input vocabulary. Let |Σ| = V, V ∝ |Esym|.

- δ: the transfer function, δ(q, σ) = p (p, q ∈ Q, σ ∈ Σ).

- qϵ: the start state, qϵ ∈ Q.

- F′: a nonempty, finite set of final states, F′ ⊆ Q.

It is represented by the following tensors:

- T ∈ {0,1}^{V×K×K}: the transfer tensor, T[σ, i, j] = 1 if the state qi can transit to qj on the input σ, otherwise 0 (qi, qj ∈ Q, σ ∈ Σ).

- S ∈ {0,1}^K: S[i] = 1 if qϵ can transit to qi directly, otherwise 0.

- F ∈ {0,1}^K: F[i] = 1 if qi ∈ F′, otherwise 0.

Now, we obtain the knowledge embedding 〈T, S, F〉.
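As an illustration of the embedding 〈T, S, F〉, the sketch below builds the three tensors for a small hand-written DFA and recognizes sentences by repeated vector-matrix products. The four-state automaton and its vocabulary are toy assumptions, and the sketch interprets S as the set of states reached from the start state on 'BOS', which is one reading of the definition above.

```python
states = ["q0", "q1", "q2", "q3"]                 # q0 plays the role of the start state
vocab = ["BOS", "SYM3", "SYM5", "SYM7", "EOS"]
delta = {                                         # toy transfer function δ(q, σ) = p
    ("q0", "BOS"): "q1",
    ("q1", "SYM5"): "q1",
    ("q1", "SYM3"): "q2",
    ("q2", "SYM7"): "q2",
    ("q2", "EOS"): "q3",
}
finals = {"q3"}

K, V = len(states), len(vocab)
qi = {q: i for i, q in enumerate(states)}
vi = {w: i for i, w in enumerate(vocab)}

# T[σ][i][j] = 1 if q_i transits to q_j on input σ.
T = [[[0] * K for _ in range(K)] for _ in range(V)]
for (q, w), p in delta.items():
    T[vi[w]][qi[q]][qi[p]] = 1

# S[i] = 1 if the start state transits to q_i directly.
S = [0] * K
for (q, w), p in delta.items():
    if q == "q0":
        S[qi[p]] = 1

# F[i] = 1 if q_i is a final state.
F = [1 if q in finals else 0 for q in states]

def recognizes(sentence):
    """Forward pass: h <- h · T[σ] for each token after 'BOS'; accept if h · F > 0."""
    h = S[:]                                      # 'BOS' is already absorbed into S
    for w in sentence[1:]:
        Tw = T[vi[w]]
        h = [sum(h[i] * Tw[i][j] for i in range(K)) for j in range(K)]
    return sum(a * b for a, b in zip(h, F)) > 0

accepted = recognizes(["BOS", "SYM5", "SYM3", "SYM7", "EOS"])
rejected = recognizes(["BOS", "SYM7", "EOS"])
```

The forward pass is purely linear algebra, which is what makes it possible to reinterpret T, S, and F as the parameters of a recurrent network.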

3.4.2. Inject the Knowledge Embedding into RNN

Here, we extend the approach in [31], which used canonical polyadic decomposition (CPD) to decompose T into ER ∈ ℝ^{V×r}, D1 ∈ ℝ^{K×r}, and D2 ∈ ℝ^{K×r}, where r is a hyperparameter. As studied in [31], the decomposition becomes more accurate as r approaches the rank of T, whereas an r that is too large leads to higher space complexity. In this work, the rank of T is positively correlated with the number of symptoms in KnowlSYNi, which is why we must keep the number of symptoms in KnowlSYNi to a minimum.

This yields the RNN injected with knowledge.
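The effect of the decomposition can be checked on a toy tensor. If T[σ, i, j] = Σc ER[σ, c] · D1[i, c] · D2[j, c], then the FSA update h · T[σ] equals ((h · D1) ∘ ER[σ]) · D2ᵀ, which has the form of a recurrent step. The sketch below is an assumption-laden illustration rather than the procedure used in this work: instead of a numerical CPD with a tuned r, it uses a trivial exact decomposition with one rank-1 term per transition, and the three-state automaton is a toy.

```python
def trivial_cpd(T):
    """Exact CP factors with one rank-1 term per nonzero entry of T."""
    V, K = len(T), len(T[0])
    entries = [(s, i, j) for s in range(V) for i in range(K)
               for j in range(K) if T[s][i][j]]
    r = len(entries)
    ER = [[0.0] * r for _ in range(V)]
    D1 = [[0.0] * r for _ in range(K)]
    D2 = [[0.0] * r for _ in range(K)]
    for c, (s, i, j) in enumerate(entries):
        ER[s][c] = D1[i][c] = D2[j][c] = 1.0
    return ER, D1, D2

def step(h, token, ER, D1, D2):
    """One recurrent step: h_t = ((h_{t-1} · D1) ∘ ER[token]) · D2^T."""
    r, K = len(ER[0]), len(h)
    a = [sum(h[i] * D1[i][c] for i in range(K)) for c in range(r)]
    g = [a[c] * ER[token][c] for c in range(r)]
    return [sum(g[c] * D2[j][c] for c in range(r)) for j in range(K)]

def direct_step(h, token, T):
    """Reference update with the full tensor: h_t = h_{t-1} · T[token]."""
    K = len(h)
    return [sum(h[i] * T[token][i][j] for i in range(K)) for j in range(K)]

# Toy automaton: token 0 moves q0 -> q1 and loops on q1; token 1 moves q1 -> q2.
T = [[[0, 1, 0], [0, 1, 0], [0, 0, 0]],
     [[0, 0, 0], [0, 0, 1], [0, 0, 0]]]
ER, D1, D2 = trivial_cpd(T)

h_fact = h_full = [1.0, 0.0, 0.0]
for token in [0, 0, 1]:
    h_fact = step(h_fact, token, ER, D1, D2)
    h_full = direct_step(h_full, token, T)
```

Because ER, D1, and D2 are ordinary real-valued matrices, they can serve as initial parameters that gradient-based training later adjusts; the trivial decomposition here needs r equal to the number of transitions, which shows why a large automaton inflates the parameter count.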

4. Experiments and Results

4.1. Datasets

We collect the dataset from a project by the National Key Research and Development Program of the Chinese Academy of Traditional Chinese Medicine, “Chinese Medicine Data Center and Health Cloud Platform Building.” The EMR data are mainly from the Hospital Information System (HIS), which includes admission records, course records, discharge summaries, and medical records of cerebral palsy patients within a specific time frame. These data come from clinically valid cases and have been desensitized to protect patients’ private information.

The original EMR data have several flaws, including nonstandard formats and diverse expressions. A team of professionals was invited to tag the EMR data manually so that they could be organized into structured data for further research. Data tagging was carried out by two people working together to prevent errors caused by the limited expertise of a single individual. Nonstandard data remained in the structured data after tagging. For instance, a particular symptom may have several distinct expressions. In data standardization, the professional terms were first standardized and sorted out collaboratively by a group of individuals. Then, a medical specialist summarized the standard terms included in the medical records. In the end, 988 symptoms and 15 syndromes were standardized. According to traditional Chinese medicine, these symptoms may be further subdivided into main symptoms and additional symptoms. The main symptoms generally represent the patient’s overall condition, whereas the additional symptoms relate to complications, which are a significant diagnostic criterion for syndrome types.

Thus, we obtained 5514 labeled diagnostic records from 1755 patients. Each record has three fields, main symptoms, additional symptoms, and syndrome as the label.

4.2. Experimental Steps

We divide the dataset into four parts:

- (i) Pre-set (20%): the dataset used to score knowledge in the knowledge extraction algorithm

- (ii) Train-set (50%): the training dataset, used for training models

- (iii) Dev-set (20%): the validation dataset, a set of examples used to tune hyperparameters

- (iv) Test-set (10%): the test dataset, used for testing the performance of the trained model
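A split with these proportions can be sketched as follows. The function name, the random record-level shuffle, and the fixed seed are assumptions; the text does not state whether the split is random, stratified by syndrome, or grouped by patient.

```python
import random

def split_records(records, fractions=(0.2, 0.5, 0.2, 0.1), seed=0):
    """Shuffle the records and cut them into consecutive parts by fraction."""
    items = list(records)
    random.Random(seed).shuffle(items)
    n = len(items)
    cuts, acc = [], 0.0
    for f in fractions[:-1]:
        acc += f
        cuts.append(round(acc * n))     # cumulative cut points
    bounds = [0] + cuts + [n]           # the last part takes the remainder
    return [items[a:b] for a, b in zip(bounds, bounds[1:])]

# Record indices stand in for the 5514 labeled diagnostic records.
pre_set, train_set, dev_set, test_set = split_records(range(5514))
```

Since several records can belong to the same patient, a patient-level split would be the stricter choice if leakage between parts is a concern.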

During the knowledge extraction phase, we execute the evolutionary algorithm, using the pre-set for knowledge scoring, and obtain the top-k KnowlSYNi (k = 6 in practice) for each syndrome. We remove the KnowlSYNi that score poorly, which happens when the corresponding syndrome has too few samples.

After knowledge embedding and injection, we obtain an untrained KBRNN, i.e., one that has not yet been trained on the train-set. As baselines, we adopt conventional models that are frequently used in text classification and compare them with the KBRNN. For each baseline, we feed the hidden representation it produces into a multilayer perceptron (MLP) and use the cross-entropy loss as the objective function.

4.3. Experimental Results

We evaluate two aspects:

- (1) The contribution of knowledge extraction to the KBRNN: we use the pre-set as the training set of the baselines and compare the performance of the untrained KBRNN and the baselines on the test-set

- (2) The ability of the KBRNN to benefit from labeled data: we use both the pre-set and the train-set (50% or 100% of it) as the training set of the baselines and fine-tune the untrained KBRNN with the train-set

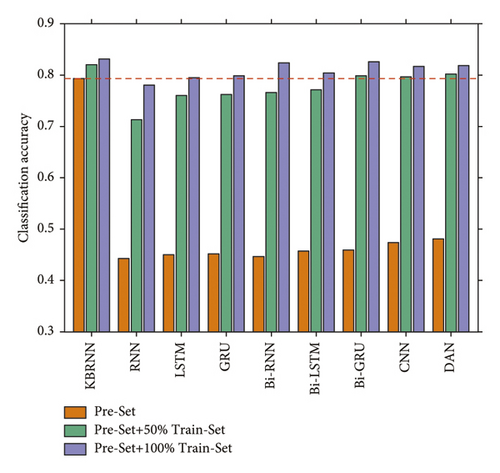

Table 1 displays the classification accuracy (%) of the KBRNN and the baseline models on the test-set after training with varying amounts of training data. The KBRNN achieves 79.31% diagnostic accuracy when it is only injected with the knowledge extracted from the KG using the pre-set, and this rises to 83.12% after training on 100% of the train-set.

| Model | Pre-set | Pre-set + 50% train-set | Pre-set + 100% train-set |
|---|---|---|---|
| KBRNN | 79.31 | 82.03 | 83.12 |
| RNN | 44.28 | 71.32 | 78.03 |
| LSTM | 45.01 | 76.04 | 79.49 |
| GRU | 45.19 | 76.22 | 79.85 |
| Bi-RNN | 44.64 | 76.59 | 82.39 |
| Bi-LSTM | 45.74 | 77.13 | 80.40 |
| Bi-GRU | 45.91 | 79.85 | 82.58 |
| CNN | 47.37 | 79.67 | 81.67 |
| DAN | 48.09 | 80.21 | 81.85 |

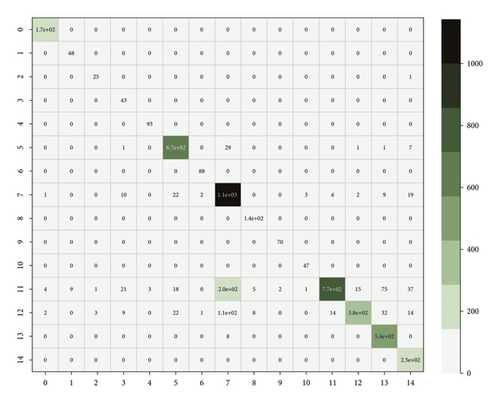

The results show that the untrained KBRNN outperforms all the baselines that are trained only on the pre-set. It is also better than some of the baselines trained with 50% of the train-set (Figure 4). We believe that the KBRNN obtains considerable a priori knowledge from the knowledge graph through injection. The classification result on the full samples by using the fully trained KBRNN is shown as the confusion matrix in Figure 5, which provides a good insight into how often samples of each of the fifteen syndromes are correctly classified or misclassified by the proposed model. The number of samples varies greatly across syndrome types, and the true positive rate is maintained at a high level even for the syndrome with a large number of samples. As with the other models, the KBRNN benefits from expanding the training set while keeping its accuracy advantage.
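The comparisons in this paragraph can be recomputed from Table 1. The short script below is only a convenience check on the reported numbers; the variable names are arbitrary.

```python
# Accuracy (%) from Table 1:
# (pre-set, pre-set + 50% train-set, pre-set + 100% train-set)
acc = {
    "KBRNN":   (79.31, 82.03, 83.12),
    "RNN":     (44.28, 71.32, 78.03),
    "LSTM":    (45.01, 76.04, 79.49),
    "GRU":     (45.19, 76.22, 79.85),
    "Bi-RNN":  (44.64, 76.59, 82.39),
    "Bi-LSTM": (45.74, 77.13, 80.40),
    "Bi-GRU":  (45.91, 79.85, 82.58),
    "CNN":     (47.37, 79.67, 81.67),
    "DAN":     (48.09, 80.21, 81.85),
}
untrained = acc["KBRNN"][0]
baselines = {m: v for m, v in acc.items() if m != "KBRNN"}

# Baselines that, even with 50% of the train-set, stay below the untrained KBRNN.
beaten_at_50 = sorted(m for m, v in baselines.items() if v[1] < untrained)

# Margin of the KBRNN over the best baseline in each column.
margins = [round(acc["KBRNN"][c] - max(v[c] for v in baselines.values()), 2)
           for c in range(3)]
```

The untrained KBRNN exceeds five of the eight baselines trained with 50% of the train-set (RNN, LSTM, GRU, Bi-RNN, and Bi-LSTM), and the KBRNN's margin over the best baseline shrinks from 31.22 points with the pre-set alone to 1.82 and 0.54 points as more training data are added.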

5. Discussion and Conclusions

TCM, as a complementary field of medicine outside the modern medicine system, has played a significant role in cerebral palsy syndrome diagnosis. In this work, we propose a knowledge-based RNN (KBRNN) for cerebral palsy syndrome diagnosis. Our major contribution is an evolutionary algorithm that extracts diagnostic knowledge from the KG. We also present a method for injecting the TCM knowledge into the RNN. Compared with simple KG inference or rule-based methods, the KBRNN, as a neural network model, can be further trained on EMR data, which makes it generalize better. On the other hand, compared with traditional neural network models, the KBRNN can benefit from TCM knowledge. Specifically, with the help of TCM knowledge, the KBRNN outperforms the baseline neural approaches in the setting where only a few EMRs are available, and it remains competitive in rich-resource settings.

In conclusion, the KBRNN benefits from two sources, i.e., knowledge extracted from the cerebral palsy knowledge graph and labeled EMRs. We show that the KBRNN achieves higher accuracy than the baselines in syndrome diagnosis with knowledge injection alone. Moreover, its performance improves further after training on a large amount of labeled EMRs, where it outperforms the baseline models.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This work was supported by the CACMS Innovation Fund (CI2021A00512) and the National Key Research and Development Program of China under grant (2017YFC1703506).

Open Research

Data Availability

All data included in this study are available upon request by contacting the corresponding author.