[Retracted] Mixture of Gaussian Processes Based on Bayesian Optimization

Abstract

This paper gives a detailed introduction of implementing mixture of Gaussian process (MGP) model and develops its application for Bayesian optimization (BayesOpt). The paper also develops techniques for MGP in finding its mixture components and introduced an alternative gating network based on the Dirichlet distributions. BayesOpt using the resultant MGP model significantly outperforms the one based on Gaussian process regression in terms of optimization efficiency in the test on tuning the hyperparameters in common machine learning algorithms. This indicates the success of the methods, implying a promising future of wider application for MGP model and the BayesOpt based on it.

1. Introduction

Bayesian optimization (BayesOpt) is a highly effective method for optimizing expensive black-box objective functions. These functions are expensive to evaluate and cannot be accessed analytically due to their complicated structures. They often come along with properties such as being noisy and having multiple local optima. Simple approaches like random search are inappropriate, and derivative-based algorithms like gradient decent are unreliable as well as inefficient due to the noisy and expensive nature of the objective functions. To address these issues, BayesOpt offers a derivative-free approach by building a surrogate that models the objective function and deciding where to evaluate using Bayesian statistics [1–3]. The ability to optimize expensive black-box derivative-free functions brought BayesOpt a wide range of applications since the 1960s [4, 5]. It is extensively used for designing engineering systems in robotics, computer graphics, and sensor networks [6–8], and it has recently become extremely popular for tuning hyperparameters in machine learning algorithms [9, 10].

BayesOpt is a member of the surrogate modelling methods in nature. The central idea behind this more general class of method is to use a well-behaved function that estimates the objective function based on a limited number of sampled data while being cheap to evaluate [11, 12]. The mostly used surrogate in BayesOpt is Gaussian process regression (GPR) [13]. It uses a set of parameters in its covariance function (kernel) to characterize the fluctuation of the objective function and makes regression using Bayes’ theorem. When combined with other methods to help finding the parameters in its kernel, GPR provides satisfying result. But it can be further improved by generalizing to the mixture of Gaussian process (MGP) model. The main motivation of this generalization is that the objective function’s behaviour is most likely inconsistent throughout the input space; hence, a single GPR with a fixed set of kernel parameters is typically inadequate for modelling the entire objective function. The MGP model overcomes this problem by self-organizing several GPs with different kernel parameters, allowing the kernel to be input-dependent [14].

The MGP model is a variant of the well-known mixture of expert (ME) model of Jacobs et al. [15] and was firstly introduced in Tresp [14]. It is a mixture model consisting of an input-dependent gating network and several GPs as the mixture components. Each GP specializes in a certain region of the input space due to their unique kernel parameters and stands for the local expert in the ME model. The gating network learns the specialization of the experts from the training data and estimates the participation of each expert for a new input. With this mechanism, the MGP model divides up the regression of the entire objective function into several regional subtasks to be executed by each expert, respectively, achieving a better overall result than any individual GPR [14]. By substituting GPR with MGP as the surrogate model, BayesOpt can more accurately estimate the objective function, speeding up its convergence on finding the optimum.

This paper firstly gives a brief review of the BayesOpt using GPR and then provides a detailed description of the MGP model with an alternative gating network modified from Rasmussen and Ghahramani [16] along with the method to find the local experts. Based on the discussions in the previous sections, a clustering-assisted approach to implement the MGP in the BayesOpt is introduced. Finally, the performance of the MGP model and the BayesOpt based on it are tested and discussed.

2. Background Review

2.1. Gaussian Process Regression

The term denotes the mean vector, which is commonly chosen to be a constant value, typically zero or the average of the sampled values. Matrix Σ is the covariance matrix, generated by a covariance function also known as kernel. The kernel models the correlation between each pair of points based on their positions. The mean vector together with kernel completely defines the properties of the GP [18, 19].

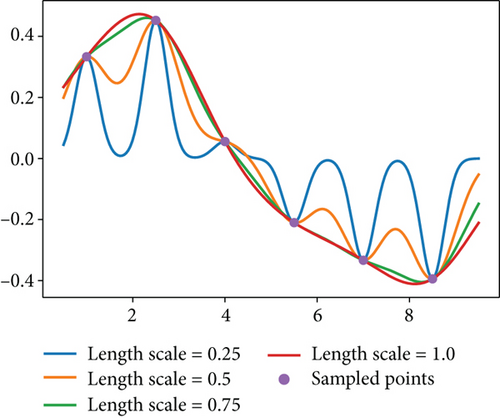

2.2. Choosing Kernel Parameters

The length scales found are anisotropic in general. One can also assume the length scales to be isotropic, meaning that the same length scale is shared for all axes and MLE degenerates to maximizing . Such assumption is obviously less comprehensive but more appropriate when there are relatively few sampled points. It is especially the case when the number of sampled points is not significantly larger than the dimension of the input space, since the assumption for the function being well-represented breaks down and MLE could fall into overfitting. Typically, the objective function is randomly sampled a few times before using BayesOpt to meet the minimum requirement for applying GPR. It is also suggested to use isotropic GPR before having enough points to apply the anisotropic GPR.

2.3. Acquisition Function

2.4. Mixture of Gaussian Processes

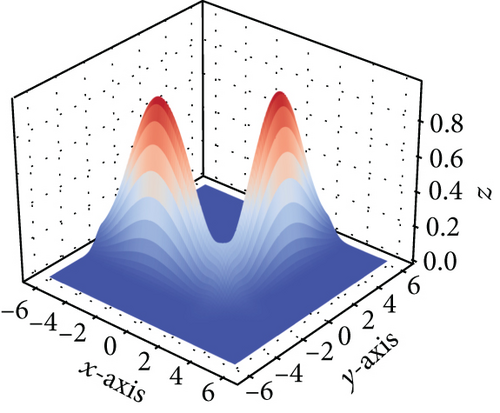

GPR uses a certain configuration of kernel parameters typically optimized via MLE, assuming a global behaviour of the function in each dimension. In most cases, this is inadequate to model the entire objective function, as the function typically has different behaviours in different regions. For example, the objective function could have one local peak that changes quickly on x-axis but slowly on y-axis and another one elsewhere that behaves in reverse, or the objective function could be a single hump surrounded by a vast flat region. The main motivation for replacing GPR by the mixture of Gaussian process (MGP) model is to address these scenarios by permitting input-dependent participation of kernel parameters. The MGP model is a variation of mixture of expert (ME) model and a generalization of GPR. It consists of a set of GPs with different kernel parameters as the experts and a gating network to determine which expert to use for a given input [15]. The gating network and the mixture components are iteratively trained using the expectation maximization (EM) algorithm to maximize the likelihood of the observed data. Compared with the original method introduced in Tresp [14], the MGP model introduced in this paper is slightly modified for better performance.

2.5. Finding the Local Experts

The regions to generate the local experts are not necessarily mutually exclusive as the gating network will assign the tasks automatically. However, they are expected to be exhaustive since otherwise the regressions in the uncovered area could result badly. A simple approach to fulfill this requirement is to use the GPR optimized via MLE (such GPR will be referred to as global GPR for the rest of this paper) along with the ones optimized for certain regions. Finally, to avoid overfitting, one has to make sure the regions to optimize are well-shaped and contain enough points.

2.6. Iterative Learning Using EM

The M step and the E step are conducted alternately until convergence.

If the EM still does not converge after a certain number of iterations, simply choose the iteration step that corresponds to the highest posterior density.

3. Methodology

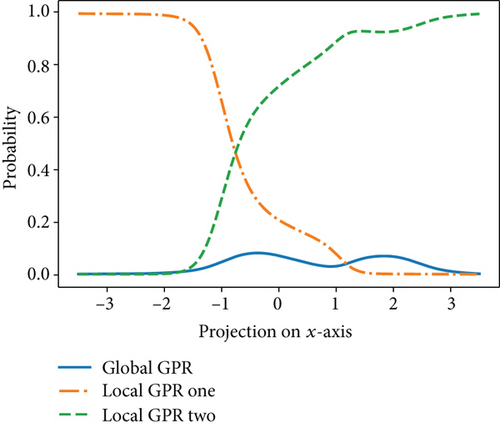

3.1. Learning the Alternative Gating Network

The gating function in ME model evaluates the expert-membership of a new input by means of regression. In the original paper, this is done using Gaussian process classification [29] (GPC) with a softmax function. This method is found to be not ideal enough. One reason is that GPC requires to label the expert-memberships for each data point, while some points have rather equivalent probabilities for several GPRs, making it inappropriate to label them with any model. Another problem originates from the fact that some data points, inside a region covered by a local GPR through, could fit very well to another GPR optimized for some other region. As a result, the situation that one data point that seemingly belongs to a GPR being surrounded by many data points that favour another GPR is likely a sign of this type of problem. Hence, it may be desirable to take into account the status of the surrounding data. The successful implementation of the original method was on functions that are rather steep, such as the step function in Tresp [14] and the spike-shaped function in Stachniss et al. [30], where the sudden change of label makes sense. However, for most functions, the original method could lead to wrong classification and hence is not very ideal. Also, a M-class GPC uses M GPRs to make regressions and is computationally rather expensive.

A modification from Foster et al. [31] is that (19) uses the posterior distribution calculated from (12). This is to integrate the update of the gating network into the EM learning algorithm. This method successfully solves the problems found in the original gating network as it takes in the probabilities of the indicator variable as well as the surrounding data. The length scales ϕ in the kernel smoother characterizes the smoothness of the gating network. Optimizing the ϕ can be difficult as this could fall into overfitting. It is advised to preset an isotropic ϕ and to use the smoother on the data normalized in each dimension.

3.2. Complete Algorithm of MGP

To sum up, one can successfully implement the MGP following the steps presented in Algorithm 1.

-

Algorithm 1: Training the MGP model.

-

Input: Sampled data, the regions to optimize, the term ϕ in the smoother.

-

1 Sample from the posterior of α using (21) and ARS.

-

2 Find the global GPR and the local experts using (11).

-

3 Initialize the gating network (equal probability for all experts is fine).

-

4 repeat

-

5 Calculate the probability densities for the data points under each GP.

-

6 Estimate the latent variables using (12).

-

7 Update the GPs using (13).

-

8 Update the gating network using (18).

-

9 /∗ The gating network is averaged over the sampled posterior of α∗/

-

10 Δ = the root-mean-square deviation of the updated gating network.

-

11 until Δ < tolerance;

-

Output: the trained GPs with the gating network.

For any input to evaluate, use (18) to calculate the assignment probabilities and then use (5) to make regressions for each GPR, respectively.

In practice, one may conduct EM until convergence without (13), which is to keep the GPs fixed, before applying the EM with (13). This is because recalculating the probability densities after updating the GPs is the most time-consuming step. Hence, a better gating network to begin with can significantly speed up the training. But above all, one needs to make sure there are enough data points to perform the MGP; otherwise, this can lead to overfitting.

3.3. Clustering-Assisted Method for BayesOpt

A major drawback of the MGP introduced above is that the local expert can only be designated if the regions to optimize are known. If the behaviour of the function is vaguely known in advance or if there are many sampled data points, one can select the regions manually. But for a general optimization task, this can be hard to do as little is known for the objective function and number of samplings is limited.

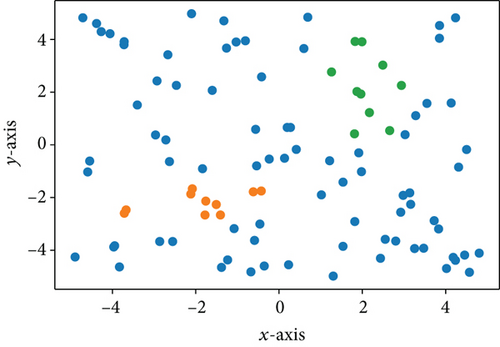

By studying general BayesOpt optimizations, one can find that the local experts that the optimizer is mostly interested in are the ones for the local peaks where the sampling density is high. Utilizing this feature, local experts to construct MGP for BayesOpt can be determined using a clustering-assisted approach. One firstly filters out the data with values below the average (or above the average if to minimize) and then applies density-based clustering to the rest points. The clustering step is advised to be conducted on data normalized in each input dimension. Each cluster found corresponds to a group of samplings around a local peak and the local experts can be found using (11). As the later samplings tend to lie around the higher peaks, the average of sampled values increases after each iteration. Thus, the lower peaks are of lesser value and will gradually be filtered out (once the cluster size is too small), leaving only the highest peak standing.

If there is no cluster found, meaning that the BayesOpt has not found any peak or there are not enough points near a peak to fit a local expert, the MGP uses only the global GPR which does not pose any adverse consequences either. The worst case that the clustering approach could lead to is finding a cluster in the filtered data points which turns out to be high value points mixed up with low value ones in the whole dataset. In this case, the GPR calculated via (11) does not reflect any regional behaviour of the function but is simply overlearning this subset of data. This is a rare scenario but is still worthy of attention. A solution addressing this issue is to make a k-nearest neighbour classification of the unselected data for each cluster. This grows each cluster by the data that are potentially filtered out.

3.4. Complete Algorithm of MGP-Based BayesOpt

This is equivalent to the marginalized acquisition function and (22) is not limited to EI. If there is no extra local expert found in the clustering step, (22) degenerates to the EI for a single global GPR. The algorithm for MGP-based BayesOpt is summarized and illustrated below.

4. Experiment

4.1. MGP Regression Test

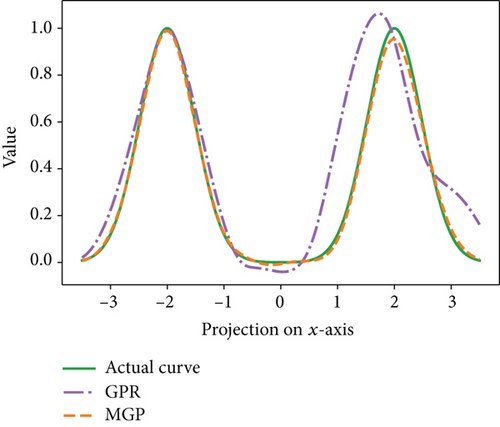

It can be seen from Figure 2 that the MGP model trained using Algorithm 1 greatly outperforms the global GPR as the regression curve almost overlaps with the actual curve. Thanks to the gating function that assigns each model to the region where they excel, each peak is assigned to the corresponding local expert, leading to the outstanding regression performance. This recreates the results in Tresp [14] and indicates the success of the method for find local experts and the alternative gating network.

4.2. Test on Hyperparameter Tuning

The hyperparameter tuning problem in machine learning algorithms is a prime example of optimizing expensive black-box derivative-free functions. The hyperparameters of the machine learning models are specified prior to training and greatly affect the model performance. The training process can be very computationally expensive, and the performance of the trained model is usually noisy as randomness is typically involved during model training and testing.

Based on the successful implementation of the MGP model, Algorithm 2 for applying MGP in BayesOpt is tested on the hyperparameter tuning of four common machine learning algorithms. These algorithms are chosen for being highly tunable with large cross-validated average improvement after optimization [11]. The XGBoost is a boosting tree model, and its regularization is mainly controlled by the shrinkage factor and two penalty terms. The elastic net is a linear model that uses L1 and L2 penalties to reduce overfitting. The support vector machine (SVM) is a classifier using kernel trick, where its performance is determined by the regularization parameter and the kernel coefficient. The multilayer perceptron (MLP) is an artificial neural network, where the regularization term, the learning rate, and the hidden-layer sizes together contribute to its performance. The experiment is to test the efficiencies of the MGP-based BayesOpt in optimizing practical back-box derivative-free functions compared with the BayesOpt using GPR. The four algorithms above are trained on different datasets, and the configurations of their hyperparameters are optimized by the two types of Bayesian optimizers, respectively. The values of the current optima found by each optimizer are recorded in each iteration to compare the efficiency of optimization. Considering the MGP needs enough data to find the local experts, both optimizers start from a presampled dataset consisting of 5 random samplings followed by a few iterations of BayesOpt using GPR. The information for each tested model is given in Table 1.

-

Algorithm 2: MGP-based Bayesian optimization.

-

Input: pre-sampled data, the objective function, the term ϕ in the smoother,

-

the number of iterations N, minimum size of the clusters.

-

1 for iteration =1 to N: do

-

2 Standardize the data in the input space.

-

3 Filter out the data with values below the average.

-

4 Perform a density-based clustering for the rest data points.

-

5 Apply k-nearest neighbour classification on unselect data points.

-

6 Reject the clusters smaller than the minimum size.

-

7 Use Algorithm 1 to train the MGP.

-

8 /∗ The regions to optimize are the clusters found ∗/

-

9 Sample at the position that maximizes the acquisition function (22).

-

10 Add the sampled point to the data.

-

11 end for

-

Output: the value and the coordinates of the best sampled point.

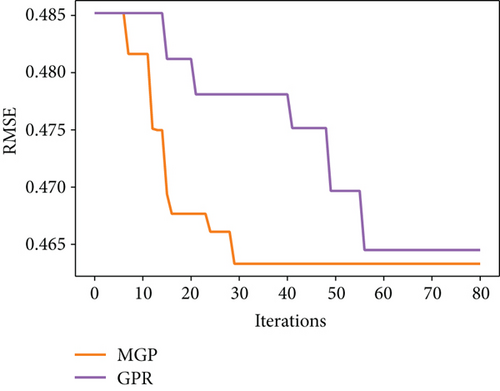

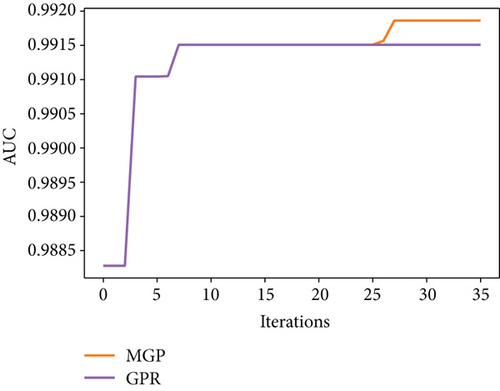

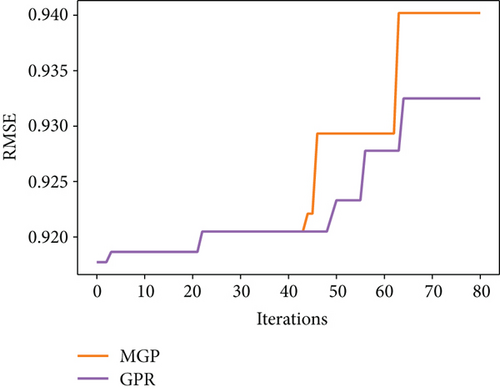

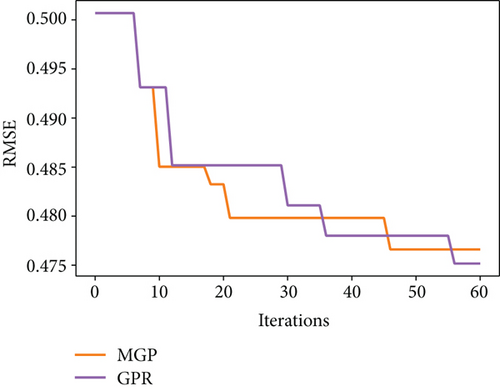

The comparison of tuning efficiency is presented in Figure 3. It can be seen that MGP model outperforms GPR in BayesOpt thanks to the more accurate modelling of the objective function. It is most significant in the test on XGBoost and MLP where the tuning dimension is relatively higher. This is reasonable as the higher dimension gives more space for tuning, while the other model converges too quickly to their optima even without the use of MGP. Such result marks the success of the clustering assisted method for finding the experts of the peaks. Interestingly, it is observed in the test on XGBoost and MLP that the number of GPs used in each optimizing iteration experienced an increase followed by a decrease. This corresponds to the design that lower peaks will be filtered out for lesser evaluation value.

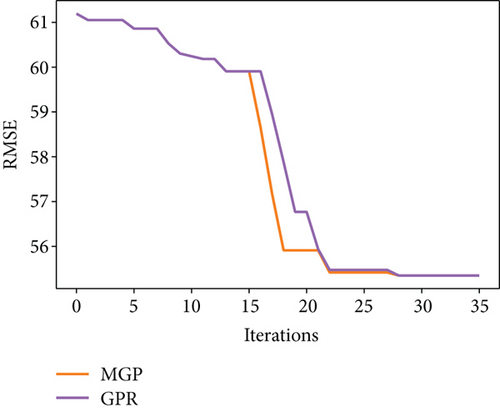

The presampling before applying MGP is important. It is observed that if the MGP-based BayesOpt is applied without a certain number of presamplings, its performance is not guaranteed to outperform the BayesOpt using GPR (see Figure 4). By examining the optimization processes, it is found that in situations where MGP fails to outperform the GPR, MGP stays in a local optimum (typically the first peak it finds) for longer iterations before moving to the global optimum, which is likely a cause of overfitting to the existing data. The use of MGP is based on the assumption that the behaviour of the objective function is well-represented by the sampled data. This assumption clearly breaks down when MGP is applied to optimize an objective function that is poorly explored. BayesOpt is a trade-off of exploitation and exploration, and MGP favours the former by increasing the complexity of the surrogate model, leading to a higher risk of overfitting and falling into local optima. MGP-based BayesOpt is definitely a good supplement to the GPR-based BayesOpt and may provide more accurate estimations in most cases. But the higher risk of overfitting implies it cannot be a complete replacement for GPR-based BayesOpt, especially when the input space is poorly sampled.

In practical optimization problems, it is advised to take enough samples using GPR before applying MGP to lower the risk of overfitting. One may also combine MGP and GPR together by switching back to GPR as the surrogate model every a few iterations of using MGP-based BayesOpt. This periodically decrease of model complexity makes a more robust optimization approach, having more accurate modelling while reducing the risk of overfitting. In our test where GPR is used every third iteration in the MGP-based BayesOpt, the phenomenon of being trapped in local optima is no longer observed and the combined method always outperforms the GPR-based BayesOpt.

5. Conclusion

This paper explained the motivations for replacing GPR by MGP in BayesOpt and provided a detailed introduction for implementing it. The method for finding the local experts, the alternative gating network, and the clustering-assisted method are proven to be successful by results in the experiment section. The MGP is a powerful candidate for achieving better regression performance than GPR. The BayesOpt based on it significantly outperforms the one using GPR especially for objective functions with larger input dimension. The only drawback of potential overfitting when optimizing with relatively few data can be addressed by more presampling or periodically switching back to GPR, making it an excellent supplement to the GPR-based BayesOpt. Despite the fact that MGP is computationally more expensive than a single GPR, it is still much faster to train than most machine learning algorithms and may significantly boost the efficiency of BayesOpt. Also, many steps in the EM can be trained in parallel, including the evaluation of the probability density for each data point. A fully optimized algorithm of MGP can undoubtedly speed up its implementation, providing a higher application value.

The methods are all explained with details, and the results are clearly illustrated and analysed to be satisfying. Hopefully, future works may be developed on improving the MGP or on finding more applications for it.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Authors’ Contributions

R.M. contributed the central idea and the major part of the software, carried out part of the experiment and analysis, revised the manuscript, and finalized this paper; C.C. provided the data, contributed part of the software, and completed the rest part of the experiment and analysis. The remaining authors equally contributed to refining the idea and designing the experiment. All authors discussed the results and wrote the initial draft of this paper.

Open Research

Data Availability

All data, models, and code generated or used during the study appear in the submitted article.