Analysis and Research on Nonlinear Complex Function Approximation Problem Based on Deep Learning

Abstract

Shallow models have limited ability to express high-dimensional nonlinear complex functions. Based on deep learning, this paper proposes a method for analyzing nonlinear complex function approximation built on a Gaussian radial basis function neural network (Gaussian-RBFNN). The proposed method can approximate single-variable and binary nonlinear complex functions with an approximation error of less than 0.1, which achieves the desired approximation effect. The method is then applied to a nonlinear complex stock price prediction problem, which further verifies its effectiveness and practicality. Compared with the BP neural network model, the proposed model performs better in average training time, training standard error, RATT, RATR, and TRMSE. The RBFNN needs only 50 training iterations, whereas the traditional BP network needs 7100 iterations to converge gradually. In the practical application test, training stabilizes after 275 iterations, and the predicted values are closer to the actual values. Therefore, the model can be used to analyze practical nonlinear complex function approximation problems.

1. Introduction

With the development of intelligent technology and other advanced technologies, product functions are becoming increasingly complex, so the response times and parameters of the corresponding product functions show nonlinear, complex, many-to-many characteristics. Traditional shallow mathematical models cannot meet the modeling needs of such nonlinear systems. For example, the BP neural network model and network models based on the support vector machine (SVM) contain only a hidden layer of computing nodes. They can express simple nonlinear mapping relationships with good effect. However, when the training sample is small, the expressive ability of these models is limited [1, 2]. To solve this problem, some scholars have tried to improve the traditional shallow mathematical models so that they better approximate and express high-dimensional nonlinear complex functions. Xingqiang Chen and Yao Lu et al. used the genetic algorithm (GA) to optimize BP neural network parameters, which improved the prediction accuracy of the model; its validity was demonstrated in ship track prediction and CMT weld deformation prediction [3, 4]. Xu Ying et al. proposed a BP neural network method improved with beetle antennae search and verified its effectiveness by applying it to predict multifactor gas explosion pressure. The results show that this method improves the ability of the BP neural network to model nonlinear systems with complex many-to-many features [5]. Razaque Abdul et al. combined the radial basis function with SVM to propose SVM-RBF and SVM-linear variant prediction methods, which improved the reliability and fault tolerance of the SVM model and gave it a better approximation effect on nonlinear function problems [6]. To improve the classification accuracy of the SVM model, Zhang Xiaoyan et al. proposed an improved SVM classification algorithm. The results show that this algorithm can address the low classification accuracy of SVM on nonlinear complex problems and provides a theoretical reference for analyzing nonlinear complex function approximation problems [7]. Tian-bing Ma et al. used the particle swarm optimization (PSO) algorithm to improve the SVM model; features of each IMF component are extracted and fused, which improves classification and recognition accuracy [8]. Huang Wei, Dai Jianhua, et al. applied nonlinear functions to GDP prediction and achieved good results, which also laid the foundation for this study [9–13]. These works provide a reference for applying traditional shallow mathematical models to the analysis of complex nonlinear problems. However, compared with deep learning models, the improved shallow models still have limitations in processing and analyzing nonlinear complex problems. Therefore, based on the RBFNN model with the Gaussian function as the kernel, a Gaussian-RBFNN nonlinear complex function approximation method is proposed.

2. RBFNN Model

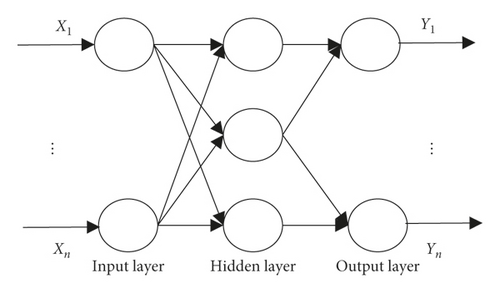

RBFNN is an artificial neural network that can approximate any nonlinear complex continuous function to arbitrary accuracy. It has the advantages of fast convergence and a unique best approximation [14], which avoids the local-minimum problem. The basic structure of RBFNN, shown in Figure 1, consists of an input layer, a hidden layer, and an output layer.

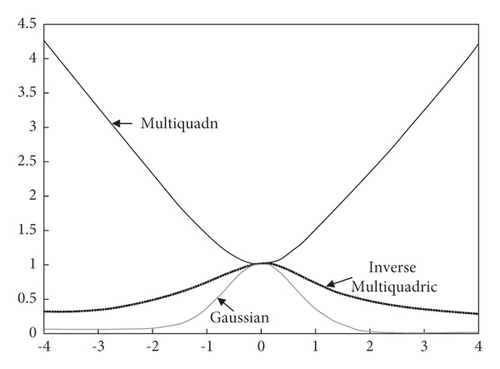

The radial basis functions commonly used in RBFNN are mainly the Gaussian, multiquadric, and inverse multiquadric kernel functions; their distributions are shown in Figure 2 [15]. As the distance from the center increases, the multiquadric function increases monotonically, while the Gaussian and inverse multiquadric functions decrease monotonically. A monotonically decreasing function takes a large value when the variable is close to the function center and tends to 0 otherwise. Therefore, monotonically decreasing functions have good local characteristics [16], and they are usually chosen as the RBFNN basis function. The Gaussian function has a simple form and good resolution [17]. For these reasons, this paper uses it as the basis function of RBFNN.
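
The monotonicity of the three kernels can be checked numerically. The following is a minimal sketch that assumes the standard textbook forms of the kernels; the shape parameter `c` and the function names are illustrative assumptions, since the exact parameterization used in this paper is not stated here.

```python
import numpy as np

def gaussian(r, c=1.0):
    """Gaussian kernel exp(-r^2 / (2 c^2)); c is an assumed shape parameter."""
    return np.exp(-(r ** 2) / (2.0 * c ** 2))

def multiquadric(r, c=1.0):
    """Multiquadric kernel sqrt(r^2 + c^2)."""
    return np.sqrt(r ** 2 + c ** 2)

def inverse_multiquadric(r, c=1.0):
    """Inverse multiquadric kernel 1 / sqrt(r^2 + c^2)."""
    return 1.0 / np.sqrt(r ** 2 + c ** 2)

# Distances from the center, sampled on [0, 5].
r = np.linspace(0.0, 5.0, 51)
g, mq, imq = gaussian(r), multiquadric(r), inverse_multiquadric(r)

# Sign of successive differences: negative means decreasing in r.
print("Gaussian decreasing:", bool(np.all(np.diff(g) < 0)))
print("Multiquadric increasing:", bool(np.all(np.diff(mq) > 0)))
print("Inverse multiquadric decreasing:", bool(np.all(np.diff(imq) < 0)))
```

Under these assumed forms, only the Gaussian and inverse multiquadric kernels are largest at the center and decay with distance, which is the local behavior the text relies on.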

3. Nonlinear Complex Function Approximation Based on Gaussian-RBFNN

3.1. Multidimensional Gaussian-RBFNN Interpolation Approximation

When $A$ is large enough, $W_a(x, A)$ can approximate $W_e(x, A)$ and thus the dataset $(x_i, f_i) \in \mathbb{R}^{k+1}$, $i = 0, 1, \ldots, n$.

Theorem 1. When $x_i \neq x_j$ for $i \neq j$, the dataset $(x_i, f_i)$, $x_i \in [a, b]^k$, $f_i \in \mathbb{R}$, $i = 0, 1, \ldots, n$, determines $W_e(x, A)$ and $W_a(x, A)$. For any $\varepsilon > 0$ and $x \in [a, b]^k$, there exists a sufficiently large positive real number $A'$ such that, when $A > A'$, there is

Proof that $W_e(x, A)$ exists:

According to Lemma 1 [20, 21], when $\phi(0) = 1$ and $\phi(x)$ is a monotonically decreasing function on the interval $[0, +\infty)$, there is

Therefore, for $m_{ij}$ ($i \neq j$) and any $\varepsilon > 0$, there is

Thus, there exists $A_{ij} > 0$ such that, when $A > A_{ij}$, there is

Therefore, when , there is

Considering that $f(x) = x/(1 - x)$ is a monotonically increasing function on the interval $(-\infty, 1)$ [22], there are

Therefore, it can be inferred that when $x_i \neq x_j$ for $i \neq j$, the dataset $(x_i, f_i)$, $x_i \in [a, b]^k$, $f_i \in \mathbb{R}$, $i = 0, 1, \ldots, n$, determines $W_a(x, A)$ and $W_e(x, A)$. For any $\varepsilon > 0$, there exists a sufficiently large positive real number $A'$ such that, when $A > A'$, $|f_i - W_a(x, A)| < \varepsilon$.
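
The role of a large $A$ can be illustrated numerically. The sketch below is an assumption-laden stand-in, not the paper's construction: the definitions of $W_e$ and $W_a$ are not reproduced in this section, so `exact_interpolant` (weights obtained by solving the interpolation system) and `approx_interpolant` (data values used directly as weights) are hypothetical readings, and the kernel $\exp(-A\|x - x_i\|^2)$ is likewise assumed.

```python
import numpy as np

def gaussian_matrix(x, centers, A):
    """Kernel matrix with entries exp(-A * ||x_p - c_q||^2) (assumed form)."""
    d2 = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-A * d2)

def exact_interpolant(centers, f, A):
    """Hypothetical stand-in for W_e: solve Phi w = f for the weights."""
    w = np.linalg.solve(gaussian_matrix(centers, centers, A), f)
    return lambda x: gaussian_matrix(x, centers, A) @ w

def approx_interpolant(centers, f, A):
    """Hypothetical stand-in for W_a: use the data values f as weights."""
    return lambda x: gaussian_matrix(x, centers, A) @ f

# Six distinct nodes in [0, 1] (k = 1) with illustrative data values.
centers = np.linspace(0.0, 1.0, 6).reshape(-1, 1)
f = np.sin(2.0 * np.pi * centers[:, 0])

def node_error(A):
    """Largest |f_i - W_a(x_i, A)| over the nodes."""
    return np.max(np.abs(approx_interpolant(centers, f, A)(centers) - f))

errors = [node_error(A) for A in (10.0, 100.0, 1000.0)]
print(errors)  # the error at the nodes shrinks as A grows
```

As $A$ grows, the off-diagonal kernel entries decay toward 0, so the kernel matrix approaches the identity and the two constructions agree at the nodes. This matches the spirit of the argument above under the stated assumptions.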

3.2. Gaussian-RBFNN Approximating Nonlinear Complex Function

- (1) Sample the nonlinear complex function from 0 to 5 at intervals of 0.1.

- (2) Calculate the sample function values and establish the Gaussian-RBFNN model.

- (3) Divide the sample set into a training set and a testing set. The training set is used to train the model until the error falls below the set threshold or no longer changes, and the model is then saved. If, after the maximum number of iterations is reached, the error is still greater than the set threshold or is still decreasing, the network structure is adjusted (for example, the number of hidden layers and the number of neurons in each hidden layer), and training continues until the requirements are met.

In net1, p and t are the input and output vectors, respectively, goal is the target sum of squared errors, and spread is the spread constant of the radial basis functions. In net2, p is the input vector, n is the number of hidden layer nodes, tansig is the transfer function of the hidden layer, purelin is the transfer function of the output layer, and trainlm is the learning algorithm.
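
The three steps above can be sketched in Python with NumPy. This is an illustrative stand-in, not the MATLAB toolbox procedure used in the paper: the weights are fitted by linear least squares with one Gaussian unit per training sample, the even/odd train/test split and `spread = 0.3` are assumptions, and the target function is an assumed reading of the single-variable test function from Section 4.5.1.

```python
import numpy as np

def target(x):
    # Assumed reading of the test function: 7 sin(3x) + 0.2 x^2 + 4.5.
    return 7.0 * np.sin(3.0 * x) + 0.2 * x ** 2 + 4.5

def design_matrix(x, centers, spread):
    """Gaussian hidden-layer outputs for inputs x and the given centers."""
    return np.exp(-((x[:, None] - centers[None, :]) ** 2) / (2.0 * spread ** 2))

# Step (1): sample the interval [0, 5] every 0.1 (51 points).
x = np.linspace(0.0, 5.0, 51)

# Step (2): compute the sample function values.
y = target(x)

# Step (3): split into training and testing sets (even/odd split is assumed).
x_train, y_train = x[::2], y[::2]
x_test, y_test = x[1::2], y[1::2]

# Fit the output weights by least squares; one Gaussian unit per training point.
spread = 0.3  # assumed width, analogous in role to the `spread` parameter
Phi = design_matrix(x_train, x_train, spread)
weights, *_ = np.linalg.lstsq(Phi, y_train, rcond=None)

# Compare the errors against the 0.1 threshold mentioned in the text.
threshold = 0.1
train_error = np.max(np.abs(Phi @ weights - y_train))
test_error = np.max(np.abs(design_matrix(x_test, x_train, spread) @ weights - y_test))
print("training error below threshold:", bool(train_error < threshold))
print("testing error below threshold:", bool(test_error < threshold))
```

Using one hidden unit per training sample mirrors the neuron counts reported for the RBF network in Table 1, but an incremental scheme that adds neurons until the error goal is met would be closer to the toolbox behavior.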

4. Simulation Experiment

4.1. Construction of Experimental Environment

This experiment uses the Neural Network Toolbox in the MATLAB 7.0 environment to build the simulation experiment of nonlinear complex function approximation. The experiment is carried out on a 64-bit Windows 7 operating system with a Centrino2D 2.1 GHz CPU, a Tesla K80 GPU, and 16 GB of memory.

4.2. Data Sources and Preprocessing

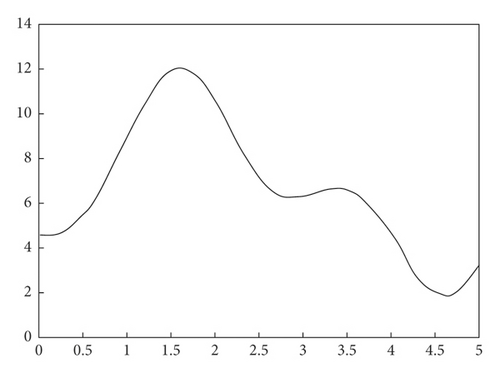

The nonlinear function is to be approximated.

4.3. Parameter Settings

Set the activation function of the proposed model as .

4.4. Evaluation Indexes

4.5. Experimental Results

4.5.1. Results of Single-Variable Nonlinear Function Approximation

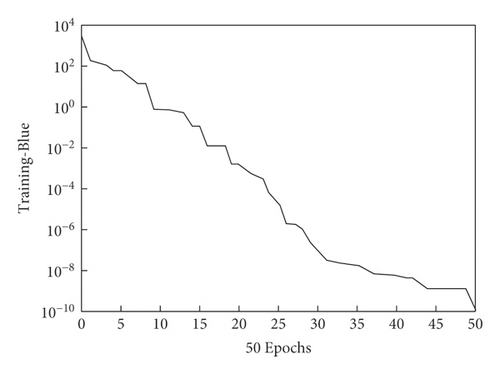

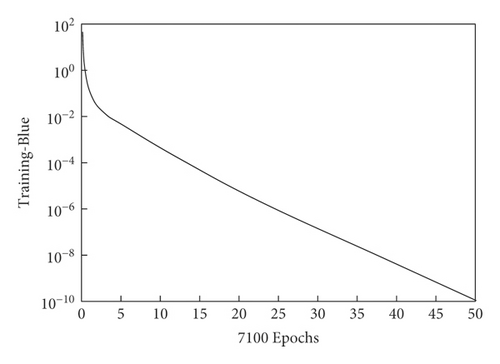

(1) Convergence Speed. The training processes of the proposed model and the BP neural network model when approximating the single-variable nonlinear function $y = 7\sin 3x + 0.2x^2 + 4.5$ are shown in Figure 4. The proposed model needs only 50 steps to complete training, whereas the BP neural network model needs 7100 steps. In addition, the accuracy of the proposed model is slightly higher than that of the BP neural network model. Thus, the proposed model converges faster than the BP network model when approximating nonlinear functions.

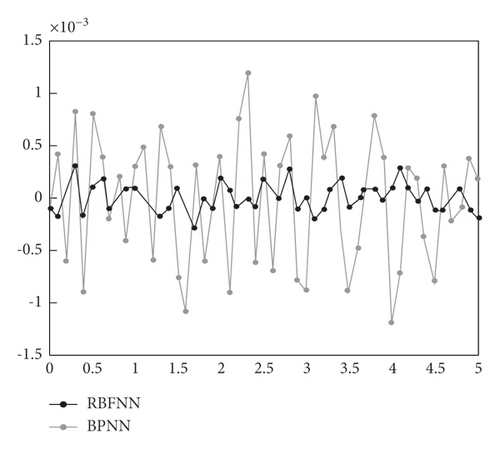

(2) Approximation Ability. Figure 5 compares the errors of the proposed model and the BP model during training. The simulation error of the proposed model is close to 0, while the simulation error of the BP neural network model oscillates with a significantly larger amplitude. Furthermore, the approximation accuracy of the two models on the training samples is $10^{-3}$. Thus, the proposed model has better approximation ability on the training samples, almost reaching complete approximation.

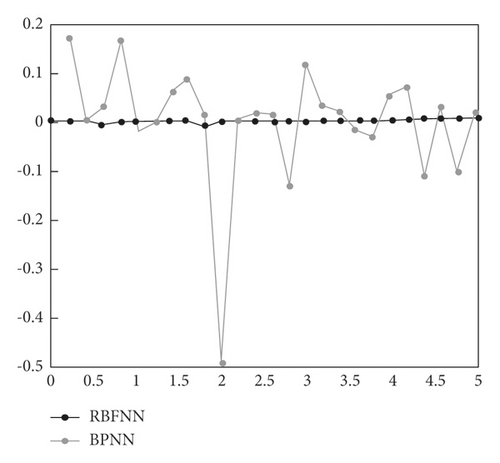

Figure 6 compares the errors of the proposed model and the BP neural network model when approximating nontraining samples. The proposed model approximates the nontraining samples almost completely, with an overall simulation error of less than 0.1. In contrast, the error of the BP neural network model fluctuates widely, and its maximum error is close to 0.5. This shows that the proposed model has significantly better approximation ability on nontraining samples.

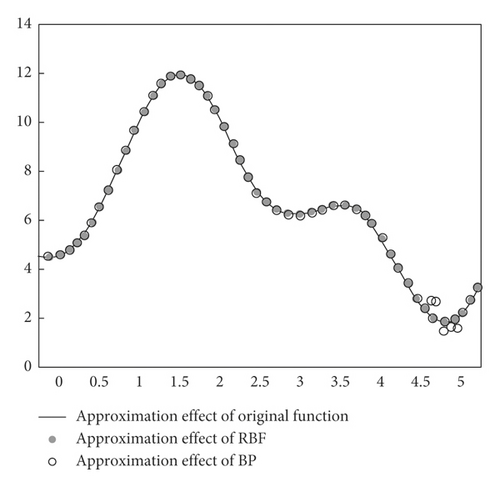

The proposed model and the BP model are both used to approximate $y = 7\sin 3x + 0.2x^2 + 4.5$ over the whole interval, and the results are shown in Figure 7. The output of the proposed model is entirely consistent with the function, whereas the BP model deviates from it on some segments. Therefore, the overall approximation of the proposed model is better than that of the BP neural network model.

(3) Network Structure. The number of hidden layer neurons is a main index to measure the complexity of the network structure. To compare the network structure of the proposed model and the BP neural network model, different training sample numbers are used to construct the proposed model and its comparison model, and the number of neurons of the proposed model and the comparison model is shown in Table 1. Here, under the same number of training samples, the number of hidden layer neurons of the proposed model is higher than that of the BP neural network model. It means that when training the same samples, the network structure of the proposed model is more complex than that of the BP neural network model.

| Sample number | RBF neuron number | BP neuron number |
|---|---|---|
| 21 | 21 | 13 |
| 35 | 35 | 24 |
| 41 | 41 | 20 |
| 67 | 67 | 22 |
| 85 | 88 | 35 |

Therefore, the proposed model can well approximate the single-variable nonlinear function with high approximation accuracy, and the overall approximation error is less than 0.1.

4.5.2. Approximation Results of Binary Nonlinear Functions

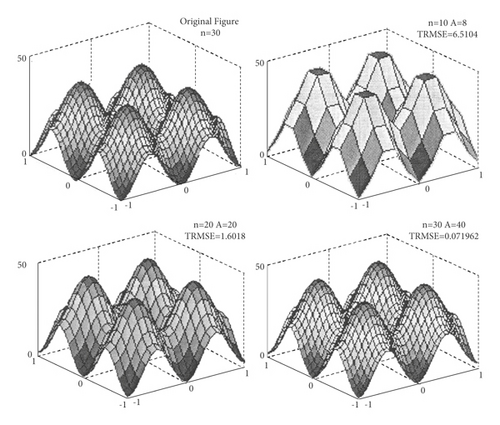

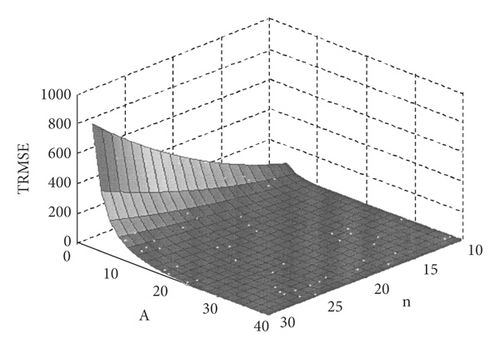

Figure 8 shows the uniform approximation results of the proposed model for the binary nonlinear function $f_2(x, y) = x^2 + y^2 - 10(\cos 2\pi x + \cos 2\pi y) + 20$. Figure 9 shows the relationship between $A$, $n$, and TRMSE when the proposed model approximates $f_2(x, y)$. The proposed model approximates this binary nonlinear complex function well: as $A$ and $n$ increase, the model converges uniformly to $f_2(x, y)$.
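
The binary test function has the form of the two-dimensional Rastrigin function, whose many local oscillations make it a demanding approximation target. The sketch below evaluates it on a grid; the grid range $[-1, 1]^2$ and the resolution are assumptions, since the sampling domain is not stated in this section.

```python
import numpy as np

def f2(x, y):
    """Binary test function: x^2 + y^2 - 10(cos 2*pi*x + cos 2*pi*y) + 20."""
    return x ** 2 + y ** 2 - 10.0 * (np.cos(2.0 * np.pi * x) + np.cos(2.0 * np.pi * y)) + 20.0

# Assumed sampling grid on [-1, 1] x [-1, 1] with 41 points per axis.
axis = np.linspace(-1.0, 1.0, 41)
X, Y = np.meshgrid(axis, axis)
Z = f2(X, Y)

print("value at the origin:", f2(0.0, 0.0))
print("smallest and largest grid values:", Z.min(), Z.max())
```

The function is non-negative, with its global minimum of 0 at the origin, while the cosine terms create a dense pattern of local minima around it.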

Table 2 compares the approximation performance of the proposed model and the BP neural network on the binary nonlinear complex function $f_2(x, y)$. The proposed model outperforms the BP neural network model in average training time, training standard error, RATT, RATR, and TRMSE, which indicates that it has better approximation performance.

| Model | Training time | RATT | Test time | TRMSE | Dev | RATR | Parameters |
|---|---|---|---|---|---|---|---|
| RBFNN | 0.11875 | 1 | 0.115625 | 0.00375471 | 0 | 1 | n = 20, A = 40 |
| BPNN | 1.095313 | 9.223684 | 0.010938 | 0.07008864 | 0.04897677 | 18.66685 | Node = 60 |
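
The two ratio columns in Table 2 appear to be normalized to the RBFNN row. This reading is inferred from the reported values rather than from a stated definition: dividing the BPNN training time and TRMSE by the corresponding RBFNN values reproduces RATT and RATR up to rounding, as the short check below shows.

```python
# Values copied from Table 2.
rbfnn = {"train_time": 0.11875, "trmse": 0.00375471}
bpnn = {"train_time": 1.095313, "trmse": 0.07008864}

# Assumed definitions: each ratio is the model's value divided by RBFNN's.
ratt = bpnn["train_time"] / rbfnn["train_time"]
ratr = bpnn["trmse"] / rbfnn["trmse"]

print("RATT (BPNN relative to RBFNN):", ratt)
print("RATR (BPNN relative to RBFNN):", ratr)
```

Under this reading, the BP network takes roughly nine times longer to train and has a TRMSE roughly nineteen times larger than the proposed model.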

5. Application Analysis of the Gaussian-RBFNN Model

5.1. Data Source and Preprocessing

To verify the practical effect of the proposed method, it is used to approximate, and thereby predict, stock prices. The Shanghai Composite Index over 300 consecutive trading days from April 1, 2018, to September 6, 2019, is selected as the experimental sample; the closing price is the prediction object, and the prediction period is 5 days [27]. The dataset is divided into a training set of 290 samples and a testing set of 10 samples.
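
One way to prepare such a series for a network is sketched below. Several points are assumptions rather than the paper's procedure: the 5-day prediction period is read here as using the previous 5 closing prices to predict the next one, the helper `make_windows` is hypothetical, and the `closing` array holds placeholder values, not real Shanghai Composite Index data.

```python
import numpy as np

def make_windows(series, lag=5):
    """Return (inputs, targets): each target is preceded by `lag` values."""
    series = np.asarray(series, dtype=float)
    inputs = np.stack([series[i:i + lag] for i in range(len(series) - lag)])
    targets = series[lag:]
    return inputs, targets

# Placeholder values only; NOT real index data. Replace with the 300 closing prices.
closing = np.arange(300, dtype=float)

# Chronological split: first 290 samples for training, last 10 for testing.
train_prices, test_prices = closing[:290], closing[290:]

# Lagged training inputs and targets built from the training portion only.
X_train, y_train = make_windows(train_prices, lag=5)
print(X_train.shape, y_train.shape)
```

Splitting chronologically, rather than at random, keeps future prices out of the training data, which matters for any time-series prediction task.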

5.2. Construction of the Stock Prediction Model

5.3. Results and Analysis

5.3.1. Analysis of Prediction Accuracy

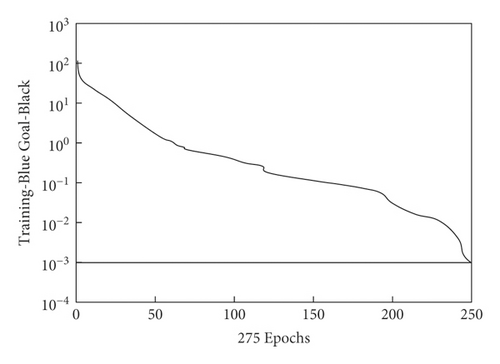

Figure 10 shows the trend of the training error when the proposed model predicts stock prices. The training error of the proposed model falls below 0.1, which meets the required prediction accuracy. The model completes training in 275 steps, which indicates fast convergence. Therefore, the proposed model satisfies the requirements for both prediction accuracy and convergence speed.

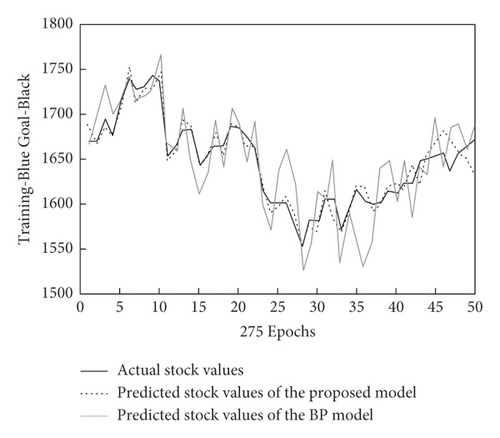

5.3.2. Comparison of Prediction Effects

The effectiveness of the proposed method in practical application is further verified by comparing the prediction results for the closing price of the stock exchange index over 50 trading days from June 29, 2018, to September 6, 2018. The results are shown in Figure 11. Compared with the comparison model, the predicted values of the proposed model are closer to the actual values, and the predicted curve fits the actual curve better. Thus, the proposed model has higher prediction accuracy and a better prediction effect.

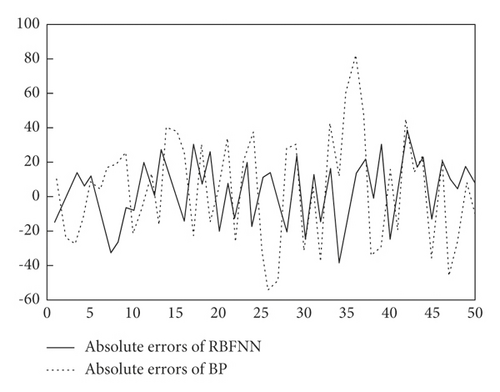

Figure 12 compares the absolute errors of the proposed model and the BP neural network model when predicting the closing price of the Shanghai Composite Index over 50 trading days from June 29, 2020, to September 6, 2020. The absolute error of the proposed model fluctuates within a small range: about 72% of the error values fall in the interval [-20, 20], and the magnitude of the largest error is less than 40, which basically achieves the desired prediction effect. In contrast, the absolute error of the BP neural network model fluctuates over a larger range. Its errors are concentrated mainly in [-40, -20] and [20, 40], which account for 48% of the total; a few errors lie in [-60, -40] and [40, 60], and one error exceeds 80, which means that its prediction effect is poor. Thus, the proposed model is effective and superior in practical application.

6. Conclusion

To sum up, the proposed Gaussian-RBFNN model can approximate single-variable and binary nonlinear complex functions with good effect. Compared with the BP neural network model, the proposed model performs better in average training time, training standard error, RATT, RATR, and TRMSE. It also trains faster and approximates more accurately: the approximation error is less than 0.1, which achieves the desired approximation effect. In the practical stock prediction application, the predicted values of the proposed model fit the actual values well, and the training absolute error is less than 0.1, which meets the required prediction accuracy. Compared with the BP neural network model, the proposed model has advantages in both prediction accuracy and convergence speed. Moreover, this work provides a new idea and reference for applying deep learning to the analysis of nonlinear complex function problems. Although some research results have been obtained, limitations remain because of the conditions of the study. Only the Gaussian-RBFNN model is studied here, and the prediction effect of RBFNN models with other basis functions is not considered. Therefore, future research will explore this aspect in depth to find an optimal nonlinear complex function approximation method.

Conflicts of Interest

The authors declare that they have no conflicts of interest regarding this work.

Open Research

Data Availability

The experimental data used to support the findings of this study are available from the corresponding author upon request.