EEG Channel Selection Using Multiobjective Cuckoo Search for Person Identification as Protection System in Healthcare Applications

Abstract

Recently, the electroencephalogram (EEG) signal presents an excellent potential for a new person identification technique. Several studies defined the EEG with unique features, universality, and natural robustness to be used as a new track to prevent spoofing attacks. The EEG signals are a visual recording of the brain’s electrical activities, measured by placing electrodes (channels) in various scalp positions. However, traditional EEG-based systems lead to high complexity with many channels, and some channels have critical information for the identification system while others do not. Several studies have proposed a single objective to address the EEG channel for person identification. Unfortunately, these studies only focused on increasing the accuracy rate without balancing the accuracy and the total number of selected EEG channels. The novelty of this paper is to propose a multiobjective binary version of the cuckoo search algorithm (MOBCS-KNN) to find optimal EEG channel selections for person identification. The proposed method (MOBCS-KNN) used a weighted sum technique to implement a multiobjective approach. In addition, a KNN classifier for EEG-based biometric person identification is used. It is worth mentioning that this is the initial investigation of using a multiobjective technique with EEG channel selection problem. A standard EEG motor imagery dataset is used to evaluate the performance of the MOBCS-KNN. The experiments show that the MOBCS-KNN obtained accuracy of 93.86% using only 24 sensors with AR20 autoregressive coefficients. Another critical point is that the MOBCS-KNN finds channels not too close to each other to capture relevant information from all over the head. In conclusion, the MOBCS-KNN algorithm achieves the best results compared with metaheuristic algorithms. Finally, the recommended approach can draw future directions to be applied to different research areas.

1. Introduction

Over many years, our universe has transferred to a digital community in which each person is living with a particular digital identifier. Indeed, there are several kinds of identifiers, such as identification cards and passwords. Meanwhile, they can be easily circumvented, stolen, and forgotten [1]. Therefore, personal behavior or characteristics can be used to strengthen identification systems. Such techniques, the so-called biometrics, make use of several pieces of in-person information to allow more robust identification systems, such as face and voice recognition, fingerprint information, and iris data [2]. The motivation of using EEG and body sensors in healthcare systems has been an interesting area for many researchers [3–5].

On the other hand, the widespread and influential deployment of biometric systems leads to a new challenge, which is called “spoofing” [1, 6–8]. Such type of attack is classified as the most dangerous in security systems since it is designed to break the biometric systems’ security, thus allowing unwarranted persons to get admission to the system [2].

In real life, there have already been several spoofing attacks on the biometrics systems, such as face spoofing (printed photos and 3D mask attack [9–12]), fake fingerprints (gummy fingers), finger-vein systems fooled through a piece of paper [13], iris recognition systems fooled by an eyeball opposite to the scanner of iris, and voice recognition fooled through replaying a voice recording opposite to the recognition system speaker [14]. Therefore, people are looking for biometric authentication systems that can grant access to a person based on invisible characteristics, thus becoming harder to be attacked by an external threat. In this context, one shall refer to user authentication based on brain signals, which can be captured by the well-known electroencephalogram (EEG) exam [15].

The EEG signals appear as a great alternative for designing new biometric systems since several studies showed that such information presents uniqueness features, universality, and natural robustness to spoofing attacks [1, 16]. These signals represent the graphical recording of the brain electrical activity, which can be measured by placing electrodes (sensors) in different positions of the scalp [1, 17–20]. EEG channel selection is totally dependent on the characteristics of the EEG signal, where the most informative features that provide the highest accuracy rate from the channel selection shall be determined.

Multiobjective optimization algorithms have been applied in different methodological phases and criteria [21, 22]. In a multiobjective formulation of the feature selection problem, the possible features define the vector of decision variables. The space of features in many classification problems is usually very large [23]. In [24, 25], the authors proposed new methods for feature selection, based on a multiobjective evolutionary algorithm, which managed to select a small subset of features, trying to avoid the overfitting problems and reduce classification problems in high-dimensional feature space. In the same direction, the feature selection problem has been also solved by an embedded multiobjective genetic optimization procedure, subject to the simultaneous minimization of the misclassification ratio and number of selected attributes [26, 27]. Multiobjective optimization algorithms have been also used in the deionizing stage of EEG signal. In [28], an approach has been adopted in the signal deionizing stage to model decomposition and present two metrics to quantify the amount of EEG information lost during the cleaning process. Furthermore, in [7], the authors proposed a novel method for extracting unique features from the original EEG signals using the multiobjective flower pollination algorithm and the wavelet transform.

- (1)

To evaluate binary cuckoo search algorithm (BCSA) for EEG-based person identification

- (2)

To model the problem of EEG channel selection as an evolutionary-based optimization task

- (3)

To propose a multiobjective technique combined with the KNN classifier for EEG-based biometric person identification

We use evolutionary optimization algorithms for the EEG channel selection due to their efficiency when solving challenging real-world problems and their simplicity. The performance of the proposed method (MOCS-KNN) is compared with other seven multiobjective metaheuristic algorithms (binary PSO (MOPSO-KNN), binary GWO (MOGWO-KNN), binary FFA (MOFFA-KNN), binary BAT (MOBAT-KNN), binary MVO (MOMVO-KNN), binary WOA (MOWOA-KNN), and binary MFO (MOMFO-KNN)). The comparison includes five measures, which are accuracy ratio (EEGACC), channels selected (EEGLen), Specificity (EEGPrecision), F-score (EEGFscore), and Recall (EEGRecall). The results show that the proposed technique (MOCS-KNN) is able to outperform other metaheuristic algorithms in almost all results produced.

The remainder of this paper is organized as follows: the related works about a number of EEG-based identification techniques are reviewed in Section 2. A discussion about cuckoo search algorithm is presented in Section 3. The proposed method is provided in Section 4. Results are discussed in Section 5, and Section 6 provides conclusions and future work.

2. Related Works

This section presents an overview of previous studies related to using optimization algorithms in the BCI applications. Several works have been proposed based on optimization algorithms for tackling issues relevant to person identification case study. According to [1, 30], the number of selected channels can effect positively the accuracy of classification task. The authors especially addressed the problem of reducing the number of required sensors while maintaining a comparable performance. They have achieved significant results by obtaining very good person identification rates using much fewer channels. In [1], the authors proposed a binary version of the flower pollination algorithm under different transfer functions to select the best subset of channels that maximizes the accuracy. In [31], the authors proposed a genetic algorithm to reduce the number of necessary electrodes for measurements by EEG devices. The results were encouraging, and it was possible to accurately identify a subject using about 10 out of 64 electrodes. The Authors in [16] proposed hybrid optimization techniques based on binary flower pollination algorithm (FPA) and β-hill climbing (called FPA β-hc) for selecting the most relative EEG channels (i.e., features) that come up with efficient accuracy rate of personal identification. The proposed method is able to identify persons with high Acc, Sen, F_s, and Spe and less number of channels selected. However, these studies have concentrated only on the number of channels as baseline for optimization process.

In [7], the authors have proposed novel method for EEG signal denoising based on the multiobjective flower pollination algorithm. They designed a multiobjective function that considers a balance between reducing the EEG noise and keeping its signal energy. In [32], the authors proposed multiobjective optimization method for optimal electroencephalographic (EEG) channel selection to provide access to subjects with permission in a system by detecting intruders and identifying the subject. The optimization process was performed by the nondominated sorting genetic algorithm (NSGA). The optimization process consists of finding the best nu and gamma for the SVM with the RBF kernel to increase the TAR, TRR, and accuracy of subject identification or maintain them as high as possible for previous configurations, while using the smallest number of possible EEG channels. However, the optimization process is restricted within SVM hyperparameters, and it is hard to generalize for another study, especially when using different classifiers where each classifier has its own characteristics and hyperparameters.

To summarize, for person identification within optimization algorithms, two schemes have been observed: first, optimization scheme based on single objective criteria, mainly channel selection; second, optimization scheme based multiobjective criteria such as channel selection, EEG noise, and classifiers hyperparameters. Bioinformatics applications frequently involve classification problems that require improving the learning accuracy [33]. According to [32], certain aspects need to be analyzed and improved before reaching an industrial level application of new biometric systems. One is person identification, which is an essential security layer in any secure system. This is also important for the development of portable low-density EEG devices that retain similar accuracy to high-density EEG. Thus, the accuracy of person identification is very important aspect. To the best of our knowledge, on the one hand, this is the first study to present multiobjective optimization based on the number of channels and the classification accuracy weights as baseline for optimization process. On the other hand, this is the first study to implement and test eight optimization algorithms in order to generalize the best algorithm that can be adopted for the person identification task.

3. Cuckoo Search Algorithm

- (i)

Each cuckoo chooses only one nest to lay one egg in that nest.

- (ii)

The high quality egg in the best nest is marked to be used in the next generations.

- (iii)

Since the number of hosting nests is predetermined in advance, the host cuckoo can discover that the eggs in the nest are not its own eggs with a probability of pa where pa ∈ (0,1). In this case, the host birds either throw the foreign eggs or abandon the nest and rebuild another one in different place.

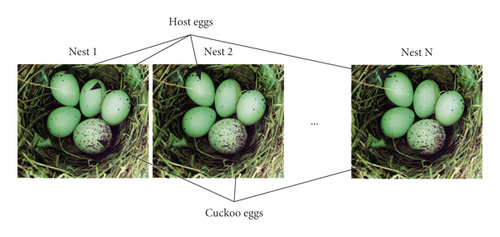

In CS algorithm, the eggs in each nest represent the set of solutions while the cuckoo eggs represent the new solution (see Figure 1). The quality of eggs in the nest is the fitness function of that solution. The ultimate aim is to replace the eggs in a nest with potentially better cuckoo eggs. The cuckoo frequently changes its position using Levy flights after leaving nest. The host bird can throw the cuckoo eggs or leave the nest when cuckoo eggs are discovered. The flowchart of the CS algorithm is given in Figure 1. The pseudocode of CS algorithm is given in Algorithm 1. Table 1 shows the local and global search parameters of CS algorithm. The discussion below provides procedural steps of the CS algorithm.

| Parameter | Description |

|---|---|

| The next position | |

| The current position selected randomly at the position k | |

| The current position selected randomly at the position j | |

| α | Positive step size scaling factor |

| s | Step size |

| ⊗ | Entrywise product of two vectors |

| H | Heaviside function |

| pa | Used to switch between local and global random walks |

| ε | Random number from uniform distribution |

| L(s, λ) | distribution, used to define the step size of random walk |

-

Algorithm 1: The CS algorithm pseudocode.

- (1)

Initialize the parameters of CS algorithm.

- (2)

Construct the initial host nest population HNP.

- (3)

Calculate the fitness of each host nest in the population (xi), where 0 ≤ i ≤ N.

- (4)

t = 1

- (5)

while termination criterion is not met do

- (6)

Global random walk using (3)

- (7)

Update the host nest population

- (8)

Local random walk using (5)

- (9)

end while

- (10)

Return the best solution.

-

Step 1: initialize CS algorithm parameters. Initially, the optimization problem is conventionally modeled in terms of objective function as follows:

(1) -

where f(x) is the objective function to evaluate the quality of the solution x = (x1, x2, …, xd) of d decision variables. Each decision variable xi ∈ [LBi, UBi] in which LBi is the lower bound and UBi is the upper bound of variable xi, respectively. The parameters of the optimization problem are normally extracted from the datasets. The objective function is used to evaluate the solutions of the problem.

-

The CS parameters can be divided into two types: (i) algorithmic parameters such as maximum number of iterations and number of nests or population size; (ii) control parameter pa which is the discovery rate of alien eggs/solutions.

-

Step 2: initialize the host nest population (HNP). The host nest population is formulated as a matrix HNP of size N × d, where the N is the total number of eggs in the nests and d is the solution dimension. Each row in the HNP represents a solution as shown in the following equation:

(2) -

The objective function value f(xi) of each solution xi is also calculated.

-

Step 3: this step is also called global random walk. For each solution, xi(t) in the HNP is updated (i.e., xi(t + 1)) using Levy flights step as formulated in the following equation:

(3) -

where

(4) -

where α > 0 is the step size scale factor while s is the step size. Note that the step size is calculated based on the scale size of the optimization problem on hand [35–37]. The mathematical notation ⊕ refers to pairwise product operation. is the flights and is calculated based on heavy-tailed probability distribution formulated in (3). The random walk is represented in the stochastic equation (4). Γ() is the gamma function.

-

Step 4: update the host nest population. In order to update the HNP, each solution xi(t + 1), i ∈ (1, ldots, N) updated by flights step is compared with another randomly selected solution xj, xj(t) ∈ NHP. Thereafter, the solution xj(t) is replaced by solution xi(t + 1), if the latter is better.

-

Step 5: local random probability of Pa, each solution xi(t + 1), i ∈ (1, ldots, N) in the HTP(t + 1) is check for weather or not abandoned as follows:

(5) -

where xj(t) and xk(t) are two different randomly selected solutions and H(u) is the Heaviside function. ε is a function that generates a random number extracted from a uniform distribution, and s is the step size. For more clarifications about the CS algorithm convergence behavior, interesting papers can be referred to [34, 35, 38].

-

Step 6: stop criterion. Steps 3, 4, and 5 are repeated until the termination criterion; for example, the maximum number of iterations is met.

CS algorithm is successfully applied to solve several optimization problems like traveling salesman problem [39], economic load dispatch [40], face recognition [41], loading pattern optimization [42], data clustering [43], feature selection [44], short-term load forecasting [45], modeling proton exchange membrane fuel cells [46], electric distribution network reconfiguration [47], optimal reactive power scheduling [48], contrast enhancement of gray scale images [49], estimating peak particle velocity in mine blasting [50], systems of nonlinear equations [51], maximizing area coverage in wireless sensor networks [52], job scheduling [53], and others reported in [35, 54, 55].

However, the framework of the CS algorithm is modified and integrated with other components in order to improve its performance. These modifications include CS algorithm with adaptive parameters [56, 57], multiobjective CSA [45, 58], chaotic CSA [43, 59], binary CS algorithm [60, 61], CS algorithm with other metaheuristic algorithms [52, 62, 63], CS algorithm with other components [52, 64], and other improvements of CS algorithm [44, 51, 59, 65].

4. Methodology

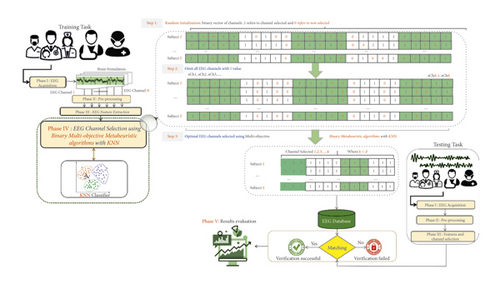

This section provides a full explanation of the methodology of the proposed MOCS with KNN classifier (MOCS-KNN) to solve EEG channel selection problem. Overall, the methodology has five phases. Figure 2 shows the flowchart of these phases. Phase I involved EEG signal acquisition task which has been done using 64 electrodes. Section 5.2 will provide more details about this phase. In Phase II, two conventional filters (band-pass and notch filter) were used to remove unwanted artifacts from the original EEG signal such as those used in [16], and then wavelet was applied to denoise the EEG signal as suggested in [17]. In Phase III, three autoregressive coefficients have been extracted from the denoised EEG signal as feature extraction data, that is, AR5, AR10, and AR20, which are suggested by Rodrigues et al. [1].

Phase IV is the main contribution of this work where a multiobjective cuckoo search algorithm for EEG channel selection is proposed. The following subsections explain in detail the proposed method.

4.1. Formulation of Multiobjective Approach

4.2. Transfer Function

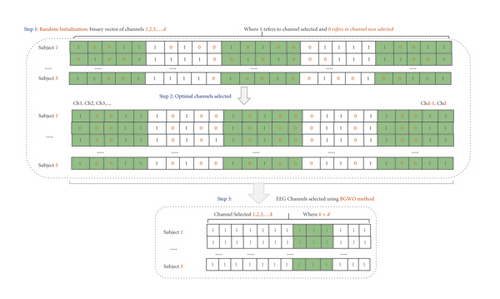

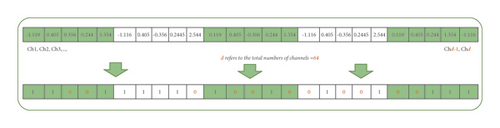

There are three steps that must be considered to select the optimal subset of channels. First, random initialization of the binary vector (representing the EEG channels) is conducted, where “1” means that a given channel will be selected and “0” indicates that the channel will not be selected. Later, the MOCS-KNN will start searching for the space to find the optimal subset of channels, i.e., the one that can provide the highest accuracy rate. Finally, we discard all channels with “0” values and keep the remaining ones.

4.3. Cuckoo Search Algorithm for EEG Channel Selection

-

Step 1: initialize MOCS parameters. The first step of adapting MOCS is initializing the EEG channel selection problem and MOCS parameters. The EEG channel selection problem parameters are CHn. MOCS parameters are the minimum (LB) and maximum (UB) ranges for the search agent, which are initialized to be 1 and 64, respectively, due to the total number of EEG channels, the number of search agents in the pack (N), and the maximum number of iterations (I).

-

Step 2: initialize MOCS population. In this step, all MOCS’s solutions are initialized and generated randomly to configure the population. Each solution represents a cuckoo in MOCS and contains the AR feature’s coefficient. Figure 4 presents a solution in MOCS-CS population (P). P contains 30 solutions as shown in the following equation:

(11) -

Step 3: objective function evaluation. As mentioned previously, each solution is evaluated based on the objective function in (8). After this evaluation, the best three solutions will be assigned to best cuckoo.

-

Step 4: update MOCS-KNN population. The population of BMOCS-KNN method will be updated in this step in an attempt to find better solutions and achieve the optimal EEG channel set. This updating can be done using (4). The updating mechanism starts by generating new solutions.

-

Step 5: check the stop criterion.

Steps 3 and 4 of MOCS-KNN are repeated until the stop criterion is met.

5. Result and Discussion

In this section, we discuss the details of the experiments used to assess the robustness of the proposed approach as well as the dataset employed in this work. Parameter setting and experimental setup are later discussed in Section 5.3, while Section 5.4 presents a comparison between the proposed approach, MOCS-KNN, and other metaheuristic algorithms.

5.1. The Performance of Traditional EEG Classification Methods

The main purpose of this section is to provide a brief idea about the performance of traditional machine learning classification approach used for EEG-based personal identification problem. The measurements used to evaluate the performance are the classification accuracy and the area under curve (AUC). The results obtained are summarized in Table 2 using three datasets. Several traditional EEG classification methods are experimented with: artificial neural networks (ANN), linear support vector machines, support vector machine with radial basis function (RBF-SVM), k-nearest neighbors (k-NN), decision tree (J48), optimum-path forest (OPF), Naive Bayes, and linear discriminant analysis (LDA). Based on the results reported, the KNN is able to achieve better classification accuracy for EEG-based personal identification problem. Therefore, the KNN is adopted for MOCS-EEG.

| Features | Number of channels | Accuracy (%) |

|---|---|---|

| AR5 | 64 | 87 |

| AR10 | 64 | 91 |

| AR20 | 64 | 92 |

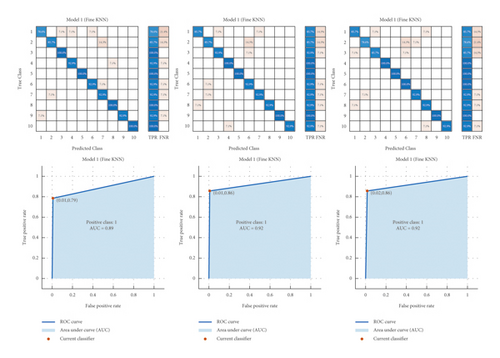

The area under curve (AUC) measures and confusion matrix are also visualized for KNN results in Figure 5.

5.2. Dataset

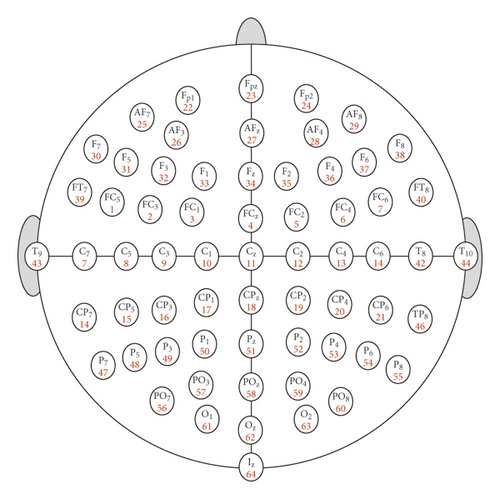

In this work, a standard EEG dataset [68] has been used for evaluating the proposed method (FPA β-hc). The brain signals of this dataset are collected from 109 subjects using brain-computer interface software called BCI2000 system [69]. The EEG signals are then acquired from 64 electrodes (i.e., channels), and each subject performs 12 motor/imagery tasks (i.e., 12 records of EEG signals for each subject). Further, AR features are extracted from these 12 recordings with three different number of coefficients: AR5, AR10, and AR20. To reduce the dispersion of the EEG patterns and to quickly process the extracted features, we compute the mean value of each electrode. Figure 6 shows the distribution of the electrodes of the EEG dataset used in the paper.

5.3. Experimental Setup

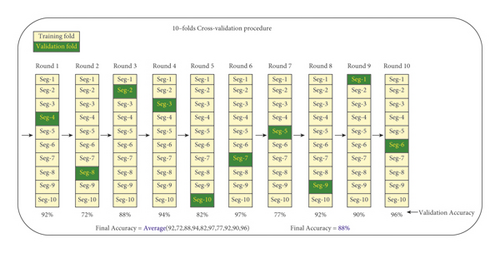

In this section, the performance of the proposed channel selection approach and other approaches was evaluated using three EEG signal datasets collected by applying autoregressive (AR) models according to three different coefficients. The solution representation in all channel selection approaches is represented by a vector that consists of a series of 1’s and 0’s, where “1” means that the channel is selected and “0” means that the channel is ignored. During classification process, the EEG signal data dimension will be reduced and formed solely according to those channels endorsed by channel selection approaches. On the other hand, the channels that were not endorsed will be removed from the original dataset. Afterwards, the classification process is applied here using 10-fold-cross-validation, where the reduced data is iteratively divided into 10 parts, where one part is considered as testing data and the remaining parts as training data. This process is repeated 10 times where the testing part is allocated new samples each time until all samples being covered. The 10-fold-cross-validation process is presented in Figure 7. In this work, the classification accuracy obtained by k-nearest neighbors (KNN) and the number of channels are used to design the multiobjective fitness function.

Table 3 shows the parameters used for selected metaheuristic algorithms in this work. With respect to CS, we need to define β, which is utilized for the computation of the Levy distribution and pa. pa stands for the probability of replacing worst nest by new constructed ones.

| Approach | Parameters |

|---|---|

| MOCS | β = 1.5, Pa = 0.25 |

| MOPSO | c1 = c2 = 2 |

| MOMVO | WEPMax = 1, WEPMin = 0.2 |

| MOBAT | A = 0.5, r = 0.5 |

| MOFFA | α = 0.5, γ = 1, β0 = 0.2 |

| MOMOF | — |

| MOGWO | — |

| MOWOA | a = [2, 0], b = 1 |

5.4. Comparison between MOCS-KNN and Other Metaheuristic Algorithms

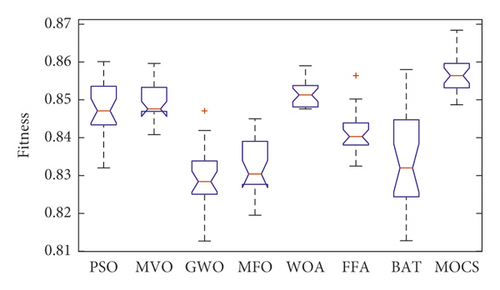

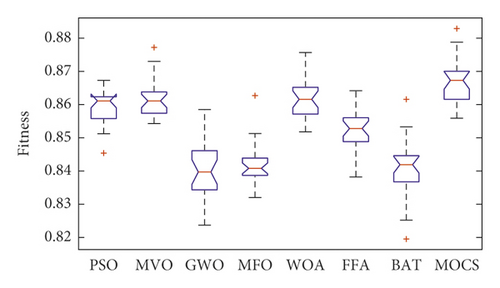

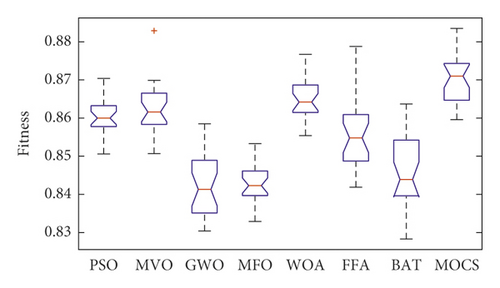

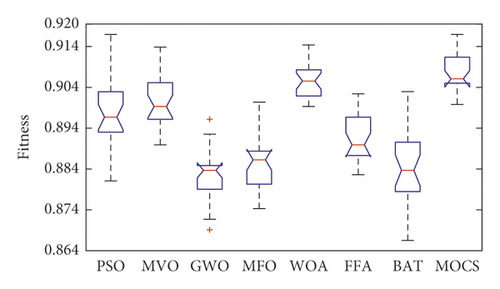

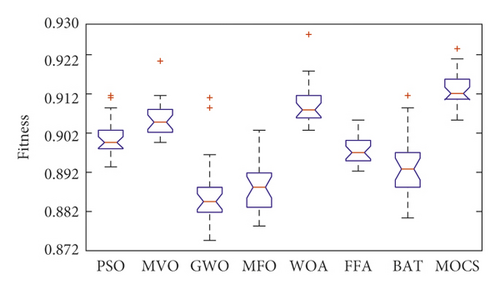

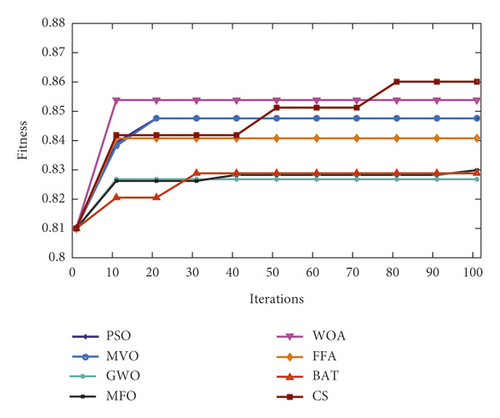

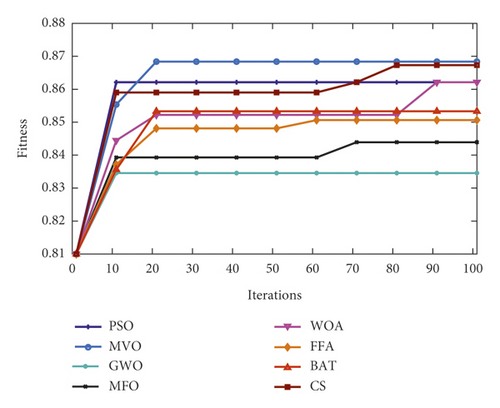

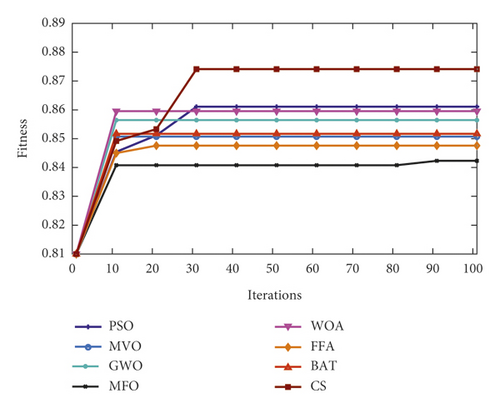

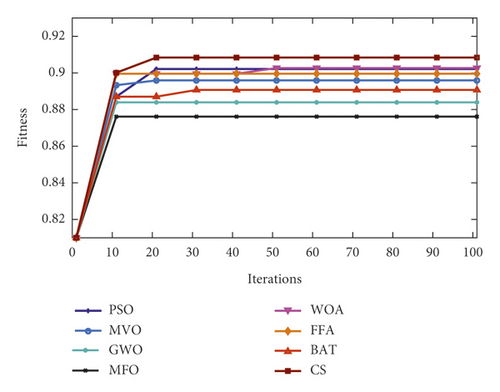

Figures 8–10 show the boxplot and convergence rate over 25 runs for selected metaheuristic algorithms during the experimental evaluation using AR5, AR10, and AR20, respectively. The boxplot components are defined as follows: box length illustrates interquartile range, the whiskers indicate the range of the values, the horizontal line in the box indicates the median value, and the outliers are represented by the circles. The boxplots reveal that MOCS-KNN managed to yield highly accurate results. As shown in Figure 8, for AR5 and AR20 datasets, it can be observed that MOCS-KNN shows a superior efficacy in convergence trends compared to other metaheuristic algorithms. For AR10 dataset, MOCS-KNN and MOMVO-KNN show competitive efficacy in the early stage of convergence, but in the later stages, MOCS-KNN surpasses MOMVO-KNN. Overall, MOCS-KNN shows improved convergence learning due to its capability to pave the way for its swarm to achieve the best trajectory leading to global optimal solution by avoiding stagnation drawbacks.

To further validate the results obtained by MOCS-KNN and other methods, Wilcoxon signed-rank statistical test [70] is adopted in this study to show if there is statistically significant difference between these methods. In Table 4, Z-value represents standardized test statistics, and P-value represents the statistical significance (P < 0.05). A P value <0.5 means that there is statistical significant difference between paired methods; otherwise, there is no statistical significant difference. From Table 4, it can be observed that MOCS-KNN achieved statistical significant results in all datasets when compared with other methods.

| Dataset | Metaheuristic | Z-value | P value | MOCS-KNN |

|---|---|---|---|---|

| AR5 | MOPSO-KNN | −4.3724 | <0.00001 | Significant |

| MOGWO-KNN | −4.3724 | <0.00001 | Significant | |

| MOFFA-KNN | −4.3724 | <0.00001 | Significant | |

| MOBAT-KNN | −4.2109 | <0.00001 | Significant | |

| MOMVO-KNN | −4.2109 | <0.00001 | Significant | |

| MOWOA-KNN | −4.3724 | <0.00001 | Significant | |

| MOMFO-KNN | −4.3724 | <0.00001 | Significant | |

| AR10 | MOPSO-KNN | −4.3724 | <0.00001 | Significant |

| MOGWO-KNN | −4.3724 | <0.00001 | Significant | |

| MOFFA-KNN | −4.3724 | <0.00001 | Significant | |

| MOBAT-KNN | −4.1302 | <0.00001 | Significant | |

| MOMVO-KNN | −4.3724 | <0.00001 | Significant | |

| MOWOA-KNN | −4.3724 | <0.00001 | Significant | |

| MOMFO-KNN | −4.3724 | <0.00001 | Significant | |

| AR20 | MOPSO-KNN | −2.758 | 0.00578 | Significant |

| MOGWO-KNN | −4.3455 | <0.00001 | Significant | |

| MOFFA-KNN | −4.3455 | <0.00001 | Significant | |

| MOBAT-KNN | −4.3455 | <0.00001 | Significant | |

| MOMVO-KNN | −2.166 | 0.03 | Significant | |

| MOWOA-KNN | −4.184 | <0.00001 | Significant | |

| MOMFO-KNN | −4.0226 | <0.00001 | Significant | |

| TDF | MOPSO-KNN | −3.7132 | 0.0002 | Significant |

| MOGWO-KNN | −3.1143 | 0.00188 | Significant | |

| MOFFA-KNN | −4.3724 | <0.00001 | Significant | |

| MOBAT-KNN | −4.3724 | <0.00001 | Significant | |

| MOMVO-KNN | −3.1143 | 0.00188 | Significant | |

| MOWOA-KNN | −1.9238 | 0.05486 | Nonsignificant | |

| MOMFO-KNN | −4.3724 | <0.00001 | Significant | |

| T-FDF | MOPSO-KNN | −4.1973 | <0.00001 | Significant |

| MOGWO-KNN | −4.3724 | <0.00001 | Significant | |

| MOFFA-KNN | −4.3724 | <0.00001 | Significant | |

| MOBAT-KNN | −4.332 | <0.00001 | Significant | |

| MOMVO-KNN | −3.7714 | 0.00016 | Significant | |

| MOWOA-KNN | −2.5571 | 0.01046 | Significant | |

| MOMFO-KNN | −4.3724 | <0.00001 | Significant | |

In this section, the performance of the proposed method (MOCS-KNN) is compared with other seven metaheuristic algorithms (PSO algorithm (MOPSO-KNN), GWO algorithm (MOGWO-KNN), binary FFA algorithm (MOFFA-KNN), binary BAT (MOBAT-KNN), MVO (MOMVO-KNN), WOA (MOWOA-KNN), and MFO (MOMFO-KNN)). The comparison includes five measures, which are accuracy ratio (EEGACC), channels selected (EEGLen), Specificity (EEGPrecision), F-score (EEGFscore), and Recall (EEGRecall). Table 5 shows the results of proposed technique (MOCS-KNN) with other metaheuristic algorithms using autoregressive three different coefficients. Overall, it is worth mentioning that the MOCS-KNN obtained the best results on all measurements for all datasets. To be more precise, in terms of number of channels, the results of MOCS-KNN and MOWOA-KNN are equal, where MOCS-KNN obtained the minimum number of channels in AR20 (24), while MOWOA-KNN obtained the minimum number of channels in AR10 (25), and both methods minimize the number of channels in AR5 to the least length (24). To gain a clear overview of the performance of MOCS-KNN and other methods in all measurements, the summation of ranks is applied in AR5, AR10, and AR20 datasets for all measurements as shown in Table 6. To elaborate the summation of ranks procedure, the method that achieved the best result will be given a rank of “1,” the second best method will be given a rank of “2,” etc. The summation of ranks in the last row of Table 6 represents the sum of ranks of each method with the corresponding datasets. The bold font highlights the best result. The results suggest that MOCS-KNN achieved the best performance in all evaluation measurements, followed by MOMVO, MOPSO, MOWOA, MOFFA, MOMFO, and MOGWO.

| Algorithms | Measures | AR5 | AR10 | AR20 | TDF | T-FDF |

|---|---|---|---|---|---|---|

| MOPSO-KNN | EEGFit | 0.8483 | 0.8593 | 0.8604 | 0.8988 | 0.9008 |

| EEGACC | 91.29 | 93.14 | 93.34 | 0.9671 | 0.9714 | |

| EEGLen | 27 | 29 | 29 | 24 | 24 | |

| EEGPrecision | 0.9129 | 0.9314 | 0.9334 | 0.9722 | 0.9764 | |

| EEGRecall | 0.9191 | 0.9385 | 0.9382 | 0.9671 | 0.9764 | |

| EEGFscore | 0.9135 | 0.9319 | 0.9336 | 0.9673 | 0.9717 | |

| MOCS − KNN | EEGFit | 0.8556 | 0.8665 | 0.8705 | 0.9078 | 0.9133 |

| EEGACC | 91.46 | 93.57 | 93.86 | 0.9668 | 0.9714 | |

| EEGLen | 24 | 25 | 24 | 21 | 20 | |

| EEGPrecision | 0.9146 | 0.9357 | 0.9386 | 0.9716 | 0.9763 | |

| EEGRecall | 0.9202 | 0.9420 | 0.9424 | 0.9668 | 0.9714 | |

| EEGFscore | 0.9150 | 0.9363 | 0.9387 | 0.9670 | 0.9716 | |

| MOMVO − KNN | EEGFit | 0.8492 | 0.8619 | 0.8627 | 0.9011 | 0.9058 |

| EEGACC | 91.26 | 93.20 | 93.46 | 0.968 | 0.9734 | |

| EEGLen | 26 | 27 | 27 | 23 | 23 | |

| EEGPrecision | 0.9126 | 0.9320 | 0.9346 | 0.9730 | 0.9779 | |

| EEGRecall | 0.9180 | 0.9394 | 0.9388 | 0.968 | 0.9734 | |

| EEGFscore | 0.9130 | 0.9325 | 0.9348 | 0.9680 | 0.9737 | |

| MOBAT − KNN | EEGFit | 0.8346 | 0.8408 | 0.8469 | 0.8849 | 0.8931 |

| EEGACC | 89.89 | 90.97 | 91.91 | 0.9568 | 0.9665 | |

| EEGLen | 27 | 28 | 28 | 26 | 26 | |

| EEGPrecision | 0.8989 | 0.9097 | 0.9191 | 0.9635 | 0.9722 | |

| EEGRecall | 0.9068 | 0.9180 | 0.9235 | 0.9568 | 0.9665 | |

| EEGFscore | 0.8995 | 0.9102 | 0.9189 | 0.9569 | 0.9668 | |

| MOFFA − KNN | EEGFit | 0.8412 | 0.8519 | 0.8559 | 0.8920 | 0.8975 |

| EEGACC | 90.94 | 92.49 | 92.77 | 0.9642 | 0.9691 | |

| EEGLen | 28 | 28 | 28 | 25 | 25 | |

| EEGPrecision | 0.9094 | 0.9249 | 0.9277 | 0.9702 | 0.9746 | |

| EEGRecall | 0.9149 | 0.9325 | 0.9326 | 0.9642 | 0.9691 | |

| EEGFscore | 0.9096 | 0.9254 | 0.9278 | 0.9645 | 0.9694 | |

| MOMFO-KNN | EEGFit | 0.8326 | 0.8419 | 0.8429 | 0.8859 | 0.8880 |

| EEGACC | 89.82 | 92.46 | 92.66 | 0.9605 | 0.964 | |

| EEGLen | 28 | 31 | 31 | 26 | 27 | |

| EEGPrecision | 0.8983 | 0.9246 | 0.9266 | 0.9672 | 0.9707 | |

| EEGRecall | 0.9057 | 0.9317 | 0.9313 | 0.9605 | 0.964 | |

| EEGFscore | 0.8986 | 0.9251 | 0.9268 | 0.9607 | 0.9643 | |

| MOGWO-KNNN | EEGFit | 0.8294 | 0.8398 | 0.8425 | 0.8825 | 0.8869 |

| EEGACC | 90.17 | 91.90 | 92.31 | 0.9577 | 0.9628 | |

| EEGLen | 29 | 30 | 31 | 26.76 | 26.68 | |

| EEGPrecision | 0.9017 | 0.9189 | 0.9231 | 0.9647 | 0.9688 | |

| EEGRecall | 0.9077 | 0.9260 | 0.9270 | 0.9577 | 0.9628 | |

| EEGFscore | 0.9024 | 0.9194 | 0.9229 | 0.9577 | 0.9630 | |

| MOWOA-KNN | EEGFit | 0.8517 | 0.8618 | 0.8652 | 0.9054 | 0.9092 |

| EEGACC | 90.74 | 92.23 | 92.94 | 0.9674 | 0.9697 | |

| EEGLen | 24 | 24 | 25 | 21.92 | 21.28 | |

| EEGPrecision | 0.9074 | 0.9223 | 0.9294 | 0.9724 | 0.9747 | |

| EEGRecall | 0.9137 | 0.9302 | 0.9345 | 0.9674 | 0.9697 | |

| EEGFscore | 0.9079 | 0.9228 | 0.9296 | 0.9675 | 0.9699 | |

- Bold values indicate best results.

| Measures | MOPSO | MOMVO | MOGWO | MOMFO | MOWOA | MOFFA | MOBAT | MOCS |

|---|---|---|---|---|---|---|---|---|

| ACC-AR5 | 2 | 3 | 6 | 8 | 5 | 4 | 7 | 1 |

| ACC-AR10 | 3 | 2 | 7 | 5 | 6 | 4 | 8 | 1 |

| ACC-AR20 | 3 | 2 | 7 | 6 | 4 | 5 | 8 | 1 |

| ACC-TDF | 4 | 3 | 8 | 6 | 2 | 5 | 7 | 1 |

| Len-T-FDF | 4 | 3 | 8 | 7 | 2 | 5 | 6 | 1 |

| Len-AR5 | 3 | 2 | 5 | 4 | 1 | 4 | 3 | 1 |

| Len-AR10 | 5 | 3 | 6 | 7 | 1 | 4 | 4 | 2 |

| Len-AR20 | 5 | 3 | 6 | 6 | 2 | 4 | 4 | 1 |

| Len-TDF | 4 | 3 | 7 | 6 | 2 | 5 | 6 | 1 |

| Len-T-FDF | 4 | 3 | 7 | 8 | 2 | 5 | 6 | 1 |

| Recall-AR5 | 2 | 3 | 4 | 8 | 6 | 5 | 7 | 1 |

| Recall-AR10 | 3 | 2 | 7 | 4 | 6 | 5 | 8 | 1 |

| Recall-AR20 | 3 | 2 | 7 | 6 | 4 | 5 | 8 | 1 |

| Recall-TDF | 3 | 1 | 7 | 6 | 2 | 5 | 8 | 4 |

| Recall-T-FDF | 1 | 2 | 8 | 7 | 4 | 5 | 6 | 3 |

| Precision-AR5 | 2 | 3 | 6 | 8 | 5 | 4 | 7 | 1 |

| Precision-AR10 | 3 | 2 | 7 | 6 | 4 | 5 | 8 | 1 |

| Precision-AR20 | 3 | 2 | 7 | 6 | 4 | 5 | 8 | 1 |

| Precision-TDF | 3 | 1 | 7 | 6 | 2 | 5 | 8 | 4 |

| Precision-T-FDF | 2 | 1 | 8 | 7 | 4 | 5 | 6 | 3 |

| F-score-AR5 | 2 | 3 | 6 | 8 | 5 | 4 | 7 | 1 |

| F-score-AR10 | 3 | 2 | 7 | 5 | 6 | 4 | 8 | 1 |

| F-score-AR20 | 3 | 2 | 7 | 6 | 4 | 5 | 8 | 1 |

| F-score-TDF | 3 | 1 | 7 | 6 | 2 | 5 | 8 | 4 |

| F-score-T-FDF | 2 | 1 | 8 | 7 | 4 | 5 | 6 | 3 |

| Summation of ranks | 75 | 55 | 170 | 159 | 89 | 117 | 170 | 41 |

- Bold values indicate best results.

6. Conclusion and Future Work

In this work, we proposed a binary version of multiobjective approach using several metaheuristic algorithms with the aim of addressing the challenge of channel selection in EEG-based biometric person identification. The main purpose of this work is to demonstrate that not all available EEG channels need to be used to achieve high accuracy rate. Therefore, we introduce for modeling the problem of channel selection as an optimization issue, where the channel subset which optimizes the recognition ratio over a validation set is utilized as the fitness function.

The outcomes of experiments showed that the introduced method outperformed several metaheuristic algorithms and the one proposed by Rodrigues et al. [1]. It is worth noting that, while retaining high accuracy rates, the number of sensors has been lessened by half. Additionally, the outcomes displayed a positive correlation between the number of features obtained from the EEG signal and the accuracy ratio; i.e., further features lead to higher accuracy rates. Such finding suggests that the proposed algorithm has the potential to remove duplicate and undesirable features whereas retaining specific features.

Regarding the future work, we intend to evaluate selected metaheuristic algorithms over different features, such as time- and frequency-domain information, to improve the overall identification performance while selecting fewer channels.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This work was supported in part by the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE), the Competency Development Program for Industry Specialist, under Grant P0008703, and in part by the Ministry of Science and ICT (MSIT), South Korea, under the Information Technology Research Center (ITRC) Support Program IITP-2021-2018-0-01799 supervised by the Institute for Information and Communications Technology Planning and Evaluation (IITP).

Open Research

Data Availability

The dataset was taken from https://www.physionet.org/.