Surface Quality Automatic Inspection for Pharmaceutical Capsules Using Deep Learning

Abstract

Capsules are commonly used as containers for most pharmaceutical products. Thus, the quality of a capsule is closely related to the therapeutic effect of the product and to patient health. At present, surface quality testing is an essential task in the production of pharmaceutical capsules. In this study, a deep learning-based capsule defect detection model, called CapsuleDet, is proposed to classify and localize defects in image sensor data from capsule production for practical application. A guided filter-based image enhancement method and a hybrid data augmentation method are used to improve the quality and quantity of the raw data, respectively, which mitigates the low-contrast issue and enhances the robustness of model training. A deformable convolution module and an attentional fusion feature pyramid are also used to improve capsule defect detection: they make effective use of the semantic and geometric information in the extracted feature maps and cater to defects with different shapes and scales. The evaluation results on the capsule defect dataset demonstrate that the proposed method achieves 92.91% mean average precision and 21.16 frames per second. Moreover, its overall performance in terms of training time, model size, detection accuracy, and speed is better than that of currently popular detectors.

1. Introduction

For the pharmaceutical industry, drug quality directly affects the effectiveness of treatment for the corresponding symptoms and patient health [1]. In recent years, compared with traditional tablets, granules, and other dosage forms, pharmaceutical capsules have been widely used in the pharmaceutical market owing to their ability to mask odors and their quick absorption. The corresponding scale of production and sales has also been expanding continuously.

However, various types of capsule defects (e.g., dents and holes) are inevitable during mass production. These defects degrade the appearance quality of a product and can even directly affect dosage control in the manufacturer's subsequent filling process [2]. If defective products are not removed in time and reach the market, the efficacy of the capsules cannot be guaranteed, creating potential safety hazards that can cause economic losses and harm the enterprise. Hence, surface quality inspection of pharmaceutical capsules is an integral part of the production process.

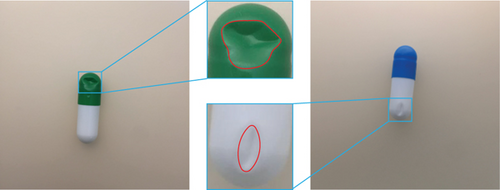

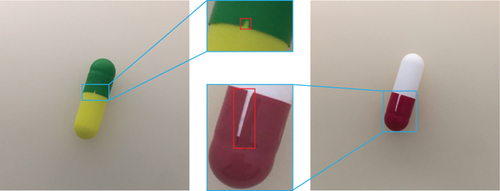

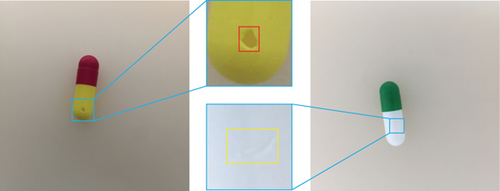

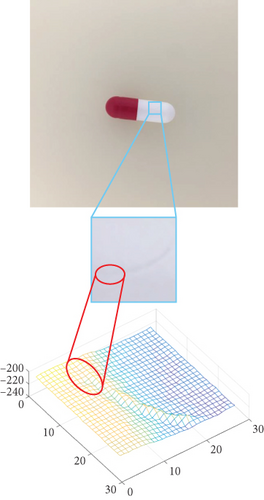

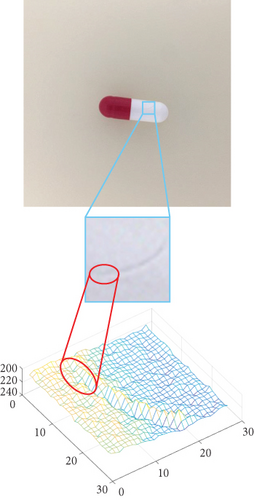

Across the entire industrial manufacturing domain, enterprises must not only improve production efficiency but also strictly ensure product quality. Thus, improving product quality without slowing production is a core competence of the manufacturing industry. As an important part of the manufacturing process, surface defect detection is an essential link in controlling industrial product quality [3]. As in most defect detection tasks, surface defect detection for pharmaceutical capsules faces several pressing issues arising from the complexity of the defects: (1) Shape anisotropy: uncertainty in the manufacturing process usually leads to large, irregular variations in the shape of defects of the same type. As shown in Figure 1(a), the shapes of the dented areas (marked by the red curves) differ considerably, which places high demands on the robustness of the method. (2) Scale difference: because of disruptions in the online manufacturing process and environmental factors, the extent of product damage varies. Figure 1(b) shows the large difference in scale between defects of the same type. In particular, the features of tiny defects are easily lost after repeated abstraction, which makes these defects difficult to locate accurately. (3) Low contrast: defect detection depends heavily on how clearly the defect features are imaged, which is particularly important for learning-based methods that rely on deep feature extraction. Figure 1(c) shows that detecting the faint scratch in the yellow box is more difficult than detecting the hole in the red box.

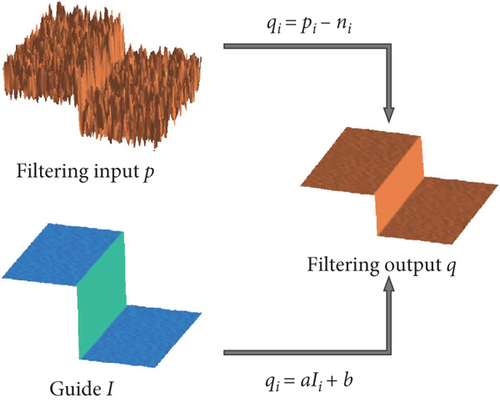

These challenges, which are common in surface quality inspection of industrial products, are gradually being addressed thanks to extensive and in-depth research in the field of defect detection. Jin et al. [4] addressed the detection of internal defects in castings by combining traditional image processing with a classifier. Similarly, Wu and Lu [5] used support vector machines to inspect the appearance quality of product packaging. Other methods based on manually crafted features have also achieved good results [6–8]. However, these methods perform poorly when facing multiple types of defect features, and they fail to represent detection results effectively, particularly for minor defects. Deep learning-based methods have recently succeeded in computer vision tasks and have proved to be more efficient than traditional image processing or machine learning methods [9, 10]. Thus, introducing deep learning into surface defect detection has become increasingly popular. At present, deep learning approaches based on convolutional neural networks (CNNs) accomplish quality control tasks for various industrial products through detection models with diverse network architectures. For shape anisotropy, the model must have strong deformation modeling capabilities to capture position shifts during visual perception [11]. However, the standard convolution in most common feature extraction networks lacks this adaptive ability. Inspired by [12], this study introduces deformable convolution into the feature extraction module to accommodate irregular variations in defect shape. The feature sampling area can then be adjusted to the shape of each defect, reducing the background information included in the defect area.

For scale difference, features at different levels of a CNN correspond to different object scales [13], whereas previous approaches [14, 15] that focus on feature learning at the end of the network lack the small-object information contained in the shallow features, which leads to unsatisfactory detection performance. This study addresses the issue with a feature pyramid module that fuses feature maps at different scales, thereby obtaining semantic information across levels and enhancing the ability to recognize tiny defect features. For low contrast, the faint defect information in the image hampers the model from the initial feature extraction phase onward [16], and the little residual defect information that remains gradually fades as the network deepens. In this scenario, the model should extract features well from the earliest phase and be biased toward the region of interest. Therefore, a guided filter is used in this study as an image enhancement method to highlight low-contrast defect features, and an attention module is added to the feature fusion process to ensure that the enhanced semantic information is transmitted effectively.

The main contributions of this study are summarized as follows:

(1) In the data preparation phase, a guided filter-based image enhancement method is used to mitigate the low contrast of defects, and a novel hybrid data augmentation method is proposed to improve the generalization ability of the trained model. Processing raw image sensor data from both the quality and quantity perspectives in this way can serve as a solution for related research facing similar situations.

(2) A defect detection model named CapsuleDet, based on deformable convolution and an attentional fusion feature pyramid, is proposed to adapt to the differences in shape and scale of surface defects in pharmaceutical capsules. It copes well with irregular variations in defect shape and can exploit rich feature information through multilevel feature fusion, thereby providing a reference for multiclass minor defect detection.

(3) Compared with other typical detectors, such as SSD, Faster R-CNN, YOLOv3, FCOS, and Cascade R-CNN, the proposed method offers advantages in comprehensive performance, including training time, model size, detection accuracy, and inference speed. It is therefore well suited for deployment in the actual production process.

The rest of this article is organized as follows. Section 2 presents an overview of the related works. Section 3 provides the details of the proposed methods. Section 4 describes the experimental details and the corresponding discussions. Finally, Section 5 concludes this article.

2. Related Work

In the past, surface defects in pharmaceutical products were mainly identified by manual visual inspection, which was too costly and inefficient to meet the demands of quality control [17]. Nowadays, automated inspection technology based on visual perception, which replicates and even surpasses the human eye, is widely used for quality control in industrial manufacturing [18], commodity packaging [19], and fruit grading [20]. Automatic surface quality inspection is therefore a natural solution for ensuring high throughput and high product reliability in the pharmaceutical industry. Existing approaches can be divided into two main categories: traditional detection approaches and deep learning-based detection approaches.

2.1. Traditional Detection Approaches

Pharmaceutical enterprises are eager to move from manual to automated detection to safeguard the quality of their products while reducing labor costs [21]. Many early studies of automated detection used the most common traditional machine vision approaches to monitor pharmaceutical production quality; these fall into two categories: image processing and traditional machine learning.

2.1.1. Image Processing-Based Approaches

Mozina et al. [22] used image processing algorithms to build a statistical model of pharmaceutical tablets, which was used to evaluate imprint quality by comparing the tablets under inspection with qualified tablets. Similarly, Kekre et al. [23] used a series of image transformation methods to compute image statistics, such as grayscale, color, and texture, to distinguish defect features on the surface of pharmaceutical capsules and to classify various defect types. Gabriele et al. [24] detected defects such as cracks on the surface of pharmaceutical glass tubes using image filters and threshold segmentation algorithms.

2.1.2. Traditional Machine Learning-Based Approaches

Wang et al. [25] applied a support vector machine (SVM) to binarized images to perform binary classification of pharmaceutical capsule head defects. For multiple defect types, Qi and Jiang [26] used the Canny edge-detection algorithm to extract features of defect areas in pharmaceutical capsules and then performed multiclass classification with a hierarchical SVM. Bahaghighat et al. [27] proposed a machine learning-based method for counting the cards within drug packages, which can be used to verify the accuracy of drug distribution on the production line.

These approaches achieved good performance in feature description and defect detection by relying on statistical modeling or cluster analysis. However, most of them are applicable only to homogeneous textures or salient features, and they depend heavily on expert knowledge. When faced with complex textures and multiclass defects, these methods become complex and lack robustness.

2.2. Deep Learning-Based Detection Approaches

Unlike traditional detection approaches, deep learning methods, supported by massive data and powerful computing, can autonomously learn effective representations [28]. Moreover, they are flexible and stable when processing complex semantic information. Thus, they have the potential to replace traditional methods in quality inspection and have broad development prospects. At present, deep learning-based detection approaches fall into two main types: image classification and object detection.

2.2.1. Image Classification-Based Approaches

At present, deep learning methods for image classification mainly use CNN-based classification networks, which consist of two parts: a feature extractor (composed of cascaded convolutional and pooling layers) and a classifier. A well-trained classification model processes each image under inspection and outputs the image class and its confidence. Accordingly, Zhou et al. [29] proposed a CNN-based defect detection model for pharmaceutical capsules that can classify various capsule defects, such as dents, holes, and stains; however, it cannot locate the defects precisely. Other researchers have also applied this approach to surface quality inspection of manufactured products, such as steel [30] and fabric [31], with good results. Although image classification approaches can achieve high classification accuracy and stability, they are applicable only to detecting a single defect type per image. When a single image contains several defects of the same or different types, it is difficult to accurately represent the specific semantic and location information of each defect.

2.2.2. Object Detection-Based Approaches

Compared with image classification-based approaches, object detection-based approaches that use CNN-based detectors for quality inspection provide what manual visual inspection provides in traditional defect detection, namely, defect localization and classification. Current deep learning-based object detection models can be roughly divided into two categories: two-stage methods (e.g., Faster R-CNN [32] and Cascade R-CNN [33]) and one-stage methods (e.g., SSD [34], YOLOv3 [35], and FCOS [36]). Both first abstract the important semantic features of the image with a specially designed feature extractor to obtain a deep representation and then perform object classification and bounding box localization with a classifier and a regressor, respectively. The difference between them is whether a candidate box generation phase is included. Two-stage methods focus on detection accuracy and use this phase to screen objects initially, which provides high-quality candidates for subsequent classification and localization but also increases detection time to some extent. Conversely, one-stage methods rely on well-designed feature fusion operations to provide accurate semantic information directly for subsequent processing, which allows them to maintain detection accuracy while offering an advantage in detection speed. Therefore, both kinds of methods are widely used in existing research on product surface defect detection to meet diverse practical needs. In early work on drug defect localization, an R-CNN-based method for identifying and localizing pharmaceutical capsule defects was proposed in [37]; however, it detects only two defect types: scratch and stain. Zhang et al. [38] proposed a detection method for foreign particles in liquid pharmaceutical products. This method first locates suspect objects with Faster R-CNN and then uses a random forest classifier to separate foreign particles from noise. However, it does not classify foreign particles into specific classes because of the various uncertainties in their appearance features.

In general, existing research needs improvement in the following aspects: (1) Most methods cannot locate defects in pharmaceutical capsules. (2) The few methods that can locate defects detect only a few defect types. (3) These methods handle only large or salient defect features. Therefore, this study proposes a deep learning-based surface quality inspection method for pharmaceutical capsules. In contrast to previous methods, it can accurately classify and localize multiclass defects while effectively addressing the challenges described above.

3. Methods

3.1. System Overview

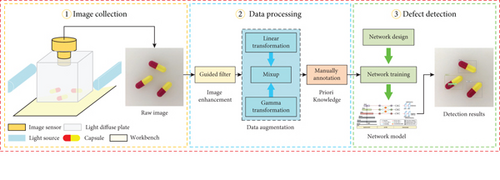

(1) Image collection is the first link in the surface defect detection task. However, because the capsule surface is highly reflective, direct illumination by the light source produces local highlights in the image, which interfere with feature extraction and with the learning effect of the model. Therefore, we propose the image collection scheme shown in Figure 2. The collection process is illuminated by a lateral LED light source, and a cubic photomask composed of light-diffusing plates is designed and fabricated to avoid image highlights. This photomask illuminates the capsule with transmitted light, which prevents the reflection of bright light on the capsule surface from producing light spots in the raw images captured by the image sensor.

(2) After image collection, a data processing step is introduced to address the shortcomings of the original data in terms of quantity and quality. This step provides a data guarantee for the subsequent network training phase, enabling the model to achieve good convergence and detection accuracy.

(3) Finally, a deep learning-based surface defect detection model is designed around the characteristics of the pharmaceutical capsule dataset to classify and locate multiclass capsule defects accurately. The specific methods are described in detail below.

3.2. Image Enhancement

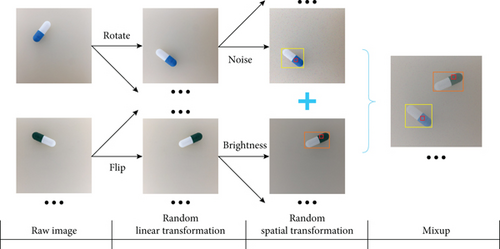
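The guided filter-based enhancement used in this study can be illustrated with the classic self-guided filter of He et al.: an edge-preserving guided filter produces a smooth base layer, and the residual detail layer, which carries faint defects such as weak scratches, is amplified. This is a minimal sketch under stated assumptions: a single-channel float image in [0, 1], and hypothetical values for the window radius `r`, the regularization `eps`, and the detail gain `boost`. The exact formulation used for CapsuleDet may differ.

```python
import numpy as np


def box_filter(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window, replicating edge pixels at borders."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    # Summed-area table with a leading zero row/column for O(1) window sums.
    sat = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    window_sum = sat[k:, k:] - sat[:-k, k:] - sat[k:, :-k] + sat[:-k, :-k]
    return window_sum / (k * k)


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int, eps: float) -> np.ndarray:
    """Grayscale guided filter: local linear model q = a * guide + b."""
    mean_i = box_filter(guide, r)
    mean_p = box_filter(src, r)
    cov_ip = box_filter(guide * src, r) - mean_i * mean_p
    var_i = box_filter(guide * guide, r) - mean_i * mean_i
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    # Average the coefficients of all windows that cover each pixel.
    return box_filter(a, r) * guide + box_filter(b, r)


def enhance(img: np.ndarray, r: int = 4, eps: float = 1e-3, boost: float = 3.0) -> np.ndarray:
    """Amplify fine detail on top of an edge-preserving base layer (hypothetical parameters)."""
    img = np.asarray(img, dtype=np.float64)
    base = guided_filter(img, img, r, eps)  # self-guided, edge-preserving smoothing
    detail = img - base                     # fine texture, e.g. faint scratches
    return np.clip(base + boost * detail, 0.0, 1.0)
```

A larger `boost` makes faint defects stand out more but also amplifies sensor noise, while `eps` sets how strong an edge must be to stay in the base layer rather than in the amplified detail.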

3.3. Data Augmentation

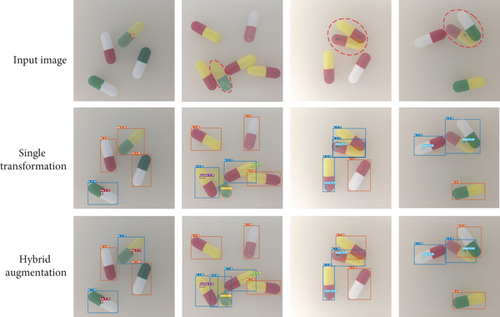

Starting from the 7380 sample images, data augmentation increases the amount of available data twofold. Compared with commonly used single-transformation methods, the hybrid approach not only increases the number of training samples but also expands the types and number of objects contained in a single image, which further enhances the generalization performance of the model while avoiding poor model fitting.
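The hybrid scheme itself is not spelled out in this section. One widely used way to increase the number and variety of objects per image is mosaic-style tiling combined with simple geometric transforms, and the sketch below shows only the bounding-box bookkeeping for that stand-in: a horizontal flip, and a 2 × 2 tiling of four 512 × 512 images each downscaled by half. The function names and the tiling layout are hypothetical and are not presented as the authors' method.

```python
from typing import List, Tuple

# (xmin, ymin, xmax, ymax, label), pixel coordinates as in PASCAL VOC boxes.
Box = Tuple[float, float, float, float, str]


def hflip_boxes(boxes: List[Box], width: int) -> List[Box]:
    """Mirror boxes to match a horizontally flipped image of the given width."""
    return [(width - x2, y1, width - x1, y2, lbl) for x1, y1, x2, y2, lbl in boxes]


def mosaic_boxes(tiles: List[List[Box]], size: int = 512) -> List[Box]:
    """Remap boxes of four size x size images tiled 2x2 after 0.5x downscaling.

    Tile order: top-left, top-right, bottom-left, bottom-right.
    """
    assert len(tiles) == 4
    half = size / 2
    merged: List[Box] = []
    for idx, boxes in enumerate(tiles):
        dx = (idx % 2) * half   # column offset of this tile
        dy = (idx // 2) * half  # row offset of this tile
        for x1, y1, x2, y2, lbl in boxes:
            merged.append((x1 * 0.5 + dx, y1 * 0.5 + dy, x2 * 0.5 + dx, y2 * 0.5 + dy, lbl))
    return merged
```

Because each mosaic carries the defects of four source images, a single training sample can contain several defect types at once, which matches the stated goal of expanding the type and number of objects per image.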

3.4. Defect Detection

We adopt a one-stage detector design for CapsuleDet to combine the advantages of detection speed and accuracy, so that it can keep pace with actual capsule production and limit the cost of applying the detection technology. Below, the construction of the network structure is introduced in detail, and the two main functional modules, namely, the deformable convolution module and the attentional fusion module (AFM), are described separately.

3.4.1. Network Architecture

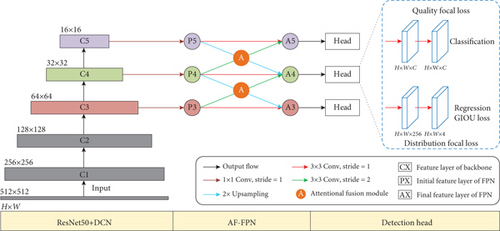

A one-stage object detector is commonly made up of a backbone network, a detection neck that is typically a feature pyramid network (FPN), and a detection head for object classification and localization. The CapsuleDet proposed in this study also consists of these three parts, and its overall architecture is shown in Figure 5. The corresponding detection components are introduced as follows.

(1) Backbone. We choose ResNet50 [41], which is widely used for computer vision tasks, as the feature extractor for CapsuleDet. It consists of five feature layers, namely, {C1, C2, C3, C4, C5}. As Figure 5 shows, only C3, C4, and C5 are used for subsequent feature fusion, trading off memory usage against efficiency. To keep the cost under control, we introduce the deformable convolutional network (DCN) only in these three output layers, replacing their 3 × 3 convolutions to make the extracted features more effective for various defect shapes.
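For the 512 × 512 capsule images, the spatial sizes of C1 to C5 follow from the standard convolution output-size formula. The sketch below assumes the usual ResNet50 layout (7 × 7 stride-2 stem convolution, 3 × 3 stride-2 max-pooling, and stride-2 downsampling at the start of the stages producing C3, C4, and C5), which gives strides of 8, 16, and 32 for the three levels used by CapsuleDet.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_feature_sizes(input_size: int = 512) -> dict:
    """(channels, height, width) of ResNet50 stages for a square input."""
    c1 = conv_out(input_size, 7, 2, 3)  # 7x7 stem convolution, stride 2
    c2 = conv_out(c1, 3, 2, 1)          # 3x3 max-pool, stride 2 (stage keeps size)
    c3 = conv_out(c2, 3, 2, 1)          # first block of the stage downsamples by 2
    c4 = conv_out(c3, 3, 2, 1)
    c5 = conv_out(c4, 3, 2, 1)
    return {
        "C1": (64, c1, c1),
        "C2": (256, c2, c2),
        "C3": (512, c3, c3),
        "C4": (1024, c4, c4),
        "C5": (2048, c5, c5),
    }
```

With a 512-pixel input, C3, C4, and C5 come out at 64 × 64, 32 × 32, and 16 × 16, so the finest level used still has an 8-pixel stride, which is relevant for tiny defects.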

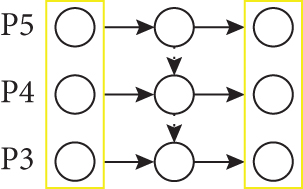

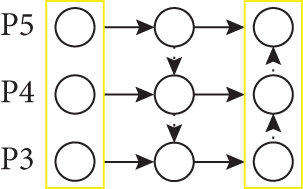

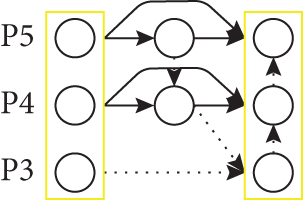

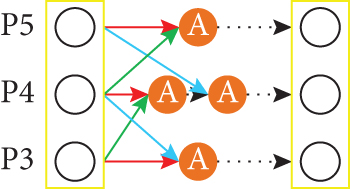

(2) Detection Neck. The detection neck processes the features extracted at all levels of the backbone network, fusing and exploiting the semantic information and geometric details they contain, and lays a solid foundation for the subsequent classification and regression in the detection head. We adopt a pyramid-style feature fusion module for this task. Figure 6 compares the attentional fusion FPN (AF-FPN) proposed in this study with several classical FPNs. Based on [42], AF-FPN uses shared convolution and interpolation operations to bring the adjacent feature layers $P_{i+1}$ and $P_{i-1}$ to the same size as feature layer $P_i$, which keeps the corresponding semantic features consistent while avoiding the excessive computation caused by independent convolutions in a traditional FPN. To strengthen the last crucial step of the FPN (i.e., feature fusion), we replace the traditional element-wise addition with an attention-based feature fusion module, whose details are described subsequently.
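The size alignment step can be sketched as follows, assuming that all pyramid levels have the same channel count, that the shared convolution is a 1 × 1 convolution with one weight matrix reused for every level, that the coarser $P_{i+1}$ is upsampled by nearest-neighbour interpolation, and that the finer $P_{i-1}$ is downsampled by 2 × 2 average pooling. These operator choices and the function names are assumptions for illustration; [42] may use different resampling operators.

```python
import numpy as np


def shared_conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution of a (C_in, H, W) map; w (C_out, C_in) is shared across levels."""
    return np.einsum("oc,chw->ohw", w, x)


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x: np.ndarray) -> np.ndarray:
    """2x2 average pooling of a (C, H, W) map with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def resize_neighbours(p_lower, p_i, p_upper, w_shared):
    """Bring P_{i-1} (2x finer) and P_{i+1} (2x coarser) to the size of P_i."""
    from_lower = downsample2x(shared_conv1x1(p_lower, w_shared))
    from_upper = upsample2x(shared_conv1x1(p_upper, w_shared))
    assert from_lower.shape == p_i.shape == from_upper.shape
    return from_lower, from_upper
```

Reusing `w_shared` for every level keeps the parameter count independent of the number of pyramid levels, which is the computational saving the text attributes to shared convolution.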

(3) Detection Head. CapsuleDet has a very simple detection head. As shown in Figure 5, it consists of two branches: classification and regression. Unlike the typical one-stage detector with four convolutional layers in its detection head, each branch contains only one 3 × 3 convolution layer to normalize the features from the FPN. We chose this setting because our experiments showed that the number of convolutional layers has little effect on detection accuracy on the capsule dataset, whereas additional convolutional layers increase memory usage and detection time. Each branch then uses one 3 × 3 convolution layer for classification or regression, where C denotes the number of classification categories and 4 denotes the four predicted values for bounding box localization. For the loss function, we adopt the scheme proposed in generalized focal loss (GFL) [46].
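GFL replaces the usual focal loss and box loss with a Quality Focal Loss (QFL), which trains the classification score against a soft IoU target, and a Distribution Focal Loss (DFL), which trains each box side as a discrete distribution over distance bins. The scalar sketch below follows the definitions in the GFL paper (QFL with modulating exponent β = 2; DFL on the two bins adjacent to the continuous target). It is illustrative only: real training code operates on batched tensors, and the GIoU box loss and loss weights used alongside these terms are omitted.

```python
import math
from typing import Sequence


def quality_focal_loss(logit: float, target: float, beta: float = 2.0) -> float:
    """QFL: cross-entropy against a soft target y in [0, 1], scaled by |y - sigma|^beta."""
    sigma = 1.0 / (1.0 + math.exp(-logit))
    bce = -((1.0 - target) * math.log(1.0 - sigma) + target * math.log(sigma))
    return abs(target - sigma) ** beta * bce


def distribution_focal_loss(logits: Sequence[float], target: float) -> float:
    """DFL for one box side: logits over bins 0..n, target strictly below n."""
    m = max(logits)  # subtract the max for a numerically stable softmax
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    left = int(math.floor(target))
    right = left + 1
    assert 0 <= left and right < len(logits), "target must lie inside the bin range"
    # Weights shrink linearly with the distance from the target to each bin.
    w_left, w_right = right - target, target - left
    return -(w_left * math.log(probs[left]) + w_right * math.log(probs[right]))
```

QFL vanishes when the predicted score equals the IoU-based target, and DFL is smallest when the probability mass concentrates on the two bins that bracket the true distance.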

3.4.2. Deformable Convolution Module

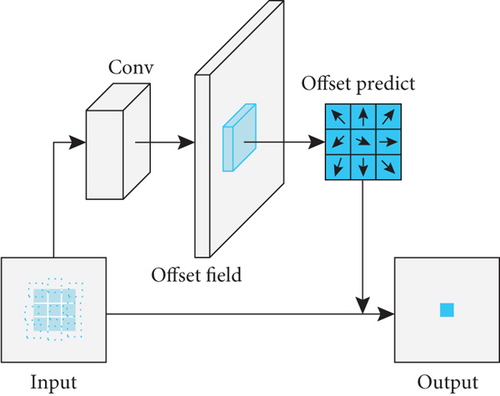

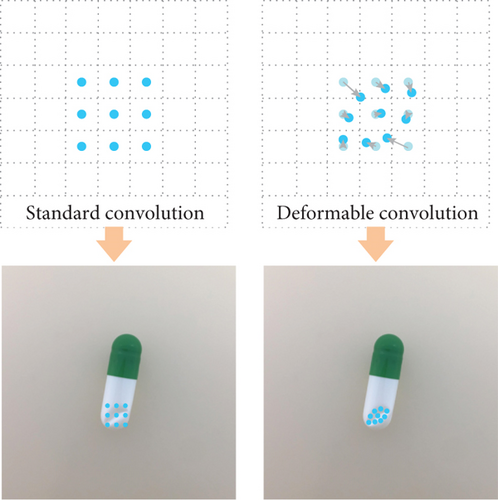

Restricted by the default sampling method of a traditional CNN (sampling on a regular grid), pure standard convolution cannot adapt to the geometry of the input object, which limits its ability to model geometric transformations. As a result, it hits a bottleneck in improving accuracy when identifying irregular defects. To give the network a degree of invariance to affine transformations, the original sampling method usually has to be changed to enhance the deformation modeling capability of ordinary convolution. DCN [12] therefore adds a predicted offset to each sampling point of conventional convolution to obtain new sampling points; because the offsets are produced from the input features by additional convolution layers, DCN learns to adjust its sampling area adaptively to the shape of each instance object. Inspired by this, we apply DCN to the feature extraction module in the backbone network.

As illustrated in Figure 7(a), an additional convolution operator predicts the sampling offsets in parallel to adjust the regular sampling area. More specifically, in the deformable convolution operator, an additional convolution layer is applied to the input feature map to obtain an offset field with the same spatial size as the input feature map. For a 3 × 3 convolution, the channel dimension of the offset field holds 2 × 3 × 3 = 18 values, that is, one two-dimensional offset (in the x and y directions) for each of the nine kernel positions, and each position in the offset field gives the offsets of the kernel sampling points centered at the corresponding position of the input feature map. When the deformable convolution is computed, the offset at each position is added to the original kernel sampling position to obtain the shifted sampling position. Because the learned offsets are usually fractional, the feature values at the shifted positions are obtained by bilinear interpolation, and the output feature map is then computed with the same weighted summation as standard convolution. Compared with standard convolution, deformable convolution can therefore learn offsets that fit diverse defect shapes and focus on the semantic regions of interest, improving the network's ability to model deformation. Figure 7(b) illustrates the difference in sampling between the two when dealing with irregular defective regions in the capsule. Replacing standard convolution with deformable convolution can effectively improve feature extraction. However, the extra convolution operator inevitably increases the computational cost, which prompts us to balance accuracy and speed when introducing DCN.
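The sampling procedure described above can be made concrete with a deliberately slow NumPy reference for a 3 × 3 deformable convolution (stride 1, padding 1, no bias). The offset layout `(H, W, 9, 2)` holding `(dy, dx)` per kernel tap is a convention chosen for this sketch only; in practice the offsets would come from the extra convolution layer, and an optimized operator such as `torchvision.ops.DeformConv2d`, whose offset tensor layout differs, would be used for training.

```python
import numpy as np


def bilinear_sample(feat: np.ndarray, py: float, px: float) -> np.ndarray:
    """Sample a (C, H, W) map at fractional (py, px); points outside count as zero."""
    c, h, w = feat.shape
    y0, x0 = int(np.floor(py)), int(np.floor(px))
    out = np.zeros(c)
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(py - yy)) * (1 - abs(px - xx))
                out += weight * feat[:, yy, xx]
    return out


def deform_conv3x3(feat: np.ndarray, weight: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Reference 3x3 deformable convolution.

    feat:    (C_in, H, W) input feature map
    weight:  (C_out, C_in, 3, 3) kernel
    offsets: (H, W, 9, 2) learned (dy, dx) for each of the nine kernel taps
    """
    _, h, w = feat.shape
    out = np.zeros((weight.shape[0], h, w))
    taps = [(ky, kx) for ky in range(3) for kx in range(3)]
    for i in range(h):
        for j in range(w):
            for k, (ky, kx) in enumerate(taps):
                dy, dx = offsets[i, j, k]
                # Regular grid position of this tap, shifted by its learned offset.
                sample = bilinear_sample(feat, i + ky - 1 + dy, j + kx - 1 + dx)
                out[:, i, j] += weight[:, :, ky, kx] @ sample
    return out
```

With all offsets set to zero, every sampling point falls on the regular grid and the operator reduces exactly to a standard zero-padded 3 × 3 convolution, which is a convenient sanity check.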

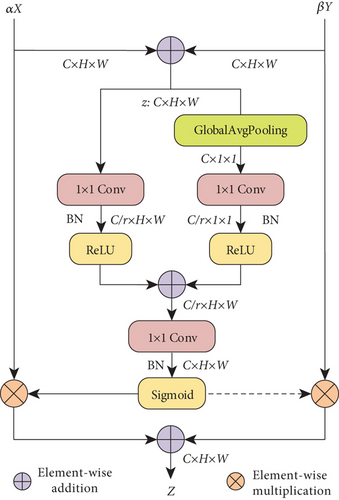

3.4.3. Attentional Fusion Module

The main idea of FPN is to form multiscale feature representations through feature fusion [48] (i.e., the aggregation of features from various levels or branches), with the ultimate aim of improving the generalization of the network to objects of different scales. However, most classical FPNs fuse features at different levels only by simple operations (e.g., summation or concatenation), which may lose the joint focus on contextual semantic information during feature fusion. We propose AFM to effectively combine adjacent features that are inconsistent in semantics and scale.
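The internal design of AFM is not detailed in this section. As an illustration of how attention can replace plain summation, the sketch below uses a channel-attention gate in the style of squeeze-and-excitation: global average pooling of the summed inputs, a two-layer bottleneck MLP, and a sigmoid, after which the two inputs are blended as gate·X + (1 − gate)·Y. The weight matrices `w1` and `w2` and the function name are hypothetical stand-ins for learned parameters, not the authors' module.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def attentional_fusion(x: np.ndarray, y: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Fuse two same-sized (C, H, W) maps with a learned channel-wise gate.

    w1: (C // r, C) and w2: (C, C // r) are the bottleneck MLP weights (r = reduction).
    """
    s = (x + y).mean(axis=(1, 2))                # global average pooling -> (C,)
    gate = sigmoid(w2 @ np.maximum(w1 @ s, 0.0))  # ReLU bottleneck, values in (0, 1)
    gate = gate[:, None, None]                    # broadcast over H and W
    return gate * x + (1.0 - gate) * y
```

If the gate were fixed at 0.5 for every channel, this would reduce to (scaled) element-wise addition, the classical FPN fusion; learning the gate lets each channel weight the two adjacent levels differently.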

4. Experiments

4.1. Dataset

CapsuleDet was evaluated on the capsule defect dataset that we constructed. This dataset contains 7380 capsule images covering five common types of capsule surface defects (i.e., dent, scratch, hole, stain, and gap). Each image has a resolution of 512 × 512. The detection task is to classify and locate the corresponding defects as accurately as possible while determining whether each capsule in the image is good or bad. The dataset was annotated with a common labeling tool (LabelImg): professionals labeled the capsules and their defects with rectangular boxes, each of which contains the corresponding coordinates and category information.

The annotation information was saved as XML files in PASCAL VOC format, one XML file per image. Table 1 shows the distribution of the various types of data in our capsule dataset. We randomly divided the entire dataset into training and testing data in a ratio of 8 : 2 while avoiding an uneven distribution of the defect types, ensuring the fairness of subsequent experimental comparisons.

| Dataset | Number of images | Capsules: OK | Capsules: NG | Defects: Dent | Defects: Scratch | Defects: Hole | Defects: Stain | Defects: Gap |
|---|---|---|---|---|---|---|---|---|
| Training | 5904 | 5994 | 8006 | 1431 | 1636 | 1679 | 1573 | 1687 |
| Testing | 1476 | 2221 | 2754 | 445 | 581 | 587 | 557 | 584 |
| Total | 7380 | 8215 | 10760 | 1876 | 2217 | 2266 | 2130 | 2271 |
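The per-image PASCAL VOC annotations produced by LabelImg can be read with the Python standard library alone. The sketch below extracts the file name, the image size, and every labeled box; the sample file name and the class names `NG` and `dent` are hypothetical, since the exact label strings used in the dataset are not given.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

Annotation = Tuple[str, int, int, List[Tuple[str, int, int, int, int]]]


def parse_voc(xml_text: str) -> Annotation:
    """Return (filename, width, height, [(label, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text.strip())
    filename = root.findtext("filename")
    size = root.find("size")
    width, height = int(size.findtext("width")), int(size.findtext("height"))
    objects = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        # LabelImg writes integers, but some tools write floats, so parse via float.
        coords = [int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        objects.append((obj.findtext("name"), *coords))
    return filename, width, height, objects


SAMPLE = """
<annotation>
  <filename>capsule_0001.jpg</filename>
  <size><width>512</width><height>512</height><depth>3</depth></size>
  <object><name>NG</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>400</xmax><ymax>320</ymax></bndbox>
  </object>
  <object><name>dent</name>
    <bndbox><xmin>150</xmin><ymin>230</ymin><xmax>190</xmax><ymax>260</ymax></bndbox>
  </object>
</annotation>
"""

result = parse_voc(SAMPLE)
```

Aggregating the parsed labels over the training and testing files in this way yields class counts like those in Table 1; the image counts there (5904 and 1476 of 7380) correspond exactly to the stated 8 : 2 split.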

4.2. Evaluation Metrics

General research comparisons evaluate model performance only with various accuracy metrics. Considering the practical requirements of engineering deployment, we chose the following four aspects for a multidimensional evaluation.

4.2.1. Training Time

For deep learning-based approaches, training time determines how quickly the model can be updated: the shorter the training time, the more efficiently the model can learn new classes. Therefore, the training time of each detection model was recorded to evaluate its adaptability to the complex and changeable situations of industrial manufacturing.

4.2.2. Model Size

In practical industrial applications, the hardware cost of deploying a detection technology should be as low as possible to suit the available facilities. Because the trained model is what is transferred from the experimental phase to the industrial environment, its size affects compatibility with the application client. Thus, we used the memory occupied by the model's weight parameters after training as the basis for evaluating this metric.
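The memory occupied by the weights follows directly from the parameter count and the storage precision. The sketch below assumes 32-bit floating-point weights (4 bytes per parameter) and takes a list of parameter tensor shapes; the example shapes describe one 3 × 3 convolution with 256 input and output channels plus bias, chosen purely for illustration.

```python
import math
from typing import Iterable, Tuple


def count_parameters(shapes: Iterable[Tuple[int, ...]]) -> int:
    """Total number of scalars across all parameter tensors."""
    return sum(math.prod(shape) for shape in shapes)


def weights_size_mib(shapes: Iterable[Tuple[int, ...]], bytes_per_param: int = 4) -> float:
    """Memory of the weights in MiB (2**20 bytes), assuming FP32 by default."""
    return count_parameters(shapes) * bytes_per_param / 2**20


conv3x3_shapes = [(256, 256, 3, 3), (256,)]  # weight and bias of one 3x3 conv layer
n_params = count_parameters(conv3x3_shapes)
size_mib = weights_size_mib(conv3x3_shapes)
```

Each such layer removed from the detection head saves about 0.59 M parameters, roughly 2.25 MiB in FP32, which is why trimming the head shrinks the model noticeably.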

4.2.3. Detection Accuracy

Detection accuracy is an indispensable metric for reflecting the quality of detection results, as it directly demonstrates the effectiveness of the model on the capsule dataset. Therefore, mean average precision (mAP) was used to measure the overall detection accuracy of the model on the capsule dataset.
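mAP is the mean over classes of the average precision (AP), the area under each class's precision-recall curve. The sketch below uses the all-point interpolation adopted by PASCAL VOC since 2010 and assumes that each detection has already been marked as a true or false positive by IoU matching against the ground truth (the matching threshold, commonly 0.5, is an assumption here, as it is not stated in this section).

```python
from typing import Dict, List, Tuple


def average_precision(dets: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    dets: (score, is_true_positive) for every detection of the class; a detection is
    a true positive if it matched a not-yet-matched ground-truth box beforehand.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(dets, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then make precision non-increasing as recall grows.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the step curve: precision times each recall increment.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Unweighted mean of the per-class APs."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

Because mAP averages classes with equal weight, a weak result on a single defect type, such as faint scratches, lowers the overall score even if the other classes are detected well.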

4.2.4. Inference Speed

Detection speed is another essential factor in evaluating model performance, because the detector must keep pace with the actual production rhythm. Hence, the detection speed of each model during inference (i.e., the number of capsule images processed per second) was evaluated in frames per second (FPS).
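FPS can be measured by timing inference over a set of images after a few warm-up runs. In the sketch below, `infer` is a hypothetical placeholder for the model's per-image inference call; for a GPU model it should end with `torch.cuda.synchronize()` so that asynchronous kernels are included in the timing. For scale, 21.16 FPS corresponds to roughly 47 ms per image.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], images: Sequence[object], warmup: int = 5) -> float:
    """Images processed per second, excluding warm-up runs from the timing."""
    for img in images[:warmup]:
        infer(img)  # warm-up: lazy initialization, caching, memory allocation
    start = time.perf_counter()
    for img in images:
        infer(img)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed
```

Whether preprocessing and postprocessing (e.g., NMS) are inside `infer` changes the result, so the same boundary should be used for every detector being compared.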

4.3. Implementation Details

4.3.1. Computation Platform

The hardware configuration for the experiments is an Intel Xeon Silver 4210R @ 2.40 GHz processor and an NVIDIA GeForce RTX 2080Ti (with 11 GB memory). The software environment includes a 64-bit Windows 10 operating system, the open-source deep learning framework PyTorch, and the integrated development environment PyCharm.

4.3.2. Parameter Setting

The initial parameters of all models were taken from the corresponding backbone network weights pretrained on the ImageNet dataset to shorten the training cycles. The specific training details and hyperparameter settings are shown in Table 2. For the training scheme, we set the number of epochs to 50 and the initial learning rate to 0.001. The learning rate was adjusted via piecewise decay to ensure that each model could converge. Given the GPU memory limitations, the batch size was set to 1 for the two-stage methods so that they could be trained properly and to 8 for the remaining methods. The optimizer is stochastic gradient descent with momentum, and L2 regularization is applied to the parameters. In addition, a warmup strategy was used to keep training stable in its early phase.
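The learning-rate schedule implied by Table 2 can be sketched as a function of the epoch and the global iteration. This is an interpretation under stated assumptions: each decay factor multiplies the base learning rate directly (not cumulatively) once its epoch milestone is reached, and warmup ramps linearly from 0.1 × to 1 × the scheduled rate over the first 1000 iterations.

```python
from bisect import bisect_right

# Values from Table 2; how they combine is an assumption of this sketch.
BASE_LR = 0.001
DECAY_FACTORS = [0.5, 0.1, 0.05, 0.01]
DECAY_MILESTONES = [5, 15, 25, 40]  # epochs
WARMUP_FACTOR = 0.1
WARMUP_STEPS = 1000                 # iterations


def learning_rate(epoch: int, global_step: int) -> float:
    """Piecewise-constant decay by epoch, with linear warmup over the first iterations."""
    k = bisect_right(DECAY_MILESTONES, epoch)  # number of milestones already passed
    lr = BASE_LR if k == 0 else BASE_LR * DECAY_FACTORS[k - 1]
    if global_step < WARMUP_STEPS:
        alpha = global_step / WARMUP_STEPS
        lr *= WARMUP_FACTOR * (1 - alpha) + alpha  # ramps from 0.1x to 1x
    return lr
```

Under this reading, the rate drops to 5e-4 at epoch 5, 1e-4 at epoch 15, 5e-5 at epoch 25, and 1e-5 at epoch 40.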

| Parameter | Value |
|---|---|
| Epoch size | 50 |
| Batch size | 8 |
| Learning rate | 0.001 |
| Decay_factor | [0.5, 0.1, 0.05, 0.01] |
| Decay_milestones | [5, 15, 25, 40] |
| Warmup_factor | 0.1 |
| Warmup_steps | 1000 |
| Momentum | 0.9 |
| L2_factor | 0.0001 |
| Score_threshold | 0.025 |
| NMS_threshold | 0.6 |
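The last two entries of Table 2 govern post-processing: detections below the score threshold are discarded, and greedy non-maximum suppression (NMS) removes lower-scoring boxes that overlap a kept box by more than the IoU threshold. The per-class sketch below assumes that a score equal to the threshold is kept and that suppression applies when IoU is strictly greater than the threshold; the helper names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes: Sequence[Box], scores: Sequence[float],
                score_thr: float = 0.025, nms_thr: float = 0.6) -> List[int]:
    """Indices of detections kept for one class, highest score first."""
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= nms_thr for j in keep):
            keep.append(i)
    return keep
```

The low score threshold keeps many candidates for the precision-recall curve used by mAP, while the relatively permissive NMS threshold of 0.6 still allows adjacent defects on the same capsule to survive as separate boxes.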

4.4. Main Results

4.4.1. Comparison with Baseline

We first compared CapsuleDet with the baseline model GFL [46]. Table 3 shows the overall performance comparison between the two. CapsuleDet outperforms the baseline overall thanks to its lightweight network design and the added functional modules. CapsuleDet discards the two scale feature maps (P6 and P7) derived from the C5 feature layer and uses fewer convolution layers in the detection head, which makes the model lighter. Therefore, compared with the baseline, CapsuleDet reduces training time by nearly 0.5 h and increases the FPS during inference by approximately 3.6. To maintain accuracy, CapsuleDet introduces DCN and AF-FPN with shared convolution, improving the mAP by approximately 2.2 percentage points while avoiding an excessive increase in model parameters.

| Methods | Backbone | Training time (h) | Parameters (M) | mAP (%) | FPS (Hz) |
|---|---|---|---|---|---|
| Baseline [46] | ResNet50 | 3.76 | 186.16 | 90.67 | 17.58 |
| Our CapsuleDet | ResNet50+DCN | 3.28 | 182.64 | 92.91 | 21.16 |

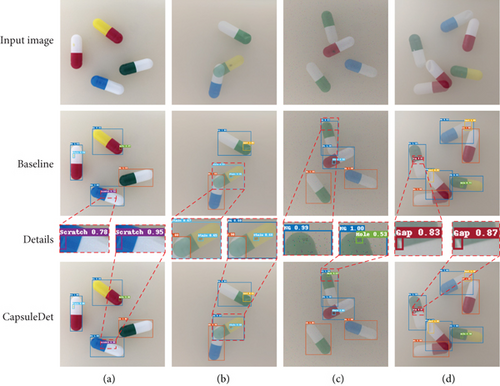

As shown in Figure 9, when the two models localize a defect similarly, CapsuleDet assigns a higher classification confidence than the baseline, and when their classification results are similar, CapsuleDet localizes more accurately. In Figure 9(b), the baseline detects the defect but misclassifies the defective capsule itself as a defect rather than as a bad capsule. Figure 9(c) shows the baseline missing minor defects. By contrast, CapsuleDet handles both cases correctly, which illustrates its effectiveness in detecting objects at different scales.

4.4.2. Comparison with Currently Popular Object Detectors

Table 4 compares the overall effectiveness of CapsuleDet with that of modern mainstream detectors, including both two-stage and one-stage methods. CapsuleDet achieves the shortest training time and the highest accuracy, and it offers the best overall balance across the evaluation metrics, as detailed below. Note that each compared method uses the backbone recommended in its original study, and all methods share the same training settings to ensure a fair comparison.

| Methods | Backbone | Training time (h) | Parameters (M) | mAP (%) | FPS (Hz) |
|---|---|---|---|---|---|
| TTFNet [49] | DarkNet53 | 4.12 | 263.35 | 79.96 | 26.57 |
| SSD [34] | VGG16 | 4.82 | 141.37 | 84.05 | 24.76 |
| Faster R-CNN [32] | ResNet50 | 13.48 | 189.76 | 88.07 | 5.45 |
| FCOS [36] | ResNet50 | 5.97 | 186.22 | 89.78 | 14.27 |
| Cascade R-CNN [33] | ResNet50 | 9.26 | 398.87 | 90.85 | 12.33 |
| YOLOv3 [35] | DarkNet53 | 4.46 | 353.94 | 91.10 | 30.15 |
| Our CapsuleDet | ResNet50+DCN | 3.28 | 182.64 | 92.91 | 21.16 |

(1) Training Time. The two-stage methods take much longer to train than the one-stage methods because they separate candidate box generation and selection from the subsequent classification and regression stages. Among the one-stage methods, the lightweight design lets CapsuleDet train 0.84 h faster than TTFNet [49], the second-fastest method. CapsuleDet also trains 1.18 h faster than YOLOv3, the second most accurate method. This efficiency suggests that CapsuleDet can be retrained quickly to identify and localize new classes of defects in complex and changing manufacturing environments.

(2) Model Size. CapsuleDet has 41 M more weight parameters than SSD, the smallest model. However, it is smaller than every other compared model, and it is only about half the size of YOLOv3, the second most accurate method (182.64 M versus 353.94 M). Our model can therefore be deployed at low cost on the same hardware and is easy to integrate on the industrial application side.

(3) Detection Accuracy. As Table 4 shows, CapsuleDet achieves the best detection accuracy, the most important detection metric. In particular, it outperforms TTFNet, the second-fastest method to train, by approximately 13 points of mAP, and SSD, the model with the fewest parameters, by roughly 8.9 points. Importantly, CapsuleDet also leads YOLOv3, the second most accurate method, by 1.8 points.

(4) Inference Speed. Introducing DCN inevitably reduces the inference speed of the model. Even so, CapsuleDet is faster than the two-stage methods of the R-CNN family, although it is nearly 9 FPS slower than YOLOv3, the second most accurate method. Its gap to the other one-stage methods is smaller (about 3.6 FPS behind SSD and 5.4 FPS behind TTFNet), and it is faster than FCOS. Moreover, its inspection speed of 21.16 FPS meets the actual capsule production rhythm.
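The pairwise gaps quoted in items (1) through (4) follow directly from Table 4. The short script below recomputes them as a consistency check. The numbers are copied from the table; the metric keys and the helper functions `gaps_vs` and `runner_up` are hypothetical names introduced only for this illustration.

```python
# Table 4 values: (training time in h, parameters in M, mAP in %, FPS)
TABLE4 = {
    "TTFNet": (4.12, 263.35, 79.96, 26.57),
    "SSD": (4.82, 141.37, 84.05, 24.76),
    "Faster R-CNN": (13.48, 189.76, 88.07, 5.45),
    "FCOS": (5.97, 186.22, 89.78, 14.27),
    "Cascade R-CNN": (9.26, 398.87, 90.85, 12.33),
    "YOLOv3": (4.46, 353.94, 91.10, 30.15),
    "CapsuleDet": (3.28, 182.64, 92.91, 21.16),
}
METRICS = ("train_h", "params_m", "map", "fps")


def gaps_vs(reference: str, other: str) -> dict:
    """Reference minus other for each metric, rounded to two decimals."""
    ref, oth = TABLE4[reference], TABLE4[other]
    return {m: round(r - o, 2) for m, r, o in zip(METRICS, ref, oth)}


def runner_up(metric: str, higher_is_better: bool) -> str:
    """Second-best method on one metric (the 'suboptimal' method in the text)."""
    i = METRICS.index(metric)
    ranked = sorted(TABLE4, key=lambda name: TABLE4[name][i], reverse=higher_is_better)
    return ranked[1]


print(runner_up("map", True), gaps_vs("CapsuleDet", "YOLOv3"))
```

Negative values mean CapsuleDet is lower on that metric; for training time and parameters, lower is better.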

4.4.3. Comparison with the Classical FPN

- (i) In terms of training time and model parameters, the shared convolution used in our AF-FPN, compared with the independent convolutions in other feature fusion modules, effectively reduces the training time and compresses the model size to a certain extent.

- (ii) In terms of detection accuracy and speed, the single top-down fusion path (i.e., FPN) makes little effective use of low-level features. Adding bottom-up augmentation paths (i.e., PAN and Bi-FPN) aggregates semantic information from the upper and lower feature layers and improves detection accuracy, but the gain is less than 1 point, and these paths also slow down inference to a certain extent.

- (iii) Overall, like the bidirectional fusion paths, AF-FPN interactively fuses the upper and lower feature layers to make full use of the feature information at all levels. In addition, we weight the fusion operation with an attention module (AFM) so that the semantic information of different feature layers is not confused during fusion. Although the AFM reduces the inference speed, this effect is relatively minor. Unlike PAN and Bi-FPN, whose gains stay below 1 point, our optimized feature pyramid module (AF-FPN) improves detection accuracy by 1.1 points over the baseline FPN.
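To illustrate what weighting a fusion step with attention means, the sketch below fuses a fine feature map with a coarser one: the coarse map is upsampled by nearest-neighbour interpolation, and per channel a softmax over the two inputs' global-average scores sets the fusion weights instead of a plain sum. This is a hypothetical, parameter-free stand-in written in pure Python; it is not the authors' AFM, which is defined earlier in the paper and presumably uses learned weights and shared convolutions.

```python
import math

Channel = list[list[float]]  # one H x W feature map
Feature = list[Channel]      # C channels


def upsample2x(ch: Channel) -> Channel:
    """Nearest-neighbour 2x upsampling of one channel."""
    out = []
    for row in ch:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def mean(ch: Channel) -> float:
    return sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))


def attention_fuse(low: Feature, high: Feature) -> Feature:
    """Fuse fine map `low` with coarse map `high` (half the resolution).

    Per channel, softmax([mean(low), mean(up(high))]) gives the two weights,
    so the more strongly activated input contributes more to the result.
    """
    fused = []
    for c_low, c_high in zip(low, high):
        c_up = upsample2x(c_high)
        s_low, s_up = mean(c_low), mean(c_up)
        m = max(s_low, s_up)  # subtract the max for numerical stability
        e_low, e_up = math.exp(s_low - m), math.exp(s_up - m)
        w_low, w_up = e_low / (e_low + e_up), e_up / (e_low + e_up)
        fused.append([[w_low * a + w_up * b for a, b in zip(ra, rb)]
                      for ra, rb in zip(c_low, c_up)])
    return fused
```

When both inputs carry equal evidence the weights reduce to 0.5 each, which corresponds to an averaged version of the plain element-wise fusion in a classical FPN.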

4.5. Ablation Experiments

4.5.1. Ablation Studies of Data Augmentation

To analyze the impact of data augmentation, we first compared a single transformation method with the hybrid data augmentation proposed in this article. Before the experiment, the training data for both schemes were augmented to the same quantity by the respective method, and the test data contain both types of augmented data to keep the comparison fair.

As shown in Table 6, the hybrid data augmentation method produces more diverse data than the single transformation method, which lets the model learn effectively from defective samples with rich semantic information without affecting its training efficiency, memory usage, or inference speed. Specifically, it yields an accuracy gain of approximately 1.2 points (from 91.67% to 92.91% mAP). Figure 10 further compares the detections of both methods on the same test image. Thanks to the greater data diversity, the hybrid method improves the localization of minor defects and the detection rate of low-contrast objects. Meanwhile, when capsules overlap, the single transformation method is limited by its lack of variety, which leads to false or missed detections of the overlapping capsules, whereas the proposed hybrid augmentation handles this case well. Expanding both the number of images and the number of objects thus demonstrates the effectiveness of the hybrid data augmentation method in helping the model learn and fit the data.

| Augmentation method | Training time (h) | Parameters (M) | mAP (%) | FPS (Hz) |
|---|---|---|---|---|
| Single | 3.28 | 182.64 | 91.67 | 21.16 |
| Hybrid | 3.28 | 182.64 | 92.91 | 21.16 |
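The hybrid augmentation itself is defined earlier in the paper and is not reproduced here. As a hypothetical stand-in showing how augmentation can raise the number of objects per image and create overlapping capsules, the sketch below chains a box-aware horizontal flip with detection-style MixUp (pixel-wise blending of two same-sized images while keeping both sets of boxes). The function names, the grayscale list-of-lists image format, and the default blending weight are assumptions.

```python
Image = list[list[float]]                      # grayscale H x W image
Box = tuple[float, float, float, float, str]   # (x1, y1, x2, y2, label)


def hflip(img: Image, boxes: list[Box]) -> tuple[Image, list[Box]]:
    """Horizontal flip that mirrors the box x-coordinates to stay aligned."""
    w = len(img[0])
    flipped = [list(reversed(row)) for row in img]
    new_boxes = [(w - x2, y1, w - x1, y2, lab) for x1, y1, x2, y2, lab in boxes]
    return flipped, new_boxes


def mixup_detection(img_a: Image, boxes_a: list[Box],
                    img_b: Image, boxes_b: list[Box],
                    lam: float = 0.5) -> tuple[Image, list[Box]]:
    """Blend two images pixel-wise and keep the boxes of both."""
    if len(img_a) != len(img_b) or len(img_a[0]) != len(img_b[0]):
        raise ValueError("images must have the same size")
    mixed = [[lam * a + (1 - lam) * b for a, b in zip(ra, rb)]
             for ra, rb in zip(img_a, img_b)]
    return mixed, list(boxes_a) + list(boxes_b)
```

A single-transformation pipeline would apply only `hflip`; composing it with `mixup_detection` yields images with more objects and more overlap, which is the kind of diversity the comparison above attributes to the hybrid scheme.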

4.5.2. Ablation Studies of Model Components

A series of ablation studies was performed to analyze the importance and contribution of each component in CapsuleDet. As shown in Table 7, the experiments incrementally add the hybrid data augmentation, deformable convolution (DCN), a feature pyramid with normal fusion (NF-FPN), and a feature pyramid with attentional fusion (AF-FPN) to CapsuleDet to observe the effect of each component.

| DCN | NF-FPN | AF-FPN | Training time (h) | Parameters (M) | mAP (%) | FPS (Hz) |
|---|---|---|---|---|---|---|
| | | | 2.58 | 170.73 | 89.75 | 23.29 |
| ✓ | | | 3.23 | 174.94 | 91.56 | 22.63 |
| ✓ | ✓ | | 3.25 | 181.78 | 92.49 | 22.24 |
| ✓ | | ✓ | 3.28 | 182.64 | 92.91 | 21.16 |

Several valuable conclusions can be drawn from Table 7. (i) The gains from the individual components are complementary rather than conflicting: the mAP rises with each added module, showing that every module helps reduce the difficulty of detecting capsule defects. (ii) Although the lightweight operation degrades the performance of the baseline model, introducing deformable convolution greatly improves the detection of multiscale defects, raising accuracy by approximately 1.8 points over the lightweight model, which justifies the increased parameters and computation. Compared with normal fusion, the feature pyramid with attention further improves detection accuracy (from 92.49% to 92.91% mAP) by strengthening the association between features. (iii) In automatic defect detection, the real-time performance of the detector is also an important metric. The last column of Table 7 compares the inference speeds under different configurations. Although each added module prolongs inference, the full CapsuleDet loses at most 2.13 FPS relative to the incomplete models and still meets the time requirements of the task. Furthermore, compared with the currently popular detectors and the baseline model, the increases in training time and model memory usage of CapsuleDet remain within acceptable limits.
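The per-component effects discussed in (ii) and (iii) can be read off Table 7 as differences between rows. The sketch below makes those differences explicit; the row labels and the `delta` helper are hypothetical names, and the numbers are copied from the table. Note that the last row replaces NF-FPN with AF-FPN, so the AF-FPN effect is measured against the NF-FPN row rather than added on top of it.

```python
# Table 7 rows: (configuration, mAP in %, FPS)
ABLATION = [
    ("lightweight base", 89.75, 23.29),
    ("+ DCN", 91.56, 22.63),
    ("+ DCN + NF-FPN", 92.49, 22.24),
    ("+ DCN + AF-FPN", 92.91, 21.16),
]


def delta(a: str, b: str) -> tuple[float, float]:
    """(mAP gain, FPS change) when moving from configuration a to b."""
    rows = {name: (m, f) for name, m, f in ABLATION}
    (m_a, f_a), (m_b, f_b) = rows[a], rows[b]
    return round(m_b - m_a, 2), round(f_b - f_a, 2)


print(delta("lightweight base", "+ DCN"))            # DCN contribution
print(delta("+ DCN + NF-FPN", "+ DCN + AF-FPN"))     # attention vs. normal fusion
print(delta("lightweight base", "+ DCN + AF-FPN"))   # full model vs. base
```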

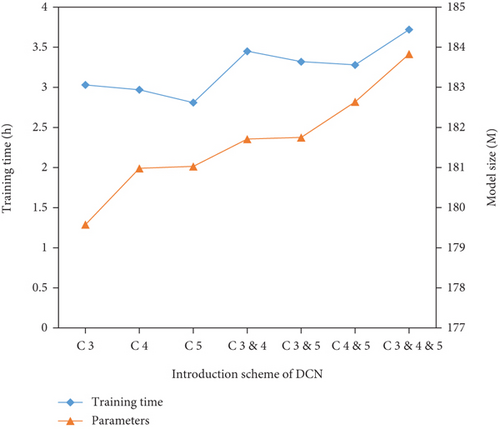

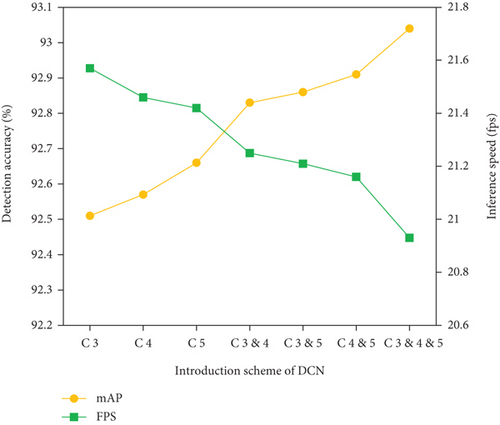

4.5.3. Ablation Studies of DCN

As mentioned earlier, DCN increases accuracy but inevitably affects other aspects of model performance. To find a good placement for DCN in the ResNet50 backbone, we experimented with combinations of the three feature layers {C3, C4, C5} and looked for the configuration that best balances CapsuleDet's performance across metrics. All other training parameters and model settings were kept unchanged; only the layers into which DCN is introduced differ. Figure 11 illustrates the effect of introducing DCN at different stages on CapsuleDet.

Figure 11(a) shows that the training time and memory usage of the model gradually increase as DCN is introduced into more feature layers. Configurations whose DCN layers include C5 train faster than those that exclude it. In addition, each additional DCN stage increases memory usage by less than 2 M, which is negligible compared with the model sizes of most currently popular detectors. Figure 11(b) shows that additional DCNs increase accuracy but correspondingly slow down inference. Although the accuracy gain and the speed loss follow similar trends, the loss of less than 0.6 FPS (i.e., roughly 0.6 fewer capsule images processed per second) is minor compared with the accuracy gain. Therefore, introducing DCN in the feature layers {C4, C5} is a good choice for the CapsuleDet training configuration, obtaining as high a detection accuracy as possible while also accounting for training time. All preceding experiments used this configuration.
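To make the cost discussed above concrete, the following sketch evaluates one output value of a 3×3 deformable convolution in its basic (DCNv1) form, y(p0) = Σ_k w_k · x(p0 + p_k + Δp_k), where p_k runs over the regular 3×3 grid and the fractional sample positions are read with bilinear interpolation. It is a single-channel illustration with offsets supplied by hand rather than predicted by a learned offset branch, and it is not the DCN implementation used in CapsuleDet.

```python
import math


def bilinear(x: list[list[float]], py: float, px: float) -> float:
    """Bilinearly sample map x at fractional (py, px); zero outside the map."""
    h, w = len(x), len(x[0])
    y0, x0 = math.floor(py), math.floor(px)
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(py - yy)) * (1 - abs(px - xx))
                val += weight * x[yy][xx]
    return val


def deform_conv_at(x: list[list[float]], weights: list[float],
                   offsets: list[tuple[float, float]], p0: tuple[int, int]) -> float:
    """One output of a 3x3 deformable convolution at integer position p0.

    weights: 9 kernel weights in row-major order.
    offsets: 9 (dy, dx) offsets, one per kernel tap (learned in a real DCN).
    """
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    y0, x0 = p0
    out = 0.0
    for k, (dy, dx) in enumerate(grid):
        oy, ox = offsets[k]
        out += weights[k] * bilinear(x, y0 + dy + oy, x0 + dx + ox)
    return out
```

With all offsets set to zero this reduces to an ordinary 3×3 convolution; with nonzero offsets each tap needs up to four reads plus interpolation, and a real DCN also runs an extra convolution to predict the offsets, which helps explain why placing DCN in more stages lowers FPS.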

4.6. Further Analysis and Discussion

The experimental results above show that the proposed method delivers a more balanced and comprehensive performance across multiple metrics. Its lead rests on three factors: diverse training data, adaptation to different defect shapes, and fusion of multiscale features. Hybrid data augmentation ensures diversity during learning and lays the foundation for detecting defects on multiple objects. Deformable convolution strengthens the model's ability to adapt to different defect shapes and improves its detection of multiple defect types. The multiscale fusion mechanism enriches the semantic information at each stage, enabling the network to adapt to different defect scales. Together, these are the strengths of the proposed method over the compared methods.

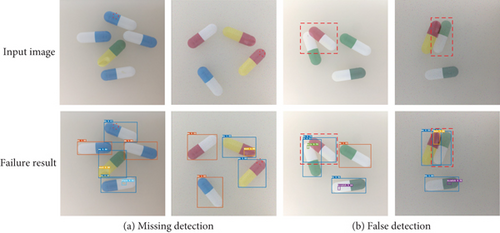

However, our method still fails on some difficult samples. As Figure 12(a) shows, it misses some local defects, which can happen with low-contrast defects under weak illumination or noise interference. Although the model correctly classifies the capsule as a whole as unqualified, the network struggles to locate the specific defect. This is mainly because, as the network extracts progressively deeper features, the location information of defects affected by lighting or noise is gradually lost, which degrades detection. In addition, the model may produce false detections, as shown in Figure 12(b). When a defective capsule overlaps a qualified one and the junction lies close to the defect, the network may regard the qualified capsule as defective, which interferes with discrimination. Neither the missed nor the false detections were anticipated. We attribute both problems mainly to the oversimplified detection head, which makes the network too sensitive to complex interference. The robustness of the model could be further improved by strengthening how the detection head preserves and distinguishes location and semantic information, which we will investigate in future work.

5. Conclusions

Motivated by the practical deployment needs of engineering applications, this study proposed a deep learning-based method for detecting surface defects in pharmaceutical capsules. Through efficient processing of the raw data (quality enhancement and quantity augmentation) and a carefully designed detection architecture (lightweight operations and added functional modules), CapsuleDet achieves better overall performance than the baseline model and existing popular detectors. Beyond its strong recognition and localization capabilities, it trains quickly and has a moderate model size. Whereas methods in existing related research recognize few defect types and cannot locate defects, our method detects multiple classes of capsule defects while discriminating between good and bad capsules. It can thus help pharmaceutical enterprises improve the appearance quality of their products and their market competitiveness.

Deep learning requires large amounts of training data to keep improving performance, which greatly increases the cost of collecting industrial data and of manual annotation. Defect detection with limited data, or automated sample annotation, will be one direction of our future research. We also plan to apply image semantic segmentation to capsule defects to characterize defect shapes at the pixel level.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Acknowledgments

This work was supported in part by the National Key Research and Development Program of China (No. 2020YFB1713304), the National Natural Science Foundation of China under Grant 62166005, the Key Laboratory of Ministry of Education Project (No. QKHKY (2020) 245), and in part by the Guizhou University Research Projects under Grant [2019]22 and Grant [2020]16.

Open Research

Data Availability

Code and dataset have been made available at https://github.com/D-ai-Y/CapsuleDet.