[Retracted] Dancer Tracking Algorithm in Ethnic Areas Based on Multifeature Fusion Neural Network

Abstract

Because dance movements involve complex posture changes, dancers must be detected and tracked accurately if the guidance of dancers in ethnic areas is to be improved. This paper proposes a tracking algorithm for dancers in ethnic areas based on multifeature fusion. The edge contour model of video images of dancers in ethnic areas is detected, and a video tracking scanning imaging model of these dancers is constructed. The video images are enhanced according to the initial contour distribution, and a visual perception model of the dancer tracking images is established. To improve the algorithm's estimation of complex poses and ultimately recognize dance movements, a feature pyramid network is used to extract features of dance movements, and a multifeature fusion module then fuses these features. Compared with the other algorithms evaluated, the proposed tracking algorithm is more robust and produces fewer erroneous samples during tracking, which improves the accuracy of long-term tracking.

1. Introduction

Dance in ethnic areas is a treasure of traditional art in our country. Passing this dance on requires that dance practice in ethnic areas be optimized and guided [1]. With the rise of artificial intelligence, computer vision technology has developed rapidly in recent years, and visual object tracking, an important part of computer vision, has received extensive attention and in-depth study. Computer vision [2] plays an indispensable role in many practical applications. The eyes are humans' primary tool for perceiving the external world: through vision, people perceive and understand information such as the location, size, color, and type of external objects [3]. Computer vision collects image information through sensors and extracts the information contained in images through processing, detection, recognition, and understanding, thereby perceiving the external world. Visual target tracking has advanced rapidly; with simple backgrounds, few interfering objects, or constrained settings, tracking performance already meets practical needs [4]. In practical applications, however, many challenges remain: illumination changes, deformation, occlusion, background clutter, and similar factors strongly affect tracking performance. Current optimization algorithms address only one or a few of these factors and cannot fully adapt to complex scenarios, and the limitations of any single feature make it difficult for a tracker to cope with the many disturbances that arise during tracking. Accurate and robust long-term tracking of dancers therefore still has a long way to go [5].

Target tracking is an important task in computer vision. Its main purpose is to locate a moving target in each frame of a video sequence and obtain information such as the target's position, direction of motion, speed, and size, which forms the basis for higher-level analysis of the target [6]. Video tracking draws on many disciplines, such as computer science, mathematics, physics, optics, and morphology, and integrates many technologies, such as image processing, video feature extraction, pattern recognition, artificial intelligence, information analysis, and the detection, recognition, and tracking of target motion; it has important significance and application prospects in both civil and military domains [7]. Human detection and tracking, which can automatically identify and track dancers, has long been an active research direction in computer vision [8]. Stage applications, however, place higher demands on human detection and tracking because the stage environment is complex and dynamic: the dancers' movements must be followed consistently and in real time, or tracking performance degrades. Continuous improvements in computer performance and extensive research on computer vision algorithms provide the hardware and software conditions that target tracking requires [9]. Currently, the most common approaches to feature selection and understanding are representation fusion and gesture-based features. However, because stage backgrounds and dance costumes vary, and occlusion and self-occlusion are common during performances, representation information fusion cannot accurately and completely express human motion information in most cases [10].
Studying tracking technology for ethnic dancers, analyzing their movement characteristics, and enabling experts to guide and evaluate them through feature extraction can make training more targeted and improve the standardized correction of movements. This paper proposes a multifeature fusion-based method for tracking dancers in ethnic areas.

Although target tracking has long been applied in many fields, changes in the target's environment and in the target's own state still affect tracking performance [11]. Finding a reliable, adaptable, and efficient tracking algorithm has therefore remained an active research topic [12]. With advances in image processing, video surveillance and visual image analysis are used to reconstruct images of dancers in ethnic areas and identify their features. Based on the results of this analysis, dancers' movements can be corrected and standardized, improving training and optimization guidance for dances in ethnic areas [13]. This paper proposes a multifeature fusion method for tracking dancers in ethnic areas. Video image samples of dancers in ethnic areas are analyzed, and an outline model of these images is constructed. By connecting high- and low-resolution feature maps, deep feature maps carrying global information are fused with shallow features, so that the high-resolution feature map at each stage also incorporates the low-resolution representation. To verify the effectiveness and feasibility of the proposed method, a basic dance data set is established by recording dance videos from front and side angles while synchronously collecting the Wi-Fi signal information corresponding to the movements. A comparative experiment is then designed on this data set; the results show that the multifeature fusion method achieves higher tracking accuracy than the other methods.

2. Related Work

Reference [14] proposed a moving-body tracking algorithm based on region-segmentation contours, which performs more accurately and stably in complex occlusion environments. Reference [15] proposed a human target tracking algorithm based on multitemplate regression-weighted mean shift, in which a set of target template contours is constructed by varying the pose and angle of the target body, giving better real-time target detection [16]. In the multiple-instance learning approach, multiple samples are placed in a sample bag, each bag carries a single label, and the target is tracked by learning from the bags online. Reference [17] proposed an eye tracking and localization algorithm based on AdaBoost-STC and random forests. Reference [18] proposed a real-time AdaBoost cascade face tracker based on likelihood maps and optical flow. The algorithm in reference [19] exploits the properties of circulant matrices to densely sample the region around the target, obtaining a large number of positive and negative samples, and constructs the target classifier using ridge regression and correlation filtering. Because circulant matrices are diagonalized in Fourier space, matrix calculations are simplified, which speeds up model training and detection. In addition, a kernel technique that maps ridge regression from linear to nonlinear space is introduced, which improves tracking performance. Reference [20] added a detection module to the tracking algorithm and computed a weighted average of the detection and tracking results to obtain the target position. The model is updated online by a learning module, and long-term tracking is achieved because the detection module corrects the errors of the tracking module.
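To make the circulant-matrix argument concrete, the following minimal sketch solves ridge regression over all cyclic shifts of a 1-D sample in closed form in the Fourier domain. It assumes a single channel, a linear kernel (no kernel trick), and a naive DFT so that it stays self-contained; the function names `train_filter` and `detect` are hypothetical and are not taken from [19].

```python
import cmath
import math


def dft(v):
    """Naive O(n^2) discrete Fourier transform; enough for a toy example."""
    n = len(v)
    return [sum(v[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(v):
    """Inverse DFT; the real part is kept because the signals here are real."""
    n = len(v)
    return [(sum(v[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def train_filter(x, y, lam=1e-3):
    """Ridge regression over every cyclic shift of x, solved per frequency.

    The shifted copies of x form a circulant data matrix, which the DFT
    diagonalizes, so the solution is G = conj(X) * Y / (conj(X) * X + lam).
    """
    X, Y = dft(x), dft(y)
    return [xk.conjugate() * yk / (xk.conjugate() * xk + lam) for xk, yk in zip(X, Y)]


def detect(G, z):
    """Filter response at every cyclic shift of a new sample z."""
    Z = dft(z)
    return idft([g * zk for g, zk in zip(G, Z)])


n = 16
x = [0.5 ** i for i in range(n)]                          # toy 1-D target signal
y = [math.exp(-((i - 4) ** 2) / 2.0) for i in range(n)]  # Gaussian label peaked at 4
G = train_filter(x, y)
z = x[-3:] + x[:-3]                                       # target shifted by 3 samples
response = detect(G, z)
print(max(range(n), key=response.__getitem__))            # prints 7
```

Because the response to a cyclically shifted sample is the same shift of the training response, the peak moves from index 4 to index 7. A practical tracker would use an FFT, 2-D patches, a cosine window, and multichannel features.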
In reference [21], an online structured-output SVM directly predicts the change in the target's position in each frame to obtain the tracking result, instead of classifying target and background in the traditional way. The algorithm in [22] also considers the area around the target during tracking and reduces the risk of failure caused by occlusion by constructing a spatial context model and a spatiotemporal context model. Reference [23] addresses the shortage of target tracking samples by applying the powerful object representation learned on a deep learning image classification data set to the tracking problem. Reference [24] trains a CNN [25] directly on tracking videos to obtain a general target representation. Because the target itself, its motion pattern, lighting, and other conditions differ across tracking sequences, a multidomain network composed of shared layers and branch layers is designed: the shared layers learn general features of tracking targets, while each branch layer is specific to one tracking sequence.

Based on a thorough review of the literature, this paper proposes a tracking algorithm for dancers in ethnic areas based on multifeature fusion. To make the feature weights adaptive, the reliability of each feature is assessed before fusion, and the weights are biased toward features with strong representation ability. A regional pixel feature reconstruction method is used to perform three-dimensional information reconstruction, contour detection, and pixel tracking on images of dancers in ethnic areas, which improves pixel-level tracking and identification of these dancers. The experimental results show that the proposed method outperforms the other methods in tracking and identifying dancers in ethnic areas.

3. Methodology

3.1. Target Tracking System and Related Algorithms

Vision is essential for obtaining many kinds of information, improving the quality of human life, and increasing work efficiency; machine vision emerged in response to these needs and can be seen as an extension of human vision and perception. With advances in science, technology, and electronic equipment, people demand increasingly comprehensive, accurate, and real-time information. Electronic devices are gradually replacing the human eye for acquiring information, and the need to use higher-performance computers to provide more effective video information services has become more urgent, which has strongly promoted research and development in machine vision. In recent years, to evaluate the tracking performance of different algorithms uniformly, many researchers and communities have established standard databases and evaluation criteria and have used them to evaluate and analyze a large number of target tracking algorithms.

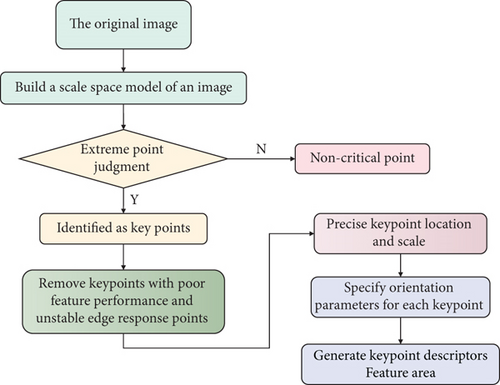

Early target tracking often used background difference, frame difference, and optical flow methods. The interframe difference method obtains the position of a moving target by computing the difference between two or more frames. The background difference method obtains the target by computing the difference between the current frame and a prebuilt background model image; when the background changes, the background area must be re-extracted continuously to update the background model [26]. The optical flow method estimates the motion velocity of image pixels by matching and detects moving objects from the difference between the velocities of background pixels and target pixels; however, it requires a large amount of computation, and optical flow estimation is easily affected by illumination changes and other interference. The most common motion capture system is the optical motion capture system. Its basic principle is that the athlete wears photosensitive markers on each limb, so that three-dimensional information captured by cameras installed around the capture area can be used to reconstruct the athlete's movement [27]. There are two types of markers: active and passive. Active markers emit light, whereas passive markers are coated with special materials that make them appear extremely bright when illuminated. A complete system requires multiple cameras working together. Object tracking is one of the most basic technologies in computer vision; its goal is to find and follow moving objects in a video sequence and output the tracking results. Figure 1 depicts the target tracking process.
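The two difference methods can be sketched on small grayscale frames stored as nested lists. This is a minimal illustration rather than the method of [26]: the threshold and the running-average background update with rate `alpha` are assumptions chosen for clarity.

```python
def frame_difference(prev, curr, thresh):
    """Interframe difference: 1 where the gray level changed by more than thresh."""
    return [[1 if abs(c - p) > thresh else 0 for p, c in zip(row_p, row_c)]
            for row_p, row_c in zip(prev, curr)]


def background_difference(background, frame, thresh):
    """Background difference: 1 where the frame departs from the background model."""
    return frame_difference(background, frame, thresh)


def update_background(background, frame, alpha=0.05):
    """Assumed running-average model: bg <- (1 - alpha) * bg + alpha * frame."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(row_b, row_f)]
            for row_b, row_f in zip(background, frame)]


background = [[10.0] * 3 for _ in range(3)]
frame = [[10, 10, 10], [10, 200, 10], [10, 10, 10]]
print(background_difference(background, frame, thresh=30))  # [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
background = update_background(background, frame)            # absorb slow scene changes
```

In practice the update is often restricted to pixels labeled as background, so that a target that stops moving is not absorbed into the model too quickly.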

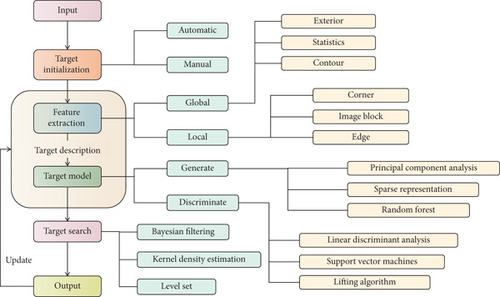

Theoretical methods from machine learning have also been widely introduced into object tracking. A mechanical motion capture system consists of multiple joints and rigid links; it captures the movement of each joint at every moment through angle sensors installed at the joints of the human body. Compared with optical capture systems, it is therefore less susceptible to environmental interference and more stable, but it is somewhat bulky, and the links considerably restrict the subject's movement. The target tracking process is divided into five stages: (1) target initialization, (2) feature extraction, (3) target model construction, (4) target search, and (5) target model update. The tracking task can be summarized simply: given the target position in the first frame of a video sequence, estimate the target position in subsequent frames [28]. Tracker design generally considers feature extraction, target model construction, and model updating, and a complete tracking system is built by combining these components appropriately.
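The five stages can be mapped onto a deliberately simple template tracker. This sketch assumes raw gray levels as the feature, a sum-of-squared-differences (SSD) search in a small window, and a linear-interpolation model update with a hypothetical rate `eta`; it is not the algorithm proposed in this paper.

```python
def extract_patch(frame, top, left, h, w):
    """Stage 2 (feature extraction): here simply the raw gray levels in the box."""
    return [row[left:left + w] for row in frame[top:top + h]]


def ssd(a, b):
    """Sum of squared differences between two equally sized patches."""
    return sum((p - q) ** 2 for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))


def track(frames, box, radius=2, eta=0.1):
    """Toy template tracker; returns the (top, left) corner estimated in every frame."""
    top, left, h, w = box                                 # stage 1: initialization
    model = extract_patch(frames[0], top, left, h, w)     # stages 2-3: target model
    path = [(top, left)]
    for frame in frames[1:]:
        best = None
        for dy in range(-radius, radius + 1):             # stage 4: local search
            for dx in range(-radius, radius + 1):
                cand_top, cand_left = top + dy, left + dx
                if 0 <= cand_top <= len(frame) - h and 0 <= cand_left <= len(frame[0]) - w:
                    cost = ssd(model, extract_patch(frame, cand_top, cand_left, h, w))
                    if best is None or cost < best[0]:
                        best = (cost, cand_top, cand_left)
        _, top, left = best
        patch = extract_patch(frame, top, left, h, w)
        model = [[(1 - eta) * m + eta * p for m, p in zip(row_m, row_p)]  # stage 5: update
                 for row_m, row_p in zip(model, patch)]
        path.append((top, left))
    return path


def make_frame(top, left, size=8):
    """Black frame with a bright 2x2 block whose corner is at (top, left)."""
    frame = [[0] * size for _ in range(size)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            frame[r][c] = 255
    return frame


frames = [make_frame(3, 1 + k) for k in range(4)]   # block moves one pixel right per frame
print(track(frames, (3, 1, 2, 2)))                   # [(3, 1), (3, 2), (3, 3), (3, 4)]
```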

To obtain accurate motion data, after the subject's motion is captured, the trajectories of the key joints and skeleton must generally be extracted and used to model the motion. Organizing and representing this joint information properly is also crucial for subsequent processing of the motion data [29]. Different features have different strengths and weaknesses. While a video sequence is being tracked, the target is often affected by environmental factors such as illumination, rotation, occlusion, and background clutter, so a tracker that relies on a single feature can hardly adapt to all of these disturbances. Tracking the target with several features at once exploits their respective advantages: when one feature fails in a particular environment, the others can compensate, and this complementarity helps the tracker adapt to the varied conditions encountered during tracking. The feature extraction algorithm flow is shown in Figure 2.

Many effective target tracking algorithms exist, and they fall mainly into three categories: generative, discriminative, and deep learning-based. Nevertheless, target tracking still faces many challenges. In real tracking scenarios the target is affected by illumination change, deformation, background clutter, scale change, occlusion, and other factors, so a single tracker is easily disturbed by changes in these environmental factors [30]. Besides representing the target appearance model with a single feature, many tracking algorithms integrate multiple features to describe the target from several perspectives. However, most current fusion strategies either concatenate different features directly or linearly interpolate the tracking results of different features with a preset parameter, and thus do not fully exploit the benefits of each feature. In addition, the model must be updated in real time to adapt to changes in the target's environment during tracking, and the traditional practice of updating the weights at a fixed frame interval risks introducing noisy data and causing tracking drift.

3.2. Multifeature Fusion of Dance Personnel Tracking in Ethnic Regions

In image tracking frameworks based on feature search, a core problem is selecting the features of the tracked target, and constructing a stable and effective feature template has become a hot research issue. To track and identify dancers in ethnic areas, a video tracking scanning imaging model of the dancers must be built and the edge contour model of their video images detected. Video image tracking is used to analyze the dancers' detailed characteristics, and the results of movement feature extraction are used to guide dance training and teaching in ethnic areas. With monocular vision, the human body is harder to detect when it moves continuously, that is, when it rotates, translates, and stretches. A human body shape model based on statistical learning is therefore adopted for human detection.

A single feature can yield good tracking results in a specific environment, but in complex real-world scenarios, lighting, the target's motion state, its pose, and its scale change constantly, so algorithms based on a single feature often adapt poorly. If several kinds of feature information are used, and their weights are adjusted according to how well each feature expresses the target in the current environment so that their strengths complement each other, the tracker can better adapt to changes in scene and lighting and achieve better performance. The good results of multifeature fusion tracking algorithms show that the complementarity between features characterizes the target more effectively and can significantly improve tracking performance, particularly under deformation, motion blur, interference from similar background objects, and illumination changes, substantially reducing tracking failures.

Extracting effective features from the target image is essential: it is the basis of fast and effective tracking and the main content learned from samples during training. Common image features include color, texture, and edge features. Using video feature analysis and image acquisition technology, dynamic video samples of dancers in ethnic areas are collected, the dancers' movements are tracked and identified with cameras and image sensors, and the features of the video frames are analyzed quantitatively. Using machine vision reconstruction, features of the dancers are extracted, and their position and posture are tracked according to the extraction results. The residual module generally consists of two branches. The first branch consists mainly of two 1 × 1 convolution layers and one 3 × 3 convolution layer, which increase depth and extract features. Unlike in the conventional residual module, the second branch controls the number of channels so that the input and output channel counts match. Because the residual module is parameterized only by its numbers of input and output channels, it can operate on images of any scale.
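The channel bookkeeping of the two-branch residual module can be checked with a little arithmetic. This sketch assumes the order 1 × 1, 3 × 3, 1 × 1 on the first branch, a bottleneck width of half the output channels, stride 1 with "same" padding, biases on every convolution, no batch normalization, and a 1 × 1 projection on the second branch only when the channel counts differ; none of these details is given in the text.

```python
def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out


def conv_out_size(size, k):
    """Output length of a stride-1 convolution with 'same' padding (k - 1) // 2."""
    pad = (k - 1) // 2
    return size + 2 * pad - k + 1


def residual_module(c_in, c_out, height, width):
    """Parameter count and output shape of the assumed two-branch residual module."""
    mid = c_out // 2                                   # assumed bottleneck width
    # First branch: 1x1 (reduce) -> 3x3 (features) -> 1x1 (expand to c_out).
    branch1 = conv_params(c_in, mid, 1) + conv_params(mid, mid, 3) + conv_params(mid, c_out, 1)
    # Second branch: identity if channel counts match, else a 1x1 projection.
    branch2 = 0 if c_in == c_out else conv_params(c_in, c_out, 1)
    h, w = height, width
    for k in (1, 3, 1):
        h, w = conv_out_size(h, k), conv_out_size(w, k)
    return branch1 + branch2, (c_out, h, w)


print(residual_module(64, 128, 37, 53))    # (57728, (128, 37, 53))
print(residual_module(128, 128, 16, 16))   # (53504, (128, 16, 16))
```

Because every convolution preserves height and width, the output has the same spatial size as the input for any input size, which is what lets the module run at every scale.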

Texture quantifies the smoothness, roughness, and regularity of the target surface and measures changes in brightness; extracting texture requires a series of processing steps. Dance involves complex, large-scale changes, so tracking and identifying dance gestures requires a deep learning model that captures the information needed at every scale during feature extraction. A person's orientation, the arrangement of the limbs, and the relationships between adjacent joints must be inferred from global context, while local information allows each joint to be located precisely.
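One common way to quantify smoothness, roughness, and regularity is through statistics of a gray-level co-occurrence matrix (GLCM). This is an assumed illustration, since the paper does not state which texture descriptor it uses; the image must already be quantized to integer levels `0 .. levels - 1`.

```python
def glcm(img, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for the pixel offset (dy, dx)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[img[r][c]][img[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]


def texture_stats(p):
    """Contrast (roughness), homogeneity (smoothness), and energy (regularity)."""
    n = len(p)
    pairs = [(i, j) for i in range(n) for j in range(n)]
    contrast = sum(p[i][j] * (i - j) ** 2 for i, j in pairs)
    homogeneity = sum(p[i][j] / (1 + (i - j) ** 2) for i, j in pairs)
    energy = sum(p[i][j] ** 2 for i, j in pairs)
    return contrast, homogeneity, energy


flat = [[1, 1, 1], [1, 1, 1]]
stripes = [[0, 1, 0, 1], [0, 1, 0, 1]]
print(texture_stats(glcm(flat, levels=2)))     # (0.0, 1.0, 1.0)
print(texture_stats(glcm(stripes, levels=2)))  # high contrast, lower homogeneity
```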

In this paper, the mini-batch size is set to m = 10. A multiclass loss function is applied to each mini-batch, and the network parameters are updated continually by back-propagation; optimization stops when the loss function no longer changes.
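The training procedure (mini-batches of m = 10, a multiclass loss, gradient updates, and stopping once the loss stops changing) is sketched below with a single linear softmax layer standing in for the network, so back-propagation reduces to the softmax cross-entropy gradient. The learning rate, tolerance, epoch cap, and toy data are assumptions.

```python
import math


def softmax(scores):
    top = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(W, b, x):
    return softmax([sum(wi * xi for wi, xi in zip(w, x)) + bk for w, bk in zip(W, b)])


def mean_loss(W, b, data):
    """Multiclass cross-entropy averaged over the whole data set."""
    return -sum(math.log(predict_proba(W, b, x)[y]) for x, y in data) / len(data)


def train(data, n_classes, batch_size=10, lr=0.5, tol=1e-5, max_epochs=1000):
    dim = len(data[0][0])
    W = [[0.0] * dim for _ in range(n_classes)]
    b = [0.0] * n_classes
    prev = mean_loss(W, b, data)
    for epoch in range(1, max_epochs + 1):
        for start in range(0, len(data), batch_size):       # mini-batches of m samples
            batch = data[start:start + batch_size]
            gW = [[0.0] * dim for _ in range(n_classes)]
            gb = [0.0] * n_classes
            for x, y in batch:
                p = predict_proba(W, b, x)
                for k in range(n_classes):
                    err = (p[k] - (1.0 if k == y else 0.0)) / len(batch)  # dLoss/dscore_k
                    gb[k] += err
                    for d in range(dim):
                        gW[k][d] += err * x[d]
            for k in range(n_classes):                       # gradient-descent step
                b[k] -= lr * gb[k]
                for d in range(dim):
                    W[k][d] -= lr * gW[k][d]
        loss = mean_loss(W, b, data)
        if abs(prev - loss) < tol:                           # loss no longer changing
            break
        prev = loss
    return W, b, loss, epoch


centers = [(2.0, 0.0), (0.0, 2.0), (-2.0, -2.0)]
offsets = [(-0.5, -0.5), (-0.5, 0.5), (0.0, 0.0), (0.5, -0.5), (0.5, 0.5)]
data = [((cx + ox, cy + oy), k) for ox, oy in offsets for k, (cx, cy) in enumerate(centers)]
W, b, loss, epochs = train(data, n_classes=3)
print(round(loss, 4), epochs)
```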

In the correlation filtering tracking framework, a separate filter model is constructed for each feature channel during tracking, and the correlation response of each channel is computed. Fusion weights for the channel responses are then computed from a reliability analysis of the tracking results, and the channel response maps are fused with these weights (the first fusion), so that each feature yields one channel-fused response map. The color feature, one of the most widely used features, has clear advantages: it is well suited to describing deformed and partially occluded targets and remains stable under changes in target shape, in-plane rotation, and nonrigid motion. However, it is sensitive to illumination changes and does not retain the spatial arrangement of target pixels. In the probabilistic tracking framework, the color histograms of candidate areas are computed, and the probability response of the target color is calculated. The correlation filter response obtained by the second fusion is then combined with this color response: weights are again obtained from a reliability analysis of each response map, and the correlation filter response and the color probability response are fused with these weights to produce the algorithm's final target response.
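The color probability response can be illustrated with per-bin target probabilities computed from foreground and background color histograms. In this sketch the number of bins per channel, the value 0.5 for colors seen in neither region, and the helper names are assumptions, not details given in the paper.

```python
BINS = 4  # assumed number of bins per RGB channel (4**3 = 64 joint bins)


def color_bin(pixel):
    """Map an (r, g, b) pixel with 0-255 channels to a joint histogram bin."""
    step = 256 // BINS
    r, g, b = (channel // step for channel in pixel)
    return (r * BINS + g) * BINS + b


def normalized_histogram(pixels):
    hist = [0.0] * BINS ** 3
    for px in pixels:
        hist[color_bin(px)] += 1
    return [h / len(pixels) for h in hist]


def color_probability_map(image, fg_pixels, bg_pixels):
    """Per-pixel probability that a pixel's color belongs to the target."""
    fg, bg = normalized_histogram(fg_pixels), normalized_histogram(bg_pixels)

    def prob(px):
        k = color_bin(px)
        denom = fg[k] + bg[k]
        return fg[k] / denom if denom > 0 else 0.5  # unseen color: uninformative

    return [[prob(px) for px in row] for row in image]


def window_score(prob_map, top, left, h, w):
    """Color response of a candidate box: mean target probability inside it."""
    vals = [v for row in prob_map[top:top + h] for v in row[left:left + w]]
    return sum(vals) / len(vals)


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
prob_map = color_probability_map([[RED, GREEN], [BLUE, RED]],
                                 fg_pixels=[RED] * 20, bg_pixels=[GREEN] * 30)
print(prob_map)                              # [[1.0, 0.0], [0.5, 1.0]]
print(window_score(prob_map, 0, 0, 2, 2))    # 0.625
```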

4. Result Analysis and Discussion

This section presents tracking simulations of dancers in ethnic areas to verify how much the proposed multifeature fusion method improves target tracking. Complex key points are estimated with the multifeature fusion module. The residual module strengthens feature extraction at each layer, yielding richer semantic features, and upsampling raises the feature resolution at each stage to obtain better local features. The effectiveness of the proposed algorithm is demonstrated by comparing it with several well-known target tracking algorithms on a standard data set under a unified evaluation standard.
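The upsampling-and-fusion step can be sketched as a feature-pyramid-style top-down merge: the deep, low-resolution map is upsampled by nearest-neighbor interpolation and added element-wise to the shallow, high-resolution map. Single-channel maps, a factor of 2, and the omission of the usual 1 × 1 lateral convolution are simplifying assumptions.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a single-channel 2-D feature map."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fuse_top_down(deep, shallow):
    """Upsample the deep (low-resolution) map and add it to the shallow one."""
    up = upsample2x(deep)
    return [[a + b for a, b in zip(row_up, row_sh)] for row_up, row_sh in zip(up, shallow)]


deep = [[1, 2]]                          # 1x2 map carrying global context
shallow = [[0, 1, 0, 1], [1, 0, 1, 0]]   # 2x4 map carrying local detail
print(fuse_top_down(deep, shallow))      # [[1, 2, 2, 3], [2, 1, 3, 2]]
```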

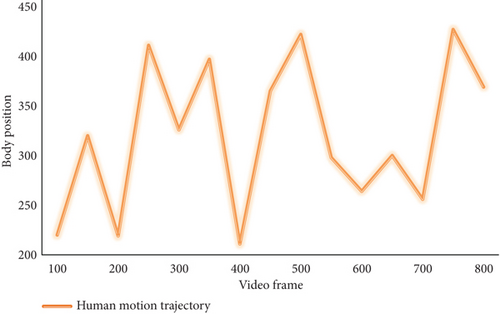

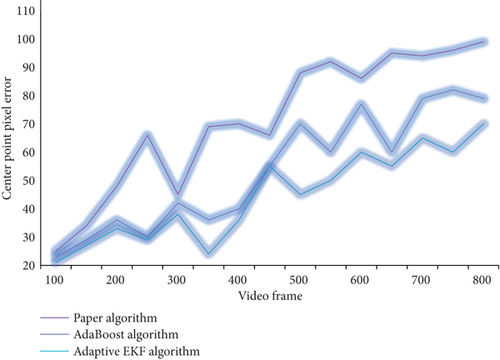

A simulation experiment is carried out to analyze and verify the proposed human tracking algorithm. An ethnic dance video containing 800 frames is selected from the data set as sample data. At the beginning of the video the dancer holds a long preparatory pose, and at the end the dancer returns from the last posture to the initial state, which causes the joint distances to change gently at the end positions and then drastically. Precision and success rate are computed with a one-pass evaluation (OPE): each tracker is initialized with the target position only in the first frame of each video sequence and is run once per sequence. The experiments include an overall performance comparison with other mainstream algorithms on a standard data set and performance comparisons under different target attributes. The human detection performance of the proposed algorithm is analyzed quantitatively to evaluate its robustness. Figure 3 shows the translation error of human body detection over the 800-frame video sequence.
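The OPE precision and success metrics can be computed from per-frame boxes as follows. Boxes are assumed to be `(x, y, w, h)`; the 20-pixel precision threshold and the area under the success curve follow a widely used benchmark convention and are assumptions here, since the paper does not state its thresholds.

```python
import math


def center_error(a, b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx, by = b[0] + b[2] / 2, b[1] + b[3] / 2
    return math.hypot(ax - bx, ay - by)


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision(pred, gt, threshold=20.0):
    """Fraction of frames whose center location error is within threshold pixels."""
    return sum(center_error(p, g) <= threshold for p, g in zip(pred, gt)) / len(gt)


def success_curve(pred, gt, steps=21):
    """Fraction of frames whose overlap exceeds each threshold in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    return [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]


def auc(curve):
    """Area under the success curve, approximated by the mean of its samples."""
    return sum(curve) / len(curve)


gt = [(0, 0, 10, 10), (0, 0, 10, 10)]
pred = [(0, 0, 10, 10), (5, 0, 10, 10)]                       # second frame is off by 5 px
print(precision(pred, gt), precision(pred, gt, threshold=4))  # 1.0 0.5
print(success_curve(pred, gt, steps=3))                       # [1.0, 0.5, 0.0]
```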

Figure 3 shows that the proposed algorithm detects the human body well, with a low average error under translation. An edge is the boundary between two regions with different attributes in an image; it is where the gray level changes sharply and where image information is most concentrated. Edges describe the general outline of a target and directly convey information such as its shape, orientation, and step-like intensity changes, making them an important source of features for image recognition and analysis.
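Because edges are where the gray level changes sharply, a standard way to locate them is the Sobel gradient magnitude. The sketch below is a plain illustration of that operator, not the paper's edge contour model; border pixels are simply set to 0.

```python
import math


def sobel_magnitude(img):
    """Gradient magnitude from 3x3 Sobel kernels; border pixels are left at 0."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gray-level change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gray-level change
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            window = [[img[r + i - 1][c + j - 1] for j in range(3)] for i in range(3)]
            gx = sum(kx[i][j] * window[i][j] for i in range(3) for j in range(3))
            gy = sum(ky[i][j] * window[i][j] for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


step_edge = [[0, 0, 10, 10] for _ in range(3)]   # vertical edge between columns 1 and 2
print(sobel_magnitude(step_edge)[1])             # [0.0, 40.0, 40.0, 0.0]
```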

When a target is tracked in video, the model's initial samples can be obtained only from the first frame, yet the target's state changes in many ways during tracking, so the tracker model must be updated in real time. If update samples are taken near an inaccurate target position from the previous frame, or while the target is occluded, a large amount of background is used as positive samples, so the model's discriminative ability gradually declines and it drifts toward the background. The accumulation of such errors eventually causes tracking drift or failure. To compare tracking performance quantitatively, the proposed algorithm, the AdaBoost algorithm, and the adaptive EKF algorithm are run on the same video sequence. Figure 4 shows the human body tracking results of the three algorithms.
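A common guard against this kind of drift is to update the model by linear interpolation only when the current result looks reliable. In this sketch the learning rate `eta`, the confidence threshold, and how `confidence` is obtained are assumptions; the function name `update_model` is hypothetical.

```python
def update_model(model, sample, confidence, eta=0.02, min_confidence=0.5):
    """Linear-interpolation model update that is skipped for unreliable frames.

    Returns the (possibly unchanged) model and whether an update happened.
    """
    if confidence < min_confidence:
        return model, False
    return [(1 - eta) * m + eta * s for m, s in zip(model, sample)], True


target, background = [1.0], [0.0]
plain = gated = target
for _ in range(50):  # 50 occluded frames: the sampled patch is background
    plain, _ = update_model(plain, background, confidence=1.0)   # always trusts the result
    gated, _ = update_model(gated, background, confidence=0.2)   # gate rejects the update
print(round(plain[0], 2), gated[0])  # 0.36 1.0
```

Without the gate, 50 occluded frames pull the model most of the way toward the background (0.98^50 is about 0.36), whereas the gated model keeps its original appearance.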

Figure 4 shows that the center-point pixel errors of the three algorithms differ little in the early frames. As tracking proceeds, however, the proposed algorithm gains a clear advantage in center-point pixel error, while the AdaBoost and adaptive EKF algorithms perform similarly to each other and have clearly higher errors than the proposed combined algorithm. This indicates that, under the same conditions, the proposed algorithm is more stable and robust. In each frame, the representation ability of every effective feature is assessed, and the feature weights are adjusted dynamically according to the result: features with strong representation ability receive larger weights, and weaker features receive smaller ones.
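The dynamic weighting can be sketched by normalizing per-feature reliability scores into weights and taking a weighted sum of the feature response maps. How the reliability scores are computed is left open here; the linear normalization, the equal-weight fallback, and the names `fusion_weights` and `fuse_responses` are assumptions.

```python
def fusion_weights(reliabilities):
    """Normalize non-negative per-feature reliability scores into weights summing to 1."""
    total = sum(reliabilities)
    if total <= 0:                              # no information: fall back to equal weights
        return [1.0 / len(reliabilities)] * len(reliabilities)
    return [r / total for r in reliabilities]


def fuse_responses(responses, reliabilities):
    """Weighted sum of equally sized response maps, each flattened to a list."""
    weights = fusion_weights(reliabilities)
    return [sum(w * resp[k] for w, resp in zip(weights, responses))
            for k in range(len(responses[0]))]


resp_a = [0.0, 1.0, 0.0]   # feature A peaks at position 1
resp_b = [1.0, 0.0, 0.0]   # feature B peaks at position 0
print(fuse_responses([resp_a, resp_b], [3.0, 1.0]))  # [0.25, 0.75, 0.0]
print(fuse_responses([resp_a, resp_b], [1.0, 1.0]))  # [0.5, 0.5, 0.0]
```

With reliabilities 3 and 1 the fused peak follows the more reliable feature, whereas fixed equal weights leave the two peaks tied.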

Corner calibration is applied to the video images of dancers in ethnic areas, the images are enhanced according to the initial contour distribution, and a visual perception model of the dancer tracking images is established to track the dancers. The response map reflects the confidence of the tracking result. We analyze the characteristics of each response map to judge the reliability of the tracking result, and we choose the fusion weights dynamically according to the reliability of each tracker's result. It is difficult for one algorithm to adapt to backgrounds across a wide variety of complex situations, so experiments commonly impose constraints to improve an algorithm for a given situation. Once these constraints are absent in real scenes, however, traditional tracking algorithms suffer from drift, false detection, and failure, which seriously affect subsequent tracking tasks. To handle complex backgrounds, a complex-background learning model based on multiscale discrimination is established to help the tracking algorithm adapt quickly to the tracking environment.
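The paper does not specify which characteristics of the response map are analyzed. One widely used choice in the correlation-filter literature is the average peak-to-correlation energy (APCE), which is high for a single sharp peak and low for flat or multimodal maps; the sketch below computes it and is offered as an assumption, not as the paper's criterion.

```python
def apce(response):
    """Average peak-to-correlation energy of a 2-D response map.

    APCE = (F_max - F_min)^2 / mean((F - F_min)^2): large for one sharp peak,
    small for flat or multi-peaked maps that signal an unreliable result.
    """
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    return (f_max - f_min) ** 2 / energy if energy > 0 else 0.0


sharp = [[1.0 if (r, c) == (2, 2) else 0.0 for c in range(5)] for r in range(5)]
multi = [[1.0 if r == c else 0.0 for c in range(5)] for r in range(5)]  # five equal peaks
print(round(apce(sharp), 6), round(apce(multi), 6))  # 25.0 5.0
```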

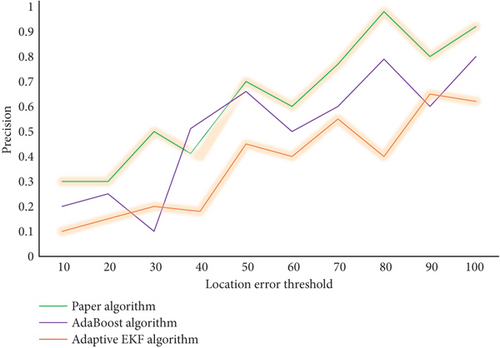

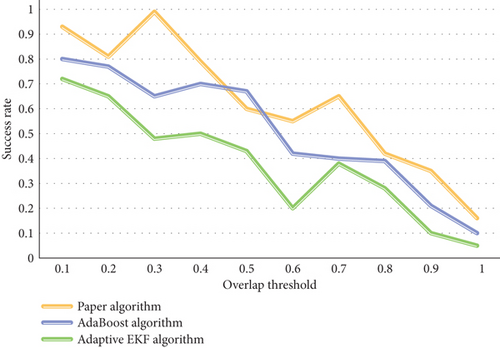

To compare the overall performance of the algorithms, each algorithm is run in the experiments, and its success rate and precision are evaluated with success plots and precision plots. Figure 5 shows the precision plot, and Figure 6 shows the success plot.

Figures 5 and 6 show that the algorithm using multifeature fusion performs clearly better than the other three algorithms, which track with a single feature only; the proposed algorithm outperforms all three in both precision and success rate. The algorithm also meets real-time processing requirements.

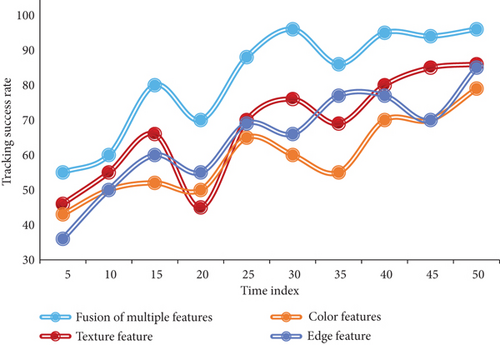

The edge contour features of the video images of dancers in ethnic areas are decomposed, and a viewpoint tracking and switching method is used to extract the dancers' video features and to track and identify their technical movements. Scale is an important property of the target, and the accuracy of scale-change estimation affects tracking performance. To let the tracker adapt to changes in the target, target samples are selected in real time to update the model during tracking. During sample selection, the image inside the rectangular box at the target's scale is taken as the target area. If the sampling scale is larger than the target's actual scale, too much background is included as target in the model update, degrading the model's ability to distinguish target from background. If the sampling scale is smaller than the actual scale, only part of the target is sampled, and the sample information is incomplete. The target inevitably changes in various ways as it moves, and its salient features change accordingly, so the initial feature template cannot meet the demands of accurate, real-time tracking. The size of the tracking window and the target's feature model are therefore adjusted synchronously to follow the target's motion state. When the external environment changes, for example when a moving target is occluded or the viewing angle changes, video-based tracking information is lost and accuracy suffers; combining multiple features improves the tracking success rate. Dance videos were used in experiments to test the effectiveness of single features in complex scenes. Figure 7 shows the tracking success rate on the video sequences.
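Scale estimation can be sketched as evaluating the box at a small geometric pool of scales and keeping the best-scoring one, so that the sampled box neither absorbs extra background nor cuts off part of the target. The scale step, the number of scales, and `score_fn` (a hypothetical callable, for example the peak response of the patch sampled at that size) are assumptions.

```python
def scale_factors(n_scales=5, step=1.02):
    """Geometric pool of scale factors centred on 1.0 (step**-2 ... step**2 for 5 scales)."""
    half = n_scales // 2
    return [step ** (i - half) for i in range(n_scales)]


def estimate_scale(score_fn, size, n_scales=5, step=1.02):
    """Return the candidate (w, h) whose box scores highest under score_fn.

    score_fn is hypothetical: in a real tracker it might return the peak
    filter response of the patch sampled at that box size.
    """
    w, h = size
    candidates = [(w * s, h * s) for s in scale_factors(n_scales, step)]
    return max(candidates, key=score_fn)


# Toy score: pretend the target's true width is 52 px, so closer widths score higher.
best = estimate_scale(lambda wh: -abs(wh[0] - 52.0), size=(50.0, 100.0))
print(tuple(round(v, 2) for v in best))   # (52.02, 104.04)
```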

Figure 7 shows that the target tracking algorithm based on multifeature fusion achieves the best overall recognition rate, and its recognition rate is clearly higher than that of each of the three single-feature tracking methods. By combining the advantages of each feature and increasing the weights of features with good stability and high recognition rates, it improves the robustness and accuracy of tracking far beyond what any single feature achieves.

Using the maximum response value as a measure of the reliability of the tracking result presupposes that the tracking model can effectively distinguish the target from the background. Once the target's appearance changes substantially and the model fails to adapt in time, two failure cases can arise: the maximum response may be small even though its position is correct, or, when the target model has lost its discriminative ability, the maximum response may be large yet point to the wrong target position. Moreover, because each pixel is considered independently, per-pixel decisions can also produce erroneous results. Recent video segmentation methods have achieved good results by enforcing spatial consistency between adjacent pixels. A Markov random field model is therefore used to perform temporal and spatial reasoning over the image and obtain a more accurate foreground and background. An energy function is defined that depends on the image data and its labels; minimizing this energy enforces temporal and spatial consistency of the foreground/background labeling across the image sequence and removes isolated, inconsistent labels.
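
To make the energy minimization concrete, the following is a minimal sketch that assumes a per-pixel foreground probability map is already available (for example from the tracker's appearance model). It uses a binary Potts-model MRF with a 4-neighbour spatial term and an optional temporal term that ties each pixel to the previous frame's mask, and minimizes the energy with iterated conditional modes (ICM). The paper does not state its energy terms or optimizer; the Potts form, the weights `lam` and `mu`, the function name `mrf_segment`, and the choice of ICM (a simple local method, used here in place of stronger optimizers such as graph cuts) are assumptions.

```python
import numpy as np

def mrf_segment(prob_fg, prev_mask=None, lam=1.0, mu=0.5, iters=10):
    """Binary foreground/background labelling with an assumed Potts-model MRF,
    minimised by ICM on a checkerboard schedule:

      E(L) = sum_p D_p(L_p) + lam * sum_{p~q} [L_p != L_q]   (spatial, 4-neighbour)
                            + mu  * sum_p [L_p != prev_p]    (temporal, optional)

    with D_p(1) = -log P(fg | I_p) and D_p(0) = -log P(bg | I_p).
    """
    p = np.clip(prob_fg, 1e-6, 1 - 1e-6)
    unary = np.stack([-np.log(1 - p), -np.log(p)])   # (2, H, W): cost of label 0 / 1
    labels = (p > 0.5).astype(int)                   # independent per-pixel decision
    # number of in-image 4-neighbours of each pixel (2 at corners, 3 on edges, else 4)
    ones = np.pad(np.ones_like(labels), 1)
    n_nb = ones[:-2, 1:-1] + ones[2:, 1:-1] + ones[1:-1, :-2] + ones[1:-1, 2:]
    # pixels of the same checkerboard colour are never 4-neighbours, so each
    # half-sweep is a valid simultaneous ICM update that cannot raise the energy
    checker = np.indices(labels.shape).sum(axis=0) % 2
    for _ in range(iters):
        changed = False
        for colour in (0, 1):
            padded = np.pad(labels, 1)               # zero padding outside the image
            fg_nb = padded[:-2, 1:-1] + padded[2:, 1:-1] + padded[1:-1, :-2] + padded[1:-1, 2:]
            cost = unary.copy()
            cost[0] += lam * fg_nb                   # label 0 disagrees with fg neighbours
            cost[1] += lam * (n_nb - fg_nb)          # label 1 disagrees with bg neighbours
            if prev_mask is not None:
                cost[0] += mu * (prev_mask == 1)     # temporal disagreement penalties
                cost[1] += mu * (prev_mask == 0)
            best = np.argmin(cost, axis=0)
            update = (checker == colour) & (best != labels)
            if update.any():
                labels[update] = best[update]
                changed = True
        if not changed:                              # local minimum reached
            break
    return labels
```

ICM only reaches a local minimum, but even so it removes the isolated false-positive pixels and small holes that independent per-pixel thresholding leaves behind, which is the kind of singular labelling the spatial term is meant to suppress.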

The simulation experiments in this section show that the method can effectively track and identify dancers in ethnic areas and improve imaging quality. Compared with other methods, the proposed method has a lower average error in human motion detection, higher robustness, and a smaller pixel error at the center point of human motion, which makes it suitable for long-term tracking with better stability.

5. Conclusions

Artificial intelligence and computer vision have grown in popularity in recent years and have become increasingly important in everyday life. Target tracking technology is indispensable in modern science and technology and has a wide range of applications. In general, traditional target tracking algorithms meet the tracking requirements only in specific scenes, whereas practical applications often demand better adaptability to different scenarios. Factors such as illumination change, deformation, occlusion, and background clutter significantly affect the performance of target tracking algorithms, so overcoming their impact on tracking performance is a research hotspot in this field. This paper investigates tracking technology for ethnic dancers, analyzes the characteristics of their movements, and uses feature extraction to support expert guidance and assessment, in order to make training more targeted and to improve the correction of movements toward the standard. The proposed tracking algorithm makes full use of the relationship between target and background features, assigns greater weight to features with strong representational ability, handles changes and disturbances in the external environment effectively, and tracks local targets continuously. Human target detection is performed with a multifeature fusion detection method, which improves the robustness of human detection. Compared with other mainstream tracking algorithms, the proposed algorithm improves tracking performance in all evaluated aspects and still tracks well in the presence of cluttered backgrounds, occlusion, and the target moving out of view, demonstrating good adaptability. It can also meet real-time processing requirements.
This method can effectively improve the pixel-level tracking and recognition of dancers in ethnic areas and thus enable video-based guidance for dance in ethnic areas. However, owing to limitations in my research capacity and time, the proposed algorithm still has shortcomings that require further research and improvement.

Conflicts of Interest

The author declares no conflicts of interest.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.