Analyzing the Application of Multimedia Technology Assisted English Grammar Teaching in Colleges

Abstract

Manual review of English compositions makes grammar error checking inefficient, places a heavy burden on teachers, and lowers the quality of English teaching. To address this, a verb grammar error checking algorithm that combines a multilayer rule model with a deep learning model is proposed, building on current multimedia technology. First, the basic principles and network structure of the long short-term memory (LSTM) network are analyzed; then, a multilayer rule model is constructed; after that, an attention-based LSTM verb grammar checker is designed, in which a word embedding model encodes the text and maps it to the state space to retain the textual information. Finally, the proposed algorithm is validated experimentally on a corpus dataset. The experimental results show that the precision, recall, and F1 values of the proposed algorithm in verb error detection were 45.51%, 28.77%, and 35.5%, respectively, which were higher than those of the traditional automatic review algorithm, indicating that the proposed algorithm can improve the accuracy and efficiency of grammar error detection.

1. Introduction

In recent years, with the rapid development of multimedia technology, English learning at universities has become increasingly automated and intelligent. In particular, English composition marking is shifting from manual to automatic, which improves marking efficiency and reduces teachers' workload. However, traditional English composition error detection systems have low grammar detection accuracy and a high false detection rate for words such as verbs, prepositions, and articles, and so cannot meet the current requirements of grammar-assisted teaching in college English [1–3]. Scholars have proposed many approaches to this problem. Krzysztof Pajak et al. proposed a seq2seq error correction method based on denoising autoencoding and obtained an F1 score of 56.58% [4]. Zhaoquan Qiu proposed a two-stage Chinese grammar error correction algorithm that introduces deep learning and provides a reference for Chinese grammar error correction [5]. Ailani Sagar summarized the current mainstream grammar error correction algorithms, providing a reference for a comprehensive understanding of the field [6]. Chanjun Park et al. put forward a neural network grammar error correction method together with evaluation indexes for correction and overcorrection [7]. Bo Wang et al. proposed tagging statistical machine translation results to improve the accuracy of error correction [8]. Lichtage Jared et al. improved grammar error correction from the perspective of training data, offering a way to improve training and correction accuracy [9]. Crivellari Alessandro et al. proposed a direction-finding algorithm with error detection and fault tolerance for longer English statements, and the LSTM network model with an attention mechanism is effective at learning longer statements in both directions while preserving the features of long and short statement sequences [10–13]. Building on this work, the LSTM network from deep learning is applied here to English grammar error detection to improve its accuracy and efficiency and to assist English teachers in instructing students.

2. Basic Methods

2.1. LSTM Fundamentals

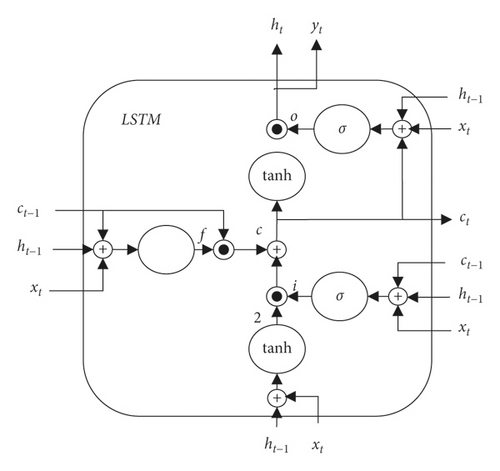

Long short-term memory (LSTM) is a recurrent neural network (RNN) with a memory function for processing time sequences. Its main role is to overcome the fading of long-range dependencies in sequence modeling, which mitigates the vanishing and exploding gradient problems during model training. A plain RNN has only the hidden state $h_t$ at moment $t$; LSTM adds a cell state $c_t$ that stores information. The sigmoid function of the input gate selects the values to be updated, and a candidate vector obtained from the tanh layer is then added to $c_t$. As a result, LSTM can effectively model nonlinear time series data that require memory. By controlling the input gate, output gate, and forget gate, long data sequences can be processed better [14–16].

At time $t$, the input to the LSTM consists mainly of the input value $x_t$ of the network at time $t$, the output value $h_{t-1}$ at time $t-1$, and the cell state $c_{t-1}$ at time $t-1$. The resulting LSTM outputs are the output value $h_t$ and the cell state $c_t$ at time $t$. The LSTM neuron structure is shown in Figure 1.
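For reference, a conventional formulation of the LSTM update, written with the symbols defined in the following paragraph, is sketched here. The weight naming ($W_f$, $W_i$, $W_c$, $W_o$ acting on the concatenation $[h_{t-1}, x_t]$) and the candidate state $\tilde{c}_t$ are assumptions taken from the standard textbook form, not necessarily the authors' exact notation or equation numbering.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \circ c_{t-1} + i_t \circ \tilde{c}_t \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \circ \tanh(c_t)
\end{aligned}
```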

In the above equations, $i_t$, $f_t$, and $o_t$ denote the states of the input, forget, and output gates at moment $t$, respectively; $\sigma$ and $\tanh$ are the sigmoid and hyperbolic tangent activation functions, respectively; $W$ and $b$ denote the corresponding weight matrices and bias terms; $c_t$ denotes the state of the memory cell at moment $t$; and $h_t$ denotes the full output of the LSTM unit at moment $t$.
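The gate definitions above can be made concrete with a minimal sketch of one LSTM time step in plain Python. It assumes the standard formulation in which each gate applies its own weight matrix and bias to the concatenation of $h_{t-1}$ and $x_t$; the function names and the `params` dictionary layout are illustrative, not the authors' implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W and bias list b."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; params maps gate name -> (W, b) acting on [h_prev, x_t]."""
    z = h_prev + x_t  # list concatenation [h_{t-1}, x_t]
    f_t = [sigmoid(a) for a in affine(*params["f"], z)]      # forget gate
    i_t = [sigmoid(a) for a in affine(*params["i"], z)]      # input gate
    o_t = [sigmoid(a) for a in affine(*params["o"], z)]      # output gate
    c_cand = [math.tanh(a) for a in affine(*params["c"], z)]  # candidate state
    # New cell state: keep part of the old state, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_cand)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t

# Toy usage with all-zero weights (hidden size 2, input size 3).
hidden, inputs = 2, 3
zero = ([[0.0] * (hidden + inputs) for _ in range(hidden)], [0.0] * hidden)
params = {"f": zero, "i": zero, "o": zero, "c": zero}
h, c = lstm_step([1.0, 2.0, 3.0], [0.0, 0.0], [1.0, 1.0], params)
```

With zero weights every gate evaluates to 0.5 and the candidate to 0, so the cell state is simply halved, which makes the role of the forget gate easy to see.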

2.2. Back Propagation Process of LSTM

LSTM model training is mainly implemented with the backpropagation through time (BPTT) algorithm, in three stages: all output values are computed in the forward pass according to equations (3) to (9); the error term δ is propagated backward in two directions, through time and through the layers; and the weight gradients are derived from δ, with a suitable gradient optimization algorithm selected to update the weights. In the derivation, diag denotes the diagonal matrix whose diagonal elements are the elements of a vector, and ∘ denotes element-wise multiplication.

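When the error is passed to the layer below, $f^{l-1}$ is the activation function of layer $l-1$, whose output forms the input $x_t$ of the current layer. Since the weighted inputs of the forget gate, input gate, candidate state, and output gate are all functions of $x_t$, and $x_t$ is in turn a function of the weighted input of layer $l-1$, the error can be transferred to layer $l-1$ by the total derivative formula.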

3. English Grammar Checker Design

3.1. Syntax Checking Based on Multilayer Rule Model

3.1.1. Overall Module Design

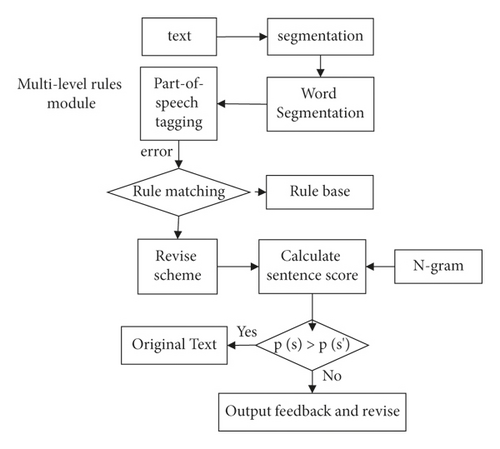

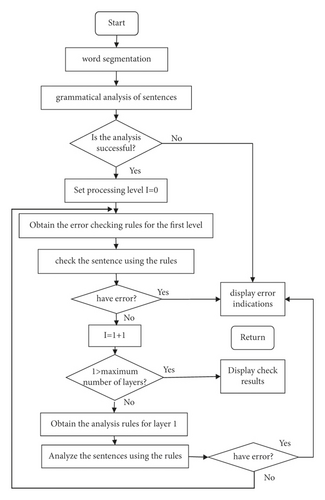

In English grammar error checking, the grammar rules are first collected and an error checking model based on these rules is built; the input text is then matched against the rules in the database to locate the errors, which are corrected according to the corpus information. The module design, which follows Xu et al. [23], is shown in Figure 2.

3.1.2. Part-of-Speech Tagging

English statements are composed of words of various kinds, including nouns, verbs, and articles. Because each word has a different meaning and role, which affects later rule matching, part-of-speech tagging is performed before grammar error checking so that the rule base can better identify erroneous text. The commonly used annotation method at present is a tagging convention that assigns a tag to each part of speech. Given the characteristics of the English corpus, the Stanford parser is used for annotation.

3.1.3. Rule Base Design

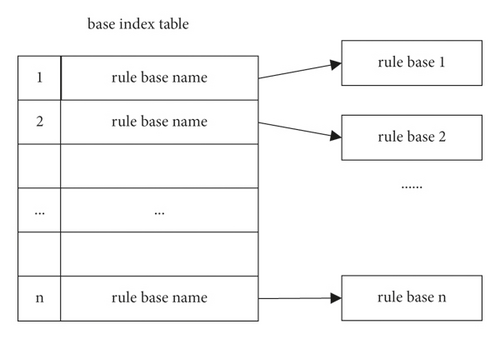

To improve the accuracy of grammar error detection, a rule base is designed, following Xu et al., to match the input statements; its structure is shown in Figure 3 [24]. The rules are grouped and stored according to the grammatical feature they cover and arranged by file name, so that multiple rules can be located in subsequent queries.

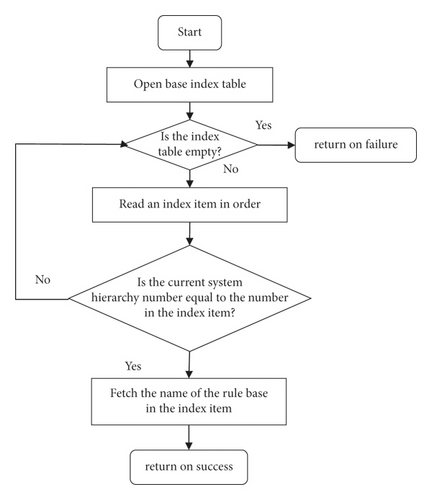

The rules in the rule base are retrieved by a corresponding algorithm that works from top to bottom; the process, which follows Xu et al., is as follows (Figures 4–6).

First, the index table in the rule base is opened and checked. If it is empty, it is reopened. Otherwise, an index item is selected in order and assigned a number by the system, and that number is checked against the index item. If they match, the name of the corresponding rule base is obtained and the lookup ends; otherwise, the procedure returns to the second step and selects the next index item.
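The lookup just described can be sketched as a small function. This is only an illustration under stated assumptions: the index table is modeled as a list of `(number, rule_base_name)` pairs, and the file names and the function name `find_rule_base` are hypothetical rather than taken from the original system.

```python
def find_rule_base(index_table, wanted_number):
    """Scan the index table in order and return the matching rule-base name.

    Returns None when the table is empty (the caller would reopen the index
    and retry) or when no index item carries the wanted number.
    """
    if not index_table:
        return None
    for number, rule_base_name in index_table:
        if number == wanted_number:
            return rule_base_name  # match found: the lookup ends here
    return None

# Hypothetical index table; the file names are placeholders.
index_table = [
    (1, "verb_tense.rules"),
    (2, "subject_verb_agreement.rules"),
    (3, "article.rules"),
]
name = find_rule_base(index_table, 2)
```

Keeping the index separate from the rule files means a query only opens the one rule base it needs.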

3.1.4. Multilayer Rule Syntax Error Correction

- (1) After the statements are input, they are pruned as appropriate, and the parts carrying important information are kept.

- (2) The algorithm above is used to check for errors. If an error is found, the procedure goes to the next step; if not, the current check ends and the steps above are repeated until the end of the rule base is reached.

- (3) If the system finds an error, it goes back to step (2) to locate the error position.
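The layer-by-layer check in the steps above can be sketched on part-of-speech-tagged input. The two toy rules, the Penn Treebank style tags, and all names here are assumptions chosen for illustration; the tags are written by hand in place of a real tagger's output, and the actual rule base is not reproduced.

```python
THIRD_PERSON = {"he", "she", "it"}

# Each layer is a list of (label, predicate) pairs; a predicate inspects two
# adjacent (word, tag) tokens and returns True when the rule is violated.
RULE_LAYERS = [
    # Layer 1: third-person singular pronoun followed by a non-3rd-person verb.
    [("SVA", lambda a, b: a[0].lower() in THIRD_PERSON and b[1] == "VBP")],
    # Layer 2: modal verb followed by anything other than a base-form verb.
    [("Vform", lambda a, b: a[1] == "MD" and b[1] in {"VBD", "VBG", "VBN", "VBZ"})],
]

def check_tagged_sentence(tokens, layers=RULE_LAYERS):
    """Return (token_index, label) for each violation, scanning layer by layer."""
    errors = []
    for layer in layers:
        for label, violates in layer:
            for i in range(len(tokens) - 1):
                if violates(tokens[i], tokens[i + 1]):
                    errors.append((i + 1, label))  # index of the offending word
    return errors

tagged = [("She", "PRP"), ("go", "VBP"), ("to", "TO"), ("school", "NN"),
          ("and", "CC"), ("can", "MD"), ("swimming", "VBG")]
errors = check_tagged_sentence(tagged)
```

Matching on tags rather than on raw strings is what makes the earlier part-of-speech tagging step necessary: the same rule then covers every verb, not a fixed word list.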

3.2. LSTM Syntax Checker Design Based on Attention Model

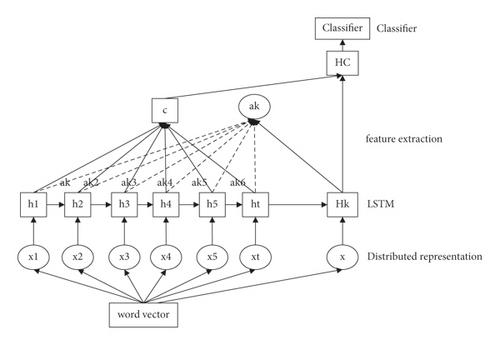

To improve the accuracy of English syntax error detection, the LSTM network model is applied as follows: the utterance is encoded, features are extracted by the LSTM, and the features are then classified using the attention mechanism. The structure of the syntax error checker is shown in Figure 6 [26].

- (1) Word vector representation: the English statements are represented using the word embedding model. If the input utterance is $X = \{x_1, x_2, x_3, \cdots, x_T\}$, where each $x_i$ is a $K$-dimensional vector, the vectors are summed and averaged, and the output is $X'$.

- (2) Feature extraction: the statement features are extracted and encoded using the LSTM model. $X$ and $X'$ are input into the LSTM, whose hidden states $h_1, h_2, h_3, \cdots, h_t$ correspond one-to-one to the inputs $x_1, x_2, x_3, \cdots, x_t$.

- (3) Error classification: an attention model is used to construct a classifier, and English statements are input into this classifier to be classified according to the features extracted above, thus achieving error classification.

The word embedding step gives the statements a compact, uniform representation, which helps grammatical error correction and makes the model suited to shorter English texts.
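Steps (1) and (3) above can be illustrated with a short sketch: averaging word vectors, then pooling hidden states with attention weights. It assumes simple dot-product attention against a query vector; the query, the function names, and the toy numbers are illustrative assumptions, since the exact attention form is not specified here.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length word vectors (step 1: X -> X')."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden_states, query):
    """Weight each hidden state by softmax(h_t . query) and sum them."""
    scores = [sum(h * q for h, q in zip(h_t, query)) for h_t in hidden_states]
    weights = softmax(scores)
    context = [sum(w * h_t[k] for w, h_t in zip(weights, hidden_states))
               for k in range(len(query))]
    return weights, context

x_mean = mean_vector([[1.0, 2.0], [3.0, 4.0]])
weights, context = attention_pool([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0])
```

The pooled `context` vector is what a final classifier layer would consume; with an all-zero query the weights are uniform, so the result reduces to a plain average of the hidden states.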

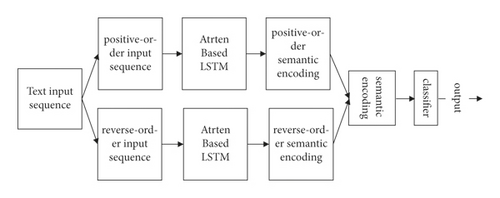

3.3. Attention-Based LSTM Model Combining Forward and Reverse Order

The above error detection model is designed mainly for short English statements. When longer statements with more complex semantics are input, it cannot take contextual connections into account, and its error detection is poor.

Therefore, forward-order and reverse-order sequences are added to the above model so that information from both directions is combined, achieving multilayer feature extraction and encoding [27]. The specific structure is shown in Figure 7.

In the above model structure, $x_1, x_2, x_3, \cdots, x_t$ are the input sequence; $h_1, h_2, h_3, \cdots, h_t$ are the hidden nodes of the forward layer; a second set of hidden nodes belongs to the backward layer; and $Y_1, Y_2, Y_3, \cdots, Y_t$ are the final output sequence.

- (1) Data are input into the model in two directions: $x_1, x_2, x_3, \cdots, x_t$ and $X'$ are taken as the reverse-order sequences, and the rest as forward-order sequences. Both are represented with the word embedding method and then input into the error detection model.

- (2) Feature extraction is performed using the LSTM in the error detection model, and the association information of the two sequences is encoded.

- (3) After these operations, the attention model is used for classification, which yields the classification of erroneous statements.

The error detection model designed in this study takes both the length and the direction of the input into account, so it retains more text information for feature extraction and classification and can detect errors in both long and short statements.
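The idea of combining both reading directions can be sketched independently of the cell type. In this illustration a toy recurrent step stands in for the LSTM cell, and the same step is reused for both directions for brevity; a real bidirectional LSTM would use separate parameters per direction. All names are assumptions.

```python
def run_direction(inputs, step, h0):
    """Apply a recurrent step over the inputs, recording the state at each position."""
    states, h = [], h0
    for x in inputs:
        h = step(x, h)
        states.append(h)
    return states

def bidirectional_encode(inputs, step, h0):
    """Encode each position with its forward state concatenated to its backward state."""
    forward = run_direction(inputs, step, h0)
    # Read the sequence in reverse, then flip the states back into input order.
    backward = run_direction(inputs[::-1], step, h0)[::-1]
    return [f + b for f, b in zip(forward, backward)]  # list concatenation

def toy_step(x, h):
    """Stand-in for an LSTM cell: a decaying running sum of the inputs."""
    return [0.5 * hk + xk for hk, xk in zip(h, x)]

encoded = bidirectional_encode([[1.0], [2.0], [4.0]], toy_step, [0.0])
```

Each position's encoding now reflects both the words before it and the words after it, which is the property the combined model relies on for longer statements.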

3.4. Design and Implementation of Verb Syntax Checking

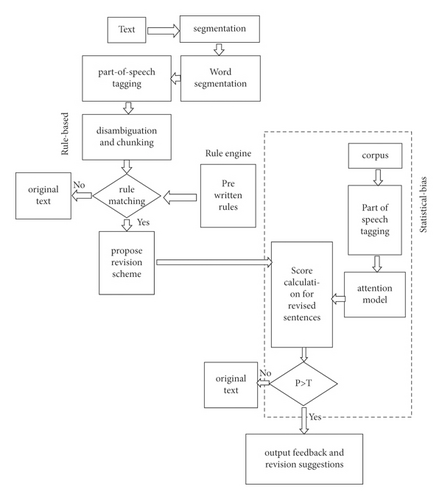

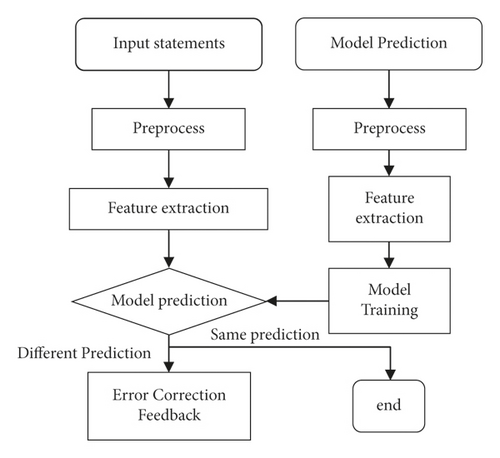

The LSTM model above is combined with the rule set to form an English grammar checker whose overall architecture is shown in Figure 8 [28–30].

As can be seen from Figure 8, the checker consists mainly of a rule model and an LSTM model. First, the rule model performs operations such as information matching on the input statements to locate syntax errors and give a preliminary correction plan. Then, the attention-based LSTM error checking module examines the statements, compares them with the standard statements in the corpus to find errors, marks and locates the errors, and finally gives the corresponding revision strategy.

4. Experimental Results and Analysis

4.1. Evaluation Contents

English statements exhibit a variety of grammatical problems, the more common being grammatical errors, word form errors, and missing words. This study focuses on the typical common error types, which are listed in Table 1.

| Type | Content |
|---|---|
| Vt | Verb tense error |
| Vo | Verb missing error |
| Vform | Verb form error |
| SVA | Subject-verb agreement error |
| ArtOrDet | Article error |
| Prep | Preposition error |
| Nn | Form error of singular and plural nouns |
| Others | Other errors |

To make the evaluation of the experimental results more reliable, NUCLE-release2.2 is selected as the experimental corpus; the types and numbers of errors it contains are shown in Table 2.

| Type | Quantity |
|---|---|
| Vt | 3211 |
| Vo | 411 |
| Vform | 1453 |
| SVA | 1527 |
| ArtOrDet | 6658 |
| Prep | 2404 |
| Nn | 3779 |
| Others | 16865 |

4.2. Evaluation Criteria

In the above formula, R is the proportion of erroneous statements that are marked correctly among all erroneous statements, and B is the total number of errors and sentences in the text that are left unmarked.

The effectiveness of English syntax detection is measured by F1: the higher the F1 value, the better the detection performance of the system.
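For concreteness, the three metrics can be computed as in the sketch below. It assumes the conventional definitions (precision = correctly flagged errors / all flagged errors, recall = correctly flagged errors / all actual errors, F1 = their harmonic mean); the function and argument names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Compute precision, recall, and F1 from raw detection counts."""
    flagged = true_positives + false_positives  # everything the system marked
    actual = true_positives + false_negatives   # everything that was really wrong
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall, f1_score(precision, recall)

p, r, f = precision_recall_f1(true_positives=3, false_positives=1, false_negatives=2)
table7_f1 = round(f1_score(24.42, 56.38), 2)
table9_f1 = round(f1_score(24.54, 57.39), 2)
```

Applied to the precision and recall reported for the maximum entropy model in Table 7 and for the final system in Table 9, this harmonic mean reproduces the listed F1 values of 34.08 and 34.38.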

4.3. Experimental Results and Analysis

4.3.1. Verification of Article Detection

In English grammar, article errors mainly take three forms: article redundancy, absence, and misuse. Their error ratios are shown in Tables 3 and 4.

| Type | Article redundancy (%) | Article absence (%) | Article misuse (%) |
|---|---|---|---|
| Error ratio | 26.8 | 57.5 | 15.7 |

| | Training set | Test set |
|---|---|---|
| Number of sentences | 57151 | 1381 |
| Number of tokens | 1161567 | 29207 |
| Proportion of article errors | 14.9% | 19.9% |

The experimental corpus of article errors was taken from the NUCLE collection of university English compositions; after manual annotation, it was divided into training and test sets of article errors, as shown in Table 4.

The training and test sets are applied to the error detection model separately for training and prediction; the flowchart of the article error detection system is shown in Figure 9.

The experiment compares the three evaluation metrics on the test set before and after preprocessing; the results are shown in Table 5.

As can be seen from Table 5, after preprocessing the dataset the precision, recall, and F1 values rose to 24.57%, 56.74%, and 34.08%, gains of 1.93, 1.84, and 0.95 percentage points, respectively, and model training became noticeably faster.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Before preprocessing | 22.64 | 54.90 | 33.13 |
| After preprocessing | 24.57 | 56.74 | 34.08 |

In English syntax error detection, the error detection model is a maximum entropy model, and the number of training iterations can affect the detection results. The relationship between the number of iterations and the F-measure is shown in Table 6.

| Number of iterations | 1 | 2 | 3 | 4 | 5 | 10 | 100 |
|---|---|---|---|---|---|---|---|
| F1 (%) | 34.08 | 34.08 | 34.11 | 34.12 | 34.18 | 32.24 | 35.48 |

From Table 6, the F-measure changes only slightly as the number of iterations grows: it stays between 34.08% and 34.18% for 1 to 5 iterations, dips to 32.24% at 10, and reaches 35.48% at 100. Because additional iterations also lengthen training, which affects subsequent syntax error detection, the number of iterations is set to 1.

To verify how well the maximum entropy model handles grammatical information, it was compared with a Naive Bayes model on the test set; the experimental results are shown in Table 7.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Naive Bayes model | 16.13 | 41.59 | 23.25 |
| Maximum entropy model | 24.42 | 56.38 | 34.08 |

As can be seen from Table 7, the precision, recall, and F-measure of the maximum entropy model are 24.42%, 56.38%, and 34.08%, respectively; it classifies better than the Naive Bayes model and trains faster.

Since the article corpus dataset is small, part of a Wiki corpus is added to it for comparison. The test results after adding the corpus are shown in Table 8.

From Table 8, adding Wiki data changes the precision, recall, and F-measure only slightly: with the smaller addition, precision and F1 are marginally lower than with NUCLE alone, and with the larger addition all three metrics improve by at most about one percentage point.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| NUCLE | 24.42 | 56.38 | 34.08 |
| NUCLE + Wiki (100,000 entries) | 24.27 | 56.81 | 34.01 |
| NUCLE + Wiki (200,000 entries) | 24.54 | 57.39 | 34.38 |

To further test the article error detection system, its results are compared with those of the CoNLL 2013 shared task system; the comparison is shown in Table 9.

From Table 9, the precision, recall, and F1 values of the article error detection system are 24.54%, 57.39%, and 34.38%. Compared with the CoNLL 2013 system, recall is 9.55 percentage points higher and F1 is 0.98 points higher, while precision is 1.11 points lower. The higher F1 value indicates that the proposed article error detection system achieves better overall error detection.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| End results | 24.54 | 57.39 | 34.38 |
| Shared task | 25.65 | 47.84 | 33.40 |

4.3.2. Preposition Detection Verification

Preposition errors in English statements fall into the same three categories as article errors: redundancy, omission, and misuse. The proportions of each are shown in Table 10.

| Type | Preposition redundancy (%) | Preposition missing (%) | Preposition misuse (%) |
|---|---|---|---|
| Error ratio | 18.12 | 24.03 | 57.91 |

The preposition data were obtained from the NUCLE-release2.2 corpus, which covers 28 error types, with Prep denoting preposition errors. The preposition corpus statistics are shown in Table 11.

| | Training set | Test set |
|---|---|---|
| Number of statements | 57151 | 1381 |
| Number of tokens | 1161567 | 29207 |
| Number of prep errors | 133665 | 3332 |

The preposition corpus was preprocessed and evaluated with the same metrics; the test results before and after preprocessing are shown in Table 12.

As can be seen from Table 12, preprocessing improved every metric on the preposition dataset, which benefits the subsequent experiments.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Before preprocessing | 68.13 | 13.47 | 21.82 |
| After preprocessing | 68.87 | 14.20 | 23.54 |

To test how the error difference factor C affects the F1 value of the preposition error detection system, several C values were tried, as shown in Table 13.

From Table 13, the F1 value is highest, 26.74%, when C is 0.95. C was therefore set to 0.95, and Wiki corpus data of different sizes were added to the original preposition dataset for testing; the results are shown in Table 14.

| C | 0.98 | 0.95 | 0.9 | 0.8 | 0.7 |
|---|---|---|---|---|---|
| F1 (%) | 25.27 | 26.74 | 23.47 | 18.54 | 10.78 |

As can be seen from Table 14, expanding the preposition training set raised the F1 value from 23.54% to 27.68%. To further test the preposition detection model, it was compared with a Naive Bayes model and the shared task system; the results are shown in Table 15.

| | F1 (%) |
|---|---|
| NUCLE | 23.54 |
| NUCLE + Wiki (100,000 entries) | 24.57 |
| NUCLE + Wiki (500,000 entries) | 27.68 |

As can be seen from Table 15, the F1 value of this model is 27.68%, which is 15.81 and 0.17 percentage points higher than the other two models, respectively, indicating that this model is more effective in error detection.

| | F1 (%) |
|---|---|
| NB-priors | 11.87 |
| Maxent | 27.68 |
| Shared task | 27.51 |

4.3.3. Validation of Detection System Effect

At present, the common verb errors in college English compositions fall into four types: verb missing errors, modal errors, tense errors, and subject-verb agreement errors. According to the corpus statistics, the distribution of verb error types is shown in Table 16.

| Error type | Verb missing error (%) | Verb mood error (%) | Verb tense error (%) | Subject-verb agreement error (%) |
|---|---|---|---|---|
| Error percentage | 6 | 19 | 36 | 39 |

The test materials were taken from the CLEC corpus, from which 100 English compositions were randomly selected for the experiment; they contain a total of 1,128 sentences, 1,083 of which are labeled as containing errors. Following the process proposed by Xu et al., this experiment uses the dataset to test the effect of grammar error detection, as shown in Table 17 [23].

From Table 17, the overall precision of the grammar error detection system is 62.14%, the recall is 37.00%, and the F1 value is 46.40%. The overall detection rate is relatively high, and the overall precision is further improved compared with the earlier results for articles, prepositions, and verbs.

| | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|
| Verb error detection | 45.47 | 29.67 | 34.95 |
| Subject-verb agreement error detection | 39.69 | 20.98 | 32.06 |
| Noun singular/plural form error detection | 86.03 | 53.97 | 63.04 |
| Article error detection | 68.14 | 43.05 | 52.57 |
| Preposition error detection | 71.98 | 38.06 | 50.18 |
| Overall error detection | 62.26 | 37.14 | 46.56 |

5. Conclusion

In summary, the English syntax error detection model proposed in this study, which combines multilayer rules with an LSTM model, improves the accuracy of English syntax error detection and can be applied in the teaching of English grammar. The experimental results suggest that the overall error detection of the proposed model is better than that of the traditional error detection model, and that good results can be achieved for articles, prepositions, and verbs. More efficient error detection can in turn reduce the workload of English teachers. The innovation of this study is to exploit the strengths of LSTM in processing sequential data for grammar checking, thereby improving the accuracy of grammar error detection.

Conflicts of Interest

The authors declared that they have no conflicts of interest regarding this work.

Open Research

Data Availability

The experimental data used to support the findings of this study are available from the corresponding author upon request.