Conv-Wake: A Lightweight Framework for Aircraft Wake Recognition

Abstract

The recognition of aircraft wake vortices can provide an early-warning indicator for civil aviation safety. Several wake vortex recognition models based on deep learning and traditional machine learning have been proposed. However, these models are not entirely suitable because they depend on a visualization of the LiDAR data, which causes information loss when reconstructing the behavior patterns of the wake vortex. To tackle this problem, we propose a lightweight deep learning framework to recognize aircraft wake vortices in the wind field of the arrival and departure routes of Shenzhen Baoan Airport. The proposed model has three key features. First, a dilated patch embedding module is used as the input representation of the framework, capturing richer long-range semantic information without increasing the number of parameters. Second, a separable convolution module is combined with a hybrid attention mechanism, increasing the model's attention to the spatial position of the wake vortex core. Third, environmental factors that affect the behavior of the aircraft wake vortex are encoded into the model. Experiments on a dataset acquired with a Doppler LiDAR validate the effectiveness of the proposed model. The results show that the proposed network achieves an accuracy of 0.9963 and a recognition speed of 100 frames per second on the experimental device, with only 0.51 M parameters.

1. Introduction

Near-Earth aircraft wake vortices emerge as a by-product of the lift generated by aircraft on approach and departure. In dense and busy airport airspace, the trailing wake of a leading aircraft can seriously endanger the aircraft behind it [1]. To prevent following aircraft from encountering the wake, the International Civil Aviation Organization (ICAO) developed wake separation standards in the 1970s. As airport passenger traffic continues to grow, airport capacity is largely limited by these conservative wake separation intervals. Although the COVID-19 pandemic has temporarily halted the growing demand for flights, passenger throughput is expected to increase again as countries bring the epidemic under control.

To enable real-time dynamic spacing adjustments [2, 3], LiDAR-based wake recognition is attracting increasing interest in the civil aviation community because of its performance and stability. Unlike traditional manual methods, which depend heavily on the intuition and experience of experts, intelligent technologies can automate the recognition of wake vortices in LiDAR data, and several methods have been proposed to detect wakes in wind fields. Earlier studies [4–6] usually rely on low-level hand-crafted features and recognize wakes by the symmetry of their radar echoes. For the evolving near-Earth wake [7], detection methods that apply machine learning to radial velocity data may perform poorly and are very sensitive to environmental changes. Deep learning has proved successful in solving complicated intelligence tasks by efficiently processing complex input data and learning diverse knowledge. Recently, several aircraft wake vortex recognition methods based on deep learning have been proposed [8–10]. Like [4–6], these methods typically map the wake vortex data obtained from LiDAR into three-channel pseudo-color images to fit popular deep learning network models and then use classifiers to recognize the wake vortex, which is computationally inefficient. In other words, the performance of these existing methods depends on the visualization of the LiDAR data and on the colormap configuration for different velocities, and information lost through improper color mapping during data-to-image conversion can degrade wake vortex recognition.

The main contributions of this paper are as follows:

- (i) A lightweight network backbone is proposed to significantly reduce the computational cost of the overall framework.

- (ii) Building on a patch-input model, we employ dilated convolution instead of standard convolution to capture richer long-range semantics without increasing the number of parameters.

- (iii) To further improve the performance of the proposed network, we introduce a hybrid attention mechanism into the depthwise separable convolution to enhance the feature representation and focus on important information.

- (iv) Because aircraft wake vortices are susceptible to various environmental factors, important real-time environmental state information (wind speed, temperature, humidity, and pressure) is encoded into the network through a fully connected network to provide additional reference information.

- (v) Experiments on the dataset show that the proposed framework achieves excellent results in terms of recognition accuracy and speed.

The rest of this paper is organized as follows. Section 2 reviews related work and, in Section 2.1, presents the source of the experimental data and its visualization. Section 3 describes the proposed wake recognition framework and introduces each of its modules. Section 4 presents the training setup and the ablation experiments on the dataset, and Section 5 compares the proposed model with other methods. Finally, Section 6 concludes the paper.

2. Related Work

The recognition schemes for aircraft wake vortices mainly include machine learning techniques and deep learning methods. When applied to wake vortex recognition, machine learning relies on feature engineering to construct the model's feature vector. For example, support vector machines (SVM) were used to determine the presence of wake vortices in LiDAR scans [5]. Because the evolution of wake vortices is closely associated with atmospheric parameters [11], the study [6] introduced the background wind and used a random forest (RF) to determine the existence of wake vortices in LiDAR scans. However, feature engineering cannot sufficiently characterize the evolution patterns of complex wakes at their various stages.

In contrast, deep neural networks exhibit a strong ability to capture and extract complex structural information from massive, high-dimensional data [12]. For aircraft wake vortex recognition, studies [8, 9] used neural networks to determine the presence of wake vortices and the location of the vortex cores. However, to fit popular neural network architectures, both approaches convert the raw wake vortex data produced by the LiDAR into color images through scaling and color-mapping operations. Moreover, these popular architectures were designed for natural-image benchmarks such as the ImageNet challenge [13] and the COCO challenge [14]; applying them to LiDAR wake vortex data therefore requires scaling and mapping operations that add computational cost and cause information loss.
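To make the information-loss argument concrete, the following minimal NumPy sketch simulates a data-to-image conversion. It assumes, purely for illustration, that radial velocities are clipped to a hypothetical display range of ±10 m/s and quantized to 256 colormap levels; the velocity values are made up and do not come from the measurements.

```python
import numpy as np

V_MIN, V_MAX, LEVELS = -10.0, 10.0, 256  # hypothetical display range (m/s) and colormap size

def to_colormap_index(v):
    """Clip velocities to the display range and quantize them to integer colormap indices."""
    scaled = (np.clip(v, V_MIN, V_MAX) - V_MIN) / (V_MAX - V_MIN)  # map to [0, 1]
    return np.round(scaled * (LEVELS - 1)).astype(int)

def from_colormap_index(idx):
    """Best-case inversion: recover a velocity from an index, assuming the mapping is known exactly."""
    return V_MIN + idx / (LEVELS - 1) * (V_MAX - V_MIN)

# Illustrative radial velocities in m/s; the last one lies outside the display range.
v = np.array([-3.21, 0.05, 0.07, 4.90, 14.5])
idx = to_colormap_index(v)
recovered = from_colormap_index(idx)
error = np.abs(v - recovered)
print(idx)
print(error)
```

Even with a perfectly known mapping, 0.05 m/s and 0.07 m/s fall into the same colormap bin (the quantization step is about 0.078 m/s) and become indistinguishable, while 14.5 m/s is clipped to 10 m/s, an error of 4.5 m/s.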

2.1. Data Source

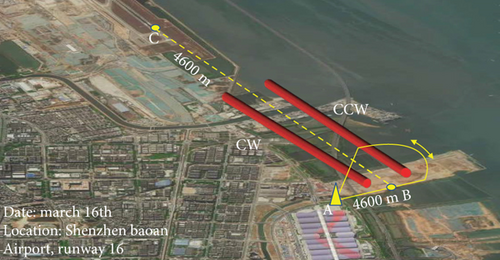

The LiDAR measurements of aircraft wake vortices were performed at Shenzhen Baoan Airport on 16 March 2021. In the satellite map of Figure 1(a), Point A is the location of the scanning LiDAR, and Point C indicates the threshold of Runway 16. The extension of the runway centerline from Point C intersects the detection plane of the LiDAR in RHI mode at Point B. The distance from the LiDAR (Point A) to the intersection (Point B) is 255 m, and the distance between the runway threshold (Point C) and the intersection (Point B) is 4600 m. When an aircraft passes, clockwise (CW) and counter-clockwise (CCW) vortices are generated near its wingtips, and the measured values are stored in the LiDAR memory along with a time stamp and position (distance, azimuth, and elevation).

A photograph of the LiDAR used for aircraft wake vortex detection is shown in Figure 1(b). The LiDAR acquires radial velocities, and the specific configuration for the field measurement is listed in Table 1. The LiDAR was operated at a scan rate of 2°/s over an elevation range of 16° to 55°, with a scan time of approximately 20 seconds. In addition, a wind-profiling LiDAR was deployed to obtain the background wind speed and direction.

| Parameters (unit) | Value |
|---|---|
| Azimuth angle (°) | 247 |
| Scanning rate (°/s) | 1 |
| Elevation range (°) | 16-55 |
| Elevation angle resolution (°) | 0.2 ± 0.03 |
| Detection radial range (m) | 68-608 |
| Longitudinal resolution (m) | 10 |
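The geometry in Figure 1(a) and Table 1 allows a quick check of what the RHI scan covers above Point B. The sketch below assumes flat terrain, a LiDAR at ground level, that the 255 m distance is horizontal, and (for comparison only) a standard 3° approach glide path; none of these assumptions is stated in the paper.

```python
import math

# Values from the text and Table 1.
HORIZONTAL_DIST_AB_M = 255.0   # Point A (LiDAR) to Point B (scan plane meets runway centerline)
THRESHOLD_DIST_CB_M = 4600.0   # Point C (runway threshold) to Point B
ELEV_MIN_DEG, ELEV_MAX_DEG = 16.0, 55.0
RANGE_MIN_M, RANGE_MAX_M = 68.0, 608.0

# Assumption (not from the paper): a 3 degree glide path, flat terrain, LiDAR at ground level.
GLIDE_PATH_DEG = 3.0

def beam_height(elev_deg, horizontal_m=HORIZONTAL_DIST_AB_M):
    """Height of the beam when it passes above Point B."""
    return horizontal_m * math.tan(math.radians(elev_deg))

def slant_range(elev_deg, horizontal_m=HORIZONTAL_DIST_AB_M):
    """Distance along the beam from the LiDAR to the point above Point B."""
    return horizontal_m / math.cos(math.radians(elev_deg))

h_low, h_high = beam_height(ELEV_MIN_DEG), beam_height(ELEV_MAX_DEG)
r_low, r_high = slant_range(ELEV_MIN_DEG), slant_range(ELEV_MAX_DEG)
approach_height = THRESHOLD_DIST_CB_M * math.tan(math.radians(GLIDE_PATH_DEG))

print(f"beam heights above B: {h_low:.0f} to {h_high:.0f} m")
print(f"slant ranges: {r_low:.0f} to {r_high:.0f} m")
print(f"approach height at B on a 3 deg path: {approach_height:.0f} m")
```

Under these assumptions the scan covers heights of roughly 73 to 364 m above Point B at slant ranges of about 265 to 445 m, well inside the 68 to 608 m detection range, and an aircraft on a 3° path would pass Point B at about 241 m.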

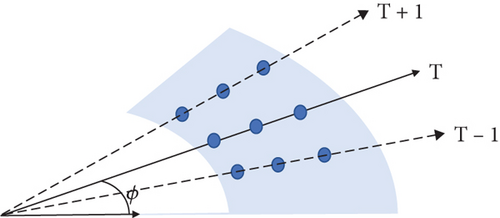

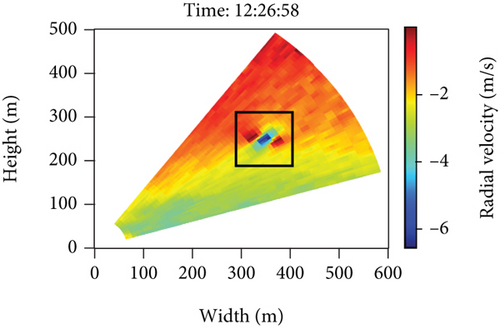

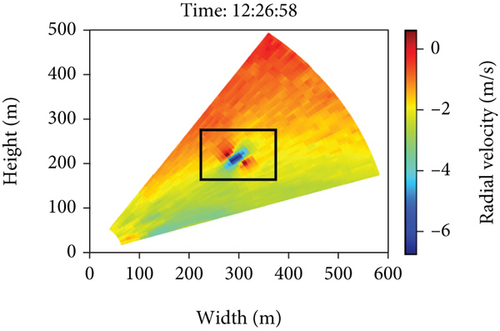

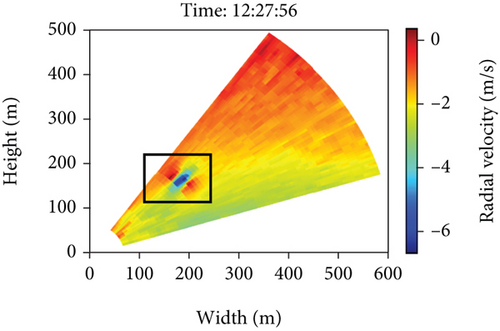

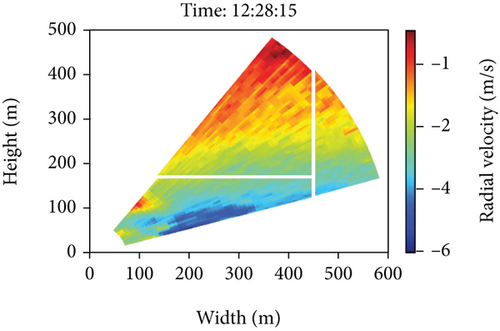

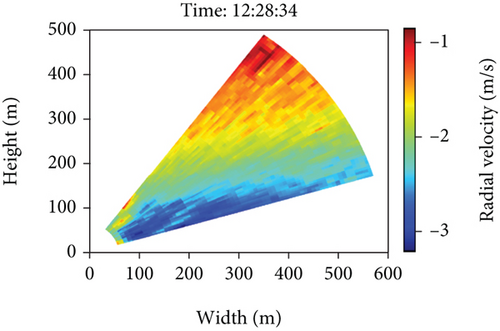

The LiDAR performs periodic scans in range-height indicator (RHI) mode, as shown in Figure 2(a). Each point represents a measurement of aerosol particles at a given range and elevation angle of the LiDAR beam. The radial velocity of the aerosol at that position is obtained from the Doppler shift between the emitted and the backscattered beams [15]. Figure 3 shows the evolution of the aircraft wake vortex as an aircraft flies over the LiDAR scan zone.
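The two relations behind this paragraph, converting a Doppler shift into a radial velocity and placing a range gate in the RHI plane, can be sketched as follows. The 1.55 µm wavelength is an assumption typical of coherent Doppler wind LiDARs; the wavelength of the instrument used here is not given in the paper.

```python
import math

# Assumption: a 1.55 um operating wavelength; the actual instrument wavelength is not stated.
WAVELENGTH_M = 1.55e-6

def radial_velocity(doppler_shift_hz, wavelength_m=WAVELENGTH_M):
    """Radial velocity from the Doppler shift of the backscattered light: v_r = lambda * f_D / 2.
    The factor 2 accounts for the round trip; here a positive shift means motion toward the
    LiDAR (sign conventions differ between instruments)."""
    return wavelength_m * doppler_shift_hz / 2.0

def rhi_to_cartesian(range_m, elevation_deg):
    """Horizontal distance and height of a range gate in the RHI scan plane, LiDAR at the origin."""
    theta = math.radians(elevation_deg)
    return range_m * math.cos(theta), range_m * math.sin(theta)

v_r = radial_velocity(1.0e6)            # a 1 MHz Doppler shift
x, z = rhi_to_cartesian(300.0, 30.0)    # a range gate 300 m away at 30 degrees elevation
```

Under the assumed wavelength, each 1 MHz of Doppler shift corresponds to about 0.78 m/s of radial velocity.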

3. Methods

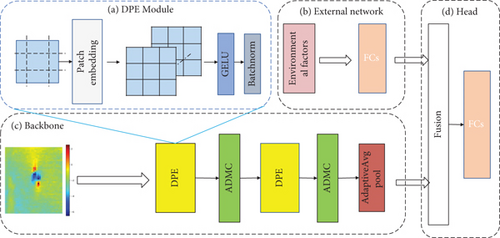

In this section, we present Conv-Wake, an integrated framework for aircraft wake vortex recognition. Figure 4 shows the architecture of the proposed approach, which aims to reduce the number of parameters and shorten the recognition time while achieving high accuracy. The recognition mechanism of Conv-Wake is summarized as follows.

3.1. DPE Module

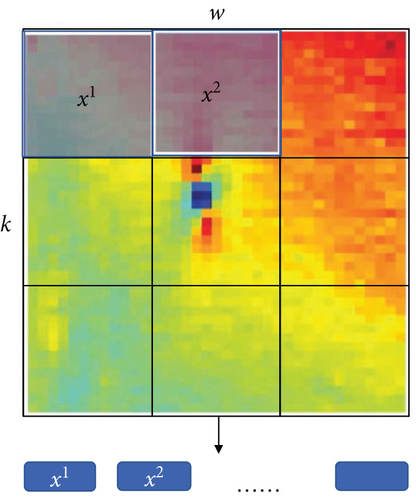

Models built on patch embedding layers have outperformed standard computer vision models on vision datasets, which suggests that the patch representation may be the most critical component for achieving superior performance in such architectures [16, 17]. In this paper, the acquired LiDAR data are fed into the backbone network in the form of patches. To enlarge the receptive field without increasing the amount of computation, we replace the standard convolution kernel of the original patch embedding layer with a dilated convolution. The resulting patch embedding module with dilated convolution, applied to patches $x_i \in \mathbb{R}^{k \times w}$, is called DPE. A comparison between the two versions is shown in Figure 5.

| Data input | Patch size (p) | Output |
|---|---|---|
| h × w × k | p=2 | m × w/2 × k/2 |
| h × w × k | p=3 | m × w/3 × k/3 |
| h × w × k | p=4 | m × w/4 × k/4 |
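The claim that dilation enlarges the receptive field without adding parameters can be checked with a few lines of arithmetic. This sketch assumes, as the output sizes in the table above suggest, that the patch embedding is a single convolution whose kernel size and stride both equal the patch size p, with no padding; the single input channel and the 256 output channels (taken from Table 3) are assumptions for illustration, and the resulting shapes are not meant to reproduce Table 3 exactly. Note that in PyTorch `dilation=1` denotes an ordinary, undilated kernel.

```python
def effective_kernel(kernel, dilation):
    """Spatial extent covered by a dilated kernel: dilation * (kernel - 1) + 1."""
    return dilation * (kernel - 1) + 1

def conv_output_size(n, kernel, stride, padding=0, dilation=1):
    """Output length along one axis (same formula as torch.nn.Conv2d)."""
    return (n + 2 * padding - effective_kernel(kernel, dilation)) // stride + 1

def conv_params(in_ch, out_ch, kernel, bias=True):
    """Number of weights of a kernel x kernel convolution; the dilation rate does not appear."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)

H, W = 43, 37            # input resolution from Table 5
P = 5                    # patch size (kernel size = stride), as in Table 3
IN_CH, OUT_CH = 1, 256   # assumption: one radial-velocity channel in, 256 channels out

for dr in (1, 2):
    out_h = conv_output_size(H, P, P, dilation=dr)
    out_w = conv_output_size(W, P, P, dilation=dr)
    print(f"dilation={dr}: kernel span {effective_kernel(P, dr)}, "
          f"output {OUT_CH}x{out_h}x{out_w}, params {conv_params(IN_CH, OUT_CH, P)}")
```

The kernel span grows from 5 to 9 while the parameter count stays at 6,656 for both dilation rates; only the output grid shrinks slightly because no padding is used in this sketch.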

3.2. AMDC Module

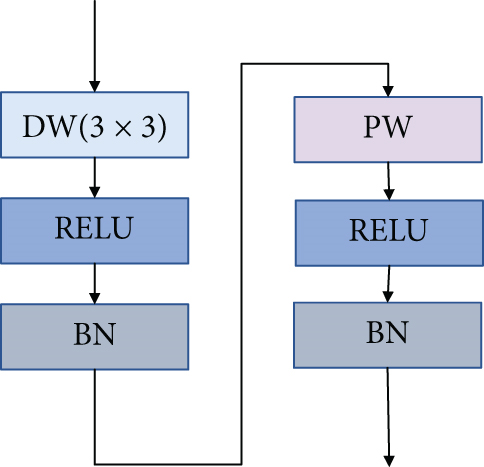

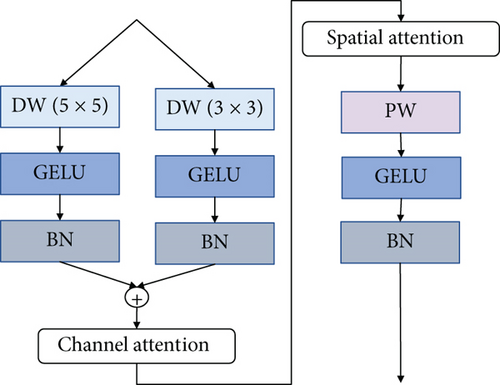

To achieve a lightweight network, a depthwise separable convolution block is adopted. The standard depthwise separable convolution block consists of a depthwise group convolution (DW) and a pointwise convolution (PW) [18], each followed by a ReLU activation layer and a BatchNorm (BN) layer. The DW convolution is a group convolution whose number of groups equals the number of channels h, so each channel is filtered independently for feature extraction, while the PW convolution uses a 1 × 1 kernel to combine the channels of the previous layer's output; together, they make the network much less computationally intensive than standard convolution. However, the reduction in parameters may make the feature extraction capability lower than that of standard convolution. To strengthen the depthwise separable convolution, the perceptual capabilities of different convolution kernels are fused in the group convolution (see Figure 6(b)): one branch uses a conventional 3 × 3 group convolution to extract features, and another branch uses a 5 × 5 convolution to extract features with a larger field of view. Figure 6(a) shows two depthwise separable convolution computation processes.
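The lightweight argument can be quantified by counting weights. This sketch assumes 256 input and output channels (the width in Table 3), ignores bias and BatchNorm parameters, treats the 5 × 5 branch as depthwise like the 3 × 3 branch, and assumes the branch outputs are merged without extra weights (for example, by summation); the exact fusion is an assumption.

```python
C = 256  # channel width taken from Table 3

def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k

def depthwise_separable_params(c_in, c_out, kernels=(3,)):
    """One depthwise k x k filter per channel for each branch, then a 1 x 1 pointwise convolution."""
    depthwise = sum(c_in * k * k for k in kernels)
    pointwise = c_in * c_out
    return depthwise + pointwise

standard = standard_conv_params(C, C, 3)             # comparable to a standard 3x3 block (S-Conv)
ds_single = depthwise_separable_params(C, C, (3,))   # single 3x3 depthwise branch (DS-Conv-1)
ds_multi = depthwise_separable_params(C, C, (3, 5))  # assumed 3x3 + 5x5 depthwise branches
print(standard, ds_single, ds_multi, round(standard / ds_multi, 1))
```

Under these assumptions the multibranch block needs 74,240 weights versus 589,824 for a standard 3 × 3 convolution, roughly 8× fewer, and the extra 5 × 5 branch adds only 6,400 weights over the single-branch version.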

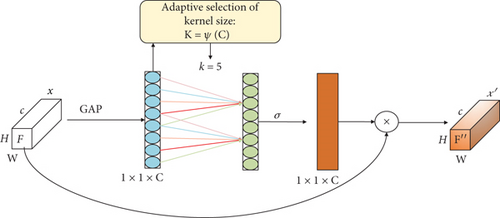

The unstructured contour of the aircraft wake vortex makes it difficult to distinguish the boundary of its echo in the LiDAR data. At the same time, each wake vortex (CW and CCW) usually has a distinct vortex core [19]. Attention mechanisms may therefore help the network recognize these patterns, and in this paper, attention modules are introduced into the depthwise separable convolution units. Two representative attention modules are the channel attention module ECA-Net [20] and the hybrid convolutional block attention module (CBAM) [21]. Because the dilated patch embedding module outputs a relatively large number of channels, the channel attention of CBAM, which relies on a multilayer perceptron, is more complex and computationally more expensive than ECA-Net. Therefore, in this work, we use the ECA-Net module for channel attention and the spatial attention module of CBAM for spatial attention. The relevant attention modules are described as follows.
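As described in the ECA-Net paper, ECA squeezes each channel by global average pooling, mixes neighboring channels with a small 1D convolution whose kernel size k is chosen adaptively from the channel count, and rescales the channels with a sigmoid gate; unlike CBAM's channel branch, it has no fully connected layers, only k weights. The NumPy forward pass below is a sketch: the defaults γ = 2 and b = 1 come from ECA-Net, the kernel weights are random placeholders rather than trained values, and the 256 × 13 × 11 feature map size is taken from Table 3.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive kernel size from ECA-Net: int(|(log2(C) + b) / gamma|), incremented by one if even."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca(x, weights):
    """ECA forward pass for a feature map x of shape (C, H, W).
    weights: 1D kernel of odd length k shared by all channels (learned in practice)."""
    c = x.shape[0]
    k = len(weights)
    y = x.mean(axis=(1, 2))                               # global average pooling -> (C,)
    y_pad = np.pad(y, (k // 2, k // 2))                   # zero padding keeps C outputs
    z = np.array([y_pad[i:i + k] @ weights for i in range(c)])  # local cross-channel interaction
    gate = 1.0 / (1.0 + np.exp(-z))                       # sigmoid -> per-channel weights in (0, 1)
    return x * gate[:, None, None]

rng = np.random.default_rng(0)
x = rng.standard_normal((256, 13, 11))                    # feature map size taken from Table 3
k = eca_kernel_size(x.shape[0])
out = eca(x, 0.1 * rng.standard_normal(k))                # placeholder weights
```

For 256 channels the adaptive rule gives k = 5, so the whole channel attention adds just five weights.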

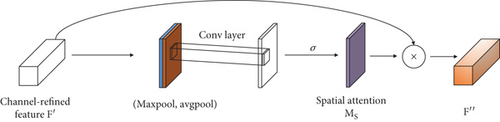

The spatial attention module is shown in Figure 8. First, average pooling and max pooling are applied to the feature map F along the channel dimension, and the two resulting feature descriptors are concatenated. The concatenated map is then convolved by a convolutional layer with a 7 × 7 kernel and passed through a sigmoid function to generate the spatial attention map $M_s(F) = \sigma\left(f^{7 \times 7}\left([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)]\right)\right)$.
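The spatial attention step can be written out directly. The NumPy sketch below is a forward pass only, with a random placeholder 7 × 7 kernel (learned in practice) and a naive zero-padded convolution; it follows the CBAM definition rather than any code released with this paper.

```python
import numpy as np

def conv2d_same(x, kernel):
    """Naive zero-padded 2D cross-correlation: x (C, H, W) with kernel (C, kh, kw) -> (H, W)."""
    _, h, w = x.shape
    _, kh, kw = kernel.shape
    padded = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + kh, j:j + kw] * kernel)
    return out

def spatial_attention(f, kernel):
    """CBAM spatial attention for a feature map f of shape (C, H, W).
    Returns the reweighted features and M_s(F) = sigmoid(conv7x7([AvgPool(F); MaxPool(F)]))."""
    avg = f.mean(axis=0)                   # average over the channel dimension -> (H, W)
    mx = f.max(axis=0)                     # maximum over the channel dimension -> (H, W)
    stacked = np.stack([avg, mx])          # concatenated descriptors -> (2, H, W)
    m_s = 1.0 / (1.0 + np.exp(-conv2d_same(stacked, kernel)))
    return f * m_s[None, :, :], m_s

rng = np.random.default_rng(0)
f = rng.standard_normal((256, 13, 11))          # feature map size taken from Table 3
kernel = 0.1 * rng.standard_normal((2, 7, 7))   # placeholder for the learned 7 x 7 kernel
refined, m_s = spatial_attention(f, kernel)
```

Each spatial position receives a single weight in (0, 1) shared by all channels, which is what lets the module emphasize the vortex-core regions of the velocity field.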

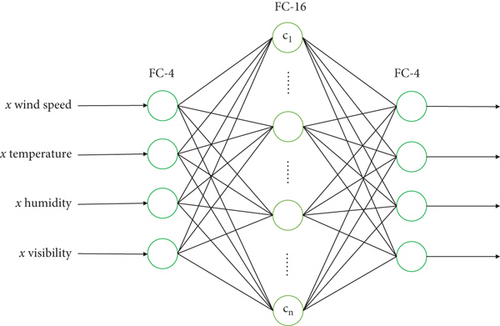

3.3. External Network

Many studies have shown that environmental conditions strongly influence the generation and evolution of wakes [22]. In particular, some external factors may change the three-dimensional shape of the wake vortex; for example, Stephan et al. found in their experiments that gust disturbances might lead to local vortex tilting [23]. Herein, we represent the real-time state of the environment as a series of four-dimensional vectors comprising wind speed, temperature, humidity, and pressure. The multilayer feedforward network depicted in Figure 9 encodes these environmental data into features, which are fused with the features extracted by the backbone network and finally fed into the recognition head.
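The paper does not give the layer sizes of the external network or the exact fusion operation. The following NumPy sketch therefore assumes a two-layer ReLU network, standardization of the raw readings with hypothetical statistics, and fusion by concatenation with the 256-dimensional pooled backbone features before the classification head; all sizes and values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical normalization statistics for [wind speed (m/s), temperature (deg C),
# relative humidity (%), pressure (hPa)]; in practice they would come from the training set.
ENV_MEAN = np.array([3.0, 20.0, 70.0, 1010.0])
ENV_STD = np.array([2.0, 5.0, 15.0, 5.0])

def relu(x):
    return np.maximum(x, 0.0)

class ExternalNet:
    """Hypothetical two-layer feedforward encoder for the environment vector; sizes are assumptions."""
    def __init__(self, in_dim=4, hidden=16, out_dim=32):
        self.w1 = rng.standard_normal((in_dim, hidden)) * 0.1
        self.b1 = np.zeros(hidden)
        self.w2 = rng.standard_normal((hidden, out_dim)) * 0.1
        self.b2 = np.zeros(out_dim)

    def __call__(self, env):
        z = (env - ENV_MEAN) / ENV_STD   # put the four quantities on comparable scales
        return relu(relu(z @ self.w1 + self.b1) @ self.w2 + self.b2)

# A batch of two samples: pooled backbone features (256-D, as in Table 3) and raw environment readings.
backbone_features = rng.standard_normal((2, 256))
env = np.array([[3.2, 18.5, 65.0, 1012.0],
                [5.1, 21.0, 80.0, 1008.0]])
env_features = ExternalNet()(env)
fused = np.concatenate([backbone_features, env_features], axis=1)  # assumed fusion: concatenation
```

Standardizing first keeps a quantity with large raw values, such as pressure in hPa, from dominating the others; the fused vector would then be passed to the fully connected head.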

3.4. Proposed Network Structure

The proposed Conv-Wake model consists mainly of a backbone network, an external network, and a classification head, as illustrated in Figure 4. The backbone network is formed by stacking basic convolutional blocks, each composed of a DPE module and an AMDC module. The DPE module uses dilated convolution to enlarge the receptive field of the patch input without increasing the number of parameters. The AMDC module replaces ordinary convolution with the improved separable convolution for feature extraction, thus reducing the number of parameters and the computational cost of the entire network. Each block downsamples the feature map, while the number of channels remains the same. A global average pooling layer at the end of the backbone network reduces the output to a smaller dimension for the classification head. The specific parameters of the entire Conv-Wake network are listed in Table 3.

| Location | Layer | Operation | Output size |
|---|---|---|---|
| Backbone feature extraction | DPE module (AMDC module) | p=5, dr=2 | 256×13×11 |
| | DPE module (AMDC module) | p=5, dr=2 | 256×3×3 |
| | AdaptiveAvgPool2d | — | 256×1×1 |
| Head (class) | FC | — | 256×1 |
| | FC | — | 128×1 |
| | FC | — | 2×1 |

4. Network Training and Experimental Results

This section describes the structure of the dataset used for our experiments, the evaluation metrics, the training hyperparameters, and the final results.

4.1. Experimental Environment and Configuration

The experiments were implemented in Python on Windows 10, on a computer with 16 GB of RAM and a 12-core CPU running at 3.4 GHz. The deep convolutional neural network was implemented mainly with the PyTorch framework. The model was trained using the stochastic gradient descent (SGD) optimization algorithm [24] with a learning rate of 0.001, a batch size of 20, and 100 training epochs. The losses and accuracies were saved after each epoch. Table 4 summarizes the training parameters.

| Parameter | Value |
|---|---|
| Number of epochs | 100 |
| Batch size | 20 |
| Momentum parameter | 0.9 |
| Learning rate | 0.001 |
| Learning rate decay | 0.1 |
| Optimizer | SGD |
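Table 4 gives the optimizer settings but not the decay schedule. Assuming a step schedule, the configuration would correspond in PyTorch to `torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)` combined with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=..., gamma=0.1)`. The dependency-free sketch below spells out what these settings do; the 30-epoch decay interval is a hypothetical value, not one reported in the paper.

```python
BASE_LR = 0.001    # Table 4
MOMENTUM = 0.9     # Table 4
GAMMA = 0.1        # Table 4, learning rate decay factor
STEP_SIZE = 30     # hypothetical: the decay interval is not reported

def lr_at_epoch(epoch, base_lr=BASE_LR, gamma=GAMMA, step_size=STEP_SIZE):
    """Step decay: multiply the learning rate by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)

def sgd_momentum_step(param, grad, velocity, lr, momentum=MOMENTUM):
    """One update in the form used by torch.optim.SGD (no dampening, no Nesterov):
    v <- momentum * v + grad, then param <- param - lr * v."""
    velocity = momentum * velocity + grad
    return param - lr * velocity, velocity

# Toy example: two momentum steps on f(x) = x**2 (gradient 2x), starting from x = 1.
x, v = 1.0, 0.0
for _ in range(2):
    x, v = sgd_momentum_step(x, 2 * x, v, lr_at_epoch(0))
```

With a 30-epoch interval, the learning rate would fall from 0.001 to 1e-4, 1e-5, and finally 1e-6 over the 100 epochs.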

The wake data used in the recognition experiments were obtained from Doppler LiDAR scans, and each sample is unique. We randomly selected approximately 70% of the data as the training set, 10% as the validation set, and the remaining 20% as the test set. All test results were obtained without data augmentation. Table 5 summarizes the dataset used for wake vortex recognition.

| Datasets | Number of samples | Resolution |
|---|---|---|
| Train | 980 | 43×37 |
| Val | 139 | 43×37 |
| Test | 279 | 43×37 |
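The split proportions implied by the sample counts in Table 5 can be computed directly:

```python
counts = {"Train": 980, "Val": 139, "Test": 279}  # sample counts from Table 5
total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}
for name, share in shares.items():
    print(f"{name}: {counts[name]} samples, {share:.1%}")
```

The 1,398 samples divide into roughly 70%, 10%, and 20% for training, validation, and testing.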

4.2. Impact of DPE Module

To evaluate the effect of introducing dilated convolution into the patch embedding layer for wake vortex recognition, we conducted classification experiments on the collected dataset; the results are listed in Table 6. On the same dataset, using dilated convolution in the patch embedding layer did not increase the number of parameters (0.51 M in all cases). As the dilation rate increased, the larger receptive field resulted in more accurate classification.

| Dilation rate (dr) | Parameters | Acc |
|---|---|---|
| 0 | 0.51 M | 0.992 |
| 1 | 0.51 M | 0.996 |
| 2 | 0.51 M | 1.000 |

4.3. AMDC Ablation Experiment

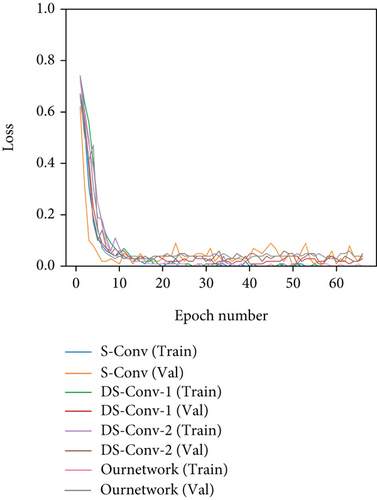

To verify the performance of the proposed AMDC depthwise separable module, we designed a classification experiment on the LiDAR-acquired aircraft wake vortex dataset. The proposed module was compared with the standard convolutional module (S-Conv), the standard depthwise separable convolutional module (DS-Conv-1), and the depthwise separable convolutional module containing only multiple branches (DS-Conv-2). Figure 10 depicts the loss curves of each model on the training and validation sets. Both the training and validation losses decrease as the number of iterations increases, and all four models converge on this relatively small dataset after about 10 iterations. At epoch 50, the loss drops below 0.1 and stabilizes. Compared with standard convolution, the proposed module reduces the oscillation of the loss function and converges faster.

The comparison results of the four modules are summarized in Table 7. The network with the AMDC module obtains the highest recognition accuracy (99.6%) of the four modules. The recognition accuracy of AMDC is higher than that of DS-Conv-2, which suggests that the hybrid attention mechanism improves the feature learning capability of depthwise separable convolutional models. DS-Conv-2 achieves higher recognition accuracy than DS-Conv-1, which demonstrates that fusing group convolutions with different kernel sizes effectively improves the feature extraction ability of depthwise separable convolution.

| Type | Multibranch | Attention | Recall | Precision | Acc |
|---|---|---|---|---|---|
| S-Conv | — | — | 0.984 | 0.992 | 0.989 |
| DS-Conv-1 | — | — | 0.977 | 0.992 | 0.985 |
| DS-Conv-2 | √ | — | 0.992 | 0.992 | 0.992 |
| AMDC | √ | √ | 0.996 | 1.000 | 0.996 |
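Table 7 reports recall, precision, and accuracy, and Table 9 additionally reports the F1-score. These follow the standard definitions from a binary confusion matrix; the sketch below assumes "wake present" as the positive class (not stated in the paper) and uses made-up counts.

```python
def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0

def classification_metrics(tp, fp, fn, tn):
    """Binary metrics with 'wake present' assumed as the positive class."""
    recall = safe_div(tp, tp + fn)              # share of true wakes that are detected
    precision = safe_div(tp, tp + fp)           # share of wake detections that are correct
    accuracy = safe_div(tp + tn, tp + fp + fn + tn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"recall": recall, "precision": precision, "accuracy": accuracy, "f1": f1}

# Hypothetical confusion-matrix counts, for illustration only.
m = classification_metrics(tp=99, fp=0, fn=1, tn=100)
```

With these counts, precision is 1.0 while recall is 0.99. Under the positive-class assumption, this mirrors the AMDC row of Table 7: no false alarms, but an occasional missed wake.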

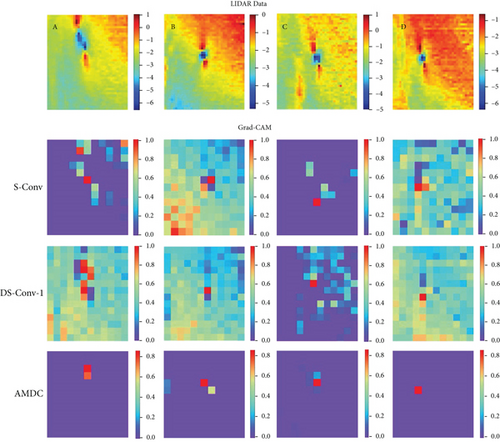

In addition, we used Grad-CAM to explain the AMDC module, as shown in Figure 11. Compared with the standard convolution module and the standard depthwise separable convolution module, the attention mechanism guides the network toward the important feature regions of the target object across different models and different evolution stages of the wake, and Grad-CAM provides a visual interpretation of this behavior. The observations show that, through the combined use of spatial and channel attention, the AMDC module focuses better on the effective information in the wake velocity field: it concentrates on the vortex cores and the aggregated vortex pairs with large gradient changes, discards invalid background regions, and aggregates features from the target object.
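Grad-CAM weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score and keeps the positive part of the weighted sum. The NumPy sketch below shows only that final computation; it assumes the activations and gradients have already been extracted (in PyTorch, for example, via forward and backward hooks on the target layer), and the arrays in the example are synthetic.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM map from one convolutional layer.
    activations: feature maps A^k, shape (K, H, W); gradients: d(class score)/dA^k, same shape."""
    alphas = gradients.mean(axis=(1, 2))                              # one weight per channel
    cam = np.maximum(np.tensordot(alphas, activations, axes=1), 0.0)  # ReLU(sum_k alpha_k * A^k)
    if cam.max() > 0:
        cam = cam / cam.max()                                         # scale to [0, 1] for display
    return cam

rng = np.random.default_rng(0)
A = rng.random((4, 13, 11))   # synthetic activations for 4 channels
G = np.zeros_like(A)
G[0] = 1.0                    # pretend only channel 0 increases the "wake" score
heatmap = grad_cam(A, G)
```

In practice the heat map is upsampled to the input resolution before being overlaid on the velocity field.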

5. Comparison with Other Work

In these experiments, the proposed model was compared under the same conditions with several other methods: KNN [4], SVM [5], RF [6], AlexNet [8], InceptionV2 [9], and CNNLSTM [10]. The results of this comprehensive evaluation are presented in Tables 8 and 9. As Table 8 shows, the accuracy of the proposed method on the dataset is 9.9, 4.7, and 3.9 percentage points higher than that of KNN, SVM, and RF, respectively. This suggests that our model copes with complex scenarios significantly better than methods based on hand-crafted features.

| Model | Published year | Acc. (%) |
|---|---|---|
| Ours | — | 99.6 |
| KNN | 2019 | 89.7 |
| SVM | 2020 | 94.9 |
| RF | 2022 | 95.7 |
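The improvements quoted for Table 8 are differences in percentage points between the accuracies. The short sketch below reproduces them and, as an additional illustrative view not reported in the paper, the relative reduction of the error rate (100% minus accuracy).

```python
OURS = 99.6
baselines = {"KNN": 89.7, "SVM": 94.9, "RF": 95.7}  # Table 8, accuracy in %

# Absolute gain in percentage points.
gains_pp = {name: round(OURS - acc, 1) for name, acc in baselines.items()}

# Fraction of each baseline's errors that the proposed model removes.
relative = {name: round(1 - (100 - OURS) / (100 - acc), 3) for name, acc in baselines.items()}
print(gains_pp)
print(relative)
```

The gains of 9.9, 4.7, and 3.9 percentage points correspond to removing roughly 96%, 92%, and 91% of the baselines' errors.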

| Model | Published year | Acc. (%) | F1-score (%) | Parameters | FPS |
|---|---|---|---|---|---|
| Ours | — | 99.6 | 99.6 | 0.51 M | 100 |
| AlexNet | 2020 | 95.6 | 94.1 | 60 M | 50 |
| CNNLSTM | 2021 | 97.1 | 97.0 | 24 M | 20 |
| InceptionV2 | 2021 | 97.2 | 97.1 | 11 M | 10 |

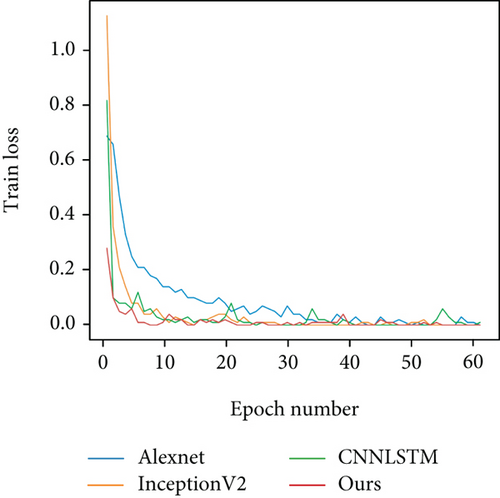

The results in Table 9 show that the recognition accuracy of the proposed model is higher than that of the other neural network architectures, which rely on pseudo-color mapping of the wake data. In particular, the model remains stable after convergence with a smaller fluctuation range, as shown in Figure 12. In summary, our network is built with depthwise separable convolutions and operates on LiDAR data without scaling. It has fewer parameters than the other methods: the model size is roughly 1/100th that of AlexNet (0.51 M vs. 60 M parameters), and the iteration time is about 1/20th that of InceptionV2. In addition, the proposed model runs faster than AlexNet, CNNLSTM, and InceptionV2 (100 FPS vs. 50, 20, and 10 FPS) while maintaining higher accuracy. More importantly, the attention module guides the network to highlight the important parts of the target object and reduces the impact of the background on recognition performance.

6. Conclusions

In this paper, a lightweight convolutional neural network is designed for the recognition of aircraft wake vortices. To keep the model lightweight, the DPE module and the AMDC module are introduced to construct the recognition framework. The DPE module improves the patch input by dilating the convolution kernel while ensuring that the number of parameters does not change significantly. The AMDC module, designed around the characteristics of the aircraft wake itself, strengthens the network's ability to attend to important information through its multibranch structure and hybrid attention mechanism. To evaluate the performance of our model, we conducted experiments on wake data collected at Shenzhen Baoan Airport. The results showed that the AMDC module and the DPE module have good wake feature extraction capabilities. Compared with other methods, the proposed aircraft wake recognition framework achieves higher accuracy and faster recognition speed.

Although the proposed framework has achieved excellent results in aircraft wake recognition, it may have further applications. For example, by simply replacing the task head, the framework could be used for wake parameter estimation, which we will explore in future work.

Conflicts of Interest

The authors declare no conflict of interest.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant No. U1733203), the Program of China Sichuan Science and Technology (Grant No. 2021YFS0319), and the Special Project of Local Science and Technology Development Guided by the Central Government in 2020 (Grant No. 2020ZYD094).

Open Research

Data Availability

The data used to support the findings of this study have not been made available because the raw data required to reproduce these findings cannot be shared at this time as the data also form a part of an ongoing study.