Performance Optimization Mechanism of Adolescent Physical Training Based on Reinforcement Learning and Markov Model

Abstract

When teenagers do not get sufficient physical exercise during the growth and development stage, the related central nervous system is prone to degeneration and their physical fitness gradually declines. Adolescent physical training can be conducted effectively by monitoring the exercise process in real time and quantifying the exercise data. This involves two issues: real-time data monitoring and quantitative evaluation of the data. Therefore, this paper proposes a novel method based on Reinforcement Learning (RL) and the Markov model to monitor and evaluate the effect of physical training. Specifically, RL is used to optimize the adaptive bit rate of surveillance video to support real-time data monitoring, and the Markov model is employed to evaluate health during physical training. Finally, we develop a real-time exercise data monitoring system and compare the proposed mechanism with state-of-the-art mechanisms on this platform. The experimental results indicate that the proposed performance optimization mechanism conducts physical training more efficiently; in particular, the average evaluation deviation rate of the Markov model is kept within 0.16%.

1. Introduction

The physical fitness of teenagers has long attracted global attention because it has a considerable influence on the rise and fall of every country. When teenagers do not get sufficient physical exercise during the growth and development stage, the related central nervous system gradually degenerates and their physical fitness declines accordingly. Furthermore, reliable news reports show that sudden deaths among high school and college students occur frequently, which has drawn attention to fitness problems during physical training [1]. In short, adolescent physical training is of great importance, and exercise (public or private, individual or group) must be done properly.

Research shows that physical training data not only reflect the real trajectories of exercisers but also contain abundant and valuable information about the whole exercise process [2], including time, speed, acceleration, steps, and energy consumption. Among these, energy consumption is an important metric because it reveals two key signals: the amount of exercise and the exercise intensity. Both can therefore be obtained by monitoring the consumed energy, and the physical training can then be guided and adjusted so that the exercise remains healthy and reasonable. In addition, monitoring energy consumption allows emergencies caused by overtraining to be detected in time, preventing worse tragedies as far as possible.

Video surveillance therefore plays a non-negligible role in monitoring physical training data. However, traditional video surveillance has some limitations. On the one hand, computation speed cannot keep pace with the growth of application data; on the other hand, the inherent transmission overhead is very large, and the available network bandwidth does not always match the transmission of sliced video segments [3]. Therefore, it is necessary to optimize the Adaptive Bit Rate (ABR) [4] to guarantee that monitoring data arrive in real time.

A typical ABR algorithm has a caching stage and a stable stage [5]. In the first stage, the ABR algorithm usually tries to fill the cache as quickly as possible; in the second stage, it tries to improve the quality of the video segments while preventing cache overflow. Existing ABR optimization algorithms include traditional ones and Artificial Intelligence- (AI-) based ones. To the best of our knowledge, traditional ABR optimization algorithms cannot obtain the real-time network status needed to adapt to a dynamic network environment. In contrast, AI-based ABR optimization algorithms can adaptively adjust network parameters and achieve relatively optimal video transmission [6]. Among AI techniques, Reinforcement Learning (RL) [7] is the most popular. RL obtains the data it needs through interaction between intelligent agents and the external environment, so no additional training dataset has to be prepared before training. Compared with other RL-based ABR optimization algorithms, Q-learning-based ones achieve a better quality of experience. However, current Q-learning-based ABR optimization algorithms cannot encode continuous state values and fail to converge quickly when the state space is large. Therefore, this paper improves Q-learning to optimize the ABR algorithm.
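The two-stage behaviour described above can be illustrated with a deliberately simple rule-based sketch. It is not one of the cited algorithms: the bit-rate ladder, the 10 s startup target, the 30 s buffer cap, and the function name `choose_bitrate` are all hypothetical.

```python
from typing import Tuple

# Hypothetical two-stage ABR rule; the ladder and thresholds are illustrative assumptions.
BITRATES_KBPS = [300, 750, 1200, 1850, 2850, 4300]  # assumed bit-rate ladder


def choose_bitrate(bandwidth_kbps: float, buffer_s: float,
                   startup_target_s: float = 10.0,
                   buffer_cap_s: float = 30.0) -> Tuple[int, float]:
    """Return (bit rate in kbps, seconds to wait before the next request)."""
    if buffer_s < startup_target_s:
        # Caching stage: the lowest bit rate fills the buffer as quickly as possible.
        return BITRATES_KBPS[0], 0.0
    # Stable stage: the highest bit rate that the measured bandwidth can sustain.
    feasible = [b for b in BITRATES_KBPS if b <= bandwidth_kbps]
    bitrate = feasible[-1] if feasible else BITRATES_KBPS[0]
    # Waiting until the buffer drains back to the cap prevents buffer overflow.
    wait_s = max(0.0, buffer_s - buffer_cap_s)
    return bitrate, wait_s
```

For example, `choose_bitrate(2000, 15.0)` is past the caching stage and returns `(1850, 0.0)`, the highest assumed level below 2000 kbps.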

In addition to data monitoring, quantitative evaluation of the physical training data is also very important. Specifically, physical training features can be extracted by analyzing the monitored exercise data [8]; on this basis, the underlying patterns of physical training can be explored and a corresponding physical fitness evaluation model can be built, covering aspects such as exercise effect, exercise tolerance, and improvement. Based on this evaluation model, differentiated physical training programs can be developed effectively.

With these considerations, this paper proposes a novel method based on RL and the Markov model to monitor and evaluate the effect of adolescent physical training. The major contributions are summarized as follows. (i) Q-learning-based RL, combined with the nearest neighbor algorithm, is exploited to optimize the ABR of surveillance video. (ii) The Markov model is used to evaluate health during physical training by considering the energy consumption metric. (iii) A real-time exercise data monitoring system is implemented, and the performance optimization effect on adolescent physical training is demonstrated on this platform.

The rest of the paper is organized as follows. Section 2 reviews the related work. The improved Q-learning-based ABR optimization is proposed in Section 3. Section 4 gives the physical fitness evaluation model. The experimental results are shown in Section 5. Section 6 concludes this paper.

2. Related Work

Physical training has long been a research focus, and several cutting-edge works have been developed. Buckinx et al. evaluated the effect of citrulline supplementation combined with high-intensity interval training on physical performance in healthy older adults [9]. Ana et al. proposed a multicomponent exercise training method combined with nutritional counselling to improve physical education [10]. Konstantinos et al. compared the effectiveness of virtual and physical training for teaching a bimanual assembly task and, in a novel approach, introduced task complexity as an indicator of assembly errors during final assembly [11]. Roland Van Den et al. studied how the training order of explosive strength and plyometric training affects different physical abilities in adolescent handball players [12]. Rodrigo et al. investigated the effect of plyometric training on soccer players' physical fitness in terms of muscle power, speed, and change-of-direction speed tasks [13]. Simpson et al. enhanced the physical performance of professional rugby league players via optimised force-velocity training [14]. These works provide professional research on physical training, but they do not consider a networked physical training mode, i.e., they ignore the transmission of data generated by video surveillance. To this end, Sun and Zou focused on video transmission and improved the performance of extended training by using mobile edge computing [3]. However, [3, 9–14] still did not consider ABR optimization during the transmission of physical training data.

ABR optimization plays an important role in the networked physical training mode. Traditional optimization algorithms are usually heuristic. Cicco et al. proposed two policies for ABR optimization: increasing the bit rate gradually and decreasing it rapidly [15]. Heuristic ABR optimization suffers from suboptimality and abrupt fluctuations in transmission quality, which considerably degrade the quality of experience. Therefore, Mok et al. focused on improving the quality of experience [16] so that the transmission quality stays at a stable level. Furthermore, traditional ABR optimization algorithms cannot build predictable and describable mathematical models for concrete problems. For this purpose, some researchers used control theory to optimize ABR, where a controller handles the input parameters. For example, Xiong et al. proposed an adaptive control model based on fuzzy logic, which effectively copes with dynamic network changes [17]. Besides, Vergados et al. used fuzzy logic to design an adaptive policy that takes varying cache information as input [18], preventing cache overflow. Although [17, 18] achieved good ABR optimization results, they could not obtain the real-time network status to adapt to a dynamic network environment. To this end, AI-based ABR optimization algorithms were proposed. For example, Chien et al. mapped feature values related to network bandwidth to the video bit rate by using a random forest classifier [19]. Basso et al. [20] trained a classification model and estimated the bit rate based on it. However, ABR optimization algorithms such as [19, 20] need a ready-made dataset for training.
In contrast, RL-based ABR optimization algorithms obtain the data they need by interacting with the environment, without preparing additional datasets. Among them, Q-learning-based ABR optimization algorithms achieve relatively the best quality of experience [21]. Nevertheless, it is very difficult for current Q-learning-based ABR optimization algorithms to encode continuous state values and to converge quickly when the state space is large.

Building an evaluation model for physical training data is very important because it helps conduct and adjust the physical training effectively. ElSamahy et al. presented a computer-based system for safe physical fitness evaluation of subjects undergoing aerobic physical stress, in which a proportional-integral fuzzy controller regulates the applied physical stress so that the predefined target heart rate is not exceeded [22]. Zhong and Hu designed a WebGIS-based interactive platform with seven functional modules to collect and analyze national physical fitness indicators [23]. Heldens et al. studied a care data evaluation model to address the association between performance parameters of physical fitness and postoperative outcomes in patients undergoing colorectal surgery [24]. Ma proposed a multilevel estimation and fuzzy evaluation of the physical fitness and health of college students in regular institutions of higher learning based on the classification and regression tree algorithm [25]. Qu et al. evaluated the physical fitness of children with congenital heart diseases versus the healthy population [26]. Although these references built evaluation models for physical fitness, they did not address adolescent physical training. Regarding this, Guo et al. proposed a machine learning-based physical fitness evaluation model for wearable running monitoring of teenagers, in which a variant of the gradient boosting machine, combined with advanced feature selection and Bayesian hyperparameter optimization, was employed [27]. However, [27] did not consider ABR optimization, so it cannot fully optimize the performance of adolescent physical training.

3. Q-Learning-Based RL for ABR Optimization

RL-based ABR optimization algorithms face a tradeoff between state space division and convergence speed. Specifically, a more fine-grained division generates more states, so the system behavior can be described more precisely, but convergence becomes slow. Conversely, a coarser division generates fewer states and accelerates convergence, at the cost of a less precise description. Besides, the states in the ABR optimization problem are usually continuous, and current Q-learning-based ABR optimization algorithms only discretize them in a simple way. Therefore, this section combines Q-learning with the nearest neighbor algorithm to address these problems.
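The tradeoff can be made concrete with uniform discretization of the state $(BD_i, CH_i, q_{i-1})$ defined in Section 3.1: with b bins per feature, a tabular Q-learner faces $b^3$ distinct states. The value ranges, bin counts, and helper names below are hypothetical and only illustrate the growth.

```python
# Uniform discretisation of a continuous ABR state; ranges and bin counts are hypothetical.
RANGES = {"bandwidth_mbps": (0.0, 10.0), "buffer_s": (0.0, 30.0), "prev_quality": (0.0, 5.0)}


def discretize(value: float, low: float, high: float, bins: int) -> int:
    """Index of the equal-width bin containing `value`, clamped to [0, bins - 1]."""
    idx = int((value - low) / (high - low) * bins)
    return min(max(idx, 0), bins - 1)


def discretize_state(state: dict, bins: int) -> tuple:
    """Map each continuous feature to its bin index."""
    return tuple(discretize(state[k], lo, hi, bins) for k, (lo, hi) in RANGES.items())


def table_rows(bins: int) -> int:
    """Number of distinct discrete states a tabular Q-learner would have to visit."""
    return bins ** len(RANGES)


s = {"bandwidth_mbps": 4.9, "buffer_s": 12.0, "prev_quality": 3.0}
print(discretize_state(s, 5), table_rows(5))    # coarse grid: few states, crude description
print(discretize_state(s, 20), table_rows(20))  # fine grid: more precise, 64 times more states
```

Going from 5 to 20 bins per feature raises the number of states from 125 to 8000, and every one of them must be visited often enough for its Q-values to converge.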

3.1. ABR Decision Model

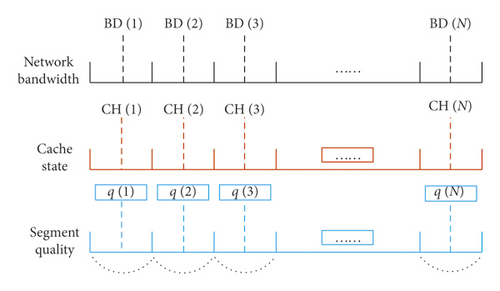

Suppose that the video is encoded at several bit rates and each bit-rate version consists of N video segments, denoted by $seg_1, seg_2, \ldots, seg_N$. For each segment, the client selects one of the bit rates according to network status information such as network bandwidth and caching condition. Segment selection can therefore be regarded as a sequential decision process whose objective is to guarantee stable video display at a high bit rate while the network bandwidth changes dynamically. Given this, this paper assumes that an intelligent agent decides how to download each video segment. Mathematically, for any $seg_i$, we can observe the network bandwidth $BD_i$, the caching state $CH_i$, and the quality of the previous segment $q_{i-1}$, and the corresponding environment state is defined as $s_i = (BD_i, CH_i, q_{i-1})$.
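To make the decision model concrete, the following minimal sketch represents the state $s_i$ and one download step. The buffer update follows a common simplified playback model (the buffer drains while a segment downloads and gains one segment duration afterwards); it is an assumed stand-in for the paper's formula (2), which is not reproduced here, and the 4 s segment duration and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

SEGMENT_S = 4.0  # assumed duration of one video segment, in seconds


@dataclass(frozen=True)
class ABRState:
    """s_i = (BD_i, CH_i, q_{i-1})."""
    bandwidth_mbps: float  # BD_i: throughput observed for segment i
    buffer_s: float        # CH_i: seconds of video currently cached
    prev_quality: int      # q_{i-1}: bit-rate level chosen for the previous segment


def download(state: ABRState, bitrate_mbps: float, level: int,
             next_bandwidth_mbps: float) -> Tuple[ABRState, float]:
    """Download seg_i at `bitrate_mbps`; return (s_{i+1}, stall time in seconds)."""
    download_s = bitrate_mbps * SEGMENT_S / state.bandwidth_mbps
    stall_s = max(download_s - state.buffer_s, 0.0)  # playback freezes if the buffer empties
    buffer_s = max(state.buffer_s - download_s, 0.0) + SEGMENT_S
    return ABRState(next_bandwidth_mbps, buffer_s, level), stall_s
```

For example, downloading a 1 Mbps segment over a 2 Mbps link with 3 s of video buffered takes 2 s, causes no stall, and leaves 5 s in the buffer.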

3.2. Nearest Neighbor Algorithm for Q-Learning

3.2.1. K-Nearest Neighbor Proposal

Formula (7) can be solved by dynamic programming to obtain the optimal strategy. However, dynamic programming has high computational complexity; thus, Kröse [29] uses Q-learning to obtain the optimal strategy at relatively low computational complexity. Q-learning maintains a Q-table whose entries map each state-action pair $(s, a)$ to its value $Q(s, a)$. As mentioned above, Q-learning has two limitations here: it cannot encode continuous state values, and it converges slowly when the state space is large. This paper therefore uses the K-nearest neighbor algorithm to improve it.
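The K-nearest neighbor idea can be sketched as follows: instead of requiring an exact table hit, $Q(s, :)$ for a continuous state is estimated from the K stored states closest to it. This is a minimal sketch under assumptions: Euclidean distance on normalized state features, inverse-distance weighting, and the name `knn_q_values` are our choices, not the paper's formula (10). The default K = 6 is borrowed from the parameter settings in Section 5.3, assuming that K there denotes the neighbor count.

```python
import numpy as np


def knn_q_values(state, stored_states, q_table, k=6, eps=1e-8):
    """Estimate Q(s, :) from the K nearest states already stored in the Q-table.

    state:         (d,) continuous state, features assumed normalised to similar scales
    stored_states: (M, d) states that have Q-table rows
    q_table:       (M, A) row m holds Q(stored_states[m], a) for every action a
    """
    state = np.asarray(state, dtype=float)
    stored_states = np.asarray(stored_states, dtype=float)
    q_table = np.asarray(q_table, dtype=float)
    dists = np.linalg.norm(stored_states - state, axis=1)
    nearest = np.argsort(dists)[:min(k, len(dists))]
    # Closer neighbours get larger weights; eps avoids division by zero on exact hits.
    weights = 1.0 / (dists[nearest] + eps)
    weights /= weights.sum()
    return weights @ q_table[nearest]


stored = [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0]]
q = [[1.0, 0.0], [3.0, 0.0], [100.0, 100.0]]
print(knn_q_values([0.5, 0.0], stored, q, k=2))  # averages the two equally close rows
```

A distance-weighted estimate lets an unseen continuous state reuse what neighbouring states have already learned, which is one way to avoid both a fixed discretization and the slow convergence of a very fine grid.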

3.2.2. ABR Optimization

Algorithm 1 gives the pseudocode of the Q-learning-based ABR optimization combined with the K-nearest neighbor algorithm.

Algorithm 1: ABR optimization algorithm.

Input: State space and action space

Output: Q-value

- Initialize the Q-table;
- for each state $s_i$ do
  - Compute $Q(s_i, :)$ with formula (10);
  - Determine the bit rate $br(a_i)$ from $Q(s_i, :)$;
  - Request to download $seg_i$;
  - Update $CH_i$ with formula (2);
  - Compute $R(i)$ with formula (3);
  - if $s_i \in S$ then
    - Update the Q-value with formula (12);
  - else
    - Update the Q-value with formula (13);
  - end if
- end for
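Algorithm 1 can be turned into a runnable toy loop. Formulas (2), (3), (10), (12), and (13) are not reproduced in this text, so each is replaced by an assumed stand-in: a simplified playback buffer model, a QoE-style reward (bit rate minus stall and switching penalties), an inverse-distance K-nearest-neighbor estimate of $Q(s_i, :)$, a standard Q-learning update when $s_i$ is (approximately) already stored, and insertion of a new row seeded from its neighbors otherwise. The bit-rate ladder, normalization scales, match tolerance, learning rate, discount, and exploration rate are hypothetical.

```python
import numpy as np

# Hypothetical constants; none of them are taken from the paper.
BITRATES = np.array([0.3, 0.75, 1.2, 1.85, 2.85, 4.3])  # bit-rate ladder (Mbps)
SEG_S = 4.0                          # segment duration (s)
SCALE = np.array([5.0, 30.0, 5.0])   # normalises (BD, CH, q_prev)
LR, DISCOUNT, EPSILON, MATCH_TOL = 0.1, 0.9, 0.1, 0.05


def knn_q(state, states, q, k):
    """Stand-in for formula (10): inverse-distance-weighted K-NN estimate of Q(s, :)."""
    d = np.linalg.norm(states - state, axis=1)
    idx = np.argsort(d)[:min(k, len(d))]
    w = 1.0 / (d[idx] + 1e-8)
    return (w / w.sum()) @ q[idx]


def run_abr(bandwidths, k=6, seed=0):
    """One pass of Algorithm 1 over a bandwidth trace (one entry per segment, in Mbps)."""
    rng = np.random.default_rng(seed)
    states = np.zeros((1, 3))              # stored states S (seeded with one entry)
    q = np.zeros((1, len(BITRATES)))       # Q-table rows aligned with `states`
    buffer_s, prev = 0.0, 0
    for i in range(len(bandwidths) - 1):
        s = np.array([bandwidths[i], buffer_s, prev]) / SCALE
        q_s = knn_q(s, states, q, k)
        # Choose br(a_i): epsilon-greedy on the estimated Q(s_i, :).
        if rng.random() < EPSILON:
            a = int(rng.integers(len(BITRATES)))
        else:
            a = int(np.argmax(q_s))
        # "Download" seg_i and update the buffer CH (stand-in for formula (2)).
        download_s = BITRATES[a] * SEG_S / bandwidths[i]
        stall_s = max(download_s - buffer_s, 0.0)
        buffer_s = max(buffer_s - download_s, 0.0) + SEG_S
        # Reward R(i) (stand-in for formula (3)): quality minus stall and switching penalties.
        reward = BITRATES[a] - BITRATES[-1] * stall_s - abs(BITRATES[a] - BITRATES[prev])
        prev = a
        s_next = np.array([bandwidths[i + 1], buffer_s, prev]) / SCALE
        target = reward + DISCOUNT * knn_q(s_next, states, q, k).max()
        d = np.linalg.norm(states - s, axis=1)
        j = int(np.argmin(d))
        if d[j] <= MATCH_TOL:
            # s_i already in S: ordinary Q-learning update (stand-in for formula (12)).
            q[j, a] += LR * (target - q[j, a])
        else:
            # New state: add a row seeded from its neighbours (stand-in for formula (13)).
            row = q_s.copy()
            row[a] += LR * (target - row[a])
            states = np.vstack([states, s])
            q = np.vstack([q, row])
    return states, q
```

For example, `run_abr([2.0, 3.0, 1.5, 4.0, 2.5, 3.5, 1.0, 5.0])` returns the stored states and their Q-values after one pass; many passes over longer traces would be needed before the values become meaningful.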

4. Physical Fitness Evaluation

Quantitative evaluation of the physical training data is also very important. However, the physical training process is intricate and highly random, which makes quantitative evaluation difficult. Traditional physical evaluation models (e.g., [22–26]) usually consider relatively simple and subjective factors, which limits their usefulness. Thus, a proper model is required to evaluate physical training.

4.1. Thought Incubation

- (i) Individual Exercise Modelling. (i) The generated physical training data are assigned ranks; (ii) the transition probability matrix is obtained from the changes in data rank; (iii) the stable-state probability vector is computed from the stability of the Markov process to predict the stable state; and (iv) subsequent physical training is conducted based on the resulting exercise limit.

- (ii) Population Exercise Modelling. The first two steps are the same as in individual exercise modelling. The third step compares the generated population data and adjusts the improvement degree to reflect the overall physical training effect.

Here, $ep_{ij}$ is the transition probability, $ed_{ij}$ is the energy consumption span difference computed by formula (8), and $c$ is the regularity parameter.

4.2. Modelling for Two Situations

4.2.1. Individual Modelling

- (i) State Space Division. The maximal and minimal values in the sequence of energy consumption rates are found and denoted by $cr_{max}$ and $cr_{min}$, respectively. Suppose that the range is divided into θ state intervals; the interval length is then defined as

  $$\Delta cr = \frac{cr_{max} - cr_{min}}{\theta}.$$

  On this basis, the divided intervals are $[cr_{min}, cr_{min} + \Delta cr), \ldots, [cr_{min} + (\theta - 1)\Delta cr, cr_{max}]$.

- (ii) Transition Probability Matrix Computation. The transition probabilities between the states of consecutive time periods are computed and recorded in a matrix denoted by $eP$, whose entry $ep_{ij}$ is the probability of moving from the ith state interval to the jth one.

- (iii) Stable-State Vector Determination. After $t_{max}$ energy consumption rates have been recorded, an initial state vector is given. According to the stability of the Markov chain, we can then obtain a state vector $eS^* = \{es_1, es_2, \ldots, es_\theta\}$ that satisfies $eS^* \cdot eP = eS^*$; $eS^*$ is called the stable-state vector.

- (iv) Limited Energy Consumption Rate Computation. For the θ state intervals, the maximal value of each interval is selected, and the limited energy consumption rate $cr_{lim}$ is derived from these interval maxima.

In short, if the current energy consumption rate $cr_i$ exceeds $cr_{lim}$, the current physical training is considered dangerous, and the system notifies the teenager to slow down.
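The four individual-modelling steps can be sketched with NumPy as follows. The interval length follows step (i); the transition matrix is the usual count-based estimate, with rows of never-visited intervals set to uniform (an assumption); and the stable-state vector is found by power iteration, which assumes the chain converges (for example, an irreducible aperiodic chain). Because the defining formula for $cr_{lim}$ is not reproduced in this text, the sketch uses a hypothetical stand-in: the stable-state-weighted mean of the interval upper bounds. The function names are also hypothetical.

```python
import numpy as np


def individual_markov_model(cr, theta=24, max_iter=10_000, tol=1e-12):
    """Return (eP, eS*, cr_lim) for a sequence of energy consumption rates `cr`.

    Assumes `cr` is not constant (otherwise the interval length is zero).
    """
    cr = np.asarray(cr, dtype=float)
    cr_min, cr_max = cr.min(), cr.max()
    delta = (cr_max - cr_min) / theta
    # (i) State division: index of the interval containing each rate;
    #     the last interval is closed so that cr_max maps to theta - 1.
    states = np.minimum(((cr - cr_min) / delta).astype(int), theta - 1)
    # (ii) Transition matrix eP from counts of consecutive-period transitions.
    counts = np.zeros((theta, theta))
    for a, b in zip(states[:-1], states[1:]):
        counts[a, b] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    eP = np.divide(counts, row_sums, out=np.full_like(counts, 1.0 / theta),
                   where=row_sums > 0)
    # (iii) Stable-state vector eS* with eS* @ eP = eS*, via power iteration.
    eS = np.full(theta, 1.0 / theta)
    for _ in range(max_iter):
        nxt = eS @ eP
        done = np.abs(nxt - eS).max() < tol
        eS = nxt
        if done:
            break
    # (iv) HYPOTHETICAL stand-in for cr_lim: eS*-weighted mean of interval upper bounds.
    interval_max = cr_min + delta * np.arange(1, theta + 1)
    cr_lim = float(eS @ interval_max)
    return eP, eS, cr_lim


def is_dangerous(cr_i, cr_lim):
    """Alarm rule from the text: warn when the current rate exceeds cr_lim."""
    return cr_i > cr_lim
```

The default θ = 24 matches the setting in Section 5.2; feeding in $t_{max}$ = 300 recorded rates would mirror that setup.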

4.2.2. Population Modelling

- (i) State Space Division. The maximal and minimal values in the population data sequence are found, and the range between them is divided into θ state intervals of equal length, in the same way as in the individual model.

- (ii) Transition Probability Matrix Computation. This step is the same as in the individual evaluation model.

- (iii) Transition Improvement Degree Computation. The transition improvement degree $eK_{ij}$ is obtained by formula (16).

In short, if $eK_{ij}$ is larger than $\sum eK_{ij}/(\theta - 1)$, the physical training effect is considered improved, and the system notifies the population to intensify the physical training.
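The decision rule above can be sketched as a comparison of each improvement degree with the average of its row. Formula (16) for $eK_{ij}$ is not reproduced in this text, so the sketch takes the $eK$ matrix as given. Reading the sum in $\sum eK_{ij}/(\theta - 1)$ as running over the $\theta - 1$ off-diagonal entries $j \neq i$ of row $i$ is our assumption, as is the function name.

```python
import numpy as np


def improved_transitions(eK):
    """Flag (i, j) pairs whose improvement degree exceeds the row average.

    eK: (theta, theta) transition improvement degrees from formula (16).
    ASSUMPTION: the average sums the theta - 1 entries j != i of row i.
    """
    eK = np.asarray(eK, dtype=float)
    theta = eK.shape[0]
    off_diag = ~np.eye(theta, dtype=bool)
    row_avg = (eK * off_diag).sum(axis=1) / (theta - 1)
    return (eK > row_avg[:, None]) & off_diag


flags = improved_transitions([[0, 1, 3], [2, 0, 0], [1, 1, 1]])
print(flags)  # True where the population should be told to intensify training
```

With θ = 3, row 0 has off-diagonal average (1 + 3) / 2 = 2, so only the transition to state 2 is flagged.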

5. Simulation Results

In this section, we conduct simulation experiments. First, we develop the data monitoring system. Then, we test the physical training evaluation models. Finally, we verify the overall performance optimization of adolescent physical training. The last two parts are both carried out on the developed system platform. The simulation settings were chosen by running several simulations and selecting a suitable parameter combination.

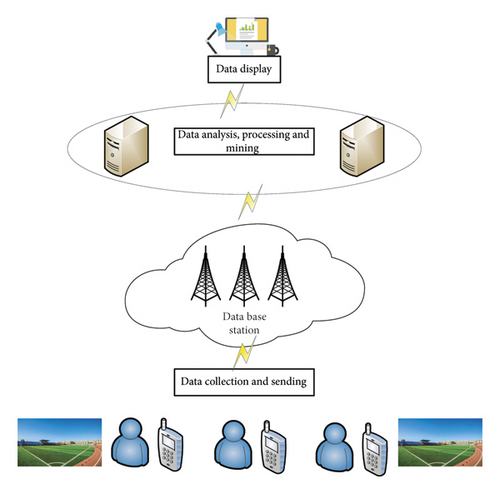

5.1. System Implementation

The real-time data monitoring system combines computer technology, communication technology, and sports science, and provides real-time exercise monitoring services by collecting data on physical training. For adolescent physical training, the architecture of the data monitoring system platform is shown in Figure 2. The platform includes four modules: data collection, data receiving and sending, data analysis and handling, and data display. The last module directly provides guidance for adolescent physical training based on the monitored data.

5.2. Model Evaluation

This section evaluates two models: the individual evaluation model and the population evaluation model. The parameters are set as follows: c = 3, $t_{max}$ = 300, θ = 24, and a time period of 30 s. We use the deviation rate to measure whether the evaluation models are acceptable. For the individual evaluation model, we run 12 experiments on 1000 teenagers, one experiment per day; the results on the conducted physical training are shown in Table 1. For the population evaluation model, we likewise run 12 experiments, one per day, on 1000 populations, each consisting of 20 teenagers; the results are shown in Table 2. The deviation rate of an experiment is defined as the ratio of the number of improper conducts to the total number of trials in that experiment (1000).

Table 1: Results of the individual evaluation model (1000 teenagers per experiment).

| Experiment no. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| #Correct conduct | 998 | 1000 | 997 | 999 | 999 | 998 | 999 | 999 | 999 | 997 | 998 | 1000 |
| #Improper conduct | 2 | 0 | 3 | 1 | 1 | 2 | 1 | 1 | 1 | 3 | 2 | 0 |
| Deviation rate (%) | 0.2 | 0 | 0.3 | 0.1 | 0.1 | 0.2 | 0.1 | 0.1 | 0.1 | 0.3 | 0.2 | 0 |

Table 2: Results of the population evaluation model (1000 populations of 20 teenagers per experiment).

| Experiment no. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| #Correct conduct | 1000 | 998 | 999 | 1000 | 997 | 1000 | 999 | 999 | 1000 | 999 | 1000 | 998 |
| #Improper conduct | 0 | 2 | 1 | 0 | 3 | 0 | 1 | 1 | 0 | 1 | 0 | 2 |
| Deviation rate (%) | 0 | 0.2 | 0.1 | 0 | 0.3 | 0 | 0.1 | 0.1 | 0 | 0.1 | 0 | 0.2 |

As seen from Tables 1 and 2, the deviation rate of every experiment is at most 0.3%. Specifically, the average deviation rate is 0.142% for the individual evaluation model (17 improper conducts in 12,000 trials) and 0.092% for the population evaluation model (11 in 12,000). Both values are within 0.16%, which implies that the Markov model is effective for evaluating adolescent physical training. Furthermore, the Markov model evaluates the population physical training situation better, because 0.092% < 0.142%.
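The deviation rates and their averages can be recomputed directly from the improper-conduct counts in Tables 1 and 2, where each experiment covers 1000 trials. This short check uses only the table values; the helper names are ours.

```python
# Improper-conduct counts transcribed from Tables 1 and 2 (12 experiments each).
IMPROPER_INDIVIDUAL = [2, 0, 3, 1, 1, 2, 1, 1, 1, 3, 2, 0]
IMPROPER_POPULATION = [0, 2, 1, 0, 3, 0, 1, 1, 0, 1, 0, 2]
TRIALS = 1000  # trials per experiment


def deviation_rates(improper, trials=TRIALS):
    """Per-experiment deviation rate in percent: improper / trials * 100."""
    return [100.0 * n / trials for n in improper]


def average_deviation_rate(improper, trials=TRIALS):
    """Mean deviation rate over all experiments, in percent."""
    return 100.0 * sum(improper) / (trials * len(improper))


for name, counts in (("individual", IMPROPER_INDIVIDUAL), ("population", IMPROPER_POPULATION)):
    print(name, max(deviation_rates(counts)), round(average_deviation_rate(counts), 3))
# individual 0.3 0.142
# population 0.3 0.092
```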

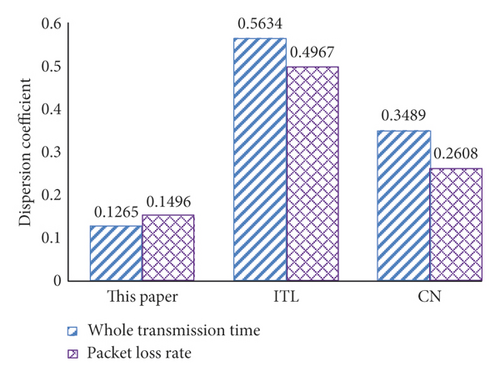

5.3. Performance Verification

This section verifies the optimization performance for adolescent physical training by comparing with two benchmarks, [3] and [27], published in Internet Technology Letters (ITL) and Computer Networks (CN), respectively. The whole transmission time and the packet loss rate are adopted as the two performance metrics. The parameters are set as follows: γ = 0.45, α = 0.6, β = 0.4, λ = 0.9, K = 6, η = 0.35, and ξ = 0.4. The number of simulations is set to 10. The results on the whole transmission time and the packet loss rate are shown in Tables 3 and 4, respectively.

Table 3: Whole transmission time in each simulation.

| Experiment no. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| This paper | 43.26 | 44.61 | 43.97 | 45.67 | 43.09 | 44.56 | 44.13 | 45.02 | 43.86 | 42.61 |
| ITL | 56.67 | 55.37 | 58.64 | 57.26 | 55.97 | 56.43 | 59.06 | 58.34 | 53.49 | 55.73 |
| CN | 83.18 | 82.67 | 84.62 | 85.64 | 85.31 | 84.64 | 83.59 | 84.22 | 82.98 | 84.55 |

Table 4: Packet loss rate in each simulation.

| Experiment no. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| This paper | 0.156 | 0.173 | 0.146 | 0.152 | 0.139 | 0.109 | 0.154 | 0.167 | 0.141 | 0.121 |
| ITL | 0.648 | 0.694 | 0.703 | 0.625 | 0.713 | 0.633 | 0.687 | 0.701 | 0.692 | 0.681 |
| CN | 0.355 | 0.386 | 0.321 | 0.364 | 0.339 | 0.309 | 0.359 | 0.316 | 0.356 | 0.392 |

Tables 3 and 4 show that the proposed mechanism always achieves the shortest whole transmission time and the lowest packet loss rate of the three mechanisms. This indicates that it delivers the best optimization performance for adolescent physical training among the compared mechanisms, because RL is used to obtain a relatively optimal ABR solution and the Markov model is used to obtain a relatively accurate evaluation of the training effect. In addition, to evaluate stability, Figure 3 shows the dispersion coefficients of the two metrics. The proposed mechanism always has the smallest dispersion coefficient owing to the stability provided by RL, which implies that it is the most stable mechanism.
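The per-run comparison can be checked from the values transcribed from Tables 3 and 4. This sketch reports each mechanism's mean and whether the proposed mechanism is strictly lowest in every run; the helper name is ours.

```python
from statistics import mean

# Values transcribed from Table 3 (whole transmission time) and Table 4 (packet loss rate).
TIME = {
    "This paper": [43.26, 44.61, 43.97, 45.67, 43.09, 44.56, 44.13, 45.02, 43.86, 42.61],
    "ITL": [56.67, 55.37, 58.64, 57.26, 55.97, 56.43, 59.06, 58.34, 53.49, 55.73],
    "CN": [83.18, 82.67, 84.62, 85.64, 85.31, 84.64, 83.59, 84.22, 82.98, 84.55],
}
LOSS = {
    "This paper": [0.156, 0.173, 0.146, 0.152, 0.139, 0.109, 0.154, 0.167, 0.141, 0.121],
    "ITL": [0.648, 0.694, 0.703, 0.625, 0.713, 0.633, 0.687, 0.701, 0.692, 0.681],
    "CN": [0.355, 0.386, 0.321, 0.364, 0.339, 0.309, 0.359, 0.316, 0.356, 0.392],
}


def lowest_in_every_run(table, name="This paper"):
    """True if `name` has a strictly smaller value than every other mechanism in each run."""
    others = [values for key, values in table.items() if key != name]
    return all(v < min(o[r] for o in others) for r, v in enumerate(table[name]))


for label, table in (("transmission time", TIME), ("packet loss rate", LOSS)):
    means = {k: round(mean(v), 4) for k, v in table.items()}
    print(label, means, lowest_in_every_run(table))
```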

6. Conclusions

The physical fitness of teenagers has long attracted global attention because it has a considerable influence on the rise and fall of every country. This paper proposes to optimize adolescent physical training based on RL and the Markov model. Because RL-based ABR optimization algorithms face a tradeoff between state space division and convergence speed, this paper improves Q-learning by using the K-nearest neighbor algorithm. In addition, we present Markov model-based physical fitness evaluation models for individual and population exercise. Simulation experiments on the developed data monitoring system platform demonstrate that the proposed mechanism consistently achieves the best optimization performance for adolescent physical training among the compared mechanisms and is the most stable. In the future, we will deploy more functions on our system platform, such as adaptive recognition and fall warning. We also plan to conduct large-scale experiments on a real testbed instead of the system platform.

Conflicts of Interest

The authors declare that they have no conflicts of interest regarding the publication of this paper.

Acknowledgments

The work was supported by the Special Funds Project for Basic Scientific Research in Central Universities (no. 451170306081) and the Research Project on the Realization PATH of High-Quality Development of School Competitive Sports in Jilin Province (no. 2020C088). The authors also thank Yuanshuang Li, an expert in video transmission, for great help with ABR knowledge.

Open Research

Data Availability

No data were used to support this study.