A Double Nonmonotone Quasi-Newton Method for Nonlinear Complementarity Problem Based on Piecewise NCP Functions

Abstract

In this paper, a double nonmonotone quasi-Newton method is proposed for the nonlinear complementarity problem. By using 3-1 piecewise and 4-1 piecewise nonlinear complementarity functions, the nonlinear complementarity problem is reformulated into a smooth equation. By a double nonmonotone line search, a smooth Broyden-like algorithm is proposed, where a single solution of a smooth equation at each iteration is required with the reduction in the scale of the calculation. Under suitable conditions, the global convergence of the algorithm is proved, and numerical results with some practical applications are given to show the efficiency of the algorithm.

1. Introduction

Nonlinear complementarity problems arisen in many practical applications, for example, the KKT systems of mathematical programming problem, the economic equilibrium, the engineering design problem, can be reformulated into the NCP [1–3].

During the past decades, various efficient numerical algorithms are proposed to solve the NCP. One of the most effective methods is to transform the NCP into the semismooth equations (based on nonlinear complementarity function, NCP function) so that the semismooth Newton-type method can be deployed. The most well-known NCP functions are the Fischer–Burmeister function [4] (FB NCP function) and the modified FB NCP function [5]. Sun and Qi [6] proposed several NCP functions, investigated their properties, and provided a numerical comparison between the behavior of different NCP functions. Based on NCP functions, some kinds of algorithm are designed, see, for example, [7–11].

Another well-known class of algorithm is the smoothing algorithm. The main idea of smoothing algorithm is to reformulate the NCP to smooth equations by introducing the smoothing NCP functions. Some smoothing NCP functions and the corresponding algorithms can be found in [12–15].

Besides the NCP functions mentioned above, a 3-1 piecewise NCP function was proposed by Liu et al. [16], using it to solve the inequality-constrained nonlinear optimization. The advantage of the 3-1 piecewise lies in the absence of the smoothing parameter. Motivated by the 3-1 piecewise NCP function, Su and Yang [17, 18] developed smooth-based Newton algorithms with nonmonotone line search for nonlinear complementarity and generalized nonlinear complementarity problems. Different from the previous methods, the authors introduced independent variable quantities to simplify the algorithm, reducing the amount of calculation without using the smoothing parameter.

Smoothing procedure allows one to use successful quasi-Newton approaches, and there are many quasi Newton methods available for the nonlinear complementarity problems based on some smoothing functions [19–26].

In this paper, we will construct a 3-1 piecewise and 4-1 piecewise NCP functions and develop a double nonmonotone quasi Newton method to solve the nonlinear complementarity problems. Based on the piecewise NCP functions, the nonlinear complementarity problem is transformed into the smooth equation. Moreover, we only solve one smooth equation at each iteration. In order to get the better numerical results, a double nonmonotone line search is used by combining with the Broyden-like algorithm. Consequently, the omission of the parameter μ and the single calculation of the Jacobian matrix at each iteration have led to the simplicity and flexibility of this approach. Furthermore, let t = F(x) as an independent variable, which has no relationship with x, ensures the realization of our algorithm easier. Our algorithm is proved to be well-defined and globally convergent under suitable conditions. At the end of the paper, we give numerical results to prove the effectiveness of the algorithm. This paper is organized as follows: the piecewise linear NCP functions are introduced in Section 1. The double nonmonotone line search with quasi-Newton method is given in Section 2. In Section 3, the convergence properties of the algorithm are presented. We give some numerical results in Section 4, and the conclusion is drawn in Section 5.

2. Algorithm Analysis

The identity matrix of n × n, diagonal matrix whose ith diagonal element is , and the diagonal matrix whose ith diagonal element is are represented by I, , and , respectively.

We use the nonmonotone line search to present Broyden-like method. The search directions d and λ are obtained by calculating a system of smooth equation, and the algorithm is described in detail in Algorithm1.

-

Algorithm 1:

-

Step 0: initialization.

-

Given initial point (x0, t0) ∈ R2n, μ ∈ (0,1), ξ > 0, , B0 = V(x0, t0), k = 0.

-

Step 1: if Ψ(xk, tk) = 0, then stop. Otherwise, calculate the search direction

-

. (a)

-

By (a), we can obtain dk and λk.

-

Step 2: modified linear search technique.

-

Step 2.1 If

-

Ψ(xk + dk, tk + λk) ≤ ξΨ(xk, tk), (b)

-

‖Ψ(xk + dk, tk + λk)‖ ≤ ξmax0≤r≤m(k)−1‖Ψ(xk−r, tk−r)‖, (c)

-

‖tk + λk − F(xk + dk)‖ ≤ ξmax0≤r≤m(k)−1‖tk−r − F(xk−r)‖, (d)

-

where m(0) = 0, 0 ≤ m(k) ≤ min{m(k − 1) + 1, M} is a positive constant. Then, let

-

xk+1 = xk + dk, tk+1 = tk + λk, (e)

-

and go to Step 3; otherwise, go to Step 2.2.

-

Step 2.2: for j = 0,1, …, check the following inequality with μj successively

-

‖Φ(xk + μjdk, tk + μjλk)‖ ≤ ξmax0≤r≤m(k)−1‖Φ(xk−r, xk−r)‖, (f)

-

‖sk + μjλk − F(xk + μjdk)‖ ≤ ξmax0≤r≤m(k)−1‖tk−r − F(xk−r)‖, (g)

-

Let jk be the smallest nonnegative integer j such that (f) and (g) hold for μj. Set , and

-

xk+1 = xk + ρkdk, tk+1 = tk + ρkλk, (h)

-

and go to Step 3.

-

Step 3: Update Bk to get Bk+1,

-

, (i)

-

where

-

. (j)

-

Select ξk to satisfy and matrix Bk+1 is nonsingular.

-

Step 4: Let k = k + 1, go to Step 1.

3. Convergence Analysis

In this section, the global convergence properties of a Broyden-like algorithm with 3–1 piecewise NCP function are discussed. We give some assumptions to prove the convergence of the algorithm.

Assumption 1.

- (a)

Suppose F : Rn⟶Rnis P0-function and it is continuously differentiable.

- (b)

On the level set of

Remark 1. (see [27]). F(x) is P0-function, then F ′(x) is positive semidefinite.

Lemma 1. If H(x0, t0) ≠ 0, then B0 = V0 is nonsingular.

Proof. Assume H(x0, t0) ≠ 0. If for some (u, v) ∈ R2n, where and , then

By the definitions of and , for all i, and . Therefore, diag(β0) is nonsingular. Then

Substitute v in (12) by (14), and multiply by uT, we have

According to the definition of P0-function, all the principal minor determinants of F′(x) is nonnegative; hence, F′(x) is positive semidefinite. And matrix is positive definite. Therefore u = 0. Together with (14), it holds that v = 0, which implies B0 is nonsingular.

Lemma 2. Assume that Assumption 1 holds. Then Φ(xk, tk)⟶0, as k⟶∞.

Proof. For convenience, we define ‖Φl(k)‖ = max0≤r≤m(k)−1‖Φk−r‖, where k − m(k) + 1 ≤ l(k) ≤ k. When m(k + 1) ≤ m(k) + 1, we have

Which means ‖Φl(k)‖ is decreasing monotonely, and hence, we have {‖Φl(k)‖} convergent. Based on (c) of Algorithm1, we have ‖Φl(k)‖ ≤ ‖Φl(l(k) − 1)‖.

By ξ ∈ (0,1), {‖Φl(k)‖}⟶0(k⟶∞) holds, so according to ‖Φk+1‖ ≤ ξ‖Φl(k)‖⟶0, the conclusion holds.

Lemma 3. Assume Assumption 1 holds. Then tk − F(xk)⟶0 as k⟶∞.

Proof. Define ‖tl(k) − F(xl(k))‖ = max0≤r≤m(k)−1‖tk−r − F(xk−r)‖, where k − M ≤ l(k) ≤ k. For m(k + 1) ≤ m(k) + 1, we have

From (17), ‖tl(k) − F(xl(k))‖ is decreasing in a monotone way; then {‖tl(k) − F(xl(k))‖}is convergent.

According to (g) of Algorithm 1, ‖tl(k) − F(xl(k))‖ ≤ ξ‖tl(l(k) − 10) − F(xl(l(k) − 1))‖. By ξ ∈ (0,1), {‖tl(k) − F(xl(k))‖}⟶0(k⟶∞) holds. That means ‖tk+1 − F(xk+1)‖ ≤ ξ‖tl(k) − F(xl(k))‖⟶0 holds by Algorithm1, so the conclusion is as follows.

Lemma 4. Assume Assumption 1 holds. Then dk⟶0, λk⟶0, and Hk⟶0, as k⟶∞.

Proof. We have Φ(xk, tk)⟶0, [tk − F(xk)]⟶0, as k⟶∞ by Lemma 2 and Lemma 3.

So, H(xk, tk)⟶0, as k⟶∞:

We know that Bk is nonsingular by Algorithm1. So, dk⟶0, and λk⟶0, as k⟶∞.

Theorem 1. Under the same condition in Lemma 4, equation (a) of Algorithm1 has solutions, and the definition of Algorithm1 is well.

Proof. On the one hand, we know B0 is nonsingular by Lemma 1. And Bk produced by the Broyden-like iteration is nonsingular. Hence equation (a) of Algorithm1 has one and only one solution. On the other hand, we know Φ(xk, tk)⟶0 and [tk − F(xk)]⟶0 as k⟶∞ by Lemma 2 and Lemma 3. So ‖H(xk, tk)‖⟶0 as k⟶∞.

Lemma 5. Assume Assumption 1 holds, and let {(xk, tk)} be generated sequence by Algorithm1; then {(xk, tk)} ⊂ L(x0, t0).

Proof. By induction, for k = 0, we have (x0, t0) ∈ L(x0, t0).

Assume (xk, tk) ∈ L(x0, t0); then we have Ψ(xk, tk) ≤ Ψ(x0, t0). By (c) and (d) of Algorithm1, we get

So, (xk+1, tk+1) ∈ L(x0, t0). Based on the similar analysis, it is easy to see {(xk, tk)} ⊂ L(x0, t0) for all k.

Theorem 2. Assume Assumption 1 holds, and {(xk, tk)} is generated by Algorithm1; then there exists an accumulation point (x∗, t∗) of the sequence {(xk, tk)} which is solution of NCP(1).

Proof. From Lemma 3 and Lemma 4, we know {(xk, tk)} ⊂ L(x0, t0). By Assumption 1(b), we see that L(x0, t0) is bounded. So, {(xk, tk)} has an accumulation point. Suppose there exists a subsequence which has an accumulation point (x∗, t∗). We should prove H(x∗, t∗) = 0.

Suppose be an infinite sequence generated by Algorithm1. By construction of the algorithm, we know there are two types of successive iteration. Let K1 = {k|xk+1 = xk + dk, tk+1 = tk + λk} and K2 = {k|xk+1 = xk + ρkdk, tk+1 = tk + ρkλk}. We need to prove the conclusion by the following two cases:

-

Case I: K1 is an infinite index set. Let the sequence be , which satisfy (b) of Algorithm1. Therefore,

-

This suggests that liminfk⟶∞H(xk, tk) = 0.

-

Case II: K2 is an infinite index set. Let the sequence be , which satisfy (f) and (g) of Algorithm 1.

It is known that ‖Φl(k)‖ is monotone decreasing and limk⟶∞‖Φk‖ = 0 by Lemma 2 and ‖tl(k) − F(xl(k))‖ is monotone decreasing and limk⟶∞‖tk − F(xk)‖ = 0 by Lemma 3. Therefore, limk⟶∞‖H(xk, tk)‖ = limk⟶∞‖Φk‖ + limk⟶∞‖tk − F(xk)‖ = 0 as k ∈ K2.

Therefore, the conclusion is followed.

4. Numerical Results

In this section, some numerical results are given. We used a personal computer with 4.0 GB memory and Intel(R) Core(TM)i5-5200U CPU @2.20 GHz to perform all experiments. We used Windows 10 as the operating system and Matlab R2018b to write the computer codes. In the whole experiment, the parameters used in Algorithm1 were ξ = 0.9, μ = 0.8, ξk ≡ 1, M is an integer which is randomly selected from 2 to 5. ‖H(x, t)‖ < 10−6 was the stop criterion.

The number of iterations, the CPU time in seconds, and the value of x(T)F(x) at the final iteration are are listed in Table 1. x0 in Table 1 means the Initial point where ones (i, 1) means the i dimension of this problem.

4.1. Some Test Problems

Examples 1–8 (NCP) are considered.

Example 1. Consider (1), where x ∈ Rnand F(x) = Mx + q with

We use x0 = (1,1, …,1)Tand as the starting points to text this problem.

Example 2. Consider (1), where x ∈ R3, and F(x) : R3⟶R3 given by

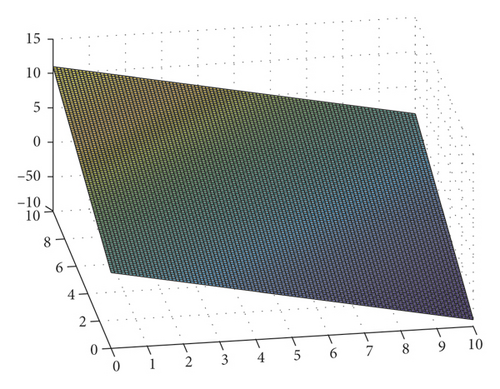

Figure 1 is the 3D diagram of Example 2. (0, λ, 0) is an infinite solution of this problem, where λ ∈ [0,1]. The initial points x0, t0 are randomly generated, and these elements are in the interval (0,10).

Example 3. Consider (1), where x ∈ R7, and F(x) : R7⟶R7 given by

Example 4. Consider (1), where x ∈ R4 and F(x) : R4⟶R4 given by

Example 5. (Kojima–Shindo Problem). Consider (1), where x ∈ R4and F(x) : R4⟶R4 given by

is a degenerate solution, and (1,0,3,0) is a nondegenerate solution.

Example 6. (Modified Mathiesen Problem). Consider (1), where x ∈ R4 and F(x) : R4⟶R4 given by

Example 7. The function f(x) is endowed with the component as follows:

Example 8. Consider (1), where x ∈ Rn and F(x) = Mx + q with

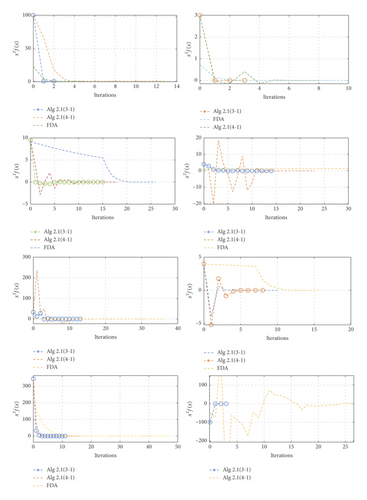

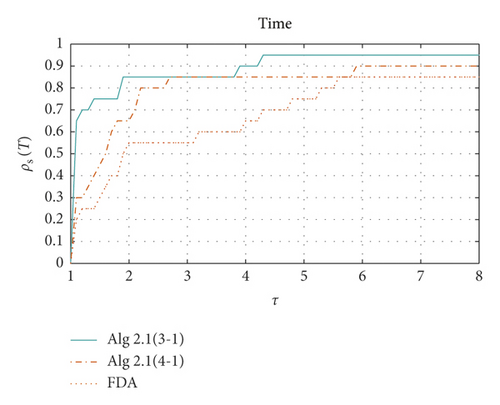

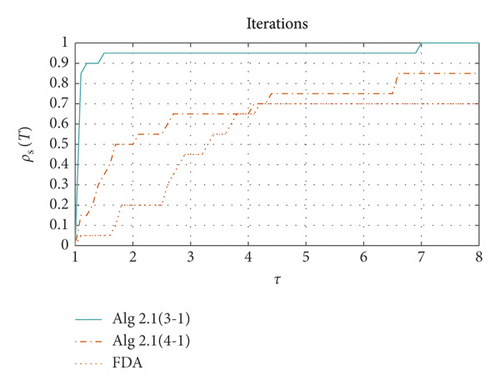

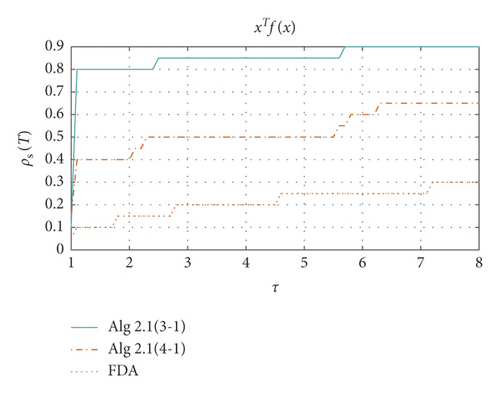

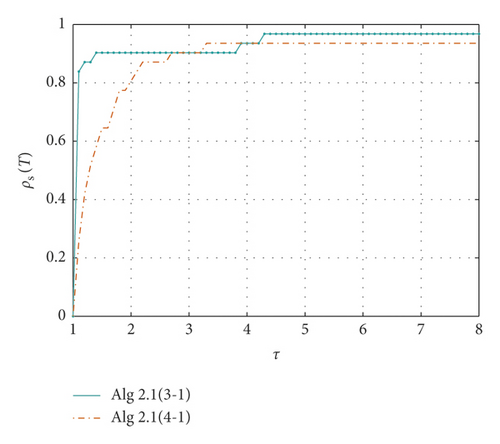

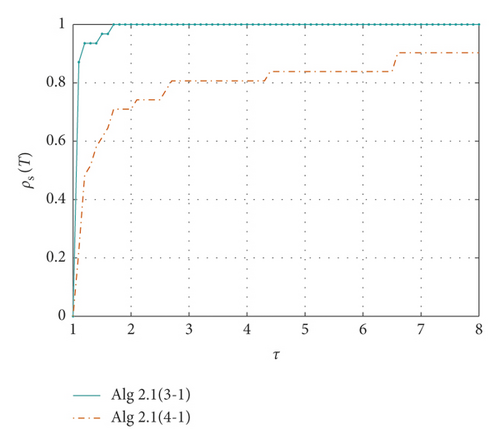

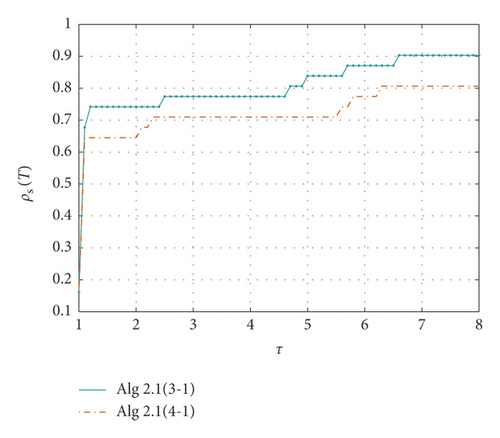

Table 1 shows the results of Examples 1–8 using 3-1 piecewise, 4-1 piecewise Algorithm1 and feasible direction method, respectively. It can be seen from the table that Algorithm1 applying 3-1 piecewise has a good solution to all the above problems. Algorithm1 applying 4-1 piecewise is slightly insufficient, and the feasible direction method has some difficulties in solving examples above, and some of the examples cannot be solved. Figure 2 shows how the xTf(x) value of the three algorithms decreases as the number of iterations increases in each specific example. We use performance profiles [28]—distribution functions for a performance metric—as a tool for comparing different algorithms. We consider the comprehensive performance of the above three algorithms in terms of CPU time, number of iterations, and xTf(x) value. If the curve is closer to 1, the better the ability to solve the problem (Figure 3).

| Problem | x0 | Algorithm 1 with 3–1 piecewise | Algorithm 1 with 4–1 piecewise | Feasible directions algorithm | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Iter | CPU time | xTf(x) | Iter | CPU time | xTf(x) | Iter | CPU time | xTf(x) | ||

| 4.1 | ones(100,1) | 2 | 0.000814 | 5.49E – 09 | 13 | 0.001768 | 1.12E – 08 | 5 | 0.004301 | 6.58E – 07 |

| 2 | 0.473808 | 5.69E – 08 | 13 | 1.520458 | 1.21E – 08 | 5 | 0.257754 | −8.81E – 14 | ||

| ones(4069,1) | 3 | 15.640096 | −1.58E – 11 | 13 | 18.551757 | 1.16E – 08 | 5 | 8.556959 | 7.20E – 14 | |

| ones(8138,1) | 2 | 51.204458 | 4.51E – 10 | 13 | 70.145272 | 1.44E – 08 | 5 | 53.324527 | −1.27E – 12 | |

| (1,1,1)T | 3 | 0.000235 | −1.02E – 07 | 8 | 0.000617 | -5.41E – 08 | 10 | 0.000354 | 4.66E – 07 | |

| 4.2 | (10,10,10)T | 4 | 0.000288 | 5.25E – 08 | 10 | 0.000604 | 3.26E – 07 | 13 | 0.000529 | 3.76E – 07 |

| (1,5,9)T | 7 | 0.000462 | −2.23E – 08 | 5 | 0.000742 | 4.64E – 07 | 19 | 0.001465 | 3.50E – 07 | |

| ones(7,1) | 15 | 0.001014 | −2.23E – 08 | 22 | 0.001768 | 5.53E – 07 | 26 | 0.004301 | 6.58E – 07 | |

| 4.3 | 10∗ones(7,1) | 15 | 0.001661 | −4.62E – 09 | 24 | 0.002366 | 1.05E – 08 | NaN | NaN | NaN |

| 10∗rand(7,1) | 16 | 0.001608 | −2.14E – 09 | 20 | 0.001204 | 1.20E – 08 | NaN | NaN | NaN | |

| 4.4 | (1,1,1,1)T | 14 | 0.007181 | −3.11E – 08 | 23 | 0.001696 | -6.07E – 07 | >500∗ | Inf | NaN |

| (10,10,10,10)T | 145 | 0.019268 | 1.80E – 08 | 86 | 0.004996 | -2.26E – 07 | 21 | 0.007137 | 6.12E – 07 | |

| 4.5 | (1,1,1,1)T | 13 | 0.00103 | −5.62E – 09 | 26 | 0.001658 | 4.93E – 09 | 36 | 0.001121 | 6.43E – 07 |

| (1,2,3,4)T | 23 | 0.002497 | −5.65E – 07 | 30 | 0.003223 | -2.75E – 08 | 65 | 0.009764 | 9.61E – 07 | |

| 4.6 | (1,1,1,1)T | 10 | 0.001351 | 1.52E – 08 | 9 | 0.001473 | 6.19E – 09 | 16 | 0.00746 | 3.67E – 07 |

| 4.7 | ones(100,1) | 11 | 0.039089 | −9.29E – 11 | 17 | 0.001768 | 8.98E – 08 | 46 | 0.024301 | 8.81E – 07 |

| ones(1024,1) | 20 | 1.230144 | 2.26E – 10 | 20 | 1.745354 | -2.68E – 11 | 52 | 1.157443 | 7.57E – 07 | |

| ones(4069,1) | 15 | 25.532848 | −2.61E – 12 | 20 | 35.615797 | 3.61E – 10 | 55 | 50.806954 | 8.14E – 07 | |

| ones(8138,1) | 15 | 305.640096 | −4.09E – 14 | 20 | 370.126146 | 4.62E – 09 | 57 | 415.720027 | 7.56E – 07 | |

| ones(100,1) | 4 | 0.002064 | 7.41E – 07 | 57 | 0.107685 | 1.32E – 07 | NaN | NaN | NaN | |

| 4.8 | ones(1024,1) | 6 | 0.641349 | 5.40E – 07 | 407 | 22.002366 | 3.10E – 06 | NaN | NaN | NaN |

| ones(4069,1) | 8 | 26.727794 | 1.87E – 06 | >500∗ | Inf | NaN | NaN | NaN | NaN | |

| ones(8138,1) | 10 | 215.640096 | 5.19E – 06 | >500∗ | Inf | NaN | NaN | NaN | NaN | |

4.2. Nash Equilibrium Problem

General economic equilibrium [29] means that total supply and total demand are exactly equal in a price system. With the existing productivity and technical conditions, producers get the most profit, while consumers get the most utility when they meet the budget constraints. The theory of general economic equilibrium was first put forward by the French economist Walras. Walras believes that when the whole economy is in equilibrium, the prices of all consumer goods and factors of production will have a certain equilibrium value, and their output and supply will have a certain equilibrium quantity. It is assumed that the whole economic system is a large and complete trading market, and the equilibrium price system means that all commodities are traded in this market, and finally all commodities can be traded.

Considering the competitive economic model of production and investment, suppose H is a price system, in which there are N kinds of commodities, we use RN to express commodity space. For producer i, the set of production is Yi⊆RN. For consumer j, the set of consumption is Zj⊆RN. The number of producers and consumers in the system are l and k, respectively. The total production, total consumption, and initial commodity reserve are represented by Yi, Zj, and λj, respectively, and the proportion of consumer j in the profit of producer i is represented by ϕji. Specially, i = 1, …, l; j = 1, …, k; and Zj, Yi, λj ∈ RN.

To describe the model better, we assume the following definitions. In particular, Zj, Yi, and λj are independent of x.

Definition 1. Let zj ∈ Zj, yi ∈ Yi, x is the equilibrium price:

- (1)

For every i, the maximum profit function is x · yi.

- (2)

For every j, preference maximum element is .

- (3)

Economic equilibrium is defined as .

It can be seen from Definition 1 that when price system H reaches economic equilibrium, the demands of both producers and consumers are satisfied and then all the commodities of price system H are sold, that is, the commodities are cleared. We define the conditions for clearing the goods as

Equation (29) is not only the equilibrium state of free allocation, but also the model of linear complementarity problem. If Zj, Yi, and λj are related to x, (29) will become a nonlinear complementarity problem (NCP).

Let the inverse demand function for the market be defined by

Example 9. Data is given in Table 2.

| Firm i | ci | Li | βi |

|---|---|---|---|

| 1 | 10 | 5 | 1.2 |

| 2 | 8 | 5 | 1.1 |

| 3 | 6 | 5 | 1 |

| 4 | 4 | 5 | 0.9 |

| 5 | 2 | 5 | 0.8 |

Example 10. Data is given in Table 3.

| Firm i | ci | Li | βi |

|---|---|---|---|

| 1 | 5 | 10 | 1.20 |

| 2 | 3 | 10 | 1.00 |

| 3 | 8 | 10 | 0.90 |

| 4 | 5 | 10 | 0.60 |

| 5 | 1 | 10 | 1.50 |

| 6 | 3 | 10 | 1.00 |

| 7 | 7 | 10 | 0.70 |

| 8 | 4 | 10 | 1.10 |

| 9 | 6 | 10 | 0.95 |

| 10 | 3 | 10 | 0.75 |

4.3. Two-Dimensional Contact Problem

Under the conditions of nonpenetration and negligible attraction between objects, the elastic contact problem mainly requires the contact surface and the pressure of the contact surface when two objects are pressed together. The wheel-rail problem is a typical elastic contact problem.

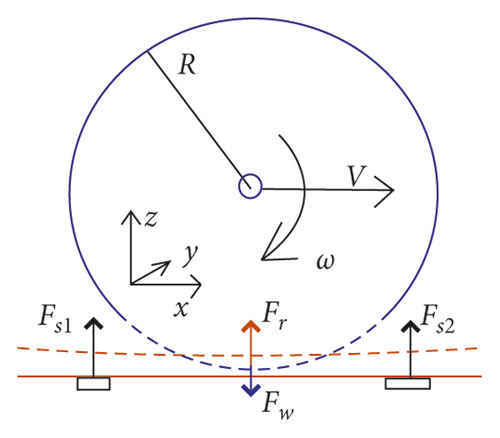

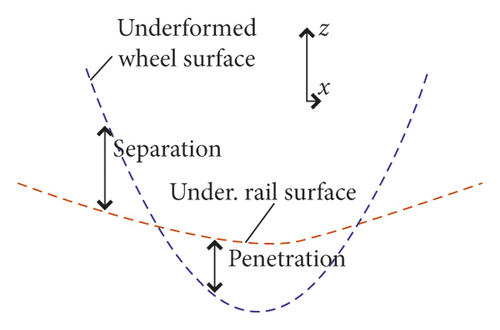

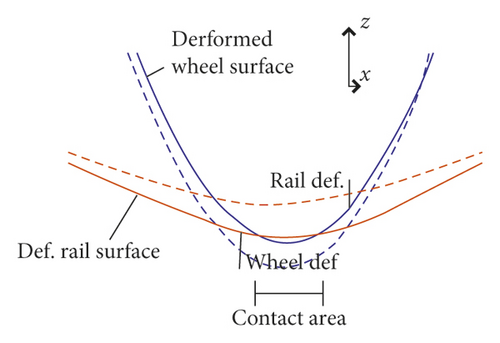

Figure 4 [31] shows the geometric structure application of the wheel-rail contact phenomenon, where Figure 4(a) represents the overall geometric structure showing the forward speed V and angular velocity ω of the track when the wheel is rolling. The track is deformed by the wheel pressure Fw and the sleeper pressure Fs1 and Fs2. At the same time, the wheel deforms due to the wheel-rail pressure Fr, and Figures 4(b) and 4(c) represent the undeformed and deformed states, respectively.

Example 11. The diagonal element Tk of the coefficient matrix T is

Example 12. The diagonal element Tk of the coefficient matrix T is

Example 13. The diagonal element Tk of the coefficient matrix T is

Table 4 shows the performance of Algorithm1 using different piecewise methods for practical application problems. From Figures 5 to 7, it can be seen that Algorithm1 using 3-1 piecewise has a stronger ability to solve all the above problems than Algorithm1 applying 4-1 piecewise.

| Problem | x0 | Algorithm 1 with 3–1 piecewise | Algorithm 1 with 4–1 piecewise | ||||

|---|---|---|---|---|---|---|---|

| Iter | CPU time | XTF(X) | Iter | CPU time | XTF(X) | ||

| 4.9 | 20∗ones(5,1) | 20 | 0.005197 | −1.87E − 06 | 23 | 0.00523 | −1.49E − 06 |

| 30∗ones(5,1) | 18 | 0.010009 | −2.28E − 06 | 21 | 0.019381 | −3.98E − 06 | |

| 4.10 | 20∗ones(5,1) | 45 | 0.033759 | 3.61E − 06 | 43 | 0.050365 | 1.03E − 06 |

| 30∗ones(5,1) | 55 | 0.05047 | 7.62E − 07 | 49 | 0.055852 | −1.80E − 06 | |

| 4.11 | ones(100,1) | 13 | 0.052309 | 1.87E − 08 | 15 | 0.089898 | 1.65E − 08 |

| ones(1024,1) | 14 | 2.080973 | −3.66E − 09 | 16 | 2.616348 | 1.94E − 09 | |

| ones(4069,1) | 14 | 60.367315 | 1.05E − 09 | 16 | 68.659795 | 8.54E − 09 | |

| ones(8138,1) | 14 | 574.245627 | 3.05E − 09 | 20 | 682.960276 | −5.23E − 09 | |

| ones(100,1) | 14 | 0.058579 | 1.96E − 09 | 14 | 0.072436 | −5.59E − 09 | |

| 4.12 | ones(1024,1) | 14 | 2.376266 | −1.89E − 08 | 14 | 2.348159 | −4.86E − 09 |

| ones(4069,1) | 12 | 53.195688 | −7.32E − 09 | 14 | 61.321038 | 2.96E − 12 | |

| ones(8138,1) | 17 | 519.846528 | −2.89E − 08 | 14 | 582.933657 | 3.25E − 11 | |

| ones(100,1) | 17 | 0.060449 | 1.99E − 08 | 19 | 0.0813907 | 4.00E − 09 | |

| ones(1024,1) | 17 | 2.860266 | 5.62E − 08 | 19 | 3.145843 | 8.61E − 09 | |

| 4.13 | ones(4069,1) | 17 | 74.532155 | 7.27E − 08 | 19 | 82.960276 | −2.45E − 09 |

| ones(8138,1) | 21 | 674.226819 | 2.02E − 08 | 23 | 782.155632 | 5.12E − 09 | |

5. Conclusion

In this paper, by using 3-1 and 4-1 piecewise nonlinear complementarity problem functions, we reformulate the nonlinear complementarity problem into smooth equations. By using a new nonmonotone line search, a modified smooth Broyden-like algorithm is proposed and the global convergence of the proposed algorithm is obtained, and the numerical tests for some practical problems show the efficiency of the algorithm. How to get the local convergence under certain conditions is worth studying in the future.

Conflicts of Interest

The authors declare that there are no conflicts of interests regarding the publication of this paper.

Open Research

Data Availability

The data used to support the findings of this study are included within the article.