QoS Prediction for Neighbor Selection via Deep Transfer Collaborative Filtering in Video Streaming P2P Networks

Abstract

To expand server capacity and reduce bandwidth consumption, P2P technologies have been widely used in video streaming systems in recent years. Each client in a P2P streaming network should select a group of neighbors by evaluating the QoS of the other nodes. Unfortunately, video streaming P2P networks are usually very large, and evaluating the QoS of all the other nodes is resource-consuming. An attractive alternative is to predict the QoS of a node from the past usage experiences of a small number of other clients who have evaluated this node. Therefore, collaborative filtering (CF) methods can be used for QoS evaluation to select neighbors. However, different video streaming policies might use different QoS properties. If a new video streaming policy needs to evaluate a new QoS property but the historical experiences include very few evaluation data for this property, CF methods suffer from severe overfitting, and clients might then get unsatisfactory recommendation results. In this paper, we propose a novel neural collaborative filtering method based on transfer learning, which can evaluate a QoS property with few historical data by exploiting other QoS properties with rich historical data. We conduct our experiments on a large real-world dataset whose QoS values were obtained from 339 clients evaluating 5825 other clients. Comprehensive experimental studies show that our approach achieves higher prediction accuracy than traditional collaborative filtering approaches.

1. Introduction

In recent years, video content has accounted for a large proportion of global Internet traffic, and video streaming is gradually becoming the most attractive Internet service [1–3]. However, the Internet does not provide any quality of service guarantees for video content delivery. To expand server capacity and reduce video streaming bandwidth, P2P technologies are widely adopted by many content delivery systems [4–7]. In a P2P network, a peer not only downloads media data from the network but also uploads the downloaded data to other clients in the same network. To provide a better video-watching experience, each client (or node) in the P2P network should select some other nodes as its neighbors according to the quality of service (QoS) they offer to this client [8–10]. For example, a client might prefer to select nodes with high bandwidth. Because of differences in location and network conditions, different clients might experience different QoS from the same node. To obtain the neighbors with the best QoS, one might want each client to evaluate the QoS of all the other nodes. Unfortunately, a video streaming P2P network usually includes an extremely large number of users, and evaluating the QoS of all the nodes is time-consuming and resource-consuming.

An attractive alternative is to predict the QoS value of a node from the past usage experiences of a small number of other clients who have evaluated this node. This is the idea behind collaborative filtering (CF), a technique that has been extensively studied in recommender systems [11–13]. With a CF method, each client only needs to know a small number of real QoS values of other nodes to select neighbors. The core idea is that if two clients have similar values of a specific QoS property for some known nodes, they are likely to have similar values of this property for the other, unknown nodes.

However, the neighbor selection policy might need to be changed to improve the quality of video content delivery. If the new policy uses a new QoS property to select neighbors, but the historical user experiences include very little data on this property, CF methods suffer from severe overfitting, and each client might then get a worse neighbor recommendation list. Transfer learning aims to adapt a model trained in a source domain with rich labeled data for use in a target domain with less labeled data, where the source and target domains are usually related but follow different distributions [14–16]. Recently, deep neural networks have achieved remarkable success in many applications, especially computer vision, speech recognition, and natural language processing, and they are powerful at learning general and transferable features. There are two major transfer learning scenarios: fine-tuning the pretrained network, or treating the pretrained network as a fixed feature extractor. That is, instead of random initialization, we can initialize the network with a pretrained network, or we can freeze the weights of some layers of the network [17–19].

Unlike in many supervised transfer learning tasks, we cannot simply fine-tune or freeze the weights of the network. The only information about the nodes in a video streaming P2P network is their identifiers (IDs) and their historical QoS evaluations. There are no raw features for the nodes, so we need to learn abstract features for them using embeddings. Freezing these learned embedding features seems unreasonable. Furthermore, different QoS properties have different value ranges, so fine-tuning would make the final weights differ greatly from the initial weights pretrained in the source domain. Because the labeled data in the target domain are sparse, fine-tuning too much would cause severe overfitting. The main contributions of this paper are as follows:

- (i)

We propose a novel neural collaborative filtering model for QoS prediction using transfer learning technology.

- (ii)

We provide a novel interaction layer to represent the relationship between latent embedding factors of the nodes.

- (iii)

We adopt partial fine-tuning and a maximum mean discrepancy (MMD) domain loss to train the target domain model, thereby achieving domain adaptation.

The remainder of this paper is organized as follows: We introduce the related work in Section 2. Section 3 presents the design details of our method. Section 4 describes our experiments and Section 5 concludes this paper.

2. Related Work

The delivery of distributed user-generated videos poses a new challenge to large-scale streaming systems. To stream live videos generated by users, many existing video streaming systems rely on a centralized network architecture [23–25]. Even when these streaming systems use a Content Delivery Network (CDN) for video delivery, such a solution is not cost-effective [26–28]. The unit price of content delivery over the Internet has decreased dramatically in recent years. However, requirements on resolution, frame rate, and bitrate are higher than before. Consequently, the amount of bandwidth consumed per user has grown at a faster rate. To reduce bandwidth and costs and to improve the user experience, P2P architectures can be adopted instead.

Collaborative filtering is a reasonable QoS prediction technique for selecting neighbors for each client in a P2P video streaming network [29–31]. To select the neighbors with the best delivery quality for each client, CF predicts the QoS values between clients and then selects the top k neighbors in terms of the predicted QoS values. Each client knows only part of the QoS values of the nodes in the network. Memory-based CF methods are generalized k-nearest-neighbor (KNN) algorithms [32, 33] and come in two types: user-based and item-based. Model-based CF methods, which are more popular, act like generalized regression or classification algorithms, but they operate on abstract learned features rather than concrete or raw features. Among model-based CF methods, matrix factorization has become the most popular technique for such problems [34–40]. The Probabilistic Matrix Factorization (PMF) model assumes that the QoS values follow a Gaussian distribution and that the latent factors are drawn from zero-mean Gaussian distributions [41]. Nonnegative matrix factorization (NMF) learns nonnegative latent factors for users or items, but it usually deals with implicit feedback [42–44].
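As an illustration of the matrix factorization family discussed above, the following Python sketch fits a low-rank model, in which the QoS value of node j observed by client i is approximated by the inner product of latent vectors U[i] and V[j], to observed (client, node, QoS) triples using stochastic gradient descent with L2 regularization. It is a generic MF sketch, not the exact configuration of the cited works; the function names, learning rate, regularization weight, and initialization scale are illustrative assumptions.

```python
import random


def train_mf(observed, n_users, n_items, dim=10, lr=0.05, reg=0.001,
             epochs=500, seed=0):
    """Fit QoS ~= U[i] . V[j] on observed (i, j, q) triples using SGD.

    Hyperparameter defaults are illustrative assumptions, not tuned values.
    """
    rng = random.Random(seed)
    # Small random latent factors for every client (user) and node (item).
    U = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_users)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_items)]
    data = list(observed)
    for _ in range(epochs):
        rng.shuffle(data)
        for i, j, q in data:
            err = q - predict(U, V, i, j)
            for f in range(dim):
                ui, vj = U[i][f], V[j][f]
                # Gradient step on the squared error plus an L2 penalty.
                U[i][f] += lr * (err * vj - reg * ui)
                V[j][f] += lr * (err * ui - reg * vj)
    return U, V


def predict(U, V, i, j):
    """Predicted QoS value of node j as observed by client i."""
    return sum(a * b for a, b in zip(U[i], V[j]))


# Toy rank-1 QoS matrix with two entries left unobserved.
a, b = [0.5, 1.0, 1.5], [1.0, 0.5, 1.2]
obs = [(i, j, a[i] * b[j]) for i in range(3) for j in range(3)
       if (i, j) not in {(0, 2), (2, 0)}]
U, V = train_mf(obs, 3, 3, dim=3)
```

After training, `predict(U, V, 0, 2)` gives an estimate for an entry that was never observed, which is exactly what a client needs to rank candidate neighbors.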

However, even though matrix factorization CF algorithms have achieved remarkable success, they have difficulty dealing with cross-domain learning tasks when the output values of the source and target domains have different ranges. Deep neural networks can easily learn general and transferable features, and more and more cross-domain applications adopt deep learning technologies and achieve remarkable performance [45–47]. However, deep neural networks for recommender systems or QoS prediction have received relatively little attention. Recently, some studies have proposed deep learning-based collaborative filtering models. Two notable examples are Google’s Wide & Deep [48] and Microsoft’s Deep Crossing [49]; the inputs of these models are side information rather than the interactions between users and items. Neural Collaborative Filtering (NCF) models are designed purely for user-item interactions [50]. However, none of these models is designed for cross-domain QoS prediction.

3. DTCF Model

For cross-domain QoS prediction in a video streaming P2P network, we are given a source domain with $n_s$ examples, characterized by a probability distribution $p$, and a target domain with $n_t$ examples, characterized by a probability distribution $q$. Usually the target domain has far fewer examples, that is, $n_s \gg n_t$. Our goal is to build a deep neural network that learns transferable features bridging the discrepancy between these two domains.

3.1. Model Architecture Overview

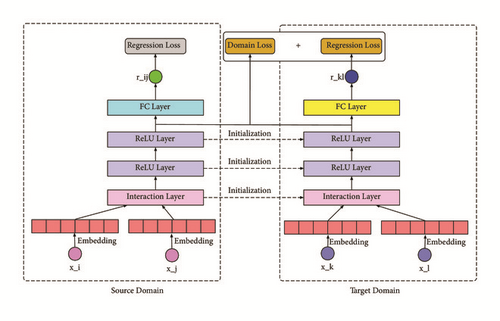

We propose a novel neural architecture, outlined in Figure 1. The source domain and the target domain share the same network architecture. The input of the model is the identifier (ID) numbers of the nodes: for example, if the P2P network contains $n$ nodes, the ID of each node is an integer from 1 to $n$. The output of the model is the QoS value that node $x_i$ observes when evaluating node $x_j$.

Since we do not use any concrete features for the nodes, we need to learn abstract features for them. Here, we use an embedding layer to learn a continuous latent vector (latent factor) $u$ for each node. The design of the embedding layer is described in Section 3.2.

Unfortunately, simply combining two such latent vectors is not expressive enough to fully represent the complex interactions between nodes. In this paper, we propose a novel interaction layer with strong representation capacity to tackle this problem. Its design details are given in Section 3.3.

Finally, we use a fully connected (FC) layer to generate the output. When training the model in the source domain, we use the regression loss. We then construct the target domain model from all the layers of the pretrained model except the last FC layer: the weights of these layers are used as the initial weights of the target domain model, while the final FC layer is initialized randomly. To avoid the overadaptation problem, we train the target domain model with both the domain loss and the regression loss. Section 3.4 describes how we design the domain loss.
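The transfer step described above can be sketched in Python as follows. The sketch assumes, hypothetically, that model parameters are stored in a dictionary keyed by layer name (names such as `embedding` and `fc_out` are illustrative, not the authors'), that the regression loss is mean squared error, and that the domain loss is added with a weight `lam`; the paper does not specify these details here.

```python
import copy
import random


def init_target_from_source(source_params, last_layer="fc_out", seed=0):
    """Build target-domain parameters from a pretrained source model.

    Every layer is copied except `last_layer` (the final FC layer), which is
    re-initialized randomly. The layer names are hypothetical.
    """
    rng = random.Random(seed)
    target = copy.deepcopy(source_params)  # source weights stay untouched
    old = target[last_layer]
    target[last_layer] = {
        "W": [[rng.gauss(0.0, 0.01) for _ in row] for row in old["W"]],
        "b": [0.0] * len(old["b"]),
    }
    return target


def target_loss(y_true, y_pred, mmd_value, lam=1.0):
    """Regression loss (assumed to be MSE) plus a weighted MMD domain loss."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return mse + lam * mmd_value


# Usage with a tiny hypothetical parameter dictionary.
src = {"embedding": {"W": [[0.1, 0.2], [0.3, 0.4]]},
       "fc_out": {"W": [[1.0, 1.0]], "b": [0.5]}}
tgt = init_target_from_source(src)
```

Deep-copying keeps the pretrained source model intact, so the same source weights can be reused for several target-domain runs.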

3.2. Embedding Layer

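Let $e_i \in \{0, 1\}^n$ denote the one-hot encoding of the ID of node $i$, and let $W \in \mathbb{R}^{d \times n}$ be the weight matrix of the embedding layer, where $d$ is the length of each latent vector. The latent vector of node $i$ is then $u_i = W e_i$; therefore, $u_i$ is the $i$th column of matrix $W$. Since the node ID is transformed into a one-hot vector, the matrix multiplication simply selects the latent vector of that node. This weight matrix is trained jointly with the other parameters of the whole network.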
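The equivalence between multiplying $W$ by a one-hot vector and selecting a column of $W$ can be checked with a small Python sketch; deep learning frameworks typically implement embedding layers as a direct lookup rather than a matrix product. The helper names below are illustrative, and node IDs are assumed to be 1-based as in Section 3.1.

```python
def one_hot(node_id, n):
    """One-hot encoding e_i of a 1-based node ID in a network of n nodes."""
    x = [0.0] * n
    x[node_id - 1] = 1.0
    return x


def matvec(W, x):
    """Dense product W x, with W stored as a list of d rows of length n."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def embed(W, node_id):
    """Embedding lookup: return column (node_id - 1) of W, i.e. u_i."""
    return [row[node_id - 1] for row in W]


# d = 2 latent dimensions, n = 3 nodes.
W = [[0.1, 0.2, 0.3],
     [0.4, 0.5, 0.6]]
assert embed(W, 2) == matvec(W, one_hot(2, 3))  # both give [0.2, 0.5]
```

The lookup costs O(d) per node instead of O(dn), which matters when the network contains thousands of nodes.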

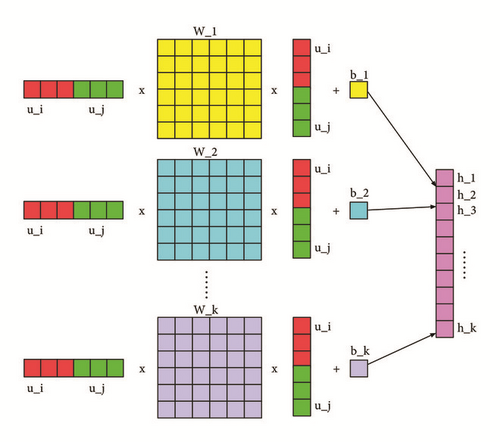

3.3. Interaction Layer

The interaction layer has two inputs, $u_i$ and $u_j$. Assuming that all vectors are column vectors, concatenating the two inputs yields a longer vector. This concatenated vector is transformed into another vector that encodes the interactive information between the two inputs. The transformation process is outlined in Figure 2.

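If the length of $u_i$ is $d$, the concatenated vector is $z = [u_i; u_j] \in \mathbb{R}^{2d}$, and $W_s$ is a $2d \times 2d$ square matrix. Each element $h_c$ of the output vector $h$ is a scalar whose value is determined by the matrix $W_s$ and the bias $b_s$:

$$h_c = z^\top W_s z + b_s.$$

If the length of $h$ is $k$, we need $k$ such weight matrices and biases.

$h_c$ includes all possible interaction relationships between $u_i$ and $u_j$. Expanding the quadratic form, we can see that

$$h_c = \sum_{p=1}^{2d} \sum_{q=1}^{2d} w_{pq}\, z_p z_q + b_s,$$

where $w_{pq}$ is the element in the $p$th row and the $q$th column of the matrix $W_s$.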
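A minimal Python sketch of this interaction layer follows, under the reading that each output element is the quadratic form $z^\top W_s z + b_s$ over $z = [u_i; u_j]$. The random initialization and the helper names are illustrative assumptions, and the sketch omits any activation that may follow the layer in Figure 2.

```python
import random


def init_interaction(d, k, seed=0):
    """k random (2d x 2d) matrices W_s and k zero biases b_s (illustrative)."""
    rng = random.Random(seed)
    Ws_list = [[[rng.gauss(0.0, 0.1) for _ in range(2 * d)]
                for _ in range(2 * d)] for _ in range(k)]
    bs_list = [0.0] * k
    return Ws_list, bs_list


def interaction_layer(u_i, u_j, Ws_list, bs_list):
    """Return h with h_c = z^T W_s z + b_s for each of the k (W_s, b_s) pairs."""
    z = list(u_i) + list(u_j)  # concatenation [u_i; u_j], length 2d
    h = []
    for Ws, bs in zip(Ws_list, bs_list):
        Wz = [sum(w * zq for w, zq in zip(row, z)) for row in Ws]
        h.append(sum(zp * v for zp, v in zip(z, Wz)) + bs)
    return h


# With d = 2, a W_s whose only nonzero entry is at 0-based position [0][2]
# isolates the cross term u_i[0] * u_j[0].
Ws = [[0.0, 0.0, 1.0, 0.0]] + [[0.0] * 4 for _ in range(3)]
print(interaction_layer([2.0, 5.0], [3.0, 7.0], [Ws], [0.0]))  # [6.0]
```

The example shows why the quadratic form captures pairwise interactions: each entry $w_{pq}$ weights one product $z_p z_q$, including products that mix an element of $u_i$ with an element of $u_j$.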

3.4. Domain Loss

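The output of the last ReLU layer of the source domain model is denoted as $h_s$, and the output of the last ReLU layer of the target domain model is denoted as $h_t$. To avoid the overadaptation problem, one possible way is to minimize the difference between the distributions of these two representations, where $h_s \sim p$ and $h_t \sim q$. Given a class of functions $\mathcal{F}$, this difference can be measured by the maximum mean discrepancy (MMD):

$$\mathrm{MMD}[\mathcal{F}, p, q] = \sup_{f \in \mathcal{F}} \left( \mathbb{E}_{h_s \sim p}\left[f(h_s)\right] - \mathbb{E}_{h_t \sim q}\left[f(h_t)\right] \right).$$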

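If the function class is too large, it is impractical to work with it in the finite-sample setting. A reasonable choice of function class is the unit ball in a universal reproducing kernel Hilbert space (RKHS) $\mathcal{H}$. In this case, the MMD becomes the distance between the mean embeddings of the two distributions:

$$\mathrm{MMD}[\mathcal{H}, p, q] = \left\| \mathbb{E}_{h_s \sim p}\left[\phi(h_s)\right] - \mathbb{E}_{h_t \sim q}\left[\phi(h_t)\right] \right\|_{\mathcal{H}},$$

where $\phi$ is the feature map into $\mathcal{H}$. The kernel function is $k(x, y) = \langle \phi(x), \phi(y) \rangle$.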
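In practice, the MMD is estimated from finite samples through the kernel function. The Python sketch below computes the standard biased empirical estimate of $\mathrm{MMD}^2$ between a batch of source representations $h_s$ and target representations $h_t$, using a Gaussian kernel whose bandwidth is set by the median pairwise distance, as in Section 4.2. The exact kernel form $k(x, y) = \exp(-\|x - y\|^2 / (2\delta^2))$ and the choice of the biased estimator are assumptions made for illustration.

```python
import math
import statistics
from itertools import combinations


def sq_dist(x, y):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def gaussian_kernel(x, y, delta):
    """Assumed form: k(x, y) = exp(-||x - y||^2 / (2 * delta^2))."""
    return math.exp(-sq_dist(x, y) / (2.0 * delta ** 2))


def median_bandwidth(samples):
    """Median heuristic: median pairwise Euclidean distance of the samples."""
    return statistics.median([math.sqrt(sq_dist(x, y))
                              for x, y in combinations(samples, 2)])


def mmd2(hs, ht, delta):
    """Biased estimate of MMD^2 = ||mean phi(h_s) - mean phi(h_t)||^2_H."""
    m, n = len(hs), len(ht)
    k_ss = sum(gaussian_kernel(x, y, delta) for x in hs for y in hs)
    k_tt = sum(gaussian_kernel(x, y, delta) for x in ht for y in ht)
    k_st = sum(gaussian_kernel(x, y, delta) for x in hs for y in ht)
    return k_ss / (m * m) + k_tt / (n * n) - 2.0 * k_st / (m * n)


# Source representations and a shifted copy acting as target representations.
hs = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
delta = median_bandwidth(hs)
print(mmd2(hs, [[x + 3.0, y] for x, y in hs], delta) > 0)  # True
```

Expanding the squared RKHS norm gives the three kernel sums above, so the estimate never requires computing $\phi$ explicitly. Each term is differentiable with respect to the representations, which is what allows the MMD to be used as a training loss.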

3.5. Algorithm

- (i)

We first train the source domain model using the regression loss. The gradient of each weight is computed according to formula (13).

- (ii)

After training, we use the weights of this model to initialize the target domain model, except for the last FC layer, which is initialized randomly.

- (iii)

When training the target domain model, we use a loss function that combines the regression loss and the MMD domain loss.

- (iv)

In each training iteration, we randomly sample a mini-batch of examples from the dataset and compute the gradient according to formulas (14) and (15).

- (v)

We use ADAM (Adaptive Moment Estimation) as the optimizer.
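For reference, the standard Adam update can be sketched in Python for a flat list of scalar parameters. The hyperparameters ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$) are the commonly used defaults, not values reported in this paper, and the toy objective is only a usage illustration.

```python
import math


class Adam:
    """Minimal Adam optimizer over a flat list of scalar parameters."""

    def __init__(self, n_params, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentered) estimates
        self.t = 0                 # step counter used for bias correction

    def step(self, params, grads):
        """Return updated parameters given the gradients at `params`."""
        self.t += 1
        out = []
        for idx, (p, g) in enumerate(zip(params, grads)):
            self.m[idx] = self.beta1 * self.m[idx] + (1 - self.beta1) * g
            self.v[idx] = self.beta2 * self.v[idx] + (1 - self.beta2) * g * g
            m_hat = self.m[idx] / (1 - self.beta1 ** self.t)
            v_hat = self.v[idx] / (1 - self.beta2 ** self.t)
            out.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return out


# Usage: minimize f(x) = (x - 3)^2, whose gradient is 2 (x - 3).
opt = Adam(1, lr=0.01)
x = [0.0]
for _ in range(3000):
    x = opt.step(x, [2.0 * (x[0] - 3.0)])
```

Because each step is normalized by the running second moment, the step size is roughly bounded by the learning rate regardless of the gradient scale, which is convenient when the source and target QoS properties have different value ranges.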

4. Experimental Results

4.1. Dataset and Evaluation Metrics

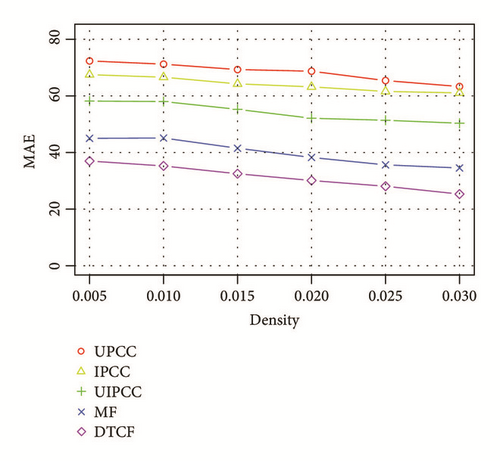

We conduct our experiments on a large, publicly accessible dataset, WS-DREAM dataset#1, which was obtained from 339 hosts performing QoS evaluations on 5825 other hosts. The dataset contains two QoS properties: response time and throughput. Here, we use response time as the source domain and throughput as the target domain.

For the source domain, we randomly extract 30% of the data (a density of 30%) as the source training set. For the target domain, we construct six training sets with densities of 0.5%, 1%, 1.5%, 2%, 2.5%, and 3%. The remaining data are used as the test set.
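The density-based split can be sketched as follows: a given fraction of the (client, node, QoS) entries is sampled uniformly at random for training, and the rest is held out for testing. The sketch also includes the mean absolute error (MAE) reported in Section 4.2. The function names and the uniform sampling scheme are illustrative assumptions rather than the authors' released code.

```python
import random


def split_by_density(entries, density, seed=0):
    """Randomly keep `density` (e.g. 0.005 for 0.5%) of entries for training.

    Returns (train, test); every entry lands in exactly one of the two.
    """
    rng = random.Random(seed)
    shuffled = list(entries)
    rng.shuffle(shuffled)
    n_train = round(density * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


def mae(y_true, y_pred):
    """Mean absolute error between true and predicted QoS values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy 20 x 50 QoS matrix flattened into (client, node, value) triples.
entries = [(i, j, float(i + j)) for i in range(20) for j in range(50)]
for density in (0.005, 0.01, 0.015, 0.02, 0.025, 0.03):
    train, test = split_by_density(entries, density)
    print(f"density={density:.1%}: {len(train)} train / {len(test)} test")
```

Fixing the seed makes each split reproducible; repeating the experiment with different seeds and averaging the MAE corresponds to the repeated runs described in Section 4.2.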

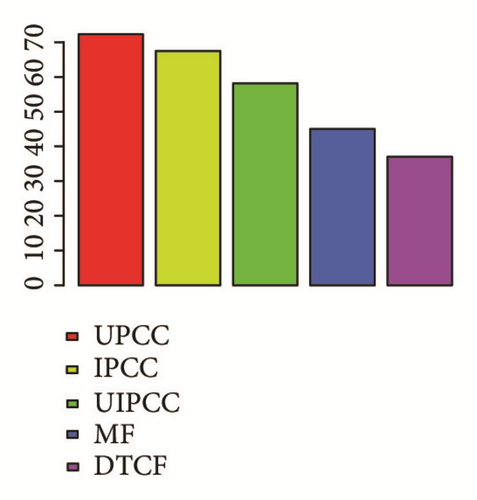

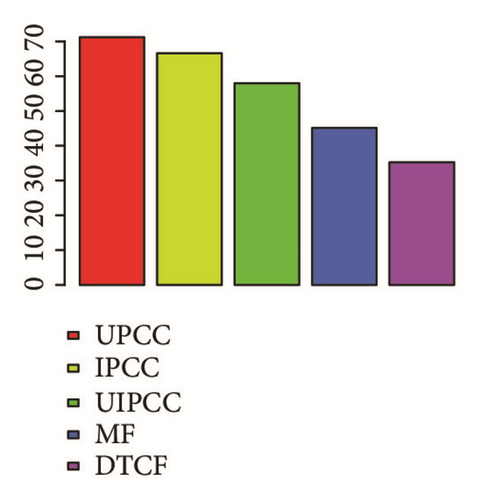

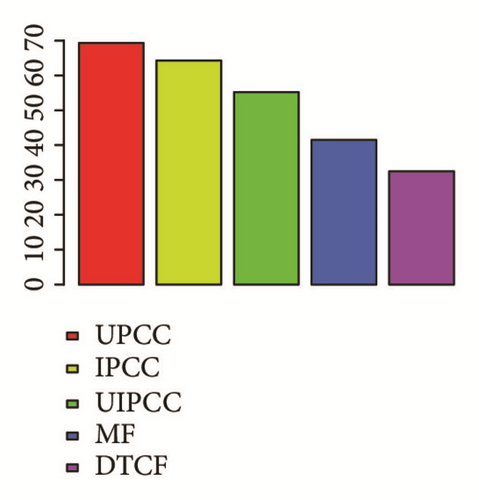

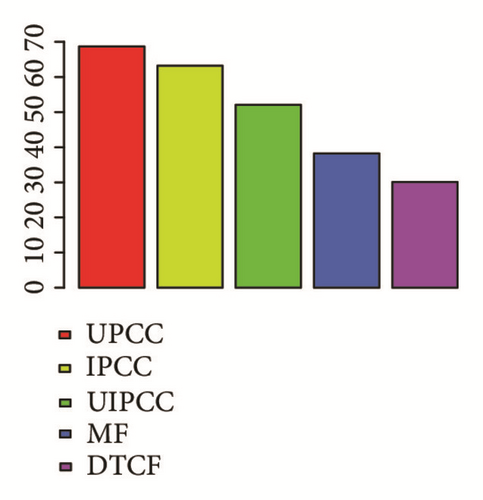

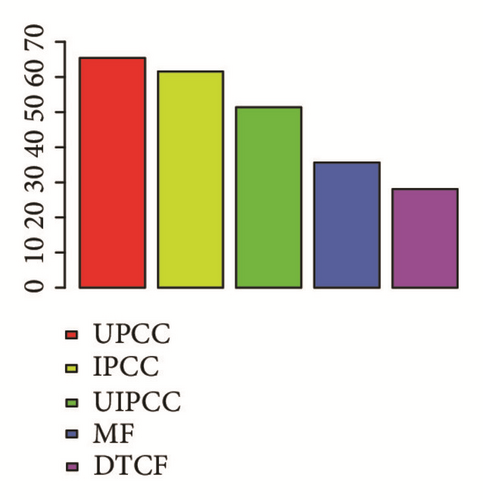

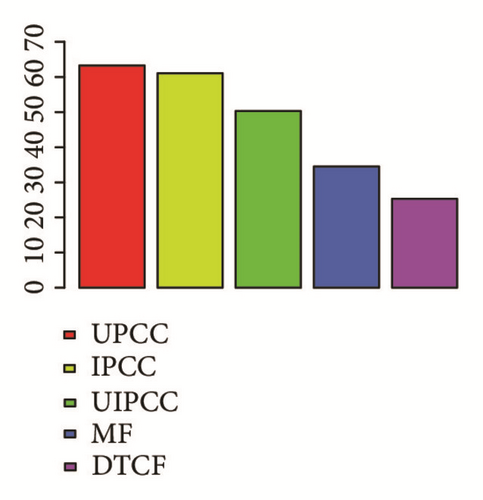

4.2. Performance Comparison

We compare our method with several traditional collaborative filtering methods: UPCC, IPCC, UIPCC [34], and matrix factorization (MF). UPCC is a user-based CF method that uses the Pearson Correlation Coefficient (PCC) to calculate the similarity between users. IPCC is similar to UPCC, except that it calculates the similarity between items. UIPCC combines the advantages of these two methods by weighting their predictions in the final result. For UPCC, IPCC, and UIPCC, we tried k = 5, 10, 15, 20, 25, and 30 (the number of top similar users or services) and finally chose k = 10. For MF and DTCF, the dimension of the latent factors is also set to 10. For DTCF, we tried different numbers of hidden ReLU layers and different hidden layer sizes, with the maximum number of hidden layers limited to 5. We tested batch sizes of {128, 256, 512, 1024}, learning rates of {0.0001, 0.0005, 0.001, 0.005}, and numbers of training epochs of {10, 20, 30, 40, 50, 60, 70, 80}. The Gaussian kernel bandwidth δ is set to the median pairwise distance on the source training data.
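For reference, the UPCC baseline can be sketched as below: the Pearson correlation between two clients is computed over the nodes both have evaluated, and the missing QoS value is predicted from the mean-centered values of the top-k most similar clients. This follows a common formulation of UPCC; details such as keeping only positively correlated clients and the dictionary-based data layout are assumptions of this sketch.

```python
import math


def mean(values):
    return sum(values) / len(values)


def pcc(ra, rb):
    """Pearson correlation of two clients over their co-evaluated nodes."""
    common = [s for s in ra if s in rb]
    if len(common) < 2:
        return 0.0
    ma = mean([ra[s] for s in common])
    mb = mean([rb[s] for s in common])
    num = sum((ra[s] - ma) * (rb[s] - mb) for s in common)
    den = (math.sqrt(sum((ra[s] - ma) ** 2 for s in common))
           * math.sqrt(sum((rb[s] - mb) ** 2 for s in common)))
    return num / den if den > 0 else 0.0


def upcc_predict(R, a, node, k=10):
    """Predict R[a][node] from the top-k positively correlated clients.

    R maps each client to a dict {node: observed QoS value}.
    """
    cands = [(pcc(R[a], R[u]), u) for u in R if u != a and node in R[u]]
    top = sorted((c for c in cands if c[0] > 0), reverse=True)[:k]
    base = mean(list(R[a].values()))
    if not top:
        return base  # no similar client: fall back to the client's mean
    num = sum(s * (R[u][node] - mean(list(R[u].values()))) for s, u in top)
    return base + num / sum(s for s, _ in top)


R = {
    "a": {"n1": 1.0, "n2": 2.0, "n3": 3.0},
    "b": {"n1": 2.0, "n2": 3.0, "n3": 4.0, "n4": 5.0},
    "c": {"n1": 3.0, "n2": 2.0, "n3": 1.0, "n4": 9.0},
}
print(upcc_predict(R, "a", "n4"))  # approximately 3.5
```

Here client "c" is negatively correlated with "a" and is ignored, so the prediction relies only on client "b". IPCC applies the same computation to the transposed matrix, comparing nodes instead of clients.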

We conduct 10 experiments for each model and each sparsity level and then average the prediction accuracy values.

- (i)

As the density of the training set increases, the MAEs of all the models decrease.

- (ii)

Our DTCF method outperforms the traditional collaborative filtering methods, especially when the training set is extremely sparse.

- (iii)

The DTCF model has more trainable weights than the other models, yet it achieves the best performance, which suggests that the relationships between nodes are complex and that shallow models cannot capture these structures.

Although shallow models do not easily overfit when the target domain training set is extremely sparse, they cannot transfer rich information from the source domain. Deep models overfit more easily, but they can learn common latent features from the source domain. To resolve this dilemma, we need to control the degree to which the deep model is fine-tuned. This experiment shows that the MMD domain loss is an effective way of controlling the degree of adaptation.

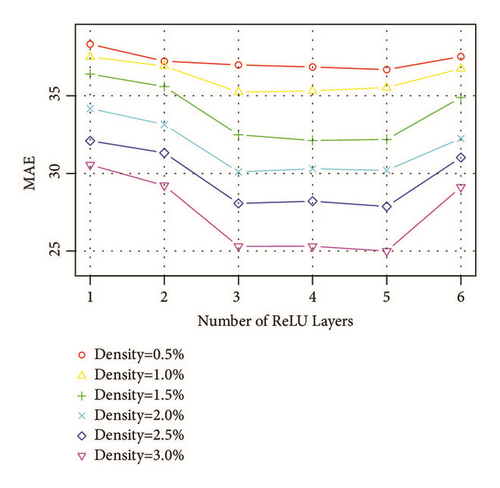

4.3. Impact of the Network Depth

- (i)

Adding more ReLU layers improves prediction performance at first, but once the depth exceeds a certain value, performance starts to degrade.

- (ii)

Although adding more ReLU layers can improve performance, enlarging the training set appears to be more helpful.

- (iii)

In some cases, adding more layers no longer improves performance, but it does not degrade it either. This suggests that the deep neural network has some regularization effect.

In general, when the training set is very large, adding more layers usually does not cause overfitting. In cross-domain learning, however, the target domain has very little data, so the network depth must be controlled.

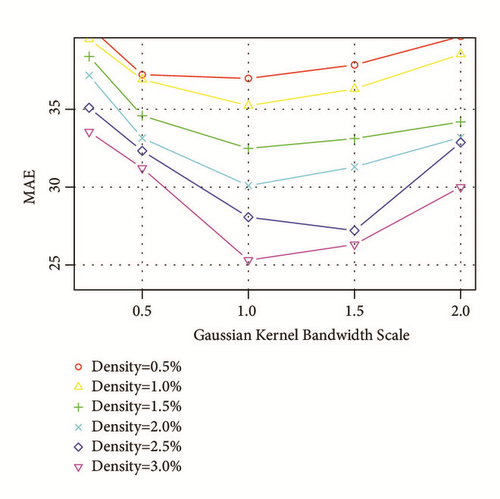

4.4. Impact of the Gaussian Kernel Bandwidth

- (i)

The default value (the median pairwise distance) is a reasonable choice, and scaling it too small or too large degrades prediction performance.

- (ii)

If the bandwidth is too large, the kernel value approaches 1, so all nodes look the same and personalized recommendations cannot be made for them.

- (iii)

If the bandwidth is too small, the kernel value approaches 0, so nodes cannot find similar neighbors whose past experiences they could follow.
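These two extremes can be illustrated numerically. The Python sketch below evaluates a Gaussian kernel, assumed here to have the form $\exp(-\|x - y\|^2 / (2\delta^2))$, between a reference representation and two others at distances 1 and 2, while the bandwidth is scaled relative to a default value of 1.0 that stands in for the median pairwise distance. The points and scaling factors are illustrative.

```python
import math


def gaussian_kernel(x, y, delta):
    """Assumed Gaussian kernel with bandwidth delta."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * delta ** 2))


ref, near, far = [0.0, 0.0], [1.0, 0.0], [2.0, 0.0]
default_delta = 1.0  # stands in for the median pairwise distance
for scale in (0.01, 0.1, 1.0, 10.0, 100.0):
    delta = scale * default_delta
    print(f"scale={scale:>6}: k(near)={gaussian_kernel(ref, near, delta):.4f}, "
          f"k(far)={gaussian_kernel(ref, far, delta):.4f}")
```

At the default scale, the kernel clearly separates the near point (about 0.61) from the far one (about 0.14). At large scales both values approach 1, and at small scales both approach 0, so in either extreme the kernel no longer distinguishes similar from dissimilar representations.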

5. Conclusion

Selecting neighbors in terms of QoS is an effective way of delivering high-quality content in video streaming P2P networks. Because of heterogeneous network conditions, the QoS differs between pairs of nodes. However, evaluating the QoS of all the nodes for each user is resource-consuming. An attractive alternative is to adopt collaborative filtering techniques, which need only a small amount of past usage experience.

Unfortunately, video content providers might often choose different QoS properties to select neighbors, and traditional CF methods cannot solve the resulting cross-domain QoS prediction problem. This paper proposed a novel neural CF method based on transfer learning. We first outlined the model architecture and then introduced the details of its important parts. To avoid the overadaptation problem, we combined the domain loss and the prediction loss to train the target domain model. We adopted the MMD distance as our domain loss and presented its principle and how to compute its gradient. Finally, we conducted experiments on a real-world public dataset. The experimental results show that our DTCF model outperforms the other models for cross-domain QoS prediction.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (No. 61602399 and No. 61502410).

Open Research

Data Availability

The WS-DREAM data used to support the findings of this study are owned by a third party; it is an open dataset deposited at https://github.com/wsdream/wsdream-dataset.