A New Accurate and Efficient Iterative Numerical Method for Solving the Scalar and Vector Nonlinear Equations: Approach Based on Geometric Considerations

Abstract

This paper presents a new iterative numerical method for approximating the solutions of both scalar and vector nonlinear equations. The proposed method extends a numerical procedure developed in previous works. The study aims to show that this new root-finding algorithm, combined with a stationary-type iterative method (e.g., Gauss-Seidel or Jacobi), is able to provide a more accurate solution than the classical Newton-Raphson method. The developed iterative method is analyzed numerically and discussed on some specific equations and systems.

1. Introduction

Solving nonlinear equations and systems is a task encountered very often in various fields of the formal and physical sciences. For instance, solid mechanics is a branch of physics in which problems governed by nonlinear equations and systems arise frequently [1–10]. In most cases, the Newton method (also known as the Newton-Raphson algorithm) is used for approximating the solutions of scalar and vector nonlinear equations [11–13]. Over the years, however, several other numerical methods have been developed for iteratively providing approximate solutions of nonlinear equations and/or systems [14–25]. Some of them offer both high accuracy and strong efficiency by using a numerical procedure based on an enhanced Newton-Raphson algorithm [26]. In this study, we propose to improve the iterative procedure developed in previous works [27, 28] for numerically finding the solution of both scalar and vector nonlinear equations. This study is organized as follows: (i) in Section 2, a new geometry-based root-finding algorithm coupled with a stationary-type iterative method (such as Jacobi or Gauss-Seidel) is presented with the aim of solving any system of nonlinear equations [29, 30]; (ii) in Section 3, the predictive abilities of the proposed iterative method are tested on some examples and compared with other algorithms [31, 32].

2. New Iterative Numerical Method for Solving the Scalar and Vector Nonlinear Equations Based on a Geometric Approach

2.1. Problem Statement

With the aim of numerically solving system (2), we adopt a Root-Finding Algorithm (RFA) coupled with a Stationary Iterative Procedure (SIP) such as Jacobi or Gauss-Seidel [26, 30]. Any SIP reduces the considered nonlinear system to a succession of nonlinear equations in only one variable, each of which can therefore be solved with an RFA [30]. In the present study, we propose an extended version of the RFA already developed in [27, 28], combined with a Jacobi or Gauss-Seidel type iterative procedure, for dealing with any system of nonlinear equations.

2.2. Stationary Iterative Procedures (SIPs) with Root-Finding Algorithms (RFAs)

2.2.1. Jacobi and Gauss-Seidel Iterative Procedures

- (i)

in the case of the Jacobi procedure:

()

- (ii)

in the case of the Gauss-Seidel procedure:

()
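The way a stationary iterative procedure reduces a nonlinear system to a sequence of one-variable root-finding problems can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this paper: the names `scalar_newton`, `sip_sweep`, and `solve` are hypothetical, and the scalar solver is a plain Newton iteration with a finite-difference slope that merely stands in for the geometry-based RFA of Section 2.3. In a Jacobi sweep every scalar problem reads the values of the previous sweep, whereas in a Gauss-Seidel sweep it reads the most recently updated values.

```python
from typing import Callable, List, Sequence

# Each residual F_i takes the full vector x and returns a float.
Residual = Callable[[Sequence[float]], float]


def scalar_newton(g: Callable[[float], float], t0: float,
                  tol: float = 1e-12, max_iter: int = 50,
                  h: float = 1e-7) -> float:
    """Placeholder RFA (assumption): Newton with a central-difference slope."""
    t = t0
    for _ in range(max_iter):
        gt = g(t)
        if abs(gt) <= tol:
            break
        slope = (g(t + h) - g(t - h)) / (2.0 * h)
        if slope == 0.0:
            break  # flat tangent: return the current iterate
        t -= gt / slope
    return t


def sip_sweep(F: Sequence[Residual], x: Sequence[float],
              gauss_seidel: bool = True) -> List[float]:
    """One sweep: solve F_i = 0 for x_i with all other variables frozen."""
    old = list(x)
    new = list(x)
    for i, Fi in enumerate(F):
        # Gauss-Seidel reads the freshest values, Jacobi the previous sweep.
        base = new if gauss_seidel else old

        def g(t: float, i: int = i, Fi: Residual = Fi,
              base: List[float] = base) -> float:
            trial = list(base)
            trial[i] = t
            return Fi(trial)

        new[i] = scalar_newton(g, base[i])
    return new


def solve(F: Sequence[Residual], x0: Sequence[float],
          gauss_seidel: bool = True, tol: float = 1e-10,
          max_sweeps: int = 200) -> List[float]:
    """Repeat sweeps until the largest residual magnitude is below tol."""
    x = list(x0)
    for _ in range(max_sweeps):
        x = sip_sweep(F, x, gauss_seidel)
        if max(abs(Fi(x)) for Fi in F) <= tol:
            break
    return x


if __name__ == "__main__":
    # Illustrative system (not one of the paper's examples).
    F = [lambda x: x[0] ** 3 + x[1] - 2.0,
         lambda x: x[0] + x[1] ** 3 - 2.0]
    print(solve(F, [0.5, 0.5], gauss_seidel=True))
    print(solve(F, [0.5, 0.5], gauss_seidel=False))
```

Swapping `scalar_newton` for another one-variable solver leaves the sweep logic unchanged, which is the property that allows any RFA to be paired with either procedure.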

2.3. Used Root-Finding Algorithm (RFA)

In previous works [27, 28], a root-finding algorithm (RFA) was developed for approximating the solutions of scalar nonlinear equations. The new RFA presented here is an extended version of that algorithm, taking into account some geometric considerations. In this paper, we propose to use an RFA coupled with Jacobi and Gauss-Seidel type procedures for iteratively solving the nonlinear system. Hence, we adopt a new RFA for finding the approximate solution (when i is fixed and with the known set Δi) associated with each nonlinear equation Fi of the system (see Section 2.1). For each nonlinear equation Fi, parametrized by the set of variables Δi and depending on only one variable, we introduce the exact and inexact local curvature associated with the curve representing the nonlinear equation in question.

- (i)

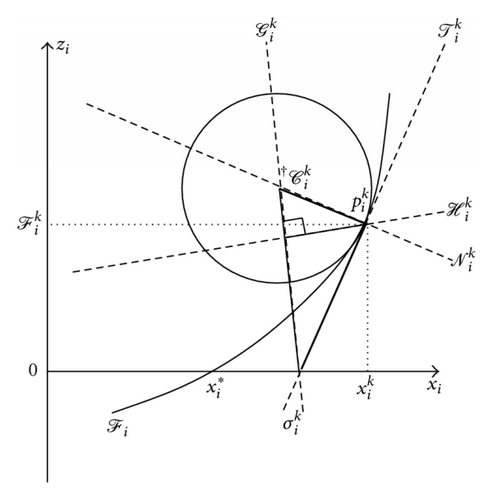

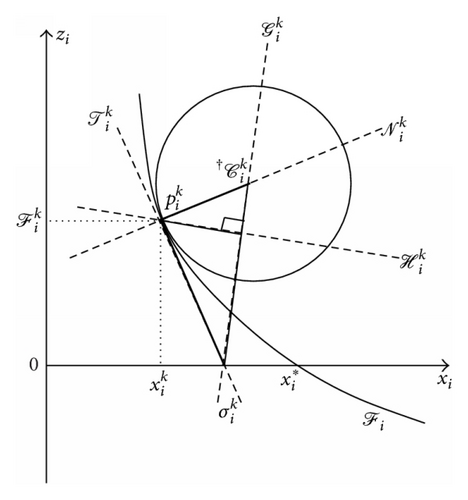

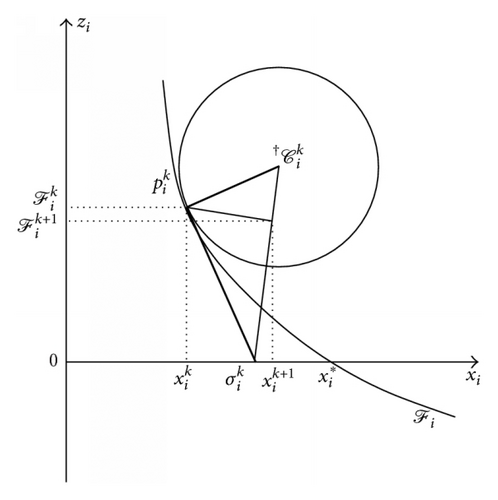

In the first step, we consider the iterative tangent and normal straight lines associated with nonlinear function Fi at point (see Figure 1):

() -

where (resp., ) denotes the value (resp., first-order derivative) of function Fi at point , is the set of known variables and (Ti, Ni) are two functionals associated with .

- (ii)

In the second step, we introduce the iterative exact and inexact local curvature associated with the curve representing the nonlinear function at point (see Figure 1):

()

where |∗| denotes the absolute-value function associated with the variable ∗, is the exact († = ex) or inexact († = in) radius of the osculating circle at point , (with † = ex, in) is a functional associated with , and is the second-order derivative of function Fi at point . It should be noted that: (i) we consider the exact radius associated with the true osculating circle at point (see [33]); (ii) in line with [27, 28], we consider an inexact radius associated with the osculating circle at point , that is, (see (7)).

- (iii)

In the third step, we define the iterative center associated with the exact and inexact osculating circle at point , that is, (see Figure 1)

()

Using (7) and (8), the iterative centers are (with † = ex, in)

()

where are the iterative centers associated with the exact and inexact osculating circles (with † = ex, in) of each curve representing the nonlinear function at point , are two functionals associated with , and sgn(♠) is the sign function (i.e., sgn(♠) = −1 when ♠ < 0, sgn(♠) = 1 when ♠ > 0, and sgn(♠) = 0 when ♠ = 0).

- (iv)

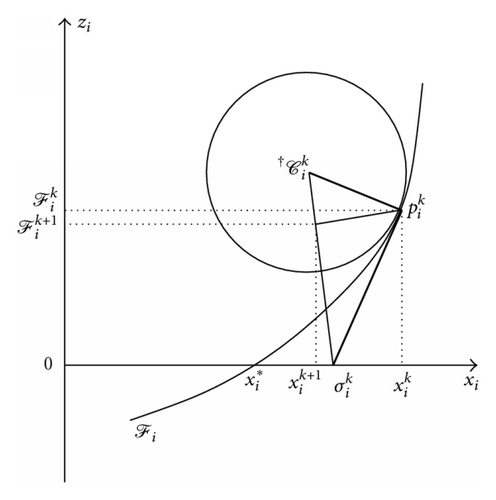

In the fourth step, we introduce the iterative point such that , that is,

()

where Wi is a functional associated with .

- (v)

In the fifth step, we define the iterative straight line passing through two iterative points and , that is, (with † = ex, in)

() -

where is the set of known variables.

- (vi)

In the sixth step, we introduce the iterative straight line passing through the point and the iterative perpendicular straight line such that (with † = ex, in)

()

where Hi is a functional associated with .

- (vii)

In the last step, we define the iterative point which is the solution of the following relation (with † = ex, in)

() -

with

() -

where Pi is a functional associated with Φi.

-

In line with (10), (14) can be rewritten as

()

- (i)

First condition [BC1] is

()

- (ii)

Second condition [BC2] is

()

- (iii)

Third condition [BC3] is

()

- (iv)

Fourth condition [BC4] is

()

- (v)

Fifth condition [BC5] is

()

- (vi)

Sixth condition [BC6] is

()
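The standard geometric quantities behind the first three steps of Section 2.3 (tangent line, normal line, exact radius of curvature, and center of the true osculating circle) can be sketched as follows. This is only an illustration built from textbook differential geometry of a plane curve y = f(x): the function names are hypothetical, the inexact radius of [27, 28] is not reproduced, and neither are the fourth to seventh steps nor conditions [BC1] to [BC6]. The sketch uses the signed second derivative where a formulation based on the absolute value needs the sign function.

```python
import math
from typing import Callable, Tuple

Func = Callable[[float], float]


def tangent_line(f: Func, df: Func, xk: float) -> Func:
    """Return T(x) = f(xk) + f'(xk) * (x - xk)."""
    fk, dk = f(xk), df(xk)
    return lambda x: fk + dk * (x - xk)


def normal_line(f: Func, df: Func, xk: float) -> Func:
    """Return N(x) = f(xk) - (x - xk) / f'(xk); requires f'(xk) != 0."""
    fk, dk = f(xk), df(xk)
    if dk == 0.0:
        raise ZeroDivisionError("normal line is vertical when f'(xk) == 0")
    return lambda x: fk - (x - xk) / dk


def exact_radius(df: Func, d2f: Func, xk: float) -> float:
    """Radius of the true osculating circle: (1 + f'^2)^(3/2) / |f''|."""
    dk, d2k = df(xk), d2f(xk)
    if d2k == 0.0:
        return math.inf  # zero curvature: the circle degenerates to a line
    return (1.0 + dk * dk) ** 1.5 / abs(d2k)


def osculating_center(f: Func, df: Func, d2f: Func,
                      xk: float) -> Tuple[float, float]:
    """Center of the true osculating circle at (xk, f(xk)); needs f''(xk) != 0."""
    fk, dk, d2k = f(xk), df(xk), d2f(xk)
    q = (1.0 + dk * dk) / d2k  # signed, so no explicit sgn() is needed
    return (xk - dk * q, fk + q)


if __name__ == "__main__":
    # Illustrative curve (not one of the paper's examples): f(x) = x^2.
    f = lambda x: x * x
    df = lambda x: 2.0 * x
    d2f = lambda x: 2.0
    print(exact_radius(df, d2f, 1.0))
    print(osculating_center(f, df, d2f, 1.0))
```

Two quick consistency checks follow from the geometry: the center lies on the normal line, and its distance to the point (xk, f(xk)) equals the exact radius.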

3. Numerical Examples

3.1. Preliminary Remarks

In this section, we evaluate the predictive abilities of the numerical iterative method developed in Section 2.3 (i.e., the Adaptive Geometry-based Algorithm, AGA) on some examples, for both scalar and vector nonlinear equations. To this end, AGA is compared with other iterative Newton-Raphson type methods [27, 28, 30–32] coupled with Jacobi (J) and Gauss-Seidel (GS) techniques. All the numerical implementations of the iterative methods presented here were carried out in Matlab (see [26, 34–39]).

- (i)

Newton-Raphson Algorithm (NRA):

()

where denotes the first-order differential operator associated with the nonlinear function F at point and inv(†) is the inverse transform operator of †. It is important to highlight that NRA can be used if and only if operator “” exists, that is, , (det(‡) is the determinant of operator ‡).

- (ii)

Standard Newton’s Algorithm (SNA):

()

- (iii)

Third-order Modified Newton Method (TMNM):

()
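For reference, the classical Newton-Raphson iteration for a system of two equations, including the determinant check mentioned under NRA, can be sketched as follows. This is a generic textbook version in plain Python, not the Matlab implementation used for the comparisons; the function name `newton_raphson_2x2`, its parameters, and the residual-based stopping test are assumptions made for illustration. SNA and TMNM are not reproduced.

```python
from typing import Callable, Tuple

Vec2 = Tuple[float, float]
Mat2 = Tuple[Vec2, Vec2]


def newton_raphson_2x2(F: Callable[[float, float], Vec2],
                       J: Callable[[float, float], Mat2],
                       x0: Vec2, tol: float = 1e-12,
                       max_iter: int = 50) -> Vec2:
    """Newton-Raphson for two equations in two unknowns.

    F returns the residual pair, J returns the 2x2 Jacobian as nested tuples.
    """
    x, y = x0
    for _ in range(max_iter):
        f1, f2 = F(x, y)
        if abs(f1) + abs(f2) <= tol:  # 1-norm of the residual (assumed test)
            break
        (a, b), (c, d) = J(x, y)
        det = a * d - b * c
        if det == 0.0:
            # The inverse of the Jacobian does not exist: the step is undefined.
            raise ZeroDivisionError("singular Jacobian")
        # Solve J * (dx, dy) = -(f1, f2) by Cramer's rule.
        dx = (-f1 * d + b * f2) / det
        dy = (-a * f2 + c * f1) / det
        x, y = x + dx, y + dy
    return x, y


if __name__ == "__main__":
    # Illustrative system (not one of the paper's examples).
    F = lambda x, y: (x ** 3 + y - 2.0, x + y ** 3 - 2.0)
    J = lambda x, y: ((3.0 * x * x, 1.0), (1.0, 3.0 * y * y))
    print(newton_raphson_2x2(F, J, (0.5, 0.5)))
```

When n = 1 the same update reduces to the familiar scalar step x − F(x)/F′(x), and the determinant check reduces to requiring a nonzero derivative.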

- (i)

For scalar-valued equations :

- (a)

(C1S) on the iteration number,

()

where denotes the maximum number of iterations associated with scalar-valued equations.

- (b)

(C2S) on the residue error,

()

where is the tolerance parameter associated with the residue error criterion for scalar-valued equations and ‖†‖ = |†| is the absolute-value norm.

- (c)

(C3S) on the approximation error,

()

where is the tolerance parameter associated with the absolute error criterion for scalar-valued equations.

- (ii)

For vector-valued equations :

- (a)

(C1V) on the iteration number, we adopt the same condition as (C1S), that is, (where denotes the maximum number of iterations associated with vector-valued equations).

- (b)

(C2V) on the residue error,

()

where (resp., ) is the tolerance parameter associated with the residue error criterion for vector-valued equations and is the vector p-norm (here, p = 1,2). It is important to point out that the norm with p = 2 is the so-called Euclidean norm.

- (c)

(C3V) on the approximation error,

()

where ϵae is the tolerance parameter associated with the absolute error criterion for vector-valued equations.

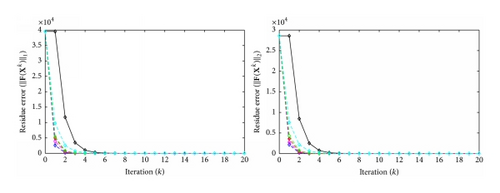

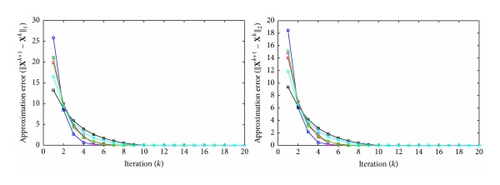

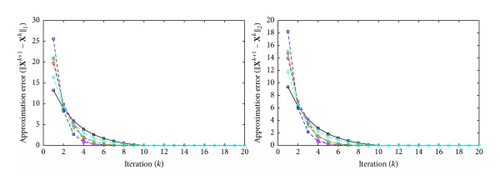

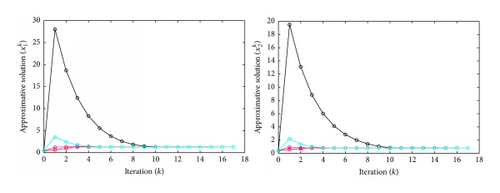

Here, for the stopping criteria (C1S, C1V), (C2S, C2V), and (C3S, C3V) associated with the iterative process, we consider: (i) the maximum number of iterations ; (ii) the tolerance parameter for the scalar-valued equations; (iii) the tolerance parameter (with i = 1,2) for the vector-valued equations.
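The three families of stopping criteria can be sketched in a single helper. This is a hedged illustration rather than the author's code: the names `p_norm`, `should_stop`, `k_max`, `eps_re`, and `eps_ae` are hypothetical, and the approximation error of (C3S)/(C3V) is assumed here to be the difference between two successive iterates, which the text above does not spell out. Scalar-valued equations are handled by passing one-element lists, for which every p-norm reduces to the absolute value.

```python
from typing import Optional, Sequence


def p_norm(v: Sequence[float], p: int) -> float:
    """Vector p-norm; p = 2 gives the Euclidean norm."""
    return sum(abs(c) ** p for c in v) ** (1.0 / p)


def should_stop(k: int, residual: Sequence[float], step: Sequence[float],
                k_max: int, eps_re: float, eps_ae: float,
                p: int = 2) -> Optional[str]:
    """Return the label of the first satisfied criterion, or None.

    k        : current iteration number
    residual : F evaluated at the current iterate
    step     : current iterate minus previous iterate (assumed meaning
               of the approximation error)
    """
    if k >= k_max:
        return "C1"  # iteration budget exhausted
    if p_norm(residual, p) <= eps_re:
        return "C2"  # residue error small enough
    if p_norm(step, p) <= eps_ae:
        return "C3"  # approximation error small enough
    return None


if __name__ == "__main__":
    # Illustrative values only; the tolerances are not taken from the paper.
    print(should_stop(3, [3e-7, 4e-7], [1.0, 1.0], 10, 1e-6, 1e-6))
```

Returning a label instead of a boolean makes it easy to report which criterion ended a run when comparing methods.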

3.2. Examples

- (i)

In case n = 1 (i.e., scalar-valued equations), one has the following:

Example 1. Consider the following:

Example 2. Consider the following:

- (ii)

In case n = 2 (i.e., vector-valued equations), one has the following:

Example 3. Consider the following:

Example 4. Consider the following:

3.3. Results and Discussion

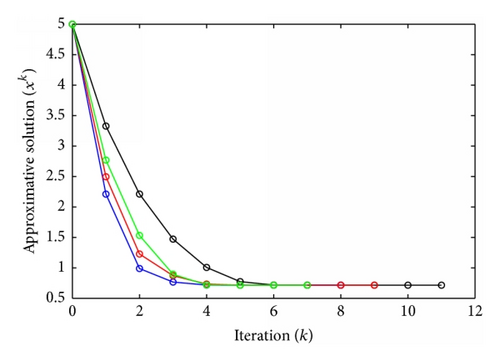

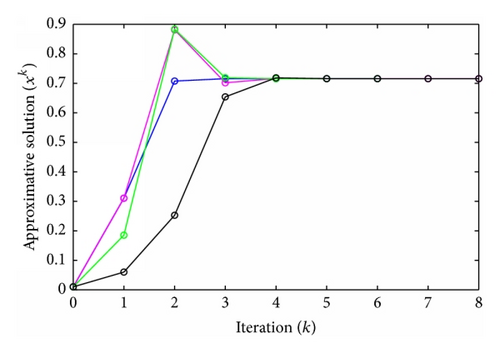

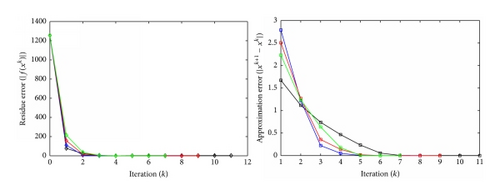

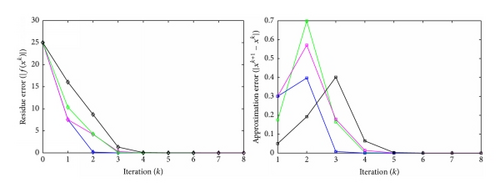

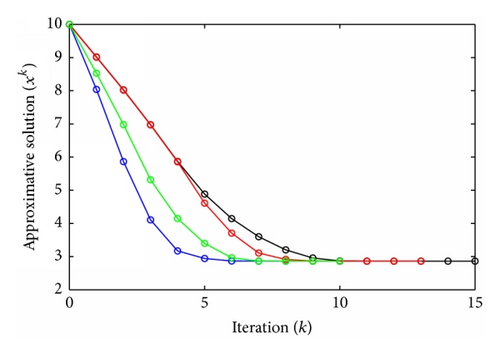

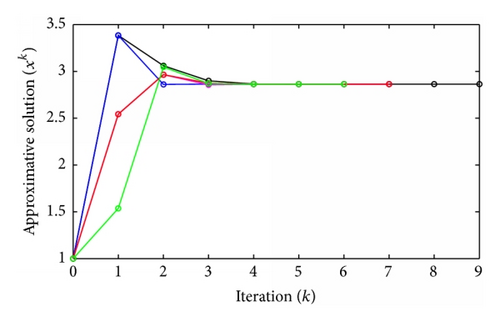

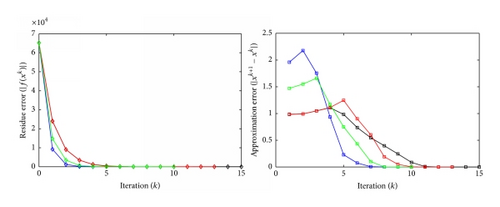

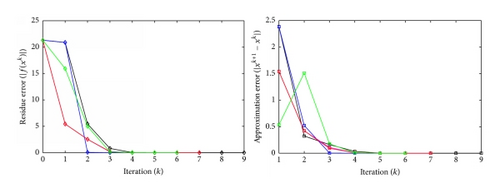

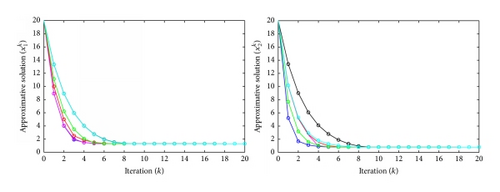

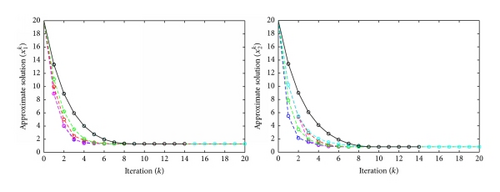

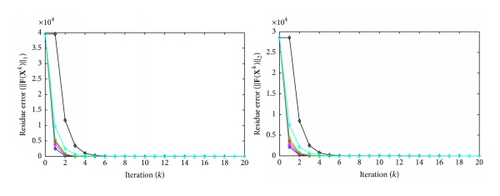

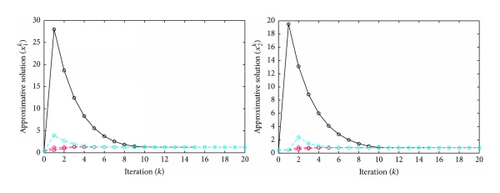

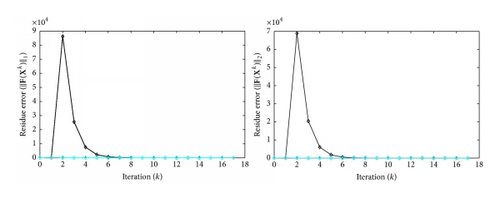

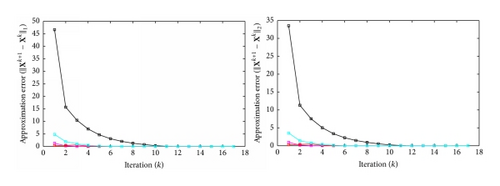

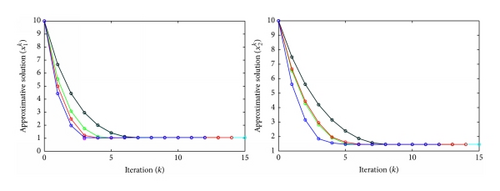

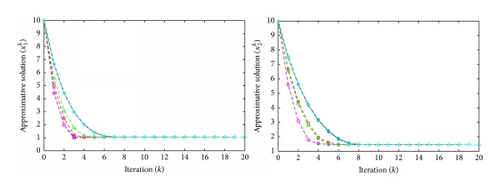

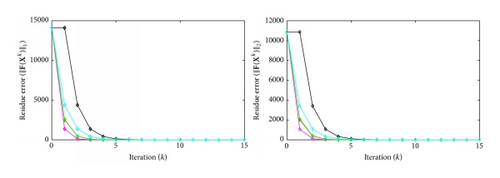

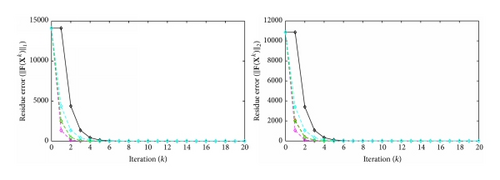

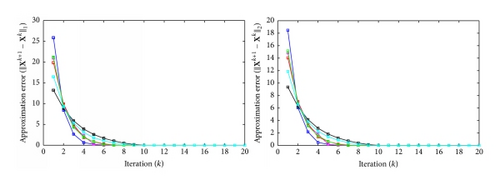

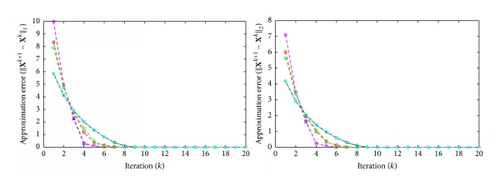

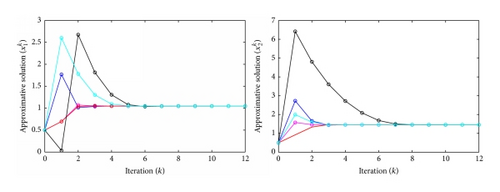

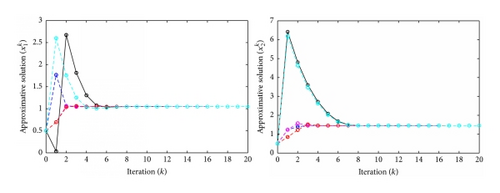

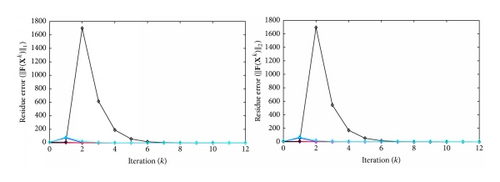

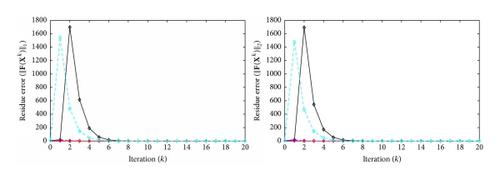

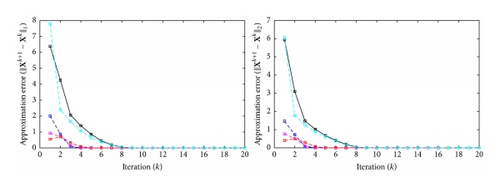

All the numerical results of Examples 1–4 are shown in Figures 3–32.

For Example 1 (resp., Example 2) with initial guess (resp., ), the approximate solutions provided by AGA with condition [BC1]/[BC3] (i.e., condition [BC1] is the same as [BC3] where † = ex) are better than those of AGA with condition [BC2], NRA/SNA (when n = 1, NRA and SNA are the same), and TMNM. For Example 1 (resp., Example 2) with initial guess (resp., ), the approximate solutions provided by AGA with conditions [BC4], [BC5], and [BC6] are, in the first iterations, more accurate than those of NRA/SNA and TMNM.

For Example 3 with initial guess couple , the approximate solutions given by AGA using the Gauss-Seidel (GS) or Jacobi (J) procedure with: (i) condition [BC1] are numerically more accurate than those of NRA, TMNM, and SNA; (ii) conditions [BC2] and [BC3] are more accurate than those of NRA (only in the first iterations) and SNA. With initial guess couple , the approximate solutions provided by AGA using: (i) the Gauss-Seidel (GS) procedure with condition [BC6] give much greater numerical accuracy than NRA and SNA; (ii) the Gauss-Seidel (GS) procedure with conditions [BC4] and [BC5] offer much greater numerical accuracy than NRA and SNA; (iii) the Jacobi (J) procedure with conditions [BC4], [BC5], and [BC6] are better than those of NRA (only in the first iterations) and SNA.

For Example 4 with initial guess couple , the approximate solutions given by AGA using: (i) the Gauss-Seidel (GS) procedure with conditions [BC1] and [BC3] are numerically more accurate than those of SNA, TMNM, and NRA; (ii) the Gauss-Seidel (GS) procedure with condition [BC2] are more accurate than those of TMNM and NRA (only in the first iterations) and SNA; (iii) the Jacobi (J) procedure with conditions [BC1], [BC2], and [BC3] are numerically more accurate than those of NRA (only in the first iterations) and of both TMNM and SNA. With initial guess couple , the approximate solutions obtained by AGA using: (i) the Gauss-Seidel (GS) procedure with conditions [BC5] and [BC6] are numerically more accurate than those of SNA and NRA; (ii) the Jacobi (J) procedure with conditions [BC4], [BC5], and [BC6] are more accurate than those of NRA (only in the first iterations) and SNA.

Overall, the numerical results show that the Adaptive Geometry-based Algorithm (AGA) is able to provide quite accurate approximate solutions of both nonlinear equations and systems and can potentially provide a better or more suitable approximate solution than the Newton-Raphson Algorithm (NRA).

4. Concluding Comments

The present work concerns a new iterative numerical method for approximating the solutions of both scalar and vector nonlinear equations. Based on an iterative procedure developed in a previous study, we propose here an extended form of this numerical algorithm that includes a stationary-type iterative procedure in order to solve systems of nonlinear equations. The ability of the proposed method to provide a more accurate approximate solution of nonlinear equations and systems is tested, assessed, and discussed on some specific examples.

Competing Interests

The author declares that there are no competing interests regarding the publication of this paper.