A Convex Adaptive Total Variation Model Based on the Gray Level Indicator for Multiplicative Noise Removal

Abstract

This paper focuses on the problem of multiplicative noise removal. Using a gray level indicator, we derive a new functional which consists of the adaptive total variation term and the global convex fidelity term. We prove the existence, uniqueness, and comparison principle of the minimizer for the variational problem. The existence, uniqueness, and long-time behavior of the associated evolution equation are established. Finally, experimental results illustrate the effectiveness of the model in multiplicative noise reduction. Different from the other methods, the parameters in the proposed algorithms are found dynamically.

1. Introduction

Various adaptive filters for multiplicative noise removal have been proposed. In the beginning, variational methods for multiplicative noise reduction deal with the Gaussian multiplicative noise [1]. In actual life, the speckle noise [2] is more widespread, such as synthetic aperture radar (SAR) imagery [3, 4]. Then, Aubert and Aujol [5] propose a new variational method for Gamma multiplicative noise reduction. By the logarithm transform, a large variety of methods rely on the conversion of the multiplicative noise into additive noise.

1.1. Gaussian Noise

If by the gradient projection method the values of λ1 and λ2 are found dynamically, the method is not always convex; while if λ1, λ2 > 0 are fixed, the corresponding minimization problem will lead to a sequence of constant function u approaching +∞.

1.2. Gamma Noise

1.3. The Model Based on the Logarithm Transform

1.4. The Model Based on the Exponent Transform

The paper is organized as follows. In Section 2, inspired from [1, 5, 14], based on the gray level indicator α(x), we derive a convex adaptive total variation model (4) for multiplicative noise removal. In Section 3, we prove the existence and uniqueness of the solution of the minimization problem (4). In Section 4, we study the associated evolution problem to the minimization problem (4). Specifically, we define the weak solution of the evolution problem, derive estimates for the solution of an approximating problem, prove existence and uniqueness of the weak solution, and discuss the behavior of the weak solution as t → ∞. In Section 5, we provide two numerical algorithms and experimental results to illustrate the effectiveness of our algorithms in image denoising. We also compare new algorithms with other ones.

2. A New Variational Model for Multiplicative Noise Removal

The goal of this section is to propose a new variational model for multiplicative noise removal. First, we proposed a new fidelity with global convexity, which can always satisfy the constraint (2) during the evolution. Then, by analyzing the properties of the noise, we propose the adaptive total variation based on a gray level indicator α(x).

2.1. A Global Convex Fidelity Term

Remark 1. (1) It is easy to check that the function u → u + flog (1/u) reaches its minimum value f + flog (1/f) over ℝ+ for u = f.

(2) Let us denote by

We have h′(u) = 1 − (f/u) = (u − f)/u, and h′(u) = (f/u2) > 0, which deduce that the new fidelity term H(u, f) is globally strictly convex.

2.2. The Adaptive Total Variation Model

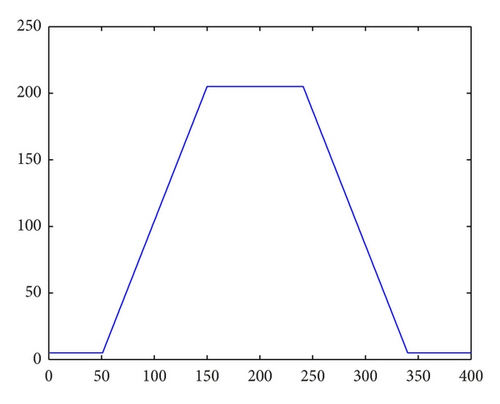

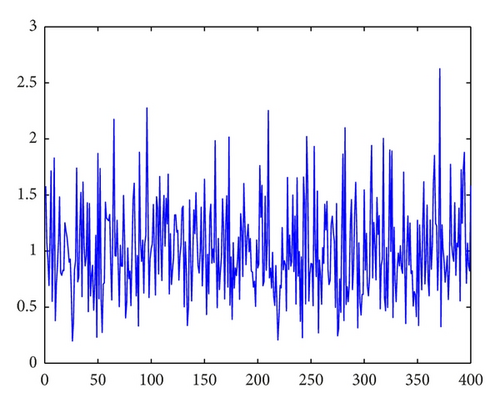

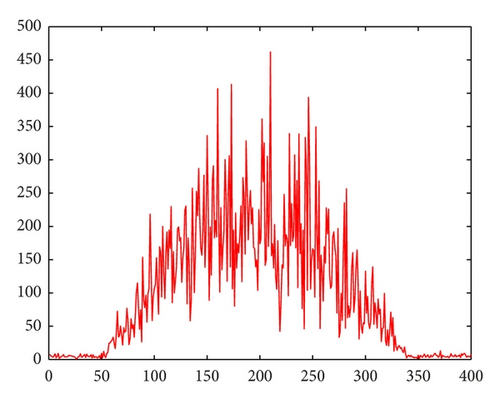

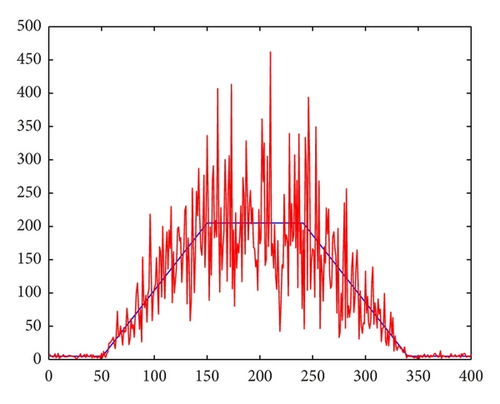

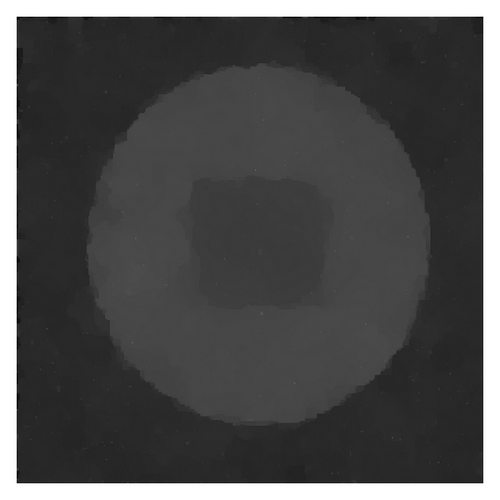

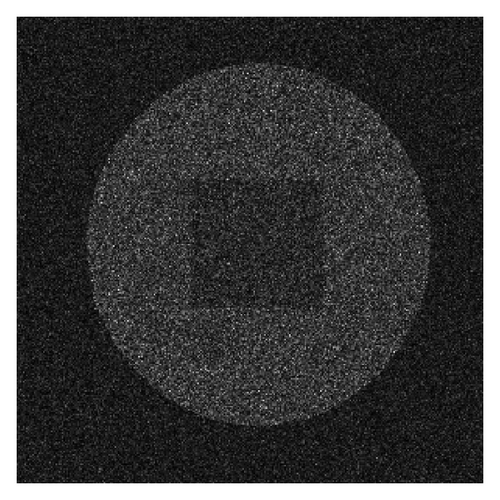

Assume u is a piecewise function, that is, , where Ωi ∩ Ωj = ∅, for i ≠ j, , and gi is the gray level. Moreover, we assume that the samples of the noise on each pixel x are mutually independent and identically distributed (i.i.d.) with the probability density function η(x). For x ∈ Ω, f(x) = giη(x), and therefore, , where Var [f] and Var [η] are the variance of the noise image f and the noise at the pixel x, respectively. It is noticed that the variance of the noise is the constant σ2 on each pixel, but the variance of the noise image f is influenced by the gray level. The higher the gray level is, the more remarkable the influence of the noise is. Especially, f = u when u = 0, and therefore f is noise free in this case. The fact is illustrious in Figure 1. It can be seen that in despite of the independent identically distribution of noise (see Figure 1(b)), the noise image shows different features on the pixel, where the gray levels are different (see Figure 1(d)).

3. The Minimization Problem (28)

In this section, we study the existence and uniqueness of the solution to the minimization problem (28), and then we consider the comparison principle for the problem (28).

3.1. Preliminaries

We always assume that Ω is a bounded open subset of ℝn with Lipschitz boundary. As in [16], we introduce the following definition of α-total variation.

Definition 2. A function u ∈ L1(Ω) has bounded α-total variation in Ω, if

Remark 3 (see [16].)(1) If u ∈ L1(Ω) has bounded α-total variation in Ω, there is a Radon vector measure Du on Ω such that

(2) From (32), u ∈ L1(Ω) having bounded α-total variation in Ω implies that u ∈ BV(Ω).

Now, we directly show some lemmas on α-total variation from [16].

Lemma 4. Assume that and uk → u in L1(Ω). Then, u ∈ BV(Ω), and

Lemma 5. Assume that u ∈ BV(Ω). Then, there exists a sequence such that

By a minor modification of the proof of Lemma 1 in Section 4.3 of [17], we can have the following lemma.

Lemma 6. Let u ∈ BV(Ω), and let φa,b be the cut-off function defined by

3.2. Existence and Uniqueness of the Problem (28)

In this subsection, we show that problem (28) has at least one solution in BV(Ω).

Theorem 7. Let f ∈ L∞(Ω) with inf x∈Ω f > 0. Then, the problem (28) admits a unique solution u ∈ BV(Ω) such that

Proof. Let us rewrite

Step 1. Let us denote a = inf x∈Ω f and b = sup x∈Ω f. We first claim that 0 < a ≤ un ≤ b.

In fact, we remark that h(s) is decreasing for s ∈ (0, f) and increasing for s ∈ (f, +∞). Therefore, if M ≥ f, one always has

Step 2. Let us prove that there exists u ∈ BV(Ω) such that

Finally, from Remark 1(2), h is strictly convex as f > 0, and then the uniqueness of the minimizer follows from the strict convexity of the energy functional in (28).

3.3. Comparison Principle

In this subsection, we state a comparison principle for problem (28).

Proposition 8. Let f1, f2 ∈ L∞(Ω) with inf x∈Ω f1, inf x∈Ω f2 > 0, and f1 < f2. Assume that u1 (resp., u2) is a solution of the problem (28) for f = f1 (resp., f = f2). Then, u1 ≤ u2 a.e. in Ω.

Proof. Let us denote u∨v = sup (u, v), and u∧v = inf (u, v).

From Theorem 7, It is noticed that there exist the solutions u1 and u2 for f1 and f2, respectively. Since ui is a minimizer with data fi, for i = 1, 2,

4. The Associated Evolution Equations (29)–(31)

In this section, by an approach from the theory used in both [16, 20], we define the weak solution of the evolution problems (29)–(31), derive estimates for the solution of an approximating problem, prove existence and uniqueness of the weak solution, and discuss the behavior of the weak solution as t → ∞.

4.1. Definition of a Pseudosolution to the Problems (29)–(31)

On the other hand, let v = u + ϵϕ in (59) with , , and ϕ > 0. Then left-hand side of the inequality (59) has a minimum at ϵ = 0. Hence, if u satisfies (59) and u ∈ L2(0, T; BV∩L2(Ω))∩L∞(QT) with ut ∈ L2(QT), u > 0, and , u is also a solution of the problems (29)–(31) in the sense of distribution. Based on this fact which is similar to the ideas in [13, 16, 21], we give the following definition.

4.2. The Approximating Problem for Problems (29)–(31)

Lemma 10. Let fδ ∈ L∞(Ω) with inf x∈Ω fδ > 0. Then, there is a unique solution to the problem

Based on this fact, we have the following existence and uniqueness result for the problems (61)–(63).

Theorem 11. Let fδ ∈ L∞(Ω) with inf x∈Ωfδ > 0 and sup x∈Ωf ≤ 1. Then, the problems (61)–(63) admit a unique pseudosolution up,δ ∈ L∞(0, ∞; W1,p(Ω)∩L2(Ω)) with ∂tup,δ ∈ L2(Q∞), that is,

Proof. Let us fix k = inf x∈Ω fδ > 0 and introduce the following functions:

Next, let us verify that the truncated function [·]k in the problems (72)–(74) can be omitted. Multiply (72) by , where

Moreover, multiplying (61) by ∂tup,δ and integrating it over Ωt yield

Finally, we will verify that the above weak solution will be the pseudosolution for the problems (61)–(63). Suppose that v ∈ L2(0, T; H1(Ω)) with v > 0 and . Multiplying (61) by v − up,δ, then integrating by parts over ΩT, we obtain

4.3. Existence and Uniqueness of the Problems (29)–(31)

In this subsection, we will prove the main theorem for the existence and uniqueness for the solution to the problems (29)–(31).

Theorem 12. Suppose f ∈ BV(Ω)∩L∞(Ω) with inf x∈Ω f > 0 and sup x∈Ω f ≤ 1. Then, there exists a unique pseudosolution u ∈ L∞(0, ∞, BV(Ω)∩L∞(Ω)) to the problems (29)–(31). Moverover,

Proof Step 1. First we fix δ > 0 and pass to the limit p → 1.

Let up,δ be the pseudosolution solution of (61)–(63). From (69)-(70), we know that, for fixed δ > 0, up,δ is uniformly bounded in L∞(0, ∞, BV(Ω)∩L∞(Ω)) and ∂tup,δ is uniformly bounded in L2(Q∞), that is,

In fact, from (91), there is a sequence and a function uδ ∈ L∞(Q∞) with ∂tuδ ∈ L2(Q∞) such that (92) and (93) hold.

Note that, for any ψ ∈ L2(Ω), as j → ∞,

Since

To see (95), noting that from (100), is equicontinuous, and from (93) and (99), we have, for each t ∈ [0, ∞),

From (70) and (92), we also obtain that uδ ∈ L∞(0, ∞, BV(Ω)∩L∞(Ω)) with ∂tuδ ∈ L2(Q∞). The claim is proved.

Next we show that, for all v ∈ L2(0, T; H1(Ω)) with v > 0 and , and for each t ∈ [0, ∞),

Step 2. Now it only remains to pass to the limit as δ → 0 in (102) to complete the existence of the solution to (29)–(31).

Replacing up by in (70), letting j → ∞ (pj → 1), and using (92)–(95), (104), and (64), we obtain

Then, by a processing similar to the one for getting (92)–(96), we can obtain a sequence of functions and a function u ∈ L∞(0, ∞; BV(Ω) ∩ L∞(Ω) such that, as j → ∞, δj → 0,

Replacing uδ by in (102), letting j → ∞, δj → 0, and using Lemma 4, we can have from (107)–(110)

Moreover, replacing uδ by , letting j → ∞ in (105), and using (107)–(110) and (64), we have

Finally, the uniqueness of pseudosolutions to the problems (29)–(31) follows as in [21, 23]. Let u1, u2 be two pseudosolutions to (29)–(31) with u1(x, 0) = u2(x, 0) = f. Then, we have

4.4. Long-Time Behavior

At last, we will show the asymptotic limit of the solution u(·, t) as t → ∞.

Theorem 13. As t → ∞, the pseudosolutions to the problems (29)–(31), u(x, t), converges strongly in L2(Ω) to the minimizer of the functional E(u), that is, the solution of the problem (28).

Proof. Take a function v ∈ BV(Ω) ∩ L∞(Ω) with v > 0 in (59), then

By dividing t in (116) and then taking the limit along si → ∞, we get that, for any v ∈ BV(Ω)∩L∞ with v > 0,

5. Numerical Methods and Experimental Results

We present in this section some numerical examples illustrating the capability of our model. We also compare it with the known model (AA). In the next two subsections, two numerical discrete schemes, the α-total variation (α-TV) scheme and the p-Laplace approximate (p-LA) scheme, will be proposed.

5.1. α-Total Variation Scheme

Here the MATLAB function “conv2" is used to represent the two-dimensional discrete convolution transform of the matrix ui,j, that is, G*u. Through the above lines, we can obtain by . The program will stop when it achieves our desire.

5.2. p-Laplace Approximate Scheme

5.3. Comparison with Other Methods

In this section, we used the similar way for numerical experiments as [25]. For comparison purposes, some very recent multiplicative noise removal algorithms from the literature are considered, such as the SO Algorithm [8] (see (16)-(17)) and the AA Algorithm [5] (see (10)). As recommended in [8], the stopping rule about SO Algorithm is to reach K such that K = max {k ∈ ℕ : Var [wk − wO] ≥ Var [η] = Ψ1(L)}, where wO is the underlying log-image, and η is the relevant noise, and the variance is defined by (16).

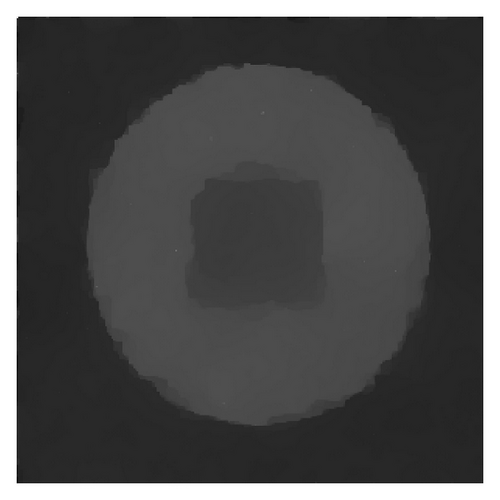

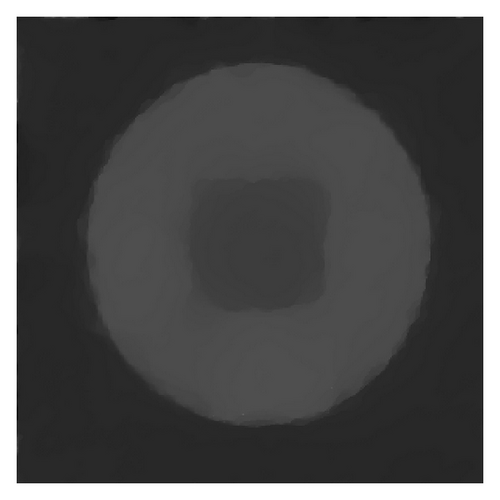

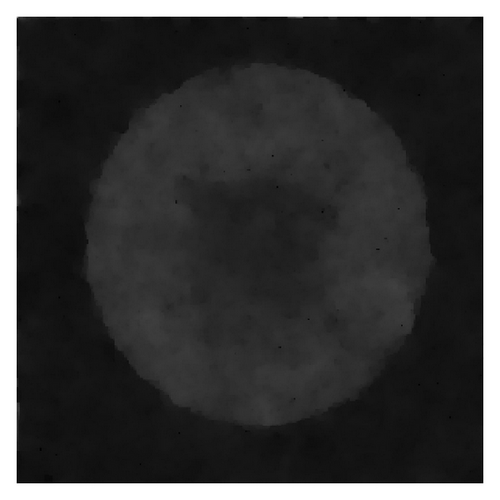

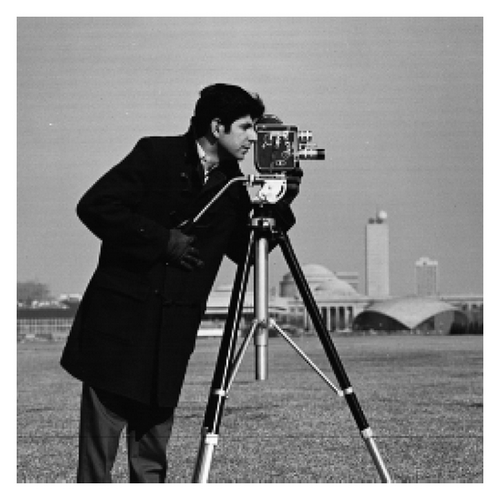

The denoising algorithms were tested on three images: a synthetic image (300 × 300 pixels), an aerial image (256 × 256 pixels), and a cameraman image (256 × 256 pixels). In all numerical experiments by our algorithms, the images do not need to be normalized in the range [1, 256]. This is different from the other algorithms. For each image, a noisy observation is generated by multiplying the original image by the speckle noise according to the model in (8) with the choice L ∈ {1,4, 10}.

For fair comparison, the parameters of SO and AA were tweaked manually to reach their best performance level. Their values are summarized in Table 1. In the α-total variation (α-TV) scheme, there are four parameters: the influencing factor k, the scale of convolution σ, the time step τ, and the turning factor λ. However, the parameter λ is found dynamically by (124). To ensure stability as well as optimal results, we choose τ = 0.05. In p-Laplace approximate (p-LA) scheme, besides the same four parameters with α-TV scheme, there is a new parameter pU with 1 < pU ≤ 2. We need not the the exact value of pU but an approximate estimate (e.g., pU = 1.2). Notice that the parameters of our method are very stable with respect to the image.

| Algorithm | Parameters | ||

|---|---|---|---|

| L = 1 | L = 4 | L = 10 | |

| The synthetic image (300 × 300) | |||

| α-TV | σ = 2, k = 0.05 | σ = 2, k = 0.05 | σ = 2, k = 0.05 |

| p-LA | pU = 1.3 | pU = 1.3 | pU = 1.2 |

| SO | λ = 0.1, α = 0.25 | λ = 0.7, α = 0.25 | λ = 0.2, α = 0.25 |

| AA | λ = 20 | λ = 150 | λ = 240 |

| The aerial image (512 × 512) | |||

| α-TV | σ = 2, k = 0.03 | σ = 2, k = 0.015 | σ = 2, k = 0.015 |

| p-LA | pU = 1.4 | pU = 1.4 | pU = 1.4 |

| SO | λ = 0.1, α = 0.25 | λ = 0.3, α = 0.25 | λ = 1.2, α = 0.25 |

| AA | λ = 25 | λ = 120 | λ = 130 |

| The cameraman image (256 × 256) | |||

| α-TV | σ = 2, k = 0.005 | σ = 2, k = 0.005 | σ = 2, k = 0.005 |

| p-LA | pU = 1.3 | pU = 1.2 | pU = 1.2 |

| SO | λ = 0.04, α = 0.25 | λ = 0.2, α = 0.25 | λ = 1, α = 0.25 |

| AA | λ = 125 | λ = 240 | λ = 390 |

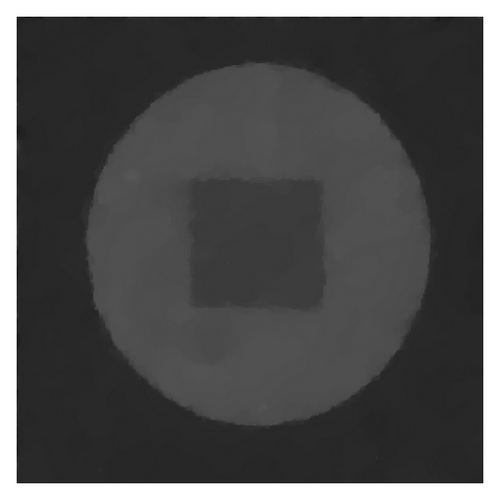

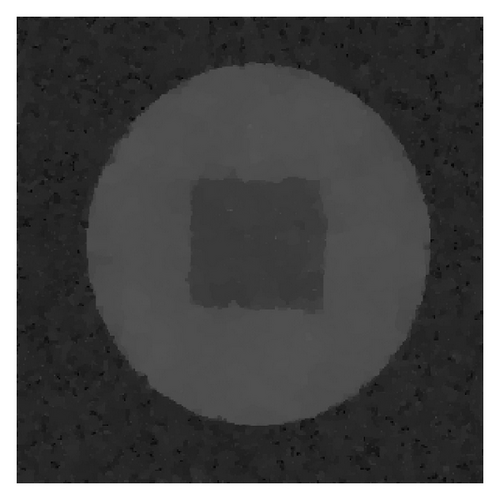

The results are depicted in Figures 2, 3, and 4 for the synthetic image, Figures 5, 6, and 7 for the aerial image, and Figures 8, 9, and 10 for the cameraman image. Our methods do a good job at restoring faint geometrical structures of the images even for low values of L, see for instance the results on the aerial image for L = 1 and L = 4. Our algorithm performs among the best and even outperforms its competitors most of the time both visually and quantitatively as revealed by the PSNR and MAE values. For SO method, the number of iterations necessary to satisfy the stopping rule rapidly increases when L decreases [25]. For AA method, the appropriate parameter λ is necessary.

In the numerical experiments, we can see that for the nontexture image, our methods and AA method work well (see Figure 4); for the texture image, our methods and SO method work well (see Figure 7). The denoising performance results are tabulated in Table 2, where the best PSNR and MAE values are shown in boldface. The PSNR improvement brought by our approach can be quite high particularly for L = 1 (see e.g., Figures 2–4), and the visual resolution is quite respectable. This is an important achievement since in practice L has a small value, usually 1, rarely above 4. This improvement becomes less salient as L increases which is intuitively expected. But even for L = 10, the PSNR of our algorithm can be higher than AA and SO methods (see Table 2).

| PSNR | MAE | ||||||

|---|---|---|---|---|---|---|---|

| L | 1 | 4 | 10 | L | 1 | 4 | 10 |

| The synthetic image (300 × 300) | |||||||

| α-TV | 19.67 | 23.86 | 26.37 | α-TV | 2.45 | 1.27 | 0.92 |

| p-LA | 19.76 | 23.14 | 25.68 | p-LA | 2.51 | 1.43 | 0.96 |

| SO | 4.14 | 15.81 | 21.00 | SO | 23.94 | 6.15 | 2.84 |

| AA | 16.33 | 20.14 | 23.94 | AA | 4.50 | 2.94 | 1.49 |

| The aerial image (512 × 512) | |||||||

| α-TV | 23.25 | 25.82 | 27.92 | α-TV | 12.00 | 8.95 | 7.12 |

| p-LA | 23.47 | 26.16 | 28.18 | p-LA | 11.64 | 8.81 | 6.98 |

| SO | 17.82 | 24.00 | 27.27 | SO | 25.44 | 11.05 | 7.42 |

| AA | 22.34 | 24.20 | 26.04 | AA | 13.35 | 10.30 | 8.13 |

| The cameraman image (256 × 256) | |||||||

| α-TV | 20.81 | 24.22 | 26.29 | α-TV | 14.65 | 8.88 | 7.14 |

| p-LA | 24.10 | 23.14 | 26.03 | p-LA | 13.94 | 9.18 | 7.43 |

| SO | 14.15 | 21.61 | 24.96 | SO | 38.92 | 14.64 | 8.36 |

| AA | 18.81 | 21.91 | 24.25 | AA | 22.14 | 14.25 | 9.97 |

Acknowledgments

The authors would like to express their sincere thanks to the referees for their valuable suggestions for the revision of the paper which contributed greatly to this work. The authors would also like to thank Jalal Fadili for providing them the MATLAB code of his algorithm. They would like to express their deep thanks to the referees for their suggestions while revising the paper, yet again. This work was partially supported by the Fundamental Research Funds for the Central Universities (Grant nos. HIT.NSRIF.2011003 and HIT.NSRIF.2012065), the National Science Foundation of China (Grant no. 11271100), the Aerospace Supported Fund, China, under Contract no. 2011-HT-HGD-06, China Postdoctoral Science Foundation funded project, Grant no. 2012M510933, and also the 985 project of Harbin Institute of Technology.