A Collocation Method Based on the Bernoulli Operational Matrix for Solving High-Order Linear Complex Differential Equations in a Rectangular Domain

Abstract

This paper contributes a new matrix method for the solution of high-order linear complex differential equations with variable coefficients in rectangular domains under the considered initial conditions. On the basis of the presented approach, the matrix forms of the Bernoulli polynomials and their derivatives are constructed, and then by substituting the collocation points into the matrix forms, the fundamental matrix equation is formed. This matrix equation corresponds to a system of linear algebraic equations. By solving this system, the unknown Bernoulli coefficients are determined and thus the approximate solutions are obtained. Also, an error analysis based on the use of the Bernoulli polynomials is provided under several mild conditions. To illustrate the efficiency of our method, some numerical examples are given.

1. Introduction

Complex differential equations are widely used in science and engineering, where many physical phenomena can be modeled by them. For instance, the vibrations of a one-mass system with two degrees of freedom (DOFs) are mostly described using differential equations with a complex dependent variable [1, 2]. Various applications of differential equations with complex dependent variables are presented in [2]. Since a great many such equations cannot be solved explicitly, it is often necessary to resort to approximation and numerical techniques.

In recent years, studies on complex differential equations have developed very rapidly and intensively. Examples include a geometric approach based on meromorphic functions in arbitrary domains [3], a topological description of solutions of some complex differential equations with multivalued coefficients [4], the zero distribution [5] and growth estimates [6] of linear complex differential equations, and rational and polynomial approximations of analytic functions in the complex plane [7, 8].

Since the beginning of 1994, the Laguerre, Chebyshev, Taylor, Legendre, Hermite, and Bessel (matrix and collocation) methods have been used in the works in [9–19] to solve linear differential, integral, and integrodifferential-difference equations and their systems. Also, the Bernoulli matrix method has been used to find the approximate solutions of differential and integrodifferential equations [20–22].

Here, the coefficients $P_k(z)$, the known function $G(z)$, and the unknown function $f(z)$ are holomorphic (or analytic) functions in the domain D = {z ∈ ℂ : z = x + iy, a ≤ x ≤ b, c ≤ y ≤ d; a, b, c, d ∈ ℝ}, and the coefficients $\beta_r$ are appropriate complex constants.

In this paper, by generalizing the methods of [20, 21] from real calculus to complex calculus, we propose a new matrix method based on the Bernoulli operational matrix of differentiation and a uniform collocation scheme. It should be noted that, since an ordinary complex differential equation is equivalent to a system of partial differential equations (see Section 4), methods based on high-order Gauss quadrature rules [23, 24] may not be effective. Such methods have two disadvantages: they need more CPU time, and the associated algebraic problem is ill conditioned. An easy-to-use approach, such as a method based on operational matrices, is therefore needed for solving practical problems.

The rest of this paper is organized as follows. In Section 2, we review some notation from complex calculus and provide several properties of the Bernoulli polynomials. Section 3 is devoted to the proposed matrix method. The error analysis and accuracy of the approximate solution obtained with the Bernoulli polynomials are given in Section 4. Several illustrative examples are provided in Section 5 to confirm the effectiveness of the presented method. Section 6 contains some conclusions and remarks on future work.

2. Review on Complex Calculus and the Bernoulli Polynomials

This section is divided into two subsections. In the first subsection we review some notation from complex calculus, especially the concept of differentiability in the complex plane, in the form of several remarks. We then recall several properties of the Bernoulli polynomials and introduce the operational matrix of differentiation of the Bernoulli polynomials in complex form.

2.1. Review on Complex Calculus

From the definition of the derivative in the complex form, it is immediate that a constant function is differentiable everywhere, with derivative 0, and that the identity function (the function $f(z) = z$) is differentiable everywhere, with derivative 1. Just as in elementary calculus, one can show from the last statement, by repeated applications of the product rule, that, for any positive integer $n$, the function $f(z) = z^n$ is differentiable everywhere, with derivative $n z^{n-1}$. This, in conjunction with the sum and product rules, implies that every polynomial is everywhere differentiable: if $f(z) = c_n z^n + c_{n-1} z^{n-1} + \cdots + c_1 z + c_0$, where $c_0, \ldots, c_n$ are complex constants, then $f'(z) = n c_n z^{n-1} + (n-1) c_{n-1} z^{n-2} + \cdots + c_1$.

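Remark 1. The function $f = u + iv$ is differentiable (in the complex sense) at $z_0$ if and only if $u$ and $v$ are differentiable (in the real sense) at $z_0$ and their first partial derivatives satisfy the relations $\partial u(z_0)/\partial x = \partial v(z_0)/\partial y$, $\partial u(z_0)/\partial y = -\partial v(z_0)/\partial x$. In that case

$$f'(z_0) = \frac{\partial u(z_0)}{\partial x} + i\,\frac{\partial v(z_0)}{\partial x} = \frac{\partial v(z_0)}{\partial y} - i\,\frac{\partial u(z_0)}{\partial y}.$$

Remark 2. The two partial differential equations

$$\frac{\partial u}{\partial x} = \frac{\partial v}{\partial y}, \qquad \frac{\partial u}{\partial y} = -\frac{\partial v}{\partial x}$$

are called the Cauchy-Riemann equations for the pair of functions $u$, $v$.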

Remark 3 (sufficient condition for complex differentiability). Let the complex-valued function f = u + iv be defined in the open subset G of the complex plane ℂ and assume that u and v have first partial derivatives in G. Then f is differentiable at each point where those partial derivatives are continuous and satisfy the Cauchy-Riemann equations.

Definition 4. A complex-valued function that is defined in an open subset G of the complex plane ℂ and differentiable at every point of G is said to be holomorphic (or analytic) in G. The simplest examples are polynomials, which are holomorphic in ℂ, and rational functions, which are holomorphic in the regions where they are defined. Moreover, the elementary functions, such as the exponential function, the logarithm function, the trigonometric and inverse trigonometric functions, and the power functions, all have complex versions that are holomorphic. It should be noted that if the real and imaginary parts of a complex-valued function have continuous first partial derivatives obeying the Cauchy-Riemann equations, then the function is holomorphic.
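The sufficient condition above can be checked numerically. The following is a minimal sketch, not part of the original paper: the helper name `cr_residuals`, the step size `h`, and the two test functions are illustrative assumptions. It estimates the first partial derivatives of $u$ and $v$ by central differences and reports how far they are from satisfying the two Cauchy-Riemann equations.

```python
import cmath

def cr_residuals(f, z0, h=1e-6):
    """Central-difference residuals of the two Cauchy-Riemann equations at z0.

    Hypothetical helper for illustration; h is an assumed step size.
    """
    fx = (f(z0 + h) - f(z0 - h)) / (2 * h)            # df/dx = u_x + i v_x
    fy = (f(z0 + 1j * h) - f(z0 - 1j * h)) / (2 * h)  # df/dy = u_y + i v_y
    ux, vx = fx.real, fx.imag
    uy, vy = fy.real, fy.imag
    # Residuals of u_x = v_y and u_y = -v_x
    return abs(ux - vy), abs(uy + vx)

z0 = 0.3 + 0.4j
holo = cr_residuals(cmath.exp, z0)                    # exp(z) is holomorphic
non_holo = cr_residuals(lambda z: z.conjugate(), z0)  # conj(z) is not

print("exp(z):  ", holo)
print("conj(z): ", non_holo)
```

For the exponential function both residuals should be at the level of the finite-difference error, while for the conjugate function the first equation fails because $u_x = 1$ and $v_y = -1$.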

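Remark 5 (complex partial differential operators). The partial differential operators $\partial/\partial x$ and $\partial/\partial y$ are applied to a complex-valued function $f = u + iv$ in the natural way:

$$\frac{\partial f}{\partial x} = \frac{\partial u}{\partial x} + i\,\frac{\partial v}{\partial x}, \qquad \frac{\partial f}{\partial y} = \frac{\partial u}{\partial y} + i\,\frac{\partial v}{\partial y}.$$

The complex partial differential operators $\partial/\partial z$ and $\partial/\partial \bar{z}$ are defined by

$$\frac{\partial}{\partial z} = \frac{1}{2}\left(\frac{\partial}{\partial x} - i\,\frac{\partial}{\partial y}\right), \qquad \frac{\partial}{\partial \bar{z}} = \frac{1}{2}\left(\frac{\partial}{\partial x} + i\,\frac{\partial}{\partial y}\right).$$

Intuitively one can think of a holomorphic function as a complex-valued function in an open subset of ℂ that depends only on $z$, that is, is independent of $\bar{z}$. We can make this notion precise as follows. Suppose the function $f = u + iv$ is defined and differentiable in an open set. One then has

$$\frac{\partial f}{\partial z} = \frac{1}{2}\left(\frac{\partial u}{\partial x} + \frac{\partial v}{\partial y}\right) + \frac{i}{2}\left(\frac{\partial v}{\partial x} - \frac{\partial u}{\partial y}\right), \qquad \frac{\partial f}{\partial \bar{z}} = \frac{1}{2}\left(\frac{\partial u}{\partial x} - \frac{\partial v}{\partial y}\right) + \frac{i}{2}\left(\frac{\partial v}{\partial x} + \frac{\partial u}{\partial y}\right),$$

so the Cauchy-Riemann equations can be written as $\partial f/\partial \bar{z} = 0$.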

2.2. The Bernoulli Polynomials and Their Operational Matrix

- (i) The operational matrix of differentiation of the Bernoulli polynomials has fewer nonzero elements than that of the shifted Legendre polynomials. For the Bernoulli polynomials, the nonzero elements of this matrix lie only on the subdiagonal (or superdiagonal), whereas for the shifted Legendre polynomials it is a filled strictly lower (or upper) triangular matrix.

- (ii) The Bernoulli polynomials have fewer terms than the shifted Legendre polynomials. For example, $B_6(x)$ (the 6th Bernoulli polynomial) has 5 terms while $P_6(x)$ (the 6th shifted Legendre polynomial) has 7 terms, and this difference grows as the index increases. Hence, approximating an arbitrary function takes less CPU time with the Bernoulli polynomials than with the shifted Legendre polynomials; this is claimed in [25] and demonstrated in its examples on nonlinear optimal control problems.

- (iii) The coefficients of the individual terms in the Bernoulli polynomials are smaller than those in the shifted Legendre polynomials. Since the computational errors in a product are related to the coefficients of the individual terms, the computational errors are smaller when the Bernoulli polynomials are used.
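The three properties above can be checked with exact rational arithmetic. The sketch below is illustrative only and is not taken from the paper: the function names are hypothetical, the Bernoulli polynomials are built from the standard identity $B_n(x) = \sum_{k=0}^{n} \binom{n}{k} b_k x^{n-k}$ with Bernoulli numbers $b_k$ ($b_1 = -1/2$), the shifted Legendre polynomials on [0, 1] from the standard closed form $(-1)^n \sum_k \binom{n}{k}\binom{n+k}{k}(-x)^k$, and the differentiation matrix from $B_n'(x) = n B_{n-1}(x)$.

```python
from fractions import Fraction
from math import comb

def bernoulli_numbers(n_max):
    """Bernoulli numbers b_0..b_n_max (b_1 = -1/2) from the standard recurrence."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        b.append(-sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1))
    return b

def bernoulli_coeffs(n):
    """Monomial coefficients [c_0, ..., c_n] of B_n(x) = sum_j c_j x^j."""
    b = bernoulli_numbers(n)
    return [comb(n, j) * b[n - j] for j in range(n + 1)]

def shifted_legendre_coeffs(n):
    """Monomial coefficients of the shifted Legendre polynomial on [0, 1]."""
    return [Fraction((-1) ** (n + k) * comb(n, k) * comb(n + k, k))
            for k in range(n + 1)]

def differentiation_matrix(N):
    """M such that d/dx [B_0, ..., B_N]^T = M [B_0, ..., B_N]^T (B_n' = n B_{n-1})."""
    M = [[0] * (N + 1) for _ in range(N + 1)]
    for n in range(1, N + 1):
        M[n][n - 1] = n  # only the subdiagonal is nonzero
    return M

def derivative(coeffs):
    """Monomial coefficients of the derivative of a polynomial."""
    return [j * coeffs[j] for j in range(1, len(coeffs))]

b6 = bernoulli_coeffs(6)
p6 = shifted_legendre_coeffs(6)
terms_b6 = sum(1 for c in b6 if c != 0)
terms_p6 = sum(1 for c in p6 if c != 0)
max_b6 = max(abs(c) for c in b6)
max_p6 = max(abs(c) for c in p6)
nonzeros_M = sum(1 for row in differentiation_matrix(6) for v in row if v != 0)

print("terms:", terms_b6, terms_p6)
print("largest |coefficient|:", max_b6, max_p6)
print("nonzero entries of the 7x7 differentiation matrix:", nonzeros_M)
```

Exact fractions are used so that the term counts are not affected by rounding.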

3. Basic Idea

4. Error Analysis and Accuracy of the Solution

This section provides an error bound for the approximate solution obtained with the Bernoulli polynomials. We emphasize that this section is intended to show the efficiency of the Bernoulli polynomial approximation and is independent of the proposed method. We first show how a complex ordinary differential equation (ODE) is equivalent to a system of partial differential equations (PDEs). We then transform the obtained system of PDEs (together with the initial conditions (2)) into a system of two-dimensional Volterra integral equations in a special case. Before presenting the main theorem of this section, we recall some useful corollaries and lemmas. The main theorem can then be stated; it guarantees the convergence of the truncated Bernoulli series to the exact solution under several mild conditions.

Corollary 6. Assume that $g \in H = L^2[0,1]$ is a sufficiently smooth function and is approximated by the Bernoulli series $g(x) = \sum_{n=0}^{\infty} g_n B_n(x)$. Then the coefficients $g_n$, $n = 0, 1, \ldots$, can be calculated from the following relation:

$$g_n = \frac{1}{n!} \int_0^1 g^{(n)}(x)\,dx.$$

Proof. See [21].

In practice, only finitely many terms of the above series are used. Under the assumptions of Corollary 6, we will provide the error of the associated approximation.
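The effect of truncating the series can be illustrated on a simple test function. The sketch below is not from the paper. It assumes the coefficient formula $g_n = \frac{1}{n!}\int_0^1 g^{(n)}(x)\,dx$, which for the hypothetical test function $g(x) = e^x$ gives $g_n = (e - 1)/n!$, and measures the maximum error of the truncated series on a uniform grid. The function names and the grid size are illustrative choices.

```python
from fractions import Fraction
from math import comb, e, exp, factorial

def bernoulli_numbers(n_max):
    """Bernoulli numbers b_0..b_n_max (b_1 = -1/2) from the standard recurrence."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        b.append(-sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1))
    return [float(v) for v in b]

def bernoulli_poly(n, x, b):
    """B_n(x) = sum_k C(n, k) b_k x^(n-k)."""
    return sum(comb(n, k) * b[k] * x ** (n - k) for k in range(n + 1))

def truncated_series(x, N, b):
    """Truncated Bernoulli series of exp(x); assumes g_n = (e - 1)/n!."""
    return sum((e - 1) / factorial(n) * bernoulli_poly(n, x, b)
               for n in range(N + 1))

def max_error(N, samples=101):
    """Maximum absolute error of the truncated series on a uniform grid of [0, 1]."""
    b = bernoulli_numbers(N)
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(exp(x) - truncated_series(x, N, b)) for x in grid)

errors = {N: max_error(N) for N in (2, 4, 6, 8)}
for N, err in errors.items():
    print(f"N = {N}: max error = {err:.3e}")
```

For this test function the error should shrink quickly as N grows, which is the behavior the error bounds in this section describe.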

Lemma 7 (see [20]). Suppose that $g(x)$ is a sufficiently smooth function on the interval [0, 1] and is approximated by the Bernoulli polynomials as in Corollary 6. More precisely, assume that $P_N[g](x)$ is the approximating polynomial of $g(x)$ in terms of the Bernoulli polynomials and $R_N[g](x)$ is the remainder term. Then the associated formulas are as follows:

Proof. See [20].

Lemma 8. Suppose $g(x) \in C^{\infty}[0,1]$ (with bounded derivatives) and $g_N(x)$ is the approximating polynomial obtained using the Bernoulli polynomials. Then the error bound is as follows:

Proof. By using Lemma 7, we have

According to [20] one can write

Now we use formula (1.1.5) in [27] for the even Bernoulli numbers as follows:

Therefore,

In other words , where C is a positive constant independent of N. This completes the proof.

Corollary 9. Assume that $u(x, y) \in H \times H = L^2[0,1] \times L^2[0,1]$ is a sufficiently smooth function and is approximated by the two-variable Bernoulli series $u(x, y) = \sum_{m=0}^{\infty} \sum_{n=0}^{\infty} u_{m,n} B_m(x) B_n(y)$. Then the coefficients $u_{m,n}$, $m, n = 0, 1, \ldots$, can be calculated from the following relation:

Proof. By applying a similar procedure in two variables (which was provided in Corollary 6) we can conclude the desired result.

In [28], a generalization of Lemma 7 can be found. Therefore, we just recall the error of the associated approximation in two dimensional functions.

Lemma 10. Suppose that $u(x, y)$ is a sufficiently smooth function and $u_N(x, y)$ is the approximating polynomial of $u(x, y)$, written as a linear combination of Bernoulli polynomials with the aid of Corollary 9. Then the error bound is as follows:

- (i)

,

- (ii)

.

It should be noted that the second condition is based upon Lemmas 8 and 10.

Theorem 11. Assume that $U$ and $U_0 := U(x, 0)$ are approximated by $U_N$ and $U_{0,N}$, respectively, with the aid of the Bernoulli polynomials in (55), and that a collocation scheme is used to provide the numerical solution of (55). In other words

5. Numerical Examples

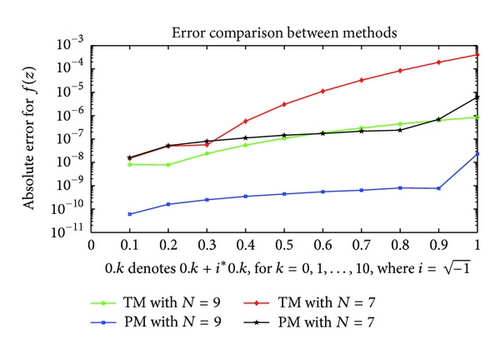

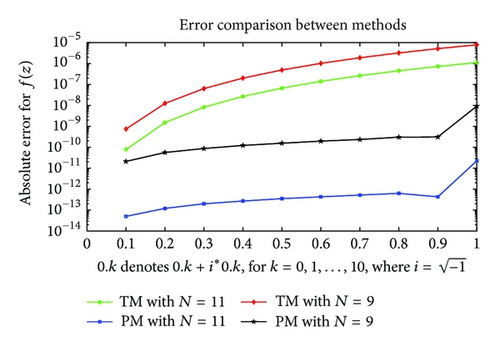

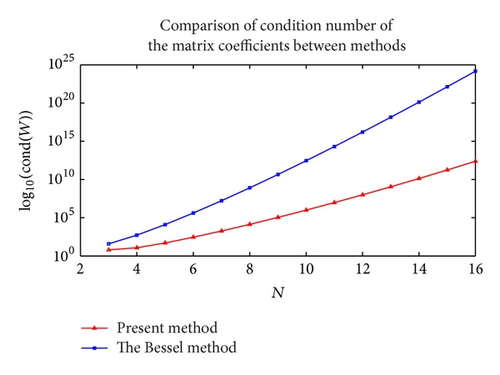

In this section, several numerical examples are given to illustrate the accuracy and effectiveness of the proposed method. All computations were performed with programs written in MATLAB 7.12.0 (R2011a) (The MathWorks Inc., Natick, MA, USA). The tables and figures report the values of the exact solution $f(z)$, the polynomial approximate solution $f_N(z)$, and the absolute error function $e_N(z) = |f(z) - f_N(z)|$ at selected points of the given domains. The first example is a complex differential equation with an exact polynomial solution; the method recovers such polynomial solutions by solving the associated linear algebraic system.
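How a polynomial solution is recovered from a linear algebraic system can be sketched on a small model problem. The code below is an illustration under stated assumptions, not the authors' MATLAB implementation and not one of the paper's examples: the first-order test equation $f'(z) + z f(z) = z^3 + 3z$, $f(0) = 1$ (exact solution $f(z) = z^2 + 1$), the collocation nodes on the diagonal of the unit square, the replacement of the last row by the initial condition, and all function names are hypothetical choices. The derivative of the basis uses $B_n'(z) = n B_{n-1}(z)$.

```python
from fractions import Fraction
from math import comb

def bernoulli_numbers(n_max):
    """Bernoulli numbers b_0..b_n_max (b_1 = -1/2) as floats."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        b.append(-sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1))
    return [float(v) for v in b]

def basis(z, N, b):
    """[B_0(z), ..., B_N(z)] at a complex point z."""
    return [sum(comb(n, k) * b[k] * z ** (n - k) for k in range(n + 1))
            for n in range(N + 1)]

def basis_derivative(z, N, b):
    """[B_0'(z), ..., B_N'(z)] via B_n' = n B_{n-1}."""
    B = basis(z, N, b)
    return [0j] + [n * B[n - 1] for n in range(1, N + 1)]

def solve(A, rhs):
    """Gaussian elimination with partial pivoting for a complex linear system."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def collocate(N, P0, G, f0):
    """Approximate f' + P0(z) f = G(z), f(0) = f0 by sum_n a_n B_n(z)."""
    b = bernoulli_numbers(N)
    # Assumed nodes: N points on the diagonal of the square [0, 1] x [0, 1]
    nodes = [complex(p / N, p / N) for p in range(N)]
    A, rhs = [], []
    for z in nodes:
        B, dB = basis(z, N, b), basis_derivative(z, N, b)
        A.append([dB[n] + P0(z) * B[n] for n in range(N + 1)])
        rhs.append(complex(G(z)))
    # The last row imposes the initial condition f(0) = f0
    A.append([complex(v) for v in basis(0j, N, b)])
    rhs.append(complex(f0))
    coeffs = solve(A, rhs)
    return lambda z: sum(a * Bn for a, Bn in zip(coeffs, basis(z, N, b)))

f_N = collocate(3, P0=lambda z: z, G=lambda z: z ** 3 + 3 * z, f0=1)
z = 0.5 + 0.5j
error = abs(f_N(z) - (z ** 2 + 1))
print("absolute error at z = 0.5 + 0.5i:", error)
```

Because the exact solution of the model problem is a polynomial of degree 2 and N = 3, the computed approximation is expected to agree with it up to rounding error.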

Example 12 (see [26]). As the first example, we consider the first-order complex differential equation with variable coefficients

According to (29), the matrix coefficients W are as follows:

Example 13 (see [26]). As the second example, we consider the following second-order complex differential equation

According to (29), the fundamental matrix equation is

| z = x + iy | Exact solution (Re) | PM for N = 5 | PM for N = 7 | PM for N = 9 |
|---|---|---|---|---|
| 0.0 + 0.0i | 1.000000000000000 | 1.000000000000002 | 0.999999999999999 | 1.000000000000002 |
| 0.1 + 0.1i | 1.099649666829409 | 1.099650127059032 | 1.099649681790901 | 1.099649666809790 |
| 0.2 + 0.2i | 1.197056021355891 | 1.197058065414137 | 1.197056071303251 | 1.197056021305326 |
| 0.3 + 0.3i | 1.289569374044936 | 1.289572683703872 | 1.289569451705194 | 1.289569373971185 |
| 0.4 + 0.4i | 1.374061538887522 | 1.374064757066184 | 1.374061646628469 | 1.374061538795035 |
| 0.5 + 0.5i | 1.446889036584169 | 1.446891795476775 | 1.446889179088898 | 1.446889036484456 |
| 0.6 + 0.6i | 1.503859540558786 | 1.503862872087464 | 1.503859710928599 | 1.503859540462517 |
| 0.7 + 0.7i | 1.540203025431780 | 1.540203451564549 | 1.540203240474244 | 1.540203025363573 |
| 0.8 + 0.8i | 1.550549296807422 | 1.550520218427163 | 1.550549536105736 | 1.550549296761452 |
| 0.9 + 0.9i | 1.528913811884699 | 1.528765905385641 | 1.528913283429636 | 1.528913812578978 |
| 1.0 + 1.0i | 1.468693939915885 | 1.468204121679876 | 1.468688423751699 | 1.468693951050675 |

| z = x + iy | Exact solution (Im) | PM for N = 5 | PM for N = 7 | PM for N = 9 |
|---|---|---|---|---|
| 0.0 + 0.0i | 0 | 0.000000000000000 | −0.000000000000001 | 0.000000000000000 |
| 0.1 + 0.1i | 0.110332988730204 | 0.110330942667805 | 0.110332993217700 | 0.110332988786893 |
| 0.2 + 0.2i | 0.242655268594923 | 0.242645839814175 | 0.242655282953655 | 0.242655268747208 |
| 0.3 + 0.3i | 0.398910553778490 | 0.398893389645474 | 0.398910574081883 | 0.398910554017485 |
| 0.4 + 0.4i | 0.580943900770567 | 0.580922057122650 | 0.580943925558894 | 0.580943901105221 |
| 0.5 + 0.5i | 0.790439083213615 | 0.790412124917929 | 0.790439110267023 | 0.790439083643388 |
| 0.6 + 0.6i | 1.028845666272092 | 1.028807744371502 | 1.028845687924972 | 1.028845666809275 |
| 0.7 + 0.7i | 1.297295111875269 | 1.297248986448220 | 1.297295129424920 | 1.297295112510736 |
| 0.8 + 0.8i | 1.596505340600251 | 1.596503892694281 | 1.596505330955563 | 1.596505341401562 |
| 0.9 + 0.9i | 1.926673303972717 | 1.926900526193919 | 1.926672856270450 | 1.926673303646987 |
| 1.0 + 1.0i | 2.287355287178843 | 2.288259022526098 | 2.287352245460983 | 2.287355267120311 |
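The absolute error function $e_N(z) = |f(z) - f_N(z)|$ combines the real and imaginary parts reported separately in the two tables above. As a small worked example, the sketch below takes the tabulated values in the last row (z = 1 + i) and computes the resulting errors for N = 5, 7, 9. The variable names are illustrative, and the numbers are copied from the tables rather than recomputed.

```python
# Values at z = 1 + i, copied from the last rows of the two tables above
exact = complex(1.468693939915885, 2.287355287178843)
approx = {
    5: complex(1.468204121679876, 2.288259022526098),
    7: complex(1.468688423751699, 2.287352245460983),
    9: complex(1.468693951050675, 2.287355267120311),
}

# e_N(z) = |f(z) - f_N(z)| at z = 1 + i
errors = {N: abs(exact - f_N) for N, f_N in approx.items()}

for N, err in errors.items():
    print(f"N = {N}: e_N(1 + i) = {err:.3e}")
```

At this point the error drops by several orders of magnitude as N increases from 5 to 9.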

Example 14 (see [26]). As the final example, we consider the following second-order complex differential equation

6. Conclusions

High-order linear complex differential equations are usually difficult to solve analytically, so approximate solutions are required. For this reason, a new technique that uses the Bernoulli polynomials to solve such equations numerically is proposed. The method is based on computing the coefficients in the Bernoulli series expansion of the solution of a linear complex differential equation and is valid when the functions $P_k(z)$ and $G(z)$ are defined in the rectangular domain. An interesting feature of the method is that it finds the analytical solution when the equation has an exact solution that is a polynomial of degree N or less. Its advantages include a shorter computation time and a lower operation count, which reduce cumulative truncation errors and improve overall accuracy. In addition, the method can be extended to systems of linear complex equations with variable coefficients, although some modifications are required.

Conflict of Interests

The authors declare that they do not have any conflict of interests in their submitted paper.

Acknowledgments

The authors thank the Editor and both of the reviewers for their constructive comments and suggestions to improve the quality of the paper.