Profile Inferences on Restricted Additive Partially Linear EV Models

Abstract

We consider the problem of testing the parametric component, and of restricted estimation of the nonparametric components, in additive partially linear errors-in-variables (EV) models subject to an additional linear restriction on the parameter. We propose a profile Lagrange multiplier test statistic based on the modified profile least-squares method, together with a two-stage restricted estimator for the nonparametric component. We derive two main results. First, without requiring undersmoothing of the nonparametric components, the proposed test statistic is shown to be asymptotically standard chi-square under the null hypothesis and asymptotically noncentral chi-square under the alternative hypothesis. These results coincide with those derived by Wei and Wang (2012) for their adjusted test statistic; however, our method requires no adjustment and is easier to implement, especially when the covariance of the measurement error is unknown. Second, the proposed two-stage restricted estimator of the nonparametric component is asymptotically normal and has an oracle property in the sense that, although the other component is unknown, the estimator performs as well as if it were known. Simulation studies are carried out to illustrate the finite-sample performance of the proposed methods. The asymptotic distribution of the restricted corrected-profile least-squares estimator, which was not considered by Wei and Wang (2012), is also investigated.

1. Introduction

The rest of this paper is organized as follows. In Section 2, we first review the restricted corrected-profile least-squares estimator of β and study its asymptotic distribution. We then construct the modified profile Lagrange multiplier test statistic and derive its asymptotic distributions under the null and alternative hypotheses. In Section 3, two-stage restricted estimators for the nonparametric components are proposed and their asymptotic distributions are presented. Section 4 reports simulation studies that assess the finite-sample performance of these results, and Section 5 gives the proofs of the main results.

2. Asymptotic Results of the Restricted-Profile Least-Squares Estimator and Modified Profile Lagrange Multiplier Test

2.1. The Restricted-Profile Least-Squares Estimator and Its Asymptotic Property

- (A1)

The density functions of Z1 and Z2 are bounded away from 0 and have bounded continuous second partial derivatives.

- (A2)

Let. The matrix is positive-definite, E(εi∣Xi, Zi1, Zi2) = 0, and .

- (A3)

The bandwidths h1 and h2 are of order n^(−1/5).

- (A4)

The function K(·) is a symmetric density function with compact support and satisfies ∫K(u)du = 1, ∫uK(u)du = 0, ∫u²K(u)du = 1, and ∫u⁴K(u)du < ∞.

- (A5)

EUi = 0 and.
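Condition (A4) asks for a symmetric, compactly supported density kernel whose second moment equals 1. A standard Epanechnikov kernel on [−1, 1] has second moment 1/5, so it does not satisfy this as stated; rescaling its support to [−√5, √5] does. The sketch below is an illustration only: the choice of kernel and the helper names `kernel` and `integrate` are assumptions, not taken from the paper. It checks the moments in (A4) numerically and also evaluates v0 = ∫K²(u)du and μ2 = ∫u²K(u)du, the constants used in Section 3.

```python
import numpy as np

# Assumed kernel for illustration: Epanechnikov rescaled to [-sqrt(5), sqrt(5)],
# which makes its second moment equal to 1, as (A4) requires.
half_width = np.sqrt(5.0)

def kernel(u):
    """Rescaled Epanechnikov kernel K(u) = 3/(4a) * (1 - (u/a)^2) on |u| <= a, a = sqrt(5)."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= half_width,
                    0.75 / half_width * (1.0 - (u / half_width) ** 2), 0.0)

def integrate(values, grid):
    """Trapezoidal rule on the given grid."""
    return float(np.sum((values[1:] + values[:-1]) * np.diff(grid)) / 2.0)

u = np.linspace(-half_width, half_width, 200001)
k = kernel(u)
m0 = integrate(k, u)            # should be 1 (K is a density)
m1 = integrate(u * k, u)        # should be 0 (K is symmetric)
m2 = integrate(u ** 2 * k, u)   # should be 1 under (A4); this is mu2 of Section 3
m4 = integrate(u ** 4 * k, u)   # finite; exact value 3 a^4 / 35 = 15/7
v0 = integrate(k ** 2, u)       # v0 of Section 3; exact value 3 / (5 a)
```

Rescaling the support is one simple way to meet the unit-variance normalization. Any other symmetric compactly supported density can be treated the same way by dividing its argument by its standard deviation.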

Under these assumptions, the following theorem gives the asymptotic distribution of the modified restricted profile least-squares estimator of β.

Theorem 1. Suppose that conditions (A1)–(A5) hold and that εi is homoscedastic with variance σ² and independent of Ui. Then the modified restricted profile least-squares estimator of β is asymptotically normal. Namely,

Remark 2. Theorem 1 implies that, when X is observed exactly, the asymptotic distribution of the modified restricted profile least-squares estimator is the same as that obtained in Theorem 3.1 of Wei and Liu [16].
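To make the structure of a restricted, measurement-error-corrected least-squares estimator concrete, here is a minimal NumPy sketch. It is not the paper's exact estimator, whose defining equations are not reproduced in this section. It assumes that the profiled (partial-residual) design `W_t` and response `Y_t` have already been formed from the error-prone covariate W = X + U, that Σuu is known, and that the restriction is Aβ = b. The correction subtracts nΣuu from W̃′W̃, and the restricted step is the usual projection onto {β : Aβ = b}. The function name `restricted_corrected_ls` is hypothetical.

```python
import numpy as np

def restricted_corrected_ls(W_t, Y_t, Sigma_uu, A, b):
    """Corrected least squares for W = X + U, then restricted to {beta : A beta = b}.

    W_t: n x p profiled design built from the error-prone covariates (assumed given).
    Y_t: length-n profiled response (assumed given).
    """
    n = W_t.shape[0]
    # Subtracting n * Sigma_uu removes the attenuation that U induces in W'W.
    M = W_t.T @ W_t - n * Sigma_uu
    beta_c = np.linalg.solve(M, W_t.T @ Y_t)
    # Restricted estimator: beta_c - M^{-1} A' (A M^{-1} A')^{-1} (A beta_c - b).
    M_inv_At = np.linalg.solve(M, A.T)
    gap = A @ beta_c - b
    beta_r = beta_c - M_inv_At @ np.linalg.solve(A @ M_inv_At, gap)
    return beta_c, beta_r

# Illustration without nonparametric parts, so profiling is the identity map.
rng = np.random.default_rng(0)
n = 20000
beta = np.array([2.0, 3.0])
Sigma_uu = 0.25 * np.eye(2)
X = rng.standard_normal((n, 2))
W = X + rng.multivariate_normal(np.zeros(2), Sigma_uu, size=n)
Y = X @ beta + 0.5 * rng.standard_normal(n)
A = np.array([[1.0, 1.0]])   # same restriction matrix as in Section 4
b = np.array([5.0])
beta_c, beta_r = restricted_corrected_ls(W, Y, Sigma_uu, A, b)
naive = np.linalg.solve(W.T @ W, W.T @ Y)  # ignores U, so it is attenuated
```

In this toy setting the naive estimate shrinks toward zero by roughly the factor 1/(1 + 0.25). The corrected estimate does not, and the restricted estimate satisfies Aβ = b exactly by construction.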

To apply Theorem 1 for inference, we need to estimate ΓX|Z and Λ. A consistent estimator of Σ can be obtained by the plug-in method. Wei and Wang [13] proposed to estimate ΓX|Z and Λ, respectively, by

The following result gives the asymptotic distribution of the linear combination obtained by premultiplying this estimator by B, where B is an s × p matrix with rank(B) = s.

Corollary 3. Suppose that conditions (A1)–(A5) hold and that εi is homoscedastic with variance σ² and independent of Ui. Then, as n → ∞, one has
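Corollary 3's display is not reproduced above. A typical use of such a result, sketched here under the assumption that √n times the centred linear combination is asymptotically N(0, BΣB′) with a consistent plug-in estimate of Σ, is a Wald-type test of H0: Bβ = c. The statistic n(Bβ̂ − c)′(BΣ̂B′)⁻¹(Bβ̂ − c) is then compared with a χ² distribution with s degrees of freedom. The function name `wald_test` and the numbers in the usage line are hypothetical.

```python
import numpy as np
from scipy import stats

def wald_test(beta_hat, Sigma_hat, B, c, n):
    """Wald statistic n (B beta_hat - c)' (B Sigma_hat B')^{-1} (B beta_hat - c) and its p-value.

    Assumes sqrt(n) (B beta_hat - B beta) is asymptotically N(0, B Sigma B'),
    so the statistic is asymptotically chi-square with s = rank(B) degrees of freedom.
    """
    d = B @ beta_hat - c
    V = B @ Sigma_hat @ B.T
    stat = float(n * d @ np.linalg.solve(V, d))
    s = B.shape[0]
    return stat, float(stats.chi2.sf(stat, df=s))

# Hypothetical numbers: testing beta_1 + beta_2 = 5 with n = 200.
stat, pval = wald_test(np.array([2.05, 2.97]), np.eye(2),
                       np.array([[1.0, 1.0]]), np.array([5.0]), n=200)
```

Here Bβ̂ − c = 0.02 and BΣ̂B′ = 2, so the statistic is 200 · 0.02² / 2 = 0.04, far below the 5% critical value of χ²₁ (about 3.84).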

2.2. Modified Profile Lagrange Multiplier Test and Its Asymptotic Properties

The following theorem gives the asymptotic distribution of the modified profile Lagrange multiplier test statistic Tn.

Theorem 4. Suppose that conditions (A1)–(A5) hold. Then

where .
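Theorem 4 says that Tn is asymptotically χ² under H0 and noncentral χ² under the alternative. The noncentrality parameter is the quantity defined in the display that is missing above. Given any value of that parameter, the asymptotic power of a level-α test can be computed directly, as in the sketch below. The function name `asymptotic_power` is hypothetical, and the degrees of freedom are taken to be the number of restrictions (one for A = (1, 1) in Section 4).

```python
from scipy import stats

def asymptotic_power(noncentrality, df, alpha=0.05):
    """P(noncentral chi2_df(noncentrality) > upper-alpha quantile of chi2_df)."""
    crit = stats.chi2.ppf(1.0 - alpha, df)
    if noncentrality == 0:
        # Under H0 the limit is the central chi-square, so the power equals the size alpha.
        return float(stats.chi2.sf(crit, df))
    return float(stats.ncx2.sf(crit, df, noncentrality))
```

For one restriction, a noncentrality of about 7.85 = (1.96 + 0.8416)² gives asymptotic power close to 0.80. This is the usual link between the noncentral χ²₁ and a shifted standard normal.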

Remark 5. Theorem 4 shows that our results coincide with those for the adjusted test statistic derived by Wei and Wang [13], but our proposed test statistic is easier to implement, especially when the covariance matrix Σuu of the measurement error is unknown. From Wei and Wang [13], when Σuu is unknown it is difficult to estimate RSS(H0) and RSS(H1), so their proposed test statistic is inapplicable in this case. Our proposed profile Lagrange multiplier test statistic is therefore more attractive in practice.

3. Two-Stage Restricted Estimator for the Nonparametric Component

From (29), the estimators of f1(·) and f2(·) obtained there are defined only at the observations (Z11, …, Zn1)′ and (Z12, …, Zn2)′, respectively. We next give the two-stage restricted estimator of f1(·), which can be evaluated at any point z1.
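The exact definition of the two-stage restricted estimator is not reproduced in this section. A common construction of this kind, sketched here as an assumption, takes the restricted estimate of β and the first-stage estimate of f2 at the observations. It forms the partial residuals Y − Wβ̂ − f̂2(Z2) and smooths them on Z1 with a local linear smoother, which yields f̂1 at any point z1. The Epanechnikov kernel and the names `local_linear`, `two_stage_f1`, and `W_obs` are illustrative assumptions.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel on [-1, 1] (an illustrative choice)."""
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u ** 2), 0.0)

def local_linear(z0, z, r, h, kernel=epanechnikov):
    """Local linear estimate at z0 of E(r | Z = z0) from the pairs (z_i, r_i)."""
    d = z - z0
    w = kernel(d / h) / h
    s0, s1, s2 = w.sum(), (w * d).sum(), (w * d ** 2).sum()
    # Intercept of the weighted least-squares line r ~ a + b (z - z0).
    return float(((s2 - s1 * d) * w * r).sum() / (s0 * s2 - s1 ** 2))

def two_stage_f1(z1_grid, Z1, Y, W_obs, beta_r, f2_hat_at_obs, h1):
    """Second stage: smooth the partial residuals Y - W_obs beta_r - f2_hat(Z2) on Z1."""
    resid = Y - W_obs @ beta_r - f2_hat_at_obs
    return np.array([local_linear(z0, Z1, resid, h1) for z0 in z1_grid])
```

If f2 and β were known exactly, this would be the oracle smoother of Y − Wβ − f2(Z2) on Z1. The oracle property in Theorem 6 says that plugging in the first-stage estimates costs nothing asymptotically.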

Let p1(z1) denote the density function of Z1, and let v0 = ∫K²(u)du and μ2 = ∫u²K(u)du. The following theorem gives the asymptotic normality of the two-stage restricted estimator.

Theorem 6. Suppose that conditions (A1)–(A5) hold and nh⁵ = O(1). If σ²(z1) = E(ε²∣Z1 = z1) has a continuous derivative, then, as n → ∞, one has

Remark 7. Theorem 6 shows that the two-stage restricted estimator is asymptotically normal and has an oracle property; that is, although the other nonparametric component f2(z2) is unknown, the estimator performs as well as if it were known. The simulation studies in Section 4 support this theoretical result. The two-stage restricted estimator of f2(z2) and its asymptotic distribution can be obtained similarly.
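The display of Theorem 6 is not reproduced above. Assuming it takes the standard local linear form built from the quantities v0, μ2 and p1(z1) defined just before the theorem (an assumption, including the absence of any centering term for f1), the statement and the role of the bandwidth condition would read:

```latex
\sqrt{n h_1}\Big\{\hat f_1(z_1) - f_1(z_1) - \tfrac{1}{2} h_1^{2}\mu_2 f_1''(z_1)\Big\}
  \xrightarrow{\;d\;} N\!\Big(0,\ \frac{v_0\,\sigma^{2}(z_1)}{p_1(z_1)}\Big),
\qquad
h_1 = c\,n^{-1/5} \;\Longrightarrow\; \sqrt{n h_1}\,h_1^{2} = \sqrt{n h_1^{5}} = c^{5/2}.
```

The second relation explains why the bias term must be kept. With the bandwidth order in (A3), √(nh1)·h1² stays bounded and does not vanish, which is what the condition nh⁵ = O(1) allows. Under the oracle property, the same limit holds as for a local linear smoother applied with f2 known.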

4. Simulation

In this section, some simulations are carried out to evaluate the finite sample performance of the testing procedure and the proposed two-stage restricted estimator for nonparametric components.

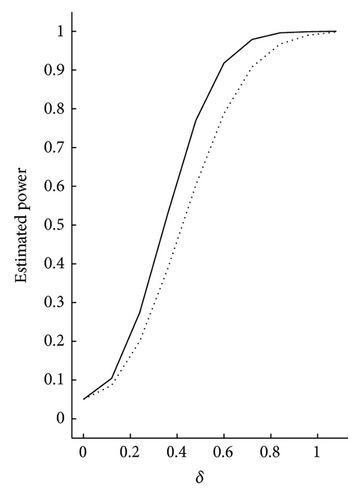

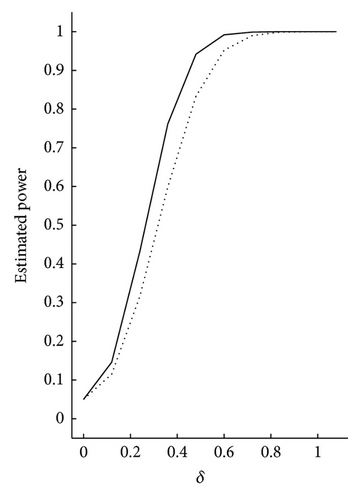

We first study the performance of the proposed testing procedure. Consider the null hypothesis H0 : Aβ = 5 with A = (1, 1) against the alternative H1 : Aβ = 5 + δ, where δ ranges over a grid of nonnegative constants; when δ = 0, the alternative reduces to the null hypothesis. We take the bandwidths h1 = h2 = 0.8 · n^(−1/5), the sample sizes n = 200,300, and . To illustrate the effectiveness of the proposed test statistic, the estimated power curves at significance level α = 0.05 are plotted for and . Each case is replicated 1000 times. The results are given in Figure 1, from which we observe the following.

- (1)

The proposed test statistic is sensitive to departures from the null hypothesis: a slight increase in δ leads to a rapid increase in the estimated power.

- (2)

The measurement error affects the power. For a fixed sample size, increasing the variance of the measurement error decreases the estimated power; for a fixed measurement-error variance, the estimated power increases with the sample size. Overall, the proposed procedure performs well in finite samples and is easy to implement.
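The two numerical ingredients of this study can be sketched as follows. The first is the bandwidth rule h = 0.8 · n^(−1/5). The second is the empirical power, meaning the proportion of the 1000 replications in which Tn exceeds the χ² critical value, together with its Monte Carlo standard error √(p(1 − p)/1000). At p = 0.05 this standard error is about 0.0069, which is useful when reading small differences between power curves. The function names are hypothetical, and the degrees of freedom are taken as 1 because A = (1, 1) imposes one restriction.

```python
import numpy as np
from scipy import stats

def bandwidth(n, c=0.8):
    """Bandwidth h = c * n^(-1/5); c = 0.8 as in the simulation."""
    return c * n ** (-0.2)

def empirical_power(test_stats, df, alpha=0.05):
    """Rejection rate of 'T_n > chi2_df upper-alpha quantile' and its Monte Carlo standard error."""
    crit = stats.chi2.ppf(1.0 - alpha, df)
    p = float(np.mean(np.asarray(test_stats) > crit))
    se = float(np.sqrt(p * (1.0 - p) / len(test_stats)))
    return p, se
```

For example, the rule gives h ≈ 0.277 for n = 200 and h ≈ 0.256 for n = 300.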

| Σuu | Estimator | Statistic | n = 200 | n = 300 | n = 500 |
|---|---|---|---|---|---|
| 0.25I2 | Two-stage | SM | 0.2249 | 0.1887 | 0.1530 |
| | | STD | 0.1152 | 0.0915 | 0.0552 |
| | Benchmark | SM | 0.2239 | 0.1762 | 0.1494 |
| | | STD | 0.0958 | 0.0764 | 0.0533 |
| 0.4I2 | Two-stage | SM | 0.2787 | 0.2298 | 0.1923 |
| | | STD | 0.1245 | 0.1077 | 0.0930 |
| | Benchmark | SM | 0.2784 | 0.2284 | 0.1905 |
| | | STD | 0.1235 | 0.1043 | 0.0916 |
| 0.6I2 | Two-stage | SM | 0.3253 | 0.2609 | 0.2162 |
| | | STD | 0.1728 | 0.1375 | 0.1038 |
| | Benchmark | SM | 0.3200 | 0.2602 | 0.2153 |
| | | STD | 0.1707 | 0.1344 | 0.1008 |

Table 1 shows that, as the sample size n increases, the finite-sample performance of both estimators improves, and the performance of the two-stage restricted estimator is close to that of the benchmark estimator. This is consistent with the oracle property of the two-stage restricted estimator of the nonparametric component established in Theorem 6.
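The comparison in Table 1 can be quantified directly from the SM rows. The sketch below reads smaller SM as better, as the discussion of the table implies. It computes the ratio of the two-stage SM to the benchmark SM in each cell and checks that both estimators improve as n grows. All numbers are copied from Table 1. The ratios stay between 1.00 and about 1.07, and the largest gap occurs at n = 300 with Σuu = 0.25I2.

```python
import numpy as np

# SM values copied from Table 1; rows: Sigma_uu = 0.25 I2, 0.4 I2, 0.6 I2; columns: n = 200, 300, 500.
sm_two_stage = np.array([[0.2249, 0.1887, 0.1530],
                         [0.2787, 0.2298, 0.1923],
                         [0.3253, 0.2609, 0.2162]])
sm_benchmark = np.array([[0.2239, 0.1762, 0.1494],
                         [0.2784, 0.2284, 0.1905],
                         [0.3200, 0.2602, 0.2153]])

# Ratio > 1 means the two-stage estimator is slightly worse than the benchmark.
ratio = sm_two_stage / sm_benchmark
# Both estimators should improve (SM decreases) as n increases along each row.
decreasing_in_n = bool(np.all(np.diff(sm_two_stage, axis=1) < 0)
                       and np.all(np.diff(sm_benchmark, axis=1) < 0))
print(np.round(ratio, 3))
print(decreasing_in_n)
```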

5. Proofs

We first give some lemmas.

Lemma 8. Let (X1, Y1), …, (Xn, Yn) be i.i.d. random vectors, where each Yi is a scalar random variable. Further assume that E|Y|^s < ∞ and sup_x ∫|y|^s p(x, y)dy < ∞, where p(x, y) denotes the joint density of (X, Y). Let K(·) be a bounded positive function with bounded support satisfying a Lipschitz condition. If n^(2δ−1)h → ∞ for some δ < 1 − s^(−1), then

This is Lemma 7.1 of Fan and Huang [17].
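The display of Lemma 8 is not reproduced above. For reference, the uniform convergence rate for which this lemma is usually cited is given below, with K_h(·) = K(·/h)/h. This form is supplied for convenience and is not copied from the paper, so it should be read against Fan and Huang [17].

```latex
\sup_{x}\Big|\frac{1}{n}\sum_{i=1}^{n}\big\{K_h(X_i - x)\,Y_i - E\big[K_h(X_i - x)\,Y_i\big]\big\}\Big|
  = O_P\Big(\Big\{\frac{\log(1/h)}{nh}\Big\}^{1/2}\Big).
```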

Lemma 9. Suppose that assumptions (A1)–(A5) hold. Then the following asymptotic approximations hold uniformly over all the elements of the matrices:

This lemma combines Lemmas 3.1 and 3.2 of Opsomer and Ruppert [1].
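The matrices in Lemma 9 are built from the smoother matrices of bivariate additive backfitting. The sketch below follows the Opsomer and Ruppert construction as it is usually stated. Local linear smoother matrices S1 and S2 are centred as Sk* = (I − 11′/n)Sk, and the backfitting operators are W1 = I − (I − S1*S2*)⁻¹(I − S1*) and W2 = I − (I − S2*S1*)⁻¹(I − S2*). The Epanechnikov kernel and the function names are assumptions made for illustration. The fitted components W1Y and W2Y solve the backfitting equations f1 = S1*(Y − f2) and f2 = S2*(Y − f1).

```python
import numpy as np

def local_linear_smoother_matrix(z, h):
    """n x n matrix whose i-th row holds the local linear weights at z[i] (Epanechnikov kernel)."""
    d = z[None, :] - z[:, None]            # d[i, j] = z_j - z_i
    w = np.where(np.abs(d / h) <= 1.0, 0.75 * (1.0 - (d / h) ** 2), 0.0) / h
    s0 = w.sum(axis=1, keepdims=True)
    s1 = (w * d).sum(axis=1, keepdims=True)
    s2 = (w * d ** 2).sum(axis=1, keepdims=True)
    return w * (s2 - s1 * d) / (s0 * s2 - s1 ** 2)

def backfitting_operators(z1, z2, h1, h2):
    """Centred smoothers S_k* = (I - 11'/n) S_k and backfitting matrices W1, W2."""
    n = len(z1)
    I = np.eye(n)
    C = I - np.ones((n, n)) / n
    S1 = C @ local_linear_smoother_matrix(z1, h1)
    S2 = C @ local_linear_smoother_matrix(z2, h2)
    W1 = I - np.linalg.solve(I - S1 @ S2, I - S1)
    W2 = I - np.linalg.solve(I - S2 @ S1, I - S2)
    return W1, W2
```

In the additive partially linear setting, the profiled covariates and response would then typically be (I − W1 − W2) applied to the observed covariates and to Y. This is the kind of residualization assumed in the restricted least-squares sketch given after Theorem 1.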

Lemma 10. Suppose that assumptions (A1)–(A5) hold. Then as n → ∞, it holds that

The proof of this lemma can be found in the works of Wang et al. [10].

Lemma 11. Suppose that assumptions (A1)–(A5) hold. Then the corrected-profile least-squares estimator of β is asymptotically normal. Namely,

This lemma is Theorem 1 of Liang et al. [11] and is the same as Theorem 3.2 of Wang et al. [10].

Proof of Theorem 1. Let

By Lemma 10, we can obtain

By the first equation of (18), we have

Using the results that Jn − J = oP(1) and , we get

By Slutsky's theorem and Lemma 11, the result follows after some calculations.

Proof of Theorem 4. (1) Using Lemma 10, we know that

Similarly, we can prove that

Under the null hypothesis of testing problem (25) and by applying Lemma 11, we can prove that

By the second equation of (18), we get

Using (43), (31), and Slutsky's theorem, we derive that

By (43)–(45), it is easy to derive that, under the null hypothesis of testing problem (25),

(2) Under the alternative hypothesis and again by applying Lemma 11, we have

Using an argument similar to that for (47), we can prove that

Then, under the alternative hypothesis, we have

Proof of Theorem 6. Using (31) and some simple calculations, we get

Referring to the proof of Theorem 4.1 in Opsomer and Ruppert [1] and using Lemma 9, we obtain that

Using the result that

Using a calculation similar to that for T2, together with the result from Theorem 1 that , we have

Applying Slutsky's theorem again, we obtain the desired result.

Acknowledgments

Wang’s research is supported by the NSF project (ZR2011AQ007) of Shandong Province of China and the NNSF projects (11201499 and 11301309) of China.