Fourth- and Fifth-Order Methods for Solving Nonlinear Systems of Equations: An Application to the Global Positioning System

Abstract

Two iterative methods of order four and five, respectively, are presented for solving nonlinear systems of equations. Numerical comparisons are made with other existing second- and fourth-order schemes to solve the nonlinear system of equations of the Global Positioning System and some academic nonlinear systems.

1. Introduction

The search for solutions of nonlinear systems of equations is an old and difficult problem with wide applications in science and engineering. The best-known method, owing to its simplicity and effectiveness, is Newton's method. Its generalization to systems of equations was proposed by Ostrowski [1], and to Banach spaces by Kantorovič [2]. In the literature, several modifications of classical methods have been made in order to accelerate convergence or to reduce the number of operations and functional evaluations in each step of the iterative process. The variants of Newton's method described by Weerakoon and Fernando [3], Özban [4], and Gerlach [5] have been extended to functions of several variables in [6–9]. In [6, 7], families of third-order variants of Newton's method have been designed by using open and closed quadrature formulas, including the families of methods defined by Frontini and Sormani in [8]. Using the generic interpolatory quadrature formula, a family of methods with order of convergence 2d + 1 is obtained in [9] under certain conditions, where d is the order up to which the partial derivatives of each coordinate function vanish at the solution. Darvishi and Barati [10] improved the method of Frontini and Sormani, obtaining a fourth-order scheme. In addition to multistep methods based on interpolatory quadrature, other schemes have been developed by using different techniques, such as the extension of one-dimensional schemes to several variables (see [11]), Adomian decomposition (see, e.g., [12, 13]), the schemes with super cubic convergence proposed by Darvishi and Barati in [14, 15], and the methods of orders four and five proposed by Cordero et al. in [16]. Another procedure for developing iterative methods for nonlinear systems is to replace the second derivative by some approximation.
In [17], Traub presented a family of multipoint methods based on approximating the second derivative that appears in the iterative formula of Chebyshev's scheme, and, more recently, Babajee et al. [18] designed two Chebyshev-like methods free from second derivatives. Sharma et al. [19] designed a fourth-order scheme by using the weight-function technique. Another well-known acceleration technique is the composition of two iterative methods of orders p1 and p2, respectively, which yields a method of order p1p2 (see [17]). New evaluations of the Jacobian matrix and of the nonlinear function are usually needed in order to increase the order of convergence.

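For a sufficiently differentiable F : D ⊆ ℝn → ℝn, the qth derivative of F at u ∈ ℝn, q ≥ 1, is the q-linear function F(q)(u) : ℝn × ⋯ × ℝn → ℝn. It satisfies

- (1)

$F^{(q)}(u)(v_1, v_2, \dots, v_{q-1}, \cdot) \in \mathcal{L}(\mathbb{R}^n)$,

- (2)

$F^{(q)}(u)(v_{\sigma(1)}, v_{\sigma(2)}, \dots, v_{\sigma(q)}) = F^{(q)}(u)(v_1, v_2, \dots, v_q)$ for every permutation $\sigma$ of $\{1, 2, \dots, q\}$,

and we use the notation

- (a)

$F^{(q)}(u)(v_1, v_2, \dots, v_q) = F^{(q)}(u)\, v_1 \cdots v_q$,

- (b)

$F^{(q)}(u)\, v^{q-1} F^{(p)} v^{p} = F^{(q)}(u)\, F^{(p)}(u)\, v^{q+p-1}$.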

In [7], the concept of computational order of convergence was introduced as follows.

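Definition 1. Let x̄ be a zero of a function F, and suppose that x(k−1), x(k), and x(k+1) are three consecutive iterates close to x̄. Then, the computational order of convergence p can be approximated using the formula

$$p \approx \rho = \frac{\ln\left(\|x^{(k+1)} - \bar{x}\| \,/\, \|x^{(k)} - \bar{x}\|\right)}{\ln\left(\|x^{(k)} - \bar{x}\| \,/\, \|x^{(k-1)} - \bar{x}\|\right)}.$$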
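Definition 1 can be evaluated numerically once the zero x̄ is known. The sketch below also includes the approximated variant (ACOC) used in Section 4, which replaces the unknown errors by differences of consecutive iterates, ρ ≈ ln(‖x(k+1) − x(k)‖/‖x(k) − x(k−1)‖) / ln(‖x(k) − x(k−1)‖/‖x(k−1) − x(k−2)‖). That ACOC formula is the usual one in the literature and is an assumption here, since its definition is not reproduced in this section; the function names are illustrative.

```python
import numpy as np


def coc(iterates, root):
    """Computational order of convergence (Definition 1), from the last three
    iterates x^(k-1), x^(k), x^(k+1) and a known zero `root`."""
    e = [np.linalg.norm(np.atleast_1d(np.asarray(x, dtype=float)) - root)
         for x in iterates[-3:]]
    return np.log(e[2] / e[1]) / np.log(e[1] / e[0])


def acoc(iterates):
    """Approximated COC (assumed standard form): uses differences of the last
    four iterates, so the zero does not need to be known."""
    x = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:]]
    d = [np.linalg.norm(x[i + 1] - x[i]) for i in range(3)]
    return np.log(d[2] / d[1]) / np.log(d[1] / d[0])


# Synthetic quadratically convergent sequence towards the zero 0 (e_{k+1} = e_k^2).
seq = [[1e-2], [1e-4], [1e-8], [1e-16]]
p_coc = coc(seq, np.zeros(1))
p_acoc = acoc(seq)
```

Because ACOC works with differences rather than errors, it drifts slightly from the exact order on short sequences; that is why Section 4 computes it in very high precision.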

Conjecture 2. Consider a multipoint iterative method for solving nonlinear systems of equations that requires d = k1 + k2 functional evaluations per step, where k1 of them are evaluations of the Jacobian matrix and k2 are evaluations of the nonlinear function. We conjecture that the optimal order for this method is $2^{k_1+k_2-1}$ if k1 ≤ k2.
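The bound 2^(k1+k2−1) of Conjecture 2 is simple to evaluate. The helper below (its name is hypothetical) returns the bound when k1 ≤ k2 and None otherwise, since the conjecture is only stated for that case. For instance, Newton's method (one Jacobian and one function evaluation per step) attains its bound of 2, while Traub's third-order scheme, which uses F′(x(k)), F(x(k)), and F(y(k)), stays below its bound of 4.

```python
from typing import Optional


def conjectured_optimal_order(k1: int, k2: int) -> Optional[int]:
    """Bound 2**(k1 + k2 - 1) of Conjecture 2 for a method using k1 Jacobian
    evaluations and k2 evaluations of F per step. The conjecture covers only
    k1 <= k2, so None is returned otherwise."""
    if k1 > k2:
        return None
    return 2 ** (k1 + k2 - 1)


newton_bound = conjectured_optimal_order(1, 1)   # F(x) and F'(x)
traub_bound = conjectured_optimal_order(1, 2)    # F(x), F(y) and F'(x)
```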

In this paper, we propose two new and competitive iterative methods of orders four and five, respectively, that improve other known methods.

The rest of this paper is organized as follows. In Section 2, we introduce the Global Positioning System (GPS), focusing on the way the receiver calculates the user position using the ephemeris data of the satellites. In Section 3, we present our new iterative methods and analyze their convergence order; using the pseudocomposition technique presented in [25], we also prove that, in general, if two methods of orders p and q, with p ≥ q, are combined in the same way as in our fifth-order method, the resulting method has order of convergence p + q. In Section 4, we apply this analysis to solve the nonlinear system of the GPS and several academic nonlinear systems of equations. The new methods are compared with Newton's and Sharma's methods in terms of convergence order, approximated computational order of convergence (ACOC), and the computational and efficiency indices, CI and I, respectively.

2. Basics on Global Positioning System

This section introduces the basic concept of how a GPS receiver determines its position. From the satellite constellation, the equations required for solving the user position form a nonlinear system of equations. In addition, some practical considerations (e.g., the inaccuracy of the user clock) will be included in these equations. These equations are usually solved through linearization and a fixed-point iteration method. The solution obtained is in a Cartesian coordinate system, and the result is then converted into a spherical coordinate system. However, the Earth is not a perfect sphere; therefore, once the user position is estimated, the shape of the Earth must be taken into consideration, and the user position is then translated into the Earth-based coordinate system. In this paper, we focus on solving the nonlinear system of equations of the GPS, giving the results in a Cartesian coordinate system. Further information about GPS can be found in [26].

2.1. Basic GPS Concepts

The position of a point in space can be found by using the distances measured from this point to some known positions in space. We use an example to illustrate this point.

Figure 1 shows a two-dimensional case. To determine the user position U, three satellites S1, S2, and S3 and three distances are required. In two dimensions, the locus of points at a constant distance from a fixed point is a circle. Two satellites and two distances give two possible solutions, because two circles intersect at two points; a third circle is needed to determine the user position uniquely. For similar reasons, four satellites and four distances are needed in the three-dimensional case, where the locus of points equidistant from a fixed point is a sphere. Two spheres intersect in a circle, and this circle intersects a third sphere in two points; to determine which of them is the user position, one more satellite is needed. In GPS, the position of each satellite is known from the ephemeris data it transmits, so by measuring the distances from the receiver to the satellites, the position of the receiver can be determined. In the above discussion, the distance measured from the user to each satellite is assumed to be very accurate, with no bias error. However, the measured distance between the receiver and a satellite has a constant unknown bias, because the user clock usually differs from the GPS clock. To resolve this bias, one more satellite is required; therefore, five satellites are needed to find the user position. If one uses four satellites and distances measured with a bias error, two possible solutions can be obtained, so theoretically the user position cannot be determined. However, one of the solutions is close to the Earth's surface, and the other one is in space. In fact, as we will see in Section 4, in this work we have used four satellites, and sometimes the methods have converged to the solution in space.
Since the user position is usually close to the surface of the Earth, it can be uniquely determined. Therefore, the general statement is that four satellites can be used to determine a user position, even though the measured distances have a bias error. The method for finding the user position discussed in the next subsections is iterative, and the initial position is often selected at the center of the Earth. In the following discussion, four satellites are considered as the minimum number required for finding the user position.
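The two-dimensional argument of Figure 1 can be reproduced in a few lines: intersect the first two circles to obtain two candidate points, then keep the candidate consistent with the third distance. This sketch assumes exact, bias-free distances and uses hypothetical coordinates; the function names are illustrative.

```python
import numpy as np


def circle_intersections(c1, r1, c2, r2):
    """Return the (up to two) intersection points of two circles in the plane."""
    c1, c2 = np.asarray(c1, dtype=float), np.asarray(c2, dtype=float)
    d = np.linalg.norm(c2 - c1)
    if d == 0 or d > r1 + r2 or d < abs(r1 - r2):
        return []
    a = (r1**2 - r2**2 + d**2) / (2 * d)   # distance from c1 to the chord midpoint
    h = np.sqrt(max(r1**2 - a**2, 0.0))    # half-length of the common chord
    mid = c1 + a * (c2 - c1) / d
    perp = np.array([-(c2 - c1)[1], (c2 - c1)[0]]) / d
    return [mid + h * perp, mid - h * perp]


def locate_2d(sats, dists):
    """Two circles give two candidates; the third distance selects the user."""
    cands = circle_intersections(sats[0], dists[0], sats[1], dists[1])
    if not cands:
        raise ValueError("the first two circles do not intersect")
    s3 = np.asarray(sats[2], dtype=float)
    errs = [abs(np.linalg.norm(c - s3) - dists[2]) for c in cands]
    return cands[int(np.argmin(errs))]


# Hypothetical planar "satellites" and a user at (3, 4).
sats2d = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
user = np.array([3.0, 4.0])
dists = [np.linalg.norm(user - np.array(s)) for s in sats2d]
located = locate_2d(sats2d, dists)
```

With only S1 and S2 the candidates are (3, 4) and (3, −4); the distance to S3 rules out the second one.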

2.2. Basic Equations for Finding User Position

Because there are three unknowns and three equations, the values of xu, yu, and zu can be determined from these equations. Theoretically, there should be two sets of solutions, as these are second-order equations. These equations can be solved by linearizing them and applying an iterative approach; their solution is discussed in Section 2.4. In GPS operation, the positions of the satellites are given, since this information can be obtained from the data transmitted by the satellites. The distances from the user (the unknown position) to the satellites must be measured simultaneously at a certain time instant. Each satellite transmits a signal with an associated time reference; by measuring the travel time of the signal from the satellite to the user, the distance between them can be found. The distance measurement is discussed in the next subsection.
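The linearization mentioned above needs the range residuals and their Jacobian. Assuming the standard range equations ρi = ‖Si − u‖ (they are not reproduced in this section), the residual is Fi(u) = ‖Si − u‖ − ρi and the ith Jacobian row is (u − Si)ᵀ/‖Si − u‖. The sketch below uses hypothetical satellite coordinates in meters, reuses the Alcoy coordinates of Section 4.2 only as a convenient receiver position, and checks the analytic Jacobian against central finite differences.

```python
import numpy as np


def range_residual(u, sats, rho):
    """F_i(u) = ||S_i - u|| - rho_i for every satellite S_i (one row of `sats`)."""
    return np.linalg.norm(sats - u, axis=1) - rho


def range_jacobian(u, sats):
    """Row i is the gradient of ||S_i - u|| w.r.t. u: (u - S_i)^T / ||S_i - u||."""
    diff = u - sats
    return diff / np.linalg.norm(diff, axis=1)[:, None]


# Hypothetical satellite positions (meters) and receiver position.
sats = np.array([[15600e3, 7540e3, 20140e3],
                 [18760e3, 2750e3, 18610e3],
                 [17610e3, 14630e3, 13480e3]])
u_true = np.array([4984687.426, -41199.155, 3966605.952])
rho = np.linalg.norm(sats - u_true, axis=1)   # exact ranges, no clock bias

# Central finite differences (step h = 1 m) as a sanity check of the Jacobian.
h = 1.0
J_fd = np.column_stack([
    (range_residual(u_true + h * e, sats, rho)
     - range_residual(u_true - h * e, sats, rho)) / (2 * h)
    for e in np.eye(3)
])
```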

2.3. Measurement of Pseudorange

2.4. Solution of User Position from Pseudoranges

When δυ is lower than a certain predetermined threshold, the iteration stops. Sometimes, the clock bias bu is not included in (23). In this paper, we use as stopping criterion the quantity ‖x(k+1) − x(k)‖ + ‖F(x(k+1))‖, because it is stronger than (23). As can be verified in [27], the iterative method above, which GPS software uses to calculate the receiver position, is Newton's method, a well-known method with second-order convergence. In this work, we improve the GPS software by means of two methods of orders four and five, respectively, that converge to the solution in fewer iterations and with better I or CI than Newton's scheme.
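The linearize-and-iterate procedure described above is Newton's method applied to the pseudorange equations. The sketch below assumes the usual pseudorange model ρi = ‖Si − u‖ + bu with the clock bias bu expressed in meters, starts from the center of the Earth with bu = 0, and uses this paper's stopping criterion with a tolerance suited to double precision instead of 10^−250. The four-satellite geometry (satellites placed symmetrically around the receiver's zenith at an approximate GPS orbit radius), the bias value, and the name gps_newton are hypothetical.

```python
import numpy as np


def gps_newton(sats, rho, x0=None, tol=1e-4, max_iter=50):
    """Newton's method for rho_i = ||S_i - u|| + b, unknowns x = (x_u, y_u, z_u, b).

    Stops when ||x_{k+1} - x_k|| + ||F(x_{k+1})|| < tol.
    """
    sats = np.asarray(sats, dtype=float)
    rho = np.asarray(rho, dtype=float)
    # Default initial estimate: center of the Earth with zero clock bias.
    x = np.zeros(4) if x0 is None else np.asarray(x0, dtype=float)

    def F(v):
        return np.linalg.norm(sats - v[:3], axis=1) + v[3] - rho

    def J(v):
        diff = v[:3] - sats
        dist = np.linalg.norm(diff, axis=1)[:, None]
        return np.hstack([diff / dist, np.ones((len(sats), 1))])

    for k in range(1, max_iter + 1):
        x_new = x - np.linalg.solve(J(x), F(x))   # one Newton step
        if np.linalg.norm(x_new - x) + np.linalg.norm(F(x_new)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical symmetric geometry around the receiver's zenith.
u_true = np.array([4984687.426, -41199.155, 3966605.952])
b_true = 30.0                          # hypothetical clock bias (meters)
up = u_true / np.linalg.norm(u_true)
e1 = np.cross(up, [0.0, 0.0, 1.0])
e1 /= np.linalg.norm(e1)
e2 = np.cross(up, e1)
R = 26.6e6                             # approximate GPS orbit radius (meters)
dirs = [up, up + 0.5 * e1, up - 0.5 * e1, up + 0.5 * e2]
sats = np.array([R * d / np.linalg.norm(d) for d in dirs])
rho = np.linalg.norm(sats - u_true, axis=1) + b_true

x_sol, iters = gps_newton(sats, rho)
```

With four satellites the system also has the exterior space solution discussed in Section 2.1; starting from the Earth's center keeps this well-spread example in the basin of the Earth solution, but other geometries or starting points may not.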

3. Description of the Methods and Convergence Analysis

3.1. A Fourth-Order Method

Theorem 3. Let F : D ⊆ ℝn → ℝn be sufficiently differentiable at each point of an open neighborhood D of x̄, a solution of the nonlinear system F(x) = 0. Suppose that F′(x) is continuous and nonsingular at x̄. Then, the sequence {x(k)}k≥0 obtained by using the iterative expression (24) converges to x̄ with order four. The error equation is

Proof. The Taylor expansions of F(x(k)) and F′(x(k)) around x̄ give

On the other hand, we have that

Analogously, we obtain the expression of . Given that the kth iteration of Traub's scheme is

Besides, the expression of F′(z(k)) is

So,

Then,

Finally, by replacing (28) and (37) in the iterative expression (24), we obtain the error equation
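The proof builds on Traub's scheme. In its usual form, which this sketch assumes, it is a Newton step followed by a second step that reuses the Jacobian at x(k): y(k) = x(k) − F′(x(k))⁻¹F(x(k)), then z(k) = y(k) − F′(x(k))⁻¹F(y(k)), giving order three. The NumPy code below implements only that scheme, not expression (24) of M4; the 2×2 test system and the function names are hypothetical.

```python
import numpy as np


def traub(F, J, x0, tol=1e-12, max_iter=50):
    """Traub's third-order scheme with the Jacobian frozen at x:
        y     = x - J(x)^{-1} F(x)
        x_new = y - J(x)^{-1} F(y)
    Stops when ||x_new - x|| + ||F(x_new)|| < tol."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        Jx = J(x)   # in practice one would LU-factor Jx once and reuse it
        y = x - np.linalg.solve(Jx, F(x))
        x_new = y - np.linalg.solve(Jx, F(y))
        if np.linalg.norm(x_new - x) + np.linalg.norm(F(x_new)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical test system: x1^2 + x2^2 = 4, x1 = x2 (zero at (sqrt 2, sqrt 2)).
def F_demo(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]])


def J_demo(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


x_tr, it_tr = traub(F_demo, J_demo, [2.0, 1.0])
```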

3.2. A Fifth-Order Method

Theorem 4. Let F : D ⊆ ℝn → ℝn be sufficiently differentiable at each point of an open neighborhood D of x̄, a solution of the nonlinear system F(x) = 0. Suppose that F′(x) is continuous and nonsingular at x̄. Then, the sequence {x(k)}k≥0 obtained by using the iterative expression (39) converges to x̄ with order five. The error equation is

3.3. Pseudocomposition

In [25], a technique called pseudocomposition was introduced, which uses a known method as a predictor and Gaussian quadrature as a corrector. The order of convergence of the resulting scheme depends, among other factors, on the order of the last two steps of the predictor. Following this idea, we generalize the procedure used to design method M5.

We can then establish the following result.

Theorem 5. Let F : D ⊆ ℝn → ℝn be sufficiently differentiable at each point of an open neighborhood D of x̄, a solution of the nonlinear system F(x) = 0. Suppose that F′(x) is continuous and nonsingular at x̄. Let y(k) be the kth iterate of an iterative method of order q and z(k) the kth iterate of an iterative method of order p, with p ≥ q. The sequence {x(k)}k≥0 obtained by the iterative expression

Proof. Taylor’s expansions of y(k) and z(k) are

Taylor's expansion of F(z(k)) around x̄ gives

Then, the convergence order of the method that results from this combination of a method of order q with another of order p with p ≥ q is p + q.
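The p + q result can be illustrated numerically in the scalar case. This is an experiment under stated assumptions, not the paper's expression: it takes Newton's method (q = 2) as the predictor y, Traub's step (p = 3) as z, and assumes the corrector x(k+1) = z(k) − F′(y(k))⁻¹F(z(k)), a combination whose leading error term is proportional to the product of the errors of y(k) and z(k). It uses Python's decimal module with 600 digits, a small-scale analogue of the variable-precision arithmetic of Section 4, and the test function f(x) = x² − 2 is hypothetical. If the p + q claim holds for this combination, the ACOC should be close to 5.

```python
from decimal import Decimal, getcontext

getcontext().prec = 600   # enough digits to observe the order before round-off


def f(x):
    return x * x - 2


def df(x):
    return 2 * x


def pseudocomposed_step(x):
    y = x - f(x) / df(x)       # Newton predictor, order q = 2
    z = y - f(y) / df(x)       # Traub step, order p = 3 (reuses f'(x))
    return z - f(z) / df(y)    # assumed corrector: z - f'(y)^{-1} f(z)


xs = [Decimal("1.5")]
for _ in range(4):
    xs.append(pseudocomposed_step(xs[-1]))

# ACOC from differences of consecutive iterates.
d = [abs(xs[i + 1] - xs[i]) for i in range(len(xs) - 1)]
acoc_est = (d[-1] / d[-2]).ln() / (d[-2] / d[-3]).ln()
```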

4. Numerical Results

Numerical computations have been carried out in MATLAB 7.1 using variable-precision arithmetic with 2000 digits of mantissa. The stopping criterion is ‖x(k+1) − x(k)‖ + ‖F(x(k+1))‖ < 10^−250, so we check that the sequence of iterates converges to an approximation of the solution of the nonlinear system. For every method, we count the number of iterations needed to reach the desired tolerance, and we calculate the approximated computational order of convergence ACOC, the efficiency index I, the computational index CI, and an error estimate given by the last values of ‖x(k+1) − x(k)‖ and ‖F(x(k+1))‖.
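The indices can be computed with the usual definitions, assumed here: the efficiency index I = p^(1/d), with d the number of scalar functional evaluations per step, and the computational index CI = p^(1/(d + op)), with op the number of products and quotients per step. As an example, the sketch uses a common cost model for Newton's method in dimension n (an assumption): n + n² scalar evaluations for F and its Jacobian, and (n³ − n)/3 + n² products/quotients for an LU factorization plus two triangular solves. The function names are illustrative; the costs of S, M4, and M5 would be plugged in the same way.

```python
def efficiency_index(p, d):
    """Efficiency index I = p**(1/d), d = scalar functional evaluations per step."""
    return p ** (1.0 / d)


def computational_index(p, d, op):
    """Computational index CI = p**(1/(d + op)), op = products/quotients per step."""
    return p ** (1.0 / (d + op))


def newton_costs(n):
    """Assumed cost model for one Newton step in dimension n."""
    d = n + n * n                     # n components of F plus n^2 Jacobian entries
    op = (n ** 3 - n) / 3 + n * n     # LU factorization plus two triangular solves
    return d, op


d2, op2 = newton_costs(2)
I_newton = efficiency_index(2, d2)
CI_newton = computational_index(2, d2, op2)
```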

4.1. Numerical Results Obtained with Academic Nonlinear Systems

- (a)

,

- (b)

,

- (c)

F(x) = (f1(x), f2(x), …, fn(x)), where x = (x1, x2, …, xn) T and fi : ℝn → ℝ, i = 1,2, …, n such that

When n is odd, the exact zeros of F are and .

Table 1 compares the different numerical methods on the nonlinear systems (a), (b), and (c). As we can see, the approximated computational orders of convergence are the expected ones, and methods M4 and M5 are clearly very competitive in terms of error estimation.

| Function | x(0) | Method | Iter | ACOC | ‖x(k+1) − x(k)‖ | ‖F(x(k+1))‖ |
|---|---|---|---|---|---|---|
| (a) | (2, 2)^T | N | 13 | 2 | 0.163e−338 | 0 |
| | | S | 7 | 4 | 0.962e−270 | 0 |
| | | M4 | 7 | 4 | 0.140e−805 | 0 |
| | | M5 | 7 | 5 | 0.893e−831 | 0 |
| (b) | (−0.1, −0.1)^T | N | 9 | 2 | 0.94e−307 | 0.28e−921 |
| | | S | 5 | 4 | 0.53e−276 | 0.83e−1106 |
| | | M4 | 5 | 4 | 0.98e−300 | 0.32e−1801 |
| | | M5 | 5 | 5 | 0.435e−647 | 0.1e−3941 |
| (c) | (2, 2, …, 2)^T | N | 11 | 2 | 0.535e−488 | 0.286e−976 |
| | | S | 6 | 4 | 0.113e−408 | 0.960e−1636 |
| | | M4 | 6 | 4 | 0.380e−704 | 0 |
| | | M5 | 6 | 5 | 0.122e−1201 | 0 |

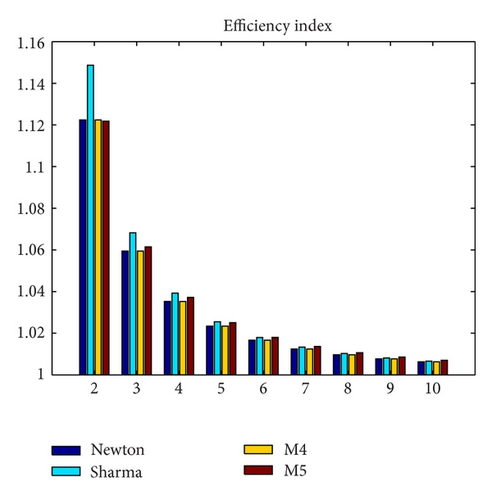

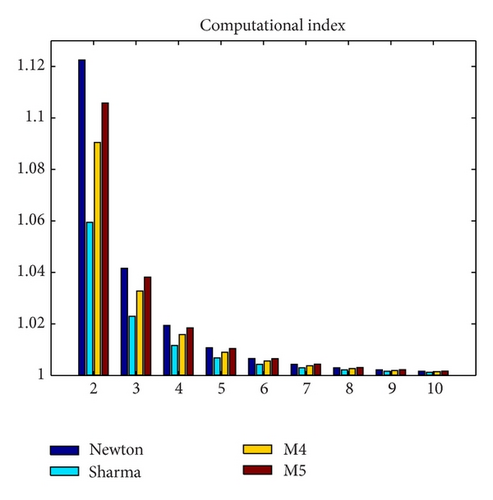

In Figures 2 and 3, we show the efficiency indices I and CI for n = 2, 3, …, 10. It can be concluded that our methods improve on Sharma's scheme in terms of CI, although the classical efficiency index of Sharma's procedure is better for n ≤ 6. M4 and M5 are competitive, obtaining better error estimates than N and S with the same number of iterations as S.

4.2. Numerical Results for the GPS Problem

To test the proposed schemes on the problem of locating the user of a GPS device, we requested data with known geocentric coordinates from the Cartographic Institute of Valencia, which provided:

- (i)

a fixed GPS point with geocentric coordinates x = 4984687.426, y = −41199.155, and z = 3966605.952, located in Alcoy (Alicante, Spain),

- (ii)

observations from that fixed point (file *.09o) for a day,

- (iii)

positions of the satellites for that day: *.09n and *.sp3 files,

- (iv)

description of RINEX format (*.09o file): http://www.igs.org/components/formats.html

- (v)

description of the ephemeris file and satellite positions sp3: http://igscb.jpl.nasa.gov/igscb/data/format/sp3c.txt

- (vi)

link to other libraries for analysis calculations: http://www.ngs.noaa.gov/gps-toolbox/exist.htm.

With these data, we obtain the positions of the visible satellites at the instant corresponding to the provided data. With these coordinates, we calculate the approximate pseudoranges for every satellite, and we then build the nonlinear system of equations of the GPS (18) using four of the satellites, on which we test the iterative methods of Newton, Sharma, M4, and M5.

Table 2 compares the iterative methods N, S, M4, and M5 on the nonlinear system of the GPS. We recall that the coordinates of the center of the Earth together with bu = 0, that is, x(0) = (0, 0, 0, 0)^T, are usually used as the initial estimate; nevertheless, we have also tested the methods with other initial conditions. We denote by x* ≈ (4984687.426, −41199.155, 3966605.952, 0.116e−8)^T the Earth solution and by xs ≈ (−39720114.893, −16748760.539, −23938190.113, −0.159)^T the exterior space solution.

| Method | x(0) | Iter | ACOC | ‖x(k+1) − x(k)‖ | ‖F(x(k+1))‖ | Solution |
|---|---|---|---|---|---|---|
| N | (0, 0, 0, 0)^T | 12 | 2 | 0.6e−374 | 0.830e−739 | x* |
| S | | 7 | 4 | 0.173e−767 | 0.2e−1991 | x* |
| M4 | | 7 | 4 | 0.823e−512 | 0.1e−1991 | x* |
| M5 | | 7 | 5 | 0.222e−814 | 0.3e−1991 | xs |
| N | … | 15 | 2 | 0.421e−317 | 0.537e−627 | xs |
| S | | 8 | 4 | 0.739e−799 | 0.1e−1991 | x* |
| M4 | | 7 | 4 | 0.191e−735 | 0 | x* |
| M5 | | 8 | 5 | 0.104e−563 | 0.1e−1991 | xs |
| N | … | 12 | 2 | 0.371e−381 | 0.317e−753 | x* |
| S | | 7 | 4 | 0.138e−784 | 0.3e−1991 | x* |
| M4 | | 7 | 4 | 0.704e−520 | 0 | x* |
| M5 | | 7 | 4.9968 | 0.510e−692 | 0.1e−1991 | xs |

As we can see, for this particular system of equations, Newton's method does not converge to the user position from every initial estimate, and M5 converges to the exterior space solution xs in all the cases tested. M4, like Sharma's method, always reaches the user position x* and is a good method in all respects, very competitive with known methods.

5. Conclusions

In this paper, we have explored in depth an emerging line of investigation: the improvement of GPS receiver software. Specifically, GPS receivers currently use Newton's method to solve the nonlinear system (18) and calculate their exact position from the information in the signals received from the GPS satellite constellation. We propose two different combinations of Newton's and Traub's methods, obtaining two methods of fourth (M4) and fifth (M5) order. Using the idea presented in [25], called pseudocomposition, we prove that combining two methods of orders p and q, with p ≥ q, in a particular way yields a scheme of order p + q. We have compared the different methods numerically and conclude that M4 and M5 are very competitive in terms of error estimation.

Acknowledgments

This research was supported by Ministerio de Ciencia y Tecnología MTM2011-28636-C02-02 and FONDOCYT 2011-1-B1-33 República Dominicana. The authors would also like to thank the work of the anonymous referee.