On the Low-Rank Approximation Arising in the Generalized Karhunen-Loeve Transform

Abstract

We consider the low-rank approximation problem arising in the generalized Karhunen-Loeve transform. A sufficient condition for the existence of a solution is derived, and the analytical expression of the solution is given. A numerical algorithm is proposed to compute the solution. The new algorithm is illustrated by numerical experiments.

1. Introduction

Throughout this paper, we use $\mathbb{R}^{m\times n}$ to denote the set of $m \times n$ real matrices. We use $A^T$ and $A^+$ to denote the transpose and the Moore-Penrose generalized inverse of the matrix $A$, respectively. The symbol $\mathcal{O}^{n\times n}$ stands for the set of all $n \times n$ orthogonal matrices. The symbols $\operatorname{rank}(A)$ and $\|A\|_F$ stand for the rank and the Frobenius norm of the matrix $A$, respectively. For $a = (a_i) \in \mathbb{R}^n$, the symbol $\|a\|$ stands for the $\ell_2$-norm of the vector $a$, that is, $\|a\| = \big(\sum_{i=1}^{n} a_i^2\big)^{1/2}$. For a symmetric positive semidefinite matrix $A$, the symbol $A^{1/2}$ stands for the square root of $A$, that is, $(A^{1/2})^2 = A$. For the random vector $x = (x_i) \in \mathbb{R}^n$, we use $E\{x_i\}$ to stand for the expected value of the $i$th entry $x_i$, and we use $E\{xx^T\} = (e_{ij})_{n\times n}$ to stand for the covariance matrix of the random vector $x$, where $e_{ij} = E[(x_i - E\{x_i\})(x_j - E\{x_j\})]$, $i, j = 1, 2, \dots, n$.
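To make this notation concrete, the following minimal sketch (assuming NumPy is available) replaces the expectations in $e_{ij}$ by sample means, an assumption made only for illustration since the paper works with exact expectations, and computes the square root $A^{1/2}$ of a symmetric positive semidefinite matrix from its eigendecomposition $A = V\,\mathrm{diag}(w)\,V^T$. The helper names `sample_covariance` and `psd_sqrt` are hypothetical.

```python
import numpy as np

def sample_covariance(samples):
    """Sample analogue of (e_ij): samples is an N x n array whose rows are
    realizations of the random vector x; expectations are replaced by means."""
    centered = samples - samples.mean(axis=0)
    return centered.T @ centered / samples.shape[0]

def psd_sqrt(S):
    """Symmetric square root of a symmetric positive semidefinite matrix S,
    i.e. a symmetric R with R @ R equal to S up to rounding."""
    w, V = np.linalg.eigh(S)
    w = np.clip(w, 0.0, None)          # drop tiny negative eigenvalues caused by rounding
    return (V * np.sqrt(w)) @ V.T      # V diag(sqrt(w)) V^T

rng = np.random.default_rng(1)
samples = rng.standard_normal((500, 4))
C = sample_covariance(samples)
R = psd_sqrt(C)
print(np.allclose(R @ R, C))  # True
```

The eigendecomposition route uses `np.linalg.eigh`, which exploits symmetry; the matrix it produces is the unique symmetric positive semidefinite square root.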

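Problem 1. Given two matrices $A \in \mathbb{R}^{m\times n}$, $B \in \mathbb{R}^{p\times n}$ and an integer $d$ with $1 \le d < \min\{m, p\}$, find a matrix $\hat{X} \in \mathbb{R}^{m\times p}$ of rank $d$ such that

$$\|A - \hat{X}B\|_F = \min_{X \in \mathbb{R}^{m\times p}} \|A - XB\|_F.$$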

In the last few years there has been steadily increasing interest in developing the theory and numerical methods for low-rank approximations of a matrix, owing to their wide applications. A well-known method for low-rank approximation is the singular value decomposition (SVD) [5, 6]. When the desired rank is relatively low and the matrix is large and sparse, a complete SVD becomes too expensive. Less expensive alternatives are available, for example, the Lanczos bidiagonalization process [7] and the Monte Carlo algorithm [8]. To speed up the computation of the SVD, random sampling has been employed in [9]. Recently, Ye [10] proposed the generalized low rank approximations of matrices (GLRAM) method, which has been shown to require less computational time than traditional SVD-based methods in practical applications. The GLRAM method was later revisited and extended by Liu et al. [11] and Liang and Shi [12]. In some applications, important parts of the data matrix need to be emphasized and unimportant parts deemphasized, so weighted low-rank approximations have been considered by many authors, and numerical methods such as a Newton-like algorithm [13], the left versus right representations method [14], and an unconstrained optimization method [15] have been proposed. Recently, using the hierarchical identification principle [16], which regards the known matrix as the system parameter matrix to be identified, Ding et al. and Xie et al. presented gradient-based iterative algorithms [16–21] and least-squares-based iterative algorithms [22, 23] for solving matrix equations; these are innovative and computationally efficient numerical algorithms.

In this paper, we develop a new method for solving the low-rank approximation Problem 1 that avoids the restrictions of the SVD method in [1]: it does not require B to be square and nonsingular, and it does not require computing the inverse of B. We first transform Problem 1 into the problem of finding a fixed-rank solution of a matrix equation and then solve it using the generalized singular value decomposition (GSVD). On this basis, we derive a sufficient condition for the existence of a solution of Problem 1 and give an analytical expression of the solution. A numerical algorithm is proposed to compute the solution, and it is illustrated by two numerical examples: the first is an artificial example showing that the new algorithm can solve Problem 1, and the second is a simulation showing that the new algorithm can be used for image compression.

2. Main Results

In this section, we give a sufficient condition for the existence of a solution of Problem 1 and an analytical expression for the solution, by transforming Problem 1 into the problem of finding a fixed-rank solution of a matrix equation. Finally, we establish an algorithm for solving Problem 1.

Lemma 2. A matrix $\hat{X} \in \mathbb{R}^{m\times p}$ is a solution of Problem 1 if and only if it is a solution of rank $d$ of the following matrix equation:

$$XBB^T = AB^T. \quad (12)$$

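Proof. It is easy to verify that a matrix $\hat{X}$ is a solution of Problem 1 if and only if $\hat{X}$ satisfies the following two equalities simultaneously:

$$\operatorname{rank}(\hat{X}) = d, \qquad \|A - \hat{X}B\|_F = \min_{X \in \mathbb{R}^{m\times p}} \|A - XB\|_F. \quad (13)$$

Since the normal equation of the least squares problem (13) is $XBB^T = AB^T$, and a matrix attains the minimum in (13) if and only if it satisfies this normal equation, $\hat{X}$ is a solution of Problem 1 if and only if it is a solution of rank $d$ of (12).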
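The fact that a matrix minimizes the least squares objective in (13) exactly when it satisfies the normal equation can be checked with a standard argument (not reproduced from the paper). Fix any $X \in \mathbb{R}^{m\times p}$, write $R = A - XB$, and perturb $X$ by an arbitrary $E \in \mathbb{R}^{m\times p}$:

$$\|A - (X+E)B\|_F^2 = \|R - EB\|_F^2 = \|R\|_F^2 - 2\,\operatorname{tr}\!\big(E^T R B^T\big) + \|EB\|_F^2.$$

If $RB^T = 0$, that is, $XBB^T = AB^T$, the middle term vanishes for every $E$, so $\|A - (X+E)B\|_F \ge \|A - XB\|_F$ and $X$ is a minimizer. Conversely, if $RB^T \ne 0$, taking $E = tRB^T$ with a small $t > 0$ gives

$$\|A - (X+E)B\|_F^2 = \|R\|_F^2 - 2t\,\|RB^T\|_F^2 + t^2\,\|RB^TB\|_F^2 < \|R\|_F^2,$$

so $X$ is not a minimizer.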

Remark 3. By Lemma 2, Problem 1 is equivalent to finding a solution of rank $d$ of the matrix equation (12), $XBB^T = AB^T$; hence we can solve Problem 1 by finding a fixed-rank solution of this equation.
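A small numerical illustration of Lemma 2 and Remark 3 may help. The sketch below is not the GSVD-based expression of Theorem 4. Instead, it uses the textbook general solution of a consistent equation $XM = N$, namely $X = NM^+ + Z(I - MM^+)$ with arbitrary $Z$. With $M = BB^T$ and $N = AB^T$, this simplifies to $X = AB^+ + Z(I - BB^+)$. The dimensions, random data, and tolerances are hypothetical choices, and NumPy is assumed. The example shows that every such $X$ attains the same least squares residual, yet different solutions can have different ranks. This is why the rank requirement in Problem 1 is a genuine extra condition.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, p, r = 6, 10, 5, 3

# Hypothetical data: A is m x n; B is p x n, non-square and of rank r < p,
# so neither B nor B @ B.T can be inverted.
A = rng.standard_normal((m, n))
B = rng.standard_normal((p, r)) @ rng.standard_normal((r, n))

TOL = 1e-10                                  # relative cutoff for tiny singular values
B_pinv = np.linalg.pinv(B, rcond=TOL)        # Moore-Penrose inverse B^+, n x p
X0 = A @ B_pinv                              # minimum-norm solution of X B B^T = A B^T
P = np.eye(p) - B @ B_pinv                   # I - B B^+, projector onto range(B)^perp

def residual(X):
    """Least squares objective ||A - X B||_F."""
    return np.linalg.norm(A - X @ B, "fro")

def normal_eq_gap(X):
    """||X B B^T - A B^T||_F; zero exactly when X solves the normal equation."""
    return np.linalg.norm(X @ B @ B.T - A @ B.T, "fro")

# Any X0 + Z (I - B B^+) is also a solution, for an arbitrary m x p matrix Z.
Z = rng.standard_normal((m, p))
X1 = X0 + Z @ P

# Cross-check X0 with a generic least-squares solver applied to B^T X^T = A^T.
X_ls = np.linalg.lstsq(B.T, A.T, rcond=TOL)[0].T

for name, X in [("X0", X0), ("X1", X1), ("X_ls", X_ls)]:
    rank = np.linalg.matrix_rank(X, tol=1e-8)
    print(f"{name}: residual={residual(X):.6f}  gap={normal_eq_gap(X):.1e}  rank={rank}")
```

For generic data, `X0` and `X_ls` coincide and have rank `r`. In contrast, `X1` attains the same residual with rank `min(m, p)`. The explicit tolerances are passed so that rank decisions do not hinge on rounding-level singular values.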

Theorem 4. If

Remark 5. In contrast with (11), the solution expression (35) does not require the matrix $B$ to be square and nonsingular, and it does not require computing the inverse of $B$.

Based on Theorem 4, we can establish an algorithm for finding the solution of Problem 1.

3. Numerical Experiments

In this section, we first use a simple artificial example to show that Algorithm 6 can solve Problem 1, and then use a simulation to show that Algorithm 6 can be used for image compression. The experiments were run in MATLAB 7.6 on a 64-bit Intel Pentium Xeon 2.66 GHz machine with machine precision $\epsilon_{\text{mach}} \approx 2.0 \times 10^{-16}$.

Example 7. Consider Problem 1 with

Example 7 shows that Algorithm 6 can solve Problem 1. In contrast, the SVD method in [1] cannot be applied to Example 7, because B is not a square matrix.

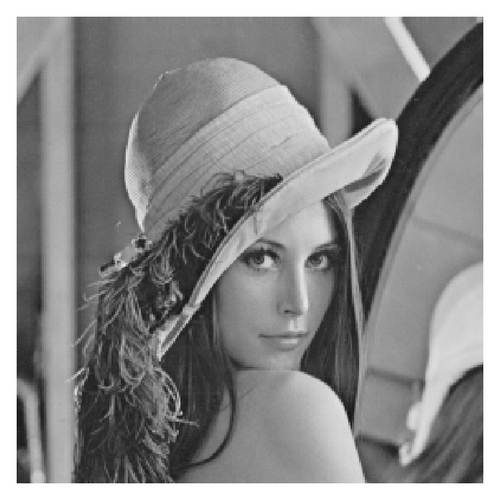

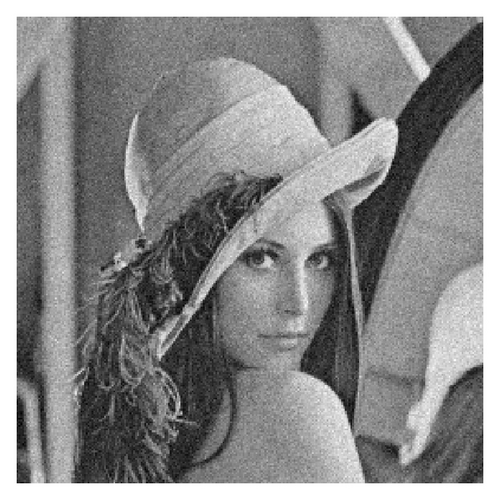

Example 8. We use the generalized Karhunen-Loeve transform, based on Algorithm 6 and on the SVD method in [1], respectively, to perform image compression. Figure 1(a) is the test image, which has 256 × 256 pixels with 256 levels per pixel. We divide it into 32 × 32 blocks, each of 8 × 8 pixels, and consider the value of the image and the value of a Gaussian noise (generated by the MATLAB function imnoise) at the (i, j)th pixel in the (k, l)th block (i, j = 0, 1, 2, …, 7; k, l = 0, 1, 2, …, 31). For convenience, let a = i + 8j and p = k + 32l, so that the (i, j)th pixel in the (k, l)th block is referred to as the ath pixel in the pth block (a = 0, 1, 2, …, 63; p = 0, 1, …, 1023), and the image and noise values are indexed by (a, p) accordingly.
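The block indexing a = i + 8j, p = k + 32l can be made concrete with a short sketch, assuming NumPy. The sketch stacks the 1024 blocks of a 256 × 256 image as the columns of a 64 × 1024 matrix, so that entry (a, p) holds the ath pixel of the pth block. It assumes that pixel (i, j) of block (k, l) is `img[8k + i, 8l + j]`, with the row index first. The paper does not state this orientation, and the helper names `image_to_block_vectors` and `block_vectors_to_image` are hypothetical.

```python
import numpy as np

def image_to_block_vectors(img, b=8):
    """Stack the b x b blocks of a square image as columns of a (b*b) x (N/b)**2 matrix.

    Column p = k + (N/b)*l holds block (k, l); row a = i + b*j holds pixel (i, j)
    of that block. Assumes pixel (i, j) of block (k, l) is img[b*k + i, b*l + j].
    """
    n = img.shape[0]
    if img.shape != (n, n) or n % b != 0:
        raise ValueError("expected a square image whose side is a multiple of b")
    nb = n // b
    t = img.reshape(nb, b, nb, b)             # t[k, i, l, j] = img[b*k + i, b*l + j]
    # Reorder axes to (j, i, l, k): a C-order reshape then gives row j*b + i, column l*nb + k.
    return t.transpose(3, 1, 2, 0).reshape(b * b, nb * nb)

def block_vectors_to_image(M, b=8):
    """Inverse of image_to_block_vectors: reassemble the image from its block vectors."""
    nb = int(round(np.sqrt(M.shape[1])))
    t = M.reshape(b, b, nb, nb)               # t[j, i, l, k]
    return t.transpose(3, 1, 2, 0).reshape(nb * b, nb * b)

img = np.arange(256 * 256, dtype=float).reshape(256, 256)   # stand-in for the test image
M = image_to_block_vectors(img)
print(M.shape)  # (64, 1024)
```

The same mapping can be applied to the noisy image to obtain the corresponding noisy block vectors. The round trip `block_vectors_to_image(image_to_block_vectors(img))` returns `img` unchanged.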

The test image is processed block by block, so the blocked image space can be taken to be the 64-dimensional real vector space $\mathbb{R}^{64}$. The pth block of the original image is represented by the pth vector:

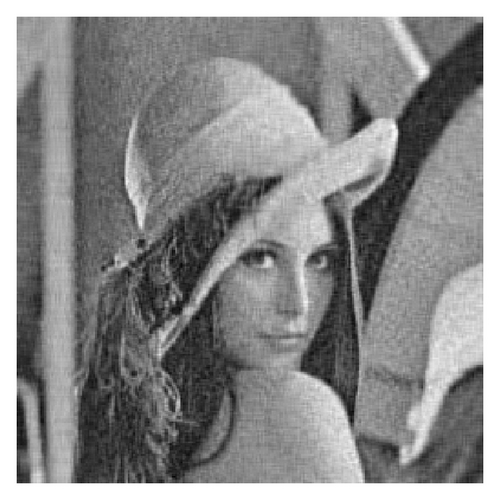

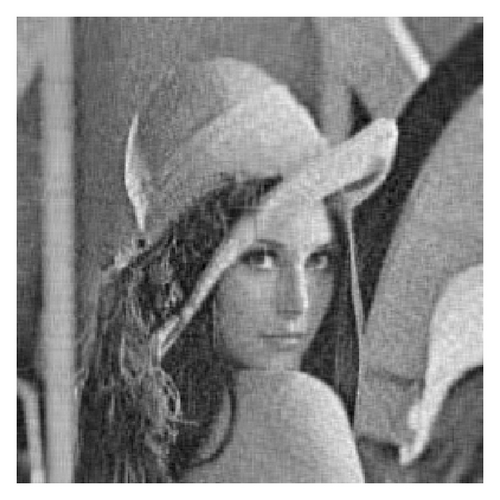

Figure 2 illustrates that Algorithm 6 can be used for image compression. It is difficult to see any difference between Figure 2 and Figure 3, which shows the image compressed by the SVD method in [1]. However, Table 1 shows that the execution time of Algorithm 6 is less than that of the SVD method at the same rank. Thus, in this experiment, our algorithm outperforms the SVD method in execution time.

4. Conclusion

This paper studies the low-rank approximation Problem 1 arising in the generalized Karhunen-Loeve transform. We first transform Problem 1 into the problem of finding a fixed-rank solution of a matrix equation and then solve it using the generalized singular value decomposition (GSVD). On this basis, we derive a sufficient condition for the existence of a solution and give the analytical expression of the solution. Finally, numerical experiments show that the new algorithm is feasible and effective.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (11101100; 11226323; 11261014; and 11171205), the Natural Science Foundation of Guangxi Province (2012GXNSFBA053006; 2013GXNSFBA019009; and 2011GXNSFA018138), the Key Project of Scientific Research Innovation Foundation of Shanghai Municipal Education Commission (13ZZ080), the Natural Science Foundation of Shanghai (11ZR1412500), the Ph.D. Programs Foundation of Ministry of Education of China (20093108110001), the Discipline Project at the corresponding level of Shanghai (A. 13-0101-12-005), and Shanghai Leading Academic Discipline Project (J50101).