State Estimation for Neural Networks with Leakage Delay and Time-Varying Delays

Abstract

The state estimation problem is investigated for neural networks with leakage delay, time-varying delay, and general activation functions. By constructing appropriate Lyapunov-Krasovskii functionals and employing matrix inequality techniques, a delay-dependent condition in terms of linear matrix inequalities (LMIs) is developed to estimate the neuron state from observed output measurements such that the error-state system is globally asymptotically stable. An example is given to show the effectiveness of the proposed criterion.

1. Introduction

In the past few years, neural networks have been extensively studied and successfully applied in many areas such as combinatorial optimization, signal processing, associative memory, affine invariant matching, and pattern recognition [1]. In such applications, stability analysis is a necessary step in the practical design of neural networks [2]. In hardware implementations, time delays occur because of the finite switching speed of the amplifiers and the communication time. Time delay may lead to complex dynamic behaviors such as oscillation, divergence, chaos, instability, or other poor performance of the neural networks [3]. Therefore, the stability analysis of neural networks with time delay has attracted many researchers, and a number of remarkable results have been reported in the open literature; for example, see [2–5] and references therein.

When a neural network is designed to handle complex nonlinear problems, a great number of neurons with tremendous connections are often required. In such relatively large-scale neural networks, it may be very difficult and expensive (or even impossible) to obtain the complete information of the neuron states. On the other hand, in many practical applications, one needs to know the neuron states and then use them to achieve certain objectives. For instance, a recurrent neural network was presented in [6] to model an unknown nonlinear system, and the neuron states were utilized to implement a control law. Therefore, it is of great importance to study the state estimation problem of neural networks.

Recently, some results related to the state estimation problem for neural networks have been reported; for example, see [7–38] and references therein. In [7], the authors first studied the state estimation problem of delayed neural networks, where a delay-independent condition was obtained in terms of a linear matrix inequality (LMI). In [8], the authors proposed a free-weighting matrix approach to the state estimation problem for neural networks with time-varying delay. By using the Newton-Leibniz formula, some slack variables were introduced to derive a less conservative condition. In [11], attention was focused on the design of a state estimator for the neuron states, using the delay-fractioning technique to reduce the possible conservatism. The authors in [13] first investigated the robust state estimation problem of delayed neural networks with parameter uncertainties. Delay-dependent conditions were presented to guarantee the global asymptotic stability of the error system. In [16], a further result on the design of a state estimator for a class of neural networks of neutral type was presented, and a delay-dependent LMI criterion for the existence of the estimator was derived. In [20], the state estimation problem for discrete neural networks with partially unknown transition probabilities and time-varying delays was discussed. By utilizing a novel Lyapunov functional integrating both lower and upper time-delay bounds and some new techniques, delay-range-dependent sufficient conditions were established under which the estimation error dynamics are stochastically stable. In [22], the authors investigated the state estimation problem for neural networks with discrete time-varying delay and distributed time-varying delay; a delay-interval-dependent condition was developed to estimate the neuron state from observed output measurements such that the error-state system is globally asymptotically stable.
In [25], leakage delay in the leakage term was used to destabilize the neuron states. By constructing a Lyapunov-Krasovskii functional that contains a triple-integral term, an improved delay-dependent stability criterion was derived in terms of LMIs. In [27], the state estimation problem for a class of discrete-time stochastic neural networks with random delays was considered. By employing a Lyapunov-Krasovskii functional, sufficient delay-distribution-dependent conditions were established in terms of LMIs that guarantee the existence of the state estimator. In [33], the authors discussed the state estimation problem for Takagi-Sugeno (T-S) fuzzy Hopfield neural networks via strict output passivation of the error system. In [36–38], the authors investigated the distributed state estimation problem for sensor networks and presented several new sufficient conditions to guarantee the convergence of the estimation error systems. To the best of the authors’ knowledge, there are no results on the problem of state estimation for neural networks with leakage delay and time-varying delays. As pointed out in [39], neural networks with leakage delay are an important class of neural networks; time delay in the leakage term also has a great impact on the dynamics of neural networks, since time delay in the stabilizing negative feedback term has a tendency to destabilize a system. Therefore, it is necessary to investigate the state estimation problem for neural networks with leakage delay [25].

Motivated by the previous discussion, the objective of this paper is to study state estimation for neural networks with leakage delay and time-varying delays by employing new Lyapunov-Krasovskii functionals and matrix inequality techniques. The obtained sufficient condition does not require the differentiability of the time-varying delays and is expressed in terms of linear matrix inequalities, which can be checked numerically using the LMI toolbox in Matlab. An example is given to show the effectiveness of the proposed criterion.

Notations. The notations are quite standard. Throughout this paper, ℝ^n and ℝ^{n×m} denote, respectively, the n-dimensional Euclidean space and the set of all n × m real matrices. ∥·∥ refers to the Euclidean vector norm. A^T represents the transpose of matrix A, and the asterisk “*” in a matrix is used to represent the term which is induced by symmetry. I is the identity matrix with compatible dimension. X > Y means that X and Y are symmetric matrices and that X − Y is positive definite. Matrices, if not explicitly specified, are assumed to have compatible dimensions.
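The matrix ordering X > Y defined above can be checked numerically. The following is a minimal sketch in plain Python, assuming small dense matrices stored as lists of rows; the helper names `is_symmetric`, `is_positive_definite`, and `greater_than` are hypothetical and are not part of the paper. Positive definiteness is tested by attempting a Cholesky factorization, which succeeds with strictly positive pivots exactly when the symmetric matrix is positive definite.

```python
import math


def is_symmetric(m, tol=1e-9):
    """Return True if the square matrix m (list of rows) is symmetric."""
    n = len(m)
    return all(abs(m[i][j] - m[j][i]) <= tol for i in range(n) for j in range(i))


def is_positive_definite(m, tol=1e-12):
    """Return True if the symmetric matrix m is positive definite.

    Attempts a Cholesky factorization m = L L^T and fails as soon as
    a pivot is not strictly positive.
    """
    if not is_symmetric(m):
        return False
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                pivot = m[i][i] - s
                if pivot <= tol:
                    return False
                low[i][i] = math.sqrt(pivot)
            else:
                low[i][j] = (m[i][j] - s) / low[j][j]
    return True


def greater_than(x, y):
    """X > Y in the sense of the Notations paragraph:
    X and Y are symmetric and X - Y is positive definite."""
    if not (is_symmetric(x) and is_symmetric(y)):
        return False
    diff = [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]
    return is_positive_definite(diff)
```

For instance, `greater_than([[3, 1], [1, 3]], [[1, 0], [0, 1]])` tests whether the difference [[2, 1], [1, 2]] is positive definite. A numerical LMI solver performs a far more general feasibility search; this sketch only verifies a candidate solution.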

2. Model Description and Preliminaries

Throughout this paper, we make the following assumptions.

Remark 1. As pointed out in [40], the constants in assumption (H1) of this paper are allowed to be positive, negative, or zero. Hence, assumption (H1), first proposed by Liu et al. in [40], is weaker than the Lipschitz condition.

The problem addressed in this study is to find a gain matrix K such that system (8) is globally asymptotically stable.

To prove our results, the following lemmas that can be found in [22] are necessary.

Lemma 2 (see [22]). For any constant matrix W ∈ ℝ^{m×m}, W > 0, scalar 0 < h(t) < h, and vector function ω(·): [0, h] → ℝ^m such that the integrations concerned are well defined, then
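The displayed inequality of Lemma 2 does not survive in this text. Under the stated hypotheses, the standard Jensen integral inequality has the form below; that Lemma 2 is exactly this statement is an assumption and should be checked against [22].

```latex
\left( \int_{0}^{h(t)} \omega(s)\, ds \right)^{T} W \left( \int_{0}^{h(t)} \omega(s)\, ds \right)
\le h(t) \int_{0}^{h(t)} \omega^{T}(s)\, W\, \omega(s)\, ds .
```

An inequality of this type is typically used to bound the integral terms that arise when differentiating a Lyapunov-Krasovskii functional.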

Lemma 3 (see [22]). Given constant matrices P, Q, and R, where P^T = P, Q^T = Q, then
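The conclusion of Lemma 3 is also missing here. With these hypotheses, the usual statement is the Schur complement lemma; the form below is an assumption about what the lemma states, and the sign convention on Q in particular should be checked against [22].

```latex
\begin{bmatrix} P & R \\ R^{T} & -Q \end{bmatrix} < 0
\quad \Longleftrightarrow \quad
Q > 0 \ \text{ and } \ P + R\, Q^{-1} R^{T} < 0 .
```

This equivalence converts a matrix inequality that is nonlinear in the unknowns, through the term R Q^{-1} R^T, into a linear one.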

3. Main Results

Theorem 4. Assume that assumptions (H1)–(H3) hold. If there exist four symmetric positive definite matrices Pi (i = 1, 2, 3, 4), five positive diagonal matrices W1, W2, R1, R2, and R3, and seven matrices Q1, Q2, Q3, X11, X12, X22, and Z, such that the following LMIs hold:

Proof. From assumption (H1), we know that

Let W1 = diag(w11, w12, …, w1n), W2 = diag(w21, w22, …, w2n), and consider the following Lyapunov-Krasovskii functional:

Calculating the time derivative of Vi(t) (i = 1, 2, 3, 4), we obtain

In deriving inequality (21), we have made use of Lemma 2. It follows from inequalities (19)–(22) that

From model (8), we have

By the Newton-Leibniz formula and assumption (H2), we have

In addition, for positive diagonal matrices Ri > 0 (i = 1, 2, 3), we can get from assumptions (H1) and (H3) that [15]

By using Lemma 3, and noting , it is easy to verify the equivalence of Π < 0 and Ω < 0. Thus, one can derive from (14) and (27) that

4. Numerical Example

To verify the effectiveness of the theoretical result of this paper, consider the following example.

Example 1. Consider a two-neuron neural network (1), where

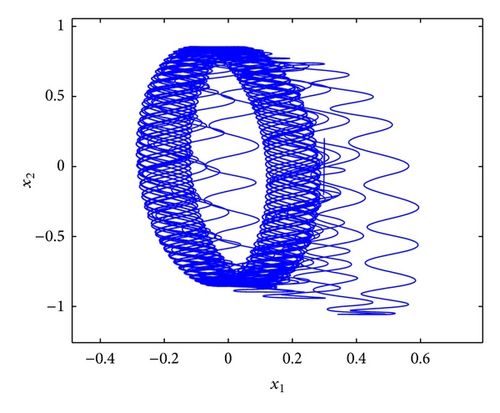

Figure 1 shows that the considered neural network has a chaotic attractor, where the initial condition is x1(t) = 0.5 cos(0.5t), x2(t) = −0.2 sin(18t), and t ∈ [−0.27, 0].
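For a delayed system, the initial condition is a function on the whole history interval and not a single point, so a fixed-step simulation needs it sampled on a grid. The following is a minimal sketch in plain Python of how the initial functions above could be sampled; the function names and the step size are assumptions made for illustration and are not taken from the paper.

```python
import math

TAU = 0.27  # length of the history interval [-0.27, 0] used in the example


def initial_history(t):
    """Initial condition (x1(t), x2(t)) of the example for t in [-TAU, 0]."""
    if not (-TAU - 1e-12 <= t <= 1e-12):
        raise ValueError("the history is only defined on [-0.27, 0]")
    return (0.5 * math.cos(0.5 * t), -0.2 * math.sin(18.0 * t))


def sample_history(step):
    """Sample the history on a uniform grid from -TAU up to 0.

    `step` is an assumed solver step size; the final sample is taken
    at exactly t = 0.
    """
    n = round(TAU / step)
    samples = [initial_history(-TAU + k * step) for k in range(n)]
    samples.append(initial_history(0.0))
    return samples
```

With a step of 0.01 this produces 28 samples; a delay-differential-equation integrator would read the delayed states from such a buffer during the first 0.27 time units.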

It can be verified that assumptions (H1) and (H2) are satisfied, and F1 = 0, F2 = I, F3 = 0, F4 = diag {0.5,0.5}, τ = 0.27.

Choose network measurement (4), where

It is obvious that assumption (H3) is satisfied with G1 = −0.01I and G2 = 0. Using the Matlab LMI Control Toolbox, we find a solution to the LMIs in (13) and (14) as follows:

Subsequently, we can obtain from that

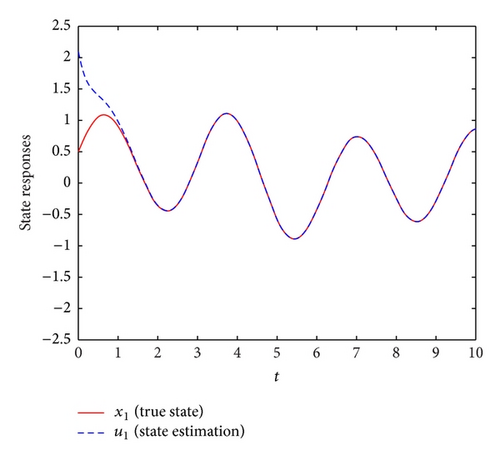

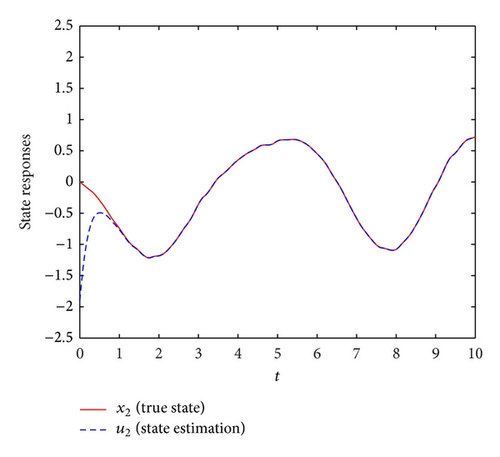

Therefore, we know from Theorem 4 that error-state system (8) of the delayed neural network described by (1) and (6) is globally asymptotically stable. The simulation results are shown in Figures 2 and 3, which demonstrate the effectiveness of the developed approach for the design of the state estimator for neural networks with leakage delay and time-varying delay.

5. Conclusions

In this paper, the state estimation problem has been investigated for neural networks with leakage delay, time-varying delay, and general activation functions. By employing the Lyapunov functional method and matrix inequality techniques, a delay-dependent condition in terms of LMIs has been established to estimate the neuron state from observed output measurements such that the error-state system is globally asymptotically stable. An example has been provided to show the effectiveness of the proposed criterion.

Acknowledgments

The authors would like to thank the reviewers and the editor for their valuable suggestions and comments which have led to a much improved paper. This work was supported by the National Natural Science Foundation of China under Grants 61273021, 60974132, 11172247, and 51208538 and in part by the Natural Science Foundation Project of CQ cstc2013jjB40008.