Iterative Solution to a System of Matrix Equations

Abstract

An efficient iterative algorithm is presented to solve the system of linear matrix equations $A_1X_1B_1 + A_2X_2B_2 = E$, $C_1X_1D_1 + C_2X_2D_2 = F$ for real matrices $X_1$ and $X_2$. The algorithm determines the solvability of the system automatically. When the system is consistent, a solution can be obtained for any initial matrices $X_1^{(1)}$ and $X_2^{(1)}$ in the absence of roundoff errors, and the least norm solution can be obtained by choosing a special kind of initial matrix. In addition, the unique optimal approximation solutions $\hat X_1$ and $\hat X_2$ to given matrices $\bar X_1$ and $\bar X_2$ in the Frobenius norm can be obtained by finding the least norm solution of a new pair of matrix equations $A_1\tilde X_1B_1 + A_2\tilde X_2B_2 = \tilde E$, $C_1\tilde X_1D_1 + C_2\tilde X_2D_2 = \tilde F$, where $\tilde E = E - A_1\bar X_1B_1 - A_2\bar X_2B_2$ and $\tilde F = F - C_1\bar X_1D_1 - C_2\bar X_2D_2$. A numerical example demonstrates that the iterative algorithm is efficient. In particular, the algorithm remains efficient when the numbers in the parameter matrices $A_1, A_2, B_1, B_2, C_1, C_2, D_1, D_2$ are large.

1. Introduction

Throughout the paper, we denote the set of all $m \times n$ real matrices by $\mathbb{R}^{m\times n}$, the transpose of a matrix $A$ by $A^{T}$, the identity matrix of order $n$ by $I_n$, the Kronecker product of $A$ and $B$ by $A \otimes B$, the $mn \times 1$ vector formed by stacking the columns of a matrix $A \in \mathbb{R}^{m\times n}$ vertically by $\mathrm{vec}(A)$, the trace of a matrix $A$ by $\mathrm{tr}(A)$, and the Frobenius norm of a matrix $A$ by $\|A\|$, where $\|A\| = \sqrt{\mathrm{tr}(A^{T}A)}$.
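Two consequences of this notation are used repeatedly below: with column-stacking $\mathrm{vec}$, $\mathrm{vec}(AXB) = (B^{T}\otimes A)\,\mathrm{vec}(X)$, and the Frobenius norm equals $\sqrt{\mathrm{tr}(A^{T}A)}$. The following is a minimal numerical check, assuming NumPy; the helper name `vec` and the random sizes are illustrative, and column stacking corresponds to NumPy's Fortran (`order="F"`) reshape.

```python
import numpy as np

def vec(a: np.ndarray) -> np.ndarray:
    """Stack the columns of `a` into one long vector (column-major order)."""
    return a.reshape(-1, order="F")

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 5))
B = rng.standard_normal((5, 2))

# Kronecker identity: vec(AXB) = (B^T kron A) vec(X)
lhs = vec(A @ X @ B)
rhs = np.kron(B.T, A) @ vec(X)
kron_ok = np.allclose(lhs, rhs)

# Frobenius norm via the trace definition ||A|| = sqrt(tr(A^T A))
fro_trace = np.sqrt(np.trace(A.T @ A))
fro_ok = np.isclose(fro_trace, np.linalg.norm(A, "fro"))

print(kron_ok, fro_ok)  # True True
```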

In this paper, we consider the following two problems.

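Problem 1. For the given matrices $A_1 \in \mathbb{R}^{p\times k}$, $A_2 \in \mathbb{R}^{p\times m}$, $B_1 \in \mathbb{R}^{r\times q}$, $B_2 \in \mathbb{R}^{n\times q}$, $C_1 \in \mathbb{R}^{s\times k}$, $C_2 \in \mathbb{R}^{s\times m}$, $D_1 \in \mathbb{R}^{r\times t}$, $D_2 \in \mathbb{R}^{n\times t}$, $E \in \mathbb{R}^{p\times q}$, and $F \in \mathbb{R}^{s\times t}$, find $X_1 \in \mathbb{R}^{k\times r}$ and $X_2 \in \mathbb{R}^{m\times n}$ such that

$$A_1X_1B_1 + A_2X_2B_2 = E, \qquad C_1X_1D_1 + C_2X_2D_2 = F. \tag{1}$$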
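Problem 1 asks for a pair $(X_1, X_2)$ that makes both residuals of (1) vanish. A minimal sketch, assuming NumPy, that evaluates this residual pair (presumably what Lemma 4 writes as $\mathrm{diag}(E_k, F_k)$) for a consistent instance built from a known pair. The helper `residuals` and all sizes are illustrative; the shapes follow the dimensions stated in Problem 1.

```python
import numpy as np

def residuals(A1, A2, B1, B2, C1, C2, D1, D2, E, F, X1, X2):
    """Return the residual pair (E - A1 X1 B1 - A2 X2 B2, F - C1 X1 D1 - C2 X2 D2)."""
    R_E = E - A1 @ X1 @ B1 - A2 @ X2 @ B2
    R_F = F - C1 @ X1 @ D1 - C2 @ X2 @ D2
    return R_E, R_F

# Sizes following Problem 1: X1 is k x r, X2 is m x n.
p, q, s, t = 4, 3, 5, 2
k, r, m, n = 3, 2, 2, 3
rng = np.random.default_rng(1)
A1, A2 = rng.standard_normal((p, k)), rng.standard_normal((p, m))
B1, B2 = rng.standard_normal((r, q)), rng.standard_normal((n, q))
C1, C2 = rng.standard_normal((s, k)), rng.standard_normal((s, m))
D1, D2 = rng.standard_normal((r, t)), rng.standard_normal((n, t))

# Build a consistent right-hand side from a known pair (X1_true, X2_true).
X1_true, X2_true = rng.standard_normal((k, r)), rng.standard_normal((m, n))
E = A1 @ X1_true @ B1 + A2 @ X2_true @ B2
F = C1 @ X1_true @ D1 + C2 @ X2_true @ D2

R_E, R_F = residuals(A1, A2, B1, B2, C1, C2, D1, D2, E, F, X1_true, X2_true)
print(np.linalg.norm(R_E), np.linalg.norm(R_F))  # both at rounding-error level
```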

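Problem 2. When Problem 1 is consistent, let $S$ denote the solution set of the pair of matrix equations (1). For given matrices $\bar X_1 \in \mathbb{R}^{k\times r}$ and $\bar X_2 \in \mathbb{R}^{m\times n}$, find $(\hat X_1, \hat X_2) \in S$ such that

$$\|\hat X_1 - \bar X_1\|^2 + \|\hat X_2 - \bar X_2\|^2 = \min_{(X_1, X_2) \in S} \left( \|X_1 - \bar X_1\|^2 + \|X_2 - \bar X_2\|^2 \right).$$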

Problem 2 asks for the optimal approximation solutions to the given matrices within the solution set of Problem 1. Such problems occur frequently in experimental design (see, for instance, [1]). In recent years, the matrix optimal approximation problem has been studied extensively (e.g., [2–13]).
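Problem 2 reduces to a least-norm problem: $(X_1, X_2) \in S$ exactly when $(X_1 - \bar X_1, X_2 - \bar X_2)$ solves the shifted equations with right-hand sides $\tilde E = E - A_1\bar X_1B_1 - A_2\bar X_2B_2$ and $\tilde F = F - C_1\bar X_1D_1 - C_2\bar X_2D_2$, so the optimal approximation is $(\bar X_1, \bar X_2)$ plus the least-norm solution of the shifted pair. The sketch below checks this on a small instance, assuming NumPy. It solves the shifted equations with `numpy.linalg.lstsq` on the Kronecker (vectorized) form, a dense direct solver swapped in for illustration that is practical only for small sizes; it is not Algorithm 3. The names `X1_bar`, `E_t`, `kron_matrix`, etc. are ours.

```python
import numpy as np

def vec(a):
    """Column-stacking vec operator."""
    return a.reshape(-1, order="F")

def kron_matrix(A1, A2, B1, B2, C1, C2, D1, D2):
    """Coefficient matrix of system (1) after vectorization."""
    return np.block([[np.kron(B1.T, A1), np.kron(B2.T, A2)],
                     [np.kron(D1.T, C1), np.kron(D2.T, C2)]])

rng = np.random.default_rng(2)
p, q, s, t, k, r, m, n = 2, 2, 2, 2, 2, 3, 2, 2   # 8 equations, 10 unknowns
A1, A2 = rng.standard_normal((p, k)), rng.standard_normal((p, m))
B1, B2 = rng.standard_normal((r, q)), rng.standard_normal((n, q))
C1, C2 = rng.standard_normal((s, k)), rng.standard_normal((s, m))
D1, D2 = rng.standard_normal((r, t)), rng.standard_normal((n, t))
E, F = rng.standard_normal((p, q)), rng.standard_normal((s, t))
X1_bar, X2_bar = rng.standard_normal((k, r)), rng.standard_normal((m, n))

# Shifted right-hand sides (E tilde, F tilde).
E_t = E - A1 @ X1_bar @ B1 - A2 @ X2_bar @ B2
F_t = F - C1 @ X1_bar @ D1 - C2 @ X2_bar @ D2

M = kron_matrix(A1, A2, B1, B2, C1, C2, D1, D2)
# For a consistent underdetermined system, lstsq returns the minimum-norm solution.
y, *_ = np.linalg.lstsq(M, np.concatenate([vec(E_t), vec(F_t)]), rcond=None)
X1_tilde = y[:k * r].reshape((k, r), order="F")
X2_tilde = y[k * r:].reshape((m, n), order="F")

# Optimal approximation: shift back by the given matrices.
X1_hat, X2_hat = X1_bar + X1_tilde, X2_bar + X2_tilde
res = M @ np.concatenate([vec(X1_hat), vec(X2_hat)]) - np.concatenate([vec(E), vec(F)])
dist_hat = np.linalg.norm(y)

# Any other solution differs by a null-space vector of M and lies farther from X_bar.
_, _, Vt = np.linalg.svd(M)
z = Vt[-1]                      # unit vector in the null space (M is 8 x 10, full row rank)
dist_other = np.linalg.norm(y + z)
print(np.linalg.norm(res) < 1e-10, dist_hat < dist_other)
```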

Research on solving pairs of matrix equations has been active for the last 30 years or more. For instance, Mitra [14] gave conditions for the existence of a solution and a representation of the general common solution to AXB = E, CXD = F. Shinozaki and Sibuya [15] and van der Woude [16] discussed conditions for the existence of a common solution to AXB = E, CXD = F. Navarra et al. [5] derived necessary and sufficient conditions for the existence of a common solution to AXB = E, CXD = F. Yuan [13] obtained an analytical expression for the least-squares solutions of AXB = E, CXD = F by using the generalized singular value decomposition (GSVD) of matrices. Dehghan and Hajarian [17] presented examples that motivate the study of the general coupled matrix equations (indexed by i = 1, 2, …, l), and the iterative algorithm constructed in [18] solves the general coupled matrix equations (indexed by i = 1, 2, …, p). Wang [19, 20] gave the centrosymmetric solution to the system of quaternion matrix equations A1X = C1, A3XB3 = C3. Wang [21] also solved a system of matrix equations over arbitrary regular rings with identity.

Recently, several finite iterative algorithms have also been developed for solving matrix equations. Ding et al. [22, 23] and Xie et al. [24, 25] studied the iterative solutions of the matrix equations AXB = F and AiXBi = Fi and of the generalized Sylvester matrix equations AXB + CXD = F and AXB + CXᵀD = F; they presented gradient-based and least-squares-based iterative algorithms for these equations. Li et al. [26, 27] and Zhou et al. [28, 29] considered iterative methods for some coupled linear matrix equations. Deng et al. [30] studied the consistency conditions and the general expressions of the Hermitian solutions of the matrix equations (AX, XB) = (C, D) and designed an iterative method for their Hermitian minimum norm solutions. Li and Wu [31] gave symmetric and skew-antisymmetric solutions to the matrix equations A1X = C1, XB3 = C3 over the real quaternion algebra H. For more studies on iterative algorithms for coupled matrix equations, we refer to [3, 10–12, 17, 32–37].

Peng et al. [6] presented iterative methods to obtain the symmetric solutions of AXB = E, CXD = F. Sheng and Chen [8] presented a finite iterative method for the case in which AXB = E, CXD = F is consistent. Liao and Lei [38] presented an analytical expression of, and an algorithm for, the minimum-norm least-squares solution of AXB = E, CXD = F. Peng et al. [7] presented an algorithm for the least-squares reflexive solution. Dehghan and Hajarian [2] presented an iterative algorithm for solving the pair of matrix equations AXB = E, CXD = F over generalized centrosymmetric matrices. Cai and Chen [39] presented an iterative algorithm for the least-squares bisymmetric solutions of the matrix equations AXB = E, CXD = F. Yin and Huang [40] presented an iterative algorithm for computing the least-squares generalized reflexive solutions of the matrix equations AXB = E, CXD = F.

However, to our knowledge, little work has been done on solving the system (1) by iterative algorithms. In this paper, an efficient iterative algorithm is presented to solve the system (1) for real matrices X1 and X2. The proposed algorithm automatically determines the solvability of the pair of equations (1). When the pair is consistent, a solution can be obtained for any initial matrices $X_1^{(1)}$ and $X_2^{(1)}$ in the absence of roundoff errors, and the least norm solution can be obtained by choosing a special kind of initial matrix. In addition, the unique optimal approximation solutions $\hat X_1$ and $\hat X_2$ to the given matrices $\bar X_1$ and $\bar X_2$ in the Frobenius norm can be obtained by finding the least norm solution of a new pair of matrix equations $A_1\tilde X_1B_1 + A_2\tilde X_2B_2 = \tilde E$, $C_1\tilde X_1D_1 + C_2\tilde X_2D_2 = \tilde F$, where $\tilde E = E - A_1\bar X_1B_1 - A_2\bar X_2B_2$ and $\tilde F = F - C_1\bar X_1D_1 - C_2\bar X_2D_2$. The numerical example demonstrates that our iterative algorithm is efficient. In particular, when the numbers in the parameter matrices A1, A2, B1, B2, C1, C2, D1, D2 are large, our algorithm remains efficient, while the algorithm of [32] does not converge. This shows that our algorithm has the merits of good numerical stability and ease of programming.

The rest of this paper is outlined as follows. In Section 2, we first propose an efficient iterative algorithm for solving Problem 1; then we give some properties of this iterative algorithm. We show that the algorithm can obtain a solution group (the least Frobenius norm solution group) for any (special) initial matrix group in the absence of roundoff errors. In Section 3, a numerical example is given to illustrate that our algorithm is quite efficient.

2. Iterative Algorithm for Solving Problems 1 and 2

In this section, we present an iterative algorithm for solving the system (1) and determining whether it is consistent.

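Algorithm 3. (1) Input matrices $A_1 \in \mathbb{R}^{p\times k}$, $A_2 \in \mathbb{R}^{p\times m}$, $B_1 \in \mathbb{R}^{r\times q}$, $B_2 \in \mathbb{R}^{n\times q}$, $C_1 \in \mathbb{R}^{s\times k}$, $C_2 \in \mathbb{R}^{s\times m}$, $D_1 \in \mathbb{R}^{r\times t}$, $D_2 \in \mathbb{R}^{n\times t}$, $E \in \mathbb{R}^{p\times q}$, $F \in \mathbb{R}^{s\times t}$, $X_1^{(1)} \in \mathbb{R}^{k\times r}$, and $X_2^{(1)} \in \mathbb{R}^{m\times n}$ (where $X_1^{(1)}$ and $X_2^{(1)}$ are arbitrary initial matrices).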

(2) Calculate

(3) If (k = 1,2, …), then stop. Otherwise,

(4) Calculate
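Because the formulas of steps (2) to (4) are not available in this text, the following is only a sketch of one iteration with the properties stated in Lemma 4 and Theorem 7, not a reproduction of Algorithm 3. It uses a CGLS-type scheme (conjugate gradients applied to the normal equations), which we substitute as an assumption: the step length `beta` minimizes $\|\mathrm{diag}(E_{k+1}, F_{k+1})\|$ along the current search direction, search directions come from the adjoint map $(R_E, R_F) \mapsto (A_1^{T}R_EB_1^{T} + C_1^{T}R_FD_1^{T},\ A_2^{T}R_EB_2^{T} + C_2^{T}R_FD_2^{T})$, and the $10^{-10}$ threshold mirrors the one used in Section 3. The functions `apply_op`, `apply_adjoint`, `pair_norm2`, and `solve_pair` are hypothetical names.

```python
import numpy as np

def apply_op(Ms, X1, X2):
    """Left-hand side of system (1): (A1 X1 B1 + A2 X2 B2, C1 X1 D1 + C2 X2 D2)."""
    A1, A2, B1, B2, C1, C2, D1, D2 = Ms
    return A1 @ X1 @ B1 + A2 @ X2 @ B2, C1 @ X1 @ D1 + C2 @ X2 @ D2

def apply_adjoint(Ms, RE, RF):
    """Adjoint of apply_op with respect to the trace inner product <U, V> = tr(V^T U)."""
    A1, A2, B1, B2, C1, C2, D1, D2 = Ms
    return (A1.T @ RE @ B1.T + C1.T @ RF @ D1.T,
            A2.T @ RE @ B2.T + C2.T @ RF @ D2.T)

def pair_norm2(U, V):
    """Squared Frobenius norm of diag(U, V)."""
    return float(np.sum(U * U) + np.sum(V * V))

def solve_pair(Ms, E, F, X1, X2, tol=1e-10, max_iter=1000):
    """Hypothetical CGLS-type solver for (1); returns (X1, X2, solved, iterations)."""
    LE, LF = apply_op(Ms, X1, X2)
    RE, RF = E - LE, F - LF                  # residual pair diag(E_k, F_k)
    S1, S2 = apply_adjoint(Ms, RE, RF)       # negative gradient of ||residual||^2 / 2
    P1, P2 = S1.copy(), S2.copy()            # first search direction
    gamma = pair_norm2(S1, S2)
    for it in range(max_iter):
        if np.sqrt(pair_norm2(RE, RF)) < tol:
            return X1, X2, True, it          # residual treated as zero: solution found
        if np.sqrt(gamma) < tol:
            return X1, X2, False, it         # gradient vanished, residual did not: inconsistent
        Q1, Q2 = apply_op(Ms, P1, P2)
        beta = gamma / pair_norm2(Q1, Q2)    # minimizes ||diag(E_{k+1}, F_{k+1})|| along (P1, P2)
        X1, X2 = X1 + beta * P1, X2 + beta * P2
        RE, RF = RE - beta * Q1, RF - beta * Q2
        S1, S2 = apply_adjoint(Ms, RE, RF)
        gamma_new = pair_norm2(S1, S2)
        P1 = S1 + (gamma_new / gamma) * P1   # conjugate direction update
        P2 = S2 + (gamma_new / gamma) * P2
        gamma = gamma_new
    return X1, X2, bool(np.sqrt(pair_norm2(RE, RF)) < tol), max_iter

# Usage on a small consistent (underdetermined) instance, starting from zero matrices.
rng = np.random.default_rng(3)
p, q, s, t, k, r, m, n = 2, 2, 2, 2, 3, 2, 2, 3
Ms = (rng.standard_normal((p, k)), rng.standard_normal((p, m)),   # A1, A2
      rng.standard_normal((r, q)), rng.standard_normal((n, q)),   # B1, B2
      rng.standard_normal((s, k)), rng.standard_normal((s, m)),   # C1, C2
      rng.standard_normal((r, t)), rng.standard_normal((n, t)))   # D1, D2
E, F = rng.standard_normal((p, q)), rng.standard_normal((s, t))
X1, X2, solved, iters = solve_pair(Ms, E, F, np.zeros((k, r)), np.zeros((m, n)))
print(solved, iters)
```

Starting from zero matrices keeps every iterate of this sketch in the range of the adjoint map, so on a consistent system it returns the least-norm solution, in line with Theorem 7. On an inconsistent system the residual stalls at a nonzero value while the gradient tends to zero, which is how the sketch reports unsolvability.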

Lemma 4. In Algorithm 3, the choice of $\beta_k$ minimizes $\|\mathrm{diag}(E_{k+1}, F_{k+1})\|$ and makes $\mathrm{diag}(E_{k+1}, F_{k+1})$ and  orthogonal to each other.

Proof. From Algorithm 3, we have

On the other hand, if $\beta_k$ is chosen so that $\mathrm{diag}(E_{k+1}, F_{k+1})$ and  are orthogonal to each other, that is, , we obtain the same $\beta_k$ as in (7).
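The line-search calculation behind Lemma 4 can be sketched as follows, assuming that the residual is updated as $R_{k+1} = R_k - \beta_k G_k$, where $R_k = \mathrm{diag}(E_k, F_k)$, $G_k$ (a name introduced here) denotes the image of the current search direction under the left-hand side of (1), and $\langle U, V\rangle = \mathrm{tr}(V^{T}U)$:

```latex
\begin{aligned}
\|R_{k+1}\|^2 &= \|R_k\|^2 - 2\beta_k \langle R_k, G_k\rangle + \beta_k^2 \|G_k\|^2, \\
\frac{d}{d\beta_k}\|R_{k+1}\|^2 = 0
  &\;\Longrightarrow\; \beta_k = \frac{\langle R_k, G_k\rangle}{\|G_k\|^2}, \\
\langle R_{k+1}, G_k\rangle &= \langle R_k, G_k\rangle - \beta_k \|G_k\|^2 = 0 .
\end{aligned}
```

Because the quadratic in $\beta_k$ has the positive leading coefficient $\|G_k\|^2$ whenever $G_k \neq 0$, the stationary point is the minimizer, and it is also the unique value that makes $R_{k+1}$ orthogonal to $G_k$; this is the equivalence the lemma states.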

Theorem 5. Algorithm 3 is guaranteed to converge.

Proof. From Algorithm 3 and Lemma 4 we have
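One step of the convergence argument can be made explicit under the assumption that the residual $R_k = \mathrm{diag}(E_k, F_k)$ is updated as $R_{k+1} = R_k - \beta_k G_k$ with the minimizing step $\beta_k = \langle R_k, G_k\rangle / \|G_k\|^2$ of Lemma 4 ($G_k$ is a name introduced here for the image of the search direction). Substituting this step into the expanded square gives

```latex
\|R_{k+1}\|^2
  = \|R_k\|^2 - 2\beta_k\langle R_k, G_k\rangle + \beta_k^2\|G_k\|^2
  = \|R_k\|^2 - \frac{\langle R_k, G_k\rangle^2}{\|G_k\|^2}
  \le \|R_k\|^2 .
```

So the residual norms form a nonincreasing sequence bounded below by zero and therefore converge; showing that the limit is the smallest attainable residual needs the remaining steps of the proof.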

Lemma 6 (see [41]). Suppose that the consistent system of linear equations $My = b$ has a solution $y_0 \in R(M^{T})$, where $R(M^{T})$ denotes the range (column space) of $M^{T}$. Then $y_0$ is the least Frobenius norm solution of the system.
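Lemma 6 can be illustrated on a small underdetermined system. A minimal sketch, assuming NumPy: it builds a solution of the form $y_0 = M^{T}w$, adds a null-space vector to get a second solution, and confirms that $y_0$ is the shorter one and coincides with the pseudoinverse (minimum-norm) solution. Sizes and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(5)
M = rng.standard_normal((3, 6))        # full row rank, so My = b is consistent for any b
b = rng.standard_normal(3)

# A solution lying in R(M^T): y0 = M^T w with (M M^T) w = b.
w = np.linalg.solve(M @ M.T, b)
y0 = M.T @ w

# Another solution: add a unit vector from the null space of M.
_, _, Vt = np.linalg.svd(M)
y1 = y0 + Vt[-1]

print(np.allclose(M @ y0, b), np.allclose(M @ y1, b),
      np.linalg.norm(y0) < np.linalg.norm(y1),
      np.allclose(y0, np.linalg.pinv(M) @ b))  # True True True True
```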

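Theorem 7. Assume that the system (1) is consistent. Let $X_1^{(1)} = A_1^{T}YB_1^{T} + C_1^{T}ZD_1^{T}$ and $X_2^{(1)} = A_2^{T}YB_2^{T} + C_2^{T}ZD_2^{T}$ be the initial matrices, where $Y \in \mathbb{R}^{p\times q}$ and $Z \in \mathbb{R}^{s\times t}$ are arbitrary, or, in particular, let $X_1^{(1)} = 0$ and $X_2^{(1)} = 0$. Then the solution generated by Algorithm 3 is the least Frobenius norm solution to (1).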

Proof. If (1) is consistent, then, starting from $X_1^{(1)}$ and $X_2^{(1)}$ and applying Algorithm 3, we obtain the iterative solution pair of (1) as follows:

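Since $\mathrm{vec}(AXB) = (B^{T} \otimes A)\,\mathrm{vec}(X)$, the system (1) is equivalent to

$$\begin{pmatrix} B_1^{T}\otimes A_1 & B_2^{T}\otimes A_2 \\ D_1^{T}\otimes C_1 & D_2^{T}\otimes C_2 \end{pmatrix} \begin{pmatrix} \mathrm{vec}(X_1) \\ \mathrm{vec}(X_2) \end{pmatrix} = \begin{pmatrix} \mathrm{vec}(E) \\ \mathrm{vec}(F) \end{pmatrix}.$$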

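By Lemma 6, with the initial matrices $X_1^{(1)} = A_1^{T}YB_1^{T} + C_1^{T}ZD_1^{T}$ and $X_2^{(1)} = A_2^{T}YB_2^{T} + C_2^{T}ZD_2^{T}$, where $Y \in \mathbb{R}^{p\times q}$ and $Z \in \mathbb{R}^{s\times t}$ are arbitrary, or, in particular, $X_1^{(1)} = 0$ and $X_2^{(1)} = 0$, the solution pair generated by Algorithm 3 is the least Frobenius norm solution of the matrix equations (1).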
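Two facts used in this proof can be checked numerically. First, writing the vectorized form of (1) as $My = b$, where $M$ is the block matrix with blocks $B_1^{T}\otimes A_1$, $B_2^{T}\otimes A_2$, $D_1^{T}\otimes C_1$, $D_2^{T}\otimes C_2$, multiplying $M$ by $(\mathrm{vec}(X_1); \mathrm{vec}(X_2))$ reproduces the vectorized left-hand sides of (1). Second, the special initial matrices of Theorem 7 stack to exactly $M^{T}(\mathrm{vec}(Y); \mathrm{vec}(Z))$, so they lie in $R(M^{T})$ as Lemma 6 requires. A minimal sketch, assuming NumPy with column-major `vec`; the sizes and random data are arbitrary. It checks only the initial matrices: whether later iterates stay in $R(M^{T})$ depends on the update formulas of Algorithm 3.

```python
import numpy as np

def vec(a):
    """Column-stacking vec operator."""
    return a.reshape(-1, order="F")

rng = np.random.default_rng(6)
p, q, s, t, k, r, m, n = 3, 2, 2, 3, 2, 2, 3, 2
A1, A2 = rng.standard_normal((p, k)), rng.standard_normal((p, m))
B1, B2 = rng.standard_normal((r, q)), rng.standard_normal((n, q))
C1, C2 = rng.standard_normal((s, k)), rng.standard_normal((s, m))
D1, D2 = rng.standard_normal((r, t)), rng.standard_normal((n, t))

# Coefficient matrix of the vectorized form of (1).
M = np.block([[np.kron(B1.T, A1), np.kron(B2.T, A2)],
              [np.kron(D1.T, C1), np.kron(D2.T, C2)]])

# (a) Equivalence: M [vec X1; vec X2] equals the vectorized left-hand sides of (1).
X1, X2 = rng.standard_normal((k, r)), rng.standard_normal((m, n))
lhs = M @ np.concatenate([vec(X1), vec(X2)])
rhs = np.concatenate([vec(A1 @ X1 @ B1 + A2 @ X2 @ B2),
                      vec(C1 @ X1 @ D1 + C2 @ X2 @ D2)])
equivalent = np.allclose(lhs, rhs)

# (b) Special initial matrices of Theorem 7, built from arbitrary Y (p x q) and Z (s x t) ...
Y, Z = rng.standard_normal((p, q)), rng.standard_normal((s, t))
X1_init = A1.T @ Y @ B1.T + C1.T @ Z @ D1.T
X2_init = A2.T @ Y @ B2.T + C2.T @ Z @ D2.T
# ... stack to M^T [vec Y; vec Z], so they lie in the range R(M^T).
stacked = np.concatenate([vec(X1_init), vec(X2_init)])
in_range = np.allclose(stacked, M.T @ np.concatenate([vec(Y), vec(Z)]))

print(M.shape, equivalent, in_range)  # (12, 10) True True
```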

3. An Example

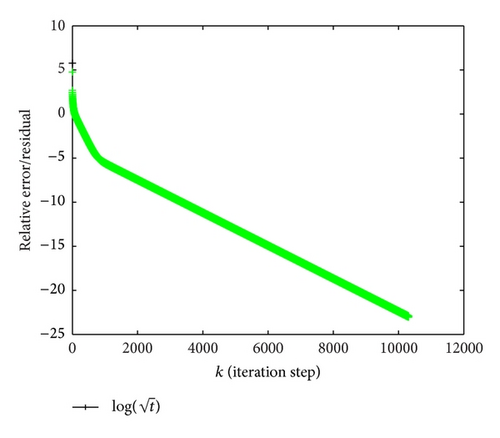

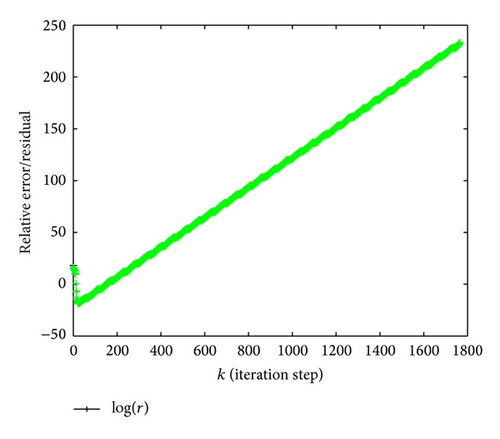

In this section, we present a numerical example to illustrate the efficiency of Algorithm 3. All computations were performed in MATLAB 7. To account for rounding errors, we regard a matrix $R$ as the zero matrix if $\|R\| < 10^{-10}$.

Example 1. Consider the solution of the linear matrix equations

(2) When the algorithm of [32] is applied to this example, the iteration does not converge. The result is shown in Figure 2.

This numerical example demonstrates that our algorithm has the merits of good numerical stability and ease of programming.

Acknowledgments

This research was supported by the Grants from the Key Project of Scientific Research Innovation Foundation of Shanghai Municipal Education Commission (13ZZ080), the National Natural Science Foundation of China (11171205), the Natural Science Foundation of Shanghai (11ZR1412500), and the Nature Science Foundation of Anhui Provincial Education (ky2008b253, KJ2013A248).