Least-Squares Parameter Estimation Algorithm for a Class of Input Nonlinear Systems

Abstract

This paper studies least-squares parameter estimation algorithms for input nonlinear systems, including the input nonlinear controlled autoregressive (IN-CAR) model and the input nonlinear controlled autoregressive autoregressive moving average (IN-CARARMA) model. The basic idea is to obtain linear-in-parameters models by overparameterizing such nonlinear systems and to use the least-squares algorithm to estimate the unknown parameter vectors. It is proved that the parameter estimates consistently converge to their true values under the persistent excitation condition. A simulation example is provided.

1. Introduction

Parameter estimation has received much attention in areas such as linear and nonlinear system identification and signal processing [1–9]. Nonlinear systems can be roughly divided into input nonlinear systems, output nonlinear systems, feedback nonlinear systems, input-output nonlinear systems, and so forth. Hammerstein models describe a class of input nonlinear systems that consist of a static nonlinear block followed by a linear dynamical subsystem [10, 11].

Nonlinearities are common in industrial processes, for example, dead-zone nonlinearities and valve saturation nonlinearities. Many estimation methods have been developed to identify the parameters of nonlinear systems, especially Hammerstein nonlinear systems [12, 13]. For example, Ding et al. presented a least-squares-based iterative algorithm and a recursive extended least squares algorithm for Hammerstein ARMAX systems [14] and an auxiliary model-based recursive least squares algorithm for Hammerstein output error systems [15]. Wang and Ding proposed an extended stochastic gradient identification algorithm for Hammerstein-Wiener ARMAX systems [16].

Recently, Wang et al. derived an auxiliary model-based recursive generalized least-squares parameter estimation algorithm for Hammerstein output error autoregressive systems and auxiliary model-based RELS and MI-ELS algorithms for Hammerstein output error moving average systems using the key term separation principle [17, 18]. Ding et al. presented a projection estimation algorithm and a stochastic gradient (SG) estimation algorithm for Hammerstein nonlinear systems by using the gradient search and further derived a Newton recursive estimation algorithm and a Newton iterative estimation algorithm by using the Newton method (Newton-Raphson method) [19]. Wang and Ding studied least-squares-based and gradient-based iterative identification methods for Wiener nonlinear systems [20].

Fan et al. discussed the parameter estimation problem for Hammerstein nonlinear ARX models [21]. On the basis of the work in [14, 15, 21], this paper studies the identification problems and their convergence for input nonlinear controlled autoregressive (IN-CAR) models using the martingale convergence theorem and gives the recursive generalized extended least-squares algorithm for input nonlinear controlled autoregressive autoregressive moving average (IN-CARARMA) models.

Briefly, the paper is organized as follows. Section 2 derives a linear-in-parameters identification model and gives a recursive least squares identification algorithm for input nonlinear CAR systems. Section 3 analyzes the convergence properties of the proposed algorithm. Section 4 gives the recursive generalized extended least squares algorithm for input nonlinear CARARMA systems. Section 5 provides an illustrative example to show the effectiveness of the proposed algorithms. Finally, we offer some concluding remarks in Section 6.

2. The Input Nonlinear CAR Model and Estimation Algorithm

Let us introduce some notation first. The symbol $I$ ($I_n$) stands for an identity matrix of appropriate size ($n \times n$); the superscript T denotes the matrix transpose; $\mathbf{1}_n$ represents an $n$-dimensional column vector whose elements are all 1; $|X| = \det[X]$ represents the determinant of the matrix $X$; the norm of a matrix $X$ is defined by $\|X\|^2 = \mathrm{tr}[XX^{\mathrm{T}}]$; $\lambda_{\max}[X]$ and $\lambda_{\min}[X]$ represent the maximum and minimum eigenvalues of the square matrix $X$, respectively; $f(t) = o(g(t))$ represents $f(t)/g(t) \to 0$ as $t \to \infty$; for $g(t) \geqslant 0$, we write $f(t) = O(g(t))$ if there exists a positive constant $\delta_1$ such that $|f(t)| \leqslant \delta_1 g(t)$.

2.1. The Input Nonlinear CAR Model

2.2. The Recursive Least Squares Algorithm

3. The Main Convergence Theorem

The following lemmas are required to establish the main convergence results.

Lemma 3.1 (Martingale convergence theorem: Lemma D.5.3 in [23, 24]). If $T_t$, $\alpha_t$, $\beta_t$ are nonnegative random variables, measurable with respect to a nondecreasing sequence of σ-algebras $\mathcal{F}_{t-1}$, and satisfy

Lemma 3.2 (see [14, 21, 25]). For the algorithm in (2.10)-(2.11), for any γ > 1, the covariance matrix P(t) in (2.11) satisfies the following inequality:

Theorem 3.3. For the system in (2.8) and the algorithm in (2.10)-(2.11), assume that $\{v(t), \mathcal{F}_t\}$ is a martingale difference sequence defined on a probability space $\{\Omega, \mathcal{F}, P\}$, where $\{\mathcal{F}_t\}$ is the σ-algebra sequence generated by the observations $\{y(t), y(t-1), \ldots, u(t), u(t-1), \ldots\}$, and the noise sequence $\{v(t)\}$ satisfies $E[v(t) \mid \mathcal{F}_{t-1}] = 0$ and $E[v^2(t) \mid \mathcal{F}_{t-1}] \leqslant \sigma^2 < \infty$, a.s. [23], and , γ > 1. Then the parameter estimation error converges to zero.

Proof. Define the parameter estimation error vector and the stochastic Lyapunov function . Let . According to the definitions of and T(t) and using (2.10) and (2.11), we have

4. The Input Nonlinear CARARMA System and Estimation Algorithm

This paper presents a recursive least squares algorithm for IN-CAR systems and a recursive generalized extended least squares algorithm for IN-CARARMA systems with ARMA noise disturbances, which differ not only from the input nonlinear controlled autoregressive moving average (IN-CARMA) systems in [14] but also from the input nonlinear output error systems in [15].

5. Example

| t | a1 | a2 | c1 | c2 | c3 | b2c1 | b2c2 | b2c3 | δ (%) |
|---|---|---|---|---|---|---|---|---|---|
| 100 | −1.35989 | 0.76938 | 0.94139 | 0.49861 | 0.18862 | 1.69875 | 0.86164 | 0.32773 | 2.59527 |
| 200 | −1.35622 | 0.76001 | 0.96720 | 0.50101 | 0.19076 | 1.67233 | 0.84941 | 0.34369 | 1.43552 |
| 500 | −1.35239 | 0.75452 | 1.00256 | 0.50137 | 0.19363 | 1.66468 | 0.84394 | 0.34485 | 0.74281 |
| 1000 | −1.35034 | 0.75193 | 1.00570 | 0.50128 | 0.19460 | 1.65482 | 0.85095 | 0.33765 | 1.06112 |
| 2000 | −1.34844 | 0.74940 | 0.99224 | 0.50089 | 0.20143 | 1.69169 | 0.85148 | 0.33583 | 0.67584 |
| 3000 | −1.34776 | 0.74847 | 0.99012 | 0.49943 | 0.20333 | 1.68675 | 0.85321 | 0.33416 | 0.68173 |
| True values | −1.35000 | 0.75000 | 1.00000 | 0.50000 | 0.20000 | 1.68000 | 0.84000 | 0.33600 | |
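
The δ column can be checked directly from the tabulated estimates. The sketch below assumes that δ is the relative Euclidean-norm error, ‖estimate − true‖/‖true‖, expressed in percent; this definition is not stated in this section, but it agrees with the tabulated values to about three significant digits. The function name `relative_error_percent` is hypothetical.

```python
import math

def relative_error_percent(estimate, true_values):
    """Return 100 * ||estimate - true|| / ||true|| (Euclidean norm)."""
    diff = math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, true_values)))
    return 100.0 * diff / math.sqrt(sum(t * t for t in true_values))

# Column order: a1, a2, c1, c2, c3, b2c1, b2c2, b2c3 (values copied from the table).
true_values = [-1.35, 0.75, 1.0, 0.5, 0.2, 1.68, 0.84, 0.336]
row_3000 = [-1.34776, 0.74847, 0.99012, 0.49943, 0.20333, 1.68675, 0.85321, 0.33416]

delta_3000 = relative_error_percent(row_3000, true_values)
print(f"delta at t = 3000: {delta_3000:.4f} %")
```

Small differences from the table are expected, since the tabulated estimates are rounded to five decimals.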

| t | a1 | a2 | b2 | c1 | c2 | c3 | δ (%) |
|---|---|---|---|---|---|---|---|
| 100 | −1.35989 | 0.76938 | 1.75670 | 0.94139 | 0.49861 | 0.18862 | 3.90775 |
| 200 | −1.35622 | 0.76001 | 1.74205 | 0.96720 | 0.50101 | 0.19076 | 2.81582 |
| 500 | −1.35239 | 0.75452 | 1.70821 | 1.00256 | 0.50137 | 0.19363 | 1.15783 |
| 1000 | −1.35034 | 0.75193 | 1.69268 | 1.00570 | 0.50128 | 0.19460 | 0.59233 |
| 2000 | −1.34844 | 0.74940 | 1.69068 | 0.99224 | 0.50089 | 0.20143 | 0.52605 |
| 3000 | −1.34776 | 0.74847 | 1.68512 | 0.99012 | 0.49943 | 0.20333 | 0.46851 |
| True values | −1.35000 | 0.75000 | 1.68000 | 1.00000 | 0.50000 | 0.20000 | |
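
The b2 column appears to follow from the over-parameterized estimates of the same run by averaging the three ratios (b2c1)/c1, (b2c2)/c2, and (b2c3)/c3. This averaging rule is an assumption inferred from the numbers, not a statement made in this section, and the helper name `recover_b2` is hypothetical. A minimal sketch using the t = 100 estimates:

```python
def recover_b2(c_est, bc_est):
    """Average the ratios (b2*c_i)/c_i over i to obtain a single estimate of b2."""
    ratios = [bc / c for c, bc in zip(c_est, bc_est)]
    return sum(ratios) / len(ratios)

# t = 100 estimates of c1..c3 and of the products b2c1..b2c3 (copied from the tables).
c_100 = [0.94139, 0.49861, 0.18862]
bc_100 = [1.69875, 0.86164, 0.32773]

b2_100 = recover_b2(c_100, bc_100)
print(f"b2 at t = 100: {b2_100:.5f}")
```

Under this assumption the result agrees with the tabulated 1.75670 to within rounding of the inputs. Averaging uses all three redundant products instead of discarding two of them.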

| t | a1 | a2 | c1 | c2 | c3 | b2c1 | b2c2 | b2c3 | δ (%) |
|---|---|---|---|---|---|---|---|---|---|
| 100 | −1.37143 | 0.79561 | 0.81731 | 0.49688 | 0.16665 | 1.75280 | 0.90056 | 0.30999 | 7.98804 |
| 200 | −1.36256 | 0.77335 | 0.89403 | 0.50353 | 0.17365 | 1.65999 | 0.87041 | 0.36082 | 4.46419 |
| 500 | −1.35374 | 0.76009 | 1.00710 | 0.50417 | 0.18108 | 1.63863 | 0.85315 | 0.36321 | 2.08028 |
| 1000 | −1.35074 | 0.75537 | 1.01710 | 0.50372 | 0.18381 | 1.60488 | 0.87297 | 0.34101 | 3.17034 |
| 2000 | −1.34587 | 0.74895 | 0.97678 | 0.50296 | 0.20424 | 1.71432 | 0.87455 | 0.33540 | 2.01031 |
| 3000 | −1.34448 | 0.74649 | 0.97053 | 0.49834 | 0.20990 | 1.69896 | 0.87916 | 0.33025 | 2.00404 |
| True values | −1.35000 | 0.75000 | 1.00000 | 0.50000 | 0.20000 | 1.68000 | 0.84000 | 0.33600 | |

| t | a1 | a2 | b2 | c1 | c2 | c3 | δ (%) |
|---|---|---|---|---|---|---|---|
| 100 | −1.37143 | 0.79561 | 1.93906 | 0.81731 | 0.49688 | 0.16665 | 12.66088 |
| 200 | −1.36256 | 0.77335 | 1.88773 | 0.89403 | 0.50353 | 0.17365 | 9.26624 |
| 500 | −1.35374 | 0.76009 | 1.77500 | 1.00710 | 0.50417 | 0.18108 | 3.83703 |
| 1000 | −1.35074 | 0.75537 | 1.72205 | 1.01710 | 0.50372 | 0.18381 | 1.90836 |
| 2000 | −1.34587 | 0.74895 | 1.71204 | 0.97678 | 0.50296 | 0.20424 | 1.57452 |
| 3000 | −1.34448 | 0.74649 | 1.69603 | 0.97053 | 0.49834 | 0.20990 | 1.39744 |
| True values | −1.35000 | 0.75000 | 1.68000 | 1.00000 | 0.50000 | 0.20000 | |

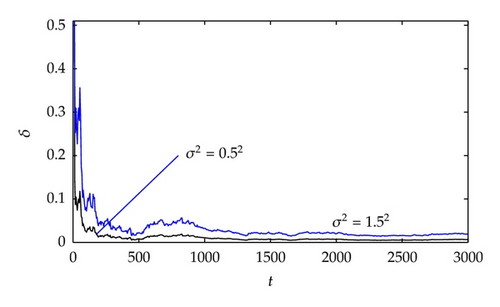

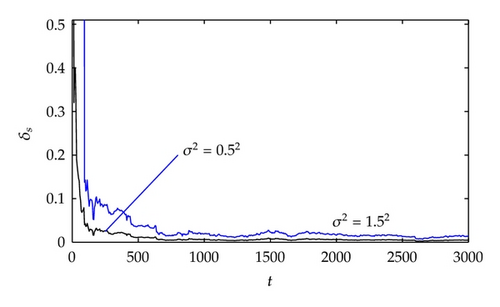

- (i) The larger the data length is, the smaller the parameter estimation errors become.

- (ii) A lower noise level leads to smaller parameter estimation errors for the same data length.

- (iii) The estimation errors $\delta$ and $\delta_s$ become smaller (in general) as t increases. This confirms the proposed theorem.
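
The qualitative behavior in these remarks can be reproduced with a small simulation. The sketch below is not the authors' experiment: it assumes a cubic polynomial input nonlinearity c1·u + c2·u² + c3·u³, a zero-mean unit-variance Gaussian input, b1 = 1, white Gaussian noise, and the textbook recursive least squares update with P(0) = p0·I. None of these details are given in this section; only the true parameter values are taken from the tables, and the function name `simulate_rls` is hypothetical.

```python
import random

def simulate_rls(n_samples=3000, sigma=0.5, seed=0, p0=1e6):
    """Run textbook RLS on a simulated IN-CAR system; return {t: relative error}."""
    # Over-parameterized true vector, ordered as in the tables:
    # [a1, a2, c1, c2, c3, b2*c1, b2*c2, b2*c3], with b1 = 1 assumed.
    theta_true = [-1.35, 0.75, 1.0, 0.5, 0.2, 1.68, 0.84, 0.336]
    n = len(theta_true)
    norm_true = sum(x * x for x in theta_true) ** 0.5

    rng = random.Random(seed)
    theta = [0.0] * n                      # initial estimate
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    y1 = y2 = u1 = u2 = 0.0                # y(t-1), y(t-2), u(t-1), u(t-2)
    errors = {}

    for t in range(1, n_samples + 1):
        # Regressor: past outputs and the assumed polynomial basis of past inputs.
        phi = [-y1, -y2, u1, u1**2, u1**3, u2, u2**2, u2**3]
        v = sigma * rng.gauss(0.0, 1.0)    # white noise v(t)
        y = sum(p * th for p, th in zip(phi, theta_true)) + v

        # RLS update, with P(t) computed through the matrix inversion lemma.
        P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
        denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
        gain = [x / denom for x in P_phi]
        innovation = y - sum(p * th for p, th in zip(phi, theta))
        theta = [th + g * innovation for th, g in zip(theta, gain)]
        P = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]

        if t in (100, 200, 500, 1000, 2000, 3000):
            err = sum((a - b) ** 2 for a, b in zip(theta, theta_true)) ** 0.5
            errors[t] = err / norm_true

        # Shift the delay line and draw the next input sample u(t).
        y2, y1 = y1, y
        u2, u1 = u1, rng.gauss(0.0, 1.0)
    return errors

if __name__ == "__main__":
    for t, err in simulate_rls().items():
        print(t, f"{100 * err:.3f} %")
```

Rerunning with a larger `sigma` gives a rough check of remark (ii), and comparing the errors at increasing t gives a rough check of remarks (i) and (iii). The exact numbers depend on the seed and on the assumed input and nonlinearity, so they will not match the tables.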

6. Conclusions

Recursive least-squares identification is used to estimate the unknown parameters of input nonlinear CAR and CARARMA systems. The analysis based on the martingale convergence theorem indicates that the proposed recursive least squares algorithm gives consistent parameter estimates. It is worth pointing out that the multi-innovation identification theory [26–33], the gradient-based or least-squares-based identification methods [34–41], and other identification methods [42–49] can be used to study the identification problems of this class of nonlinear systems with colored noises.

Acknowledgment

This work was supported by the 111 Project (B12018).