Periodic Solutions of a Cohen-Grossberg-Type BAM Neural Networks with Distributed Delays and Impulses

Abstract

A class of Cohen-Grossberg-type BAM neural networks with distributed delays and impulses is investigated in this paper. Sufficient conditions that guarantee the uniqueness and global exponential stability of the periodic solutions of such networks are established by using a suitable Lyapunov function, the properties of M-matrices, and a suitable mathematical transformation. The results in this paper improve on those in earlier publications.

1. Introduction

Research on neural networks with delays involves the dynamic analysis not only of equilibrium points but also of periodic oscillatory solutions. The dynamic behavior of periodic oscillatory solutions is very important in learning theory because learning usually requires repetition [1, 2].

Cohen and Grossberg proposed the Cohen-Grossberg neural networks (CGNNs) in 1983 [3]. Kosko proposed bidirectional associative memory neural networks (BAMNNs) in 1988 [4]. Some important results for periodic solutions of delayed CGNNs have been obtained in [5–10]. Xiang and Cao proposed a class of Cohen-Grossberg BAM neural networks (CGBAMNNs) with distributed delays in 2007 [11]. In addition, many evolutionary processes are characterized by abrupt changes at certain times. These changes are called impulsive phenomena; they occur in many neural networks, such as Hopfield neural networks, BAM neural networks, CGNNs, and CGBAMNNs, and can affect the dynamical behavior of the systems just as time delays do. Results for periodic solutions of CGBAMNNs with or without impulses are obtained in [11–15].

The objective of this paper is to study the existence and global exponential stability of periodic solutions of CGBAMNNs with distributed delays by using a suitable Lyapunov function, the properties of M-matrices, and a suitable mathematical transformation. Compared with the results in [13, 14], improved results are obtained: the conditions for the existence and global exponential stability of the periodic solution of such a system without impulses do not depend on the inputs of the neurons or on the amplification functions. We also point out that the CGBAMNNs model is a special case of the CGNNs model, so many results for CGBAMNNs can be obtained directly from the results for CGNNs.

The rest of this paper is organized as follows. Preliminaries are given in Section 2. Sufficient conditions which guarantee the uniqueness and global exponential stability of periodic solutions for CGBAMNNs with distributed delays and impulses are given in Section 3. Two examples are given in Section 4 to demonstrate the main results.

2. Preliminaries

; tk is called an impulsive moment and satisfies 0 < t1 < t2 < ⋯, limk→+∞ tk = +∞; xi(t−) and xi(t+) denote the left-hand and right-hand limits at tk, respectively; we always assume and .

- (H1)

The amplification function ai(·) is continuous, and there exist constants such that for 1 ≤ i ≤ n.

- (H2)

The behaved function bi(t, ·) is T-periodic about the first argument; there exists continuous T-periodic function αi(t) such that

-

for all x ≠ y, 1 ≤ j ≤ n.

- (H3)

For activation function fj(·), there exists positive constant Lj such that

-

for all x ≠ y, 1 ≤ j ≤ n.

- (H4)

The kernel function kij(s) is a nonnegative continuous function on [0, +∞) and satisfies

-

is a differentiable function for λ ∈ [0, rij), 0 < rij < +∞, Kij(0) = 1 and

- (H5)

There exists positive integer k0 such that and hold.

Remark 2.1. A typical example of kernel function is given by for s ∈ [0, +∞), where rij ∈ (0, +∞), r ∈ {0,1, …, n}. These kernel functions are called gamma memory filters [16] and satisfy condition (H4).
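The normalization required in (H4) can be checked numerically for a gamma-type kernel. The sketch below is written in Python rather than the MATLAB of the appendix, and it assumes the usual gamma memory kernel k(s) = s^r / r! · rate^(r+1) · e^(−rate·s), which is an assumption because the kernel formula itself is not reproduced above. The function names and the quadrature settings are illustrative. For this assumed kernel, the weighted integral K(λ) = ∫ e^(λs) k(s) ds equals (rate / (rate − λ))^(r+1) for λ < rate, so K(0) = 1.

```python
import math


def gamma_kernel(s, rate, order):
    """Assumed gamma memory kernel: s^order / order! * rate^(order+1) * exp(-rate*s)."""
    return (s ** order) / math.factorial(order) * rate ** (order + 1) * math.exp(-rate * s)


def weighted_integral(rate, order, lam=0.0, upper=60.0, steps=60000):
    """Trapezoidal estimate of K(lam) = integral over [0, upper] of exp(lam*s) * k(s)."""
    h = upper / steps
    total = 0.0
    for n in range(steps + 1):
        s = n * h
        weight = 0.5 if n in (0, steps) else 1.0  # trapezoid end-point weights
        total += weight * math.exp(lam * s) * gamma_kernel(s, rate, order)
    return total * h


def closed_form(rate, order, lam):
    """Closed form of K(lam) for the assumed kernel, valid for lam < rate."""
    return (rate / (rate - lam)) ** (order + 1)


if __name__ == "__main__":
    # K(0) should be close to 1; K(lam) should match the closed form for lam < rate.
    print(weighted_integral(1.0, 0))
    print(weighted_integral(2.0, 2, lam=0.5), closed_form(2.0, 2, 0.5))
```

With rate = 1 and r = 0 the assumed kernel is e^(−s), which is the kernel used in the appendix simulations.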

For any continuous function S(t) on [0, T], and denote min t∈[0,T] {|S(t)|} and max t∈[0,T] {|S(t)|}, respectively.

For any , t > 0, define , and for any , s ∈ (−∞, 0], define

Denote

The initial conditions of system (2.1) are given by

Let x(t, φ) = (x1(t, φ), x2(t, φ), …, xn(t, φ))^T denote any solution of the system (2.1) with initial value φ ∈ PC((−∞, 0], Rn).

Definition 2.2. A solution x(t, φ) of system (2.1) is said to be globally exponentially stable, if there exist two constants λ > 0, M > 0 such that

Definition 2.3. A real matrix A = (aij)n×n is said to be a nonsingular M-matrix if aij ≤ 0 (i, j = 1,2, …, n, i ≠ j), and all successive principal minors of A are positive.

Lemma 2.4 (see [17]). A matrix A with nonpositive off-diagonal elements is a nonsingular M-matrix if and only if there exists a vector p > 0 such that pTA > 0 or Ap > 0 holds.
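Definition 2.3 translates directly into a small numerical test. The following Python sketch (an illustration, not part of the original analysis) checks the sign pattern of the off-diagonal entries and the positivity of the successive principal minors; the helper names and the sample matrices are hypothetical. The function `times_vector` can be used to test a candidate positive vector p in the sense of Lemma 2.4.

```python
def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [[float(v) for v in row] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-14:
            return 0.0  # numerically singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def leading_principal_minors(matrix):
    """Determinants of the top-left k-by-k blocks for k = 1, ..., n."""
    n = len(matrix)
    return [determinant([row[:k] for row in matrix[:k]]) for k in range(1, n + 1)]


def is_nonsingular_m_matrix(matrix):
    """Check Definition 2.3: nonpositive off-diagonal entries, positive leading minors."""
    n = len(matrix)
    off_diagonal_ok = all(
        matrix[i][j] <= 0 for i in range(n) for j in range(n) if i != j
    )
    return off_diagonal_ok and all(m > 0 for m in leading_principal_minors(matrix))


def times_vector(matrix, p):
    """Matrix-vector product A p, used to test a candidate vector p > 0 (Lemma 2.4)."""
    return [sum(a * x for a, x in zip(row, p)) for row in matrix]


if __name__ == "__main__":
    sample = [[2.0, -1.0], [-1.0, 2.0]]  # hypothetical test matrix
    print(is_nonsingular_m_matrix(sample), times_vector(sample, [1.0, 1.0]))
```

In floating-point arithmetic a minor that is positive but very close to zero should be treated with caution, since rounding can change its sign.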

Lemma 2.5. Under assumptions (H1)–(H5), system (2.1) has a T-periodic solution which is globally exponentially stable, if the following conditions hold.

- (H6)

ℳ = A − C is a nonsingular M-matrix, where

- (H7)

ai((1 − γik)s) ≥ |1 − γik|ai(s), for all s ∈ R, i = 1,2, …, n.

Proof. Let x(t, ψ1) and x(t, ψ2) be two solutions of system (2.1) with initial values ψ1 = (φ1, φ2, …, φn) and ψ2 = (ζ1, ζ2, …, ζn) ∈ PC((−∞, 0], Rn), respectively.

Let

Now we define a Lyapunov function V(t) by

When t ≠ tk, k ∈ Z+, calculating the upper right derivative of V(t) along the solutions of (2.1), as in the proof of Theorem 3.1 in [10] for the case r → 1, vijl(t) = 0, we obtain from (2.12)–(2.15) that

We can always choose a positive integer N such that and define a Poincaré mapping P : C → C by P(ξ) = xT(ξ); we have

Remark 2.6. The result above also holds for (2.1) without impulses, in which case the existence and global exponential stability of the periodic solution of (2.1) do not depend on the amplification functions or on the inputs of the neurons. The results in [5] are more restrictive than Lemma 2.5 in this paper because the conditions in [5] depend on the amplification functions.

3. Periodic Solutions of CGBAMNNs with Distributed Delays and Impulses

, ; tk is called an impulsive moment and satisfies 0 < t1 < t2 < ⋯, lim k→+∞ tk = +∞; xi(t−), yj(t−) and xi(t+), yj(t+) denote the left-hand and right-hand limits at tk, respectively; we always assume , and .

- (H8)

Amplification functions ai(·) and cj(·) are continuous and there exist constants and such that .

- (H9)

bi(t, u) and dj(t, u) are T-periodic about the first argument; there exist continuous T-periodic functions αi(t) and βj(t) such that

(3.2)

for all x ≠ y, 1 ≤ i ≤ n, 1 ≤ j ≤ m.

- (H10)

For activation functions fj(·) and gi(·), there exist constants Lj and such that

(3.3)

- (H11)

The kernel functions kij(s) and are nonnegative continuous functions on [0, +∞) and satisfy

(3.4)

are differentiable functions for λ ∈ [0, rij) and ; respectively, , , and

- (H12)

There exists positive integer k0 such that and hold.

Let Z(t, ψ) = (x(t, ψ), y(t, ψ)) denote any solution of the system (3.1) with initial value ψ = (φ, ϕ) ∈ PC, x(t, ψ) = (x1(t, ψ), x2(t, ψ), …, xn(t, ψ)), y(t, ψ) = (y1(t, ψ), y2(t, ψ), …, ym(t, ψ)).

Theorem 3.1. Under assumptions (H8)–(H12), there exists a T-periodic solution which is asymptotically stable, if the following conditions hold.

- (H13)

The following ℳ is a nonsingular M-matrix, and

(3.6)

in which

(3.7)

- (H14)

ai((1 − γik)s) ≥ |1 − γik| ai(s), cj((1 − δjk)s) ≥ |1 − δjk| cj(s), for all s ∈ R, i = 1,2, …, n, j = 1,2, …, m.

Proof. Let

Initial conditions are given by

Then, we know from (3.8) and (3.11) that Theorem 3.1 holds.

If ai(xi(t)) = cj(yj(t)) = 1, bi(t, xi(t)) = bi(t)xi(t), and dj(t, yj(t)) = dj(t)yj(t), where bi(t) and dj(t) are positive continuous T-periodic functions for i = 1,2, …, n, j = 1,2, …, m, then system (3.1) reduces to the following Hopfield-type BAM neural networks model:

Corollary 3.2. Under assumptions (H9)–(H12), there exists a T-periodic solution which is globally asymptotically stable, if the following conditions hold.

-

The following ℳ is a nonsingular M-matrix, and

(3.14)

in which

(3.15)

-

0 ≤ γik ≤ 2, 0 ≤ δjk ≤ 2 for i = 1,2, …, n, j = 1,2, …, m, k ∈ Z+.

Proof. As bi(t, xi(t)) = bi(t)xi(t) and dj(t, yj(t)) = dj(t)yj(t), we obtain αi(t) = bi(t) and βj(t) = dj(t) in (), so () implies that () holds. Since ai(xi(t)) = cj(yj(t)) ≡ 1, condition () reduces to (). Corollary 3.2 then follows from Theorem 3.1.
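The bound on the impulse coefficients in Corollary 3.2 can be read off from condition (H14) as stated in Theorem 3.1. The short derivation below spells out this step for constant amplification functions equal to 1; it is offered as a reading aid.

```latex
% With a_i \equiv 1 and c_j \equiv 1, condition (H14) becomes
\begin{align*}
  a_i\big((1-\gamma_{ik})s\big) \ge |1-\gamma_{ik}|\, a_i(s)
    &\iff 1 \ge |1-\gamma_{ik}|
     \iff -1 \le 1-\gamma_{ik} \le 1
     \iff 0 \le \gamma_{ik} \le 2, \\
  c_j\big((1-\delta_{jk})s\big) \ge |1-\delta_{jk}|\, c_j(s)
    &\iff 1 \ge |1-\delta_{jk}|
     \iff 0 \le \delta_{jk} \le 2 .
\end{align*}
```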

Remark 3.3. The conditions for the existence and global exponential stability of the periodic solution of (3.1) without impulses do not depend on the inputs of the neurons or on the amplification functions. The results in [13, 14] are more restrictive than Theorem 3.1 in this paper because the conditions in [13, 14] depend on the amplification functions and on the inputs of the neurons. In addition, Corollary 3.2 is similar to Theorem 2.1 in [15]; our results generalize the results in [15].

Remark 3.4. In view of the proof of Theorem 3.1, the CGBAMNNs model is a special case of the CGNNs model in form, just as the BAM neural networks model is a special case of the Hopfield neural networks model. Hence many results for CGBAMNNs can be obtained directly from those for CGNNs, with no need to repeat the discussion. When system (3.1) reduces to an autonomous system, Theorem 3.1 still holds, which means that system (3.1) has an equilibrium which is globally asymptotically stable; in particular, many results in [18] can be obtained directly from the results in [19].

4. Two Simple Examples

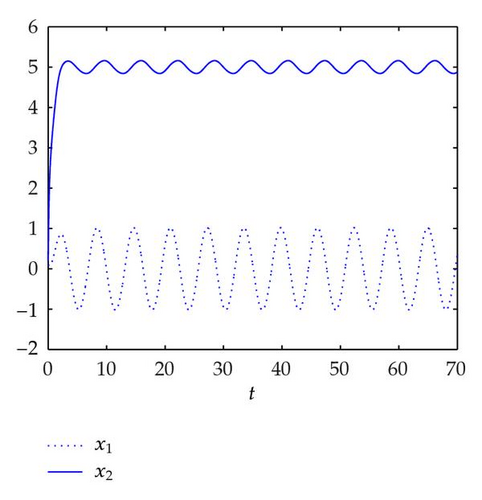

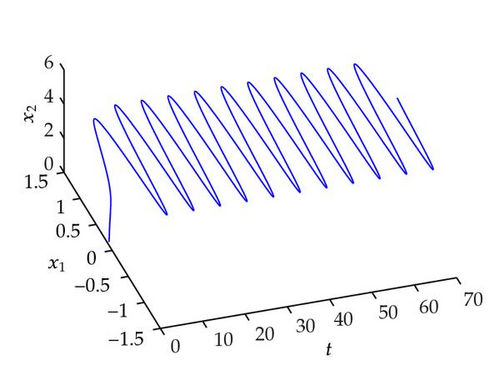

Example 4.1. Consider the following CGNNs model with distributed delays:

Note that

However, it is easy to check that system (4.1) does not satisfy the conditions of Theorem 4.3 or 4.4 in [5], so the theorems in [5] cannot be used to ascertain the existence and stability of periodic solutions of system (4.1).

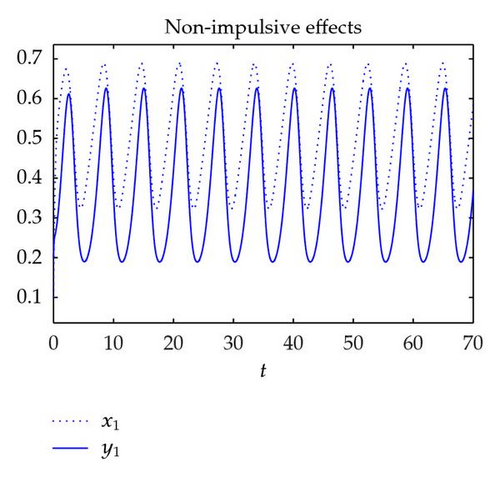

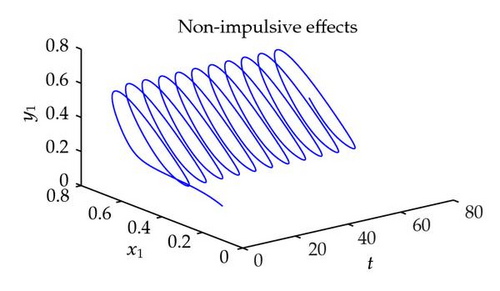

Example 4.2. Consider the following CGBAMNNs model with distributed delays and impulses:

Obviously, system (4.3) satisfies (H8)–(H12).

Case 1. γ1k = 0, δ1k = 0. Note that

However, it is easy to check that system (4.3) without impulses does not satisfy Theorem 1 in [13] and theorems in [14]; so theorems in [13, 14] cannot be used to ascertain the existence and stability of periodic solutions of system (4.3).

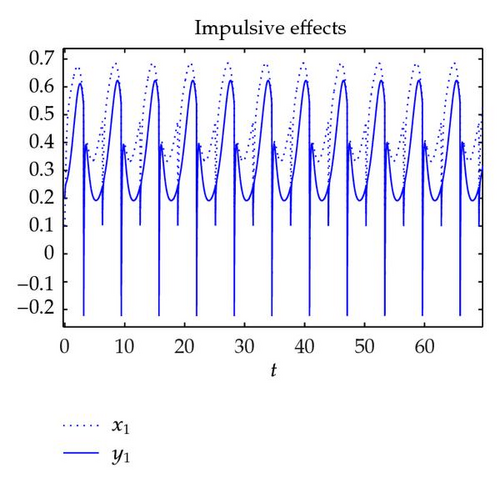

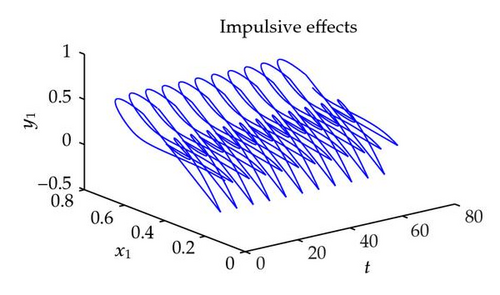

Case 2. γ1k = 0.7, δ1k = 1 − 0.5 sin(tk + 1). Note that a1(s) = 2 + sin s, c1(s) = 3 + cos s, and and , which means condition (H14) also holds for system (4.3). Hence, system (4.3) with impulses still has a 2π-periodic solution which is globally asymptotically stable. Figure 3 shows the dynamic behavior of system (4.3) with initial condition (0.1, 0.2).
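Condition (H14) for the data of Case 2 can be spot-checked on a grid. The Python sketch below is an illustration only: the helper names, the grid ranges, and the decision to sample the factor 1 − δ1k over the whole interval [−0.5, 0.5] (instead of fixing particular impulse times tk) are assumptions made for this check. A grid check cannot replace the analytic argument, which here is simple: 1 ≤ a1 ≤ 3 gives 0.3·a1(s) ≤ 0.9 < a1(0.3s), and 2 ≤ c1 ≤ 4 gives 0.5·c1(s) ≤ 2 ≤ c1(·).

```python
import math


def a1(s):
    """Amplification function a1 from Example 4.2, Case 2."""
    return 2.0 + math.sin(s)


def c1(s):
    """Amplification function c1 from Example 4.2, Case 2."""
    return 3.0 + math.cos(s)


def h14_margin(amp, factor, grid):
    """Smallest value of amp(factor*s) - |factor|*amp(s) over the grid.

    A nonnegative margin means the (H14)-type inequality holds at every grid point.
    """
    return min(amp(factor * s) - abs(factor) * amp(s) for s in grid)


def check_case_2():
    """Return the worst margins for a1 (gamma = 0.7) and c1 (|1 - delta| <= 0.5)."""
    grid = [-50.0 + 0.025 * n for n in range(4001)]  # s in [-50, 50]
    margin_a = h14_margin(a1, 1.0 - 0.7, grid)
    factors = [-0.5 + 0.025 * j for j in range(41)]  # samples of 1 - delta
    margin_c = min(h14_margin(c1, q, grid) for q in factors)
    return margin_a, margin_c


if __name__ == "__main__":
    print(check_case_2())
```

For c1 the inequality is tight: at s = 2π with 1 − δ1k = 0.5 both sides equal 2, so the margin for c1 can be arbitrarily close to zero while remaining nonnegative.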

This example illustrates the feasibility and effectiveness of the main results obtained in this paper. It also shows that the conditions for the existence and global exponential stability of the periodic solutions of CGBAMNNs without impulses do not depend on the inputs of the neurons or on the amplification functions. If impulsive perturbations are present, the periodic solutions still exist and are globally exponentially stable, provided that suitable restrictions are imposed on the impulsive perturbations.

5. Conclusions

In this paper, a class of CGBAMNNs with distributed delays and impulses is investigated by using a suitable Lyapunov functional, the properties of M-matrices, and a suitable mathematical transformation. Sufficient conditions that guarantee the uniqueness and global exponential stability of the periodic solutions of such networks are established without assuming the boundedness of the activation functions. Lemma 2.5 improves the results in [5], and Theorem 3.1 improves the results in [13, 14] and generalizes the results in [15]. In addition, we point out that the CGBAMNNs model is a special case of the CGNNs model, so many results for CGBAMNNs can be obtained directly from those for CGNNs, with no need to repeat the discussion. Our results are new, and two examples have been provided to demonstrate their effectiveness.

Acknowledgment

The authors would like to thank the editor and the reviewers for their valuable suggestions and comments, which greatly improved the original paper. This work was supported by the National Natural Science Foundation of China (no. 11071254).

Appendix

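    % Simulation without impulses: forward Euler with step h. The distributed
    % delay term uses the kernel exp(-s), truncated at 40 time units.
    clear
    T=70;
    N=7000;
    h=T/N;                  % time step
    m=round(40/h);          % number of history samples (kept an integer index)
    for i=1:m
        U(:,i)=[0.1; 0.2];  % constant initial history
    end
    for i=(m+1):(N+m)
        r(i)=i*h-40;        % current time
        x(i)=r(i);
        I=2;                % amplification of the first neuron
        J=2+cos(U(2,i-1));  % amplification of the second neuron
        A=[-I,0; 0,-J];
        B=[0,sin(x(i))*I; 0.3*J,0];
        % Euler step for the instantaneous (non-delayed) part
        U(:,i)=h*A*[(U(1,i-1)-0.2*tanh(U(1,i-1))); U(2,i-1)]+U(:,i-1);
        % Riemann sum for the distributed delay over the last m samples
        P(:,1)=[0; 0];
        for k=1:m
            P(:,1)=P(:,1)+h*exp(-(40-(k-1)*h))*[(tanh(U(1,i-m+k-1)));(tanh(U(2,i-m+k-1)))];
        end
        % add the delay term and the external input
        U(:,i)=U(:,i)+B*h*[(P(1,1));(P(2,1))]+h*[0; 5*J];
    end
    y=U(1,:);
    z=U(2,:);
    hold on
    plot(r,y,':')
    hold on
    plot(r,z)
    hold on
    plot3(r,y,z)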

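    % Simulation with impulses: same Euler scheme as above, with an impulsive
    % jump applied every 314 steps (every 3.14 time units).
    clear
    T=70;
    N=7000;
    h=T/N;                  % time step
    m=round(40/h);          % number of history samples (kept an integer index)
    for i=1:m
        U(:,i)=[0.1;0.2];   % constant initial history
    end
    for i=(m+1):(N+m)
        r(i)=i*h-40;        % current time
        x(i)=r(i);
        I=2+sin(U(1,i-1));  % amplification a1
        J=3+cos(U(2,i-1));  % amplification c1
        A=[-I,0;0,-J];
        B=[0,I*sin(x(i)); J*sin(x(i)),0];
        % Euler step for the instantaneous (non-delayed) part
        U(:,i)=h*A*[2*U(1,i-1);(3+cos(x(i)))*U(2,i-1)]+U(:,i-1);
        % Riemann sum for the distributed delay over the last m samples
        P(:,1)=[0;0];
        for k=1:m
            P(:,1)=P(:,1)+h*exp(-(40-(k-1)*h))*[(abs(U(1,i-m+k-1)));(abs(U(2,i-m+k-1)))];
        end
        % add the delay term and the external input
        U(:,i)=U(:,i)+B*h*[(P(1,1));(P(2,1))]+[I; J]*h;
        % impulsive jump: multiply the state by diag(0.3, 0.5*sin(t+1))
        if mod(i-m,314)==0
            U(:,i)=[0.3,0; 0,1/2*(sin(x(i)+1))]*U(:,i);
        end
    end
    y=U(1,:);
    z=U(2,:);
    hold on
    plot(r,y,':')
    hold on
    plot(r,z)
    hold on
    plot3(r,y,z)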
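The inner loop of both listings approximates the distributed delay integral by a Riemann sum with weights h·exp(−lag) for lags h, 2h, …, 40. The Python sketch below (a port of that one step only; the function names are hypothetical) reproduces these weights and compares their total with the geometric-series closed form. It illustrates how far the discrete weights are from the exact kernel mass of 1 for a given step size.

```python
import math


def delay_weights(step, horizon):
    """Weights step*exp(-lag), ordered from the oldest sample (lag = horizon)
    to the newest (lag = step), mirroring the appendix loop over k = 1..m."""
    count = round(horizon / step)
    return [step * math.exp(-(horizon - (k - 1) * step)) for k in range(1, count + 1)]


def closed_form_total(step, horizon):
    """Sum of step*exp(-j*step) for j = 1..m, evaluated as a geometric series."""
    count = round(horizon / step)
    ratio = math.exp(-step)
    return step * ratio * (1.0 - ratio ** count) / (1.0 - ratio)


if __name__ == "__main__":
    weights = delay_weights(0.01, 40.0)
    # Total discrete kernel mass for h = 0.01 and the geometric-series value.
    print(len(weights), sum(weights), closed_form_total(0.01, 40.0))
```

The shortfall from 1 is dominated by the step size h, since the truncated tail beyond lag 40 has mass e^(−40); a smaller step or a trapezoidal rule would reduce it.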