A Discrete Particle Swarm Optimization Algorithm for Uncapacitated Facility Location Problem

Abstract

A discrete version of particle swarm optimization (DPSO) is employed to solve uncapacitated facility location (UFL) problem which is one of the most widely studied in combinatorial optimization. In addition, a hybrid version with a local search is defined to get more efficient results. The results are compared with a continuous particle swarm optimization (CPSO) algorithm and two other metaheuristics studies, namely, genetic algorithm (GA) and evolutionary simulated annealing (ESA). To make a reasonable comparison, we applied to same benchmark suites that are collected from OR-library. In conclusion, the results showed that DPSO algorithm is slightly better than CPSO algorithm and competitive with GA and ESA.

1. Introduction

Efficient supply chain management has led to increased profit, increased market share, reduced operating cost, and improved customer satisfaction for many businesses. One strategic decision in supply chain management is facility location [1]. Location problems are classified into categories with some assumptions such as limiting the capacity and open number of sites. The uncapacitated facility location (UFL) problem assumes the cost of satisfying the client requirements has two components: a fixed cost of setting up a facility in a given site, and a transportation cost of satisfying the customer requirements from a facility. The capacities of all the facilities are assumed to be infinite [2].

1.1. Literature Review

There are many different titles for the UFL problem in the literature: the problem of a nonrecoverable tools optimal system [3], the standardization and unification problem [4], the location of bank accounts problem [5], warehouse location problem [6], uncapacitated facility location problem [7], and so on. The academic interest to investigate this mathematical model reasoned different interpretations. UFL problem is one of the most widely studied problems in combinatorial optimization problems thus there is a very rich literature in operations research (OR) for this kind of problem [8]. All important approaches relevant to UFL problems can be classified into two main categories: exact algorithms and metaheuristics-based methods.

There is a variety of exact algorithms to solve the UFL problem, such as branch and bound [6, 9], linear programming [10], Lagrangean relaxation algorithms [11], dual approach (DUALLOC ) of Erlenkotter [12], and the primal-dual approaches of Körkel [13]. Although Erlenkotter [12] developed this dual approach as an exact algorithm, it can also be used as a heuristic to find good solutions. It is obvious that since the UFL problem is NP-hard [14], exact algorithms may not be able to solve large practical problems efficiently. There are several studies to solve UFL problem with heuristics and metaheuristics methods. Alves and Almeida [15] proposed a simulated annealing algorithms and reported they produce high-quality solutions, but quite expensive in computation times. A new version of evolutionary simulated annealing algorithm (ESA) called distributed ESA presented by Aydin and Fogarty [16]. They stated that with implementing it they get good quality of solutions within short times. Another popular metaheuristic, tabu search algorithm, is applied by Al-Sultan and Al-Fawzan in [17]. Their application produces good solutions, but takes significant computing time and limits the applicability of the algorithm. Michel and Van Hentenryck [18] also applied tabu search and their proposed algorithm generates more robust solutions. Sun [19] examined tabu search procedure against the Lagrangean method and heuristic procedures reported by Ghosh [2]. Genetic algorithms (GA) are also applied by Kratica and Jaramillo [20, 21]. Finally, there are also artificial neural network approaches to solve UFL problems in Gen et al. [22] and Vaithyanathan et al. [23].

The particle swarm optimization (PSO) is one of the recent metaheuristics invented by Eberhart and Kennedy [24] based on the metaphor of social interaction and communication such as bird flocking and fish schooling. On one hand, it can be counted as an evolutionary method with its way of exploration via neighborhood of solutions (particles) across a population (swarm) and exploiting the generational information gained. On the other hand, it is different from other evolutionary methods in such a way that it has no evolutionary operators such as crossover and mutation. Another advantage is its ease of use with fewer parameters to adjust. In PSO, the potential solutions, the so-called particles, move around in a multidimensional search space with a velocity, which is constantly updated by the particle′s own experience and the experience of the particle′s neighbors or the experience of the whole swarm. PSO has been successfully applied to a wide range of applications such as function optimization, neural network training [25], task assignment [26], and scheduling problem [27, 28].

Since PSO is developed for continuous optimization problem initially, most existing PSO applications are resorting to continuous function value optimization [29–32]. Recently, a few researches applied PSO for discrete combinatorial optimization problems [26–28, 33, 34, 35, 36, 37].

1.2. UFL Problem Definition

Constraint (2) makes sure that all customers demands have been met by an open facility and (3) is to keep integrity. Since it is assumed that there is no capacity limit for any facility, the demand size of each customer is ignored, and therefore (2) established without considering demand variable.

It is obvious that since the main decision in UFL is opening or closing facilities, UFL problems are classified in discrete problems. On the other hand, PSO is mainly designed for continuous problem thus it has some drawbacks when applying PSO for a discrete problem. This tradeoff increased our curiosity to apply PSO algorithm for solving UFL problem.

The organization of the paper is as follows: in Section 2, the implementation of both continuous and discrete PSO algorithms for UFL problem is given with the details of how a local search procedure is embedded. Section 3 reports the experimental settings and results. There are three sets of comparisons: the first is between CPSO and DPSO algorithms; the second is between CPSO with local search (CPSOLS) and DPSO with local search (DPSOLS) algorithms; and the third is among DPSOLS with two other algorithms from the literature. Finally, Section 4 provides with the conclusion.

2. PSO Algorithms for UFL Problem

As mentioned Section 1, PSO is one of the population-based optimization technique inspired by nature. It is a simulation of social behaviour of a swarm, that is, bird flocking, fish schooling. Suppose the following scenario: a flock of bird is randomly searching for food in an area, where there is only one piece of food available and none of them knows where it is, but they can estimate how far it would be at each iteration. The problem here is “what is the best strategy to find and get the food?”. Obviously, the simplest strategy is to follow the bird known as the nearest one to the food. PSO inventers were inspired of such natural process-based scenarios to solve the optimization problems. In PSO, each single solution, called a particle, is considered as a bird, the group becomes a swarm (population) and the search space is the area to explore. Each particle has a fitness value calculated by a fitness function, and a velocity of flying towards the optimum, the food. All particles fly across the problem space following the particle nearest to the optimum. PSO starts with initial population of solutions, which is updated iteration-by-iteration. Therefore, PSO can be counted as an evolutionary algorithm besides being a metaheuristics method, which allows exploiting the searching experience of a single particle as well as the best of the whole swarm.

2.1. Continuous PSO Algorithm for UFL Problem

The continuous particle swarm optimization (CPSO) algorithm used here is proposed by the authors, Sevkli and Guner [33]. The CPSO considers each particle has three key vectors: position (Xi), velocity (Vi), and open facility (Yi). Xi denotes the ith position vector in the swarm, Xi = [xi1, xi2, xi3, …, xin], where xik is the position value of the ith particle with respect to the kth dimension (k = 1, 2, 3, …n). Vi denotes the ith velocity vector in the swarm, Vi = [vi1, vi2, vi3, …, vin], where vik is the velocity value of the ith particle with respect to the kth dimension. Yi represents the opening or closing facilities based on the position vector (Xi), Yi = [yi1, yi2, yi3, …, yin], where yik represents opening or closing kth facility of the ith particle. For an n-facility problem, each particle contains n number of dimensions.

| ith particle vectors | Particle dimension (k) | ||||

|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | |

| Position vector (Xi) | 1.8 | 3.01 | −0.99 | 0.72 | −5.45 |

| Velocity vector (Vi) | −0.52 | 2.06 | 3.56 | 2.45 | −1.44 |

| Open facility vector (Yi) | 1 | 1 | 0 | 0 | 1 |

Considering the 5-facility to 6-customer example shown in Table 2, the total cost of open facility vector (Yi) can be calculated as follows:

| Facility locations | 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|---|

| Fixed cost | 12 | 5 | 3 | 7 | 9 | |

| Customers | 1 | 2 | 3 | 6 | 7 | 1 |

| 2 | 0 | 5 | 8 | 4 | 12 | |

| 3 | 11 | 6 | 14 | 5 | 8 | |

| 4 | 19 | 18 | 21 | 16 | 13 | |

| 5 | 3 | 9 | 8 | 7 | 10 | |

| 6 | 4 | 7 | 9 | 6 | 0 | |

For each particle in the swarm, let define Pi = [pi1, pi2, …, pin], as the personal best, where pik denotes the position value of the ith personal best with respect to the kth dimension. The personal bests are determined just after generating Yi vectors and their corresponding fitness values. In every generation, the personal best of each particle is updated based on its position vector and fitness value. Regarding the objective function, fi(Yi ← Xi), the fitness values for the personal best of the ith particle, Pi, is denoted by . At the beginning, the personal best values are equal to position values (Pi = Xi), explicitly Pi = [pi1 = xi1, pi2 = xi2, pi3 = xi3, …, pin = xin] and the fitness values of the personal bests are equal to the fitness of positions, .

Then the best particle in the whole swarm is selected as the global best. G = [g1, g2, g3, …, gn] denotes the best position of the globally best particle, fg = f(Y ← G), achieved so far in the whole swarm. At the beginning, global best fitness value is determined as the best of personal bests over the whole swarm, , with its corresponding position vector Xg, which is to be used for G = Xg, where G = [g1 = xg1, g2 = xg2, g3 = xg3, …, gn = xgn] and corresponding Yg = [yg1, yg2, yg3, …, ygn] denotes the open facility vector of the global best found.

After updating position values for all particles, the corresponding open facility vectors can be determined by their fitness values in order to start a new iteration if the predetermined stopping criterion has not met yet. In this study, we employed the gbest model of Kennedy et al. [38] for CPSO , which is elaborated in the pseudocode given below.

2.2. Discrete PSO Algorithm for UFL Problem

The discrete PSO (DPSO) algorithm used here is first proposed by Pan et al. [37] for the no-wait flowshop scheduling problem. We employed the DPSO algorithm for UFL problem. In DPSO, each particle is based on only the open facility vector Yi = [yi1, yi2, yi3, …, yin], where yik represents opening or closing kth facility of the ith particle. For an n-facility problem, each particle contains n number of dimensions. The dimensions of Yi are binary random numbers. The fitness of the ith particle is calculated by fi(Yi).

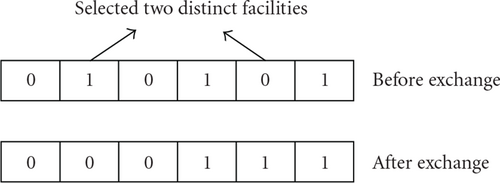

Equation (10) consists of three components: the first component (11) is the velocity of the particle. F1 represents the exchange operator (Figure 1) which is selecting two distinct facilities from the open facility vector, , of particle and swapping randomly with the probability of w . In other words, a uniform random number, r, is generated between 0 and 1. If r is less than w then the exchange operator is applied to generate a perturbed Yi vector of the particle by , otherwise current Yi is kept as .

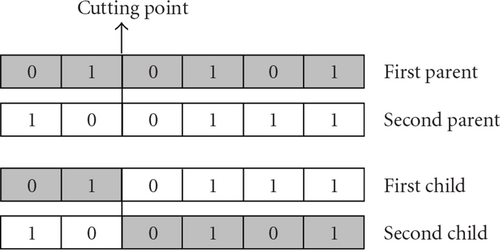

The second component (12) is the cognition part of the particle representing particle’s own experience. F2 represents the one-cut crossover (Figure 2) with the probability of c1. Note that and will be the first and second parents for the crossover operator, respectively. It is resulted either in or in depending on the choice of a uniform random number

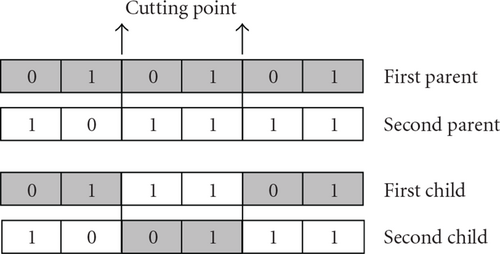

The third component (13) is the social part of the particle representing experience of whole swarm. F3 represents the two-crossover (Figure 3) operator with the probability of c2. Note that and Gt−1 will be the first and second parents for the crossover operator, respectively. It is resulted either in or in depending on the choice of a uniform random number. In addition, one-cut and two-cut crossovers produce two children. In this study, we selected one of the children randomly.

The corresponding Yi vectors are determined with their fitness values so as to start a new iteration if the predetermined stopping criterion has not met yet. We apply the gbest model of Kennedy and Eberhart for DPSO. The pseudocode of DPSO is given in Algorithm 2.

-

Algorithm 1: Pseudocode of CPSO algorithm for UFL problem.

-

Begin

-

Initialize particles (population) randomly

-

For each particle

-

Calculate open facility vectors (1)

-

Calculate fitness value using open facility vector

-

Set to position vector and fitness value

-

as personal best ()

-

Select the best particle and its position

-

vector as global(Gt)

-

End

-

Do {

-

Update inertia weight

-

For each particle

-

Update velocity (8)

-

Update position(9)

-

Find open facility vectors

-

Calculate fitness value using open

-

facility vector (1)

-

Update personal best()

-

Update the global best (Gt) value with

-

position vector

-

End

-

Apply local search (for CPSOLS) to global best

-

} While (Maximum Iteration is not reached)

-

End

-

Algorithm 2: Pseudocode of DPSO algorithm for UFL problem.

-

Begin

-

Initialize open facility vector

-

Calculate fitness value using Yi(1)

-

Do

-

Find personal best ()

-

Find global best (Gt)

-

For Each Particle

-

Apply velocity component (11)

-

Apply cognition component (12)

-

Apply social component (13)

-

Calculate fitness value using Yi(1)

-

Apply local search (for DPSOLS)

-

to global best

-

While (Maximum Iteration is not reached)

-

End

2.3. Local Search for CPSO and DPSO Algorithm

Apparently, CPSO and DPSO conduct such a rough search that they produce premature results, which do not offer satisfactory solutions. For this reason, it is inevitable to embed a local search algorithm to CPSO and DPSO so as to produce more satisfactory solutions. In this study, we have applied a simple local search method to neighbours of the global best particle in every generation. In CPSO global best has three vectors, so local search is applied to the position vector (Xi). Since DPSO has one vector. Local search is applied only this vector (Yi).

The local search algorithm applied in this study is sketched in Algorithm 3. The global best found at the end of each iteration of CPSO and DPSO is adopted as the initial solution by the local search algorithm. In order not to lose the best found and to diversify the solution, the global best is modified with two facilities (η and κ) which are randomly chosen. Then flip operator is applied to as long as it gets a better solution. The final produced solution, s, is replaced with the old global best if its fitness value is better than the initial one.

-

Algorithm 3: Pseudocode for local search.

-

Begin

-

Set globalbest open facility vector (Yg)

-

to s0 (for DPSOLS)

-

Set globalbest position vector (Xg)

-

to s0 (for CPSOLS)

-

Modify s0 based on η,κ and set to s

-

Set 0 to loop

-

Repeat:

-

Apply Flip to s and get s1

-

if (f(s1)≤f(s0))

-

Replace s with s1

-

else

-

loop = loop + 1

-

Until loop < n is false.

-

if (f(s)≤f(s0)) Replace Yg with s (for DPSOLS)

-

if (f(s)≤f(s0)) Replace Xg with s (for CPSOLS)

-

End.

3. Experimental Results

| Benchmarks | ||

|---|---|---|

| Problems | Size (m × n) | Optimum |

| Cap71 | 16×50 | 932615.75 |

| Cap72 | 16×50 | 977799.40 |

| Cap73 | 16×50 | 1010641.45 |

| Cap74 | 16×50 | 1034976.98 |

| Cap101 | 25×50 | 796648.44 |

| Cap102 | 25×50 | 854704.20 |

| Cap103 | 25×50 | 893782.11 |

| Cap104 | 25×50 | 928941.75 |

| Cap131 | 50×50 | 793439.56 |

| Cap132 | 50×50 | 851495.33 |

| Cap133 | 50×50 | 893076.71 |

| Cap134 | 50×50 | 928941.75 |

| CapA | 100×1000 | 17156454.48 |

| CapB | 100×1000 | 12979071.58 |

| CapC | 100×1000 | 11505594.33 |

| CPSO | DPSO | |||||

|---|---|---|---|---|---|---|

| Problem | ARPE | HR | ACPU | ARPE | HR | ACPU |

| Cap71 | 0.026 | 0.83 | 0.1218 | 0.000 | 1.00 | 0.0641 |

| Cap72 | 0.050 | 0.83 | 0.1318 | 0.000 | 1.00 | 0.0651 |

| Cap73 | 0.034 | 0.73 | 0.1865 | 0.000 | 1.00 | 0.0708 |

| Cap74 | 0.095 | 0.00 | 0.1781 | 0.000 | 1.00 | 0.0693 |

| Cap101 | 0.183 | 0.00 | 0.8818 | 0.000 | 1.00 | 0.3130 |

| Cap102 | 0.135 | 0.33 | 0.7667 | 0.000 | 1.00 | 0.3062 |

| Cap103 | 0.145 | 0.00 | 0.9938 | 0.000 | 1.00 | 0.3625 |

| Cap104 | 0.286 | 0.60 | 0.6026 | 0.002 | 0.93 | 0.2021 |

| Cap131 | 0.911 | 0.00 | 3.6156 | 0.173 | 0.13 | 2.5464 |

| Cap132 | 0.756 | 0.00 | 3.5599 | 0.090 | 0.17 | 2.6328 |

| Cap133 | 0.496 | 0.00 | 3.7792 | 0.042 | 0.43 | 2.5292 |

| Cap134 | 0.691 | 0.23 | 3.3333 | 0.000 | 1.00 | 1.7167 |

| CapA | 21.242 | 0.00 | 29.5739 | 8.654 | 0.00 | 24.8972 |

| CapB | 10.135 | 0.00 | 27.1318 | 4.918 | 0.00 | 22.0652 |

| CapC | 8.162 | 0.00 | 27.6149 | 4.545 | 0.00 | 23.1340 |

Obviously, the higher the HR the better quality of solution, while the lower the ARPE the better quality. The computational time spent for CPSO [33] and DPSO cases are obtained as time to get best value over 1000 iterations, while for CPSOLS and DPSOLS cases are obtained as time to get best value over 250 iterations. All algorithms and other related software were coded with Borland C++ Builder 6 and run on an Intel Centrino 1.7 GHz PC with 512 MB memory.

There are fewer parameters used for the DPSO and DPSOLS algorithms and they are as follows: the size of the population (swarm) is the number of facilities, the social and cognitive probabilities, c1 and c2, are set as c1=c2 = 0.5, and inertia weight, w, is set to 0, 9. Each problem solution run is conducted for 30 replications. There were two termination criteria that have been applied for every run: first, one is getting the optimum solution, the other is reaching the maximum iteration number that is chosen for obtaining the result in a reasonable CPU time.

The performance of CPSO algorithm looks not very impressive as the results produced within the range of time over 1000 iterations. The CPSO search found 6 optimal solutions, whereas the DPSO algorithm found 12 among 15 benchmark problems. The ARPE index which is expected lower for good solution quality is very high for CPSO when applied CapA, CapB, and CapC benchmarks and none of the attempts for these benchmarks hit the optimum value. As come to the ARPE index of DPSO, it is better than the ARPE index of CPSO, but not satisfactory as expected. In term of CPU, DPSO is better than CPSO as well. When the results are investigated statistically with using the t-test with 99% levels of confidence, the DPSO produced significantly better fitness results than CPSO when CapA, CapB, and CapC fitness results are excluded. It may be possible to improve the solutions quality by carrying on with algorithms for a further number of iterations, but, then the main idea and useful motivation of employing the heuristics, that is, getting a better quality within shorter time, will be lost. This fact imposed that it is essential to empower CPSO and DPSO with hybridizing with a local search algorithm. Thus a simple local search algorithm is employed in this case for that purpose, as mentioned before.

The results of CPSOLS and DPSOLS are shown in Table 5. The performance of CPSOLS and DPSOLS looks very impressive compared to the CPSO and the DPSO algorithms with respect of all three indexes. HR is 1.00 which means 100% of the runs yield with optimum for all benchmark except CapB and CapC for CPSOLS and except CapA, CapB, and CapC for DPSOLS. On the other hand, it should be mentioned that DPSOLS found optimum solutions of all instances, while CPSOLS found optimums except for CapC. The ARPE index results of CPSOLS and DPSOLS are very small for both algorithms and very similar to each other thus there is no meaningful difference. When the results are compared statistically with using the t-test with 99% levels of confidence, the CPSOLS and DPSOLS can be considered as equal. In term of CPU, CPSOLS consumed 18% more time than DPSOLS thus we can say that the results of DPSOLS are slightly better than CPSOLS.

| CPSOLS | DPSOLS | |||||

|---|---|---|---|---|---|---|

| Problem | ARPE | HR | ACPU | ARPE | HR | ACPU |

| Cap71 | 0.000 | 1.00 | 0.0146 | 0.000 | 1.00 | 0.0130 |

| Cap72 | 0.000 | 1.00 | 0.0172 | 0.000 | 1.00 | 0.0078 |

| Cap73 | 0.000 | 1.00 | 0.0281 | 0.000 | 1.00 | 0.0203 |

| Cap74 | 0.000 | 1.00 | 0.0182 | 0.000 | 1.00 | 0.0109 |

| Cap101 | 0.000 | 1.00 | 0.1880 | 0.000 | 1.00 | 0.1505 |

| Cap102 | 0.000 | 1.00 | 0.0906 | 0.000 | 1.00 | 0.0557 |

| Cap103 | 0.000 | 1.00 | 0.2151 | 0.000 | 1.00 | 0.1693 |

| Cap104 | 0.000 | 1.00 | 0.0370 | 0.000 | 1.00 | 0.0344 |

| Cap131 | 0.000 | 1.00 | 1.4281 | 0.000 | 1.00 | 0.4922 |

| Cap132 | 0.000 | 1.00 | 1.0245 | 0.000 | 1.00 | 0.2745 |

| Cap133 | 0.000 | 1.00 | 1.3651 | 0.000 | 1.00 | 0.4516 |

| Cap134 | 0.000 | 1.00 | 0.3635 | 0.000 | 1.00 | 0.0594 |

| CapA | 0.037 | 1.00 | 16.3920 | 0.051 | 0.53 | 14.5881 |

| CapB | 0.327 | 0.63 | 19.6541 | 0.085 | 0.40 | 17.6359 |

| CapC | 0.091 | 0.00 | 17.4234 | 0.036 | 0.13 | 15.7685 |

The experimental study is also carried out as a comparative work in Table 6. A genetic algorithm (GA) introduced by Jaramillo et al. [21] and an evolutionary simulated annealing algorithm (ESA) proposed by Aydin and Fogarty [16] are taken for the comparison. These algorithms were coded and run under the same condition with the DPSOLS algorithm. The performance of DPSOLS algorithm looks slightly better than GA in both two indexes. Especially, in respect of CPU time DPSOLS much more better than GA. Comparing with ESA, both algorithms deviations from optimum are very similar. However, especially for CapA, CapB, and CapC; GA and ESA consume more CPU time than DPSOLS algorithm.

| Deviation from optimum [33] | Average CPU | |||||

|---|---|---|---|---|---|---|

| Problem | GA [21] | ESA [16] | DPSOLS | GA [21] | ESA [16] | DPSOLS |

| Cap71 | 0.00 | 0.00 | 0.000 | 0.287 | 0.041 | 0.0130 |

| Cap72 | 0.00 | 0.00 | 0.000 | 0.322 | 0.028 | 0.0078 |

| Cap73 | 0.00033 | 0.00 | 0.000 | 0.773 | 0.031 | 0.0203 |

| Cap74 | 0.00 | 0.00 | 0.000 | 0.200 | 0.018 | 0.0109 |

| Cap101 | 0.00020 | 0.00 | 0.000 | 0.801 | 0.256 | 0.1505 |

| Cap102 | 0.00 | 0.00 | 0.000 | 0.896 | 0.098 | 0.0557 |

| Cap103 | 0.00015 | 0.00 | 0.000 | 1.371 | 0.119 | 0.1693 |

| Cap104 | 0.00 | 0.00 | 0.000 | 0.514 | 0.026 | 0.0344 |

| Cap131 | 0.00065 | 0.00008 | 0.000 | 6.663 | 2.506 | 0.4922 |

| Cap132 | 0.00 | 0.00 | 0.000 | 5.274 | 0.446 | 0.2745 |

| Cap133 | 0.00037 | 0.00002 | 0.000 | 7.189 | 0.443 | 0.4516 |

| Cap134 | 0.00 | 0.00 | 0.000 | 2.573 | 0.079 | 0.0594 |

| CapA | 0.00 | 0.00 | 0.051 | 184.422 | 17.930 | 14.5881 |

| CapB | 0.00172 | 0.00070 | 0.085 | 510.445 | 91.937 | 17.6359 |

| CapC | 0.00131 | 0.00119 | 0.036 | 591.516 | 131.345 | 15.7685 |

4. Conclusion

In this paper, one of the recent metaheuristics algorithms called DPSO is applied to solve UFL benchmark problems. The algorithm has been tested on several benchmark problem instances and optimal results are obtained in a reasonable computing time. The results of DPSO with local search (DPSOLS) are compared with results of a CPSO [33] with local search (CPSOLS) and two other metaheuristics approaches, namely, GA [21] and ESA [16]. It is concluded that the DPSOLS is slightly better than the CPSOLS and competitive with GA and ESA.

The main purpose of this paper is testing performance of CPSO and DPSO algorithms under the same condition. When compared CPSO, DPSO proves to be a better algorithm for UFL problems. It also should be noted that, since CPSO considers each particle based on three key vectors; position (Xi), velocity (Vi), and open facility (Yi). So, CPSO allocates more memory than DPSO for each particle. In addition, to the best our knowledge, this is the first application of discrete PSO algorithm applied to the UFL problem.