New insights for preventing transfusion-transmitted cytomegalovirus and other white blood cell–associated viral infections

Transfusion-transmitted viruses that reside primarily in plasma, such as human immunodeficiency virus (HIV), hepatitis B virus, and hepatitis C virus, have been the focus of significant research, regulatory, and clinical attention. In this issue of TRANSFUSION, a series of articles addresses another set of transmissible viruses that have typically received less attention: human T-lymphotropic virus (HTLV), human herpesvirus (HHV)-8, and cytomegalovirus (CMV). These viruses share a common characteristic: they are white blood cell (WBC) associated. In aggregate, the articles address the effective use of serology, leukoreduction (LR), and other approaches to interdict such blood-borne pathogens.

Hakre and colleagues1 describe a case of HTLV-1 transmission after transfusion of an injured US soldier in Afghanistan. Under an emergency whole blood transfusion program, military practitioners transfuse unscreened (and non-LR) blood components when screened products are not available, and recipients are later screened retrospectively for viral infection. In this case, the recipient was found to be HTLV-positive, and detailed molecular analyses strongly supported transmission from one of the unscreened donors, whose HTLV strain showed significant genetic similarity to the strain found in the recipient. This case is the first reported transmission of HTLV-1 by transfusion in the US military during combat operations, and it highlights the continuing importance of serologic screening. In the US civilian population, HTLV serology (implemented in 1988) has an impressive track record: the last case of transfusion-transmitted HTLV-1 occurred in 1989.

A second article in this issue2 provides evidence that LR also plays an important role in reducing the risk of HTLV transmission. Hewitt and coworkers performed an HTLV lookback investigation within the NHS Blood and Transplant programs. HTLV screening by serology was introduced in the United Kingdom in 2002, and donors found to be seropositive between 2002 and 2011 were included in this study (n = 194). Sixty-four of these individuals had donated untested units before serology was introduced (837 blood components in total). Samples were obtained from 114 recipients of these components, and HTLV infection consistent with transfusion transmission was confirmed in six of them. When recipients transfused after universal LR was introduced in 1998 were compared with recipients of non-LR units transfused before 1998, LR was associated with a 93% reduction in HTLV transmission. The maximum HTLV transmission rate with LR units was estimated at 3.7% (with pre-2002 filtration technologies). This estimate may actually overstate the residual risk, and hence understate the efficacy of LR, because the single HTLV-positive recipient of LR units in the study by Hewitt and colleagues had other risk factors for viral infection.

HHV-8 is another WBC-associated virus that is readily transmitted by transfusion. In the absence of screening and LR, for example, a Ugandan transfusion study found an HHV-8 transmission rate of 2.8%. To investigate the potential efficacy of LR against HHV-8 transmission, Dollard and colleagues3 (disclosure: JDR is a coauthor on this publication) collected blood from HIV-positive men with Kaposi sarcoma (KS, associated with high HHV-8 levels) and measured plasma and blood HHV-8 viral loads before and after LR. Of 12 blood samples from KS donors with detectable HHV-8, the seven samples with only WBC-associated viral DNA (no detectable plasma virus) had no detectable viral DNA after LR. In the remaining five samples, however, LR reduced WBCs by 99.9% but reduced HHV-8 viral load by only approximately 90%, and viral DNA remained detectable. Further investigation showed that these five samples had plasma viremia (averaging 8% of total virus) and that postfiltration total viral loads approximated the prefiltration plasma viral loads. Thus, the work of Dollard and colleagues suggests that LR efficiently removes WBC-associated HHV-8 but is relatively ineffective against cell-free virus in plasma (consistent with the results of Furui and colleagues, see below).
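The gap between the 99.9% WBC reduction and the roughly 90% viral load reduction can be checked with a simple mass balance. As a rough sketch, assume that LR removes WBC-associated HHV-8 in proportion to WBCs (99.9%) and removes no cell-free plasma virus, and let $f$ denote the plasma fraction of total virus, taken here as the reported average of about 8% (individual samples will differ); $V_{\text{pre}}$ and $V_{\text{post}}$ are total viral loads before and after LR:

$$
\frac{V_{\text{post}}}{V_{\text{pre}}} = f + (1 - f)(1 - 0.999) \approx 0.08 + 0.92 \times 0.001 \approx 0.081
$$

Under these assumptions, the expected reduction is about $1 - 0.081 \approx 92\%$, close to the approximately 90% observed, and the residual load is almost entirely the prefiltration plasma virus ($f\,V_{\text{pre}}$), in line with the finding that postfiltration totals approximated prefiltration plasma levels.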

Although HTLV and HHV-8 are certainly of concern to transfusion medicine, transfusion-transmitted CMV (TT-CMV) is usually considered to have greater clinical implications, and accordingly more work has been done on this problem. The standard approaches to preventing TT-CMV include the use of seronegative donors (which is effective but raises the usual concerns about the viral window phase) and transfusion of LR units from unscreened donors (which carries the risks of using CMV-infected donors).4 Ziemann and colleagues recently proposed a novel third strategy for providing CMV-safe transfusions: providing LR units only from donors who seroconverted at least 1 year earlier. This approach avoids recently infected (seroconverting) donors, who are known to have the highest viral loads (including cell-free CMV in plasma).

In this issue of TRANSFUSION, Ziemann and colleagues5 provide additional data supporting this strategy. CMV serology and nucleic acid testing (NAT) were performed on samples from approximately 23,000 whole blood donations, and serology results were compared with other donations from the same individual to approximate the timing of seroconversion. Ten samples were reproducibly positive for plasma CMV DNA and could be divided into three groups: 1) window period donations, 2) early seroconversion donations, and 3) remotely infected donations. One of the most significant findings was that plasma CMV DNA was found most often (n = 7) and at the highest levels (median, 50 IU/mL) in Group 2, the donors who had very recently seroconverted. Although the investigators did not perform viral cultures, other studies6 suggest that this period of peak viral DNA is associated with infectious virus in the plasma. By contrast, Groups 1 and 3 accounted for only two donors and one donor with detectable plasma CMV DNA, respectively, and only at very low levels (median, 40 and <30 IU/mL, respectively). Thus, seronegative donors in the window period of CMV infection may present only a low risk of transmitting CMV. The results also support the use of LR blood from remotely infected donors, since their incidence of viremia from CMV reactivation is comparable to, or lower than, that of window-period seronegative donors. The investigators also noted that most remotely infected donors have neutralizing CMV antibodies in their plasma, which may provide additional protection against CMV transmission when this latter mitigation strategy is used.
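As a rough sense of scale, assuming each of the ten reproducibly DNA-positive samples represents a separate donation among the approximately 23,000 tested (so the denominator is itself approximate):

$$
\hat{p}_{\text{DNA+}} \approx \frac{10}{23{,}000} \approx 4.3 \times 10^{-4} \;(\approx 1 \text{ in } 2{,}300 \text{ donations}), \qquad \frac{n_{\text{Group 2}}}{n_{\text{DNA+}}} = \frac{7}{10} = 70\%
$$

On this estimate, window-period and remotely infected donors together contributed only 3 of the 10 DNA-positive donations.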

The article by Furui and coworkers7 provides complementary data on TT-CMV risk. The investigators studied Japanese blood donors to determine how seroprevalence and CMV DNA positivity change across decades of life. Overall, 77% of donors were seropositive, and nearly 100% of those over 60 years of age were seropositive. These percentages are higher than in many other countries, which may have increased the yield of the second part of the study. There, the investigators found CMV DNA most frequently in donors older than 60 years (including in the plasma fraction) and, consistent with the findings of Ziemann and colleagues, the highest CMV DNA prevalence in IgM-positive (recently seroconverted) donors. Additionally, units from donations with CMV DNA in plasma still had detectable CMV DNA after LR, lending support to the contention of Dollard and coworkers3 that LR does not significantly reduce plasma viral DNA (or, likely, plasma virus).

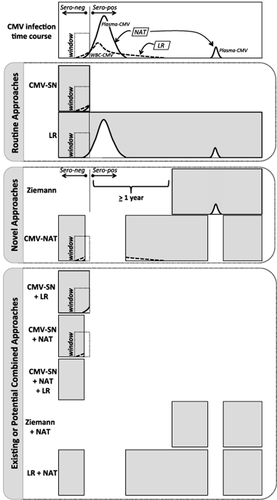

Taken together, the data from these articles improve our understanding of the advantages and drawbacks of various strategies to prevent WBC-associated infections (Fig. 1). Although the following discussion focuses on CMV, the same considerations could also apply to preventing HTLV and HHV-8 transmission. The top panel of Fig. 1 is a schematic time course of CMV infection in a hypothetical donor.8 After infection, and shortly before seroconversion, CMV DNA becomes detectable in plasma (solid line) and WBCs (dashed line). However, most of the plasma and WBC viral phases, including the peak viral levels in each compartment, occur after seroconversion.9 CMV DNA usually remains detectable until 90 to 120 days after seroconversion. Because CMV DNA is only rarely detectable in WBCs of remotely infected donors, WBC CMV is not shown after the end of primary infection. Remotely infected seropositive individuals can, however, experience CMV reactivation,5, 7 which is shown as a transient plasma CMV spike at the right edge of the time course. In the following discussion, this time course is used to illustrate the effects of different approaches to providing CMV-safe blood.

Fig. 1. Markers of donor CMV infection and approaches to prevent transfusion-transmitted infections. (Top) Schematic representation of the kinetics of CMV antibody, plasma CMV DNA, and WBC CMV DNA after primary infection in a hypothetical donor. Seroconversion is shown as a vertical line, and the preseroconversion window period is highlighted. The application of NAT and LR methods to interdict units with plasma CMV DNA and WBC-associated virus, respectively, is also shown. (First tab) Routine approaches to reduce risks of TT-CMV: use of CMV-seronegative units (CMV-SN) or LR of unscreened cellular components (LR). The width of the gray box illustrates the proportion of the “donor lifetime” during which the donor can provide units for patients at risk for CMV infection. The truncated gray box for CMV-SN shows that after seroconversion this donor can no longer provide “CMV-safe” units based on serologic testing. The superimposed white curves (taken from the top panel) illustrate likely causes of the residual risk of CMV infection with either of these approaches. (Second tab) Novel approaches to abrogate TT-CMV: Ziemann's proposal or use of CMV NAT. The former can be considered the approximate inverse of the CMV-SN strategy, since the blood center would wait until 1 year after seroconversion before using LR blood from this donor for CMV at-risk patients. With the CMV NAT approach, the gaps in the gray box indicate the times during the donor's lifetime when donated units would be interdicted (because of the presence of plasma CMV DNA) and not considered CMV-safe. (Third tab) Potential efficacies of existing or novel combined strategies to prevent TT-CMV. See the text for further discussion.

Serology and LR are the current routine strategies for supplying CMV-safe blood (Fig. 1, first tab), although neither provides absolute protection against CMV transmission.4 For CMV-seronegative units (Fig. 1, CMV-SN), residual risk is due to CMV (typically inferred from viral DNA) in the plasma and WBC compartments during the window phase. For unselected LR units, CMV transmission may occur because plasma virus present during viremic spikes is not captured by LR filters.3, 7 Although some CMV DNA may not be associated with intact viral particles, detectable CMV DNA should be treated as the worst-case indicator of infectious virus. Note in Fig. 1 that blood collected from this hypothetical CMV-infected donor over his or her entire “donation lifetime” can be leukoreduced to prepare CMV-safe units; by contrast, under the serology approach, his or her blood can be used for at-risk recipients only before seroconversion.

The novel Ziemann approach (Fig. 1, second tab) allows the hypothetical donor to provide CMV-safe units for a relatively long period of his or her donation lifetime (depending on when seroconversion occurs), but it does not address the small but potentially significant risk of CMV reactivation producing cell-free plasma virus that LR does not interdict. Although the data of Ziemann and coworkers suggest that this risk is small,5 the work of Furui and coworkers7 suggests that the concern may be more significant.

Although not in routine use (and therefore considered “novel” for this discussion), CMV NAT could also be used to prepare CMV-safe units. Here we focus on plasma NAT to detect CMV infection and reactivation, for two reasons: first, we have previously published on the performance problems of CMV NAT assays applied to WBC fractions;10 second, in seronegative and unselected LR units, 90% of detectable CMV DNA is found in the plasma compartment,5 although previous studies suggest that viable virus can also be associated with the WBC fraction.11, 12 As Fig. 1 shows, compared with the Ziemann approach, NAT-tested donors could provide CMV-safe units over a greater proportion of their lifetimes and would likely avoid the risks associated with viral blips occurring long after infection. However, during primary infection the “tails” of the WBC CMV profile extend beyond those of plasma DNA at both ends. Thus, plasma NAT by itself would detect neither very early infected donors nor recently seroconverted donors whose plasma CMV has cleared but who still have detectable WBC-associated CMV DNA.

Finally, it is interesting to consider how these strategies could be combined to improve transfusion safety while maximizing the use of donor blood for patients at risk for CMV infection (Fig. 1, third tab). Many physicians currently provide units that are both seronegative and LR. With these overlapping strategies, LR appears to eliminate the risks associated with the preseroconversion WBC CMV tail, while serology addresses the risks of high postseroconversion plasma CMV. Although this combination should make the product safer (and we have evidence that it is in fact very safe for at-risk very-low-birthweight neonates13), it limits the pool of available units. Combining serology with NAT (but without LR) would eliminate the postseroconversion risks of NAT alone, without addressing the window phase risks of WBC CMV. Adding LR to serology and NAT has the potential to eliminate the risk of CMV transmission completely, but yields a relatively small donor pool. A better tradeoff appears to be combining LR with NAT. This approach would be effective with Ziemann-style LR (which requires serology to identify postseroconversion donors), but it would be expected to be just as effective with standard LR, while expanding the donor pool and eliminating the need for serology altogether. Because universal LR has become almost the de facto standard of care throughout much of the world, it is worth considering whether adding NAT may be a cost-effective way to further improve the safety of LR units for patients at risk for CMV transmission.
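The qualitative reasoning in this paragraph can be made explicit as a small truth-table model. The Python sketch below is hypothetical and is not a validated risk model: the seven phases are our own simplification of the top panel of Fig. 1, each phase is reduced to yes/no flags, detectable DNA is treated as a worst-case proxy for infectious virus, LR is assumed to remove all WBC-associated virus and no plasma virus, only the remote-infection phases are assumed to lie at least 1 year after seroconversion, and all names (`Phase`, `released`, `evaluate`) are illustrative. "Usable phases" counts phases, not time, so it ranks donor-pool size only loosely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    """One stage of a hypothetical donor time course (simplified from Fig. 1).

    Only yes/no flags are modeled; durations and viral levels are ignored.
    """
    name: str
    seropositive: bool
    plasma_dna: bool                # plasma CMV DNA detectable
    wbc_dna: bool                   # WBC-associated CMV DNA detectable
    seroconverted_1y: bool = False  # assumption: true only for remote phases


PHASES = [
    Phase("uninfected", False, False, False),
    Phase("early window: WBC DNA only", False, False, True),
    Phase("late window: plasma + WBC DNA", False, True, True),
    Phase("early seroconversion: peak plasma + WBC DNA", True, True, True),
    Phase("WBC tail after plasma clearance", True, False, True),
    Phase("remote infection", True, False, False, True),
    Phase("reactivation blip: plasma DNA only", True, True, False, True),
]


def released(p: Phase, serology: bool, ziemann: bool, nat: bool) -> bool:
    """True if a unit from phase p passes every selected donor screen."""
    if serology and p.seropositive:          # CMV-SN: seronegative donors only
        return False
    if ziemann and not p.seroconverted_1y:   # Ziemann: >= 1 year after seroconversion
        return False
    if nat and p.plasma_dna:                 # plasma NAT interdicts DNA-positive units
        return False
    return True


def residual_risk(p: Phase, lr: bool) -> bool:
    """Worst case: detectable DNA surviving processing counts as infectious.

    LR removes WBC-associated virus but not cell-free plasma virus.
    """
    return p.plasma_dna or (p.wbc_dna and not lr)


def evaluate(lr=False, serology=False, ziemann=False, nat=False):
    """Return (names of released phases, names of released phases still at risk)."""
    usable = [p.name for p in PHASES if released(p, serology, ziemann, nat)]
    risky = [p.name for p in PHASES
             if released(p, serology, ziemann, nat) and residual_risk(p, lr)]
    return usable, risky


if __name__ == "__main__":
    strategies = {
        "CMV-SN": dict(serology=True),
        "LR": dict(lr=True),
        "Ziemann (LR)": dict(lr=True, ziemann=True),
        "NAT": dict(nat=True),
        "CMV-SN + LR": dict(serology=True, lr=True),
        "CMV-SN + NAT": dict(serology=True, nat=True),
        "CMV-SN + NAT + LR": dict(serology=True, nat=True, lr=True),
        "LR + NAT": dict(lr=True, nat=True),
    }
    for label, opts in strategies.items():
        usable, risky = evaluate(**opts)
        print(f"{label:18s} usable phases={len(usable)} residual risk={risky}")
```

Under these assumptions the model mirrors the argument in the text: CMV-SN + NAT + LR and LR + NAT both leave no residual risk, but LR + NAT releases units from four of the seven phases versus two; CMV-SN + NAT leaves only the early-window WBC risk, and the Ziemann approach leaves only the reactivation blip.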

In summary, the five articles in this issue of TRANSFUSION point to the continuing importance of WBC-associated infections to transfusion safety and to the efficacy of serology and/or LR in reducing the associated risks, and they suggest ways that LR could be made even safer. One is left to ask, however: if a combination of multiple overlapping methods is required to prepare the safest blood for at-risk recipients, is it time to consider replacing all these strategies with pathogen inactivation (when available for routine use with each blood product), which may turn out to be logistically simpler and less expensive?

Conflict of Interest

The authors have no relevant conflicts of interest with the material in this editorial.