Neural Representations of Task Context and Temporal Order During Action Sequence Execution

This article is part of the topic “Everyday Activities,” Holger Schultheis and Richard P. Cooper (Topic Editors).

Abstract

Routine action sequences can share a great deal of similarity in terms of their stimulus-response mappings. As a consequence, their correct execution relies crucially on the ability to preserve contextual and temporal information. However, there are few empirical studies on the neural mechanism and the brain areas maintaining such information. To address this gap in the literature, we recently recorded the blood-oxygen-level-dependent (BOLD) response in a newly developed coffee–tea making task. The task involves the execution of four action sequences that each comprise six consecutive decision states, which allows for examining the maintenance of contextual and temporal information. Here, we report a reanalysis of this dataset using a data-driven approach, namely multivariate pattern analysis, that examines context-dependent neural activity across several predefined regions of interest. Results highlight involvement of the inferior-temporal gyrus and lateral prefrontal cortex in maintaining temporal and contextual information for the execution of hierarchically organized action sequences. Furthermore, temporal information seems to be more strongly encoded in areas over the left hemisphere.

1 Introduction

Many routine action sequences have similar stimulus-response mappings. As a consequence, their correct execution relies on the ability to maintain contextual and temporal information that disambiguates one sequence from another (Lashley, 1951). For example, the sequences for preparing soy milk latte and regular latte are similar. Like many real-world sequences, they exhibit hierarchical structure: Both are specific instances of making coffee, which itself is an instance of making a hot beverage. A barista who makes both of these drinks regularly must, therefore, maintain contextual information about the identity (soy milk vs. regular milk) of the drink that is currently being made, or else they could confuse the two recipes. Similarly, a common pizza recipe calls for first brushing the dough with olive oil and then spreading the toppings. Therefore, pizza-making requires maintenance and manipulation of temporal information, that is, the serial rank order of the actions that have been performed already and the ones that need to be performed later. Notably, the failure to maintain contextual and temporal information can lead to “action slips” (Norman, 1981; Reason, 1990), which are errors that relate to the execution of an action that is inappropriate in the current context but appropriate in the context of other, typically habitual sequences (see Mylopoulos, this issue). Human-like computational solutions to this problem have also been proposed for robotic systems, which confront similar sequencing issues when operating in natural environments (Finzi and Caccavale, this issue).

Despite the importance of temporal and contextual information to everyday behavior, the neural underpinnings and mechanisms that support their maintenance in the service of sequence execution are still debated. While many empirical studies have attempted to address these questions (for a review, see Desrochers & McKim, 2019 and the discussion below), most of these do not feature voluntary production of hierarchical and time-extended sequences that are said to be essential to complex human behavior (Holroyd & Verguts, 2021; Holroyd & Yeung, 2012). Thus, the ecological validity of previous findings is somewhat limited.

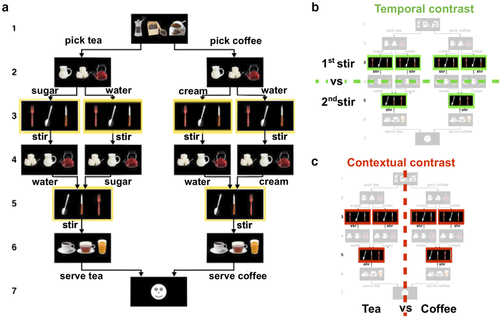

Two recent studies have addressed this gap by using a hierarchically structured temporally extended sequence production task. Balaguer, Spiers, Hassabis, and Summerfield (2016) showed that the anterior midcingulate cortex (aMCC) maintains contextual information in a hierarchical planning task. And in a study that is central to our present investigation, we also found the aMCC to be involved in sequencing (Holroyd, Ribas-Fernandes, Shahnazian, Silvetti, & Verguts, 2018). In that study, we recorded the BOLD response in a newly developed coffee–tea making task that required participants to maintain contextual and temporal information during sequence production. According to the cover story for the task, participants are instructed to prepare coffee or tea by making six consecutive decisions that determine which beverage is being prepared (first and sixth steps of the sequence), which ingredients to add (second and fourth steps of the sequence), and which cutlery to use to stir the added ingredients (third and fifth steps). Based on task instructions, four sequences of actions were admissible. That is, the task instructions permitted only some choices of ingredients when making each beverage and cutlery when stirring the ingredients. Importantly, the third and fifth steps in the sequence, which we call the “stir” actions, afforded identical responses to identical stimuli and were performed twice over the course of the sequence (Fig. 1a, yellow frames).

In that study, we tested a prediction motivated by our previous theoretical work (Shahnazian & Holroyd, 2018) that aMCC encodes contextual and temporal information using distributed representations similar to the encoding scheme of artificial recurrent neural networks (RNNs). Toward this end, we trained an RNN model (Botvinick & Plaut, 2004; Shahnazian & Holroyd, 2018) to perform the task. Given that RNNs represent temporal and contextual information in their hidden layer, we estimated the degree of dissimilarity between patterns of activity across all pairwise combinations of conditions, separately for the model and for (localized) brain activity. Then, we applied a representational similarity analysis (RSA) with a searchlight approach to find the second-order similarities between the model representations and the brain representations (Freund, Etzel, & Braver, 2020; Kriegeskorte, Goebel, & Bandettini, 2006, 2008). The RSA confirmed that aMCC and the model represented the task conditions similarly, in particular, that both code for the identity of the sequence currently being performed (contextual information), and for the temporal progression through each sequence (temporal information). Moreover, in keeping with past literature examining less complex sequential tasks (e.g., Desrochers, Collins, & Badre, 2019), an exploratory analysis found that a broad region in rostrolateral prefrontal cortex (RLPFC) codes for contextual information, namely, each of the coffee and tea sequences.

Nevertheless, despite the insights into aMCC function yielded by this hypothesis-based study, the approach by Holroyd et al. (2018) may have overlooked other brain areas that also code for contextual and temporal information. In particular, the RSA approach is relatively conservative (see discussion). Therefore, here, we applied a data-driven approach in order to explore the contribution of other brain areas toward representing contextual and temporal information.

To be specific, we reanalyzed the data from Holroyd et al. (2018) using a different multivariate pattern analysis (MVPA) technique, namely classification analysis (Haxby, Connolly, & Guntupalli, 2014), in order to identify the brain regions that specifically represent contextual and temporal information. We classified each dimension of interest (i.e., contextual and temporal) by applying MVPA on the multivariate activity associated with one specific step in the sequence: the stir action. As described above, the stir action was presented twice in every sequence and required identical responses to identical stimuli. These events thus tightly control for potential sensory and motor confounds (Holroyd et al., 2018). First, by contrasting neural activity for stir actions at different stages in the sequence, we examined whether temporal information was encoded in brain activity. Second, by contrasting neural activity across different sequences (i.e., coffee sequences vs. tea sequences), we tested whether information about the task context was encoded in brain activity.

To study the neural topography of contextual and temporal information representations, we adopted a region of interest (ROI) approach (Poldrack, 2007; Saxe, Brett, & Kanwisher, 2006). This approach consists of first defining functional or anatomical ROIs based on subject-specific data (e.g., gyral-based cortical parcellation) and then performing the analyses of interest, in our case MVPA, in each ROI independently. This ROI approach can increase statistical power relative to whole-brain analyses while preserving subject-specific differences in brain organization (see also Dugué, Merriam, Heeger, & Carrasco, 2017 and Senoussi, Berry, VanRullen, & Reddy, 2016 for examples of this approach). We accordingly determined a set of ROIs based on past literature on sequencing tasks in both humans and nonhuman primates (see discussion). We then extracted anatomical locales for those regions by comparing the coordinates reported in the articles against their approximately corresponding regions, as specified by the automatic gyral-based parcellation algorithm of the brain imaging software package Freesurfer (Desikan et al., 2006; see Fig. S1).

Regarding temporal information, we included areas that have been shown to be modulated by the rank order of actions in a sequence or that exhibited ramping up of their activity in relation to progression in a sequence. These candidate areas included caudal anterior cingulate cortex and rostral middle frontal cortex (Holroyd et al., 2018; Hyman, Ma, Balaguer-Ballester, Durstewitz, & Seamans, 2012), caudal middle frontal cortex (Ninokura, Mushiake, & Tanji, 2004; Warden & Miller, 2010), middle temporal cortex and pars orbitalis (Kalm & Norris, 2017a), hippocampus (Davachi & DuBrow, 2015), and parahippocampal cortex (Hsieh, Gruber, Jenkins, & Ranganath, 2014). Regarding contextual information, we included areas that have been shown to encode contextual information, such as context-specific stimuli or actions, or changes in contexts. These candidate areas included caudal anterior cingulate cortex (Balaguer et al., 2016; Holroyd et al., 2018), caudal middle frontal cortex (Averbeck & Lee, 2007), rostral middle frontal cortex (Desrochers et al., 2019), hippocampus (Ranganath & Ritchey, 2012), frontal pole (Mansouri, Koechlin, Rosa, & Buckley, 2017), and areas in inferior frontal junction (Wilson, Marslen-Wilson, & Petkov, 2017), including pars opercularis, pars orbitalis, and pars triangularis. We discuss the choice of these areas in the discussion section. Finally, we additionally explored the lateralization of temporal and contextual information by performing the aforementioned classification analyses separately on the left-hemisphere and right-hemisphere portions of each ROI. The results provide insight into which brain regions support the execution of action sequences by maintaining contextual and temporal information that disambiguate otherwise identical environmental states or action affordances.

2 Methods

A complete description of the materials, experimental procedure, and MRI recording can be found in Holroyd et al. (2018). Here, we provide a short summary of the relevant aspects. The experimental procedures were approved by both the Ethical Committee of Ghent University Hospital and the University of Victoria Human Research Ethics Board, and the experiment was conducted in accordance with the 1964 Declaration of Helsinki.

2.1 Dataset

2.1.1 Participants

Eighteen volunteers participated (13 females, 5 males; median age 22, range 19–29) for pay (40 Euros; 3 participants earned an extra 10 Euros for overtime). The participants were recruited via the online participant management system SONA (www.sona-systems.com), contracted by Ghent University.

2.1.2 Task

The coffee–tea making task entailed producing sequences of six decisions (steps) for a total of 72 trials divided equally across four blocks. Each decision state occurred approximately 4.5 s after the onset of the previous decision state (SD = 0.42), which allowed for better isolation of the BOLD response to each decision state. Each trial, therefore, lasted about 31.5 s to complete. Each step was prompted by the presentation of three images of items arranged in a horizontal row on the computer screen. Participants indicated their response by pressing a spatially corresponding button on the response pad. For each step, a single set of three items appeared on the screen, but the spatial locations of the items were randomized, thereby preventing sensory and motor confounds. As a cover story, participants were told that they were baristas, and that on each trial, they should choose what beverage (coffee or tea) they would serve to a customer. They were further instructed that to prepare the beverage, two ingredients were to be added. The beverages differed in one ingredient (coffee required milk and tea required sugar) and were identical for the other ingredient (both coffee and tea required water). To prepare the beverage (Fig. 1), the participants were instructed to select the drink type (first decision), add one ingredient (second decision), stir the added ingredient (third decision), add the other ingredient (fourth decision), stir the added ingredient (fifth decision), and serve the prepared beverage (sixth decision). Participants were instructed to select the beverages and the orders of the ingredients (water or the other ingredient first) in a random manner “as if flipping a coin.” Of particular interest here, the third and fifth decisions, referred to as “stir actions,” were prompted by the presentation of three cutlery images (spoon, knife, and fork). 
Participants were instructed on these steps to always choose the spoon (in order to stir the previously added ingredient), irrespective of the sequence that was being performed. Importantly, because the stimuli and motor requirements were equated across conditions, the contextual (coffee or tea sequence) and temporal (first or second stir) information cannot be inferred from such cues. Participants practiced the task outside the scanner for a total of six trials during which they emulated preparing both beverages with an almost equal frequency (M = 3.3, SD = 1.4, for performing the coffee sequence).

2.1.3 MRI recording

Structural and functional images were acquired at Ghent University Hospital using a Siemens 3T Magnetom Trio MRI scanner.

2.1.4 Preprocessing and general linear model

EPI images were aligned to the first image in each time session and corrected for slice acquisition timing. Next, the mean functional image was coregistered to the individual anatomical volume. The coregistered images were not normalized or smoothed.

A general linear model was applied to the resulting voxel-level time-series in each block. The regressors of this analysis were generated through convolving the time-series impulse function for each of the 24 task states (six decisions across four sequences) as well as motion parameters and global signal, with a canonical hemodynamic response function.
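To make the construction of such regressors concrete, the following is a minimal sketch rather than the pipeline used by Holroyd et al. (2018). It assumes a double-gamma approximation of the canonical response and a hypothetical repetition time of 2 s. The function names, onsets, and scan count are illustrative only.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(tr, duration=32.0):
    """Double-gamma approximation of a canonical HRF, sampled every `tr` seconds."""
    t = np.arange(0.0, duration, tr)
    # Positive response peaking around 5 s minus a smaller, later undershoot.
    hrf = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return hrf / hrf.sum()


def make_regressor(onsets_s, n_scans, tr):
    """Convolve unit impulses placed at the event onsets with the HRF."""
    impulses = np.zeros(n_scans)
    scan_idx = np.round(np.asarray(onsets_s) / tr).astype(int)
    impulses[scan_idx[scan_idx < n_scans]] = 1.0
    # Truncate the convolution to the length of the scanning run.
    return np.convolve(impulses, canonical_hrf(tr))[:n_scans]


# Hypothetical example: one task state occurring at 10 s and 41.5 s (assumed TR = 2 s).
TR = 2.0
regressor = make_regressor([10.0, 41.5], n_scans=40, tr=TR)
```

In a full design matrix, one such column would be built per task state, alongside the nuisance regressors.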

2.2 Multivariate pattern analysis

2.2.1 Contrasts of interest

As detailed below, we used a linear support vector machine (SVM) classifier, implemented in the Scikit-learn Python toolbox version 0.23.1 (Pedregosa et al., 2011), to classify activity patterns associated with each event of interest in each of the predetermined ROIs (De Martino et al., 2008).

In the temporal contrast, we trained the SVM classifier on the normalized beta coefficients (Kalm & Norris, 2017b) associated with actions 3 and 5 (i.e., stir actions) in the sequence, irrespective of the performed sequence (i.e., coffee or tea sequence), to discriminate between the first and second instance that the stir action was performed. For the context contrast, we trained a classifier on the same beta coefficients to discriminate whether the stir action was performed in a tea or a coffee sequence, irrespective of the sequence position of the stir action (i.e., first or second stir). Given that the GLM provides beta estimates for two consecutive stir actions across four sequences in each of the four blocks, there were a total of 32 observations (16 per class) to be classified for each participant in either of the contrasts (Fig. 1b and c).
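The bookkeeping behind the 32 observations can be sketched as follows. This is an illustrative reconstruction of the label structure, assuming that the four sequences correspond to two beverages crossed with two ingredient orders; the sequence names and variable names are hypothetical.

```python
from itertools import product

import numpy as np

# Hypothetical identifiers: 4 blocks x 4 sequences x 2 stir actions (steps 3 and 5).
blocks = range(4)
sequences = [
    "coffee_water_first",
    "coffee_milk_first",
    "tea_water_first",
    "tea_sugar_first",
]
stir_steps = [3, 5]

events = list(product(blocks, sequences, stir_steps))
block_ids = np.array([b for b, _, _ in events])

# Temporal contrast: first stir (step 3) vs. second stir (step 5), pooled over beverages.
temporal_labels = np.array([0 if step == 3 else 1 for _, _, step in events])

# Context contrast: coffee vs. tea, pooled over stir position.
context_labels = np.array(
    [0 if seq.startswith("coffee") else 1 for _, seq, _ in events]
)
```

Both label vectors index the same 32 beta patterns, so the two contrasts differ only in how the observations are grouped into classes.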

2.2.2 Controlling for univariate confounds

A recent study by Kalm and Norris (2017b) has shown that in studies investigating the neural correlates of sequence learning or sequence execution, the results can be confounded by the concurrent evolution of other cognitive factors, such as cognitive load, or by a general drift in neural activity throughout a sequence. They argue that these effects can mask actual sequence representations. To control for such confounding factors, we followed one of their suggestions and normalized beta values associated with each event of interest, independently for each participant and ROI. More specifically, in each classification analysis, for each of the 32 event-related betas to be classified, we subtracted the average beta value of all voxels in that ROI in this event, and divided by their standard deviation.
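The normalization described above amounts to z-scoring each event's pattern across the voxels of an ROI. A minimal sketch follows, assuming the betas of one participant and ROI are stored as an events-by-voxels array and that the population standard deviation is used; both are assumptions, and the simulated values are for illustration only.

```python
import numpy as np


def normalize_event_patterns(betas):
    """Z-score each event (row) across the voxels (columns) of one ROI."""
    betas = np.asarray(betas, dtype=float)
    mean = betas.mean(axis=1, keepdims=True)
    std = betas.std(axis=1, keepdims=True)  # population SD (ddof=0), an assumption
    return (betas - mean) / std


# Simulated example: 32 events, 200 voxels, with an arbitrary offset and scale.
rng = np.random.default_rng(0)
betas = rng.normal(loc=2.0, scale=3.0, size=(32, 200))
betas_norm = normalize_event_patterns(betas)
```

After this step every event has zero mean and unit variance across voxels, so a classifier cannot exploit differences in overall ROI activation between events.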

2.2.3 Regions of interest

As discussed above, our analysis focused on a set of predetermined ROIs as defined by the Freesurfer automatic gyral parcellation algorithm (Desikan et al., 2006; Fischl, 2012). For each of these ROIs, only voxels located in the gray matter, as segmented by the automatic gray-white matter segmentation by Freesurfer, were used. The ROIs include areas in lateral frontal cortex and frontal operculum (frontal pole, pars opercularis, pars orbitalis and pars triangularis, rostral, and caudal middle frontal cortex); in medial prefrontal cortex (caudal anterior cingulate cortex); and in the temporal lobe (middle temporal cortex, hippocampus, and parahippocampal cortex, see Figure S1). To enable comparisons across contrasts, all of these ROIs were subjected to the same set of analyses.

2.2.4 Regions of interest analysis

In order to allow comparison across the different ROIs offered by the Freesurfer parcellation, and their varying spatial expanse, we used a feature selection procedure per ROI. Indeed, if a subregion implicated in previous studies shows functional specificity in representing temporal and contextual information in our task, voxels belonging to that subregion should be picked up by our feature selection procedure, thereby reducing the potential for the differences in ROI size to severely affect our results. Thus, for the classification analysis in each ROI, we carried out a feature-selection procedure in which we first conducted a univariate analysis of variance (ANOVA) of all the voxels with the contrast of interest as the independent variable and the beta-values associated with the training set's observations as the dependent variable, and then selected the 120 voxels (see Fig. S2, which illustrates the effect that the number of features has on the classifier's performance) with the highest F-statistics as features for the classification analysis (see Zhang, Kriegeskorte, Carlin, & Rowe, 2013). We then fit the linear SVM to classify the beta-values and took the average four-fold cross-validated classification accuracy as the accuracy score for that ROI. The feature-selection and classification were performed in a cross-validation procedure to avoid double-dipping, using the cross_validate and Pipeline functions from Scikit-learn.
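A procedure of this kind can be sketched with Scikit-learn as shown below. This is a hedged illustration, not the authors' script: it assumes that the four folds correspond to the four experimental blocks, uses `SVC(kernel="linear")` as the linear SVM, and runs on simulated data. The function name `roi_decoding_accuracy` is hypothetical.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import LeaveOneGroupOut, cross_validate
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC


def roi_decoding_accuracy(X, y, block_ids, n_features=120):
    """Mean cross-validated accuracy for one ROI and one contrast."""
    # Use every voxel when the ROI holds fewer than `n_features` voxels.
    k = min(n_features, X.shape[1])
    pipeline = Pipeline([
        # The ANOVA F-test is refit on the training folds only (no double-dipping).
        ("select", SelectKBest(score_func=f_classif, k=k)),
        ("svm", SVC(kernel="linear")),
    ])
    scores = cross_validate(
        pipeline, X, y, groups=block_ids,
        cv=LeaveOneGroupOut(), scoring="accuracy",
    )
    return scores["test_score"].mean()


# Simulated data: 32 observations, 300 voxels, 30 of which carry class information.
rng = np.random.default_rng(0)
y = np.tile([0, 1], 16)                 # 16 observations per class
block_ids = np.repeat(np.arange(4), 8)  # 8 observations per block
X = rng.normal(size=(32, 300))
X[y == 1, :30] += 3.0
accuracy = roi_decoding_accuracy(X, y, block_ids)
```

Wrapping the feature selection inside the pipeline is what keeps the held-out block out of the voxel-selection step.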

We additionally carried out a second ROI classification analysis to test whether temporal and contextual neural representations generalize across different tasks (coffee vs. tea) and step in the sequence (third vs. fifth), respectively. To do so, we used a cross-classification procedure (Kaplan, Man, & Greening, 2015; Senoussi, VanRullen, & Reddy, 2020) in which we modified the cross-validation scheme for each contrast as follows: In the temporal contrast, the classifiers were trained to differentiate the first and second stir actions of coffee sequences, and subsequently tested on first and second stirs of tea sequences for the first cross-validation fold, and the reverse for the second cross-validation fold. For the contextual contrast, classifiers were trained to differentiate coffee and tea sequences in the first stir action-related betas in the first two blocks of the session, and tested on the second stir action-related betas in the last two blocks of the session, and vice versa. We drew the training and test data for the context contrast from different experimental blocks in order to control for slow BOLD changes that could confound the analysis. These analyses allowed us to test whether the discriminative multivariate neural patterns representing the temporal and contextual information generalize across the sequence tasks and steps, respectively (see Bernardi et al., 2018).
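The two cross-classification schemes can be sketched as follows. The code is illustrative only: it assumes that feature selection is refit on each training set, that "vice versa" in the context contrast means swapping the training and test sets, and it uses simulated data. All function and variable names are hypothetical.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC


def cross_classify(X, y, train_mask, test_mask, n_features=120):
    """Fit on one subset of observations and score on a disjoint subset."""
    k = min(n_features, X.shape[1])
    clf = make_pipeline(SelectKBest(f_classif, k=k), SVC(kernel="linear"))
    clf.fit(X[train_mask], y[train_mask])
    return clf.score(X[test_mask], y[test_mask])


def temporal_generalization(X, stir, is_coffee):
    """Train on coffee and test on tea, then the reverse; average both folds."""
    fold_1 = cross_classify(X, stir, is_coffee, ~is_coffee)
    fold_2 = cross_classify(X, stir, ~is_coffee, is_coffee)
    return (fold_1 + fold_2) / 2.0


def context_generalization(X, context, stir, block):
    """Train on first stirs of blocks 0-1, test on second stirs of blocks 2-3, and swap."""
    first_early = (stir == 0) & (block < 2)
    second_late = (stir == 1) & (block >= 2)
    fold_1 = cross_classify(X, context, first_early, second_late)
    fold_2 = cross_classify(X, context, second_late, first_early)
    return (fold_1 + fold_2) / 2.0


# Simulated data with a temporal code and a context code in separate voxels.
rng = np.random.default_rng(1)
stir = np.tile([0, 1], 16)                          # 0 = first stir, 1 = second stir
is_coffee = np.tile([True, True, False, False], 8)
context = (~is_coffee).astype(int)                  # 0 = coffee, 1 = tea
block = np.repeat(np.arange(4), 8)
X = rng.normal(size=(32, 200))
X[stir == 1, :30] += 3.0
X[context == 1, 30:60] += 3.0

temporal_acc = temporal_generalization(X, stir, is_coffee)
context_acc = context_generalization(X, context, stir, block)
```

Above-chance accuracy in this scheme requires that the discriminative pattern learned in one condition transfers to the other, which is the sense of generalization tested here.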

To assess the significance of the resulting classification accuracies, we carried out a one-sided binomial test on the scores for each ROI separately (across participants). The binomial test assesses the probability of obtaining the observed number of “successes” (in our case, correct classifications of a pattern at test) based on the probability of a success in one trial at chance-level performance (50%) and the number of “trials,” that is, the total number of classifier predictions. Given that for each of the 18 participants 32 betas were classified at test, there were a total of 576 “trials” in our second-level (i.e., group-level) analysis. Previous research (Combrisson & Jerbi, 2015) has shown that the binomial test is a better estimator of classification accuracy significance and is a more appropriate statistical method than Student's t-test because classification accuracies do not follow a normal distribution. A false discovery rate (FDR) correction (Benjamini & Hochberg, 1995) was then used to correct for multiple comparisons across ROIs (10 multiple comparisons), for each contrast.
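The group-level test can be illustrated with the short sketch below. It is not the authors' script: the counts of correct predictions are made up for illustration, and the Benjamini-Hochberg step-up procedure is written out by hand so that the logic is visible.

```python
import numpy as np
from scipy.stats import binom


def binomial_p_value(n_correct, n_total, chance=0.5):
    """One-sided p-value: P(X >= n_correct) for X ~ Binomial(n_total, chance)."""
    return binom.sf(np.asarray(n_correct) - 1, n_total, chance)


def benjamini_hochberg(p_values, alpha=0.05):
    """Return a boolean array marking the tests rejected at FDR level `alpha`."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    thresholds = alpha * np.arange(1, m + 1) / m
    below = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if below.any():
        # Reject every hypothesis up to the largest rank that passes its threshold.
        cutoff = np.max(np.nonzero(below)[0])
        reject[order[:cutoff + 1]] = True
    return reject


# Hypothetical numbers of correct predictions out of 18 x 32 = 576 for three ROIs.
n_correct = np.array([330, 295, 288])
p_values = binomial_p_value(n_correct, 576)
significant = benjamini_hochberg(p_values)
```

In the analysis described above, the correction would be applied to the 10 ROI-level p-values of each contrast.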

Furthermore, we carried out an exploratory analysis on hemispheric differences in contextual and temporal information representations. We performed the same ROI classification analysis on all of the ROIs except for the frontal pole ROI (as 8 participants had 30 or fewer voxels in either the left or right hemisphere of that ROI). Thus, on the nine remaining ROIs, we carried out the ROI classification analysis considering each hemisphere separately. To assess the statistical significance of these results, ROI-by-hemisphere classification accuracies were entered into a 2 × 2 × 9 repeated measures ANOVA with factors contrast (two levels: temporal and contextual), hemisphere (two levels: left and right), and ROI (nine levels: parahippocampal, hippocampus, caudal anterior cingulate, middle temporal, caudal middle frontal, rostral middle frontal, pars opercularis, pars triangularis, and pars orbitalis).

2.3 Data and code availability

All raw and preprocessed data are available from the Open Science Framework repository created by Holroyd et al. (2018): https://osf.io/UHJCF/. All analysis scripts created for the current study are available on the Github repository: https://github.com/mehdisenoussi/EST.

3 Results

Participants successfully implemented the task instructions (see Holroyd et al., 2018). No trials were rejected, so our current analysis is not biased because of differences in error patterns.

3.1 ROI classification

For the temporal contrast, the analysis of cross-validated classification scores (Fig. 2) revealed significantly higher than chance-level performance (after FDR correction) in the following ROIs: parahippocampal cortex (median ± [1st quartile, 3rd quartile]; 53.65% ± [46.87, 56.25]), middle temporal cortex (60.94% ± [57.03, 64.84]), caudal (62.50% ± [53.91, 68.75]) and rostral (65.62% ± [57.03, 68.75]) middle frontal cortex, pars opercularis (56.25% ± [50.78, 59.37]), pars triangularis (62.50% ± [53.91, 68.75]), and pars orbitalis (57.81% ± [53.12, 62.50]). In the context contrast, classification analysis (Fig. 2) revealed significantly higher than chance-level performance (after FDR correction) in pars triangularis (53.12% ± [45.31, 59.37]) and pars orbitalis (57.81% ± [46.87, 71.09]). In an exploratory analysis, we also examined the ROIs using a searchlight classification approach (Senoussi et al., 2016); the results are largely the same (Fig. S3). Note also that for some participants, not all of the ROIs contained 120 voxels or more; for these ROIs, the classification analysis was performed using all the voxels available (see Fig. S4). Finally, we also performed this analysis without feature selection, to ensure that the pattern of classification accuracies was not drastically affected by this procedure, and found that the results without feature selection are similar to the results with feature selection (Fig. S5).

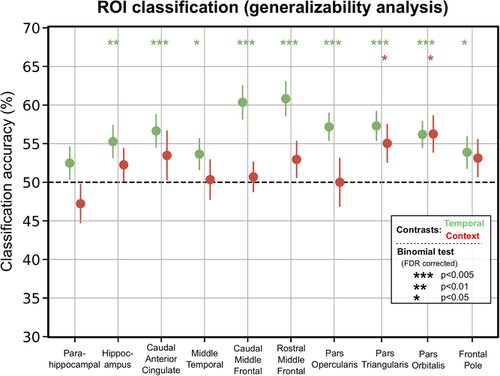

3.2 Analysis of the generalizability of the discriminative patterns

The results of the generalizability analysis (Fig. 3, see descriptive statistics in the Supplementary Material) were similar to the ROI classification analysis (Fig. 2): For the temporal contrast, the generalizability analysis identified most of the ROIs (except for parahippocampal cortex) that were previously found in the ROI classification analysis (i.e., middle temporal cortex, caudal and rostral middle frontal cortex, pars opercularis, pars triangularis, and pars orbitalis) (after FDR correction). However, this analysis also identified the hippocampus and caudal anterior cingulate cortex, which were not revealed by the ROI classification analysis. For the context contrast, the generalizability analysis identified both pars triangularis and pars orbitalis (after FDR correction), in keeping with the ROI classification analysis.

3.3 Hemispheric analysis

The ROI-hemisphere analysis of cross-validated classification accuracies with factors hemisphere, ROI, and contrast revealed a main effect of ROI (F(8, 136) = 2.67, p = .009) and no main effects of hemisphere or contrast (Fig. 4). Additionally, we found a significant two-way interaction between contrast and ROI (F(8, 136) = 2.65, p = .010), as well as a two-way interaction between contrast and hemisphere (F(1, 17) = 4.87, p = .041). To explore what underlies the significant interaction between contrast and hemisphere, we investigated the difference between the average classification accuracy for each contrast by hemisphere across ROIs. There was a marginal difference in classification accuracy between the temporal and contextual contrasts in the left hemisphere (with classification accuracies in the temporal contrast being higher than the ones in the contextual contrast; t(17) = 1.93, p = .070), but no consistent difference in the right hemisphere (t(17) = 0.49, p = .678).

Further post-hoc tests were conducted only on the ROIs that showed at least one significant classification accuracy (either in temporal, or context contrast, or both; p < .05, FDR corrected). For each ROI, we compared the classification accuracies of the left and right hemisphere in each contrast with a two-sided paired samples t-test. For the temporal contrast, this analysis revealed that classification accuracies were higher in the left hemisphere in caudal middle frontal gyrus (t(17) = 2.83, p = .011), pars opercularis (t(17) = 2.50, p = .023), and pars orbitalis (t(17) = 2.30, p = .034). All other ROIs in the temporal contrast did not show significant differences between classification accuracies obtained from the left and right hemispheres (all ps > .25). For the contextual contrast, the only ROI exhibiting significantly above-chance performance in the ROI classification analysis was pars orbitalis, and it did not show any significant difference between hemispheres (p > .7). The descriptive statistics associated with the post-hoc analyses can be found in the Supplementary Material.
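For readers who wish to see how such a hemispheric comparison is computed, the following sketch spells out a two-sided paired-samples t-test for 18 participants. The accuracies are simulated and do not reproduce the reported values; the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def paired_t_test(left, right):
    """Two-sided paired-samples t-test; returns (t, p, degrees of freedom)."""
    diff = np.asarray(left, dtype=float) - np.asarray(right, dtype=float)
    n = diff.size
    t_value = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))
    p_value = 2.0 * stats.t.sf(abs(t_value), df=n - 1)
    return t_value, p_value, n - 1


# Simulated per-participant accuracies for the two hemispheres of one ROI.
rng = np.random.default_rng(2)
left = rng.normal(0.60, 0.08, size=18)
right = rng.normal(0.55, 0.08, size=18)
t_value, p_value, dof = paired_t_test(left, right)
```

With 18 participants the test has 17 degrees of freedom, matching the t(17) statistics reported above.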

4 Discussion

In this inquiry, we examined which brain areas code for contextual and temporal information necessary for voluntary sequence production. We used pattern classification to detect brain areas that encode states of temporally extended, hierarchically structured sequences differently depending on which sequence is being performed (contextual contrast) or which step of each sequence is being implemented (temporal contrast).

The temporal contrast revealed that areas in the inferior frontal gyrus (IFG; e.g., pars triangularis), lateral prefrontal cortex (caudal and rostral middle frontal areas), and temporal lobe (parahippocampal cortex and middle temporal cortex) code for temporal information in this task. The finding that lateral prefrontal cortex is involved in representing temporal information during sequence execution is consistent with studies in monkeys showing that the activity of neurons in the prefrontal cortex (PFC) is differentially modulated by the rank order of the actions in a sequence (Carpenter, Baud-Bovy, Georgopoulos, & Pellizzer, 2018; Ninokura et al., 2004; Warden & Miller, 2010). Similarly, other studies have shown that the activity in rostrolateral and dorsolateral prefrontal cortex (Averbeck, Crowe, Chafee, & Georgopoulos, 2003; Desrochers et al., 2019) ramps up in direct relation to the sequence progression, presumably as a means to encode temporal information. The involvement of PFC in representing temporal information can be explained either by a domain-general role for this area in working memory or a domain-specific function in representing serial order of events. Also consistent with our findings are previous MVPA fMRI studies that have revealed several brain areas, including the anterior temporal lobe, RLPFC, and pars orbitalis and parahippocampal cortex (Hsieh et al., 2014; Kalm & Norris, 2017a), that are sensitive to the rank order of sequential events. By contrast, inconsistent with the past literature, we did not find evidence for involvement of the aMCC and hippocampus (although both of these areas are revealed in the generalizability analysis) in the distributed encoding of temporal information (e.g., see Davachi & DuBrow, 2015; Ma, Hyman, Phillips, & Seamans, 2014; Procyk, Tanaka, & Joseph, 2000). 
Of course, because these null findings could result from a lack of experimental power or an unrepresentative sample, we cannot interpret the absence of evidence as evidence of absence. We also found indications that the temporal information can be more strongly decoded in the left hemisphere, which may suggest some degree of lateralization of this type of representation.

These results were bolstered by the generalizability analysis, which showed that the pattern reflecting temporal information in one task (e.g., coffee making) can also be used to discern temporal information in the other task (e.g., tea making) in most of the areas detected in the ROI analysis. This indicates that the temporal information is partly represented in an abstract (task-invariant) format, which suggests that a subpopulation of voxels in this task represented time in a general way that was independent of each specific sequence. To elaborate, it is possible that the temporal and contextual information are coded independently of each other and are later multiplexed to support task performance.

Only two ROIs survived FDR correction for multiple comparisons in the analysis of the context contrast (and also the generalizability analysis), namely pars orbitalis and pars triangularis. This is consistent with some theoretical work that attributes a domain-general role to pars orbitalis in learning the relationship between environmental events and transition probabilities between various environmental states (Niv, 2019; Wilson et al., 2017). Similarly, pars triangularis has been shown to be involved in learning artificial grammars (which is a form of sequence learning) and in verbal working memory (Price, 2012; Uddén & Bahlmann, 2012). Contrary to past studies, we did not find evidence for representation of contextual information in rostral lateral prefrontal cortex (Averbeck & Lee, 2007; Desrochers, Chatham, & Badre, 2015), aMCC (Balaguer et al., 2016; Holroyd et al., 2018; Powell & Redish, 2016), and the hippocampus (Gupta, van der Meer, Touretzky, & Redish, 2010; Ranganath & Ritchey, 2012). We also failed to find evidence supporting theoretical work positing that aMCC and frontopolar cortex should be involved in the maintenance of contextual information (e.g., see: Mansouri et al., 2017; Shahnazian & Holroyd, 2018).

One reason for our null findings may be that the locale and the expanse of different anatomical areas offered by the Freesurfer parcellation do not closely match the localization of different sequencing-related functions within the frontal cortex. For example, the delineation of the anatomical regions in lateral frontal cortex according to the Freesurfer nomenclature creates large ROIs in comparison with the ROIs in IFG or the frontopolar ROI. Although we used feature selection to mitigate this problem, the differences in size and the mismatch between anatomical and functional separation of brain areas may have limited our ability to detect the representation of contextual information in some brain areas.

Notably, we did not find evidence that caudal anterior cingulate cortex codes for contextual information. In the temporal contrast, we were able to detect the caudal anterior cingulate cortex only in the generalizability analysis. This is despite the fact that RSA analysis of the same dataset shows that the aMCC (which overlaps anatomically with the caudal anterior cingulate cortex) is involved in representing contextual information. As mentioned before, one reason might be that the anatomical parcellation of the caudal anterior cingulate cortex does not match well the location and the expanse of the functional area often referred to as aMCC. Another reason may be that RSA and pattern classification approaches have different sensitivity profiles to pattern dissimilarities (Walther et al., 2016).

Perhaps most importantly, the aMCC cluster in the previous study was identified following a combined searchlight/RSA approach, where the predictive representational dissimilarity matrix was derived from the activation states of the hidden units of an RNN trained on the sequencing task, for all possible states of the task. Because the internal representations of RNNs are typically distributed and exhibit multiselectivity (Holroyd & Verguts, 2021), the aMCC cluster reflects these properties. Therefore, even though post-hoc analyses confirmed that this cluster was sensitive to temporal and contextual information, neither of these two factors on its own appears to have sufficient statistical power to yield much aMCC activity. Furthermore, the present study focused on only a subset of task states (whereas the RSA evaluated the pattern of dissimilarity across all time-steps; Holroyd et al., 2018) and normalized the data in order to prevent potential confounds that naturally result from multiselectivity (Kalm & Norris, 2017b). The present study confirms that the aMCC's distributed code for temporal information is not sufficiently consistent across blocks and sequence types to be detectable using the ROI classification approach, revealing only a weak effect size in the generalization analysis. The two approaches, therefore, appear to provide complementary information.

Furthermore, as an exploratory analysis, we used a secondary approach to evaluate the information content of the different ROIs, namely a searchlight analysis. This analysis revealed a similar topographical distribution of temporal and contextual information across ROIs. The two analyses, that is, ROI-based and searchlight, allowed us to show that our results were robust across MVPA approaches, even though they differ in multiple ways. For instance, the searchlight analysis yielded classification accuracies for every voxel of an ROI, which we then averaged per ROI. This procedure, therefore, produces a score that reflects the average informativity of this ROI in the contrast of interest, whereas the ROI-based analysis selected the most informative voxels to estimate how much information can be decoded from this ROI. Our results thus indicate that the two methods converge in our study and provide complementary evidence about what information is represented in the ROIs we studied. It would be of interest to compare these two popular MVPA approaches further in future work.

Our analyses revealed that temporal and contextual aspects of the task were encoded in distinct areas, even after tightly controlling for sensory and motor aspects of the task. Our classification analyses yielded classification accuracies up to 65%, which can be considered relatively low as compared with studies decoding perceptual or motor features. We believe that this is due to the specific events on which we focused our analyses: the stir actions of each sequence. These events were tightly controlled for sensory stimulation and required actions. Furthermore, we found lower and less extended representation of contextual information, relative to temporal information. One potential reason is that these regions undoubtedly code for other aspects of the task, for example, stimulus configuration (i.e., the order of utensils on the screen at the stir step). Because the paradigm and analyses were set up to avoid these confounds, this probably lowered the amount of temporal and contextual information that could be extracted. Another potential reason why context is much more difficult to decode is that, during relatively long sequences such as those used in this coffee–tea making task, the brain may first set up the sequence of actions to execute based on contextual information and then mostly represent progression through that specific sequence in different brain areas. It would be very interesting in future studies to investigate whether other aspects of neural activity reflect task context, such as fast neural dynamics or connectivity across frontal brain regions (Formica, González-García, Senoussi, & Brass, 2021; Senoussi et al., 2020; Smith et al., 2019), and what specific aspects of an extended task sequence could require a more evident or widespread representation of task context across brain areas.

Recently, Wen, Duncan, and Mitchell (2020) used a comparable hierarchical task featuring six sequences of four steps to look at the representation of context and temporal information in the default mode network (DMN) and multiple demand network (Fedorenko, Duncan, & Kanwisher, 2013) as revealed by RSA of fMRI data. Notably, the individual ROIs that were examined by the authors, which include regions in frontal gyrus, IFG, and the ACC, have a high degree of overlap with our ROIs. They found that almost all individual regions in both sets represent temporal information during the task. This is in line with our analyses showing that middle frontal gyrus and IFG areas are involved in representing temporal information. However, when they examined the representation of the context information, no individual ROIs survived correction for multiple comparisons (although the regions in the ACC and PCC were found when no correction was applied). Interestingly, when examined collectively, the DMN regions appear to code for the context. The results were, therefore, somewhat ambiguous. An important difference between this study and our study is that we tightly control for perceptual and motor factors when examining each contrast of interest by having participants make the same choice twice. In contrast, in the study of Wen et al. (2020), participants choose a different item, which may introduce confounds into the results.

Most of the past literature on sequencing (Desrochers & McKim, 2019) uses tasks that do not emulate the hierarchical and temporally extended nature of routine behavior. One reason for this is perhaps that sequence execution engages a variety of domain-general processes, including working memory, action selection, cognitive effort, and performance monitoring. Disentangling such domain-general processes from the ones that are uniquely important to successful implementation of internally guided sequences of actions requires tight control over various confounds, which is hard to achieve when studying hierarchical and extended sequences. The results reported here and those of our previous work (Holroyd et al., 2018) and of Balaguer et al. (2016) are notable exceptions that generalize better to routine behavior in real life. We, therefore, advocate for adoption of similar tasks in future enquiries into the neural underpinnings of routine behavior. We also suggest that a combination of RSA and pattern classification analysis might be profitably used to examine various aspects of the distributed pattern of activity in various brain areas.

Acknowledgments

This project was supported in part by funding from the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation program (Grant agreement No. 787307 awarded to CBH and 636116 awarded to RMK). MS and TV were supported by grant G012816 from Research Foundation Flanders.