Incorporating mental imagery into geospatial environments for narrative visualizations

Abstract

Methods for evaluating cognitively inspired geospatial interfaces have been important for revealing, and helping to resolve, their cognitive and usability issues. We argue that such methods are now equally important for GIScience interfaces that deliver narrative visualizations, including 3D virtual narrative environments. These environments combine controlled conditions with realistic natural settings, in which spatio-temporal data can be collected and used to assess how well an interface design fulfills a given narrative function. This study investigates a cognitively inspired geospatial interface, Future Vision, developed to determine how mental images can be situated in geospatial environments and used to convey narratives that improve user cognition and decision-making. In a two-alternative forced-choice (2AFC) decision-making task, participants who received future thinking guidance (mental images presented as a split-second display of the correct path choice) showed statistically significant improvements in task completion times, movement speeds, and 2AFC decision-making compared with an unguided control group. The results have implications for cue-based navigation of real and conceptual spaces in GIScience. Future research could improve the interface design by modifying the interface code to reduce visual loss caused by eye blinks and saccades.

1 INTRODUCTION

Narrative visualizations are an emerging field of geovisualization research in which geospatial data are incorporated into visual stories with a causal narrative structure (Lee et al., 2015; Segel & Heer, 2010). Creating cognitively inspired narrative visualizations and evaluating their effectiveness is difficult because the relationship between narrative and the cognitive sciences is continually evolving (Herman, 2001). That relationship is currently one-sided: contributions from the cognitive sciences to the study of narrative lag behind the insights that a narrative approach has brought to cognition (Ryan, 2010). Cognitively inspired narrative visualizations, which emphasize how internal cognitive narratives are influenced by external narrative visualization techniques, represent a recent attempt to reduce this disparity (Mayr & Windhager, 2018). They build on early work in GIScience and cartography that examined how internal mental images were influenced by the geospatial information presented in maps, which led to a better understanding of how mental imagery influences, and is influenced by, cartographic communication (Peterson, 1987), geovisualization (Peterson, 1994), and, by extension, narrative visualizations. Recently, visual storytelling has been proposed as a contemporary cartographic design principle (Roth, 2021), creating opportunities to design cognitively inspired geospatial interfaces informed by visual narrative comprehension (how sequences of images are represented in and interpreted by the human brain; Cohn, 2020). Such interfaces may resolve some of the cognitive and usability issues associated with narrative visualizations, particularly how visual knowledge can be effectively incorporated into geospatial interface designs to enhance user understanding of spatiotemporal phenomena (MacEachren & Kraak, 2001; Slocum et al., 2001).
Given that the ability to display and analyze geospatial information is a fundamental part of GIScience (Goodchild, 1992), developing and evaluating cognitively engineered geospatial interfaces that employ novel narrative visualizations offers a new way of integrating cognitive research into the field (Montello, 2009; Raubal, 2009), with applications in developing and improving future geospatial narrative interfaces for enhanced user cognition and decision-making. Examples include GeoCamera, an interactive tool for incorporating images generated by camera movements into geographic visualizations and geospatial narratives (Li et al., 2023), and dynamic cognitive geovisualizations (Vicentiy et al., 2016), which produce cognitive geoimages that incorporate aspects of a user's mental models and perceptions of the environment into their outputs.

2 MOTIVATION AND RESEARCH AIM

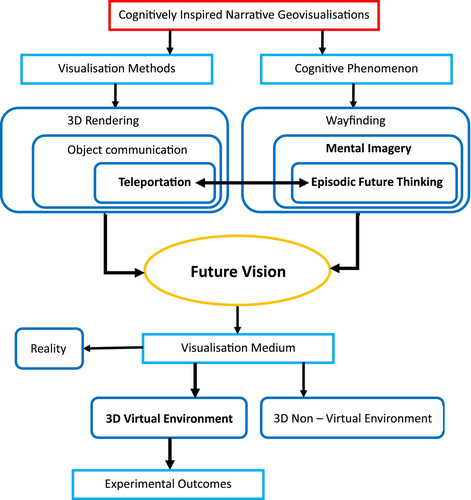

While previous narrative interfaces focused on the relationship between geospatial visual images and user cognition in behavior- and performance-based studies, the processes of creating and evaluating narrative interfaces that incorporate mental imagery into geospatial environments have received less attention. To address this gap, Future Vision was developed as the experimental interface for the study presented in this paper. Its development considered which cognitive phenomena could best be incorporated into a visual interface that exhibits narrative intelligence and genuinely represents user cognition, how the associated mental images could be visualized, and in what type of geospatial environment. The ability to analyze the interface's performance through geospatial analysis and usability testing was also considered. On this basis, the cognitive phenomenon of episodic future thinking (EFT) was chosen. EFT is defined as “a projection of the self into the future to pre-experience an event” (Atance & O'Neill, 2001, p. 533). It evolved from episodic memory, a general memory mechanism for recalling past events and recent experiences (Tulving, 1972), and remembering the past and imagining the future engage a common core network of brain regions (Addis et al., 2007). Importantly, emerging research suggests that episodic memories are organized into a coherent narrative architecture that links events across space and time at varying scales (Cohn-Sheehy et al., 2022). This justified the use of EFT as the cognitive phenomenon incorporated into Future Vision. It was decided that EFT could be simulated and visualized using teleportation in virtual reality, through which the participant's viewpoint instantly transitions to designated locations in the virtual environment (VE) (Bowman et al., 1997; Mine, 1995).
In this way, mental images can be tied to the locations of visual scenes associated with the desired future thought. This type of interaction can be thought of as a precue paradigm, in which information about an upcoming event is provided before it occurs (Liu et al., 2021). Teleportation in virtual reality (VR) is well known to cause spatial disorientation (Bowman et al., 1997; Cherep et al., 2020; Moghadam et al., 2020), which may cause participants discomfort. To mitigate this, the relationship between future thinking, visual precues (as mental images), and spatial cognition was examined using a subtle precueing paradigm. To capture the idea of subtle narrative communication, participants were teleported for a duration within a hypothesized window of subliminal perception (Sandberg et al., 2022). A 3D VE model of a natural environment was chosen as the experimental testing ground because it brought the real world into a lab setting, balancing experimental control against ecological validity, that is, the generalizability of study results to the real world (Campbell et al., 2009; Parsons, 2015). Several studies support the notion that spatial knowledge can be effectively learnt and transferred between virtual and real environments during navigation tasks (Farrell et al., 2003; Lloyd et al., 2009; Richardson et al., 1999; Wilson et al., 1997), making the environment a valid platform for examining how the interface could operate in the real world or in extended reality (XR), an umbrella term for environments that exist across the reality–virtuality continuum (Çöltekin et al., 2020).
In addition, VR experiments facilitate the collection of spatio-temporal data (Brookes et al., 2020; Shen et al., 2018; Ugwitz et al., 2019) which can be used to conduct usability tests on interface designs for navigation tasks (Kamińska et al., 2022) and in narrative environments (Jin et al., 2022). This further justified the use of VR as the experimental platform for conducting usability testing on Future Vision. Figure 1 provides a conceptual flow diagram that summarizes the design of Future Vision. Bold text and thicker arrows highlight the key elements and linkages in the interface.

Under this framework, three research objectives were developed for this study:

Objective One—Generate a VE model of a natural environment with a bifurcating path, and construct a teleportation interface to convey future thoughts as brief mental images for narrative guidance.

Objective Two—Determine what experimental parameters are most effective for subtle narrative communication in a cognitively inspired visual imagery display.

Objective Three—Investigate whether Future Vision influences spatial cognition and spatial decision-making by analyzing the navigational and decision-making behaviors of participants during a 2AFC decision-making task in VR.

From the objectives, three research hypotheses were devised that relate to the narrative visualizations produced by Future Vision:

H1. The visualizations will not be noticed by participants.

H2. The visualizations will improve participants' spatial navigation as they perform a goal-directed 2AFC decision-making task in VR.

H3. The visualizations will improve participants' spatial decision-making as they perform a goal-directed 2AFC decision-making task in VR.

Objective one relates to the experimental design (Section 4.1), while objective two relates to Hypothesis one. Objective three relates to Hypotheses two and three.

3 LITERATURE REVIEW

The use of teleportation to incorporate mental imagery into immersive geospatial environments as a narrative visualization technique is a new area of research, and related literature is scarce. This review therefore provides background for the approach in three sections: Section 3.1 outlines the development of teleportation in VR, Section 3.2 draws on similar research to show how teleportation fits into contemporary discussions of narrative visualization, and Section 3.3 examines how narrative visualizations have been and may be used in GIScience.

3.1 Teleportation in virtual reality environments

Teleportation is a method for navigating VR environments that instantly transports a user to a designated location without translational motion, resulting in a sudden change of view (Mine, 1995). It can involve translation alone, or translation combined with rotation of the viewer's perspective (Cherep et al., 2020). Spatial disorientation is a common complication of teleportation-based locomotion (Bowman et al., 1997). Despite this, teleportation is widely adopted because it enables locomotion through VR environments that extend beyond the dimensions of small experimental rooms (Coomer et al., 2018; Langbehn, Lubos et al., 2018). Advances in hardware and the development of head-mounted displays (HMDs) such as the HTC Vive and Oculus Rift in the 2010s have led to a resurgence of research on VR locomotion techniques, with teleportation becoming one of the most prominent (Cherni et al., 2020). Point and teleport (Bozgeyikli et al., 2016) is one of the most popular methods: a line or arc extends from a hand-tracked location or a handheld controller to the designated teleport location. Hands-free teleportation methods have also been developed that rely on body movement (Bolte et al., 2011) or on body parts such as the eyes (Prithul et al., 2022) and feet (Von Willich et al., 2020).
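Point-and-teleport selection is often implemented by casting a ballistic arc from the controller and taking the arc's first intersection with the ground as the teleport target. The Python sketch below illustrates that geometry only and is not taken from any of the cited systems; it assumes a flat ground plane at y = 0, Unity's y-up convention, and hypothetical launch speed and gravity values. Practical implementations usually step along the arc and raycast against the scene geometry instead of a single plane.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres; y is up, as in Unity


def teleport_target(origin: Vec3, direction: Vec3, speed: float = 8.0,
                    gravity: float = 9.81) -> Optional[Vec3]:
    """Landing point of a ballistic arc cast from `origin` onto the plane y = 0.

    `speed` (m/s) and `gravity` (m/s^2) are illustrative values, not the
    defaults of any particular VR toolkit. Returns None if there is no valid
    landing point ahead of the controller.
    """
    ox, oy, oz = origin
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        return None  # no pointing direction
    vx, vy, vz = (speed * d / norm for d in direction)
    # Height over time: oy + vy*t - 0.5*g*t^2 = 0, i.e. a*t^2 + b*t + c = 0.
    a, b, c = -0.5 * gravity, vy, oy
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    # Because a < 0, this root is the later (descending) crossing of y = 0.
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    if t <= 0.0:
        return None
    return (ox + vx * t, 0.0, oz + vz * t)
```

For example, a controller held at 1.2 m and tilted slightly upward, `teleport_target((0.0, 1.2, 0.0), (0.0, 0.3, 1.0))`, yields a landing point roughly 6 m ahead under these assumed parameters.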

3.2 Relationship to narrative visualizations

Immersive narrative visualizations developed from the notion that virtual reality could be used as a medium for telling stories (Aylett & Louchart, 2003). This provided foundational insights for the idea of narrative environments as places where stories are told and visually displayed (Bobick et al., 1999). The Placeholder research project (Laurel et al., 1994) was one of the first studies to use teleportation as a narrative interaction technique in a virtual narrative environment, and it highlighted the duration of teleportation as an important variable when representing transitions across space and time. As a means of instantly transitioning between environmental scenes (Moghadam et al., 2020), teleportation as a narrative visualization technique is akin to discrete slideshows, in which movement is represented by transitions between sets of visual images (Andrienko et al., 2010; Mayr & Windhager, 2018; Segel & Heer, 2010). It therefore aligns with discussions of how concepts from cinematography, such as camerawork, scene transitions, and film design, can be used in the field (Cohn, 2013; Shi et al., 2021; Xu et al., 2022). A recent example is GeoCamera (Li et al., 2023), which investigated the role of camera movements and transitions in shaping narrative visualizations. Its system architecture uses camera designs that emphasize geospatial targets through shots serving specific narrative purposes.

3.3 Narrative visualizations in GIScience

Narrative visualizations combine storytelling techniques with data visualizations (Errey et al., 2023). They grew out of advances in GIScience and geovisualization in the 1980s and 1990s that emphasized the use of computers as tools for developing map-based visualizations of geospatial information (Dobson, 1983; MacEachren & Ganter, 1990; McCormick et al., 1987). Map animations have been one of the primary means of achieving this (Dibiase et al., 1992), alongside space–time cubes as interactive geospatial representations of event data (Gatalsky et al., 2004). This work informed the design of the Geo-Narrative (Kwan & Ding, 2008), one of the first narrative visualizations in GIScience, which investigated the spatial and temporal activities of an individual using a space–time diagram overlaid onto a 2D street map. Cartographic representations of narrative activities led to the development of narrative cartography (Caquard, 2013), in which narrative visualizations commonly take the form of story maps (Caquard, 2013; Caquard & Cartwright, 2014); more artistic forms, such as data comics presented as comic strip narratives, have also been proposed (Bach et al., 2018; Moore et al., 2018). Although the relationship between narrative visualizations, mental imagery, and GIScience has received little attention in the literature, Vicentiy et al. (2016) investigated the use of mental imagery in a GIS decision support system through the concept of dynamic cognitive geovisualizations, which produce cognitive geoimages that incorporate aspects of a user's mental models and perceptions of the environment into their outputs. Recent applications include immersive narrative environments for Geo-Education (Lee, 2023), which aim to achieve narrative immersion through XR, narrative-style GIS representations in virtual geographic environments (Rzeszewski & Naji, 2022).
When virtual renditions of real spaces are created, three issues must be addressed: the processes involved in creating the VE, the measures taken to optimize participants' performance in the VE, and the design of the human-virtual environment interaction (Kuliga et al., 2020). Future prospects for narrative visualizations in GIScience are tied to recent advances in Geospatial Artificial Intelligence (GeoAI), which examines how artificial intelligence techniques can improve the analysis, visualization, and representation of geospatial data (Janowicz et al., 2020). This has implications for how narrative visualizations may be generated and used in digital twin cities (Ketzler et al., 2020) as a means of governing the development of smart cities (Deng et al., 2021). The use of narrative visualizations to influence individual lives relates to the concept of AI narratives (Chubb et al., 2022), which concerns how stories are created, displayed, and analyzed using AI and how they are shaped for public perception and interpretation.

4 METHODOLOGY

To address the research objectives and hypotheses, an experiment was conducted at the University of Otago. Adult participants were recruited from the university and other communities to form experimental and control groups. Each participant received a small incentive for their time. In total, 36 participants took part in the study (18 male, 18 female; mean age 32.2 years). The final control group comprised 13 participants (6 male, 7 female) and the final experimental group 23 participants (12 male, 11 female).

4.1 Experimental design

The experimental design addressed the three issues associated with replicating real-world environments in VR identified by Kuliga et al. (2020). These are outlined in turn in the following paragraphs.

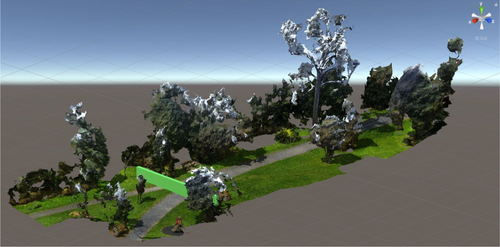

The VE is a replica of a section of Woodhaugh Gardens, Dunedin, New Zealand (Figure 2). This site was chosen for its bifurcating path, which allowed a 2AFC decision-making task to serve as a measure of how well participants performed in the VE. The VE was created from 1600 digital images of the site taken on cloudy, windless days in September 2022; these conditions provided spatial consistency and even, ambient lighting, which increased the quality of the VE model. The site also allowed the photos to be collected under consistent conditions (i.e., with no variation in environmental features during the data collection period). Two mesh models were created, aligned, and transferred into Unity, a prominent software package for 3D game development, model building, and animation. Unity has recently become a platform for studying human behavior in virtual reality experiments (Brookes et al., 2020; Ugwitz et al., 2019).

Environmental performance was related to navigating to the path junction, where a green wall obscured the location of a pink sphere along one of the two diverging paths (Figure 3). This sphere was the object that participants had to find in the 2AFC decision-making task. Its color was chosen for its low salience (Hays et al., 1972) while still contrasting with the environment enough to viably test the experimental hypotheses. Similarly, the wall that hid the pink sphere was colored green so that it blended in with the natural setting and greenery of Woodhaugh Gardens. Participants were kept naïve to the experimental design to avoid giving them information that could influence their behavior. Participants were immersed in the virtual environment with an HTC Vive Pro 2 HMD, supported by the associated VE hardware and software, including SteamVR.

The human-virtual environment interaction was facilitated in three ways: virtual movement with an HTC Vive controller, head movement, and locomotion by teleportation. Participants were teleported either 1 or 5 s into the final navigation task, which shifted their first-person perspective from where they were to that of a future location at the end of the path, near the path junction (Figure 3). They remained there for either 60 or 90 ms, the lower and upper bounds of the hypothesized range of subliminal perception reviewed by Sandberg et al. (2022), after which the view returned to the participant's own first-person perspective at their previous position. This subtly communicated decision and movement information, which acted as narrative guidance for participants during their interactions with the environment. Similar research refers to this process as discrete viewpoint control (Ryge et al., 2018) or rotation and translation snapping (Farmani & Teather, 2020) and, when combined with continuous locomotion, as hyperjumping (Adhikari et al., 2022; Riecke et al., 2022). This type of interface design has been found to reduce simulator sickness by up to 50% without changing user presence or performance (Farmani & Teather, 2020).
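The precue is, in effect, a timed viewpoint swap. The Python sketch below is not the Future Vision implementation, which was built in Unity; it models the nominal schedule and shows why such brief durations depend on the display's refresh rate. It assumes a hypothetical 90 Hz refresh rate and a per-frame timer check that restores the view on the first frame at which the nominal duration has elapsed. Under those assumptions, 60 and 90 ms exposures last 6 and 9 frames (about 67 and 100 ms).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Precue:
    onset_s: float      # time into the navigation task (1 or 5 s in the study)
    duration_ms: float  # nominal time at the future viewpoint (60 or 90 ms)


def frames_shown(duration_ms: float, refresh_hz: float = 90.0) -> int:
    """Frames rendered from the future viewpoint.

    Assumes the view swaps on one frame and is restored on the first later
    frame at which the nominal duration has elapsed (a per-frame timer check).
    The 90 Hz default is an assumption, not a reported setting.
    """
    return math.ceil(duration_ms * refresh_hz / 1000.0)


def effective_ms(duration_ms: float, refresh_hz: float = 90.0) -> float:
    """Exposure actually delivered once the duration is quantized to frames."""
    return frames_shown(duration_ms, refresh_hz) * 1000.0 / refresh_hz


def active_viewpoint(t_s: float, cue: Precue) -> str:
    """Viewpoint scheduled at time t_s (nominal timing, before quantization)."""
    end_s = cue.onset_s + cue.duration_ms / 1000.0
    return "future" if cue.onset_s <= t_s < end_s else "current"
```

Under these assumptions `effective_ms(90)` returns 100.0, so logging frame timestamps during the swap would be one way to check the exposure participants actually received.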

4.2 Experimental procedure

The experimental procedure was divided into three stages. In stage one (10 min), participants read and signed an information sheet and consent form. They then completed parts A–C of a paper questionnaire, which sought demographic information and a self-assessment of their spatial abilities and technological familiarity. Next, to optimize environmental performance, participants were given a demonstration that introduced them to the VR headset, the virtual environment, and the movement controller.

In stage two, participants were placed in the center of the experiment room, which had a calibrated virtual floor area of 2 m × 2 m. They put on the headset for a period of free exploration to develop a cognitive map of the area and to familiarize themselves with the movement controller. Participants spent 5–15 min exploring the environment, which included moving past the green wall to see an example of one of the paths on which the pink sphere could appear. The sphere's path was randomized on a trial-by-trial basis for each participant (including during their initial exploration), and participants were told that the sphere could appear on either path. Important landmarks for the stage three tasks were identified during this time.

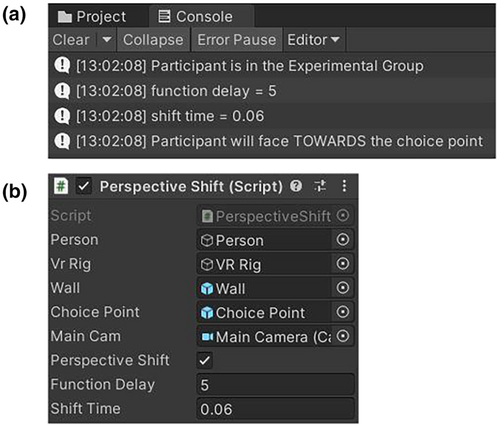

In stage three, participants were given an outline of the navigation task along with a description of the SteamVR home environment, in which they waited while each trial was prepared. Participants were informed that they would start the task either facing toward (Figure 4) or away from the green wall. Before each trial, they were told which way to face in the physical world and that the SteamVR home environment would be displayed when the virtual environment was about to appear. Upon entering the experimental environment, participants traveled along the path (after turning around if they were facing away from the wall) until they were near the path junction. They were then asked to choose which path they thought the pink sphere was behind. A few seconds after they made their choice, the wall was removed, revealing whether their decision was correct. Participants were then returned to the SteamVR home environment to await the next trial. For the control group, the only experimental parameter was whether they faced toward or away from the green wall (two trials). The experimental group completed the same conditions combined with the two additional parameters described in Section 4.1, teleport onset and teleport duration (eight trials in total). The parameters for each trial were randomly generated using a separate program. Figure 5 gives an example of the output parameters for one such randomly generated trial and how they are displayed in the perspective shift code during a trial run. Once all trials were completed, participants took off the headset and completed part D of the questionnaire, which sought information about their experiences in the virtual environment. This stage took around 10–15 min for the control group and 20–25 min for the experimental group. Overall, the experiment took around 25–40 min to complete for the control group and 35–50 min for the experimental group.
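The separate program that generated the trial parameters is not described further. The following is a minimal Python sketch of one way to do this, assuming a full factorial design in which each experimental participant receives every combination of facing direction, teleport onset, and teleport duration once (2 × 2 × 2 = 8 trials) in random order, with the sphere's path drawn at random for each trial. The function name, field names, and path labels are hypothetical.

```python
import itertools
import random
from typing import Dict, List, Optional

DIRECTIONS = ("toward", "away")   # starting orientation relative to the green wall
ONSETS_S = (1, 5)                 # teleport onset, seconds into the task
DURATIONS_MS = (60, 90)           # teleport duration, milliseconds
PATHS = ("left", "right")         # hypothetical labels for the two paths


def make_trials(group: str, seed: Optional[int] = None) -> List[Dict]:
    """Randomized trial list for one participant."""
    rng = random.Random(seed)
    if group == "control":
        trials = [{"facing": d} for d in DIRECTIONS]
    elif group == "experimental":
        # Full factorial: every combination once, 2 x 2 x 2 = 8 trials.
        trials = [
            {"facing": d, "onset_s": o, "duration_ms": m}
            for d, o, m in itertools.product(DIRECTIONS, ONSETS_S, DURATIONS_MS)
        ]
    else:
        raise ValueError(f"unknown group: {group!r}")
    rng.shuffle(trials)
    for trial in trials:
        trial["sphere_path"] = rng.choice(PATHS)  # drawn independently per trial
    return trials
```

Seeding the generator, for example `make_trials("experimental", seed=12)`, makes a participant's trial sequence reproducible.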

4.3 Data collection

Data for testing the experimental hypotheses were collected during and after participants' navigation of the virtual environment. While participants navigated the virtual environment and carried out the 2AFC decision-making task, their 3D VE position and time data were recorded from the VR headset at every frame update and stored in a text file. Participants' 2AFC decisions were recorded. After each teleport, it was also noted whether participants claimed to see the pink sphere while navigating to the path junction (a verbal response) or appeared to react to being teleported (a reactive response). Examples of reactive responses include noises suggesting that participants had noticed the spatial disruption caused by the teleport, or changes in movement behavior such as looking around more or a discontinuity in their movement. After navigating the virtual environment, participants filled out part D of the questionnaire (post-VR experience), which consisted of 16 five-point Likert scale questions and four written responses. Some participants were informally interviewed after the experiment to determine whether, on reflection, they had noticed the pink sphere at any time during the session before making their decision and, if so, how often.
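The structure of the per-frame text file is not reported. The sketch below assumes a hypothetical layout of one whitespace-separated line per frame, holding the elapsed time in seconds followed by the headset's x, y, and z position in metres, and shows how such a log could be written and read back for analysis.

```python
from typing import Iterable, List, NamedTuple


class Sample(NamedTuple):
    t: float  # seconds since the trial started
    x: float  # horizontal position (m)
    y: float  # vertical position (m), Unity's y-up convention
    z: float  # horizontal position (m)


def format_sample(s: Sample) -> str:
    """One log line in the assumed 't x y z' layout."""
    return f"{s.t:.4f} {s.x:.4f} {s.y:.4f} {s.z:.4f}"


def parse_log(lines: Iterable[str]) -> List[Sample]:
    """Parse log lines into samples, skipping blank or malformed lines."""
    samples = []
    for line in lines:
        parts = line.split()
        if len(parts) != 4:
            continue
        samples.append(Sample(*map(float, parts)))
    return samples
```

With a file on disk, `with open(path) as f: samples = parse_log(f)` yields one `Sample` per logged frame, where `path` is a placeholder for the trial's log file.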

4.4 Data analysis

Summary statistics for the Likert responses and virtual environment data were tabulated and compared between the control and experimental groups. Both data sets were tested for normality with a Shapiro–Wilk test. If the responses in both groups were normally distributed, Bartlett's test for equality of variances was applied; if the variances were equal, a two-sample t-test assuming equal variances was performed, and otherwise a two-sample t-test assuming unequal variances was performed. Because Future Vision was hypothesized to produce improvements, the two-sample t-tests were one-tailed. If either group's responses were not normally distributed, a non-parametric Mann–Whitney U-test was performed instead. All tests used a significance level of α = 0.05. The analysis of the experimental interface was informed by the definition of usability given by the International Organization for Standardization (ISO 9241), which examines efficiency, effectiveness, and user satisfaction (Jokela et al., 2003). Task completion times and movement speeds measured efficiency, performance in the 2AFC decision-making task measured effectiveness, and Likert responses measured user satisfaction.
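The decision rules above can be written down as a small function. This sketch is not the authors' analysis code. It assumes that "normally distributed" means the Shapiro–Wilk test did not reject normality at α = 0.05 in either group, and that "equal variances" means Bartlett's test did not reject equality. In Python, the p-values could come from `scipy.stats.shapiro` and `scipy.stats.bartlett`, and the chosen test maps to `scipy.stats.ttest_ind` (with `equal_var=True` or `False` and a one-sided `alternative`) or `scipy.stats.mannwhitneyu`.

```python
from typing import Optional

ALPHA = 0.05  # significance level used for every test


def choose_test(shapiro_p_control: float, shapiro_p_experimental: float,
                bartlett_p: Optional[float] = None) -> str:
    """Return the comparison test implied by the normality and variance checks."""
    if shapiro_p_control < ALPHA or shapiro_p_experimental < ALPHA:
        return "mann-whitney-u"  # at least one group departs from normality
    if bartlett_p is None:
        raise ValueError("Bartlett's p-value is needed when both groups are normal")
    if bartlett_p >= ALPHA:
        return "student-t-one-tailed"  # equal variances assumed
    return "welch-t-one-tailed"  # unequal variances
```

Keeping the selection logic separate from the statistics library makes the branching easy to audit against the written procedure.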

Task completion time was defined as the time a participant took to navigate to the path junction before carrying out the 2AFC decision-making task. It was taken as the earliest timestamp associated with the final stationary position, identified from the end of the trial's text file. Because the control group had only two trials (from the direction parameter), a subset of the experimental group was used to compare task completion times between groups. To allow a fair comparison, these participants needed to have had both a toward and an away condition in their first two trials; 14 participants from the experimental group met this criterion. Averaging each participant's two completion times gave their two-trial (2T) task completion time, which was compared between groups using a two-sample t-test assuming equal variances.
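A minimal sketch of the completion-time rule and the subset criterion, assuming each trial's log has been parsed into time-ordered (t, x, y, z) tuples. Because a headset is never perfectly still, "stationary" is approximated here by a hypothetical 1 cm tolerance on horizontal (x, z) position; the function walks back from the end of the log and returns the earliest time of the final stationary run.

```python
import math
from typing import Sequence, Tuple

Row = Tuple[float, float, float, float]  # (t, x, y, z); y is vertical


def completion_time(samples: Sequence[Row], tol_m: float = 0.01) -> float:
    """Earliest timestamp of the final stationary run in one trial's log.

    Walks back from the last sample while the horizontal (x, z) position stays
    within `tol_m` metres of the final position; `tol_m` is a hypothetical value.
    """
    if not samples:
        raise ValueError("empty trial log")
    t_done, fx, _, fz = samples[-1]
    for t, x, _, z in reversed(samples):
        if math.hypot(x - fx, z - fz) > tol_m:
            break
        t_done = t
    return t_done


def two_trial_time(trial_a: Sequence[Row], trial_b: Sequence[Row]) -> float:
    """2T completion time: mean of the first two trials' completion times."""
    return (completion_time(trial_a) + completion_time(trial_b)) / 2.0


def eligible(first_two_facings: Sequence[str]) -> bool:
    """True if the first two trials include one 'toward' and one 'away' start."""
    return sorted(first_two_facings) == ["away", "toward"]
```

The tolerance trades off sensitivity to small head movements against the risk of treating slow final steps as stationary; it would need to be tuned on real logs.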

Speed data include the time before the participant begins navigating with the movement controller. Average speed was calculated by dividing the average distance traversed by a participant in their first two trials by their 2T task completion time. For each trial, distance was calculated as the straight-line distance in the horizontal plane (the x- and z-axes of the VE) between the participant's position at t = 0 and the stationary position associated with the completion time. The difference in average speed between the two groups was tested with a two-sample t-test assuming equal variances. Speed data were also evaluated over the first 10, 20, and 30 s, as well as over the 10- to 20-s and 20- to 30-s intervals. Because the data allowed it, continuous movement speeds in the 10- to 20-s interval were also compared between groups. Two-sample t-tests assuming equal variances were performed on the 20- and 30-s intervals, on continuous movement in the 10- to 20-s interval, and on discontinuous movement in the 20- to 30-s interval. Non-parametric Mann–Whitney U-tests were performed on the 10-s interval and on discontinuous movement in the 10- to 20-s interval.
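The speed calculation can be sketched as follows, again assuming time-ordered (t, x, y, z) tuples per trial whose first sample is at t = 0. `average_speed` follows the description above (mean straight-line x-z distance over the two trials divided by the 2T completion time). `window_speed`, which divides the x-z displacement between the start and end of an interval by the interval's length, is only one possible reading of how the interval speeds were computed and is an assumption.

```python
import math
from typing import List, Sequence, Tuple

Row = Tuple[float, float, float, float]  # (t, x, y, z); y is vertical


def horizontal_distance(a: Row, b: Row) -> float:
    """Straight-line distance between two samples in the x-z plane."""
    return math.hypot(b[1] - a[1], b[3] - a[3])


def position_at(samples: Sequence[Row], t: float) -> Row:
    """Last sample logged at or before time t (samples sorted by time)."""
    best = samples[0]
    for s in samples:
        if s[0] > t:
            break
        best = s
    return best


def average_speed(trials: List[Sequence[Row]], completion_times: List[float]) -> float:
    """Mean start-to-stop x-z distance divided by the mean (2T) completion time."""
    distances = [horizontal_distance(tr[0], position_at(tr, ct))
                 for tr, ct in zip(trials, completion_times)]
    mean_distance = sum(distances) / len(distances)
    mean_time = sum(completion_times) / len(completion_times)
    return mean_distance / mean_time


def window_speed(samples: Sequence[Row], start_s: float, end_s: float) -> float:
    """Assumed interval speed: x-z displacement over [start_s, end_s] / length."""
    start, end = position_at(samples, start_s), position_at(samples, end_s)
    return horizontal_distance(start, end) / (end_s - start_s)
```

Because displacement ignores detours, a path-length version (summing distances between consecutive samples) would give higher speeds for winding movement; which one matches the reported values is not stated.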

Experimental data from the 2AFC decision-making task were compared with a null hypothesis distribution that assumed chance performance on the task, using a two-sample t-test assuming equal variances. This addressed overall performance; path choice accuracy was also analyzed for each condition to establish which parameters were most effective for subtly communicating decision and movement information to guide participants, in accordance with objective three. Counts of verbal and reactive responses for participants in the experimental group during the navigation task were analyzed to determine whether participants were more likely to notice certain experimental parameters than others. Precue perception was also evaluated more broadly based on the informal interview responses.
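If each of an experimental participant's eight path choices is treated as an independent guess with a 0.5 probability of being correct, the number of correct choices follows a binomial distribution. The sketch below computes that exact chance distribution of accuracy scores and its mean and standard deviation. It is an illustrative alternative under that assumption, not the idealized normal distribution derived in the supporting materials, so its spread need not match the expected values used in the analysis.

```python
from math import comb, sqrt
from typing import Dict, Tuple


def chance_distribution(n_trials: int = 8, p: float = 0.5) -> Dict[float, float]:
    """Binomial probabilities of each accuracy score (percent correct) under guessing."""
    return {
        100.0 * k / n_trials: comb(n_trials, k) * p**k * (1 - p) ** (n_trials - k)
        for k in range(n_trials + 1)
    }


def chance_mean_sd(n_trials: int = 8, p: float = 0.5) -> Tuple[float, float]:
    """Mean and standard deviation of percent correct under the binomial model."""
    return 100.0 * p, 100.0 * sqrt(p * (1 - p) / n_trials)
```

With the defaults, the mean is 50% and the standard deviation is about 17.7 percentage points, and a perfect score of 8/8 has probability 1/256 under guessing.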

Likert responses covered user feedback about the virtual environment interface and experience, feedback about the human-virtual environment interaction, and whether participants felt that their spatial cognition was enhanced in the environment. Mann–Whitney U-tests were performed on all questions to establish statistical significance.

5 RESULTS

Measures of the efficiency and effectiveness of Future Vision yielded statistically significant results, whereas measures of user satisfaction did not (Table 1). The following sections present the results for each of these categories.

Table 1 Usability measures, the usability variable each assesses, and associated p-values.

| Usability measure | Usability variable | p-value(s) |
|---|---|---|
| Task completion times (s) | Efficiency | 0.0088 |
| Movement speeds (m/s) | Efficiency | 0.0057 |
| 2AFC decisions | Effectiveness | 0.043 |
| Likert question responses | User satisfaction | None significant |

5.1 Interface efficiency

Table 2 summarizes average task completion times and Table 3 average participant movement speeds. Both measures of interface efficiency show clear improvements with Future Vision: task completion times were shorter and movement speeds faster in the experimental group than in the control group.

Table 2 Summary statistics for average task completion times (s).

|  | Mean | Median | SD | Minimum | Maximum |
|---|---|---|---|---|---|
| Control | 38.29 | 37.18 | 14.11 | 23.63 | 70.40 |
| Experimental | 26.88 | 24.93 | 8.81 | 15.66 | 50.34 |
| Difference | 11.41 | 12.25 | 5.30 | 7.97 | 20.06 |
| Change (%) | 29.80 | 32.95 | 37.59 | 33.73 | 28.49 |

Table 3 Summary statistics for average movement speeds (m/s).

|  | Mean | Median | SD | Minimum | Maximum |
|---|---|---|---|---|---|
| Control | 1.79 | 1.68 | 0.58 | 0.95 | 2.71 |
| Experimental | 2.43 | 2.44 | 0.64 | 1.23 | 3.52 |
| Difference | 0.64 | 0.76 | 0.06 | 0.28 | 0.81 |
| Change (%) | 35.75 | 45.24 | 10.34 | 29.47 | 29.89 |
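The Difference and Change (%) rows in these tables can be reproduced from the group values: the difference is the absolute gap between the groups, and the percentage change expresses it relative to the control (baseline) value. A minimal sketch, with a hypothetical function name:

```python
from typing import Tuple


def percent_change(baseline: float, other: float) -> Tuple[float, float]:
    """Absolute difference and percentage change relative to `baseline`.

    Mirrors the Difference and Change (%) rows: differences are reported as
    positive magnitudes and the control group is the baseline.
    """
    diff = abs(other - baseline)
    return round(diff, 2), round(100.0 * diff / baseline, 2)
```

For the mean completion times, `percent_change(38.29, 26.88)` gives `(11.41, 29.8)`, matching the Mean column of Table 2; for the mean movement speeds, `percent_change(1.79, 2.43)` gives `(0.64, 35.75)`.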

When movement speeds were partitioned into three categories (the first 10, 20, and 30 s), differences in average movement speed over the first 20 s were statistically significant, whereas differences over the first 10 s and the first 30 s were not. Further partitioning showed that differences in average movement speed in the 10- to 20-s interval produced the most statistically significant result. However, differences in average movement speed were not statistically significant for continuous movement in this interval (10–20 refined). Table 4 provides the average movement speeds and p-values for each category. The results show that discontinuous movement speeds in the 10- to 20-s window contributed most to the statistically significant improvement in average movement speeds between the two groups.

Table 4. Average movement speeds by time category, with p-values for the between-group differences.

| Category (s) | Control | Experimental | Difference | Change (%) | p-value |
|---|---|---|---|---|---|
| 0–10 | 0.76 | 0.99 | 0.23 | 30.26 | 0.63 |
| **0–20** | 1.55 | 2.11 | 0.56 | 36.13 | **0.043** |
| 0–30 | 1.35 | 1.63 | 0.28 | 20.74 | 0.31 |
| **10–20** | 2.33 | 3.22 | 0.89 | 38.20 | **0.029** |
| 10–20 refined | 3.56 | 3.75 | 0.19 | 5.34 | 0.62 |
| 20–30 | 1.75 | 1.90 | 0.15 | 8.57 | 0.76 |

Note: Statistically significant categories are highlighted in bold text.
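The text does not specify how participant positions were logged or how the continuous-movement subset ("10–20 refined") was selected, so the following sketch only illustrates the basic windowing step: average speed computed as path length divided by elapsed time over the samples that fall inside a time window. The `(t, x, z)` sample format, the inclusive window bounds, and the synthetic track are assumptions for illustration.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical log format: (time in s, x, z) ground-plane position, sorted by time.
Sample = Tuple[float, float, float]


def mean_speed(samples: List[Sample], start: float, end: float) -> float:
    """Average speed (path length / elapsed time) over samples with start <= t <= end."""
    window = [s for s in samples if start <= s[0] <= end]
    if len(window) < 2 or window[-1][0] == window[0][0]:
        return float("nan")  # not enough samples to measure movement
    path = sum(math.hypot(b[1] - a[1], b[2] - a[2]) for a, b in zip(window, window[1:]))
    return path / (window[-1][0] - window[0][0])


def windowed_speeds(samples: List[Sample], windows) -> Dict[Tuple[float, float], float]:
    """Mean speed for each (start, end) window, analogous to the Table 4 categories."""
    return {(s, e): mean_speed(samples, s, e) for s, e in windows}


# Synthetic example: stand still for 10 s, then move at 3 units/s until 30 s.
track = [(i * 0.5, 0.0 if i * 0.5 < 10 else 3.0 * (i * 0.5 - 10), 0.0) for i in range(61)]
speeds = windowed_speeds(track, [(0, 10), (0, 20), (0, 30), (10, 20), (20, 30)])
for window, value in speeds.items():
    print(window, round(value, 3))
```

The synthetic track shows why cumulative windows (0–20, 0–30) dilute a speed difference that is concentrated in one interval.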

5.2 Interface effectiveness

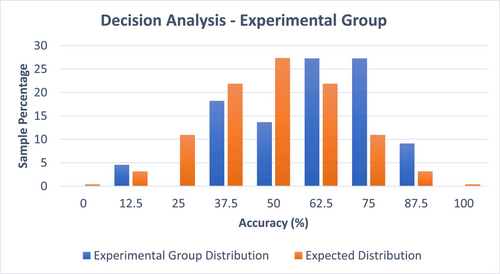

The effectiveness of Future Vision is measured by the number of correct path choices in the 2AFC decision-making task. Figure 6 compares the distribution of path choice accuracy for participants in the experimental group with an idealized normal distribution (see supporting materials for derivation), which represents how often participants would choose the correct path if their choices were made by chance alone. Summary statistics for both distributions are shown in Table 5.

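Table 5. Summary statistics for path choice accuracy (%) in the experimental group and the distribution expected by chance.

| Distribution | Mean | Median | SD | Minimum | Maximum |
|---|---|---|---|---|---|
| Experimental | 60.0 | 62.5 | 18.8 | 12.5 | 87.5 |
| Expected | 50.0 | 50.0 | 15.0 | 25.0 | 75.0 |
| Difference | 10.0 | 12.5 | 3.8 | 12.5 | 12.5 |
| Change (%) | 20.0 | 25.0 | 25.3 | 50.0 | 16.6 |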

The difference in path choice accuracy was statistically significant: on average, participants who used Future Vision chose the correct path at a rate above chance. However, no progressive improvement in decision-making was observed between the two groups as the experimental session progressed. Table 6 gives the choice performance for each experimental condition. Overall, conditions in which participants started facing toward the wall led to higher performance in the 2AFC decision-making task. Compared with the improvements in average task completion times and movement speeds, the improvement in average decision-making differs in statistical significance by an order of magnitude. This can be attributed to some experimental conditions being more effective than others at communicating decision information. Even so, seven of the eight experimental conditions performed above chance, while one (condition seven) performed at chance level.

Table 6. Path choice performance of the experimental group for each experimental condition.

| Condition | Direction | Delay (s) | Duration (s) | Correct | Incorrect | % correct |
|---|---|---|---|---|---|---|
| 1 | Towards | 1 | 0.06 | 15 | 7 | 68.2 |
| 2 | Towards | 1 | 0.09 | 15 | 7 | 68.2 |
| 3 | Towards | 5 | 0.06 | 14 | 8 | 63.4 |
| 4 | Towards | 5 | 0.09 | 13 | 9 | 59.1 |
| 5 | Away | 1 | 0.06 | 12 | 10 | 54.5 |
| 6 | Away | 1 | 0.09 | 13 | 9 | 59.1 |
| 7 | Away | 5 | 0.06 | 11 | 11 | 50.0 |
| 8 | Away | 5 | 0.09 | 12 | 10 | 54.5 |
| Total | | | | 105 | 71 | 59.7 |
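The text does not state which test was used to compare path choice accuracy with chance. As one possibility, an exact binomial test of the pooled totals in Table 6 against a 50% chance rate can be sketched with the Python standard library. This is a simplification and not necessarily the authors' analysis: pooling treats all 176 trials as independent even though each participant contributed eight of them.

```python
from math import comb


def binomial_pmf(n: int, p: float) -> list:
    """Probability of each number of correct choices 0..n when choosing at chance rate p."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def binomial_test_two_sided(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: sum the probabilities of all outcomes
    that are no more likely than the observed one."""
    pmf = binomial_pmf(n, p)
    observed = pmf[k]
    # Small relative tolerance so that tied outcomes are not lost to rounding error.
    p_value = sum(q for q in pmf if q <= observed * (1 + 1e-7))
    return min(1.0, p_value)


# Pooled totals from Table 6: 105 correct out of 105 + 71 = 176 trials.
correct, incorrect = 105, 71
p_value = binomial_test_two_sided(correct, correct + incorrect)
print(f"accuracy = {correct / (correct + incorrect):.1%}, two-sided p = {p_value:.4f}")
```

A mixed-effects logistic model with a per-participant random intercept would respect the repeated-measures structure better than this pooled test.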

Results from informal interviews showed that, on reflection, most participants reported noticing the pink sphere once or twice, while some reported noticing it two to three times. A couple of participants claimed to have seen the pink sphere half of the time, whereas others did not notice it at all. Verbal and reactive responses during the sessions revealed eight cases in which a participant noticed the pink sphere at least once during their session of eight trials. These were not always the same participants who reported noticing the pink sphere in their written questionnaire responses. Of these eight cases, five involved one trial and three involved two trials. Verbal and reactive responses therefore occurred in ~6% of the trials for the experimental group.

Table 7 ranks the effectiveness of the experimental parameters, weighted by the number of times participants noticed the pink sphere and by path choice accuracy. The former is weighted more strongly than the latter because it relates to Hypothesis One. On this basis, conditions one and two are the most effective experimental parameters: they had the highest path choice accuracy, and there were no reported instances of participants noticing the precues. In both conditions, the precue was displayed quickly (one second into the task) while participants faced toward the wall, the location where they would make their path decision. Their effectiveness was not influenced by the change in shift duration.

Table 7. Ranked effectiveness of the experimental parameters.

| Condition | Direction | Delay (s) | Duration (s) | Noticed | % correct | Rank |
|---|---|---|---|---|---|---|
| 1 | Towards | 1 | 0.06 | 0 | 68.2 | 1 |
| 2 | Towards | 1 | 0.09 | 0 | 68.2 | 1 |
| 6 | Away | 1 | 0.09 | 0 | 59.1 | 2 |
| 3 | Towards | 5 | 0.06 | 2 | 63.4 | 3 |
| 4 | Towards | 5 | 0.09 | 4 | 59.1 | 4 |
| 5 | Away | 1 | 0.06 | 1 | 54.5 | 5 |
| 8 | Away | 5 | 0.09 | 3 | 54.5 | 6 |
| 7 | Away | 5 | 0.06 | 1 | 50.0 | 7 |
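Assuming the Noticed column of Table 7 counts trials in which the pink sphere was noticed, the ~6% figure reported in the text can be checked directly against the trial total from Table 6 and the per-participant tally from the interviews:

```python
# "Noticed" counts per condition from Table 7 (assumed to be trial counts).
noticed = {1: 0, 2: 0, 3: 2, 4: 4, 5: 1, 6: 0, 7: 1, 8: 3}
total_trials = 105 + 71  # correct + incorrect choices in Table 6

noticed_trials = sum(noticed.values())
# Cross-check with the interview tally: five cases in one trial, three in two trials.
from_participants = 5 * 1 + 3 * 2
share = 100 * noticed_trials / total_trials
print(noticed_trials, from_participants, f"{share:.2f}%")  # prints: 11 11 6.25%
```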

5.3 User satisfaction

User satisfaction was assessed with the following items from part D of the questionnaire:

- Q1: I enjoyed navigating through the virtual environment.
- Q2: It was easy to navigate through the virtual environment.
- Q3: The virtual environment experience was exciting.
- Q5: The virtual environment seemed glitchy.
- Q16: My spatial cognition improved in the virtual environment.

For all of these questions, there were no statistically significant differences in responses between the control and experimental groups. Table 8 shows the average scores for these questions. Both groups generally agreed with the first three statements, while responses to the last two were neutral. Although the differences are not significant, the results suggest that Future Vision did not adversely affect the user experience, as participants in both groups reported similar levels of enjoyment, ease of use, and perceived glitchiness. An interesting result is that question D16 reveals a disparity between participants' perceptions of their spatial cognitive abilities when interacting with Future Vision and their actual performance while using the interface. Put another way, even if participants reported noticing the precues, they were unaware that the visualizations produced by Future Vision had improved their spatial cognition in the context of the task they performed.

Table 8. Average scores for questionnaire items D1, D2, D3, D5, and D16.

| Group | D1 | D2 | D3 | D5 | D16 |
|---|---|---|---|---|---|
| Control | 3.9 | 4.3 | 3.8 | 3.3 | 3.0 |
| Experimental | 3.9 | 4.2 | 3.6 | 3.2 | 2.7 |
| Difference | 0.0 | 0.1 | 0.2 | 0.1 | 0.3 |
| Change (%) | 0.0 | 2.3 | 5.3 | 3.0 | 10.0 |

6 DISCUSSION

This section begins by relating the results to the research objectives and hypotheses and to the relevant literature, highlighting their contributions. The limitations and future directions are then discussed, followed by the implications of the results for GIScience and geospatial interface design. Emphasis is placed on how the scope of GIScience is widened in XR through the construction of cognitively engineered narrative interfaces that situate mental imagery in geospatial environments.

6.1 Implications for research objectives and hypotheses

This section discusses the implications of the results for the research objectives and experimental hypotheses, beginning with Objective Two and Hypothesis One, followed by Objective Three and Hypotheses Two and Three. Objective One is omitted, as it was addressed in the experimental design section of the methods.

Objective Two: Determine what experimental parameters are most effective for subtle narrative communication in a cognitively inspired visual imagery display.

The use of camera movements to subtly portray cognitively inspired geospatial information is an emerging area of narrative visualization research. In this regard, Future Vision extends GeoCamera (Li et al., 2023) into the 3D XR domain through virtual reality, using camera manipulations associated with teleportation simultaneously as a navigational interaction technique, a means of cognitive geospatial inquiry, and a narrative visualization technique for representing the mental images of future thoughts in a geospatial context. The effectiveness of this technique is defined by the performance of the experimental conditions in the 2AFC decision-making task. In a related study, Käthner et al. (2015) used a 62.5 ms single flash stimulus to examine cognitive communication in a spelling task with an HMD brain–computer interface (HMDBCI) in VR, where participants achieved an average accuracy of 64%. This differs from the average accuracy of 59.7% in this study, a discrepancy that is likely due to the broad range of experimental conditions used here. Averaging the experimental conditions in Table 6 by facing direction supports this: the toward conditions had an average accuracy of 64.7%, whereas the away conditions had an average accuracy of 54.5%. The average performance of the toward conditions is therefore consistent with the accuracy reported in the HMDBCI study for a single flash stimulus within the 60–90 ms range. One possible explanation for why the toward conditions outperformed the away conditions is that participants were rotating at the time of the perspective shift. Such rotation could cause visual loss through saccade-contingent updating, in which visual information in a scene is updated at the same time as an eye movement (saccade), so that image blur while the eye is in motion impairs the visual system (Triesch et al., 2002).
Taken together, these results provide new insights into how mental imagery can be embedded in spatial environments and used as a form of cognitively inspired geospatial narrative communication, adding to the visual narrative comprehension literature (Cohn, 2020).
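The facing-direction averages quoted above can be reproduced as simple means of the per-condition percentages as printed in Table 6. A minimal sketch:

```python
from statistics import mean

# Per-condition accuracy (% correct) as printed in Table 6, keyed by condition.
TABLE_6 = {
    1: ("Towards", 68.2), 2: ("Towards", 68.2),
    3: ("Towards", 63.4), 4: ("Towards", 59.1),
    5: ("Away", 54.5), 6: ("Away", 59.1),
    7: ("Away", 50.0), 8: ("Away", 54.5),
}


def mean_accuracy_by_direction(table: dict) -> dict:
    """Group conditions by facing direction and average their % correct."""
    groups = {}
    for direction, pct in table.values():
        groups.setdefault(direction, []).append(pct)
    return {direction: mean(values) for direction, values in groups.items()}


averages = mean_accuracy_by_direction(TABLE_6)
for direction, value in averages.items():
    print(f"{direction}: {value:.1f}%")  # Towards: 64.7%, then Away: 54.5%
```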

Hypothesis One. The visualizations produced by Future Vision will not be noticed by the participants.

The results show a range of responses to the precues: some participants reported noticing the precues half of the time, while others were unaware of them. Because Hypothesis One refers to the experimental conditions collectively, it is rejected on the basis that some decisions were informed by consciously perceived precues. However, if Hypothesis One were evaluated for each condition individually, it could be accepted for conditions one, two, and six, for which there were no reported instances of participants noticing the precues during the experiment.

Objective Three: Investigate whether Future Vision influences spatial cognition and spatial decision-making by analyzing the navigational and decision-making behaviors of participants during a 2AFC decision-making task in VR.

The experimental results agree with previous literature on precue interfaces, which provide task information ahead of time through imagery-based visualizations, including in XR environments. These studies found that precue interfaces improve task completion times and movement speeds in pointing tasks on 2D screens (Hertzum & Hornbæk, 2013), in 2D virtual and augmented reality interfaces (Liu et al., 2021; Volmer et al., 2018), and in 3D augmented reality environments when multiple precues are used in succession (Liu et al., 2022). This study extends these results to navigation and decision-making in 3D VR narrative environments.

Hypothesis Two. The visualizations produced by Future Vision will improve the participant's spatial navigation as they perform a goal-directed 2AFC decision-making task in VR.

And:

Hypothesis Three. The visualizations produced by Future Vision will improve the participant's decision-making as they perform a goal-directed 2AFC decision-making task in VR.

Based on the results discussed above, both hypotheses can be accepted over their null hypotheses: the experimental group showed statistically significant improvements in task completion times and movement speeds, as well as in the 2AFC decision-making task.

6.2 Limitations and future research

Limitations of the study include the large number of images used to compile and generate the 3D virtual environment and the processing power of the GPU, the latter being the more significant of the two. When participants interacted with Future Vision, however, the biggest limitation came from saccades and eye blinks, which resulted in a combined visual loss of 53% (see supporting materials for derivation). As a result, about half of the visualizations went unnoticed by participants, reducing their decisions on those trials to chance. Had this been controlled for, further improvements might have been observed in the decision-making of the experimental group, strengthening support for Hypothesis Three. Visual loss also makes it difficult to identify which visualization parameters successfully communicated narrative information in a subtle way, because a participant may have failed to notice the pink sphere simply because it was lost to a blink or saccade. As such, factors contributing to visual loss represent the most significant problem when incorporating mental imagery into geospatial environments for narrative guidance. Finally, we note the absence of a rigorous quantitative method for determining how often participants noticed the pink sphere during their experimental sessions, and a weakness in the questionnaire for determining participants' EFT abilities.
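The claim that unseen precues reduce decisions to chance can be made concrete with a simple mixture model, which is an assumption for illustration and not an analysis performed in the study. If a fraction `visual_loss` of precues is missed and those trials are decided at 50%, overall accuracy is a weighted average of chance and the accuracy on trials where the precue was seen. Inverting that relation gives a rough, hypothetical estimate of accuracy on seen-precue trials from the reported 59.7% accuracy and 53% visual loss, assuming visual loss is independent of condition and of the participant.

```python
def expected_accuracy(seen_accuracy: float, visual_loss: float, chance: float = 0.5) -> float:
    """Overall accuracy if a fraction `visual_loss` of precues is missed and
    those trials are decided at chance (simple mixture model, an assumption)."""
    return (1 - visual_loss) * seen_accuracy + visual_loss * chance


def implied_seen_accuracy(observed: float, visual_loss: float, chance: float = 0.5) -> float:
    """Invert expected_accuracy: accuracy on trials where the precue was seen."""
    if not 0 <= visual_loss < 1:
        raise ValueError("visual_loss must be in [0, 1)")
    return (observed - visual_loss * chance) / (1 - visual_loss)


# Inputs from the text: 59.7% overall accuracy and 53% combined visual loss.
seen = implied_seen_accuracy(0.597, 0.53)
print(f"implied accuracy on seen-precue trials: {seen:.1%}")
```

Under this model, any reduction in visual loss raises overall accuracy toward the seen-precue accuracy, which is the sense in which controlling for blinks and saccades could strengthen Hypothesis Three.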

Future research could incorporate the perceptual awareness scale (Ramsøy & Overgaard, 2004) into part D of the questionnaire as a means of quantifying participants' subjective perceptions of the precue. In addition, to quantitatively evaluate a participant's future thinking abilities, the experimental questionnaire could be adapted from the Likert-scale future thinking questionnaire created by Mazachowsky and Mahy (2020). The refined questionnaire could be incorporated into the Unity Experiment Framework (Brookes et al., 2020), a software package aimed at speeding up and simplifying the data collection process for human behavior experiments in Unity. An improved virtual environment model could be generated in RealityCapture using different data collection methods, such as drone imagery, terrestrial or airborne LiDAR, and video data (Xie et al., 2022).

To account for visual loss from eye blinks and saccades, future experiments should incorporate eye tracking into the experimental design (Clay et al., 2019). A usability study by Sipatchin et al. (2021) found that the HTC Vive Pro Eye is a useful tool for assisting with visual loss problems. Consequently, the interface code underlying Future Vision could be adapted so that a perspective shift (teleportation) does not occur while a participant is blinking or making a saccade, acting as a means of system control (Adhanom et al., 2023). Additions to the code could draw on the saccade detection algorithm of Behrens et al. (2010), which was used in a subliminal teleportation and reorientation study by Bolte and Lappe (2015). Blink detection algorithms can be incorporated into the code using Pupil Capture and Pupil Labs in Unity 3D, as was done by Langbehn, Steinicke et al. (2018) to subtly reposition and reorient participants in VR. In addition, future experiments should ensure that the experimental room is dimly lit to reduce tracking issues commonly caused by light-based interference.
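As a rough illustration of such a gate, the sketch below allows a perspective shift only when a short window of recent eye-tracking samples contains neither a blink nor a saccade. It uses a simple fixed velocity-threshold (I-VT) saccade rule and an eye-openness threshold for blinks. This is not the adaptive algorithm of Behrens et al. (2010), and the `GazeSample` format, the `openness` field, the 100°/s velocity threshold, and the 0.2 openness threshold are hypothetical placeholders rather than values from this study or from any tracker SDK.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class GazeSample:
    """One eye-tracker sample (hypothetical format, not a specific SDK)."""
    t: float                                # timestamp in seconds
    direction: Tuple[float, float, float]   # gaze direction vector (x, y, z)
    openness: float                         # eye openness in [0, 1]; hypothetical field


def angle_between_deg(a, b) -> float:
    """Angle in degrees between two gaze direction vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    # Clamp to avoid math domain errors caused by floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def is_saccade(prev: GazeSample, curr: GazeSample, max_velocity_deg_s: float) -> bool:
    """I-VT rule: angular velocity above the threshold counts as a saccade."""
    dt = curr.t - prev.t
    if dt <= 0:
        return True  # treat malformed timing conservatively as unsafe
    return angle_between_deg(prev.direction, curr.direction) / dt > max_velocity_deg_s


def safe_to_shift(window: Sequence[GazeSample],
                  max_velocity_deg_s: float = 100.0,
                  min_openness: float = 0.2) -> bool:
    """Allow a perspective shift only if the recent window has no blink or saccade."""
    if len(window) < 2:
        return False  # not enough data to rule out a saccade
    if any(s.openness < min_openness for s in window):
        return False  # eye (partly) closed: likely a blink
    return not any(is_saccade(a, b, max_velocity_deg_s)
                   for a, b in zip(window, window[1:]))
```

In a render loop, the precue would be deferred until `safe_to_shift` returns `True` for the most recent samples. A predictive variant would also need to anticipate blinks that begin during the brief display itself.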

6.3 Applications for GIScience and geospatial interfaces

Future Vision demonstrates how cognitively inspired narrative information can be incorporated into GIScience and geospatial interface designs as an immersive visual story, building on and expanding the concept of cartographic design as visual storytelling proposed by Roth (2021). The experiment also contributes to the wider literature on the technology, design, and human factors involved in creating geospatial narrative interfaces, informing new notions of how modern GIScience fits into XR (Çöltekin et al., 2020). By addressing the problem of how mental imagery may be incorporated into geospatial narrative visualizations, Future Vision makes a cognitive contribution to how such visualizations inform narrative guidance and behavior in immersive geospatial environments. This helps mitigate the one-sided relationship between cognition and narrative emphasized by Ryan (2010) and provides a practical solution to disparities that arise between internal cognitive narratives and external narrative visualizations (Mayr & Windhager, 2018). Using camera movements to incorporate inquiry-based mental imagery into 3D visual narratives would be particularly useful for geospatial interfaces that employ visual diagrams and embedded visualizations for cue-based navigation (Mukherjea & Foley, 1995; Willett et al., 2007) and for supplementing navigational interfaces such as the 3D space-based navigation model (Yan et al., 2021), which provides a framework for seamless navigation in indoor and outdoor spaces. In this way, mental image queries arising from real or abstract navigation (e.g., of information spaces) could return images of the relevant environmental scenes stored in a geodatabase. Such narrative visualizations could also be derived from real-time video data, allowing for a new type of exploratory data analysis based on cognitively inspired videographic geospatial inquiry (Garrett, 2011; Lewis et al., 2011).
Such queries and visualizations could be used to access traffic data and assess traffic conditions in real time, providing practical uses for digital twin models of transport networks (Kušić et al., 2023). In a broader sense, this method could be incorporated into a universal framework that underlies the use of digital twins for geospatial modeling, simulations, and predictive analytics in domains such as city management, construction, and manufacturing (Semeraro et al., 2021; Sharma et al., 2022). Digital twins that generate cognitively inspired narrative visualizations could also inform the future design of immersive XR learning environments in the Earth Sciences (Hruby et al., 2019; Klippel et al., 2019; Lee, 2023; Lin et al., 2013) and define a new method for creating XR narrative style GIS digital representations (Rzeszewski & Naji, 2022).

7 CONCLUSION

Future Vision is a cognitively inspired narrative interface that simulates and visualizes mental images as episodic future thoughts (EFTs) to subtly portray decision and movement information via teleportation, acting as a means of narrative guidance for 2AFC decision-making tasks in 3D XR environments. As the world becomes increasingly digitized, GIScience and geospatial interface designs must adapt to facilitate such new types of human–computer interactions and visualizations. The incorporation of mental imagery into XR geospatial interfaces represents opportunities and challenges for narrative cartography and narrative visualization research, particularly as cartographic designs transition toward sequential images of data and information. This paper had two main objectives: to determine if mental images of future thoughts would affect user spatial cognition and decision-making in a 2AFC decision-making task in a 3D virtual narrative environment, and to establish what visualization parameters are most effective for subtly guiding users during the task. There were statistically significant differences in task completion times and movement speeds, representing improvements in spatial cognition for navigation in the experimental group (~30% for task completion times, ~36% for movement speeds). Statistically significant differences were also seen in the 2AFC decision-making task, with participants in the experimental group performing better than the control group and at a rate above chance (59.7% on average, max 68.2%). The most effective visualizations were those that were presented quickly and with the user aligned in the direction of the visual image. This research demonstrates the practicality of simulating and visualizing cognitive phenomena in a geospatial setting with imagery-based narrative designs and contributes a new method to the concept of XR narrative style GIS digital representations. 
Future research can improve the interface design by using eye tracking data and modifying the interface code to reduce visual loss caused by eye blinks and saccades.

ACKNOWLEDGMENTS

The authors wish to thank the following institutions for research funding: Land Information New Zealand (LINZ) for a Toitū Te Whenua Tertiary GIS Scholarship, and the University of Otago for a Māori Master’s Research Scholarship. We are grateful to Stuart Duncan and Tanh Tran for their help in the design of Future Vision and in setting up the virtual environment, as well as all the participants in the experiment. Open access publishing facilitated by University of Otago, as part of the Wiley - University of Otago agreement via the Council of Australian University Librarians.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest for this study.

Open Research

DATA AVAILABILITY STATEMENT

The data used in this study can be obtained by request to the lead author.