A Welfare Test for Sharing Health Data

We gratefully acknowledge funding support from the Office of the Privacy Commissioner of Canada Contributions Program 2023–2024 and the Universities of Waterloo (Faculty of Arts) and Strathclyde. The authors declare no known competing financial interests or personal relationships that could have influenced the work reported in this paper. This paper was presented at the IARIW-CIGI conference “Valuation of Data” that took place in Waterloo, Ontario, Canada, on November 2–3, 2023. Diane Coyle acted as guest editor of the conference papers submitted for publication in the ROIW. We thank Michael Wolfson and other conference participants for comments. We also thank Brigit Schippers and seminar participants at the University of Strathclyde, and Indian Institute of Technology (IIT), Kharagpur, India, for useful insights. Finally, we acknowledge helpful advice from an anonymous referee.

Abstract

Machine learning and artificial intelligence methods are increasingly used in the analysis of patient-level and other health data, resulting in new insights and significant societal benefits. However, researchers are often denied access to sensitive health data because of concerns about privacy and security breaches. A key reason for data custodians' reluctance to share data is the lack of a specific legislative or other methodological framework for balancing societal benefits against the costs of potential privacy breaches. Consistent with traditional cost–benefit analysis and the use of Quality Adjusted Life Years (QALYs), this study proposes an economic test that would enable Research Ethics Boards (REBs) to assess the benefits versus risks of data sharing. Our test can also be used to assess whether REBs employ reasonable effort to protect individual privacy and avoid tort liability. Constructing an acceptable welfare test for health data sharing will also lead to a better understanding of the value of data.

1 Introduction

The objective of this paper is to construct a welfare test that can be used by policymakers and others to establish optimal sharing of confidential and sensitive health data, which have the potential to improve societal welfare through innovation, while balancing possible harm caused by breaches to individual privacy. Having an acceptable welfare test will increase the amount of research using artificial intelligence (AI) methods to analyze large datasets, leading to significant population-level health benefits (Davenport & Kalakota, 2019; Kaur et al., 2022). Further, the use of an appropriate welfare test should stimulate a better understanding of the true value of data, if health organizations and other data custodians are required by regulation to explicitly balance the private and social benefits and costs of granting access to patient-level and other health information.

The need for a welfare test arises from difficulties in accessing health data for research purposes in many jurisdictions (Bauchner et al., 2016; Blasimme et al., 2018; van Panhuis et al., 2014), and the absence of a formal and universal test to guide data custodians in evaluating the social implications of health data sharing.1 This holds particularly for health-related regulations, including the Health Insurance Portability and Accountability Act (HIPAA) in the United States, the Personal Information Protection and Electronic Documents Act (PIPEDA) in Canada, and the General Data Protection Regulation (GDPR) in the European Union (EU).2 Developing a welfare test to facilitate data sharing responds to the concerns highlighted in previous studies (Council of Canadian Academies [CCA], 2015, 2023), which critique the overly cautious approach by data custodians toward data sharing. Notably, in the absence of a legislative framework, data custodians tend to impose more stringent access restrictions that require significant effort and time to comply with, consequently reducing the volume of access requests.

Despite the risk mitigations and safeguards already in place, and the social benefits of sharing, many data organizations are still hesitant to share data for research given unclear regulations and liabilities. A common argument is that limited access to confidential data is more “ethical,” as it reduces the likelihood of data breaches and preserves individual privacy. Data custodians also have an incentive to be risk-averse if they are potentially liable for breaches of individual privacy. On the other hand, CCA (2015) notes that a failure to share data might impose a significant opportunity cost on society, if that prevents research from being conducted to benefit society, particularly disadvantaged communities. In such a scenario, the failure to share data could be interpreted as being unethical (Stanley & Meslin, 2007). Additionally, CCA (2023) highlights the prevalent overly cautious approach toward health data sharing in Canada and argues that the societal benefit of data is significantly greater when the data are shared, rather than being held in siloed information systems. A logical inference drawn from these messages is that an economic test weighing the potential gains in knowledge and improved health outcomes from enhanced data sharing against the associated risks of privacy loss would constitute a valuable contribution to the literature.

Our proposed welfare test on data sharing is based on net changes to societal surplus, which is consistent with the cost–benefit framework recommended by the Treasury Board of Canada Secretariat (TBS) for weighing quantifiable benefits to different stakeholders against corresponding costs, to determine the desirability of competing public-sector projects. If the marginal or incremental benefits of such a project exceed its incremental costs, then the project should be undertaken. These tests from different government organizations are based on traditional cost–benefit analysis, which defines societal welfare using an efficiency lens, where net benefits are added across different stakeholders to arrive at an estimate of societal welfare.3 Similar economic models are also employed in evaluating the effects of market power from mergers in Canada, the EU, and the United States.4,5

A transparent welfare test that facilitates health data sharing can lead to significantly improved healthcare outcomes. For example, CCA (2023) documents the wide array of benefits for patients, health practitioners, and health researchers from improved health data sharing. The study notes that more rapid and more comprehensive access to a patient's medical information can assist practitioners in offering more targeted and precise care. Health data sharing results in considerable efficiencies by reducing unnecessary testing, as well as hospital admissions and consultations, which is significant given the severe capacity problems experienced by many public healthcare systems around the world. System-level data sharing is essential to improve public health programs through surveillance, reporting, and program evaluation, all of which have the potential to contribute to healthier populations and more equitable healthcare systems (Edelstein et al., 2018; World Health Organization, 2021). Improved health data sharing can also stimulate more research, leading to new knowledge and discoveries that help improve patient care and save lives.

There is an absence of legislative guidance on a clear welfare test that can be applied by Research Ethics Boards (REBs) to balance the benefits of data sharing against individual privacy concerns. This is true not only for Canada, but with respect to the EU and the United States as well. Hence, current models of data governance are more of “custodianship” rather than “stewardship.” To echo one of the conclusions of the Second Report of the Expert Advisory Group of the Pan-Canadian Health Data Strategy (Public Health Agency of Canada [PHAC], 2021), it is imperative to promote and move toward a health data governance model fostering stewardship, which recognizes the societal benefits from treating data as a public good, while ensuring effective privacy for individuals.

The results of this research demonstrate that a cost–benefit approach can be used by REBs to weigh societal benefits from knowledge creation facilitated by data sharing against possible harm from losses to individual privacy. Our methodology is also grounded in well-established cost–benefit analysis principles in health economics, where decisions are based on comparing improvements in quality-adjusted life year (QALY) indicators against incremental treatment costs to arrive at a potentially Pareto-improving outcome for society. Further, our model allows policymakers and others to define a measure of reasonable efforts by REBs to protect individual privacy. This is important given the ambiguity in legislation, which contributes to data custodians being overly cautious in releasing data for research because of liability concerns, which then likely leads to significant knowledge loss.

From a broader perspective, our proposed test can be used to construct other welfare measures to evaluate the social benefits of allowing de-identified data to be shared for societal good, while considering potential harm from potential losses in privacy. This is important given the absence of such welfare standards. For example, Scassa (2020) notes changes to Canadian data and privacy protection laws that propose enabling the use of de-identified data from individuals without their knowledge or consent, by different levels of government and public sector organizations, for “socially beneficial purposes.” However, the proposed legislation does not identify any specific welfare and/or privacy standards that can be employed to evaluate whether sharing such data fulfills socially beneficial purposes.6 Further, explicit welfare standards for health data use may also be useful given the increasing use of commercial data by academic researchers. The problem is the lack of transparency in how such data have been collected and curated and whether relevant ethical considerations have been used, especially with respect to individual privacy.7

The remainder of this paper is organized as follows. Section 2 discusses privacy legislation relevant to patient-level and sensitive health data in Canada with a brief summary of approaches used in the United States and the EU. Our proposed welfare test for data sharing is detailed in Section 3. Section 4 illustrates the use of the test through some specific examples. Section 5 concludes with a summary of our main points.

2 Accessing Patient-Level and Personal Data in Canada, the EU, and the United States

In Canada, PIPEDA is a federal act that establishes regulations for the entire country with respect to the collection, use, and disclosure of personal information, including personal health information (PHI). GDPR, which has applied since 2018, covers all EU member states and regulates the processing of personal data within the EU and the European Economic Area (EEA).8 In contrast, in the United States, there is no general privacy legislation at the federal level. Instead, there are laws that cover privacy concerns on a sectoral or local basis, such as HIPAA, which regulates the collection, processing, use, and sharing of personal data relating to health conditions, treatments, or payments.9 All of the above laws contain provisions that enable the processing of personal data without explicit consent for scientific and quality control purposes, which covers the use of patient-level data for research.10 Both the GDPR and PIPEDA allow for the processing of PHI for scientific research purposes if such research is conducted in accordance with ethical standards and safeguards, as determined by an REB.11 In the United States, HIPAA similarly allows researchers to access patient-level data if their applications are reviewed and approved by an Institutional Review Board.

As detailed in CCA (2015), in Canada, all provincial/territorial legislation allows collection, use, or disclosure of identifiable PHI without consent for REB-approved research studies, but before they can do that, researchers must first have their studies reviewed by an REB or similar responsible entity. The study also noted how these and other impediments can adversely impact the sharing of Canadian patient-level data for research purposes. Based on the comprehensive reviews of health data sharing in Canada conducted by the CCA (2015), we identify six critical reasons responsible for an absence of access to patient-level data across Canada for research purposes. The first is a lack of consistency and clarity in the ethical and legal frameworks that are used to determine whether data should be shared for research purposes. The second is the existence of differing interpretations of key terms and issues in ethical/legal frameworks used across jurisdictions. For example, while federal and provincial/territorial laws enable researchers to access data that do not include “identifiable information,” the precise definition of “identifiable information” is often unclear and is not consistent across provinces or with the federal government. In a similar vein, while REBs do not face tort liability from data breaches if they are deemed to have put in “reasonable effort,” there is no clear definition of the actions that may constitute such reasonable effort.

Third, CCA (2015) points toward tendencies among data custodians to interpret their legal/ethical duty of protecting the privacy of individuals as being best served by restricting access to data, often leading to overly cautious and conservative interpretations of permissible data sharing in many Canadian organizations. This tendency toward restrictiveness is likely attributable to the lack of specific legislation or regulations that define appropriate cost–benefit guidelines on data sharing. As noted in the Second Report of the Expert Advisory Group of the Pan-Canadian Health Data Strategy (PHAC, 2021), a common reason cited by data custodians for not sharing data is the possibility of harm through a hypothetical privacy incident from a data breach. However, this philosophy ignores the harms that may occur from not sharing data. A good example is the lack of data sharing between general practitioners/family physicians and hospitals in Canada, which could lead to compromised care for individuals admitted for emergency care.

Fourth, data custodians often face an asymmetry in the sense that they do not reap any private benefits from research that generates knowledge that becomes a public good, but may face legal sanctions in the event of a data breach that compromises individual privacy (Davies & Collins, 2006).12 They may fail to consider that data shared with reasonable precautions taken (e.g., de-identification, aggregation, and encryption) pose a lower privacy risk. Hence, this perceived asymmetry results in an environment where data custodians are incentivized not to share data, even if data sharing might be supported within legal and ethical frameworks. Fifth, providing access to Canadian health data is complicated by healthcare being delivered through sometimes complex arrangements among many institutions, organizations, and programs that are often quite different across provinces, with limited coordination on data collection and analysis. Sixth, data custodians may be reluctant to share data that might reveal deficiencies in services and patient-level outcomes (van Panhuis et al., 2014). The cumulative effect of these factors is time-frames for REB approvals of access to health data that can range from months to years (CCA, 2015), as well as a proclivity to deny approval to research requiring significant data sharing.

Analyzing the impacts of all these possible reasons on data sharing incentives by REBs is beyond the scope of this paper. However, these findings do support the need for a uniform welfare test that can be implemented by REBs to make consistent and reasoned decisions. The need for such a welfare test is not restricted to Canada, as there is no uniform methodology weighing the benefits of health data sharing against privacy costs in the United States, nor in the EU. The creation of a test grounded in sound economic principles, designed for straightforward and transparent implementation, could facilitate a shift from the current data custodianship paradigm. In such a model, the advantages of data sharing would be explicitly weighed against privacy risks.13

3 A Welfare Test for Data Sharing

Our first step is to define the stakeholders for data sharing. Assume the existence of data (d), which is of interest to researchers to create knowledge that will result in some benefit (B) to society. The knowledge created will be a public good that is non-rivalrous and non-excludable. Consistent with the research ethics review model, researchers must obtain permission from a data custodian who has the authority to grant researchers access to data. The data custodian must use a societal framework model to evaluate whether allowing data access leads to net gains for society.

Following CCA (2015), we assume that the mandate of the data custodian is to ensure that societal benefits from knowledge creation are maximized while the probability of individual privacy being compromised is minimized. This is because the cost of knowledge creation from allowing access to data, is the possibility that individual-specific information contained in the database may be revealed, despite the use of privacy preserving technology by the data custodian. The data custodian does not gain any direct benefits from allowing access to data, but experiences costs (C) in establishing infrastructure that stores data and enables access by researchers. The cost of employing these technologies is also captured by C. For the present, C does not represent expenditures by the data custodian for protecting the privacy of individuals. The amount of effort by data custodians expended on protecting individual privacy will be addressed later.

Societal benefits from knowledge creation enabled by data sharing are initially an increasing function of data access, that is, of each additional unit of data. In terms of notation, this is $B = B(d)$. However, there is a plateau beyond which no further benefits are created, even through access to more data. Hence, $B'(d) > 0$ and $B''(d) < 0$ up to a certain threshold, after which $B'(d) = 0$. The optimal amount of data release is then defined at the point where the marginal benefit equals the marginal cost (of the data custodian), that is, where the slopes of the benefit and cost curves are equal. We view these curvature assumptions as being conservative. The benefits of more data sharing could be a function of a critical lack of knowledge in certain research areas as well as the amount of existing data.14 One example is the lack of diversity in publicly available data sets used for facial recognition, which leads to correspondingly reduced research insights of relevance to disadvantaged communities (Kuhlman et al., 2020). In such instances, sharing available data with more researchers might not decrease marginal benefits. There may also be an argument that marginal benefits will not decline with more sharing, if the data are a public good.15

If an individual's privacy is compromised, then we assume that they will experience some harm (H), which can be monetary and/or non-monetary. The variable H captures both the probability of being harmed as well as the actual monetary and non-monetary amount of harm. We assume that sharing more data leads to a higher probability of PHI being re-identified or accessed by unauthorized third parties, and therefore, an increase in harm (H) to individuals, which increases at an increasing rate. Hence, if $H = H(d)$, then $H'(d) > 0$ and $H''(d) > 0$.

In terms of other costs, the data custodian experiences economies of scale in maintaining data infrastructure and the employment of data-protection technologies. Specifically, while there are significant upfront costs in creating the infrastructure, average and marginal costs are equal and decline with each unit of data held by the custodian. Therefore, marginal cost falls as $d$ increases, so that $C''(d) < 0$.16 As noted above, the other cost in this model is the possible harm to individuals whose information is somehow re-identified or retrieved by third parties who do not have permission to access the data. If societal costs (SC) are the sum of the operations costs of the data custodian and possible harm to individuals, then the societal cost curve will initially fall but will start to rise with increased harm, which will occur as more data are released. We initially assume that the data custodian is not liable for the harm experienced by individuals who are identified or suffer a data breach. The gap between the private cost curve of the data custodian and the societal cost curve is the amount of harm to individuals from re-identification or other privacy breaches.

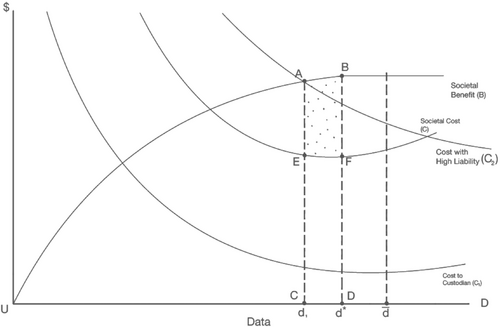

Figure 1 shows equilibrium data sharing with the societal benefit and cost curves and the cost curve of the data custodian. The data custodian's optimal amount of data release, if only infrastructure costs are incurred by the data custodian, is denoted by $d^{c}$. Taking harm to individuals into consideration, the optimal amount of data release is given by $d^{*}$, where the slopes of the societal benefit and cost curves are equal. This will be to the left of $d^{c}$, as the SC curve will start rising before the minimum point of the custodian cost curve. This model provides a framework for estimating net societal benefits from data sharing that is analogous to approaches used by other agencies. The important point is that the data custodian must compare the marginal benefits of data release against the corresponding marginal social costs, which are the sum of the marginal costs of infrastructure and harm to society. These benefits and costs can be calculated from the corresponding areas under the curves with respect to the amount of data being released.17
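The comparison between the custodian's optimum and the social optimum can be illustrated numerically. The following is a minimal sketch only: the functional forms and parameter values (a saturating exponential benefit, a logarithmic custodian cost, a quadratic harm) are hypothetical choices made to satisfy the curvature assumptions stated above, and the names `d_custodian` and `d_social` are ours. A simple grid search stands in for solving the first-order conditions.

```python
import math

# All functional forms and parameters below are illustrative assumptions,
# not taken from the paper.

def benefit(d, b_max=100.0, k=0.5):
    """Societal benefit: increasing and concave, flattening toward b_max."""
    return b_max * (1.0 - math.exp(-k * d))

def custodian_cost(d, fixed=10.0, c=5.0):
    """Custodian cost: upfront fixed cost plus a declining marginal cost."""
    return fixed + c * math.log1p(d)

def harm(d, h=2.0):
    """Harm to individuals: increasing at an increasing rate."""
    return h * d ** 2

def argmax_on_grid(objective, d_max=20.0, step=0.001):
    """Return the grid point in [0, d_max] that maximizes the objective."""
    best_d, best_val = 0.0, objective(0.0)
    for i in range(1, int(round(d_max / step)) + 1):
        d = i * step
        val = objective(d)
        if val > best_val:
            best_d, best_val = d, val
    return best_d

# Custodian considers only infrastructure costs.
d_custodian = argmax_on_grid(lambda d: benefit(d) - custodian_cost(d))
# Society also counts harm to individuals.
d_social = argmax_on_grid(lambda d: benefit(d) - custodian_cost(d) - harm(d))

print(f"custodian optimum: {d_custodian:.2f}")
print(f"social optimum:    {d_social:.2f}")
```

Under these assumed forms the social optimum lies to the left of the custodian's optimum, matching the qualitative ordering described for Figure 1. Other functional forms would shift both points, so the numbers themselves carry no empirical meaning.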

As discussed, data custodians have a limited incentive to share the socially optimal amount of data because of the lack of specific legislative guidance and concerns relating to liability. This can be captured by a shift up of C1 to C2, which represents an increase in perceived liability costs by data custodians. The amount of data shared by the custodian will then be d1, which is less than the socially optimal amount. At d1, the total societal benefit of data sharing is equal to total cost and there is no surplus generated for society.18 The marginal benefit to society of increasing the amount of data shared to the socially optimal amount, $d^{*}$, is given by area ABCD. The corresponding marginal social cost is CDEF. As society will receive a net marginal benefit of ABEF, it makes sense for the data custodian to release the amount of data given by $d^{*}$. These conclusions are based on the assumption that the welfare of all stakeholders is weighted equally. If this is the case, then sharing data is potentially Pareto improving for society, as the individuals who gain from data sharing can hypothetically compensate stakeholders who incur privacy losses and remain better off.

The assumption of treating stakeholders equally is consistent with the use of QALYs in health technology evaluations.19 Consider the example from Neumann (2018) of a hypothetical individual, followed from the age of 70 years, who develops cancer at age 74 years and then dies 2 years later at the age of 76 years. QALYs can be used to conduct a cost–benefit analysis of the efficacy of cancer treatment. Similar to the use of the benefit function in our welfare test for sharing data, QALYs involve the use of a health utility function, which yields a specific numerical value. In the example from Neumann (2018), the health utility value “for a typical healthy individual in his or her 70s” is 0.95, which denotes a high quality of life from good health. In contrast, the corresponding health utility value or weight of another individual living with this specific cancer is assumed to be lower at 0.75.

These values can be used to understand the impacts of health treatments. For the individual who contracted cancer at 74 years and then dies at 76 years, this individual accrues (4 years × 0.95) + (2 years × 0.75) = 5.3 QALYs. On the other hand, if health treatment in the form of early screening allows the individual to live to 80 years of age, then the individual accrues 10 years × 0.95 = 9.5 QALYs. Hence, the marginal benefit of the screening to the individual is 9.5 QALYs − 5.3 QALYs = 4.2 QALYs. Assigning dollar values to QALYs facilitates the use of cost–benefit analysis to understand the societal value of different interventions, as the marginal benefit to the individual of 4.2 QALYs can be compared against the cost of screening and treatment. Therefore, our test to evaluate the societal impacts of health data sharing based on a traditional comparison of marginal benefits and marginal costs, is very similar in spirit to the use of QALYs in health treatment evaluations. In the example below, it will be seen that QALYs also play an important role in the implementation of our proposed welfare test for sharing health data.20
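The QALY arithmetic above can be reproduced with a few lines of code. This is a small illustrative sketch; the helper name `qalys` and the representation of a life course as (years, utility weight) segments are our own choices, while the numbers are those of the Neumann (2018) example.

```python
def qalys(segments):
    """Sum years lived times health-utility weight over (years, weight) segments."""
    return sum(years * weight for years, weight in segments)

# Ages 70-74 in good health (weight 0.95), then 2 years with cancer (weight 0.75).
without_screening = qalys([(4, 0.95), (2, 0.75)])

# Early screening: 10 years (ages 70-80) in good health.
with_screening = qalys([(10, 0.95)])

gain = with_screening - without_screening

print(f"without screening: {without_screening:.1f} QALYs")  # 5.3
print(f"with screening:    {with_screening:.1f} QALYs")     # 9.5
print(f"marginal benefit:  {gain:.1f} QALYs")               # 4.2
```

Multiplying `gain` by a dollar value per QALY would give the monetary benefit that is compared against the cost of screening and treatment.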

4 Implementing the Test

Suppose the data custodian is responsible for individual patient records at a hospital. The data custodian weighs potential harm in their decision to release data and does not factor in the cost of infrastructure (C) as such costs can be recouped through access fees for successful applications. Releasing the data has the potential to harm individuals through the risk of re-identification. Recent research has demonstrated that re-identifying individuals is possible even when released data are only a partial sample of the entire dataset and with a limited number of variables.21 However, there is less consensus on the precise harm an individual may experience if they are re-identified in a dataset. In the absence of available published data for Canada, we shall assume that the individual experiences a loss of $20,000. This could occur from successful re-identification which results in a phishing or ransomware attack.22

With a multiplicative functional form for individual harm, H = H(pi, pa, M) = pi × pa × M, where pi is the probability that an individual is re-identified, pa is the probability of a successful cyberattack, and M is the resulting monetary loss, an increase in each of these factors will lead to greater harm to individuals who are re-identified from released data. Measuring individual harm in this manner allows us to capture how different communities may be affected by cybersecurity attacks. For example, particular groups may be more prone to successful attacks, such as seniors who are less likely to be aware of such schemes or of adequate cybersecurity protection measures. The harm to them is also likely to be higher. The probability of cyberattack variable (pa) captures the likelihood of a security breach once the data have been shared by an REB with researchers. A cyberattack is possible if an individual has been re-identified.

Cancer diagnosis techniques aim to detect the occurrence of cancer in a particular organ of a person. In most cases, clinicians perform a biopsy, in which a tissue sample is removed from the body and analyzed for the presence of cancer cells. Depending on the organ and type of cancer, different detection methods are available, and researchers correspondingly use initial parameters, gene biomarkers, CT images, and similar inputs. However, existing diagnostic systems do not perform very efficiently. Since cancer is primarily asymptomatic in its earlier stages, it is helpful to identify novel diagnostic techniques to reduce cancer mortality. With data mining in clinical research, various researchers have utilized different machine learning techniques to diagnose or classify cancer in other parts of the body.26

Another relevant study is Guo et al. (2021), which uses ML models to estimate the probability of a patient's individual post-surgery conditional risk of death, recurrence, and site-specific recurrence for cervical cancer. With access to data from multiple institutions, the authors can identify ML methods that offer superior predictions relative to traditional logistic or Cox regression models in estimating prognostic outcomes. Most studies on cervical cancer focus on estimating the probability of recurrence or death. In contrast, a key contribution of this study is the use of advanced models to evaluate the importance of specific recurrence sites (local or distant recurrence), which is essential for planning appropriate follow-up strategies. The authors use their research findings to construct a web-based calculator to predict post-operative survival and site-specific recurrence in cervical cancer patients, which can be employed by clinicians for such strategies. This is important given the traditional reliance by clinicians on their experience and knowledge to assign patients into crude categories, such as low- or high-risk groups to determine post-operative care, without accurately accounting for the specifics of each unique patient.

To quantify these benefits, we need to use a QALY indicator. In the absence of an established QALY for cervical cancer survivors, we will use the value of a statistical life recommended by the TBS (2023). The Value of a Statistical Life (VSL) is typically used to determine compensation for individuals involved in workplace accidents. VSL monetary amounts recommended by studies conducted by economists range from $5 million to $6 million. The TBS guidelines (2023) note that with a discount rate of 5%, the $5 million value of a statistical life translates into a value of a statistical life-year of $305,000 and an estimate of $143,000 per life-year, given a zero-discount rate.
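The two life-year figures quoted above can be approximately reproduced by annuitizing the $5 million value of a statistical life over a fixed horizon. The sketch below assumes a 35-year horizon. That horizon is our inference, chosen because it matches both quoted numbers; it is not stated in the text and may not be the method used in the TBS guidelines. The function name `life_year_value` is likewise hypothetical.

```python
def life_year_value(vsl, rate, years):
    """Constant annual amount whose present value over `years` equals `vsl`."""
    if rate == 0:
        return vsl / years
    annuity_factor = (1 - (1 + rate) ** -years) / rate
    return vsl / annuity_factor

VSL = 5_000_000
HORIZON_YEARS = 35  # assumption: inferred from the quoted figures, not from the source

discounted = life_year_value(VSL, 0.05, HORIZON_YEARS)
undiscounted = life_year_value(VSL, 0.0, HORIZON_YEARS)

print(f"5% discount rate: ${discounted:,.0f} per life-year")
print(f"0% discount rate: ${undiscounted:,.0f} per life-year")
```

Under this assumption both results fall within $1,000 of the quoted $305,000 and $143,000, which suggests, but does not confirm, that an annuity-style conversion underlies those values.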

Suppose that improved post-operative treatment recommended by a ML algorithm from outcomes facilitated by access to confidential data results, on average, in an increase in survival by 10 years for all cervical cancer patients treated in a hospital (TBS, 2023). Further, the quality of life is akin to what the patient experienced in good health before the onset of cancer symptoms. Hence, no further discounting is required to capture possible deteriorations in quality of life. If the hospital treats 20 patients on an annual basis, and a conservative life-year value of $150,000 is used, a back-of-the-envelope calculation implies that the aggregate societal benefits generated for patients treated at this hospital are $30 million.27

However, now assume that despite the best efforts of the researchers, sharing these data has compromised the privacy of 1,000 individuals in the dataset. Further, as many as 80% of the individuals have been subjected to successful cyberattacks, and the average corresponding financial loss is $30,000. Hence, pi = 1, pa = 0.8, and M = $30,000. Therefore, harm to each individual is H = H(pi, pa, M) = pi × pa × M = 1 × 0.8 × $30,000 = $24,000. Consequently, aggregate societal harm = 1,000 × $24,000 = $24 million. If a dollar is valued equally by all stakeholders in this analysis, then sharing the data has made society better off, even though the number of people impacted by the data breach far exceeds the number of patients who experienced improved survival longevity, as Societal Net Benefit = Societal Marginal Benefit − Societal Social Cost = $30 million − $24 million = $6 million. Sharing the data can be deemed to be potentially Pareto improving for society, as the individuals who gain (the patients who receive successful treatment) can hypothetically compensate the individuals harmed by unauthorized access to their data and remain better off.
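The arithmetic of this example can be reproduced with a short sketch. The variable names (`p_reid`, `p_attack`, `loss`) are ours and stand in for pi, pa, and M; the benefit side uses the figures from the hospital example (20 patients, 10 additional years, $150,000 per life-year).

```python
def individual_harm(p_reid, p_attack, loss):
    """Expected harm per person: H = pi * pa * M."""
    return p_reid * p_attack * loss

# Benefit side: 20 patients, 10 extra life-years each, $150,000 per life-year.
patients = 20
extra_years = 10
value_per_life_year = 150_000
aggregate_benefit = patients * extra_years * value_per_life_year

# Cost side: 1,000 individuals compromised, 80% attacked, $30,000 average loss.
breached = 1_000
harm_per_person = individual_harm(p_reid=1.0, p_attack=0.8, loss=30_000)
aggregate_harm = breached * harm_per_person

net_benefit = aggregate_benefit - aggregate_harm

print(f"aggregate benefit: ${aggregate_benefit:,.0f}")
print(f"aggregate harm:    ${aggregate_harm:,.0f}")
print(f"net benefit:       ${net_benefit:,.0f}")
```

A positive `net_benefit` corresponds to the potentially Pareto-improving case, under the assumption that a dollar is weighted equally across all stakeholders.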

Of course, these results are sensitive to the underlying assumptions. For example, significantly increasing the number of individuals experiencing the data breach to 5,000 implies a Societal Social Cost of $120 million. Conversely, using the higher life-year value of $305,000 (based on a 5% discount rate) for 10 years for 50 patients translates to a Marginal Societal Benefit of $152.5 million. On the other hand, if the treatment does not restore patients to a similar quality of life to what they had before the onset of cancer symptoms, then it is possible that societal benefits from data sharing would not exceed societal costs, and the data should, therefore, not be shared. As discussed, impacts on quality of life can be incorporated by using higher discount rates on VSL values. Societal Benefits would further decline if stakeholders were not treated equally, and a greater weight were placed on individuals whose data and privacy are compromised. The important insight from this discussion is that traditional and relatively straightforward methods developed by economists can be employed to assess the costs and benefits of patient-level data sharing, incorporating considerations of efficiency and equity. Another caveat is that the above example suggests that PHI data should be shared even if there is a high probability that many individuals will suffer cyberattacks and experience different forms of harm. However, although such an outcome might be potentially Pareto improving, REBs should probably also consider the magnitude of harm in absolute terms, and not exclusively relative to potential benefits. There should be limits to the amount of “permissible harm” to individuals whose PHI are being shared.
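The sensitivity of the result to these assumptions can be explored by recomputing the net benefit under alternative scenarios. The sketch below is illustrative only: the function `net_benefit`, its parameter names, and in particular the `harm_weight` parameter (a hypothetical way of placing greater weight on individuals whose privacy is compromised) are our own constructs, and the 1.5 weight is an arbitrary example value. For reference, 50 patients × 10 years × $305,000 works out to $152.5 million.

```python
def net_benefit(patients, extra_years, value_per_life_year,
                breached, p_reid, p_attack, loss, harm_weight=1.0):
    """Societal benefit minus (optionally weighted) societal harm, in dollars."""
    benefit = patients * extra_years * value_per_life_year
    cost = harm_weight * breached * p_reid * p_attack * loss
    return benefit - cost

# Baseline figures from the worked example.
base = dict(patients=20, extra_years=10, value_per_life_year=150_000,
            breached=1_000, p_reid=1.0, p_attack=0.8, loss=30_000)

scenarios = {
    "baseline": base,
    "5,000 individuals breached": {**base, "breached": 5_000},
    "50 patients at $305,000 per life-year": {
        **base, "patients": 50, "value_per_life_year": 305_000},
    # Hypothetical equity weight: harm counts 1.5 times as much as benefit.
    "harm weighted 1.5x": {**base, "harm_weight": 1.5},
}

results = {name: net_benefit(**params) for name, params in scenarios.items()}

for name, value in results.items():
    verdict = "share" if value > 0 else "do not share"
    print(f"{name}: ${value / 1e6:,.1f} million ({verdict})")
```

With the baseline figures, the break-even harm weight is $30 million / $24 million = 1.25, so any weight above that reverses the conclusion. This kind of check does not address the separate point that REBs may also want an absolute cap on permissible harm.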

Further caveats are in order. Our calculations of net societal benefits in the previous examples are premised on the assumption of correct diagnoses, which then determine the probability of disease onset and the benefits of proper health treatments. However, diagnoses may sometimes result from false positives in tests, which then lead to unnecessary treatments that might be costly and harmful to health. Take the example of breast cancer. Two recent systematic reviews have found that models using mammograms to predict risks of breast cancer are rarely precise enough (Anothaisintawee et al., 2012; Gardezi et al., 2019), and suggest that this imprecision can be attributed to data quality and scarcity. Further, findings from Ho et al. (2022) imply the presence of significant cumulative false-positive events (consisting of recall for further imaging, short-interval follow-up recommendations, and biopsy recommendations) associated with annual or biennial screening, even with digital breast tomosynthesis (DBT), which is a more advanced technology than digital mammography, adjusting for age and breast density.28 While this example concerns a single disease among women, it is a strong demonstration that estimating the net societal benefits of releasing health data is not a straightforward exercise.29

The second caveat concerns estimating accurate probabilities of compromises in data security and of resulting successful cyberattacks on individuals.30 As detailed above, our calculations are based on assumptions of high probabilities of failures in privacy protection and data security. Data sharing can lead to compromises in data security that can be traced to a multitude of entry points, which include (but are not restricted to) malware, identity theft from phishing emails, unpatched vulnerabilities in software systems, and unauthorized entry through company network systems.31 On the other hand, if REBs and researchers invest in appropriate data security methods and protocols, the probability of compromises in data security and unauthorized access should diminish. When researchers apply to REBs for research approval, they are usually required to document and adopt a series of risk mitigation practices to ensure that potential harms are mitigated. For example, when using health data, researchers need to specify where the data will be stored and what protections will be implemented to ensure the security and privacy of research data. In addition, if researchers use existing datasets from a data custodian instead of collecting data themselves, the custodian will also mitigate risks by imposing restrictions on researchers, such as a secure environment from which researchers can download only their statistical results and not the data themselves.

In terms of specific data security protocols, prior to any data sharing, REBs and university researchers need to confirm that cryptography-based authentication protocols are in place that can secure communications over networks.32 Further, shared data should be encrypted, with a limited number of keys given to researchers. Data masking might be useful in ensuring that individual anonymity is protected by replacing sensitive personal identifiers (such as date of birth and addresses) with unidentifiable values. Access control measures also need to be implemented by researchers to ensure that only the data required for the specified research are released to accredited researchers who have received training in data security and privacy. A comprehensive overview of all possible data security measures is beyond the scope of this research. However, ensuring that the above protocols are properly followed should significantly reduce the likelihood of a breach in data security.
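As an illustration of the data masking step only, the sketch below replaces date of birth and address with keyed-hash tokens using Python's standard `hmac` and `hashlib` modules. The paper does not prescribe a masking technique, so the choice of a keyed hash, the field names, the key, and the sample record are all assumptions made for illustration. Keyed hashing is a form of pseudonymization and does not by itself rule out re-identification through the remaining attributes.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be held only by the data custodian.
SECRET_KEY = b"replace-with-a-custodian-held-secret"

# Hypothetical names for the identifiers to be masked.
SENSITIVE_FIELDS = ("date_of_birth", "address")


def mask_value(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace a sensitive value with a keyed-hash token (truncated for readability)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def mask_record(record: dict) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    masked = dict(record)
    for field in SENSITIVE_FIELDS:
        if field in masked:
            masked[field] = mask_value(str(masked[field]))
    return masked


# Fabricated sample record, for illustration only.
record = {
    "patient_id": "P-001",
    "date_of_birth": "1961-04-12",
    "address": "12 Example Street",
    "tumor_stage": "II",
}
masked = mask_record(record)
print(masked)
```

Because the same key always maps the same input to the same token, records for one individual can still be linked across releases by the custodian, while clinical fields needed for the research are left unchanged.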

In many cases, the data that researchers access from secure facilities are de-identified. However, re-identification remains a concern. The Panel found that best practices in de-identification can lower the risk of re-identification to acceptable levels. Although health data breaches can cause serious harm, the risk of a breach actually occurring in the context of research is low, particularly if effective governance mechanisms and protocols are in place and respected by care providers, researchers, and data custodians.

This view is consistent with what we have explicitly stated. While a potentially Pareto improving methodology based on total societal surplus should be considered, significant harm should not be acceptable, and measures must be implemented to reduce the likelihood of successful attacks and harm. In this respect, more research is needed on how successful cybersecurity attacks are conducted and on the best preventative measures that can be implemented by data custodians and other research institutions that share health data.

4.1 Efficient Liability

Ensuring that the data custodian faces some liability for data breaches certainly incentivizes it to ensure adequate security protocols. As noted earlier, Canadian provincial laws contain provisions providing that data custodians may face tort liability and/or criminal sanctions if they are in breach of their duties under certain legislation. They are protected from liability if they can demonstrate that “reasonable effort” was made to secure the confidentiality of the data. However, what constitutes “reasonable effort” has not been defined by legislation.

Our model can assist in defining the optimal fine/penalty for data breaches as well as offer a definition of “reasonable effort.” The intuition is akin to how carbon taxes are used to increase costs on producers and consumers, resulting in a lower equilibrium quantity of fossil fuel use in society. From the perspective of efficiency, carbon taxes must be proportionate to the marginal damage to the environment that results from market activity. Specifically, the optimal carbon tax is equal to the marginal damage to the environment at the socially optimal level of industry output, which is defined by the intersection between the industry demand and societal marginal cost curves. As in this study, the societal marginal cost curve is the sum of the industry supply (or marginal cost) curve and the marginal damage curve from pollution. Imposing a carbon tax shifts up the supply curve and results in a higher price to consumers if neither the demand nor the supply curve is perfectly elastic or inelastic. The carbon tax results in a transfer of total surplus to the government and a deadweight loss, both of which are shared between consumers and producers depending on the elasticities of demand and supply.33
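The carbon tax logic can be made concrete with a short derivation. This is only an illustrative sketch: the linear functional forms and the symbols a, b, c, e, and m (all positive, with a > c) are assumptions introduced here and are not taken from the model in this paper.

```latex
% Assumed linear forms (illustrative only):
%   inverse demand:         P(q)  = a - b q
%   private marginal cost:  MC(q) = c + e q
%   marginal damage:        MD(q) = m q
\begin{align*}
SMC(q) &= MC(q) + MD(q) = c + (e + m)\,q \\
P(q^{*}) = SMC(q^{*}) \;&\Rightarrow\; q^{*} = \frac{a - c}{b + e + m} \\
t^{*} &= MD(q^{*}) = \frac{m\,(a - c)}{b + e + m} \\
P(q) = MC(q) + t^{*} \;&\Rightarrow\; q = \frac{a - c - t^{*}}{b + e} = \frac{a - c}{b + e + m} = q^{*}
\end{align*}
```

Without the tax, the market settles at (a − c)/(b + e), which exceeds q*. Setting the tax equal to marginal damage evaluated at q* shifts the supply curve up by exactly enough to bring the market to the socially optimal quantity.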

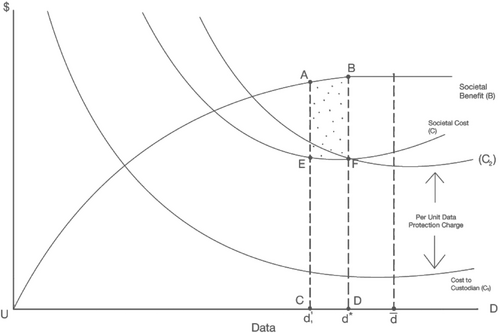

Imposing significant liability on researchers may result in a ‘chilling effect’ on applications for data access and, in turn, reductions in the production of knowledge. Therefore, we focus on defining liability exclusively from the perspective of data custodians, which is consistent with existing legislation. In this respect, we propose that “reasonable effort” be interpreted as optimal effort. In the same way that carbon taxes result in an optimal amount of pollution by balancing the marginal damage to the environment against deadweight loss to consumers and producers, data custodians can be thought to have demonstrated “reasonable effort” and be free from liability by spending resources on data protection equal to the amount of marginal harm to individuals at the efficient amount of data sharing. These extra expenditures can be thought of as an extra “data-protection charge.”

This effect can be seen in Figure 2, where the data custodian's cost curve shifts up from C1 to C2. The amount of the increase in data custodian costs is the amount of marginal harm at the efficient amount of data sharing. A per-unit data-protection cost incurred by the custodian would lead it to an efficient amount of data sharing, as long as the custodian would then be free from liability. Any increase in this data-protection charge beyond this amount leads to a suboptimal amount of data released by custodians and less knowledge creation and innovation for society. However, evidence that a data custodian is spending resources equal to an optimal data-protection cost should not be taken as a sufficient condition for liability release in case of a data breach. The data custodian should also be given guidelines on the quality of its data protection measures and be expected to abide by them.
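A numerical sketch of the data-protection charge follows. Every curve and parameter in it is hypothetical: we assume linear marginal benefit, a declining custodian marginal cost C1, and a rising marginal harm, and we assume that the amount of data shared is determined where marginal benefit meets the custodian's cost curve. The name `d_star` is our own label for the efficient amount of data sharing.

```python
def intersection(curve_a, curve_b):
    """Return d where two lines, each given as (intercept, slope), cross."""
    (a1, b1), (a2, b2) = curve_a, curve_b
    return (a2 - a1) / (b1 - b2)


def value_at(curve, d):
    """Evaluate a line given as (intercept, slope) at d."""
    intercept, slope = curve
    return intercept + slope * d


# Hypothetical curves as (intercept, slope); none of these numbers come from the paper.
marginal_benefit = (100.0, -2.0)   # societal marginal benefit of data sharing
custodian_cost_c1 = (40.0, -0.5)   # custodian marginal cost C1 (declining)
marginal_harm = (0.0, 1.0)         # marginal harm to individuals (rising)

# Societal marginal cost = custodian marginal cost + marginal harm.
societal_cost = (
    custodian_cost_c1[0] + marginal_harm[0],
    custodian_cost_c1[1] + marginal_harm[1],
)

d_no_charge = intersection(marginal_benefit, custodian_cost_c1)
d_star = intersection(marginal_benefit, societal_cost)

# Data-protection charge = marginal harm at the efficient amount of sharing.
charge = value_at(marginal_harm, d_star)

# C2 is C1 shifted up by the charge; sharing under C2 should equal d_star.
custodian_cost_c2 = (custodian_cost_c1[0] + charge, custodian_cost_c1[1])
d_with_charge = intersection(marginal_benefit, custodian_cost_c2)

print(f"Sharing without charge: {d_no_charge:.1f}")
print(f"Efficient sharing: {d_star:.1f}")
print(f"Data-protection charge: {charge:.1f}")
print(f"Sharing with charge: {d_with_charge:.1f}")
```

Under these assumed curves, the charge reduces sharing from the unregulated level to the efficient level, and a charge larger than marginal harm at `d_star` would push sharing below it.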

5 Conclusion

This paper presents a welfare-test framework to determine the optimal levels of data sharing, aimed at balancing societal innovation against possible harm to individuals from compromises to their privacy. This framework can be used by REBs to evaluate data-access proposals and assess the net social benefits associated with data sharing. The methodology defines the efficient level of responsibility of REBs through resources spent on data and privacy protection. This would reduce the liability concerns of REBs and other custodians and encourage enhanced data sharing that should lead to increased innovation and knowledge creation, with the potential to significantly improve health outcomes. Requiring our proposed cost–benefit framework should lead to a better understanding of the value of data, as researchers submitting applications to REBs will need to discuss the specific societal benefits that might result from their research and REBs will need to evaluate that information. While the development of our framework is based on existing privacy legislation in Canada, the implications are relevant to jurisdictions around the world, given research that has documented barriers to health data sharing and the corresponding loss in innovation and knowledge that might lead to better individual outcomes.

Limitations to our research are acknowledged. First, this study focused on a few examples, which illustrate the sensitivity of findings to underlying assumptions. Second, estimating the benefits of data sharing can be complicated if there is a significant likelihood of false-positive test results that lead to unnecessary health treatments. Third, while the implementation of our test is applicable to the release of a single dataset by an REB, researchers may also be interested in the possibility of merging datasets from different sources. In such a scenario, constructing an appropriate cost–benefit approach becomes more complicated. The emergence of federated learning methods presents a means to facilitate data sharing efficiently while preserving individual privacy. Researchers in the United Kingdom have made progress in using federated learning with respect to patient data (Soltan et al., 2024). However, further research is required to determine the extent to which privacy is protected through federated learning methods and whether individual data may be exposed to more risk relative to the case of a single dataset. This recommendation is consistent with the overall need to understand the correlation between the use of specific data security protocols and the probability of successful cyberattacks. We plan to construct cost–benefit models relevant to federated learning in future research.

Finally, and perhaps more importantly, there is a significant knowledge gap in the value of health data. As we stated earlier, it is our opinion that implementing welfare tests such as the one proposed in this study will incentivize more research and effort toward this direction with significant spillover effects on how individual data can be valued in other sectors. Our intent is to pursue future research that helps establish baseline estimates of the probabilities of successful re-identification and the value of data sharing for different types of health research that can be mapped to treatments for specific diseases. We hope to create a table of such values that can be used by REBs to facilitate efficient data sharing.

References

- 1 Gordon et al. (2021) develop a “data utility” methodology that might be used by organizations such as Health Data Research UK which was established to unite the UK's health data to enable innovative research aimed at improving population health. However, their approach is based on developing a coding scheme that allows potential users to understand datasets characteristics, use by previous researchers, and the availability of coding programs and data dictionaries (for example). They do not develop an explicit welfare-based model that can be used for data sharing purposes.

- 2 In addition to privacy, security of health systems is another major concern with regards to data protection. European Union (EU) delegates have debated health data sharing for social benefits with proper safeguards in place to protect privacy and security in the 2012 European Summit to establish trustworthiness for health data sharing and secondary uses (Geissbuhler et al., 2013). However, how to have consistent and proper safeguards has remained inconclusive since corresponding regulations vary across different jurisdictions.

- 3 For example, see Financial Conduct Authority (2024) and US Environmental Protection Agency (2016).

- 4 The Competition Bureau of Canada used to weigh the economic costs that might occur to consumers who might pay higher prices because of a lessening of market competition against gains to merging parties in the form of cost savings. The methodology used by the Competition Bureau is known as “Total Surplus.” See Competition Bureau of Canada (2024) for further details.

- 5 Other studies have employed a cost benefit approach based on competition policy/law and economics in evaluating the effects of enhanced data sharing on innovation and firm level competition. Prüfer & Schottmüller (2021) develop a model to capture the advantage of an incumbent/market leader in data-driven markets. Sen (2022) looks at the effects of surplus created in data markets where incumbents are regulated in sharing data collected from digital platforms with entrants. Graef & Prüfer (2021) evaluate the efficiencies of different governance mechanisms that can be used to implement mandated data sharing by large firms with smaller entities. Please refer to Jullien & Sand-Zantman (2021) for a comprehensive literature review on the economics of platforms and two-sided markets along with associated competition policy problems.

- 6 Goldfarb & Que's (2023) work is an excellent review of the economic effects of privacy considerations that should be considered in a welfare test.

- 7 For further details, please see Alberto et al. (2023).

- 8 The European Data Protection Supervisor has guidelines that must be followed in the collection, storage, and use of personal data in terms of balancing fundamental rights to privacy. Further details can be found here https://edps.europa.eu/data-protection/our-work/publications/guidelines/assessing-proportionality-measures-limit_en (last accessed January 25, 2024). However, the guidelines do not contain any specific welfare test that can be used to evaluate the gains to information sharing against possible harm.

- 9 Some states (e.g., California, Colorado, Connecticut, Utah and Virginia) have implemented their own data privacy laws for individuals, with other states expected to implement legislation during 2025–2026. Broadly speaking, state-level legislative initiatives in the United States have some features in common with the GDPR, such as the right to have personal information deleted, and to opt-out of the sale of personal information.

- 10 With respect to the use of individual-level data by the private sector, the GDPR requires explicit consent from individuals, which must be freely given, specific, and informed. Individuals have the right to withdraw consent for the use of their data at any time. PIPEDA also mandates obtaining consent, and the use of a clear and understandable consent process that enables individuals to be made aware of the purposes for which their data will be used. CCA (2023) notes the difficulties associated with obtaining direct consent at a population-level for health data sharing. Further, requiring consent could impact the statistical validity of data samples if it leads to reduced sample sizes. On the other hand, CCA (2023) also points out that Australians are allowed to opt out of allowing their My Health Records data to be used for any secondary purposes, such as research, public health, and health system management (McMillan, 2023).

- 11 In Canada, the process through which patient-level data may be obtained by researchers is usually governed by province-specific legislation, which supplements PIPEDA. In Ontario, the sharing of patient-level data for research purposes is governed by the Personal Health Information Protection Act (PHIPA). Under PHIPA, health information custodians, which include hospitals, are permitted to disclose PHI for research purposes under certain conditions. For example, PHIPA provides criteria under which health information custodians can disclose PHI for research without consent. This includes situations where obtaining consent is not reasonably possible and where the research has been approved by an REB. In terms of accessing data from across the country, the Canadian Institute for Health Information collects health data shared by custodians across the country, which it can share with authorized researchers. Statistics Canada also has access to the same data, which it can provide to university researchers, with linkages to its other datasets.

- 12 In Canada, all provincial legislation contains provisions such that data custodians may face tort liability and/or even criminal prosecution if they are deemed in breach of their duties of care under applicable legislation. However, they may be protected from liability if they can demonstrate reasonable efforts were made to secure the confidentiality of the data. Unfortunately, there is a legislative gap, as no provincial/territorial laws in Canada offer a precise definition of what constitutes “reasonable efforts.” For further details please refer to CCA (2015).

- 13 This distinction between data custodianship and data stewardship is taken from CCA (2015).

- 14 An important point made by Acemoglu et al. (2022) is that a person willing to share their data may find that their information does not have value if there are other individuals with similar characteristics who have already made their data available.

- 15 As noted by Goldfarb & Tucker (2019), because digital information can be copied at near-zero marginal cost without degrading quality, data can be viewed as being a nonrival good.

- 16 The assumption of declining costs is consistent with Agrawal et al. (2018) who assume that the marginal cost of data to information conversion (AI and predictive analytics) is downward sloping. The marginal cost of infrastructure costs should also be downward sloping. The assumption is also consistent with Sen (2022) who studies the surplus effects of data markets.

- 17 Of course, an underlying assumption is that equations for these curves can be estimated. This is possible through econometric methods developed by economists, provided that relevant data are available.

- 18 The idea here is similar to a “Tragedy of the Commons” dilemma where no surplus is generated for society.

- 19 Please see Whitehead & Ali (2010) for a detailed discussion on the use of QALY indicators in health outcome evaluations.

- 20 The use of QALYs is not without issues. As noted by Neumann (2018), their use can favor resource allocation to younger and healthier populations that have more possible QALYs to gain from health intervention treatments. Another point is that QALYs require putting specific numbers on the gain to individuals, which can imply what people and/or certain groups are “worth” to society.

- 21 See Rocher et al. (2019).

- 22 This figure is based on a story available at https://www.cbc.ca/news/canada/toronto/bmo-scam-line-of-credit-two-factor-1.6947461, last accessed January 15, 2024.

- 23 The harm caused by privacy breaches is of course, not restricted to monetary losses. Victims can experience significant mental, emotional, and legal costs as well.

- 24 Assuming a linear multiplicative form of societal harm is consistent with Becker (1968).

- 25 Refer to Kaur et al. (2022).

- 26 In their literature review, Kaur et al. (2022) noted that the following patient-level information is typically used by studies: (1) age, gender, marital status, and race; (2) all cancer-related information diagnosed at the time of examination by the clinician, such as tumor size, cancer type, stage of cancer, and primary site; (3) type of treatment (type of surgery, chemotherapy cycles, androgen deprivation therapy); and (4) lifestyle attributes, such as smoking, tobacco, alcohol, and other comorbidities.

- 27 All treated patients experience the same benefits, and hence, in this example, the marginal benefits of research do not decline with data release.

- 28 Ho et al. (2022) use data from roughly 1 million women and almost 3 million mammograms over a 10-year period performed at 126 radiology facilities in the United States. The main finding of the study is that DBT was associated with fewer recalls for false-positive results in comparison to digital mammography (49.6% vs. 56.3%).

- 29 To address data challenges when sharing such sensitive health data is impossible, some researchers have turned to synthetic mammograms to augment existing training datasets so that they can develop more robust breast cancer prediction models (Cha et al., 2019; Guan, 2019).

- 30 We are grateful to an anonymous referee for noting the need to acknowledge both these caveats.

- 31 Apart from security breaches, careless sharing of confidential information can significantly compromise individual privacy. There are well-documented cases of the inadvertent sharing of HIV status of individuals that resulted in significant compromises to individual privacy. Please see https://www.cbc.ca/radio/asithappens/as-it-happens-thursday-edition-1.4270109/patients-whose-hiv-status-was-revealed-in-envelope-windows-take-insurance-company-to-court-1.4270110 (last accessed July 2, 2024) and https://www.theguardian.com/technology/2015/sep/02/london-clinic-accidentally-reveals-hiv-status-of-780-patients#:∼:text=The%20newsletter%20was%20sent%20to,full%20names%20and%20email%20addresses (last accessed July 2, 2024) for examples of such stories. In such cases the costs of failures in data security go beyond monetary implications and also entail embarrassment and psychological trauma. We are grateful to an anonymous referee for noting these types of cases.

- 32 For further details on adequate data and information security protocols regarding health data, please see Abouelmehdi et al. (2018), Jawad (2024), Kaissis et al. (2021), Mothukuri et al. (2021), and Qiu et al. (2020).

- 33 A simple exposition of this can be found in Rosen et al. (2023).