Artificial intelligence and melanoma: A comprehensive review of clinical, dermoscopic, and histologic applications

Abstract

Melanoma detection, prognosis, and treatment represent challenging and complex areas of cutaneous oncology with considerable impact on patient outcomes and healthcare economics. Artificial intelligence (AI) applications in these tasks are rapidly developing. Neural networks with increasing levels of sophistication are being implemented in clinical image, dermoscopic image, and histopathologic specimen classification of pigmented lesions. These efforts hold promise of earlier and highly accurate melanoma detection, as well as reliable prognostication and prediction of therapeutic response. Herein, we provide a brief introduction to AI, discuss contemporary investigational applications of AI in melanoma, and summarize challenges encountered with AI.

1 INTRODUCTION

Pigmented lesion classification is a challenging task for dermatologists and pathologists alike, given the variable clinical and histologic presentations. Melanoma has greater potential for morbidity and mortality than non-melanoma skin cancers, and it is now the third most commonly diagnosed cancer in the United States (Marghoob et al., 2009; Welch et al., 2021; Whiteman et al., 2016). Although dermatologists require the fewest biopsies per diagnosis of cutaneous malignancy among clinicians, both providers and patients must nevertheless accept that any skin biopsy trades scar tissue for diagnostic information (Nelson et al., 2019). Striking this diagnostic balance can be difficult, especially on cosmetically sensitive regions such as the face. Without epiluminescence microscopy (dermoscopy), dermatologists have a 65–80% accuracy rate in melanoma diagnosis (Argenziano & Soyer, 2001). Artificial intelligence (AI) has the potential to be a useful adjunct that assists dermatologists with this challenging diagnosis, so it is important that dermatologists understand the basics of AI.

Advances in AI and computer processing have led to increasing scientific and medical interest, to the extent that over 60,000 manuscripts were published on AI in 2017 (Artificial Intelligence, 2018). The role of AI in medicine is being investigated in numerous specialties, and dermatology is uniquely positioned to play an important role in its development due to the availability of large image databases (Filice, 2019; Hogarty et al., 2019; Kothari et al., 2019). A recent study found that while 85% of dermatologists were aware of AI as an emerging field, only 24% reported good or excellent knowledge on the subject (Polesie et al., 2020). We aim to provide an overview of current AI learning modalities and to review the application of AI in clinical, dermoscopic, and histologic evaluation of melanoma.

2 INTRODUCTION TO AI

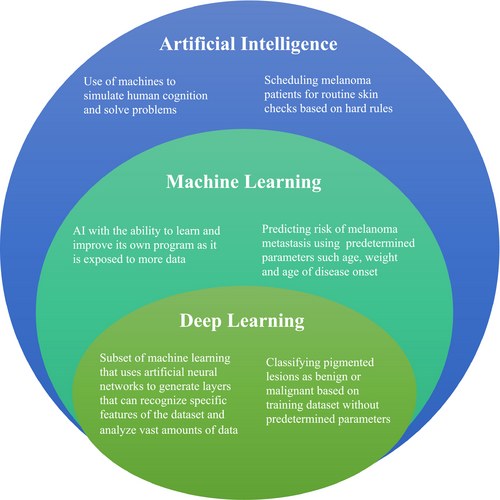

Artificial intelligence is the broad term used to describe the use of machines to simulate human cognition, answer questions, and solve problems (Table 1, Figure 1).

| Term | Definition |
|---|---|
| Convolutional neural networks | A deep-learning algorithm especially useful for classification of images |
| Covariates | Input variables, also known as predictors or independent variables |
| Data augmentation | Techniques used to increase the amount of data by modifying existing data |
| Deep learning | A subset of machine learning that uses multiple layers to extract high-level features |
| Epoch | A pass through an entire dataset in which the network examines all data points |
| Ground truth | The gold standard outcome |
| Loss | A measure of the error between the model's predictions and the ground truth; higher loss means worse performance |
| Machine learning | A branch of artificial intelligence in which computer algorithms draw inferences from data patterns without being explicitly programmed |
| Overfitting | Undesirable effect that occurs when a model becomes very good at classifying data included in its training set, but is not as good at classifying new datasets |
| Semi-supervised learning | Algorithm is trained with both labeled and unlabeled data |
| Supervised learning | Algorithm is trained with labeled data |
| Test or validation subset | Data not seen during training, used to evaluate performance of the network |
| Training subset | Data with which the algorithm is trained in order to improve performance |
| Transfer learning | Reuse of a model pretrained on a similar task as the starting point for a new task |
| Unsupervised learning | Algorithm is trained on unlabeled data points |
| Weights | Parameters updated during training to minimize loss |

2.1 Machine learning

Machine learning is a subtype of AI that involves the use of computer systems that draw inferences from data patterns without being explicitly programmed (Murphree et al., 2020). The learning approach can be supervised, semi-supervised, or unsupervised. With supervised learning, the machine is given data in which each example is labeled with the answer, or class, and the machine learns through trial and error. This method is used most commonly in dermatology, for example training with clinical photographs labeled with their pathologic diagnosis (Murphree et al., 2020). In contrast, with unsupervised learning, the input data do not have a defined answer and the algorithm discovers relationships. Data are assigned to groups based on similarity, and associations can be identified. Semi-supervised learning involves combining the two approaches, with labeled and unlabeled data (Hogarty et al., 2020).
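To make the supervised/unsupervised distinction concrete, here is a minimal sketch in plain Python. The two-feature points, the class names, and the choice of a nearest-centroid classifier (supervised) versus k-means clustering (unsupervised) are illustrative assumptions, not methods taken from the cited studies.

```python
import math

# Toy 2-D feature vectors (e.g., two hypothetical lesion measurements).
points = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (4.0, 4.2), (4.2, 3.9), (3.8, 4.1)]
labels = ["nevus", "nevus", "nevus", "melanoma", "melanoma", "melanoma"]

def centroid(pts):
    """Mean of a list of 2-D points."""
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))

# Supervised: labels are given, so we learn one centroid per class
# (a nearest-centroid classifier).
class_centroids = {
    c: centroid([p for p, l in zip(points, labels) if l == c]) for c in set(labels)
}

def predict(p):
    """Assign the class whose learned centroid is closest."""
    return min(class_centroids, key=lambda c: math.dist(p, class_centroids[c]))

# Unsupervised: labels are hidden; k-means groups points by similarity alone.
def kmeans(pts, k=2, iters=10):
    centers = [pts[i * len(pts) // k] for i in range(k)]  # simple spread-out init
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in pts:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            groups[nearest].append(p)
        centers = [centroid(g) if g else centers[i] for i, g in enumerate(groups)]
    return [min(range(k), key=lambda i: math.dist(p, centers[i])) for p in pts]

print(predict((3.9, 4.0)))  # melanoma
print(kmeans(points))       # [0, 0, 0, 1, 1, 1]
```

The k-means output contains arbitrary cluster IDs, not diagnoses; a human still has to decide what each discovered group means.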

The machine accepts input variables (covariates) and combines them to predict a specific outcome or response. For example, with photographs, the covariates are pixels, and the response could be the classification of the entire image (e.g., melanoma or benign nevus) or segmentation of specific pixels (e.g., identifying the borders of the tumor) (Murphree et al., 2020). In the classic approach to machine learning, the researcher selects the subset of features used in model construction, which is time consuming and requires human expertise: outlining areas of interest, excluding image regions, and defining diagnoses (Brinker et al., 2018).
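The difference between pixels as covariates, a per-pixel response (segmentation), and a single per-image response (classification) can be sketched with NumPy. The 5×5 image, the intensity threshold, and the size-based rule standing in for a trained model are all hypothetical.

```python
import numpy as np

# A toy 5x5 grayscale "image": darker pixels (low values) represent the lesion.
image = np.array([
    [0.9, 0.9, 0.9, 0.9, 0.9],
    [0.9, 0.3, 0.2, 0.9, 0.9],
    [0.9, 0.2, 0.1, 0.3, 0.9],
    [0.9, 0.9, 0.3, 0.9, 0.9],
    [0.9, 0.9, 0.9, 0.9, 0.9],
])

# Covariates: every pixel becomes one input variable.
covariates = image.ravel()  # shape (25,)

# Segmentation: one response per pixel (here a simple intensity threshold).
lesion_mask = image < 0.5   # shape (5, 5), True = lesion pixel

# Classification: one response for the whole image. This hypothetical rule
# stands in for a trained model: flag images whose lesion exceeds 5 pixels.
image_label = "suspicious" if lesion_mask.sum() > 5 else "not suspicious"
```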

2.2 Deep learning

Deep learning is a specific type of machine learning that uses artificial neural networks (ANNs) with multiple ordered layers, each of which learns to recognize specific features of the dataset (Figure 2). The researcher does not have to manually extract features from the image. Deep learning is not only faster than the classic machine learning approach but also performs better (Marka et al., 2019). A neural network is inspired by the neurons of the human brain and adaptively optimizes its performance (Murphree et al., 2020). In a neural network used for skin lesion classification, successive layers may detect lines, then shapes formed from those lines, then complex structures built from those shapes, ultimately classifying the lesion into diagnostic categories such as basal cell carcinoma, sebaceous hyperplasia, or fibrous papule.
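The layered structure can be illustrated with a forward pass through a small fully connected network in NumPy. The layer sizes and random weights are hypothetical (a real model would be a trained CNN with far more parameters), and the three output classes are simply the example categories named above.

```python
import numpy as np

rng = np.random.default_rng(0)
CLASSES = ["basal cell carcinoma", "sebaceous hyperplasia", "fibrous papule"]

def relu(x):
    return np.maximum(x, 0.0)

# Hypothetical layer sizes: 64 inputs (an 8x8 patch) -> 16 -> 8 -> 3 classes.
layer_sizes = [64, 16, 8, 3]
# Randomly initialized weights; in practice these are learned during training.
weights = [rng.normal(0, 0.1, size=(m, n)) for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

def forward(x):
    """Pass an input through each layer; each hidden layer re-represents
    the output of the previous one."""
    activations = [x]
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = activations[-1] @ W + b
        if i < len(weights) - 1:
            activations.append(relu(z))        # hidden layer
        else:
            e = np.exp(z - z.max())            # softmax output layer
            activations.append(e / e.sum())    # class probabilities
    return activations

acts = forward(rng.random(64))
predicted = CLASSES[int(np.argmax(acts[-1]))]
print([a.shape for a in acts])  # [(64,), (16,), (8,), (3,)]
```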

2.3 Convolutional neural network

Convolutional neural networks (CNNs) are a subtype of ANNs that are particularly useful for image classification and recognition. Trainable filters are applied to labeled raw input images to automatically extract complex high-level features by decomposing each image into smaller overlapping tiles (Ji et al., 2010; Nasr-Esfahani et al., 2016). CNNs learn the relationship between the covariates and the output class through numerous hidden layers in which features are extracted (Brinker et al., 2018). These models have been shown to detect traffic signs, objects, and faces better than humans, and they are used in robots and self-driving cars (Brinker et al., 2018). Numerous classification architectures are publicly available, including Inception V3, VGG16, AlexNet, GoogLeNet, ResNet, and Xception (Brinker et al., 2018; Chollet, 2017; Simonyan & Zisserman, 2015; Szegedy et al., 2015). Given their superior ability to recognize and classify images, CNNs are the primary method investigated for use in dermatology (Brinker et al., 2018; Nasr-Esfahani et al., 2016).
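The filtering step described above, sliding a small filter over overlapping tiles of an image, can be sketched in NumPy. The 4×4 image and the hand-set vertical-edge filter are illustrative; in a CNN the filter values are learned rather than chosen by hand.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers compute:
    slide the kernel over every overlapping tile and take the weighted sum."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            tile = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(tile * kernel)
    return out

# Toy image with a vertical edge: dark left half, bright right half.
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)

# A hand-set vertical-edge filter; in a CNN these values would be learned.
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)  # each row is [0. 2. 0.]: the filter fires only at the edge
```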

The most crucial part of creating an effective network is the dataset. Fortunately, a CNN can be pretrained on another large dataset and then adapted through transfer learning, so CNN models with millions of free parameters can be used for classification even when only a small amount of data is available for additional training (Brinker et al., 2018). Popular software frameworks for training CNNs include TensorFlow and PyTorch (Abadi & TensorFlow, 2016; Goyal et al., 2020; Paszke et al., 2019). Validated training datasets, such as ImageNet, International Skin Imaging Collaboration (ISIC), DermQuest, and Dermofit Image Library, are also published and available for free and can be used to pretrain an algorithm (Codella et al., 2015; Esteva et al., 2017; Kawahara et al., 2016; Pomponiu et al., 2016; Russakovsky et al., 2015). In addition, datasets can be enlarged by data augmentation, in which small changes that do not destroy the discriminative information, such as rotation, cropping, and random color jitter, are made to each labeled image. Unfortunately, there is no gold standard for the number of images required to train a successful neural network (Murphree et al., 2020).
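Rotation, cropping, and color jitter can be sketched with plain NumPy. The 90% crop size, the ±10% jitter range, and the 8 copies per image are arbitrary assumptions; frameworks such as PyTorch and TensorFlow ship their own augmentation utilities, which this sketch does not reproduce.

```python
import numpy as np

rng = np.random.default_rng(42)

def augment(image, rng):
    """Return a randomly modified copy of an RGB image (H, W, 3) in [0, 1].
    The diagnostic label is kept unchanged for the augmented copy."""
    out = np.rot90(image, k=rng.integers(0, 4))        # rotate by a multiple of 90 degrees
    if rng.random() < 0.5:
        out = np.fliplr(out)                            # horizontal flip
    h, w, _ = out.shape
    ch, cw = int(h * 0.9), int(w * 0.9)                 # random crop to 90% of each side
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    out = out[top:top + ch, left:left + cw]
    jitter = rng.uniform(0.9, 1.1, size=3)              # per-channel color jitter
    return np.clip(out * jitter, 0.0, 1.0)

image = rng.random((100, 100, 3))                       # stand-in for one labeled photo
augmented = [augment(image, rng) for _ in range(8)]     # 8 new training examples
```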

The dataset is usually divided into two subsets: a training subset and a test/validation subset. The test subset is used to evaluate the model on data it has not seen during training. An epoch is one pass of the model through the entire training set. During training, the network's predictions are compared with the labeled outcome (ground truth), and the optimizer algorithm updates the network's internal parameters (weights) to minimize its mistakes (loss). This process repeats until the results plateau or the desired accuracy is reached, and performance is often plotted as a function of epoch. While training, the researcher must be careful to avoid “overfitting.” An overfitted network may perform very well on its training data but fail on new datasets, because it has learned idiosyncrasies of the training data rather than features that generalize. This can happen with inherently biased datasets, such as datasets with limited Fitzpatrick skin types, or with datasets containing insufficient numbers of images.
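The training vocabulary above (train/test split, epochs, weights, loss) maps onto a few lines of NumPy. This sketch swaps in logistic regression trained by full-batch gradient descent on synthetic data as a stand-in for a CNN; the data, learning rate, and 50 epochs are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic dataset: 200 examples, 2 covariates, binary ground-truth labels.
X = rng.normal(size=(200, 2))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(float)

# Split into a training subset and a held-out test subset.
idx = rng.permutation(len(X))
train, test = idx[:150], idx[150:]

w = np.zeros(2)   # weights, updated by the optimizer
b = 0.0
lr = 0.5          # learning rate (step size)

def predict_proba(X, w, b):
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def bce_loss(p, y, eps=1e-12):
    """Binary cross-entropy: higher loss means worse performance."""
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

history = []  # (epoch, training loss, test loss)
for epoch in range(50):  # one epoch = one pass over the training set
    p = predict_proba(X[train], w, b)
    grad_w = X[train].T @ (p - y[train]) / len(train)
    grad_b = np.mean(p - y[train])
    w -= lr * grad_w     # update weights to reduce the loss
    b -= lr * grad_b
    test_loss = bce_loss(predict_proba(X[test], w, b), y[test])
    history.append((epoch, bce_loss(p, y[train]), test_loss))

test_acc = np.mean((predict_proba(X[test], w, b) > 0.5) == y[test])
```

Plotting the training and test loss columns of `history` against epoch is the usual way to spot overfitting: the training loss keeps falling while the test loss starts to rise.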

3 AI IN PIGMENTED LESION CLINICAL DIAGNOSIS

Classical machine learning techniques were used for pigmented lesion classification as early as the 1990s, but the first studies using CNNs to classify pigmented lesions from clinical images were published in 2016 (Ercal et al., 1994; Kawahara et al., 2016; Nasr-Esfahani et al., 2016; Pomponiu et al., 2016). Most authors use similar methods: the images are first preprocessed to reduce illumination and noise effects caused by nonuniform lighting and reflection of light from the skin surface, and the surrounding normal skin texture is smoothed to reduce its influence on interpretation of the lesion. The dataset used by Nasr-Esfahani et al. consisted of 170 non-dermoscopic clinical images (70 melanoma, 100 nevus), expanded to 6,120 images by cropping, scaling, and rotating the originals. Feature extraction was left to the CNN. The algorithm was 81% sensitive, 80% specific, and 81% accurate for detecting melanoma (Nasr-Esfahani et al., 2016). Other studies have found similar or better results, with sensitivities up to 90% and accuracies of 82%–94% (Han et al., 2018; Kawahara et al., 2016; Pomponiu et al., 2016). Esteva et al. used 129,450 images (including 3,374 dermoscopy images) with a pretrained CNN. The program's performance was equivalent to that of 21 board-certified dermatologists in distinguishing benign nevi from melanoma. The areas under the receiver operating characteristic curve (AUCs) for carcinomas and melanomas were both 0.96. On a test set of 135 epidermal tumor clinical images, 130 melanocytic lesion clinical images, and 111 melanocytic lesion dermoscopy images, the program outperformed dermatologists when asked whether to biopsy or treat the lesion or reassure the patient (Esteva et al., 2017).
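Sensitivity, specificity, accuracy, and AUC, the metrics reported throughout this section, can be computed in a few lines of plain Python. The labels and scores below are invented for illustration (1 = melanoma, 0 = benign); the AUC is computed as the probability that a randomly chosen melanoma receives a higher score than a randomly chosen benign lesion, which is equivalent to the Mann-Whitney U statistic.

```python
def confusion_counts(y_true, y_pred):
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, tn, fp, fn

def sens_spec_acc(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)   # share of melanomas correctly flagged
    specificity = tn / (tn + fp)   # share of benign lesions correctly cleared
    accuracy = (tp + tn) / len(y_true)
    return sensitivity, specificity, accuracy

def auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical example: 3 melanomas, 4 benign lesions, threshold 0.5.
y_true = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
y_pred = [int(s >= 0.5) for s in scores]
sens, spec, acc = sens_spec_acc(y_true, y_pred)   # 2/3, 0.75, 5/7
area = auc(y_true, scores)                        # 11/12
```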

While most AI image classification is trained and assessed using cropped images, Soenksen et al. investigated the accuracy of AI in skin lesion classification from wide-field images (Soenksen et al., 2021). The CNN achieved a sensitivity and specificity of 90% in distinguishing suspicious pigmented lesions from nonsuspicious lesions, skin, and complex backgrounds. The authors also used the wide-field images to compare the CNN’s intrapatient saliency ranking (ugly duckling criteria) with that of dermatologists. The CNN exhibited 83% agreement with at least one of the top three lesions in the dermatologists’ ranking. A CNN’s ability to detect the ugly duckling could improve assessment of a large number of skin lesions in a primary care visit and allow for enhanced patient triage (Soenksen et al., 2021).

Studies of AI using dermoscopic images are more widely published than those using clinical images. A recent review found that of 51 articles, 38 used dermoscopic images, 12 used gross clinical images, and one used a combination of both (Zakhem et al., 2020). Because dermoscopy is not always readily available, especially to non-dermatologists, it is important that more studies examine databases of gross clinical images (Bi et al., 2017; Codella et al., 2015; Cui et al., 2019; Dreiseitl et al., 2001; Marchetti et al., 2018; Phillips et al., 2019; Zakhem et al., 2020).

4 AI IN PIGMENTED LESION DERMOSCOPY

AI has been applied to dermoscopic images for over twenty years (Binder et al., 2000). Contemporary AI uses CNNs to classify dermoscopic images as benign or malignant. Whereas early machine learning studies used datasets of dermoscopic images on the order of 10² (Dreiseitl et al., 2001), investigators have recently trained CNNs with 10⁴ dermoscopic images (Brinker et al., 2019).

Several studies comparing CNNs and humans have been published in recent years, wherein the challenge is to correctly categorize dermoscopic images as melanoma vs. non-melanoma (Esteva et al., 2017; Haenssle et al., 2018; Marchetti et al., 2018, 2020; Tschandl et al., 2019). A systematic review identified eleven studies comparing the accuracy of a CNN to human experts on the classification of pigmented lesions with dermoscopic images (Haggenmüller et al., 2021). Despite heterogeneity in datasets, methodologies, and outcome measures, a common theme presents itself: AI is able to match or outperform humans in the categorization of pigmented skin lesions. In a comparison of AI and dermatologists in the classification of dermoscopic images as nevi, melanoma, or seborrheic keratoses, the AI algorithm achieved an AUC of 0.87, compared to 0.74 for the dermatologists. In the same study, at the dermatologists’ overall sensitivity of 76%, the AI algorithm achieved a specificity of 85% versus the dermatologists’ 72.6%. In a separate comparison of AI versus 58 dermatologists in the detection of melanoma using dermoscopic images, the dermatologists achieved a sensitivity of 86.6% and a specificity of 71.3%; however, at the same sensitivity, the CNN had a superior specificity of 82.5%. With the addition of clinical information and images, the dermatologists improved their sensitivity (88.9%) and specificity (75.7%), yet still fell short of the CNN. Overall, the CNN’s AUC was 0.86, compared to the dermatologists' 0.79 (Haenssle et al., 2018).
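Comparisons such as “at the dermatologists’ sensitivity, the CNN had a higher specificity” fix the algorithm’s operating point on its ROC curve and then read off the specificity. A minimal sketch of that step follows; the labels, scores, and 75% target sensitivity are hypothetical, and the cited studies may have selected thresholds differently.

```python
def specificity_at_sensitivity(y_true, scores, target_sens):
    """Scan thresholds from high to low; return (threshold, sensitivity,
    specificity) for the first threshold whose sensitivity reaches the target.
    Lowering the threshold only raises sensitivity, so the first hit keeps
    specificity as high as possible."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    for thr in sorted(set(scores), reverse=True):
        preds = [s >= thr for s in scores]
        tp = sum(p and t == 1 for p, t in zip(preds, y_true))
        tn = sum((not p) and t == 0 for p, t in zip(preds, y_true))
        if tp / n_pos >= target_sens:
            return thr, tp / n_pos, tn / n_neg
    return None

# Hypothetical data: 4 melanomas (1) and 5 benign lesions (0).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.95, 0.85, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]
operating_point = specificity_at_sensitivity(y_true, scores, target_sens=0.75)
print(operating_point)  # (0.6, 0.75, 0.8)
```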

Some studies have provided tangible insight into how AI may complement dermoscopic evaluation of skin lesions. Marchetti et al. examined how AI might aid lesion classification by substituting the algorithm’s classification for lesion evaluations in which physicians had low diagnostic confidence (Marchetti et al., 2020). These low-confidence evaluations represented 26.6% of all dermatologist evaluations and 51% of all dermatology resident evaluations. With AI assistance, the percentage of lesion evaluations correctly classified increased from 73.4% to 75.4% for dermatologists (n = 905) and from 69.4% to 72.6% for dermatology residents (n = 981) (Marchetti et al., 2020).
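The substitution strategy described above can be sketched as a simple rule: keep the physician’s call when confident, otherwise use the algorithm’s. The five lesions, the confidence flags, and both sets of labels below are invented to show the mechanics; they are not data from Marchetti et al.

```python
def hybrid_labels(human_labels, human_confident, ai_labels):
    """Keep the physician's label when confident; otherwise substitute the AI's."""
    return [h if conf else a
            for h, conf, a in zip(human_labels, human_confident, ai_labels)]

def accuracy(pred, truth):
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)

# Hypothetical lesions ("mel" = melanoma, "nev" = nevus).
truth     = ["mel", "nev", "nev", "mel", "nev"]
human     = ["mel", "mel", "nev", "nev", "nev"]
confident = [True,  False, True,  False, True]
ai        = ["nev", "nev", "mel", "mel", "nev"]

combined = hybrid_labels(human, confident, ai)
print(accuracy(human, truth), accuracy(combined, truth))  # 0.6 1.0
```

In this toy case the AI is wrong on two lesions, but because those are cases where the physician was already confident, the AI label is never used for them.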

In contrast to the static images used to train AI algorithms, the human examiner uses variable lighting and perspectives (e.g., head-on view, side view) to understand the morphology of a lesion more thoroughly. To examine whether minor image perturbations might compromise an AI pigmented lesion classification algorithm (“brittleness”), Maron et al. trained three CNNs to differentiate between dermoscopic images of melanoma and benign nevi and assessed their performance after introducing minor image changes (Maron et al., 2021). Multiple images of each lesion were included in two distinct test sets. The first test set contained “artificial” changes, such as zooms and rotations; the second contained “natural” changes, meaning slightly different photographs of the same lesion. The artificial changes resulted in a 3.5% to 12.2% probability of a diagnostic class change, for example, from nevus to melanoma or vice versa. These results suggest that AI is susceptible to image variations that may be inconsequential during visual examination by the practitioner.
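The “probability of a diagnostic class change” can be measured by re-running a classifier on perturbed copies of each image and counting label flips. In this sketch the classifier is a deliberately orientation-sensitive toy rule standing in for a trained CNN, and the two images and three perturbations are assumptions.

```python
import numpy as np

def toy_classifier(image):
    """Hypothetical stand-in for a trained CNN: calls 'melanoma' if the upper
    half of the image is dark on average. It is not orientation invariant."""
    upper = image[: image.shape[0] // 2]
    return "melanoma" if upper.mean() < 0.5 else "nevus"

def flip_rate(images, classifier, perturbations):
    """Fraction of (image, perturbation) pairs whose predicted class changes."""
    changes, total = 0, 0
    for img in images:
        base = classifier(img)
        for perturb in perturbations:
            changes += classifier(perturb(img)) != base
            total += 1
    return changes / total

# Image A: dark top, bright bottom. Image B: uniformly dark.
img_a = np.vstack([np.full((2, 4), 0.2), np.full((2, 4), 0.8)])
img_b = np.full((4, 4), 0.3)
perturbations = [np.flipud, lambda im: np.rot90(im, 2), np.fliplr]

rate = flip_rate([img_a, img_b], toy_classifier, perturbations)
print(rate)  # 2 of 6 predictions change
```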

CNNs have recently been used to classify dermoscopic images of pigmented lesions on special sites, such as acral and mucosal surfaces. Winkler et al. evaluated a CNN’s ability to distinguish acral, mucosal, and nail unit melanomas from commonly encountered benign lesions matched for localization and morphology, for example, solar lentigo vs. lentigo maligna melanoma (LMM). The CNN’s performance was comparable across several melanoma subtypes, with AUCs of 0.926 for LMM, 0.928 for acral melanoma, and 0.989 for superficial spreading melanoma (SSM). Performance was markedly inferior for mucosal and nail unit melanomas, with AUCs of 0.754 and 0.621, respectively (Winkler et al., 2020).

Yu et al. compared the diagnostic accuracy of a CNN with that of two dermatologists who had five or more years of experience with dermoscopy (expert group). A total of 724 images (350 acral melanoma, 374 benign nevi, all confirmed histologically) were randomly divided into two sets (A and B). Diagnostic accuracy was similar for the CNN and the expert group in both sets (set A: 83.51% vs. 81.08%; set B: 80.23% vs. 81.64%, respectively). The AUC for both the CNN and the expert group was above 0.8 in both sets. A “non-expert” group of general physicians had a significantly lower diagnostic accuracy (62.71%) and AUC (0.63–0.66) (Yu et al., 2018).

To illustrate how AI can improve clinicians’ assessment of pigmented lesions, Lee et al. analyzed the decision-making accuracy of 60 physicians presented with 100 dermoscopic images of acral pigmented lesions. The clinicians faced a binary decision: monitor the lesion with follow-up or perform a biopsy. When provided with the CNN’s (ALMnet) diagnosis of acral nevus or acral lentiginous melanoma, the clinicians made more accurate decisions than with clinical history plus dermoscopic images or with dermoscopic images alone (accuracy 86.9% vs. 79% vs. 74.7%, respectively). With the aid of the CNN, concordance among clinicians significantly improved and the performance gaps among groups diminished (Lee et al., 2020).
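Concordance among several raters on the same lesions is often summarized with a chance-corrected agreement statistic. The sketch below implements Fleiss’ kappa in plain Python as one common choice; it is not necessarily the measure Lee et al. used, and the ratings are hypothetical.

```python
def fleiss_kappa(ratings, categories):
    """Fleiss' kappa. `ratings` holds one list per lesion with each rater's
    decision; every lesion must have the same number of raters."""
    N = len(ratings)          # lesions
    n = len(ratings[0])       # raters per lesion
    counts = [[r.count(c) for c in categories] for r in ratings]
    # Observed agreement for each lesion, then averaged.
    p_i = [(sum(x * x for x in row) - n) / (n * (n - 1)) for row in counts]
    p_bar = sum(p_i) / N
    # Agreement expected by chance from the overall category proportions.
    p_j = [sum(row[j] for row in counts) / (N * n) for j in range(len(categories))]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)

CATS = ["biopsy", "monitor"]
# Hypothetical decisions by three clinicians on four lesions.
ratings = [
    ["biopsy", "biopsy", "monitor"],
    ["monitor", "monitor", "monitor"],
    ["biopsy", "biopsy", "biopsy"],
    ["monitor", "biopsy", "monitor"],
]
kappa = fleiss_kappa(ratings, CATS)  # 1/3: agreement only modestly above chance
```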

5 AI IN PIGMENTED LESION PATHOLOGY

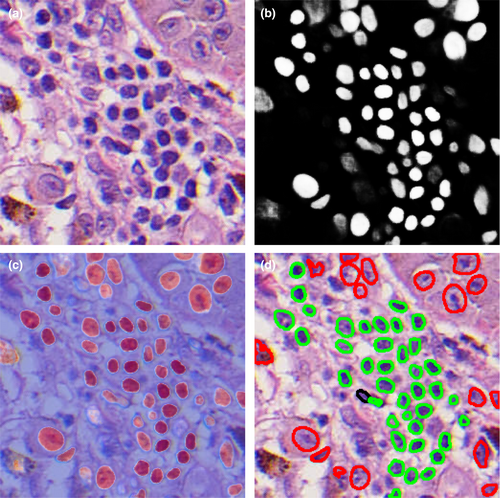

Computer-aided diagnosis of histopathologic specimens was first implemented in 1987 with the advent of a computer program known as TEGUMENT, which led pathologists through a decision tree to reach a final diagnosis (Potter & Ronan, 1987). This program's major limitation was the simplification of numerous variables and vast medical knowledge into a decision tree, and it was not widely utilized. Interest in computer assistance for the classification of melanocytic and pigmented lesions in dermatopathology re-emerged as whole-slide image (WSI) scanners made it possible to digitize datasets and CNNs allowed for advanced image classification (Pantanowitz et al., 2011; Tizhoosh & Pantanowitz, 2018).

In 2019, Hekler et al. used a CNN to categorize melanocytic lesions into benign nevi and melanoma (Hekler et al., 2019a). The algorithm was trained on 595 randomly cropped images and tested on 100 images for accuracy. The training images consisted of partial H&E sections and limited H&E sections. Despite these disadvantages, the CNN achieved a discordance rate of just 19% with the ground truth of the dermatopathologist's diagnosis, which is equivalent to the dermatopathologist's own intra-observer discordance rate. In comparison, the average discordance rate among pathologists in the diagnosis of melanoma versus benign nevus is approximately 25% (Lodha et al., 2008).

A recent study by Hart et al. highlights the importance of image selection when training a CNN (Hart et al., 2019). The investigators used a CNN to classify melanocytic neoplasms into Spitz nevi and conventional melanocytic nevi. The CNN was tested against the ground truth of a concurrent diagnosis by two dermatopathologists. When using representative images curated by dermatopathologists from WSIs, the algorithm achieved a classification accuracy of 92%, with a sensitivity and specificity of 85% and 99%, respectively. In a follow-up experiment, the CNN was trained on non-curated WSIs without dermatopathologist input. Training took 16 times longer, and validation accuracy dropped to 52%. This large decrease in accuracy likely occurred because the algorithm classified the image based on the predominant, rather than the diagnostically specific, histologic features. For example, if a small part of the lesion has Spitz nevus features but the majority of the lesion appears to be a conventional melanocytic nevus, the algorithm would classify it as a conventional nevus, whereas the dermatopathologist would diagnose a Spitz nevus.
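The “predominant features” problem can be illustrated by how per-tile predictions are pooled into a slide-level call. The sketch tiles a slide array and compares a majority-of-tiles rule with a most-suspicious-tile rule. Both rules, the tile size, and the per-tile probabilities are assumptions for illustration, not the pipeline used by Hart et al.; a max rule also raises false-positive risk.

```python
import numpy as np

def tile(slide, size):
    """Split a 2-D slide array into non-overlapping size x size tiles."""
    h = slide.shape[0] // size * size
    w = slide.shape[1] // size * size
    return [slide[i:i + size, j:j + size]
            for i in range(0, h, size) for j in range(0, w, size)]

def slide_label(tile_probs, rule, threshold=0.5):
    """Pool per-tile probabilities of 'Spitz' into one slide-level call."""
    if rule == "majority":   # follows the predominant pattern
        positive = np.mean(np.array(tile_probs) > threshold) > 0.5
    elif rule == "max":      # follows the single most suspicious tile
        positive = max(tile_probs) > threshold
    else:
        raise ValueError(rule)
    return "Spitz" if positive else "conventional"

tiles = tile(np.zeros((64, 64)), 16)          # 16 tiles from a toy "slide"
# Hypothetical model outputs: only 2 of 16 tiles show Spitz features.
tile_probs = [0.9, 0.8] + [0.1] * 14
print(slide_label(tile_probs, "majority"), slide_label(tile_probs, "max"))
```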

A limitation of earlier studies is the small number of dermatopathologists compared with the algorithm. As mentioned earlier, inter-observer discordance with melanocytic neoplasms can be substantial. A recent study by Brinker et al. overcomes this limitation by using a larger comparator group (Brinker et al., 2021). WSIs of benign nevi and melanoma (50 cases each) were classified by an ensemble of 3 CNNs and compared with the majority vote of a panel of 18 international dermatopathologists. The ground truth was the consensus diagnosis of two experienced dermatopathologists who were not part of the panel. The mean accuracy of individual dermatopathologists was 90%. The majority vote of the expert panel yielded an accuracy of 98%, and the CNN achieved an accuracy of 92% for slides with a tumor region annotated as a region of interest and 88% for unannotated slides. Overall discordance between the dermatopathologists and the CNNs was 13.45%.
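Majority voting, used above both for the CNN ensemble and for the expert panel, and the discordance rate between two sets of diagnoses can be sketched in plain Python. The three CNN outputs and the ground truth below are hypothetical.

```python
from collections import Counter

def majority_vote(votes):
    """Most common label; ties go to the label encountered first."""
    return Counter(votes).most_common(1)[0][0]

def discordance(a, b):
    """Fraction of cases on which two lists of diagnoses disagree."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

# Hypothetical outputs of three CNNs on five slides (M = melanoma, N = nevus).
cnn_outputs = [
    ["M", "N", "M", "N", "M"],   # CNN 1
    ["M", "N", "N", "N", "M"],   # CNN 2
    ["N", "N", "M", "M", "M"],   # CNN 3
]
ensemble = [majority_vote(slide_votes) for slide_votes in zip(*cnn_outputs)]
ground_truth = ["M", "N", "M", "N", "N"]
print(ensemble, discordance(ensemble, ground_truth))  # one of five slides disagrees
```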

A challenge encountered in AI and pathology is the virtually infinite variability in histologic morphology, which suggests that large datasets are required to train algorithms to classify diseases with variable histopathology, such as melanoma. Especially challenging are cases with features consistent with an alternative diagnosis that can only be accurately classified upon very close inspection (e.g., nevoid melanoma). Just as lighting can affect AI classification of clinical images, CNNs are sensitive to variations in pathology slide staining. In addition, many lesions, as currently understood, are not well described by binary classification schemes. A binary outcome (benign or malignant) may be too simplified for neoplasms with uncertain malignant potential, such as moderately to severely atypical melanocytic nevi.
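One common way to reduce sensitivity to staining differences is color normalization before classification. The sketch below is a simplified Reinhard-style transfer that matches each channel’s mean and standard deviation to a reference image; Reinhard’s original method works in the lαβ color space, and RGB is used here only to keep the example short. The synthetic “slides” are assumptions.

```python
import numpy as np

def match_channel_stats(source, target):
    """Shift and scale each RGB channel of `source` so its mean and standard
    deviation match those of `target` (a simplified Reinhard-style transfer)."""
    src = source.reshape(-1, 3).astype(float)
    tgt = target.reshape(-1, 3).astype(float)
    src_mean, src_std = src.mean(axis=0), src.std(axis=0) + 1e-8
    tgt_mean, tgt_std = tgt.mean(axis=0), tgt.std(axis=0)
    out = (src - src_mean) / src_std * tgt_std + tgt_mean
    return np.clip(out, 0.0, 1.0).reshape(source.shape)

rng = np.random.default_rng(1)
reference = rng.uniform(0.2, 0.8, size=(32, 32, 3))   # reference staining
washed_out = 0.5 * reference + 0.3                     # same tissue, paler stain
normalized = match_channel_stats(washed_out, reference)
```

Here the pale copy is an exact linear transform of the reference, so normalization recovers it almost perfectly; real stain variation is not linear, which is why more elaborate methods exist.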

6 AI AND PROGNOSIS AND THERAPEUTIC CHOICE

Beyond clinical, dermoscopic, or histologic diagnostics, AI has been used to detect features that predict melanoma prognosis. After training on 108 WSIs, a CNN was tested for its accuracy in predicting distant metastatic recurrence (DMR) in two validation sets of 104 and 51 melanoma patients with at least 24 months of follow-up (Kulkarni et al., 2020). The AUCs were 0.91 and 0.88 for the larger and smaller validation sets, respectively. The model's output also correlated with disease-specific survival (p < .0001) (Kulkarni et al., 2020).

AI can also be used to predict therapeutic response to immune checkpoint inhibitors (ICI). By integrating deep learning on melanoma histology specimens with clinical data, Johannet et al. demonstrated that a CNN could accurately classify patients as high or low risk for disease progression (Johannet et al., 2021). The multivariate classifier predicted therapeutic response to ICI with an AUC of 0.805. Patients deemed high risk had significantly worse progression-free survival than low-risk patients. Hu et al. demonstrated that, based on histology alone, a CNN could predict ICI response in 54 melanoma cases (AUC 0.778), correctly classifying 65.2% of responders and 74.2% of non-responders (Hu et al., 2021).
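Survival differences between model-defined risk groups, such as the progression-free survival comparison above, are commonly displayed with Kaplan–Meier curves. This plain-Python sketch computes the Kaplan–Meier estimate for two hypothetical groups; it does not perform the statistical test (e.g., a log-rank test) that would be needed to call a difference significant.

```python
def kaplan_meier(times, events):
    """Return [(time, survival)] at each event time. events[i] is 1 if
    progression occurred at times[i], 0 if the patient was censored."""
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_at_t = sum(1 for tt, _ in data if tt == t)               # leaving risk set at t
        deaths = sum(1 for tt, e in data if tt == t and e == 1)    # progressions at t
        if deaths:
            surv *= 1 - deaths / at_risk
            curve.append((t, surv))
        at_risk -= n_at_t
        i += n_at_t
    return curve

# Hypothetical follow-up (months) for patients the model labels high or low risk.
high_risk = kaplan_meier([2, 3, 3, 5, 8], [1, 1, 0, 1, 1])
low_risk = kaplan_meier([4, 6, 9, 12, 12], [0, 1, 0, 0, 0])
```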

7 CHALLENGES OF AI

Several limitations prevent broader application of AI. Foremost among these challenges is the lack of labeled data: training a CNN traditionally requires at least some annotated data. Unlike radiographic or funduscopic images, photographs of human skin are not standardized. Numerous lesions may appear in a single photograph (a benign nevus may be adjacent to a melanoma), so images must be cropped so that the algorithm is trained to detect only the lesion of interest. Furthermore, the CNN may pick up on clues not directly related to the diagnosis; for example, the algorithm used by Esteva et al. used the presence of rulers as a predictor of malignancy (Esteva et al., 2017). A human must therefore curate images (patches) taken from WSIs or photographs, label them, and provide them to neural networks. Without this selective instruction, algorithms are less accurate, as highlighted by the study of Hart et al. discussed earlier (Hart et al., 2019). This curation has its own challenges: it is time consuming and costly, and the “ground truth” is often determined by the imperfect and inconsistent standard of human decision. In addition, the databases used to train deep-learning algorithms are prone to selection bias. Han et al. recently showed that CNNs trained on images of Asian patients performed poorly on images of white patients (Han et al., 2018). Most existing databases are composed of images taken from fair-skinned populations in Asia, Europe, and the United States; consequently, melanoma detection in skin of color is far less robust (Adamson & Smith, 2018).
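One practical check for the selection bias described above is to report performance separately for each subgroup rather than only overall. In this sketch the Fitzpatrick groupings, labels, and predictions are hypothetical; the overall accuracy of 75% hides a large gap between groups.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup (e.g., Fitzpatrick type)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        hits[g] += int(t == p)
    return {g: hits[g] / totals[g] for g in totals}

# Hypothetical test set: 1 = melanoma, 0 = benign.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["I-II", "I-II", "I-II", "I-II", "V-VI", "V-VI", "V-VI", "V-VI"]

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall, per_group)  # 0.75 overall, but 1.0 vs 0.5 by group
```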

Another challenge of AI is the so-called “black box” problem: the way a model reaches its decisions is opaque. The way the CNN generates its outcome may not be intuitive based on traditional clinical parameters or human logic; that is, the weights the CNN uses to generate its outcome are not human interpretable. Without an understanding of the rationale behind a decision, humans may be wary of AI and the results it produces, even in the face of numerous studies verifying its accuracy.
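Post hoc explanation methods try to open the black box partially by showing which image regions drive a model’s output. Occlusion sensitivity is one simple example: cover part of the image and measure how much the score drops. In the sketch below, the scoring function is a hypothetical model that, like the ruler example above, relies on a spurious corner cue; the map exposes that reliance.

```python
import numpy as np

def occlusion_map(image, score_fn, patch=2, fill=None):
    """Slide a blank patch over the image and record how much the model's
    score drops; large drops mark regions the model relies on."""
    if fill is None:
        fill = image.mean()
    base = score_fn(image)
    h, w = image.shape
    heat = np.zeros((h - patch + 1, w - patch + 1))
    for i in range(heat.shape[0]):
        for j in range(heat.shape[1]):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill
            heat[i, j] = base - score_fn(occluded)
    return heat

# Hypothetical model whose score depends only on the top-left corner,
# e.g., a spurious cue such as a ruler mark.
def toy_score(img):
    return float(img[:2, :2].mean())

img = np.zeros((4, 4))
img[:2, :2] = 1.0
heat = occlusion_map(img, toy_score)
print(np.unravel_index(np.argmax(heat), heat.shape))  # strongest drop at the top-left corner
```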

In addition, most studies have compared the accuracy of AI and dermatologists in the diagnosis of pigmented lesions in an artificial environment. Clinicians’ accuracy improves when clinical information, such as skin cancer history and demographics, is also provided (Haenssle et al., 2018, 2020). A recent systematic review identified 11 studies that compared a CNN’s performance before and after incorporating patient data, such as age, sex, and lesion location; in all studies, the CNN’s performance improved with the additional patient data (Höhn et al., 2021). Accuracy also improves when clinicians and AI work together to classify skin cancers, compared with either alone (Hekler et al., 2019b; Tschandl et al., 2019). However, implementing AI in clinical practice could introduce cognitive bias, and studies assessing the impact of human factors on the use of AI in the clinical setting are needed (Felmingham et al., 2021).
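One simple way to combine an image model with patient data is late fusion: add weighted metadata terms to the image model’s logit before converting to a probability. This is only one of several fusion strategies used in the literature, and the coefficients below are hypothetical placeholders; real values would have to be fit to data and could differ in size and sign.

```python
import math

def fused_probability(image_logit, age, is_male, on_trunk, coef):
    """Late fusion: add metadata terms to the image model's logit and map
    the sum back to a probability with the logistic function."""
    z = (image_logit
         + coef["age_per_decade"] * (age - 50) / 10   # centered at age 50
         + coef["male"] * is_male
         + coef["trunk"] * on_trunk)
    return 1 / (1 + math.exp(-z))

# Hypothetical coefficients, for illustration only.
coef = {"age_per_decade": 0.3, "male": 0.2, "trunk": 0.1}

image_only = fused_probability(0.0, 50, 0, 0, coef)      # metadata terms cancel: 0.5
with_metadata = fused_probability(0.0, 70, 1, 1, coef)   # same image, different patient
```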

Welch et al. recently hypothesized that the rise in melanoma incidence is a result of increased skin cancer screening, increased biopsies, and a lower threshold to histologically diagnose melanocytic neoplasms as melanoma (Welch et al., 2021). It is plausible that AI could potentiate some of these factors by increasing the sensitivity of clinicians and pathologists, with an uncertain effect on their specificity.
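The trade-off between sensitivity and specificity translates directly into the number of biopsies needed per melanoma found. The short calculation below assumes that every lesion called positive is biopsied; the 2% prevalence and both operating points are hypothetical.

```python
def biopsies_per_melanoma(prevalence, sensitivity, specificity):
    """Expected biopsies per melanoma detected (number needed to biopsy),
    assuming every lesion classified as positive is biopsied."""
    tp = prevalence * sensitivity                  # melanomas correctly flagged
    fp = (1 - prevalence) * (1 - specificity)      # benign lesions flagged
    return (tp + fp) / tp

baseline = biopsies_per_melanoma(0.02, 0.80, 0.90)   # 7.125
sensitive = biopsies_per_melanoma(0.02, 0.95, 0.80)  # about 11.3
```

In this hypothetical population, raising sensitivity from 80% to 95% at the cost of specificity falling from 90% to 80% increases the expected number of biopsies per melanoma from about 7.1 to about 11.3.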

8 CONCLUSIONS

The use of AI in medicine is rapidly growing. While apprehension regarding its use in diagnosis and prognostication is understandable, dermatologists and dermatopathologists should work with technical specialists to embrace AI. It has powerful potential to augment our medical decision-making and to increase access to medical care. These benefits are especially relevant to the diagnosis and management of melanocytic neoplasms, which, despite decades of experience, remain an often-contentious enigma. AI will not in the foreseeable future replace the practitioner, who plays many roles ranging from diagnostician to patient confidant and counselor.

CONFLICTS OF INTEREST

None declared.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed in this study.