Association between clinical history in the radiographic request and diagnostic accuracy of thorax radiographs in dogs: A retrospective case-control study

Abstract

Background

The effect of clinical history on the interpretation of radiographs has been widely researched in human medicine. However, no data on this topic are available in veterinary medicine.

Hypothesis/Objectives

Diagnostic accuracy would improve when history was supplied.

Animals

Thirty client-owned dogs with abnormal findings on thoracic radiographs and a confirmed disease, and 30 healthy client-owned control dogs, were selected retrospectively.

Methods

Retrospective case-control study. Sixty thoracic radiographic studies were randomized and interpreted twice by 6 radiologists: first without access to clinical information, and a second time with access to all pertinent clinical information and signalment.

Results

A significant increase in diagnostic accuracy was noted when clinical information was provided (64.4% without and 75.2% with clinical information; P = .002). Agreement between radiologists did not differ significantly between readings without and with clinical information (kappa 0.313 and 0.300, respectively).

Conclusions and Clinical Importance

The addition of pertinent clinical information to the radiographic request significantly improves the diagnostic accuracy of canine thoracic radiographs and is recommended as standard practice.

Abbreviations

- BAL: broncho-alveolar lavage
- CI: confidence interval
- CT: computed tomography
- DICOM: Digital Imaging and Communications in Medicine
- PACS: Picture Archiving and Communication System
- TXR: thoracic radiography

1 INTRODUCTION

Thoracic radiography (TXR) is a widely accessible and commonly used tool in day-to-day small animal practice.1 It remains a cost-effective, readily available, and safe method for the initial investigation of a wide range of pulmonary and cardiac diseases in dogs.2 Its interpretation can be challenging, because the appearance of normal structures varies substantially with the animal's size, age, and breed, and the radiographic appearances of different disease processes overlap considerably.1, 3

Since the 1960s,4 the impact of clinical history on image interpretation has been widely investigated in human medicine. Some authors suggest that radiologists who rely heavily on clinical information might be negatively influenced (biased) and consequently fail to examine the images in detail.5 Anecdotally, relevant clinical information is sometimes withheld from the radiologist in an attempt to avoid bias. In contrast, many authors consider an adequate clinical history essential to the process of image interpretation.6

Given the high subjectivity and recognized low interobserver agreement of TXR interpretation,7-10 some argue that only with clinical information can a radiologist account for the aforementioned anatomical differences and interpret their significance in a specific image.11-13 Recently, an in-depth literature review concluded that, because of the flawed study designs used to date, the question of how clinical history affects image interpretation remains open.5 A thorough literature search of 3 databases (PubMed, ScienceDirect, and Google Scholar), using the terms “radiographs,” “imaging,” “interpretation,” “clinical history,” “veterinary medicine,” “dogs,” and “small animals” from 1960 to the present, identified no publications analyzing the effect of adding clinical history and signalment on image interpretation in veterinary medicine.

The objective of our study was to evaluate the impact of the provision of signalment and pertinent clinical history on the diagnostic accuracy of thoracic radiographic interpretation, as well as the radiologists' confidence in their diagnosis and agreement (consensus) between radiologists. We hypothesized that the addition of clinical history and signalment would improve the diagnostic accuracy and confidence of the radiologists in the interpretation of thoracic radiographs of dogs, and that the consensus would improve with the addition of clinical history.

2 MATERIALS AND METHODS

This was a 2-part, retrospective case-control study. Cases and controls were selected from dogs that underwent TXR at the University of Veterinary Medicine Vienna (Vienna, Austria) between December 2015 and December 2021 and had at least 2 radiographic views (a left or right lateral view and a ventrodorsal and/or dorsoventral view). The sample size was based on similar recently published research.14-16

For the purpose of this study, confirmed disease was defined as the final diagnosis made by an internal medicine specialist and/or a cardiology specialist on the basis of a reference standard or commonly accepted testing. Diagnostic accuracy was defined as how closely the radiologist's report matched the confirmed disease.

2.1 Case selection

One thousand five hundred three canine thoracic radiographic studies acquired between 2015 and 2021 were reviewed. Of these, 60 studies were selected (30 with a confirmed disease and 30 healthy controls) and evaluated by 6 radiologists (3 diplomates of the European College of Veterinary Diagnostic Imaging and 3 non-diplomates with over 20 years of experience in radiology).

The radiographs were reviewed and selected by a diagnostic imaging fellow (N.A.B.), and the clinical histories and examination findings were reviewed by a small animal internal medicine resident (L.H.). Inclusion criteria for the disease group were an interstitial lung pattern (or one of its variations) described in the original radiological report and a confirmed disease that would account for that pattern. Included were pulmonary and cardiac conditions with the potential to cause variations of the interstitial pattern17: (broncho)pneumonia (confirmed by positive broncho-alveolar lavage [BAL] culture), parasitic disease (confirmed by a positive fecal float for Angiostrongylus vasorum), cardiogenic lung edema (confirmed by echocardiography and clinical presentation),18 lung hemorrhage/contusion (clear history of trauma, rodenticide toxicity, presence of B-lines on lung ultrasonography, and/or thrombocytopenia with elevated values of partial thromboplastin time and activated partial thromboplastin time), eosinophilic bronchopneumopathy (confirmed by BAL cytology), and fibrotic interstitial lung disease (confirmed by computed tomography [CT]). The included cardiac diseases, all confirmed by echocardiography, were cor pulmonale (with the underlying pulmonary cause additionally confirmed by its corresponding reference standard), subaortic stenosis, myxomatous mitral valve disease, and tricuspid valve disease.

Cases were excluded if the disease was not confirmed by a reference standard examination or a commonly accepted alternative test, or if the radiographs showed any feature that could make them distinguishable from the others (eg, a thoracic drain, fractures, or luxated vertebrae or sternebrae). Because alveolar patterns are too readily recognized by raters,19 radiographs in which this pattern predominated were also excluded.

As matched controls, we included dogs with normal thoracic radiographs according to the original report and no clinical history suggestive of lung or heart disease. Control cases were selected at random and included dogs radiographed for metastasis screening, as part of an annual health screening, or as part of a post-trauma work-up. Dogs with a medical record suggestive of potential lung or cardiac disease (eg, cough, dyspnea, heart murmur) were excluded.

Thirty cases were included in the disease group and 30 in the control group. All cases were randomized, and the images were anonymized and made available in DICOM (Digital Imaging and Communications in Medicine) format. The 60 cases were divided into 6 sets of 10, and the numbers of diseased and control cases varied between sets.
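The randomization procedure is not described in further detail. As a minimal sketch, assuming an unrestricted shuffle of all 60 case IDs followed by splitting into consecutive blocks of 10 (our assumption, not necessarily the authors' procedure), the Python below shows why the mix of diseased and control cases differs from set to set. The helper `make_reading_sets` and the ID scheme (D01 to D30 for diseased, C01 to C30 for controls) are hypothetical.

```python
import random


def make_reading_sets(case_ids, set_size=10, seed=None):
    """Shuffle case IDs and split them into consecutive reading sets (hypothetical helper)."""
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    return [shuffled[i:i + set_size] for i in range(0, len(shuffled), set_size)]


# Hypothetical anonymized IDs: D01..D30 = disease group, C01..C30 = controls.
diseased = [f"D{i:02d}" for i in range(1, 31)]
controls = [f"C{i:02d}" for i in range(1, 31)]
sets = make_reading_sets(diseased + controls, seed=2021)

for number, reading_set in enumerate(sets, start=1):
    # Count how many diseased cases ended up in this set by chance.
    n_disease = sum(case.startswith("D") for case in reading_set)
    print(f"Set {number}: {n_disease} diseased, {len(reading_set) - n_disease} controls")
```

Because the split is not stratified, a set could by chance contain no diseased or no control cases at all, so a reader cannot infer a set's composition from its size.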

2.2 Case interpretation

All cases were interpreted on 2 separate occasions: (a) a first, blinded reading without any clinical information or signalment (Round 1); and (b) a second reading (within 12 weeks of the first) with access to all pertinent clinical information and signalment (Round 2). Clinical information included breed, sex, age, body weight (if available), presenting complaint, a short anamnesis, clinical examination findings at presentation, other known conditions (if any), laboratory test results (if any), other diagnostic results (if any), and additional pertinent information (if any).

No consistent search strategy or standardized approach was imposed; the radiologists reviewed the radiographs using their preferred method. At no time did the radiologists have access to the original radiographic report or the dog's medical record, and they were blinded to the confirmed diagnosis. They interpreted the radiographs independently, without consulting one another.

Radiologists were instructed to complete an image report sheet (Appendix S1) recording the following features, based on Thrall20 and Scrivani21:

- lung pattern (normal, bronchial, interstitial, alveolar, and/or vascular hypovolemia or vascular congestion);
- lung volume (normal, increased, or decreased);
- distribution (cranioventral, caudodorsal, diffuse, focal, multifocal, lobar, locally extensive, and/or asymmetric);
- severity (0 to 5, where 0 = normal, 1 = mild, 3 = moderate, and 5 = severe);
- confidence in diagnosis (0 = low, 1 = intermediate, or 2 = high);
- observable changes that influenced decision-making (advanced or young age, pleural effusion, pneumothorax, cardiomegaly, cachexia or excess weight, musculoskeletal changes and/or mass, and others).

The diagnosis was entered as free text to avoid introducing bias. Radiologists considering multiple diagnoses were asked to rank them from most to least likely.

The use of the radiographs and medical records was approved by the Diagnostic Imaging Department of the University of Veterinary Medicine Vienna. All images were acquired with a stationary X-ray machine (Siemens ICONOS; Erlangen, Germany) and a digital flat-panel detector (FDR-D Eco, Fujifilm, Tokyo, Japan). Images were reviewed using different DICOM viewers (ie, Horos, JiveX, and Oehm and Rehbein).

2.3 Statistical analysis

To quantify agreement among the 6 radiologists in reaching a correct diagnosis, we calculated Light's kappa. Light's kappa extends Cohen's kappa to 3 or more raters by computing Cohen's kappa for every pair of raters and averaging these values to provide an overall measure of consensus. Kappa values range from −1 to 1; positive values indicate agreement greater than expected by chance, and negative values indicate agreement less than expected by chance. We classified kappa values using the Landis and Koch guidelines: slight (0 to 0.20), fair (0.21 to 0.40), moderate (0.41 to 0.60), substantial (0.61 to 0.80), and almost perfect (0.81 to 1.00) consensus. To allow the kappa statistics to be compared with each other, we calculated 95% confidence intervals (CIs) by bootstrapping with 1000 replicates.
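The kappa procedure described above can be sketched in Python. This is an illustration under stated assumptions, not the authors' R code: each rater's judgement per case is coded as a categorical label (here 1 = correct, 0 = incorrect diagnosis), the bootstrap resamples cases with replacement and uses percentile intervals, and rater pairs for which Cohen's kappa is undefined (both raters gave one identical label to every case) are skipped. The `landis_koch` helper closes the gaps between the published bands with ≤ thresholds and adds the "poor" band (below 0) from Landis and Koch. All function names and the toy ratings are hypothetical.

```python
import itertools
import random
from collections import Counter
from statistics import mean


def cohen_kappa(a, b):
    """Cohen's kappa for two raters' labels on the same cases (None if undefined)."""
    n = len(a)
    p_obs = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    count_a, count_b = Counter(a), Counter(b)
    p_exp = sum(count_a[k] * count_b[k] for k in count_a) / n ** 2  # chance agreement
    if p_exp == 1:  # both raters used the same single label for every case
        return None
    return (p_obs - p_exp) / (1 - p_exp)


def light_kappa(ratings):
    """Light's kappa: mean Cohen's kappa over all rater pairs.

    ratings[r][i] is the label given by rater r to case i.
    """
    pairwise = [cohen_kappa(a, b) for a, b in itertools.combinations(ratings, 2)]
    defined = [k for k in pairwise if k is not None]
    return mean(defined) if defined else None


def bootstrap_ci(ratings, n_boot=1000, alpha=0.05, seed=1):
    """Percentile bootstrap CI for Light's kappa, resampling cases with replacement."""
    rng = random.Random(seed)
    n_cases = len(ratings[0])
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n_cases) for _ in range(n_cases)]
        k = light_kappa([[rater[i] for i in idx] for rater in ratings])
        if k is not None:
            estimates.append(k)
    if not estimates:
        return None
    estimates.sort()
    last = len(estimates) - 1
    return estimates[round(alpha / 2 * last)], estimates[round((1 - alpha / 2) * last)]


def landis_koch(k):
    """Verbal agreement category for a kappa value (Landis and Koch bands)."""
    if k < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if k <= upper:
            return label
    return "almost perfect"


# Hypothetical toy data for 3 raters and 8 cases (1 = correct, 0 = incorrect).
ratings = [
    [1, 1, 0, 1, 0, 1, 1, 0],
    [1, 0, 0, 1, 0, 1, 1, 1],
    [1, 1, 0, 1, 1, 1, 0, 0],
]
k = light_kappa(ratings)
print(round(k, 3), landis_koch(k))  # 0.289 fair (pairwise kappas 7/15, 7/15, -1/15)
print(bootstrap_ci(ratings))
```

Resampling whole cases (rather than individual ratings) keeps each case's set of 6 readings together, which is one common choice for rater-agreement bootstraps; the paper does not state which unit was resampled.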

To investigate how providing clinical history affected the diagnostic accuracy of the radiologists, we used a chi-square test of independence to compare the distribution of correct and incorrect diagnoses across the relevant groups. Changes in performance were analyzed for all cases, as well as for control and disease cases separately. To investigate whether the raters' self-reported confidence increased when clinical history was included, we used a chi-square test of independence comparing the distribution across confidence levels in Round 1 and Round 2. An alpha level of 0.05 was used for all statistical tests, and all analyses were performed in R (version 4.2.0).
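The chi-square test of independence compares observed counts in a round-by-outcome table with the counts expected if outcome were unrelated to round. A minimal Python sketch, using made-up counts rather than study data, is shown below; `chi_square_independence` is a hypothetical helper. It computes the uncorrected Pearson statistic and obtains the p-value from closed forms of the chi-square survival function, so it only handles 1 or 2 degrees of freedom (a correct/incorrect table has 1; a 3-level confidence table has 2). Note that R's `chisq.test` applies Yates' continuity correction to 2 × 2 tables by default, so its p-values for such tables would be somewhat larger than this sketch's.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square test of independence for an r x c table of counts.

    Returns (statistic, degrees of freedom, p-value). The p-value uses closed
    forms of the chi-square survival function, valid only for df = 1 or 2.
    No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    if df == 1:
        p = math.erfc(math.sqrt(stat / 2))  # P(chi2_1 > stat)
    elif df == 2:
        p = math.exp(-stat / 2)  # P(chi2_2 > stat)
    else:
        raise ValueError("closed-form p-value only implemented for df = 1 or 2")
    return stat, df, p


# Hypothetical counts (not study data): rows = Round 1, Round 2;
# columns = correct, incorrect diagnosis.
accuracy = [[60, 40], [75, 25]]
print(chi_square_independence(accuracy))

# Hypothetical confidence counts: columns = low, intermediate, high.
confidence = [[20, 35, 45], [5, 30, 65]]
print(chi_square_independence(confidence))
```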

3 RESULTS

3.1 Sample of dogs

The 60 dogs represented 28 breeds (including mixed breed): 21 mixed-breed dogs (35.0%), 3 Labrador Retrievers (5.0%), 3 Bernese Mountain Dogs (5.0%), 3 Pointers (5.0%), 2 Flat-Coated Retrievers (3.3%), 2 Magyar Vizslas (3.3%), 2 Jack Russell Terriers (3.3%), 2 Chihuahuas (3.3%), 2 Rottweilers (3.3%), 2 Golden Retrievers (3.3%), and 1 (1.7%) of each of the following: Yorkshire Terrier, Dobermann, Greyhound, Tibetan Terrier, Dalmatian, Maltese, Border Collie, Australian Cattle Dog, West Highland White Terrier, Shih Tzu, Anglo-Français de Petite Vénerie, Australian Shepherd, American Staffordshire Terrier, Spitz, Boxer, English Bulldog, Rhodesian Ridgeback, and Cavalier King Charles Spaniel.

Body weight was available for 43 of the 60 dogs and ranged from 2 to 50 kg (mean, 21.5 kg; median, 21.6 kg). Females represented 48.3% of the population (n = 29) and males the remaining 51.7% (n = 31). Of the females, 4 were intact (13.8%) and 25 were spayed (86.2%). Of the males, 5 were intact (16.1%; including 1 unilateral cryptorchid) and 26 were castrated (83.9%; including 1 chemically castrated). The dogs' ages ranged from 6 months to 14 years (mean, 8.21 years; median, 11.25 years).

In the disease group, 14 dogs (46.7%) had left-sided congestive heart failure (confirmed by echocardiography in combination with the clinical presentation), 6 dogs (20%) had bacterial pneumonia (confirmed by positive BAL culture), 3 dogs (10%) had eosinophilic bronchopneumopathy (confirmed by BAL cytology), and 2 dogs (6.7%) had left-sided congestive heart failure combined with tricuspid valve insufficiency (confirmed by echocardiography). One dog (3.3%) each had parasitic disease (confirmed by a positive fecal float for Angiostrongylus vasorum), cor pulmonale (confirmed by echocardiography) secondary to fibrotic interstitial lung disease (confirmed by CT), lung contusion (confirmed by ultrasonography and a clear history of trauma), subaortic stenosis (confirmed by echocardiography), and fibrotic interstitial lung disease (confirmed by CT) without cor pulmonale. All echocardiographic examinations were performed by a veterinary cardiologist in unsedated dogs using standard 2-dimensional and Doppler transthoracic echocardiography.22

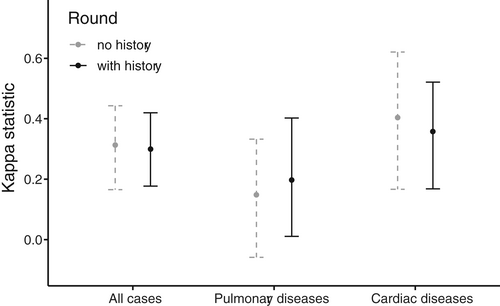

3.2 Consensus

The kappa coefficients of inter-rater reliability between radiologists across measurements in Rounds 1 and 2 are plotted in Figure 1.

The consensus (agreement) between all raters was fair and there was no significant difference in the consensus of all raters between Rounds 1 and 2 of interpretation (Round 1: k = 0.404, 95% CI: 0.166-0.621; Round 2: k = 0.358, 95% CI: 0.168-0.521).

The lowest agreement was noted for the identification of pulmonary disease (all raters), with slight agreement (Round 1: k = 0.148, 95% CI: 0.059-0.332; Round 2: k = 0.197, 95% CI: 0.011-0.402). In comparison, agreement on the subset of cardiac diseases (n = 19) was slightly higher in Rounds 1 and 2, although still classified as fair (Round 1: k = 0.404, 95% CI: 0.166-0.621; Round 2: k = 0.358, 95% CI: 0.168-0.521).

3.3 Diagnostic accuracy

Across all cases, diagnostic accuracy increased significantly in Round 2, when clinical history was provided (P = .002): 64.4% of cases were diagnosed accurately in Round 1 and 75.2% in Round 2.

Diagnostic accuracy was higher in the control group than in the disease group, regardless of the interpretation round (Round 1: 77.5% vs. 51.1%, P ≤ .001; Round 2: 85.0% vs. 65.4%, P ≤ .001).

When only the radiographs from the control group were considered, diagnostic accuracy did not change significantly when history was provided (77.5% in Round 1 vs. 85.0% in Round 2; P = .09).

In the disease group, the diagnostic accuracy increased significantly when history was provided (51.1% in Round 1 vs. 65.4% in Round 2; P = .009).

3.4 Confidence

Overall confidence in the diagnosis increased when clinical history was included (P ≤ .001). Confidence levels in Round 1 vs. Round 2 were distributed as follows: low, 10.5% vs. 2.0%; intermediate, 35.7% vs. 32.1%; and high, 53.8% vs. 65.9%.

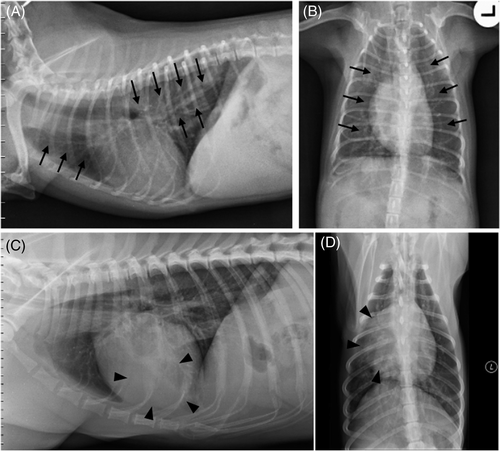

Radiographs in which at least 4 raters changed their interpretation after the addition of clinical history are shown in Figure 2.

4 DISCUSSION

This study assessed the effect of access to clinical history on radiological diagnosis in veterinary medicine. Our case-control study demonstrated a significant increase in radiological diagnostic accuracy, as well as in the radiologists' confidence in their diagnoses, when clinical information was provided.

This study focused on thoracic radiographs with an interstitial lung pattern, which alone has at least 22 possible differential diagnoses.17 This pattern is anecdotally considered one of the most challenging to interpret and was therefore selected for this study. A specific region and pattern were selected to make the study feasible; including multiple regions or patterns would have required a much greater number of cases and a marked increase in reading time. As mentioned previously, the number of cases in each group was comparable to that in similar human studies.14-16

The radiologists' interpretation in this study should be viewed as one step in the diagnostic process, similar to image acquisition and image processing. It is therefore important to emphasize that this study assessed the impact of clinical history, not the radiologists' performance. Accordingly, agreement between radiologists and agreement with the confirmed disease (diagnostic accuracy) were analyzed as a means of assessing the impact of history on the interpretation process, not as an assessment of individual performance. The absence of a significant change in consensus among all radiologists between Rounds 1 and 2 supports this view. Including 6 radiologists who evaluated the images independently guarded against groupthink. Additionally, the 3 board-certified radiologists trained in 3 different countries, which further reduced the risk of inherent bias.

In disease-specific studies, observers frequently err toward a positive diagnosis because they search for data that confirm a specific hypothesis (confirmation bias).23, 24 To prevent this, control cases were included and mixed with the diseased cases. For each set of 10 cases, the radiologist did not know how many disease or control cases (if any) the set contained. To further limit bias, a structured image report sheet was used for interpretation, a practice recommended by several human radiology societies and authors.25-29 For instance, the Radiological Society of North America created the Radiology Reporting Initiative, a library of common radiology procedure templates, to standardize report formats and reduce errors.30 Importantly, the final diagnosis was entered as free text rather than selected from a checklist, which avoided a further potential source of bias.

The improvement in diagnostic accuracy, particularly in the disease group, supports our hypothesis and is in agreement with similar research in human medicine.9, 12, 31 Beyond TXR, there is also evidence that clinical information has a positive impact on diagnostic accuracy.32 A study of prostate nodule detection on magnetic resonance imaging in humans showed a significant increase in the tumor detection rate when clinical information was added.33 Conversely, Good et al.34 observed a decrease in the diagnostic accuracy of TXR in disease-specific cases, and similar results were recorded in a comparable study in a PACS (Picture Archiving and Communication System) environment.35 It should be noted, however, that these 2 studies partly used the clinical status of the human patients as diagnostic confirmation and deliberately omitted any clinical information indicative of the disease in question. Furthermore, both studies had a disease-specific format, in which the radiologists were asked to look specifically for interstitial disease, nodules, or pneumothorax. In a critical review, Berbaum and Franken questioned the relevance of results obtained with such case samples, because clinical history normally serves to guide a search through a much wider range of diagnostic possibilities.36

The statistically significant increase in diagnostic confidence in Round 2 was unsurprising. This outcome has reportedly been investigated in human medicine; however, no results have been published.37 Anecdotally, increased diagnostic confidence, even when it does not materially change the animal's investigation, treatment, or clinical decision-making, is important for radiologists, because it reduces mental stress and may reduce the anxiety and imposter syndrome that can lead to burnout.38

The studies in human medicine that have examined the impact of clinical history on image interpretation vary widely in design, case selection, and data collection. Additionally, none of these studies included a reference standard for disease confirmation. The final diagnosis was either clinical,9, 34, 35 a consensus of the readers,4 or simply based on the original radiographic report.19 Our study included only cases in which disease was confirmed pragmatically using accepted reference standards of practice. The variety of diseases included reflects common cases seen in a clinical environment. Ideally, histopathological evaluation would also have been available for each case; however, many animals recover without such an intervention, and it would be ethically questionable to insist on it for control cases.

The quality of the history provided must also be considered. The addition of false or inaccurate information decreases the diagnostic accuracy of radiologists interpreting chest radiographs in humans.12, 14, 39 The same results were replicated in a CT study, in which false historical information decreased the accuracy of the radiological diagnosis, whereas accurate clinical information improved it.40 Further research has raised concerns about a significant increase in false positives when radiologists are instructed to look only for specific abnormalities (eg, pulmonary nodules).37 This biasing factor in medicine is called “framing bias,” which refers to giving opposite answers to the same problem depending on how the problem is presented.24

The addition of clinical history significantly improved diagnostic accuracy when both groups or only the disease group were included. No significant difference was observed when only the control group was included. This suggests that radiologists are less likely to be influenced by clinical history when no significant disease or gross radiographic abnormalities are detected.

Based on the kappa analysis, consensus in our study was fair, which is in agreement with published data,19 and did not improve with the addition of clinical information. The lowest agreement was for the identification of lung disease, which was slight rather than fair in both interpretation rounds. This is unsurprising, given the high subjectivity and generally low agreement in the determination of lung patterns.41 Although the 3 board-certified radiologists were all trained at different institutions, they recorded the best agreement. Additionally, although the kappa values for the identification of cardiac and pulmonary disease changed between rounds, the 95% CIs overlapped substantially, suggesting that the inclusion of clinical history did not significantly change the reliability of these specific findings.

The retrospective nature of our study and the method used to include cases might be considered limitations. Moreover, some of the included diseases were represented by few cases, which decreases statistical power. Another potential limitation relates to some of the diagnostic tests used for disease confirmation. For instance, although echocardiography is the method most commonly used to diagnose cor pulmonale, the reference standard in humans and dogs is right-sided cardiac catheterization.42, 43 The same reasoning applies to the diagnosis of lung contusion (for which the reference standard is considered to be CT44, 45) and fibrotic interstitial lung disease (for which the reference standard is histopathology46, 47). It should be noted, however, that each of these conditions represented no more than 3.33% of our study population.

To conclude, in this focused study assessing the association between clinical history and the interpretation of canine thoracic radiographs, the provision of accurate clinical history improved the radiological diagnosis and is recommended by the authors as standard practice.

ACKNOWLEDGMENT

No funding was received for this study. The use of all images and data in the medical records was approved by the Department of Diagnostic Imaging of the University of Veterinary Medicine Vienna. The authors thank the staff of the Diagnostic Imaging Service of the University of Veterinary Medicine Vienna for their outstanding work.

CONFLICT OF INTEREST DECLARATION

Authors declare no conflict of interest.

OFF-LABEL ANTIMICROBIAL DECLARATION

Authors declare no off-label use of antimicrobials.

INSTITUTIONAL ANIMAL CARE AND USE COMMITTEE (IACUC) OR OTHER APPROVAL DECLARATION

Authors declare no IACUC or other approval was needed.

HUMAN ETHICS APPROVAL DECLARATION

Authors declare human ethics approval was not needed for this study.