The Value of Agricultural Economics Extension Programming: An Application of Contingent Valuation

Abstract

We used contingent valuation to estimate participant willingness to pay (WTP) for agricultural economics extension programming. The data, collected from evaluation forms used for a series of outlook meetings conducted by faculty from Ohio State University, and subsequent analysis suggest participant private benefits exceeded departmental costs of conducting the program (benefit-cost ratios of 1.07 under conservative assumptions and 1.74 under moderate assumptions). We also explore the revenue generation potential from alternative program pricing and discuss the potential for developing differentiated programs to reach distinct audience segments. Additional research necessary before implementing alternative pricing or program differentiation plans is also discussed.

Structural changes in U.S. agriculture have had important implications for many affiliated sectors. In particular, over the past two decades, land-grant universities and their extension services have undergone substantial changes as federal and state funding has declined (Knutson and Outlaw; Barth et al.). In an attempt to project the longevity and usefulness of the institution, economists have contemplated how the structure of land-grant extension could be adjusted (McDowell) to enhance and lengthen the institution's role in the United States (Knutson; Knutson and Outlaw). Other economists have evaluated the economic implications of several suggested reformulations of existing institutional funding mechanisms (Dinar and Keynan; Hanson and Just).

As public sources of funds decline, administrators must ponder difficult issues not only about how to allocate diminishing public resources, but also how to develop alternative sources of income for extension. Some authors have suggested that extension services collect user fees, or outsource some programs to private firms. These suggestions are more than theoretical, as some extension services in several countries have already been privatized (Dinar and Keynan; Hanson and Just), and extension administrators already have begun exploring market-rate user fees for some programs (National Association of State Universities and Land-Grant Colleges (NASCALGU)).

Clearly, privatizing extension programs or adopting user fees raises questions about what program or research is a public versus private good. A basic level of education is clearly still considered a public good within the United States, as evidenced by continued public support for primary, secondary, undergraduate, and post-graduate education. Individuals interested in obtaining higher-quality education, or post-graduate education with higher market values (e.g., obtaining a master of business administration degree), however, must pay the associated marginal costs. Thus, at least to some extent, education has a private good component. In light of this, the mission of extension to quickly disseminate new research findings and provide lifelong educational opportunities must be carefully considered. On the one hand, extension can play a clear role in making sure that the findings of university research are transmitted quickly to citizens facing important financial or public policy decisions. On the other hand, universities have been privatizing many research findings in recent years by securing the benefits of patents in genetics research, for example, or by developing special research agreements with bioengineering or pharmaceutical companies. As a result, the temptation for extension administrators to consider capturing similar revenues from programming grows.

Brown et al. argue that extension administrators must make careful decisions about the public versus private nature of programs offered as they allocate public funds. For programs that are more private in nature, they must discern pricing strategies. In deciding the optimal mix of public and private extension programs as well as the prices to potentially charge, we suggest that benefit-cost analysis should be applied broadly. This information can be used by administrators to help determine resource allocation.

While the methods used in any particular benefit-cost analysis are likely to differ depending on the case, they all must begin with assessing the demand for the program. Estimating demand, however, is likely to be the most difficult component. Typical measures of extension “demand,” such as counts of attendance, ratings of participant satisfaction, and anecdotal impact statements gathered from participants, often do not provide relevant information on the marginal value of the program. Traditional demand estimation is often impossible because, in part, about 80% of U.S. extension programs are provided free or for a nominal fee (NASCALGU, summary of questions 7 and 8). Administrators who want to do simple analyses to assess whether prices for existing programs could be increased have scant information because there is little variation in price, making it difficult to know how program participation will respond to changes in fees. Without basic information on demand, it will be nearly impossible to efficiently calculate shadow values to make optimal budget allocations with public funds, to alter central budgets to take risks on new potential revenue generation sources, or to set prices for programs that are closer to private goods.

The contribution of this study is the estimation of demand for extension programming using data that can be collected on program evaluation instruments and using estimation techniques common in environmental economics. Previous work in this area includes analyses of the demand for specific extension programming in other countries (Dinar 1989, 1996; Holloway and Ehui) and in the pre-World War II United States (Frisvold, Fernicola, and Langworthy), while others have provided estimates of the aggregate ex post impact of extension activities (Birkhaeuser, Evenson, and Feder; Huffman).

This paper differs from previous work because it focuses on the demand for extension programming provided by agricultural economists in a modern U.S. setting. We also employ more recent empirical methods, namely contingent valuation, to estimate participants' willingness to pay (WTP) for a series of local agricultural economics and policy outlook meetings provided by a land-grant department of agricultural economics. Contingent valuation provides at least two benefits. First, it has been developed explicitly for valuing public goods, and thus can be used for basic allocation questions related to which investments of public dollars provide adequate returns to justify continued support. Second, because we vary prices in the survey instrument, it can be used to assess the implications of price increases.

Although we believe that the methods employed provide a first step toward helping administrators answer fundamental questions such as where to place resources, who to hire, and how to allocate faculty time, this study clearly presents just one alternative for measuring demand. There are many programs in the four main areas of extension (Agriculture/Natural Resources, Community Development, 4-H, and Family and Consumer Sciences), and estimating benefits may require different methods. For instance, the benefits of Family and Consumer Science programs on medical issues may be better measured by considering health outcomes, and the relatively simple contingent-valuation techniques may not suffice. Nevertheless, successfully estimating demand for particular programs is likely to be of increasing importance as universities decide which programs to continue supporting, and which to consider adding.

The Value of Agricultural Economics Extension Programming

We evaluate a series of agricultural market and policy outlook meetings conducted by the faculty of a department of agricultural economics at Ohio State University. At each meeting, several faculty present analyses of particular markets, policy issues, or other areas with potential economic relevance to members of land-grant clientele with ties to production agriculture (e.g., farmers, lenders, agricultural service providers, local extension personnel, etc.). Considerable time is allocated to answering audience questions and stimulating discussion of the economic ramifications of current events for the agricultural sector. The meetings have been conducted annually by the department for several decades and, hence, have an established track record and reputation among much of the target audience.

This program involves both public and private goods. On the one hand, the program provides basic information on historical prices and future projected trends. Such information can easily be obtained by the participants through private channels, and so can be considered partially as a private good. On the other hand, the program incorporates an educational component by providing basic information on economic markets and how they operate, as well as information on other issues of public interest. In recent years, presentations have been made on genetically modified organisms, climate change, water quality, land use, and other issues of public concern. Policy analysis of this information may also be available through subscription services, but as with the market components of the program, university faculty giving presentations face fewer conflicts of interest than their private counterparts and so are better placed to provide unbiased information. This component, we argue, is closer to a public good that may be valued by clientele.

This extension program can help land-grant clientele increase utility in several ways, e.g., increased revenue; decreased production costs; lower costs of searching for and evaluating new technology; improved evaluation of new business methods or organizational forms; and reduced financial and market risk. Estimating the ex post effects of the programming (e.g., Birkhaeuser, Evenson, and Feder; Huffman) requires tracing how disseminated information affects the future flow of participants' profits and utility and the potential spillover effects of such information to friends and neighbors. However, we focus on the participants' expected value of such programming. Such an approach is appropriate in the current context because of the extension program's established track record; participants largely understand the potential value of the information being delivered. To accomplish this, we model participants' intended attendance decision for a situation involving a higher entry fee in order to estimate the consumer surplus for such programming.

Methods for Identifying the Value of Extension Programming

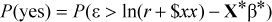

(1)

Data Collection

One method of identifying participants' WTP for extension programs is to ask respondents an open-ended contingent-valuation question in which they express their value for the programming. When participants perceive that the answers they provide may actually result in an increase in future fees (i.e., the question is decisive), such open-ended questions are not incentive-compatible. In other words, respondents may have an incentive to understate their true WTP because they cannot be assured their response will be decoupled from the magnitude of future fee increases. Given methodological issues with such direct questioning (Haab and McConnell), many contingent-valuation practitioners suggest couching the question in a closed-end form that more closely mimics decisions made in a market context (i.e., attend or not attend a program) and mutes any respondent's ability to misrepresent WTP. One such closed-end form is a dichotomous-choice contingent-valuation question, in which the respondent either agrees or disagrees that they would be willing to pay a certain dollar amount to have a particular good made available to them.

In these times of tightening federal, state and departmental budgets support for programs such as this might be decreased such that an increase in registration fees is needed. Would you have attended this year's program if the registration fee would have been $xx higher?

The $xx value is referred to as the bid level, and this value was randomized among the following values: 5, 10, 15, and 25. The $25 value represents more than double the average registration fee. Inclusion of higher bid values in the experimental design was considered at the time of survey formulation because higher bids could provide crucial information concerning the upper tail of the sample population's WTP distribution. However, inclusion of higher bids was judged potentially damaging to the ongoing relationship between local program organizers and many of the participants. We did not want local organizers, who often interact with many of the participants on an ongoing basis, to have to explain why they were considering vastly increasing prices of current programs.

Two hundred seventy-two instruments were collected (47.9% response rate) and 173 participants provided usable responses for the contingent valuation question (30.5% of total participants and 63.6% of returned surveys). Table 1 provides full summary statistics of participant characteristics.

| Variable | Definition | N | Mean | Std. Dev. | (Min, Max) |
|---|---|---|---|---|---|
| LAST YEAR | = 1 if attended program in previous year | 173 | 0.47 | 0.50 | (0, 1) |
| | = 1 if overall rating of this year's program was: | | | | |
| GR1 | fair | 173 | 0.18 | 0.15 | (0, 1) |
| GR2 | good | 173 | 0.43 | 0.50 | (0, 1) |
| GR3 | excellent | 173 | 0.39 | 0.49 | (0, 1) |
| SPKGOOD | = 1 if average rating of speakers ≥ “very good” | 173 | 0.82 | 0.38 | (0, 1) |
| | = 1 if participant's type of business is: | 173 | | | |
| | livestock | | 0.278 | 0.45 | (0, 1) |
| | grain | | 0.393 | 0.49 | (0, 1) |
| | livestock and grain | | 0.011 | 0.11 | (0, 1) |
| | ag sales | | 0.220 | 0.42 | (0, 1) |
| | ag service | | 0.052 | 0.22 | (0, 1) |
| | other | | 0.046 | 0.21 | (0, 1) |
| BUSSALE | annual gross sales of business in $1,000 | 117 | 574.6 | 2768.8 | (6.3, 30,000) |
| SALEMIS | = 1 if annual gross sales is missing | 173 | 0.329 | 0.47 | (0, 1) |
| LBID | ln(contingent-valuation bid amount) | 173 | 2.257 | 2.30 | (1.61, 3.22) |
| COST | program registration fee ($) | 173 | 12.150 | 7.28 | (0, 25) |
| LTOTBID | ln(CV bid + registration fee) | 173 | 3.039 | 3.135 | (1.61, 3.91) |
| OUTCNT | = 1 if traveled from another county to attend | 173 | 0.405 | 0.49 | (0, 1) |
| INTERNET | = 1 if uses the internet for business | 173 | 0.694 | 0.46 | (0, 1) |
| COUNTY | location-specific dummy variables also used | | | | |

The form and format of our study meets many of the suggested guidelines in Arrow et al.'s report to the National Oceanic and Atmospheric Administration concerning the use of contingent valuation. These guidelines call for a sufficiently large sample size so we questioned all current users of the good. The guidelines insist upon a high response rate for the contingent-valuation question. Our response rate was lower than ideal, but we feel that was generally caused by issues unrelated to the valuation task.1

The guidelines also suggest using instruments that feature a closed-ended WTP question, are administered in person and have a clear and realistic method for collecting additional payment. Our instrument meets all these criteria. Furthermore, the guidelines require that respondents have sufficient information about the item being valued to answer the questions. Each respondent in our survey experienced the good (meeting) being valued. We did not follow the guideline that suggests explicitly reminding the respondent that an affirmative answer to the contingent-valuation question would reduce the money they would have available for other things. We felt this would have minimal impact since the respondents had already paid to attend the program where they filled out the evaluation form. Finally, the questionnaire was not formally pre-tested within the respondent sample as suggested by Arrow et al. However, we did receive feedback from local extension agents concerning the wording of the question.

From these data, we estimated two versions of the WTP model: one for total WTP for the current program (Model 1) and one for the size of the fee increase that would cause the respondent not to attend the program (Model 2).

Estimated WTP Models

Model 1: Full WTP

(2)

(3)

Estimation and data details

Table 1 contains a description of the model variables, including information on the respondent's business operation, the perceived quality of the meeting attended, and controls for meeting location. To compensate for item nonresponse, the business sales variable contains a number of imputed values. Missing values were replaced with the average sales figures for all nonmissing sales responses. To test for a significant difference in responses between those reporting sales figures and those not, a missing value dummy variable was included in the estimated model (SALEMIS). A significant SALEMIS coefficient indicates that some systematic variation in the contingent valuation (CV) responses exists between those reporting business sales information and those choosing not to.
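The imputation step described above can be illustrated with a minimal sketch. The function name and the sample sales figures are hypothetical and are not taken from the study's data; the sketch only shows mean imputation paired with a missing-value indicator of the kind labeled SALEMIS.

```python
from statistics import mean

def impute_sales(sales):
    """Mean-impute missing sales and build a missing-value dummy.

    `sales` is a list of gross sales in $1,000, with None marking item
    nonresponse. Returns (bussale, salemis), where missing entries in
    bussale are replaced by the mean of the reported values and salemis
    equals 1 wherever the original value was missing.
    """
    observed = [s for s in sales if s is not None]
    fill = mean(observed)
    bussale = [fill if s is None else s for s in sales]
    salemis = [1 if s is None else 0 for s in sales]
    return bussale, salemis

# Hypothetical responses, not the study's data.
sales = [120.0, None, 850.0, 40.0, None]
bussale, salemis = impute_sales(sales)
print(bussale)
print(salemis)
```

Including the indicator alongside the imputed variable lets the model absorb any systematic difference between reporters and nonreporters instead of attributing it to the imputed sales value.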

The estimated model includes two variables that control for respondents' perceptions of program quality (table 1). The first represents respondent satisfaction with the meeting presentations and was calculated from a respondent's average ratings of all speakers based on a scale of 1–5, where larger numbers indicate higher perceived quality. Due to limited variability in these ratings (few respondents use the lowest categories), a dummy variable was created which equals one if the average rating was greater than or equal to 4 (very good or excellent). Second, dummy variables were included that summarize a respondent's overall rating of the program (GR1, GR2, GR3). This was a separate question on the survey and captured such attributes as discussion sessions, meeting location amenities, etc.

Results

Table 2 contains the results of the binary probit model. The model fits the data well: 85.8% of observations are correctly predicted and the pseudo-R2 is 0.34. For the most part, the results conform to our prior expectations. The probability of a yes response decreases significantly with the natural logarithm of total cost (LTOTBID). Increases in self-reported business sales significantly increase WTP for the extension programs, indicating that larger operations may place a greater value on extension programming because they can apply useful information over more units of business than a small operation can; hence, the cost savings or revenue enhancement may be larger for these firms.

| Variable Name | Coefficient Estimate | P-Value |
|---|---|---|
| CONSTANT | 2.78 | 0.02 |
| LTOTBID | −1.5895 | 0.0001 |
| BUSSALE | 0.0012 | 0.04 |
| SALEMIS | −0.53 | 0.08 |
| BUSDUM1 | −0.15 | 0.70 |
| BUSDUM2 | 4.72 | 0.99 |
| BUSDUM3 | −0.10 | 0.81 |
| BUSDUM4 | 5.48 | 0.98 |
| BUSDUM5 | 0.51 | 0.38 |
| INTERNET | 0.58 | 0.04 |
| SPKGOOD | 0.77 | 0.02 |
| GR1 | −1.48 | 0.11 |
| GR2 | −0.36 | 0.36 |
| GR3 | 0.04 | 0.91 |
| LAST YEAR | 0.48 | 0.05 |
| OUTCNT | 0.71 | 0.01 |
| N | 172 | |
| % correct predictions | 85.8 | |
| Pseudo-R2 (b) | 0.34 | |
| Likelihood value | −76.64 | |
| Chi-square of covariates (c) | 71.29 | 0.0001 |

- a County dummy variables are not reported here, but were included as controls in the model.

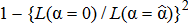

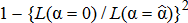

- b Pseudo-R2 is defined by the formula shown above, where L(·) is the value of the likelihood function and α is the vector of coefficients to be estimated.

- c Likelihood-ratio test statistic of the null hypothesis that all nonintercept covariates of the model are equal to zero, which is distributed as a chi-square with 25 degrees of freedom.
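The pseudo-R2 formula itself is not legible in this copy. As a hedged cross-check, the sketch below computes two common pseudo-R2 measures from the likelihood values, chi-square statistics, and sample sizes reported in tables 2 and 3. The function names are illustrative, and the choice of candidate definitions is an assumption rather than something the text confirms. Under that assumption, the Cox-Snell (Maddala) form rounds to the reported 0.34 and 0.37, while McFadden's form gives somewhat lower values.

```python
import math

def cox_snell_r2(lr_chi2: float, n: int) -> float:
    """1 - (L(0) / L(alpha_hat))**(2/n), written via the LR statistic."""
    return 1.0 - math.exp(-lr_chi2 / n)

def mcfadden_r2(loglik: float, lr_chi2: float) -> float:
    """1 - lnL(alpha_hat) / lnL(0), with lnL(0) recovered from the LR statistic."""
    loglik_null = loglik - lr_chi2 / 2.0
    return 1.0 - loglik / loglik_null

# Values as reported in tables 2 and 3.
reported = {
    "table 2": {"loglik": -76.64, "lr_chi2": 71.29, "n": 172},
    "table 3": {"loglik": -72.13, "lr_chi2": 80.30, "n": 173},
}

results = {
    name: (cox_snell_r2(r["lr_chi2"], r["n"]), mcfadden_r2(r["loglik"], r["lr_chi2"]))
    for name, r in reported.items()
}

for name, (cs, mf) in results.items():
    print(f"{name}: Cox-Snell = {cs:.3f}, McFadden = {mf:.3f}")
```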

The negative, marginally significant coefficient on the missing-sales indicator (SALEMIS) indicates that respondents who did not provide sales data hold marginally smaller WTP values for extension programming. We are not certain whether those failing to report business sales were, in fact, smaller operations and, hence, followed a pattern of CV responses similar to that of smaller operators who did report business sales. Alternatively, those failing to report business sales may have been from a broad range of business sales levels but were distinct in other ways that caused them to have a lower WTP.

Individuals with internet access have significantly higher WTP. If internet access is a substitute for information provided at these outlook meetings, we would expect to find that access would decrease WTP for extension programs. However, the positive effect could be driven by several factors. First, the extension program might actually serve as a complement to internet information because the program allows for two-way interaction. The program format enables participants to receive feedback from both faculty and other participants about information gathered at the meeting and from the internet. The meeting format may facilitate participants' ability to filter the information received, localize its implications, and prioritize among emerging issues. A second possible explanation is that individuals who have access to the internet may simply hold a higher marginal value for all information sources.

The speaker-rating variable (SPKGOOD) acts in the expected fashion to control for perceived quality of the programs. Participants are willing to pay more for the program if they perceive speakers to be very good or excellent. Similarly, the higher the general program rating, the greater is WTP, as evidenced by the increasing size of the estimated parameters on GR1, GR2, and GR3. However, the general rating dummies are insignificant. One possible explanation is collinearity between the speaker and general ratings (i.e., good speaker ratings lead to good overall ratings). To test for this, we estimated models that included each rating separately. In each case, the ratings variables were significant in explaining WTP, but the various treatments had very little effect on the remaining estimated coefficients. For completeness, we report the results of the model including all ratings variables.

Participation in the previous year's meeting (LAST YEAR) increased the WTP for the current year's meeting, indicating a positive correlation between attendance across years. These individuals may have a long-term relationship with the extension program or personnel and value particular interactions with the speakers and other participants more than do individuals who have less past experience with the meetings.

We also find a positive and significant coefficient for the dummy variable indicating whether the respondent traveled from another county to attend the meeting. One might expect that individuals traveling from farther away would be willing to pay less in registration because of greater costs just to reach the meeting.2 This appears not to be the case. One possible explanation is self-selection by individuals traveling from farther distances into a higher WTP group than those traveling shorter distances. Most meetings are organized and publicized at the county level and are often held in a central location within the county. Individuals from outside the county who attended the meeting were likely to have been heavy information seekers in order to find out about the meeting's location and time. Therefore, it may not be that surprising that they hold higher WTP for such programming than local participants.

Model 2: Maximum Registration Fee Increases

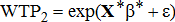

(4)

In this case, a participant will indicate a “yes” response to the CV question if WTP2 > $xx, where $xx is the offered fee increase. This differs from the derivation in Model 1 because current costs are not included in the decision process. To test whether costs significantly influence the WTP decision, we include them as an explanatory variable. If our hypothesis is correct, then costs will be an insignificant determinant in the WTP function. Table 3 presents the results of a probit model based on equation (4).

| Variable Name | Coefficient Estimate | P-Value |
|---|---|---|
| CONSTANT | 2.12 | 0.02 |
| LBID | −1.3376 | 0.0001 |
| COST | −0.01 | 0.78 |
| BUSSALE | 0.0014 | 0.03 |
| SALEMIS | −0.49 | 0.11 |
| BUSDUM1 | −0.24 | 0.54 |
| BUSDUM2 | 4.41 | 0.99 |
| BUSDUM3 | −0.34 | 0.44 |
| BUSDUM4 | 5.49 | 0.98 |
| BUSDUM5 | 0.53 | 0.39 |
| INTERNET | 0.43 | 0.15 |
| SPKGOOD | 0.66 | 0.06 |
| GR1 | −1.55 | 0.12 |
| GR2 | −0.47 | 0.25 |
| GR3 | 0.05 | 0.90 |
| LAST YEAR | 0.50 | 0.05 |
| OUTCNT | 0.68 | 0.02 |
| N | 173 | |
| % correct predictions | 87.5 | |
| Pseudo-R2 (b) | 0.37 | |
| Likelihood value | −72.13 | |
| Chi-square of covariates (c) | 80.30 | 0.0001 |

- a County dummy variables are not reported here, but were included as controls in the model.

- b Pseudo-R2 is defined as in the notes to table 2, where L(·) is the value of the likelihood function and α is the vector of coefficients to be estimated.

- c Likelihood-ratio test statistic of the null hypothesis that all nonintercept covariates of the model are equal to zero, which is distributed as a chi-square with 26 degrees of freedom.

The results are substantively the same as table 2 with respect to goodness of fit, signs of results, and magnitudes of coefficients. Of importance is that the cost variable is a statistically insignificant determinant of the WTP response, indicating that respondents did not consider the registration fee in formulating their response to the CV question. Based on this result, it seems appropriate to use this model to forecast meeting attendance behavior simply on the surplus in excess of current costs rather than on full surplus as we did in Model 1.

Applications to Program Evaluation

Program Benefits

Based on the parameter estimates from Model 1 (table 2), the median WTP is calculated for each individual in the sample. The sample average of these median WTP figures is $77.36, while the fifth and ninety-fifth percentile values are $9.83 and $439.02, respectively. It should be noted that this formulation includes the current registration fee as part of the WTP estimate, and as such represents the total WTP for the meeting above travel costs and the opportunity cost of travel and meeting time. Hence, it is a partial measure that understates the sample respondents' true WTP.
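Equations (2) and (3) are not legible in this copy, so the expression used for each individual's median WTP cannot be quoted directly. As a sketch only, assuming Model 1 is a probit in which the log of the total bid (LTOTBID) enters linearly, the median follows from setting the probability of a yes response to one half. The symbols $x_i$, $\alpha$, $\beta$, and $B$ are notation introduced here for illustration: $x_i'\alpha$ collects the constant and all non-bid covariates, and $B$ is the total bid (registration fee plus offered increase).

```latex
\begin{align*}
\Pr(\text{yes}_i \mid B) &= \Phi\!\left(x_i'\alpha + \beta \ln B\right), \qquad \beta < 0, \\
\Phi\!\left(x_i'\alpha + \beta \ln B_i^{\mathrm{med}}\right) = \tfrac{1}{2}
  \;&\Longrightarrow\; x_i'\alpha + \beta \ln B_i^{\mathrm{med}} = 0, \\
B_i^{\mathrm{med}} &= \exp\!\left(-\frac{x_i'\alpha}{\beta}\right).
\end{align*}
```

Under this assumed form, and using the table 2 estimate $\beta = -1.5895$, a respondent with a hypothetical index value of $x_i'\alpha = 6.0$ would have a median WTP of $\exp(6.0/1.5895)$, or roughly $44.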

While travel cost information was not collected from participants who answered the WTP question, data collected at the same meetings held the previous year (2000) revealed that the median attendee's one-way travel covered 15 miles and took twenty-five minutes. When added to the duration of the meeting (three hours), the total time commitment by the median 2000 attendee was three hours and fifty minutes. Assuming these median values apply to 2001 attendees and using conservative estimates of travel costs (the U.S. Internal Revenue Service's tax deductible rate of $0.345 per mile for 2001) and time costs (one-third of the 2001 average Corn Belt farm labor wage rate for the fall of 2000 or $3.18 per hour [U.S. Department of Agriculture, p. 7]), we estimate travel and opportunity of time costs of $22.52 for the median attendee. Using more moderate estimates for travel costs (the American Automobile Association's estimate of total car ownership costs of $0.51 per mile) and opportunity costs of time (the average Corn Belt wage rate of $9.53 per hour) suggests that travel and time costs of attendance are $51.80 for the median participant. Adding these median travel costs to each 2001 participant's estimated median WTP figure yields our final estimate for total WTP for the extension programming by the average participant of $99.88–$129.16, depending on true travel and time costs.
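The travel and time cost arithmetic can be retraced with a short sketch. It assumes a round trip (which the stated total of three hours and fifty minutes implies) and uses the per-mile and per-hour rates quoted above; the function name is illustrative. The results agree with the reported $22.52 and $51.80 to within a few cents, with the small gaps presumably reflecting rounding in the original calculation.

```python
def attendance_cost(one_way_miles, one_way_minutes, meeting_hours,
                    cost_per_mile, wage_per_hour):
    """Round-trip mileage cost plus the opportunity cost of travel and meeting time."""
    mileage_cost = 2 * one_way_miles * cost_per_mile
    total_hours = meeting_hours + 2 * one_way_minutes / 60
    return mileage_cost + total_hours * wage_per_hour

# Median attendee: 15 miles and 25 minutes each way, three-hour meeting.
conservative = attendance_cost(15, 25, 3, cost_per_mile=0.345, wage_per_hour=3.18)
moderate = attendance_cost(15, 25, 3, cost_per_mile=0.51, wage_per_hour=9.53)

mean_median_wtp = 77.36  # sample average of median WTP from Model 1
print(f"conservative cost: {conservative:.2f}, total WTP: {mean_median_wtp + conservative:.2f}")
print(f"moderate cost:     {moderate:.2f}, total WTP: {mean_median_wtp + moderate:.2f}")
```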

We construct total WTP for the extension program by extrapolating these estimated results across all meeting participants. This requires two assumptions. First, the estimates for WTP are based upon only those who answered the WTP question. Yet we would like to extrapolate to those who did not answer the WTP question. Statistical analysis of a respondent's decision to answer the WTP question given that they turned in a survey instrument suggests little systematic explanation for the nonresponse to the WTP question. Hence extrapolation of the estimated WTP model to participants who did not answer the question seems feasible.4

However, we would also like to use this model to explain the WTP of participants who did not complete any evaluation. Given our lack of data on nonrespondents, we are uncertain of the validity of such extrapolation and encourage caution when interpreting total WTP figures. Anecdotal evidence suggests that many evaluation instruments are not gathered because individuals leave a meeting early or do not like to fill out forms. Attendees may depart early due to scheduling conflicts or, perhaps, to dissatisfaction with the program. To account for our uncertainty about nonrespondents' true value of the program, we use two methods of extrapolation. In the first approach, we assume nonrespondents have the exact same distribution of WTP as respondents. In the second and more conservative approach, we assume the relative distribution of WTP among nonrespondents is the same as for respondents but that each point in the empirical distribution of WTP is divided by two. Note that in both cases we assume respondent benefits include full travel and opportunity costs.

Given these assumptions, we can estimate several different figures for total WTP. Conservative travel and time cost estimates, combined with the conservative approach to treating nonrespondents' WTP for the program, yielded an aggregate benefits estimate of $45,127 across all participants. Using the least conservative assumptions yielded an aggregate WTP of $73,362.

Program Benefit-Cost Analysis

To determine benefits versus costs, we compared the respondents' aggregate WTP to the resources expended by the department to conduct the program. We calculated the cost of materials used at these meetings to be $1,800 while mileage paid to participants was approximately $1,200. The cost of program materials is currently recovered through a centrally imposed registration fee typically rolled into the fee charged by local organizers. This allows us to net this figure out of the benefit-cost analysis.

Personnel costs are the dominant component of the department's resource allocation. Ten different faculty members delivered presentations at least once during the meetings. Participating faculty members were asked to estimate the amount of time used to prepare presentations and generate program materials, taking into consideration that preparation time and materials often help in other extension programming, classroom teaching, and research. Summing across faculty yielded a time outlay of about 210 hours. In addition, we estimated the time commitment of faculty while traveling to and attending each meeting. Summing across faculty yielded a time outlay of 195 hours. The total faculty time outlay was 405 hours. Given the mix of junior and senior faculty, the corresponding university average salaries by rank, costs of benefits, and the types of appointments held by each faculty member (academic- versus calendar-year appointments), the total value of the time spent by faculty is approximately $22,700.

In addition, a professional staff member and an administrative assistant had major programmatic responsibility for organizing meetings, with approximately 100 hours of total time commitment for the staff member and 280 hours for the administrative assistant. Adding their salary and benefits led to a total personnel cost estimate of approximately $27,500. We also assumed the department's overhead charge for faculty salaries and mileage was 47% (i.e., equal to the university's prevailing overhead charge). The total costs to the department equaled about $42,200, which is slightly smaller than the most conservative estimate for aggregate WTP (benefit-cost ratio of 1.07) and well below the least conservative estimate for aggregate WTP (benefit-cost ratio of 1.74).
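The benefit-cost ratios can be retraced from the reported totals. In the sketch below, applying the 47% overhead rate to total personnel costs plus mileage is an assumption made here because it approximately reproduces the reported $42,200; the text does not spell out the exact overhead base. Materials costs are excluded because they are recovered through registration fees. Variable names are illustrative.

```python
personnel_cost = 27_500   # faculty plus staff salary and benefits, as reported
mileage_cost = 1_200      # as reported
overhead_rate = 0.47      # university's prevailing overhead charge

# Assumption: overhead is applied to personnel plus mileage.
approx_total_cost = (personnel_cost + mileage_cost) * (1 + overhead_rate)

reported_total_cost = 42_200
aggregate_wtp = {"conservative": 45_127, "least conservative": 73_362}

ratios = {label: wtp / reported_total_cost for label, wtp in aggregate_wtp.items()}

print(f"approximate total cost: {approx_total_cost:,.0f}")
for label, ratio in ratios.items():
    print(f"{label}: benefit-cost ratio = {ratio:.2f}")
```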

Note that program benefit estimates are for attendees. Given that participants may share information gathered at the meeting with others (e.g., neighbors or friends), positive information spillovers may exist from the program. These are not likely to be incorporated into attendees' responses to survey questions; hence, the true benefit-cost ratio may be larger than estimated above.

Program Pricing and Potential Revenue Generation

Given current national and university initiatives, the department is also interested in the ability of extension programming to generate revenue. As a starting point, we considered how program attendance would change if registration fees were increased while holding the format and quality of the program constant. We used Model 2 and equation (4) to predict the decrease in program attendance for a wide array of increases in registration fees. Multiplying each fee increase by the corresponding forecasted attendance yields an estimate of the increase in total revenue.
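The logic of this forecast can be illustrated with a simplified stand-in. The sketch below does not use Model 2 or equation (4). Instead, it assumes a hypothetical list of individual WTP values and counts a person as attending whenever their WTP is at least as large as the fee increase. The function name `forecast` and all WTP values are invented for illustration and are not data from the study.

```python
def forecast(wtp_values, fee_increase):
    """Return (attendance, added_revenue) for a given fee increase.

    A person is assumed to attend if their WTP is at least the fee
    increase. This empirical rule is a stand-in for the paper's model.
    """
    attendance = sum(1 for w in wtp_values if w >= fee_increase)
    return attendance, fee_increase * attendance


# Hypothetical WTP values in dollars; NOT data from the study.
wtp = [5, 5, 8, 8, 8, 10, 12, 15, 40, 500, 600]

# Evaluate a grid of candidate fee increases.
fees = [0, 5, 8, 10, 50, 500, 600]
schedule = {fee: forecast(wtp, fee) for fee in fees}

# The revenue-maximizing fee increase on this grid.
best_fee = max(fees, key=lambda fee: schedule[fee][1])

for fee in fees:
    attendance, revenue = schedule[fee]
    print(f"fee +${fee}: attendance {attendance}, added revenue ${revenue}")
print(f"Revenue-maximizing fee increase on this grid: ${best_fee}")
```

With these invented values, revenue has a small local peak at a modest fee increase and a much larger peak at a very high fee paid by only a few attendees, the general shape such a revenue curve can take when a few participants hold very large values.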

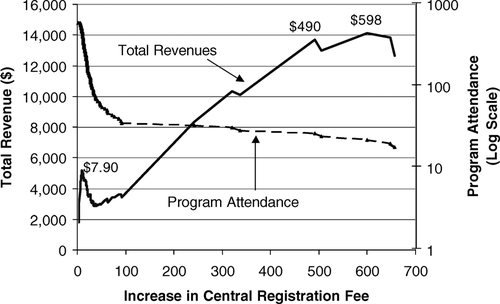

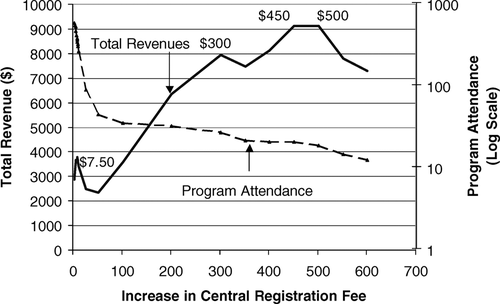

Currently, the department collects $3 per participant from each meeting organizer to cover the cost of handout materials. These estimates suggest marginal increases in costs charged to local organizers would have a negligible effect on attendance while increasing departmental revenue. The projected total attendance and program revenue are plotted in figures 1 and 2 for the two different assumptions concerning survey nonrespondents used in the previous section (identical WTP distribution to survey respondents and one-half the WTP distribution of survey respondents).

The increase in registration fees that would maximize departmental revenues is quite large under both the conservative and less conservative assumptions. Under the less conservative assumptions, the revenue-maximizing fee increase is $598. Such a fee increase is forecast to generate about $14,000 for the department from the 23 people attending. Under the more conservative assumptions, the revenue-maximizing fee increase would be either $450 or $500, with attendance of either 20 or 18, respectively. Such a pricing approach would be similar to registration fees commonly charged by private consulting firms that hold exclusive sessions with a small number of clients.
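A quick arithmetic check shows why two different fee increases tie under the more conservative assumptions. The Python sketch below multiplies each reported fee increase by its forecasted attendance; the variable names are illustrative, and the figures are those quoted above.

```python
# (fee increase in dollars, forecasted attendance) as reported in the text.
scenarios = {
    "less conservative": [(598, 23)],
    "more conservative": [(450, 20), (500, 18)],
}

# Added revenue = fee increase x forecasted attendance.
revenues = {
    label: [fee * attendance for fee, attendance in options]
    for label, options in scenarios.items()
}

for label, values in revenues.items():
    print(label, values)
```

The less conservative case yields $13,754, consistent with the "about $14,000" reported, while both options in the more conservative case yield $9,000.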

Figure 1. Program revenue and attendance forecasts: less conservative assumptions

Figure 2. Program revenue and attendance forecasts: more conservative assumptions

While the higher fees appear lucrative, implementing such a drastic increase for the same program would likely be unwise. Such a fee schedule could cause significant friction between the department, local meeting organizers, traditional participants, and university extension administrators. Local organizers are typically county agricultural extension agents who rely upon the good will and opinion of local participants to reach a portfolio of goals and carry out many events and activities. These agents would feel obliged to explain such a large fee increase to participants, who likely would react negatively. So, while we can estimate participants' compensating variation for the meeting, capturing this surplus through large fee increases would likely be difficult because of the importance of local planning and promotion.

The total revenue curves plotted in figures 1 and 2 each feature several local maxima. A more moderate fee increase of $7.90 would decrease attendance by 18% and yield revenue of $5,083 (figure 1). A local maximum under the more conservative assumptions occurs at a fee increase of $7.50 (figure 2); this is projected to lower attendance by about 38% and generate $3,717 of revenue.

These more moderate fee increases would push total central registration fees to about $11 and would likely be met with significantly less resistance from local organizers. While such an increase is not the short-run revenue-maximizing price, it strikes a balance between increasing revenues and maintaining good will among local organizers. However, such higher fees may dissuade participation by people from smaller business operations—a group to whom the department and university may have a special interest in providing service. Hence, final decisions concerning pricing will have to take into consideration long-term interests, such as the shadow value of departmental good will with clientele, local organizers and extension administration, as well as the short-term benefit of increased revenues.
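The trade-off can be made concrete with the figures reported in this section. The Python sketch below adds each moderate fee increase to the current $3 fee and expresses the associated revenue as a share of the revenue at the revenue-maximizing fee. The $9,000 maximum for the more conservative case is derived here as $450 × 20 rather than quoted in the text, and the variable names are illustrative.

```python
CURRENT_FEE = 3.00  # dollars per participant collected for handouts

scenarios = {
    # "max_revenue" for the less conservative case is the rounded
    # figure from the text; the more conservative one is derived
    # as 450 * 20 (an assumption of this sketch).
    "less conservative": {"increase": 7.90, "revenue": 5_083, "max_revenue": 14_000},
    "more conservative": {"increase": 7.50, "revenue": 3_717, "max_revenue": 450 * 20},
}

summary = {}
for label, s in scenarios.items():
    total_fee = round(CURRENT_FEE + s["increase"], 2)
    revenue_share = s["revenue"] / s["max_revenue"]
    summary[label] = (total_fee, revenue_share)
    print(f"{label}: total fee ${total_fee:.2f}, "
          f"{revenue_share:.0%} of revenue at the revenue-maximizing fee")
```

On these figures, total fees of $10.90 and $10.50 would bring in roughly 36% and 41%, respectively, of the revenue forecast at the revenue-maximizing fee.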

Visual inspection of figures 1 and 2 suggests a natural segmentation of the current program's clientele may exist between a large segment of participants who hold lower and moderate values for the program and a smaller number of participants with very large values. As a result, the department might differentiate the program into two types of meetings: one focused on the needs of those with smaller WTP for the program and another on those with very high values. Each program could cater to the specific qualities demanded by each segment and be priced in a manner commensurate with the delivered quality levels. Such a strategy could benefit participants because they would attend meetings better customized to their informational needs, while the department would benefit because charging prices commensurate with quality could generate more revenue to sustain programming efforts. The optimal design and quality changes for such a differentiation strategy are not obvious from the current data, however; further research that specifically explores proposed changes in format and quality would be necessary.

Summary, Conclusions, and Future Directions

As land-grant extension services continue to experience declining public budgets, they face increasingly difficult decisions regarding allocation of public resources to educational programming. To support some public programs, extension administrators are openly considering raising fees on programs that can effectively support higher prices. As extension administrators and department chairs work through defining the programs important to support with public funds and those that could potentially raise revenues through higher fees, we suggest that they must carefully consider benefits and costs. This paper develops and implements methods to estimate the demand for a specific extension program and uses the resulting demand function for benefit-cost analysis. Such information can provide useful direction to administrators who must make difficult financial decisions.

This paper takes a first step in applying economic methodologies to derive values for traditional extension programs. For the program we consider, average WTP for participants above the travel and time costs of attending is $77.36. Individuals with larger operations, as expected, are willing to pay more, as are individuals who have access to the internet and those who have experience with the program from previous years. When aggregated across all attendees, annual private benefits of the program range from $45,127 (most conservative) to $73,362 (least conservative). These private benefits exceed the costs of producing the program, with benefit-cost ratios ranging from 1.07 to 1.74. Furthermore, others who receive this information via alternative sources (e.g., press accounts of the programs or discussions with neighbors who attended) may also hold positive values for the program, which would further raise the benefit-cost ratios.

In addition to considering the existing program, the data allow us to consider how departments may alter their pricing structure to generate more revenue. Our results suggest that there is a delicate balance between attendance and fees; specifically, higher fees are predicted to have a relatively large impact on attendance. Revenue-maximizing fees approach or exceed $500 per participant; however, these fees would have a dramatic effect on total attendance. Smaller fee increases of $7–$8 per program would reduce attendance by 18–38%. We believe these fee increases are more realistic, given the public good nature of extension.

Although the methods and results presented in this paper show how techniques common in the nonmarket valuation arena can be applied to extension program valuation, there are several limitations with the methods and data. First, although the contingent-valuation questions are similar to those used elsewhere, crafting of such questions requires significantly more advance testing (Haab and McConnell) than we were able to accomplish. Second, the questionnaire we used focused on evaluating speakers rather than valuing the program, so we were unable to ask the full range of questions that would help us more accurately calibrate our models. In addition, we did not obtain travel cost data and contingent-valuation data on the same survey. Future surveys should gather both pieces of data from the same respondents.

Third, the benefit-cost analysis conducted above is limited because we have not also considered the value of faculty and staff applying their time and expertise to alternative programs that might have even higher value (i.e., we have not addressed the opportunity costs). While important, this does not necessarily detract from our results. Alternative programs that the faculty and staff conduct could also be estimated with similar methods to determine these opportunity costs. Then, program administrators could compare benefit-cost ratios of the potential, competing uses of scarce personnel and other resources.

Fourth, it is important to recognize that attempts to extract revenue from those who formerly received programming for a nominal fee or for free are likely to be contentious. Allocating scarce resources is always a contentious issue, but prioritizing resources among programs can be aided by objective pricing techniques based on user (and possibly even nonuser) values for the programs.

The evolution and survival of the extension institution will require better knowledge of its clientele's values for individual programs and a better understanding of how the application of user fees might affect not only the amount of revenue generated but also the quantity and composition of the audience that attends. Land-grant extension services will continually have to reconcile market signals concerning the full value of the information and training they provide with the traditional social mandate of free provision.

In order to fully explore the implementation of differentiated extension programs that target segments of existing extension clientele or target audiences not traditionally associated with extension, additional research methods are needed. For example, the use of focus groups (Greenbaum) with different segments of extension clientele might be a wise first step before considering any major changes in extension programming. Such structured dialogues can help identify the needs and concerns of different user segments (e.g., low resource, commercial, nontraditional), customize and refine quantitative research instruments, and identify segments that may be disenfranchised or offended by changes to existing programs.

The qualitative results gleaned from focus groups can then be used to formulate a plan for detailed quantitative research, perhaps in the form of conjoint analysis (Louviere). This stated-preference technique, commonly used to develop consumer products, can be customized to efficiently explore how individuals trade off a number of extension programming attributes (program quality, time, location, amenities) against registration fees.

Acknowledgments

The authors acknowledge support from the Ohio Agricultural Research and Development Center.