Artificial intelligence and upper gastrointestinal endoscopy: Current status and future perspective

Abstract

With recent breakthroughs in artificial intelligence, computer-aided diagnosis (CAD) for upper gastrointestinal endoscopy is gaining increasing attention. Main research focuses in this field include automated identification of dysplasia in Barrett's esophagus and detection of early gastric cancers. By helping endoscopists avoid missing and mischaracterizing neoplastic change in both the esophagus and the stomach, these technologies potentially contribute to solving current limitations of gastroscopy. Currently, optical diagnosis of early-stage dysplasia related to Barrett's esophagus can be precisely achieved only by endoscopists proficient in advanced endoscopic imaging, and the false-negative rate for detecting gastric cancer is approximately 10%. Ideally, these novel technologies should work during real-time gastroscopy to provide on-site decision support for endoscopists regardless of their skill; however, previous studies of these topics remain ex vivo and experimental in design. Therefore, the feasibility, effectiveness, and safety of CAD for upper gastrointestinal endoscopy in clinical practice remain unknown, although a considerable number of pilot studies have been conducted by both engineers and medical doctors with excellent results. This review summarizes current publications relating to CAD for upper gastrointestinal endoscopy from the perspective of endoscopists and aims to indicate what is required for future research and implementation in clinical practice.

Introduction

As deep learning (DL) technology has rapidly gained attention as an optimal machine learning method, the application of artificial intelligence (AI) in medicine has been enthusiastically explored.1-4 In the field of upper gastrointestinal endoscopy, AI is expected to provide the solution to clinical hurdles that are believed difficult to overcome with currently available imaging technology. Currently, optical diagnosis of early dysplasia relating to Barrett's esophagus (BE) can be precisely done only by experts; thus, current guidelines recommend endoscopic surveillance in patients with BE with random four-quadrant biopsies obtained every 1–2 cm to detect dysplasia.5 In addition, regarding detection of gastric cancers, 10% of upper gastrointestinal cancers are missed at endoscopy.6

To overcome these limitations, computer-aided diagnosis (CAD) is expected to help endoscopists improve detection and classification of gastrointestinal disease.7 To date, there have been several experimental studies by engineers and some preclinical studies conducted mainly by physicians. The studies focus on four topics: (i) identification of dysplasia in BE; (ii) identification of esophageal squamous cell carcinoma (SCC); (iii) detection of gastric cancers; and (iv) recognition of Helicobacter pylori (HP) infection.

A qualitative review of the literature on CAD for gastroscopy identified 29 relevant studies (16 were physician-initiated and 13 were engineer-initiated). In the present review, we introduce the current status of this research area by describing 16 physician-initiated studies (Table 1) and discuss future perspectives.

| Reference | Year | Target disease | Endoscopic modality | Study design | Main study aim | Subjects for validation |
|---|---|---|---|---|---|---|
| van der Sommen et al.8 | 2016 | Barrett's esophagus | Conventional endoscopy | Retrospective | Detection of neoplasia | 44 patients |
| Swager et al.9 | 2017 | Barrett's esophagus | VLE | Retrospective | Detection of neoplasia | 19 patients |
| Kodashima et al.10 | 2007 | Esophageal SCC | Endocytoscopy | Retrospective | Differentiation of cancer from normal tissues | 10 patients |
| Shin et al.11 | 2015 | Esophageal SCC | HRME | Retrospective | Differentiation of neoplasia from non-neoplasia | 78 patients |
| Quang et al.12 | 2016 | Esophageal SCC | HRME | Retrospective | Differentiation of neoplasia from non-neoplasia | 3 patients |
| Horie et al.13 | 2018 | Esophageal SCC | Conventional endoscopy | Retrospective | Detection of cancer | 97 patients |
| Kubota et al.14 | 2012 | Gastric cancer | Conventional endoscopy | Retrospective | Prediction of invasion depth | 344 patients |
| Miyaki et al.15 | 2013 | Gastric cancer | Magnified FICE | Retrospective | Differentiation of cancerous areas from non-cancerous areas | 46 patients |
| Miyaki et al.16 | 2015 | Gastric cancer | Magnified BLI | Retrospective | Differentiation of cancerous areas from non-cancerous areas | 95 patients |
| Kanesaka et al.17 | 2018 | Gastric cancer | Magnified NBI | Retrospective | Delineation of cancerous areas | 81 images |
| Hirasawa et al.18 | 2018 | Gastric cancer | Conventional endoscopy | Retrospective | Detection of cancer | 69 patients |
| Zhu et al.19 | 2018 | Gastric cancer | Conventional endoscopy | Retrospective | Prediction of invasion depth | 203 lesions |
| Huang et al.20 | 2004 | H. pylori | Conventional endoscopy | Post-hoc analysis of prospectively obtained data | Prediction of H. pylori infection | 74 patients |
| Shichijo et al.21 | 2017 | H. pylori | Conventional endoscopy | Retrospective | Prediction of H. pylori infection | 397 patients |
| Itoh et al.22 | 2018 | H. pylori | Conventional endoscopy | Retrospective | Prediction of H. pylori infection | 30 images |
| Nakashima et al.23 | 2018 | H. pylori | Conventional endoscopy with/without BLI and LCI | Post-hoc analysis of prospectively obtained data | Prediction of H. pylori infection | 60 patients |

- BLI, blue laser imaging; FICE, flexible spectral imaging color enhancement; HRME, high-resolution microendoscopy; LCI, linked color imaging; NBI, narrow band imaging; SCC, squamous cell carcinoma; VLE, volumetric laser endomicroscopy; WCE, wireless capsule endoscopy.

What Is Deep Learning?

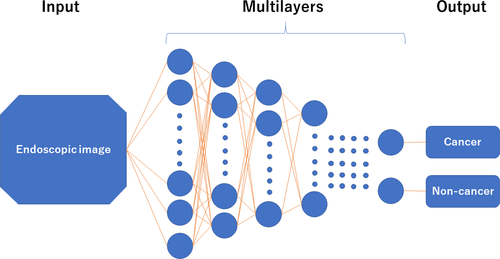

Artificial intelligence that adopts machine learning methods is classified into roughly two categories: hand-crafted algorithms (conventional) and DL algorithms (recently developed). In a hand-crafted algorithm, researchers manually specify the potential features (e.g. edge, size, color and surface pattern) of the images based on clinical knowledge, whereas a DL algorithm autonomously extracts and learns discriminative features of the image. Technically, DL is capable of approximating complex information by using a multilayered system (e.g. convolutional neural networks [CNN]) in which neural layers only connect to the next layer (Fig. 1). Both methods have pros and cons; DL usually (not always24) outperforms the hand-crafted algorithm; however, it requires a far greater amount of learning material. One limitation of DL is its black-box nature; the DL algorithm cannot explain the reasoning behind its machine-generated decision, which can confuse endoscopists who are familiar with diagnostic criteria that have been accumulated by many endoscopists. A new research area, “interpretable DL”, is now attracting attention because it tries to show the reasons for decisions made by DL.25
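The distinction between the two categories can be illustrated with a minimal sketch. The function name `cross_correlate_2d` and the toy image are assumptions made for illustration only; none of the reviewed systems is reproduced here. In a hand-crafted approach the kernel below is fixed by the researcher (here, a simple edge detector), whereas in a CNN the same sliding-window operation is applied with kernel weights that are learned from training images.

```python
def cross_correlate_2d(image, kernel):
    """Slide a kernel over an image (no padding) and return the response map.

    This is the sliding-window operation used in convolutional layers;
    deep learning frameworks implement it as cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 2 x 4 grayscale patch with a dark-to-bright vertical edge.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-crafted feature: a fixed horizontal-gradient kernel chosen by a human.
edge_kernel = [[-1, 1]]

response = cross_correlate_2d(patch, edge_kernel)
print(response)  # [[0, 1, 0], [0, 1, 0]]
```

The response is non-zero only where the edge lies. A CNN would start from arbitrary kernel values and adjust them during training, which is why it needs far more learning material than a hand-crafted pipeline.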

Esophagus

Identification of dysplasia in BE

Barrett's esophagus is a risk factor for the development of esophageal adenocarcinoma, and automated identification of dysplastic change in BE is one of the hottest research topics in the field of CAD for gastroscopy. This topic is of special interest because it potentially helps endoscopists carry out “high-accuracy” targeted biopsies that can eliminate the need for random biopsies, which are considered labor- and time-intensive with a relatively low per-lesion sensitivity of 64% for detection of dysplasia.26-28 The American Society for Gastrointestinal Endoscopy recently endorsed the use of advanced endoscopic modalities to carry out targeted biopsy versus random biopsy under specific conditions.5 For this purpose, the Society set the performance thresholds for optical diagnosis as a per-patient sensitivity of 90% and a negative predictive value (NPV) of 98% for detecting high-grade dysplasia and esophageal adenocarcinoma, and a specificity of 80%.5 However, these thresholds can be met by experts only. Therefore, a decision-supporting tool is required for non-expert endoscopists to implement optical diagnosis in clinical practice.
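As a minimal sketch of how these thresholds could be checked against a validation result, the following assumes per-patient counts of true/false positives and negatives are available. The class name `Counts`, the function name `meets_thresholds`, and the example counts are hypothetical; only the three threshold values come from the text above.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Per-patient confusion-matrix counts (hypothetical container)."""
    tp: int  # neoplasia present, flagged by optical diagnosis
    fp: int  # neoplasia absent, flagged
    tn: int  # neoplasia absent, not flagged
    fn: int  # neoplasia present, not flagged


def meets_thresholds(c, min_sens=0.90, min_npv=0.98, min_spec=0.80):
    """Return True if sensitivity, NPV, and specificity all reach the thresholds.

    Assumes every denominator is non-zero, i.e. the validation set
    contains both diseased and non-diseased patients.
    """
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    npv = c.tn / (c.tn + c.fn)
    return sensitivity >= min_sens and npv >= min_npv and specificity >= min_spec


# Invented example: 20 patients with neoplasia, 180 without.
example = Counts(tp=18, fp=20, tn=160, fn=2)
print(meets_thresholds(example))  # True
```

Note that NPV depends on disease prevalence in the validation cohort, so the same system can pass in one population and fail in another.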

van der Sommen et al.8 developed an automated detection system for early neoplasia in BE by adopting algorithms based on specific texture, color filters, and machine learning for conventional endoscopic images. The authors evaluated their algorithm using 100 images from 44 patients with BE. The system identified early neoplastic lesions on a per-image analysis with a sensitivity and specificity of 83%.

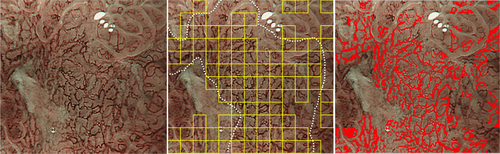

The same study group subsequently reported the application of AI technology for volumetric laser endomicroscopy (VLE) in 2017.9 VLE provides a near-microscopic resolution scan of esophageal wall layers up to 3 mm deep based on optical coherence tomography technology, allowing improved detection of early neoplasia in BE. The authors focused on clinically inspired features for machine learning in their AI model (Fig. 2). According to ex vivo cross-validation of the proposed AI model using 60 VLE images, results showed a sensitivity and specificity of 90% and 93%, respectively.

Identification of SCC

Esophageal cancer is the sixth leading cause of cancer death worldwide. Most deaths occur in developing countries, where nearly all cases are esophageal SCC, because of late-stage diagnosis.12, 29 Lugol's chromoendoscopy is currently considered the gold-standard screening method to identify SCC during gastroscopy; however, its specificity is low (approximately 70%) whereas its sensitivity is considered >90%. This relatively lower specificity is mainly because of false-positive findings of inflammatory lesions that are difficult to endoscopically differentiate from neoplastic change. This low specificity could be compensated for by using “pink color sign” assessment in a Lugol-voiding area, but an additional few minutes are required. Moreover, heartburn, severe discomfort, and the risk of allergic reaction caused by iodine staining make it problematic as a means of screening.30 Narrow band imaging (NBI; Olympus Corp., Tokyo, Japan) is considered an attractive, non-invasive option to yield high accuracy, but its specificity was limited to roughly 50% in a randomized controlled trial.31 To overcome these drawbacks, more advanced endoscopic imaging techniques have been developed, including confocal laser endomicroscopy (CLE; Mauna Kea Corp., Paris, France) and endocytoscopy (Olympus Corp.).32, 33 However, interpreting these microscopic images usually requires expertise and intensive training, which hinders generalization of the technologies to clinical practice. Therefore, several research groups developed CAD systems that allow automated image interpretation.

Kodashima et al.10 developed a computer system to simplify differentiating malignant and normal tissues in endocytoscopic images of esophageal specimens obtained from 10 patients. Endocytoscopes allow in vivo microscopic visualization of the mucosal surface based on the principle of contact microscopy. Using endocytoscopes, morphologies and arrangements of nuclei can be evaluated when stained with methylene blue. Computer-based analysis showed a significant difference in the mean ratio of total nuclei to the entire selected field, 6.4% ± 1.9% in normal tissues and 25.3% ± 3.8% in malignant samples (P < 0.001), which enabled endoscopic differentiation of normal and malignant tissues.
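The quantity compared in that study, the ratio of nuclear area to the selected field, can be sketched as follows. This is an illustration only: it assumes the nuclei have already been segmented into a binary mask (how the original software did this is not described here), and the function name and the toy mask are hypothetical.

```python
def nuclear_area_ratio(mask):
    """Percentage of pixels in the selected field that belong to nuclei.

    mask: list of rows, each a list of 0/1 values,
          where 1 marks a pixel classified as stained nucleus.
    """
    total_pixels = sum(len(row) for row in mask)
    nuclear_pixels = sum(sum(row) for row in mask)
    return 100.0 * nuclear_pixels / total_pixels


# Invented 4 x 5 field in which 5 of 20 pixels are nuclear.
field = [
    [1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 1],
]
print(nuclear_area_ratio(field))  # 25.0
```

A value near 25% would fall in the range reported for malignant samples and a value near 6% in the range reported for normal tissue; no decision cutoff is given in the text, so none is assumed here.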

Shin et al.11 developed a quantitative image analysis algorithm for identifying squamous dysplasia from non-neoplastic mucosa. This software was designed to analyze the segmented nuclear and cytoplasmic regions of the acquired images using high-resolution microendoscopy (HRME), which provides similar images to CLE, but costs significantly less. The software was developed and tested using data obtained from 99 patients and finally validated using 167 biopsied sites from 78 patients. The software's sensitivity and specificity for identifying neoplastic change were 87% and 97%, respectively, and the authors concluded that HRME with a quantitative image analysis algorithm may overcome problems of training and expertise in low-resource settings.

The same research group12 subsequently improved the algorithm to allow real-time analysis and implemented the algorithm in a tablet-interfaced HRME, which demonstrated comparable imaging performance but at lower cost compared with laptop-interfaced HRME systems. Evaluating the same 167 biopsied sites used in the previous study, the algorithm identified neoplasia with a sensitivity and specificity of 95% and 91%, respectively. In addition, this novel software was evaluated in vivo for three patients, providing 100% consistency with histopathology.

Despite the excellent performance of the three proposed models, clinical implementation of the software has not occurred, partly because of limited availability of endocytoscopes and HRME. In this regard, Horie et al. recently published notable results of using AI designed for conventional endoscopy.13 Their constructed model based on CNN, for which 8428 endoscopic images of esophageal cancer from 384 patients were used for machine learning, provided 98% sensitivity in the detection of cancerous lesions. The positive predictive value (PPV) was limited to 40% with a considerable number of false-positive images; however, the NPV of the developed model reached 95%. The authors concluded that the drawback of the system, namely the low PPV, will decrease as the amount of learning material incrementally increases.

Stomach

Identification of gastric cancer

Gastric cancer is a major cause of cancer-related death worldwide, and gastroscopy is considered useful in detecting early-stage gastric cancer. However, two clinical concerns remain in early identification of gastric cancer. The first is difficulty in detection. Early gastric cancers usually show a subtle elevation or depression with faint redness, which hinders endoscopists’ accurate recognition. The second problem is predicting the invasion depth to the gastric wall. Ideally, differentiated-type, intramucosal gastric cancers (M) or those invading the shallow submucosal layer (≤500 μm: SM1) should be resected endoscopically, whereas those invading deeply into the submucosa (>500 μm: SM2) should be resected surgically because of the substantial risk of nodal and distant metastasis. However, discrimination of M/SM1 from SM2 is not necessarily easy. CAD for early gastric cancers has been investigated to overcome these problems, mainly by Asian researchers.

Magnifying endoscopy combined with narrow spectrum imaging such as NBI, flexible spectral imaging color enhancement (FICE; Fujifilm Corp., Tokyo, Japan), and blue laser imaging (BLI; Fujifilm Corp.) is clinically useful in discriminating cancerous areas from non-cancerous areas in the stomach.34-40 However, this optical diagnosis requires substantial expertise and experience, which prevents its general use in gastroscopy. Miyaki et al.15 developed software to automatically differentiate cancerous from non-cancerous areas. The authors applied a bag-of-features framework with densely sampled scale-invariant feature-transform descriptors to magnifying FICE images and validated the model using 46 intramucosal gastric cancers. The CAD system yielded a detection accuracy of 86%, sensitivity of 85% and specificity of 87% for a diagnosis of cancer.

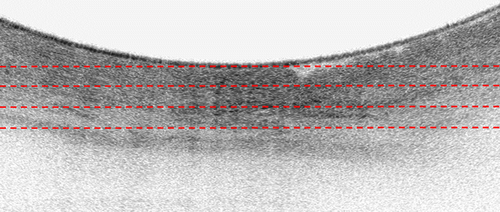

The same group16 applied similar technology to magnifying BLI images in 2015, evaluating the CAD system for use with BLI to identify early gastric cancer. The authors prepared 100 early gastric cancers, 40 flat or slightly depressed, small, reddened lesions, and surrounding tissues to quantitatively validate the CAD model. Results showed an average output value from CAD of 0.846 ± 0.220 for cancerous lesions, 0.381 ± 0.349 for reddened lesions, and 0.219 ± 0.277 for surrounding tissue, with the CAD output value for cancerous lesions being significantly greater than that for other categories (Fig. 3). Based on these findings, the research group concluded that automated quantitative analysis could be used to identify early gastric cancer.

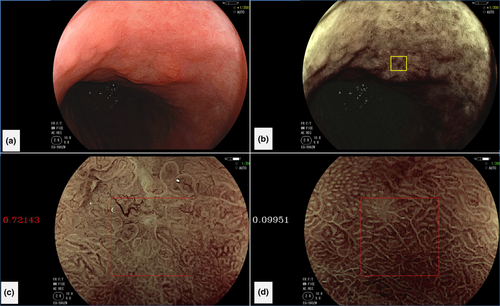

Another study by Kanesaka et al.17 advanced the field. The authors developed software that not only identified gastric cancer but also delineated the border between cancerous and non-cancerous areas (Fig. 4). This CAD algorithm was designed to analyze gray-level co-occurrence matrix features of partitioned pixel slices of magnifying NBI images, and a support vector machine was used as the machine learning method. A total of 126 magnifying NBI images were used as training material. The authors validated the constructed model using 61 magnifying NBI images of early gastric cancers and 20 magnifying NBI images of non-cancerous areas as a test set. The model's diagnostic performance to differentiate cancer showed 97% sensitivity and 95% specificity. The performance for area concordance showed 66% sensitivity and 81% specificity.
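To give a sense of what gray-level co-occurrence matrix (GLCM) features are, the sketch below computes a normalized GLCM for a single pixel offset and derives two common texture statistics from it. The offset, the number of gray levels, the choice of statistics, and all function names are assumptions for illustration; the exact configuration used in the study is not stated here, and the support vector machine that would consume such features is omitted.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized, non-symmetric GLCM for one pixel offset (dx, dy).

    image: list of rows of integer gray levels in range(levels).
    Entry [i][j] is the fraction of pixel pairs in which a pixel of
    level i has a neighbor of level j at the given offset.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """Large when neighboring pixels differ strongly in gray level."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(p):
    """Large when neighboring pixels have similar gray levels."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))


# Invented 2 x 3 patch quantized to two gray levels.
patch = [
    [0, 0, 1],
    [0, 0, 1],
]
p = glcm(patch, levels=2)
print(p)               # [[0.5, 0.5], [0.0, 0.0]]
print(contrast(p))     # 0.5
print(homogeneity(p))  # 0.75
```

In a pipeline of this kind, each partitioned slice of an image would yield a short feature vector of such statistics, and the classifier would label the slice as cancerous or non-cancerous, which is what makes border delineation possible.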

In 2018, Hirasawa et al.18 first reported automated detection of early gastric cancer using DL technology. The authors’ achievement was distinct in that they used conventional endoscopy images rather than magnifying NBI, FICE, or BLI as a target of their algorithm. The constructed CNN model was based on single-shot multibox detector architecture. The authors trained the network using 13 584 endoscopic images and evaluated its performance using a test set of 2296 stomach images collected from 69 consecutive patients with 77 gastric cancer lesions. The model required 47 s to analyze 2296 test images and correctly diagnosed 71/77 gastric cancer lesions with an overall sensitivity of 92%. However, 161 non-cancerous lesions were detected as gastric cancer, resulting in a PPV of 31%. This constructed CNN for detecting gastric cancer processed numerous stored endoscopic images in a very short time with a clinically relevant diagnostic ability and potentially works during real-time gastroscopy (Video S1).
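The reported figures can be reproduced with simple arithmetic. The sketch below assumes, as the text implies, that sensitivity and PPV are both lesion-based and that each of the 161 false-positive detections counts once; the function name is hypothetical.

```python
def detection_summary(detected, total_lesions, false_positives, n_images, seconds):
    """Lesion-based sensitivity and PPV, plus image throughput."""
    sensitivity = detected / total_lesions
    ppv = detected / (detected + false_positives)
    images_per_second = n_images / seconds
    return sensitivity, ppv, images_per_second


sens, ppv, rate = detection_summary(
    detected=71, total_lesions=77, false_positives=161,
    n_images=2296, seconds=47,
)
print(f"sensitivity {sens:.1%}, PPV {ppv:.1%}, {rate:.1f} images/s")
# sensitivity 92.2%, PPV 30.6%, 48.9 images/s
```

The throughput of roughly 49 images per second is what supports the suggestion that the model could run during real-time gastroscopy.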

Regarding depth analysis of wall invasion of gastric cancer, Kubota et al.14 developed a CAD system using a back-propagation neural network algorithm on endoscopic images from 344 gastric cancer patients. Their cross-validation evaluation showed diagnostic accuracies of 77%, 49%, 51%, and 55% for T1, T2, T3, and T4 stages, respectively. T1 subanalysis (intramucosal cancer [T1a] and submucosally invasive cancer [T1b]) resulted in accuracy of 69%.

Zhu et al.19 advanced this research area by developing the notable DL algorithm that enabled identification of SM2 from M/SM1. A total of 790 conventional endoscopic images of gastric cancers were used for machine learning, while an additional 203 images, which were completely independent from the learning material, were used as a test set. The AI model showed 76% sensitivity and 96% specificity in identifying “SM2 or deeper” cancers, resulting in significantly higher sensitivity and specificity than those achieved with endoscopists’ visual inspection. The high specificity of 96% could minimize overdiagnosis of invasion, which would contribute to a reduction in unnecessary surgeries for M/SM1 cancers.

Identification of Helicobacter pylori infection

Helicobacter pylori (HP)-associated chronic gastritis can cause mucosal atrophy and intestinal metaplasia, both of which increase the risk of gastric cancer.22, 41, 42 Gastroscopic examination is very helpful to detect HP infection at an early stage. Various endoscopic features such as mucosal atrophy, mucosal swelling, and lack of a regular arrangement of collecting venules are findings suspicious for HP infection. However, diagnostic accuracy in diagnosing HP infection is not necessarily high. Watanabe et al.43 reported endoscopic sensitivity and specificity for HP infection of 62% and 89%, respectively. To prevent failure to detect HP-infected patients during gastroscopy, AI is an anticipated decision-support tool, especially for non-expert endoscopists.

In 2004, Huang et al.20 developed a CAD model using refined feature selection with neural network techniques to predict HP-related gastric histological features of conventional endoscopic images. The authors trained the model using endoscopic images from 30 patients and used image parameters obtained from 74 patients to construct the prediction model for HP infection. The model had a sensitivity of 85% and a specificity of 91% for detecting HP infection.

In 2017, Shichijo et al.21 developed a 22-layer deep CNN to predict HP infection during ongoing gastroscopy. A dataset of 32 208 images from 735 HP-positive patients and 1015 HP-negative patients was used to construct the model. A separate test data set (11 481 images from 397 patients) was retrospectively and independently evaluated by the CAD system and by 23 endoscopists. The sensitivity, specificity, and diagnostic time for CAD were 89%, 87%, and 194 s, respectively. The corresponding values for the 23 endoscopists were 79%, 83%, and 230 ± 65 min, respectively. The model had a significantly higher accuracy than that of the endoscopists.

Another study group22 developed a CAD model based on DL technology in 2018. The authors prepared 179 gastroscopic images obtained from 139 patients (65 were HP-positive and 74 were HP-negative) for the study. Among the images, 149 were used as training images for a convolutional neural network, and the remaining 30 (15 from HP-negative patients and 15 from HP-positive patients) were used as test images. Sensitivity and specificity of the CAD model to detect HP infection were 87% and 87%, respectively, and the area under the receiver operating characteristic curve (AUC) was 0.956.

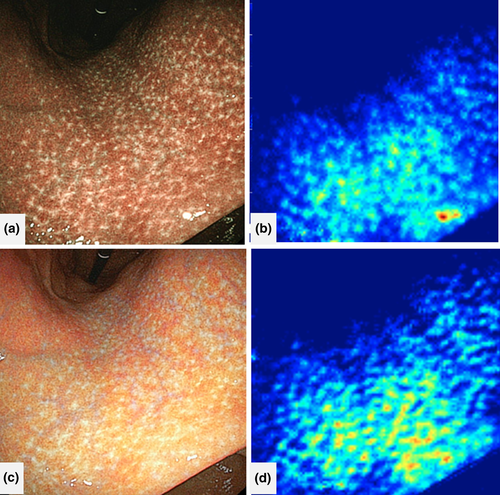

Following this study by Itoh et al., Nakashima et al.23 suggested image-enhanced endoscopy rather than conventional endoscopy as a promising tool to predict HP infection using AI. Nakashima et al. designed a CAD system based on a DL algorithm using endoscopic images from 162 patients as learning material and those from 60 patients as a test data set. From each patient, three white-light images (WLI), three BLI-bright images, and three linked color images (LCI; Fujifilm Corp.) were captured. AUC on receiver operating characteristics analysis was 0.66 for white-light endoscopy. In contrast, the AUCs of BLI-bright and LCI were 0.96 and 0.95, respectively, both significantly larger than that for WLI (Fig. 5). The study of Nakashima et al. is notable in that the authors collected all data prospectively, which can contribute to the reliability of results; however, the authors did not carry out in vivo, real-time analysis using CAD.
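For readers less familiar with the AUC, it can be understood as the probability that a randomly chosen HP-positive case receives a higher CAD score than a randomly chosen HP-negative case. The sketch below computes it directly from that definition; the function name and the scores are invented for illustration and are not data from either study.

```python
def auc_from_scores(positive_scores, negative_scores):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    Ties count as half a correct ranking (Mann-Whitney formulation).
    """
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positive_scores) * len(negative_scores))


# Invented CAD output values for three infected and three uninfected patients.
infected = [0.9, 0.8, 0.4]
uninfected = [0.7, 0.3, 0.2]
print(round(auc_from_scores(infected, uninfected), 3))  # 0.889
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which puts the reported 0.66 for WLI and 0.95 to 0.96 for BLI-bright and LCI in context.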

Future Directions

Technical limitations of AI: What causes false-positive/negative findings?

The most important outcome measure of both automated detection and characterization of gastrointestinal neoplasia is sensitivity for neoplastic lesions because they usually require treatment whereas non-neoplastic polyps may be left in situ. Other crucial outcome measures include false-negative findings (i.e. neoplastic areas/lesions misdiagnosed as non-neoplastic) and false-positive findings (i.e. non-neoplastic areas/lesions misdiagnosed as neoplastic). Hirasawa et al.18 elaborated on the importance of these two types of measures; in their study, six of 77 cancers were missed, indicating that the lesion-based false-negative rate was 8%. Most of these missed cancers were minute (≤5 mm) with superficially depressed morphology, which were difficult to distinguish from gastritis even for experienced endoscopists. On the other hand, the false-positive findings included gastritis with changes in color tone or irregular mucosal surface and even normal anatomical structures of cardia, angulus, and pylorus. The main cause of the false-positive/negative findings might have been the limited number and quality of the learning material. Therefore, further accumulation of a variety of endoscopic images could decrease those false findings. Considering that collecting video-based images contributes to high accuracies in the field of CAD for colonoscopy,44, 45 adopting video-based images instead of static images as learning material may be a good option to reduce false-positive/negative findings in CAD for endoscopy. Video frames usually contain far more low-quality, “real-world” images that are seldom seen in captured static images.

What evidence is currently lacking?

Although energetic research in both medical and engineering institutions has been carried out worldwide, and good results have been reported in some preliminary studies, a crucial problem remains to be addressed: all previous studies were retrospective or post-hoc analyses of a limited number of test samples. Considering the future application of AI technology to gastroscopy practice, prospective trials using AI technology in real time are mandatory for several reasons.

First, the results of retrospective studies are likely to appear better than what is actually true because of selection bias. Second, retrospective studies cannot clarify how to manage unanalyzable/low-quality images, which are often seen in clinical practice (in some retrospective studies, low-quality endoscopic images were excluded from the analysis).

Difference of the research phase between CAD for colonoscopy and CAD for gastroscopy

A couple of CAD systems for colonoscopy have already been prospectively validated and data describing both good (efficacy) and bad (limitations) aspects of using CAD have been revealed.46-50 For example, Mori et al. conducted a large-scale prospective study including 791 patients for validation of their developed CAD model designed for endocytoscopy. They found their model provided 93.7% NPV for diminutive rectosigmoid adenomas even under the worst-case scenario. However, some limitations were observed; the researchers found that NPV for diminutive adenomas in the right-sided colon was limited to 60% under the worst-case scenario and approximately 30% of the captured images were unanalyzable for CAD because of their low quality.50

There seem to be several reasons for the existence of more prospective studies on CAD for colonoscopy than on CAD for gastroscopy.51 First, the prevalence of colorectal cancers is higher than that of esophageal cancer or gastric cancer, especially in the West; thus, CAD for colonoscopy tends to attract more interest from international research communities. Second, endoscopic images for machine learning of CAD are more easily collected in the colonoscopy field than in the gastroscopy field because of the large number of positive findings (i.e. neoplasms including cancers and adenomas) in colonoscopy. For example, a screening colonoscopy detects colorectal cancer in less than 1%, but adenomas in as many as 30%,52 whereas a screening gastroscopy detects gastric cancer in less than 1%53 with a similar number of adenomas. A higher proportion of positive findings usually reduces the amount of learning material required, specifically, the number of endoscopic images. In addition, CAD for identifying dysplasia in the esophagus and stomach might require far more learning material than CAD for colonoscopy; these types of dysplasia arise from an inflammatory background, which makes them challenging to detect, whereas colorectal polyps are not accompanied by surrounding inflammatory mucosa. Technically, more images are required for machine learning to identify lesions that are difficult to distinguish with the human eye.

Once high-volume and highly varied learning images are prepared for machine learning to optimize performance of the system, prospective evaluation of CAD for gastroscopy will be successfully realized.

What are the future directions?

Provided that high-quality evidence based on prospective evaluation of CAD for gastroscopy is secured, what roles will be expected for its use in clinical practice?

Computer-aided diagnosis for gastroscopy is basically expected to serve as a second observer during real-time gastroscopy, helping endoscopists detect more neoplasms. At the same time, it can be used for educational purposes to help train novice endoscopists because CAD is able to detect lesions that are often missed by inexperienced endoscopists. CAD can also notify trainees of the presence of such lesions, as senior endoscopists would do during fellowship training. In addition, CAD can be used for postprocedural assessment of completed gastroscopies. In several areas of Japan, a double-check after population-based screening gastroscopies is recommended, which is recognized as a big burden for endoscopists. CAD can be a good solution to lessen the endoscopists’ burden. Finally, CAD has the potential to explore uncultivated areas of clinical endoscopy, such as optical diagnosis of duodenal superficial tumors (e.g. determining adenoma vs cancer). Accurate optical diagnosis of such lesions is currently considered challenging even for experienced endoscopists;54 however, CAD will be a game changer in these fields if its high performance is realized.

Conclusion

Computer-aided diagnosis for upper gastrointestinal endoscopy is a rapidly growing research area being explored for use in assisting with the diagnosis of various diseases, specifically BE, esophageal SCC, gastric cancer, and HP infection. Although as of this writing, this research field has been evaluated only experimentally, the advantage of CAD in clinical gastroscopy can be anticipated given the high performance provided by the state-of-the-art technology of DL. With larger learning samples and well-designed prospective trials, this novel technology for upper gastrointestinal endoscopy could be implemented in clinical practice in the near future.

Acknowledgments

This work was supported by Grants-in-Aid for Scientific Research (Number 17H05305) from the Japan Society for the Promotion of Science. We express our deepest appreciation to Drs Toshiaki Hirasawa, Tomohiro Tada, Hirotaka Nakashima, Hiroshi Kawahira, Shigeto Yoshida, Noriya Uedo, Takashi Kanesaka, John Tsung-Chun Lee, Maarten Struyvenberg, and Jacques Bergman for providing the video and figures used in this article. We also thank Jane Charbonneau, DVM, from Edanz Group (www.edanzediting.com/ac) for editing a draft of this manuscript.

Conflicts of Interest

YM, SK, MM are inventors of the patent “Image-processing instrument and method” (No. 6059271 in Japan) with inventor's premiums paid by Showa University. YM, SK, MM received speaking honoraria from Olympus Corporation. KM received research funding from Cybernet Corporation. None of the other authors declares conflicts of interest relating to the present article.