Deep learning detects subtle facial expressions in a multilevel society primate

Abstract

Facial expressions in nonhuman primates are complex processes involving psychological, emotional, and physiological factors, and may use subtle signals to communicate significant information. However, uncertainty surrounds the functional significance of subtle facial expressions in animals. Using artificial intelligence (AI), this study found that nonhuman primates exhibit subtle facial expressions that are undetectable by human observers. We focused on the golden snub-nosed monkey (Rhinopithecus roxellana), a primate species with a multilevel society. We collected 3427 front-facing images of monkeys from 275 video clips captured in both wild and laboratory settings. Three deep learning models, EfficientNet, RepMLP, and Tokens-To-Token ViT, were used for AI recognition. For comparison with human performance, two groups of participants were recruited: one with prior animal observation experience and one without any such experience. Human observers detected the facial expressions with low accuracy (on average 32.1% for inexperienced and 45.0% for experienced observers, against a chance level of 33%). In contrast, the deep learning models achieved significantly higher accuracy, with the best-performing model reaching 94.5%. Our results provide evidence that golden snub-nosed monkeys exhibit subtle facial expressions. The results further our understanding of animal facial expressions and of how such modes of communication may contribute to the origin of complex primate social systems.

INTRODUCTION

Several mechanisms, such as acoustic, tactile, olfactory, and visual displays, enable animals to effectively convey signals to receivers (Smith 1977; Dawkins & Krebs 1978). Such communication is essential in mediating social interactions in animals, the complexities of which are strongly associated with social structures (Blumstein & Armitage 1997; Jackendoff 1999; McComb & Semple 2005; Freeberg 2006). Communication in animals involves an individual's motivation, intentions, demands, hints, and emotions, which are all required to coordinate social interactions and maintain social cohesion and stability (van Hooff 1967; Ekman 1997; Parr et al. 2002).

Among nonhuman primates (hereafter “primates”), facial expressions are thought to be adaptive visual signals in social contexts because they provide information about the sender's current condition and future behaviors (Andrew 1963; van Hooff 1967; Goosen & Kortmulder 1979). This enables information to be effectively conveyed to maintain stable and long-lasting relationships (Parr 2011). Among some primate species, such as chimpanzees, orangutans, gibbons, and macaques, facial expressions have been suggested as having a nonverbal communication function, analogous to those of human beings (Vick et al. 2007; Parr et al. 2010; Waller et al. 2012; Caeiro et al. 2013; Julle-Danière et al. 2015). For example, expressions such as the baring of teeth, pant-hooting, and screaming have been identified and classified as transmitting to other individuals different modes of information in chimpanzees (Parr & Waller 2006). In both Barbary and rhesus macaques, the facial expression of pulling the corners of the lips back and slightly upward has a similar communicative function to smiling in humans (Parr et al. 2010; Julle-Danière et al. 2015). These facial expressions have clear facial movements and have similarities to human facial expressions.

The Facial Action Coding System (FACS) was developed for humans and later modified and applied to evaluate facial expressions and emotions in primates. In FACS, facial movements are identified by numerical codes, called action units, to describe the range of observable facial expressions (Parr et al. 2010). This method has been widely used in studies of several primate species, such as macaques (Parr et al. 2010) and chimpanzees (Vick et al. 2007; Parr & Waller 2006). Based on FACS, several new methods to detect social information from facial expressions have recently been employed (Waller et al. 2020; Mielke et al. 2022; Rincon et al. 2023). However, FACS coding has often been done manually, which may introduce inaccuracy and subjectivity, is time-consuming, and requires observer experience and skill. Moreover, because FACS focuses on facial expressions produced by pronounced facial movements, previous research has predominantly examined facial expressions that are readily recognizable by human observers. This raises a question that remains unanswered: Do animals, especially primates, use subtle facial expressions that are imperceptible to human observers, enabling a form of micro-expression communication?

Following new technological developments, such as artificial intelligence (AI), image recognition based on deep learning techniques has been used for various applications, mostly in object classification and detection (Noor et al. 2020). Recent advances in computer vision have made accurate, fast, and robust measurements of animal behaviors a reality. For instance, deep learning was applied for individual recognition from facial images of chimpanzees (Schofield et al. 2019) and many other mammal species (Guo et al. 2020; Billah et al. 2022; Ahmad et al. 2023; Bergman et al. 2024). Capturing the postures of animals—pose estimation—has been rapidly advancing with new deep learning methods such as DeepLabCut (Mathis & Mathis 2020; Wiltshire et al. 2023). Hence, the recent advancement of deep learning in computer vision has the potential to help us classify subtle facial expressions in primates. The application of deep learning for facial expressions may have the advantages of increased speed, accuracy, and objectivity (Noor et al. 2020; Lencioni et al. 2021). Also, although humans cannot easily detect the subtle changes in some facial expressions, AI models may have the potential to detect and quantify subtle changes, which has been shown in the recognition of human micro-expressions (Xia et al. 2020). For example, an enriched long-term recurrent convolutional network can encode each micro-expression frame as a feature vector through a convolutional neural network (CNN) module and pass the feature vector through a long short-term memory module to predict the micro-expressions (Xu et al. 2017; Wang et al. 2018; Xia et al. 2020; Ben et al. 2021; Zhao et al. 2021b; Xie et al. 2022). Another spatio-temporal neural architecture search algorithm called AutoMER has also been shown to be efficient and effective in detecting and quantifying facial micro-expressions (Ben et al. 2021; Verma et al. 2022).

Here, we studied the golden snub-nosed monkey, a primate species well-known for its complex multilevel society (MLS) (Qi et al. 2014; Grueter et al. 2017; Fang et al. 2022). Such societies are social systems that include multiple layers of social entities, with at least two clear and consistent levels of organization (Grueter et al. 2012; Grueter et al. 2020). Social integration occurs between individuals and populations within these social layers (Schreier & Swedell 2012; Swedell & Plummer 2012). Primate MLSs are rare; in addition to humans, they have been only reported in papionin monkeys (e.g. geladas, Theropithecus gelada, hamadryas baboons, Papio hamadryas, and Guinea baboons, Papio papio) and colobine monkeys (e.g. snub-nosed monkeys, proboscis monkeys, Nasalis larvatus, and douc langurs, the genus Pygathrix) (Grueter et al. 2020). The core unit of the golden snub-nosed monkey MLS is the one-male unit, comprising a resident male, several breeding females, and their infant and juvenile offspring. Though core units or cliquishness typify many mammal societies, the subunits are socially cohesive in multilevel societies and form higher layers (Qi et al. 2014, 2017; Fang et al. 2022). Living in such a complex social system, individuals face the challenge of interacting with more than one hundred individuals, from intra-unit to inter-unit, and repeatedly interacting with the same individuals over time (Freeberg et al. 2012). Therefore, communication is essential for mediating social interactions (Sewall 2015), which ultimately triggers the need to express a wider range of emotional and motivational states (Peckre et al. 2019). In golden snub-nosed monkeys, previous studies using clear facial expressions, such as an open mouth and bared teeth, showed that this species can discriminate between different facial expression stimuli (Yang et al. 2013; Zhang et al. 2019; Zhao et al. 2021a). This indicates that facial expressions may be important in communication in golden snub-nosed monkeys. However, whether or not this species uses subtle facial micro-expressions remains to be investigated.

Here, we used deep learning models to investigate subtle facial expressions in golden snub-nosed monkeys. By presenting the subtle facial expressions of monkeys related to three different social contexts (affiliative behaviors, agonistic/submissive behaviors, and neutral behaviors), we compared the performance of deep learning models with human observers. We made two predictions: (i) monkeys make different subtle facial expressions according to different social contexts, and deep learning models can recognize and differentiate these expressions; (ii) humans are unable to detect some subtle facial expressions of monkeys. This study aims to uncover subtle facial expressions in these monkeys, enhancing our understanding of the intricate communication patterns within complex animal social systems.

MATERIALS AND METHODS

Study site

This study was conducted at two sites, one with wild and another with captive golden snub-nosed monkeys. For the wild monkeys, the study site was in the Dapingyu region of the Guanyinshan National Nature Reserve in the Qinling Mountains, central China (Wang et al. 2020; Wang et al. 2023). Since 2010, the monkeys have been habituated to food provisioning. The breeding band consisted of 70–95 individuals with 9–17 adult males (7+ years old), 19–34 adult females (5+ years old), 10–14 subadults (4–6 years old), and 19–21 juveniles (1–3 years old) (Zhang et al. 2006; Fang et al. 2022).

For the captive monkeys, the study site was the Shaanxi Wild Animal Rescue and Research Center, Louguantai, China (Chen et al. 2018). These captive monkeys consisted of 3 adult males, 6 adult females, and 15 juveniles, kept in four cages and fed three times daily, at 10 a.m., 2 p.m., and 4 p.m., respectively.

Data collection

For the wild monkeys, we used GoPro HERO10 cameras to record the monkeys by ad libitum video recording. For the captive monkeys, surveillance video cameras (BWT AIN03) were set in each cage for 24-h recording. All data were collected from February 25, 2022, to April 14, 2023.

For both sites, ad libitum sampling and all-occurrence recording were used to record behaviors. Following previous definitions of primate behaviors (Galinski & Barnwell 1995), the behaviors collected from each video clip were categorized into three groups: (i) affiliative behaviors, (ii) agonistic/submissive behaviors, and (iii) neutral behaviors.

Affiliative behaviors refer mainly to grooming (Wei et al. 2012). Grooming involves cleaning or maintaining one another's body function and hygiene. Grooming is a cooperative behavior and is important in establishing and maintaining relationships within primate groups (Dunbar 2010; Grueter et al. 2013). Also, grooming is considered to be the most common affiliative behavior among primates and facilitates the maintenance of group cohesion (Schino & Aureli 2008; Kaburu et al. 2019). Self-grooming is not considered an affiliative behavior in this study.

Agonistic behaviors consist of biting, fighting (for the aggressor), chasing, lunging, supplanting, and vocal threatening. Submissive behaviors consist of avoidance, crouching, and fleeing when agonistic behaviors occur.

Neutral behavior was exhibited when a monkey was in a daze and remaining still with both eyes open under a calm and peaceful atmosphere, with no other individuals within 2 m around, and no other behavior was exhibited for at least 1 min.

To reduce the influence of nearby monkeys' behaviors on the results, affiliative and neutral behaviors were collected under two criteria: (i) individuals were at least 2 m from any other monkey, and (ii) no agonistic/submissive behaviors were exhibited by any other monkeys in the group.

Video processing

When the focal monkeys exhibited affiliative, agonistic/submissive, or neutral behaviors in a video recording, we edited the recording from the time of beginning to the end of the behavior and split each recording into 3228 images with the ffmpeg tool (https://ffmpeg.org) for further labeling.

This resulted in a total of 217 video clips (23.5 ± 19.7 s, mean ± SD). Three frames per second were extracted from the video clips to generate images. These images contained the site background and time stamp, so we cropped the monkey faces using the Faster-RCNN model (Guo et al. 2020), which had previously been fine-tuned on facial images labeled with the annotation tool LabelMe (https://pypi.org/project/labelme). Each monkey's face was assigned a rectangular region and recorded for later analysis. Frontal faces were selected according to two criteria: (i) the facial picture must contain both eyes, the nose, and the mouth; and (ii) the face must deviate by less than 30 degrees from the exact frontal view along both the horizontal and vertical axes.
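The frame sampling and frontal-face criteria described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the output naming pattern, the function names, and the assumption that yaw and pitch angles are available from some head-pose estimator are all hypothetical. The only external detail relied on is ffmpeg's `fps` video filter, which resamples a clip to a fixed number of frames per second.

```python
from typing import List


def ffmpeg_extract_cmd(clip: str, out_dir: str, fps: int = 3) -> List[str]:
    """Build an ffmpeg command that samples `fps` frames per second from `clip`."""
    # Hypothetical naming scheme: frame_00001.jpg, frame_00002.jpg, ...
    pattern = f"{out_dir}/frame_%05d.jpg"
    return ["ffmpeg", "-i", clip, "-vf", f"fps={fps}", pattern]


def is_frontal(yaw_deg: float, pitch_deg: float, has_eyes_nose_mouth: bool,
               max_dev_deg: float = 30.0) -> bool:
    """Apply the two frontal-face criteria from the text.

    (i) both eyes, nose, and mouth are visible;
    (ii) the face deviates by less than 30 degrees horizontally (yaw)
         and vertically (pitch) from the exact frontal view.
    The angles are assumed inputs; the text does not say how they were measured.
    """
    within_limits = abs(yaw_deg) < max_dev_deg and abs(pitch_deg) < max_dev_deg
    return has_eyes_nose_mouth and within_limits


if __name__ == "__main__":
    # Print the command instead of running it, so no video file is needed.
    print(" ".join(ffmpeg_extract_cmd("clip_001.mp4", "frames")))
    print(is_frontal(yaw_deg=12.0, pitch_deg=-8.0, has_eyes_nose_mouth=True))
```

The command list can be passed to `subprocess.run` when ffmpeg is installed; keeping command construction separate from execution makes the sampling rate easy to check.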

All the images of the agonistic/submissive category come from the wild monkeys since the captive monkeys very rarely engage in fights. For the other two categories, images come from both captive and wild situations.

We collected 3427 images, which were randomly divided into a training set of 2618 images (1337 affiliative images, 248 agonistic/submissive images, and 1033 neutral images) and a test set of 809 images (521 affiliative images, 124 agonistic/submissive images, and 164 neutral images).
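A random division of this kind can be sketched as below. The text states only that the split was random, so the seed, the function name, and the use of integer indices in place of image files are assumptions made for illustration.

```python
import random
from typing import List, Sequence, Tuple


def random_split(items: Sequence, n_train: int, seed: int = 0) -> Tuple[List, List]:
    """Shuffle a copy of `items` and cut it into a training and a test part."""
    if not 0 <= n_train <= len(items):
        raise ValueError("n_train must be between 0 and len(items)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    return shuffled[:n_train], shuffled[n_train:]


# Integer indices stand in for image files; sizes follow the text (2618 + 809 = 3427).
train, test = random_split(range(3427), n_train=2618)

if __name__ == "__main__":
    print(len(train), len(test))  # 2618 809
```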

Facial expression recognition by humans

We used 87 participants (17–36 years old, 36 males and 51 females) to test for the ability to discriminate between different subtle facial expressions of affiliative, agonistic/submissive, and neutral behaviors made by the monkeys. We collected individual information about sex, age, observation experience (how much experience each observer had), and time of their last monkey observation. Participants consisted of two groups in terms of experience. Group A contained 32 researchers with experience in observing golden snub-nosed monkeys, while Group B consisted of 55 college students with no such experience.

We built a freely available online program to test if humans can detect the subtle facial expressions made by the monkeys. The program exhibited 60 images of monkeys one by one on a monitor. The program set 20 images for each category. All images were extracted from the training set randomly and shuffled across every test. Participants were tested individually. They were asked to discriminate whether the monkey was making an affiliative, agonistic/submissive, or neutral gesture in Group A. In Group B, since participants have no experience in animal observation and might not understand the meaning of affiliative, agonistic, and submissive, the participants were asked to discriminate whether the monkey's expression was friendly, tense, or neutral. Both groups were asked to choose from a drop-down list located under the image corresponding to the three categories within 10 s after the image showed up on the monitor. The program recorded 60 trials per participant and calculated the accuracy. There was no feedback from/to the participants: a trial ended and then another trial began without any feedback.
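The scoring of one participant can be sketched as follows. The accuracy calculation mirrors the description above; the exact binomial tail is an added illustration of how a single score might be compared with the 1/3 chance level, and is not an analysis reported by the authors. The example score of 27 correct answers is hypothetical.

```python
from math import comb
from typing import Sequence

CATEGORIES = ("affiliative", "agonistic/submissive", "neutral")


def accuracy(answers: Sequence[str], truths: Sequence[str]) -> float:
    """Fraction of trials in which the chosen category matches the true one."""
    if len(answers) != len(truths) or not truths:
        raise ValueError("answers and truths must be non-empty and of equal length")
    return sum(a == t for a, t in zip(answers, truths)) / len(truths)


def binom_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): probability of k or more hits by guessing."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    chance = 1 / len(CATEGORIES)   # three alternatives, so 1/3
    n_trials = 60                  # trials per participant, as in the text
    n_correct = 27                 # hypothetical participant score
    print(f"accuracy = {n_correct / n_trials:.3f}")
    print(f"P(>= {n_correct} correct by guessing) = {binom_tail(n_correct, n_trials, chance):.4f}")
```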

After collecting the data, we conducted a multiple regression analysis to determine which factors (sex, age, total observation time, and the time since the last observation) might influence accuracy. The analysis was conducted in SPSS V19.0 (SPSS Inc., Chicago) and was two-tailed with a significance level of P < 0.05.

Facial expression recognition by AI models

We trained deep learning models on the collected data. Three representative neural network models were used: (i) EfficientNet (Tan & Le 2019), (ii) RepMLP (Ding et al. 2021), and (iii) Tokens-To-Token ViT (T2T-ViT) (Yuan et al. 2021). EfficientNet is efficient at dealing with a large number of high-dimensional primate facial expression images, particularly in scenarios requiring accurate classification and identification of subtle facial expressions (Tan & Le 2019). T2T-ViT is adept at handling dynamic changes and complex features in facial expressions (Yuan et al. 2021), making it suitable for understanding and analyzing subtle differences in animal behaviors. For tasks emphasizing local features in images, RepMLP provides an efficient and direct approach (Ding et al. 2021). This is important for accurately recognizing and classifying primate facial expressions. To leverage previously learned knowledge of image recognition, we started from models of these three architectures that had been pre-trained on the ImageNet dataset. The three models were then fine-tuned for facial expression recognition. Each of these three models is described briefly as follows.

EfficientNet

Convolutional neural networks (CNNs) are commonly used in computer vision tasks such as facial expression recognition (Xia et al. 2020). Starting from an architecture found by search, EfficientNet scales up the CNN by balancing the dimensions of network width, depth, and resolution, and presents eight models, namely, B0–B7. These architectures contain convolutional and pooling layers connected in different ways and are built from a basic block, the mobile inverted bottleneck MBConv (Tan & Le 2019). By stacking the MBConv blocks, the local information of the input is extracted and then classified by the fully connected layer. Considering the tradeoff between the representation ability and the complexity of EfficientNet, we finally chose EfficientNet-B4 as the baseline method for recognizing the facial expressions of golden snub-nosed monkeys.

RepMLP

The multi-layer perceptron (MLP) has been used in image recognition in recent years and has achieved better performance in some special tasks. In an MLP, fully connected layers are used directly to build long-range dependencies and extract global features from images. Because an MLP has a very large number of parameters and depends on large training sets, RepMLP applies structural re-parameterization to the fully connected layers: convolutional layers are added in the training stage and removed in the inference stage. In the training stage, three groups of layers, that is, the global perceptron, partition perceptron, and local perceptron, are employed in the network. After the parameters are obtained, some layers in the global perceptron and partition perceptron and all layers in the local perceptron (containing convolutional layers) are simplified and merged into three fully connected layers. Following the ResNet50 connection scheme, RepMLP-Res50 was finally selected as the baseline for golden snub-nosed monkeys.

Tokens-To-Token ViT

The transformer has recently been used in image recognition and achieves similar performance to CNNs. Using the self-attention module, the transformer can model long-range relationships between image patches and extract global features from images. To obtain a deep model for the expression task, we employed an extension of the vanilla vision transformer (ViT), namely, T2T-ViT, to recognize facial expressions. T2T-ViT restructures the original image and softly splits the reconstructed image iteratively with the T2T module, which reduces the length of the token sequence and transforms the spatial structure of the image. It then stacks standard transformer layers, as in the vanilla ViT, to extract the global features of these tokens. As ViT uses more storage than CNN, we finally chose T2T-ViT-14, with 14 transformer layers, as the baseline for recognizing the expressions of golden snub-nosed monkeys.

Experimental setup for training the AI models

For training the three different models, that is, EfficientNet, RepMLP, and T2T-ViT, the data augmentation strategy was used to enrich the training samples, including random scaling and flipping (Tan & Le 2019; Ding et al. 2021; Yuan et al. 2021). Following the same strategies as for model learning, the learning configurations for the three models were set up as follows. For EfficientNet, the learning rate, the momentum, and the decay factor were set to 0.1, 0.9, and 0.0001 by using the AdamW optimization method (Loshchilov & Hutter 2019). For RepMLP, the initial learning rate and decay factor were set to 0.000125 and 0.05, respectively. For T2T-ViT, the same configuration for AdamW was used while the cosine learning rate decay strategy was adopted additionally.
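The cosine learning rate decay mentioned for T2T-ViT can be sketched as below. This is the standard cosine annealing formula written in plain Python, not the authors' training code. The initial learning rate of 0.000125 is taken from the text; the schedule length of 200 epochs, the minimum learning rate of 0, and the absence of warm-up are assumptions, since the text does not give them.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float, min_lr: float = 0.0) -> float:
    """Cosine-annealed learning rate at `step` (0-based), without warm-up.

    lr(t) = min_lr + 0.5 * (base_lr - min_lr) * (1 + cos(pi * t / T))
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    progress = min(max(step, 0), total_steps) / total_steps  # clamp to [0, 1]
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


BASE_LR = 0.000125   # initial learning rate quoted in the text
EPOCHS = 200         # hypothetical schedule length (assumption)
schedule = [cosine_lr(epoch, EPOCHS, BASE_LR) for epoch in range(EPOCHS + 1)]

if __name__ == "__main__":
    for epoch in (0, 50, 100, 150, 200):
        print(epoch, f"{schedule[epoch]:.3e}")
```

The rate starts at the initial value, passes through half of it at the midpoint, and reaches the minimum at the final epoch.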

Randomization experiment and gradient-weighted class activation map

We performed a randomization experiment and a gradient-weighted class activation map analysis to verify that the AI models were actually detecting the facial expressions of the monkeys; details are given below.

Randomization experiment

To demonstrate that distinguishable features can indeed be learned from the three types of expressions, we performed a randomization experiment for the three learning models. We set two scenarios. The first was to give the true labels, which means labeling each facial expression based on real social contexts. The second was to use random labels, which meant generating random orders for different social contexts and then assigning them to facial expression samples. We compared the performances of these two scenarios with the deep learning models.
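The random-label scenario can be sketched as a label permutation. This is an illustrative assumption about how the random labels were produced: shuffling keeps the number of images per class unchanged (as the class counts listed for the control group suggest) while breaking the link between each image and its social context. The seed and function name are hypothetical.

```python
import random
from collections import Counter
from typing import List, Sequence


def permute_labels(labels: Sequence[str], seed: int = 0) -> List[str]:
    """Return a shuffled copy of `labels`: class counts kept, image-label links broken."""
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    return shuffled


# Class sizes as listed for the control group in Table 2.
true_labels = (["affiliative"] * 521
               + ["agonistic/submissive"] * 124
               + ["neutral"] * 164)
random_labels = permute_labels(true_labels, seed=42)

if __name__ == "__main__":
    print(Counter(random_labels))
    agreement = sum(t == r for t, r in zip(true_labels, random_labels)) / len(true_labels)
    print(f"agreement with true labels: {agreement:.3f}")
```

A model trained on the permuted labels has no consistent image-to-context mapping to learn, which is what makes the comparison with the true-label scenario informative.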

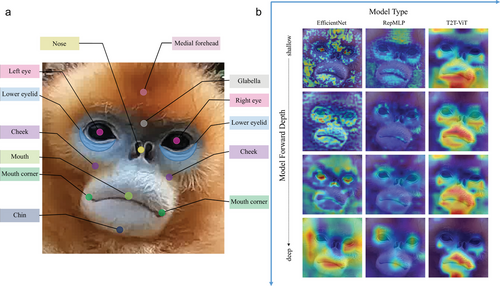

Gradient-weighted class activation map

A gradient-weighted class activation map (Grad-CAM) was used to visualize the image regions on which the models relied. The key areas identified by the three models became more sharply highlighted at deeper layers of the models. For all three models, the highlighted area was concentrated mainly on the face of each monkey. This result showed that the models were detecting facial expressions rather than other image features (Fig. 1).
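The core computation of a gradient-weighted class activation map can be sketched in plain Python. This shows only the combination step of the general Grad-CAM technique and is not the authors' implementation: it assumes the feature maps of one convolutional layer and the gradients of the class score with respect to them have already been obtained from a trained model. The nested-list representation and the toy inputs are illustrative.

```python
from typing import List

Map2D = List[List[float]]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """Combine K feature maps (K x H x W) into one H x W heatmap scaled to [0, 1]."""
    if len(activations) != len(gradients) or not activations:
        raise ValueError("need the same, non-zero number of activation and gradient maps")
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of the class-score gradient over that map.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    # ReLU keeps only the regions that increase the class score.
    cam = [[max(value, 0.0) for value in row] for row in cam]
    peak = max(max(row) for row in cam)
    return [[value / peak for value in row] for row in cam] if peak > 0 else cam


if __name__ == "__main__":
    # Toy 2 x 2 example with two channels (made-up numbers).
    acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(grad_cam(acts, grads))
```

In the toy example the first channel supports the class and the second opposes it, so only the top-left location remains highlighted after the ReLU.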

All deep learning models were implemented in PyTorch 1.11 on Ubuntu 20.04 with a single NVIDIA RTX 3090 GPU.

Resource availability

Materials availability

The code for the human recognition test and for the AI models was written in Python with PyQt and runs on Windows 10 and Ubuntu 20.04. The GUI for human recognition was designed with PyQt 5.15 and packaged with PyInstaller. The AI models were run on a workstation with an Intel Xeon E5-2678 v3 CPU, a GeForce RTX 3090 GPU (24 GB), 16 GB of RAM, and a 1 TB SSD.

Data and code availability

All the original videos, the pictures of the monkey facial expressions, and the related models for this study are publicly available on the website: https://pan.baidu.com/s/1XhTi7T3cY9CW-3FlXhcMmw?pwd=2667

RESULTS

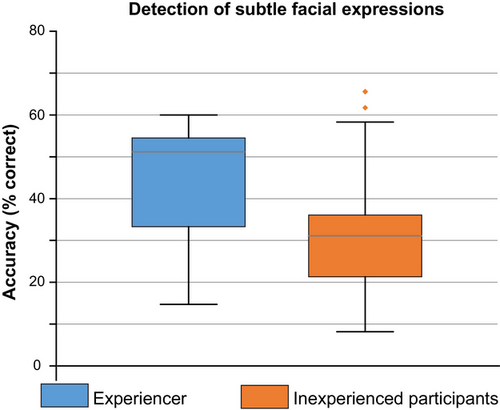

Human recognition

Human participants performed relatively poorly in the recognition test (Fig. 2). The mean accuracy (% correct of 60 trials) was 45.0% ± 13.2% (mean ± SD, n = 32) for the experienced observers of Group A. The chance level of the three-alternatives discrimination was 33%. The statistical analysis showed that experienced researchers can detect subtle facial expressions at rates higher than by chance. The performance of Group B (inexperienced observers) was 32.1% ± 14.5% (mean ± SD, n = 55). Group A observers had higher rates of accuracy than the inexperienced observers of Group B (Mann–Whitney U test: z = −3.503, P < 0.001). Based on a multiple regression analysis, we found no significant relation between accuracy and sex, age, total observation time, or last observation time in Group A (Table 1).

| Index | Standardized coefficients beta | t-value | P-value |
|---|---|---|---|
| Gender | −0.16 | −0.92 | 0.37 |
| Age | −0.23 | −0.95 | 0.35 |
| Total observation time | 0.09 | 0.38 | 0.71 |
| The time from the last observation | −0.38 | −2.06 | 0.06 |

Participants in both Group A and Group B described the photos as nearly identical, with only very slight differences in facial movement. The highest individual accuracy in Group A was 60%, and Group B had almost the same result (65.6%).

AI model recognition

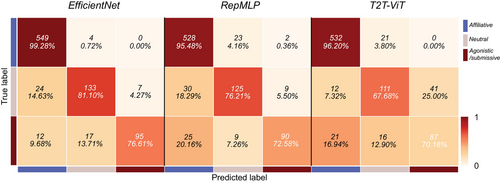

The performance

For the randomization experiment, the results on the self-collected data are summarized in Table 2. The accuracies of the three AI models (i.e. EfficientNet, RepMLP, and T2T-ViT) for the normal group (using the true category labels) are 94.48%, 90.62%, and 87.31%, respectively. Conversely, the accuracies of these models for the control group (using randomly assigned labels) are 36.45%, 36.77%, and 43.87%, which are close to the chance level of 33.33%. This suggests that when random labels are assigned to the images, the deep models cannot learn any consistent differences between them. In other words, the labeled categories (i.e. affiliative, agonistic/submissive, and neutral) carry real information.

| Groups | Description | EfficientNet | RepMLP | T2T-ViT |
|---|---|---|---|---|
| Normal group | Affiliative: 521; Agonistic: 124; Neutral: 164 | 94.48% (0.89) | 90.62% (0.85) | 87.31% (0.73) |
| Control group | Random affiliative: 521; Random agonistic: 124; Random neutral: 164 | 36.45% (0.08) | 36.77% (0.09) | 43.87% (0.06) |

To examine the recognition details for each facial expression, the confusion matrices for the three deep learning models are shown in Fig. 3. The EfficientNet model achieved its best accuracy, 99.28%, for the affiliative category, while its worst accuracy was 76.61% for the agonistic/submissive category. The RepMLP model obtained 95.48% accuracy for the affiliative category, while its worst accuracy was 72.58% for the agonistic/submissive category. The T2T-ViT achieved 96.20% accuracy for the affiliative category and 70.16% for the agonistic/submissive category. All deep learning models achieved at least 65% accuracy for each of the three types of facial expressions. For each model, at least 95% accuracy was obtained for at least one type of facial expression. In the confusion matrices, most misclassified categories had an error rate of less than 35% (Fig. 3). Compared with learning from random labels, these results show that the AI models learned effective representations of the three categories. The different AI models achieved different recognition results because their representation abilities differ.
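Because the text uses "accuracy" and "precision" for per-category figures, it may help to see how such values are read from a confusion matrix. The sketch below computes per-class recall (correct predictions divided by the number of images truly in that class), per-class precision (correct predictions divided by the number of images predicted as that class), and overall accuracy. The matrix is a made-up toy example, not the data in Fig. 3, and the function names are hypothetical.

```python
from typing import Dict, List

LABELS = ["affiliative", "agonistic/submissive", "neutral"]


def per_class_metrics(cm: List[List[int]]) -> Dict[str, Dict[str, float]]:
    """cm[i][j] = number of images of true class i that were predicted as class j."""
    n = len(cm)
    metrics = {}
    for k in range(n):
        row_total = sum(cm[k])                       # images truly of class k
        col_total = sum(cm[i][k] for i in range(n))  # images predicted as class k
        metrics[LABELS[k]] = {
            "recall": cm[k][k] / row_total if row_total else 0.0,
            "precision": cm[k][k] / col_total if col_total else 0.0,
        }
    return metrics


def overall_accuracy(cm: List[List[int]]) -> float:
    """Share of all images on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


# Toy confusion matrix with made-up counts (rows: true class, columns: predicted).
toy_cm = [
    [9, 0, 1],
    [2, 7, 1],
    [1, 1, 8],
]

if __name__ == "__main__":
    for label, values in per_class_metrics(toy_cm).items():
        print(label, f"recall={values['recall']:.3f}", f"precision={values['precision']:.3f}")
    print(f"overall accuracy = {overall_accuracy(toy_cm):.3f}")
```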

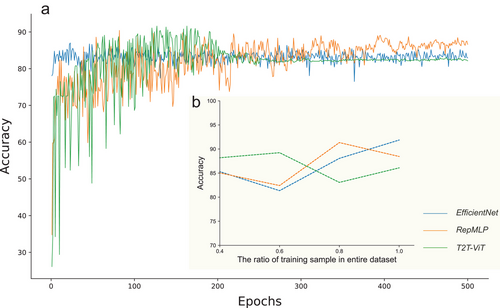

The training samples and epochs

The number of training samples and the number of epochs are essential for a deep learning model to effectively recognize different facial expressions. We evaluated the effect of training set size by randomly selecting different ratios of the dataset for model learning (Fig. 4). We also report the accuracy at various epochs and the relationship between these two factors and model performance (Fig. 4). The deep learning models become stable at approximately 85% accuracy after 200 epochs. Accuracy does not improve with more epochs (>200). In addition, the explored models are robust to the ratio of training samples and improve only slightly as the ratio increases, as observed in Fig. 4b. When the ratio is the lowest, all three models obtain an accuracy of at least 85%; when the ratio is high, all three models achieve an accuracy of at least 87%.

DISCUSSION

Here, we show that the AI deep learning models, EfficientNet, RepMLP, and T2T-ViT, can distinguish three behavioral contexts of golden snub-nosed monkeys based on subtle facial expressions. This suggests that the facial expressions of golden snub-nosed monkeys can be used to communicate different social behaviors. Given images from the same dataset, human observers were ineffective in detecting the monkeys’ subtle facial expressions. Our results reveal that the facial expressions of primates can be subtle, with at least some unrecognizable by humans.

Humans are ineffective in detecting subtle facial expressions but AI can

Our results suggest that humans are ineffective in detecting the three behavioral categories of golden snub-nosed monkeys based on subtle facial expressions. Experienced observers (Group A) performed significantly better than inexperienced observers (Group B). However, we found no significant relation between accuracy and sex, age, total observation time, or last observation time in the experienced group. This may be due to a small sample size (n = 32) and also because most individuals in Group A have a similar number of years of experience in making relevant behavioral observations. Compared with inexperienced individuals, those with observation experience can sometimes distinguish the facial expressions of golden snub-nosed monkeys under different behavioral contexts. Such experience may be important to enable humans to distinguish facial expressions in these monkeys. This also suggests that subtle facial expressions are present in golden snub-nosed monkeys, but the ability to detect them by human observation is limited and requires more efficient methods. For the study of subtle facial expressions in primates, better detection through artificial recognition may thus be required.

For model recognition, all three methods, EfficientNet, RepMLP, and T2T-ViT, achieved good recognition performance. All three architectures obtained in excess of 87% accuracy, far higher than the same models trained with random labels (Table 2). The highest accuracy (EfficientNet) reached 94.5%. In the randomization experiment, the low performance of the models trained with random labels (less than 44% accuracy, Table 2) suggests that the deep learning models were not overfitted to the dataset and captured intrinsic features of the subtle facial expressions of the monkeys. Different models achieved variable performance with the same training samples, as did the same models with different parameters. With more training samples and an appropriate number of epochs, the accuracy of the deep learning models increased (Fig. 4). Before overfitting at epoch 500, all models converged stably and almost achieved their best performance. These deep learning model results suggest that the facial expressions of the monkeys vary in subtle ways under different social contexts.

Previous work on horses found that subtle facial expressions are present in these social mammals (Tomberg et al. 2023). Our results build on these previous findings. We found that golden snub-nosed monkeys can express different subtle facial expressions that vary according to different social contexts. Furthermore, our study highlights the advantages of using AI over manual methods, as this offers enhanced accuracy, speed, and robustness. Our results show the potential of AI in studies of animal behavior.

Subtle facial expressions could be related to the complex social system

The social complexity hypothesis proposes that animals living in groups with comparatively greater social complexity will exhibit greater complexity in their methods of communication (Freeberg 2006; Freeberg et al. 2012; Sewall 2015). Under this hypothesis, primate facial expressions with known communicative functions should become more complex as the social system of a species becomes more sophisticated, and may include subtle manifestations that humans cannot distinguish.

Golden snub-nosed monkeys have a complex social system, an MLS (Qi et al. 2014, 2023). As pointed out in the Introduction, the core unit is the one-male unit, and one-male units closely associate to form a breeding band. Less competitive males aggregate into a bachelor group, called an all-male band, which shadows the breeding band, and together they form a herd. Different herds engage in seasonal fission-fusion to form a troop (Qi et al. 2014, 2017). It has been argued that similar processes in primate MLS may have influenced social evolution in ancestral and modern hunter-gatherer humans (Rodseth 2012; Macfarlan et al. 2014; Dyble et al. 2016). This unique social system of golden snub-nosed monkeys could be what drove individuals to evolve subtle ways to communicate. Our results could be evidence for the social complexity hypothesis. Because of the complexity of the social system, the communication signals of the golden snub-nosed monkey could be more complex. In this situation, the monkeys may use subtle facial expressions to communicate in different social contexts. The ability of primates to make subtle facial expressions might be related to the formation of complex social systems in primates. Subtle facial expressions may also exist in other primate MLSs.

Darwin (1876) described the expressions of humans and animals as by-products of emotions. In humans, facial expressions that reveal emotion may stimulate empathy in others (Williams et al. 2013). In animals, however, it has been argued that revealing emotions to others might expose vulnerability and weakness and could be counter-adaptive (Waller et al. 2014). We found that subtle facial expressions occurred in different social contexts, suggesting that some primates may not express their emotions and motivations through clear facial expressions. The way some primates express emotions may thus be a balance between soliciting prosocial assistance and exposing weakness.

In summary, using deep learning techniques, we found that golden snub-nosed monkeys make subtle facial expressions that were undetectable by humans but recognizable by AI models. The best-performing deep learning model achieved an accuracy of 94.5%, much higher than that of human observers. Our findings present a novel approach that demonstrates the potential of deep learning techniques in studying animal facial expressions. Moreover, our results provide evidence that animals can exhibit subtle facial expressions. In primate species characterized by multilevel societies, the communication system can be complex, and these subtle facial expressions may be closely linked to the formation and functioning of such intricate social systems. Our study thus contributes to a deeper understanding of animal facial expressions and sheds light on the possible origins of complex social systems in the animal kingdom.

Animal welfare notes

All research protocols reported here adhere to the regulatory requirements and were approved by the animal care committee of the Wildlife Protection Society of China (SL-2012-42). The research was under the supervision of the Institutional Animal Care and Ethics Committee of Northwest University, China.

ACKNOWLEDGMENTS

We thank Guanyinshan National Nature Reserve and Shaanxi Wild Animal Rescue and Research Center for giving us permission to carry out the research. We thank Prof. Ruliang Pan and Prof. Derek Dunn for their comments and suggestions on the manuscript. We also thank all who helped us during the fieldwork and all of the participants in the human test. This study was supported by the National Natural Science Foundation of China (32101238, 32471565, 31730104, and 62006191), the West Light Foundation of the Chinese Academy of Sciences (XAB2020YW04), the Strategic Priority Research Program of the Chinese Academy of Sciences (XDB31020302), the Key Research and Development Program of Shaanxi (2021ZDLGY15-01, 2021ZDLGY09-04, 2022ZDLGY06-07, 2021ZDLGY15-04, 2021GY-004, and 2020GY-050), the Project to Attract Foreign Expert of China (G2022040013L), the Shaanxi Fundamental Science Research Project for Mathematics and Physics (22JHQ038), and the International Science and Technology Cooperation Research Project of Shenzhen (GJHZ20200731095204013).

CONFLICT OF INTEREST STATEMENT

The authors declare no competing interests.

Open Research

DATA AVAILABILITY STATEMENT

All the original videos, the images of the monkeys' facial expressions, and the related models used in our study are publicly available at: https://pan.baidu.com/s/1XhTi7T3cY9CW-3FlXhcMmw?pwd=2667