Targeted enrichment of whole-genome SNPs from highly burned skeletal remains

Presented at the 74th Annual Scientific Conference of the American Academy of Forensic Sciences, February 21–26, in Seattle, WA.

Abstract

Genetic assessment of highly incinerated and/or degraded human skeletal material is a persistent challenge in forensic DNA analysis, including the identification of mass disaster victims. Few studies have investigated the impact of thermal degradation on the quality and quantity of whole-genome single-nucleotide polymorphism (SNP) data obtained by next-generation sequencing (NGS). We present whole-genome SNP data obtained from the bones and teeth of 27 fire victims using two DNA extraction techniques. Extracts were converted to double-stranded DNA libraries, enriched for whole-genome SNPs using unpublished biotinylated RNA baits, and sequenced on an Illumina NextSeq 550 platform. Raw reads were processed using the EAGER (Efficient Ancient Genome Reconstruction) pipeline, and SNPs were called and filtered using FreeBayes and GATK (v. 3.8). Mixed-effects modeling suggests that SNP variability and preservation are predominantly determined by skeletal element and burn category, not by extraction type. The whole-genome SNP data suggest that long bones, hand and foot bones, and teeth subjected to temperatures <350°C are the most likely sources of higher genomic DNA yields. Furthermore, we observed an inverse correlation between the number of captured SNPs and the extent to which samples were burned, as well as a significant decrease in the total number of SNPs recovered from samples subjected to temperatures >350°C. Our data complement previous analyses of burned human remains that compare extraction methods for downstream forensic applications, and support adopting a modified Dabney extraction technique when traditional forensic methods fail to produce DNA yields sufficient for genetic identification.

Highlights

- Targeted enrichment panel is effective at capturing whole-genome SNPs from burned skeletal tissue.

- Whole-genome SNP data recovered from skeletal material subjected to a range of burning conditions.

- Significant decrease in SNP data obtained from skeletal elements subjected to temperatures >350°C.

- Samples extracted with the Dabney protocol produced, on average, more on-target SNPs.

1 INTRODUCTION

Disaster victim identification is often hindered by the effects of burning [1-8]. Obtaining DNA from thermally altered forensic human remains is challenging because of the acute pyrolytic degradation of DNA in soft and mineralized tissues. Short tandem repeat (STR) profiles in these cases often remain unresolved because DNA yields after exposure to high temperatures are extremely low. However, skeletal remains in such cases often show variable levels of heat alteration, unlike commercial cremains [6-8], suggesting that DNA recovery may be possible. For example, Hartman et al. [4] found that bones from bushfire victims produced full DNA profiles, and non-crematory or archeological cremations, which are characterized by lower temperatures (<300°C), have yielded DNA in some cases [9-12]. In addition, highly sensitive protocols for ancient DNA (aDNA) extraction and library preparation, designed to increase the yield of short DNA fragments and the concentration and complexity of the library [13-18], have been complemented by advances in next-generation sequencing (NGS, or massively parallel sequencing). Together with targeted capture techniques, these developments are rapidly improving the quality and quantity of on-target DNA molecules available for forensic identification [19-22]. To make the best use of resources and minimize the destruction of remains, it is crucial to understand the conditions under which bones and teeth yield DNA.

Fire can impact both soft and hard tissues [23], and the resulting post-incineration condition of skeletal material depends primarily on the pre-incineration state of the bone [24]. Bones containing collagen and other organic components are categorized as “green” or “fresh”, while dry bones respond differently, and post-incineration signatures can distinguish between dry and fleshed cremations [25]. Green or fleshed bones exhibit consistent post-incineration color change characteristics on a continuum affected by the duration of exposure to fire and oxygen availability [26-34]. Although the assessment of the links between temperature, fire duration, and color changes varies somewhat among researchers, the sequence reported by Schwark et al. [35] is characteristic and commonly used: (1) well-preserved (<200°C), (2) yellow or brown (200–300°C), (3) black or smoked (300–350°C), (4) gray (550–600°C), (5) white or calcined (>650°C) [36]. Teeth follow a slightly different sequence [37, 38], with Symes et al. [39] reporting it as beige-yellow (unburned), black, brown/olive, gray, and white. The progression from unburned to smoked to calcined is associated with more fragmentation and the loss of collagen, and past research on DNA recovery shows that it declines significantly after reaching 600–800°C [40], at the transition from burned/charred/smoked to blue-gray-white visual signals of calcination [24]. However, the effects of burning on DNA preservation are not well understood in general and particularly in the context of newer NGS analyses.

While testing aDNA protocols in forensic DNA casework is not new [41-43], integrating these methods with state-of-the-art sequencing and novel computational workflows could optimize DNA recovery in forensic contexts and help resolve cold and other challenging cases that remain indeterminate using traditional forensic DNA techniques alone [43]. Ancient DNA techniques include methods optimized to recover low DNA yields, amplify degraded template DNA with a high frequency of uracils, remove deaminated bases (e.g., by uracil-DNA glycosylase treatment) [44, 45], target low-copy partial and whole genomic regions of interest [36-38, 46-52], and assemble, authenticate, and quantify low-coverage genomic data with post-sequencing bioinformatics programs [53-60]. Leveraging aDNA methods for challenging forensic cases has the potential to increase DNA yields and improve DNA quality both for traditional applications, such as STR analysis and PCR amplification of the hypervariable regions of the mitochondrial genome (mtDNA), and for more recent methodological advances, including targeted enrichment and NGS of the mitochondrial genome and genome-wide SNPs.

The extraction of degraded DNA from skeletal remains is challenging because of DNA fragmentation and damage [10, 55, 56, 61, 62]. Most extraction protocols used by forensic and ancient DNA researchers rely on either phenol-chloroform or guanidinium hydrochloride (GuHCl) salt-based methods [15, 63-67]. A method developed by Loreille et al. for forensic remains has proven particularly useful for retrieving DNA molecules from skeletal tissues [64, 68]. Ancient DNA studies usually rely on a method developed to recover ultrashort DNA fragments of 30–50 bp [14, 69]. Protocols optimized specifically for ancient DNA analyses have been applied to forensic DNA cases and have enabled DNA recovery where previous methods failed [70, 71].

Genome-wide SNPs are currently being evaluated for forensic analyses. They are useful for identification because a reasonable number of SNPs can provide resolution equivalent to, or even greater than, that of the CODIS STRs [72], and they also facilitate inference of ancestry, phenotype, blood type, and individual identity [37, 72-75]. SNPs can be analyzed using several methods, including allele-specific PCR, SNP chips/arrays, targeted capture, and NGS [76-79]. NGS technologies allow multiple samples to be sequenced in parallel and at greater sequencing depth than traditional capillary electrophoresis (CE)-based Sanger sequencing [77, 80-83]. NGS analyses of STR, autosomal SNP, and mitochondrial genome data may eventually replace Sanger sequencing as the gold standard in forensic genetics [80, 84, 85].

Targeted capture, or enrichment, is one solution for obtaining endogenous DNA from highly degraded and/or contaminated human skeletal tissues. In-solution targeted capture techniques use short DNA or RNA probes (e.g., 80–120 nt) designed to hybridize to specific genomic regions of interest. Once the probes have hybridized to their targets, the probe-target hybrids are isolated, and the captured molecules are amplified and sequenced. One notable advantage of targeted enrichment is lower cost than whole-genome sequencing, because sequencing effort is concentrated on particular regions (e.g., SNPs or the non-recombining region of the Y chromosome). Despite these advantages, targeted capture strategies have limitations. Some regions of the genome may be difficult to capture because of their sequence composition, and post-enrichment amplification can introduce biases, leaving some regions over- or under-represented in the sequencing data because of PCR over-amplification and/or differences in capture efficiency [86, 87]. Improvements in probe design and hybridization techniques help mitigate these issues. Here we test an in-solution SNP panel (3897 SNPs) to understand the effects of burning on whole-genome SNP recovery from skeletal tissues.
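The usual way to judge how well a capture worked is the on-target rate: the fraction of reads that land on the targeted regions. The sketch below is a minimal, hypothetical illustration, not part of the published workflow. It assumes reads and targets are given as 0-based, half-open (chrom, start, end) coordinates, as in BED, merges overlapping targets, and uses binary search to test each read for overlap.

```python
from bisect import bisect_left


def build_target_index(intervals):
    """Group half-open (chrom, start, end) targets by chromosome and merge overlaps."""
    by_chrom = {}
    for chrom, start, end in intervals:
        by_chrom.setdefault(chrom, []).append((start, end))
    index = {}
    for chrom, spans in by_chrom.items():
        spans.sort()
        merged = [list(spans[0])]
        for start, end in spans[1:]:
            if start <= merged[-1][1]:  # overlapping or touching: extend previous span
                merged[-1][1] = max(merged[-1][1], end)
            else:
                merged.append([start, end])
        index[chrom] = [tuple(span) for span in merged]
    return index


def overlaps_target(index, chrom, start, end):
    """True if the read span [start, end) overlaps any merged target on chrom."""
    targets = index.get(chrom, [])
    # Number of targets whose start lies before the read's end.
    i = bisect_left(targets, (end,))
    # Merged targets are disjoint and sorted, so only the last candidate can overlap.
    return i > 0 and targets[i - 1][1] > start


def on_target_rate(reads, index):
    """Fraction of (chrom, start, end) reads overlapping at least one target."""
    reads = list(reads)
    if not reads:
        return 0.0
    hits = sum(overlaps_target(index, c, s, e) for c, s, e in reads)
    return hits / len(reads)


# Hypothetical example: two single-base SNP targets and four reads.
targets = build_target_index([("chr1", 100, 101), ("chr1", 500, 501)])
reads = [("chr1", 50, 120), ("chr1", 200, 300), ("chr2", 100, 150), ("chr1", 480, 500)]
print(on_target_rate(reads, targets))  # 0.25
```

Merging first keeps the overlap test to a single comparison per read, which matters when flanked SNP targets lie close enough to overlap.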

2 MATERIALS AND METHODS

2.1 Sub-sampling and burn cohort classification

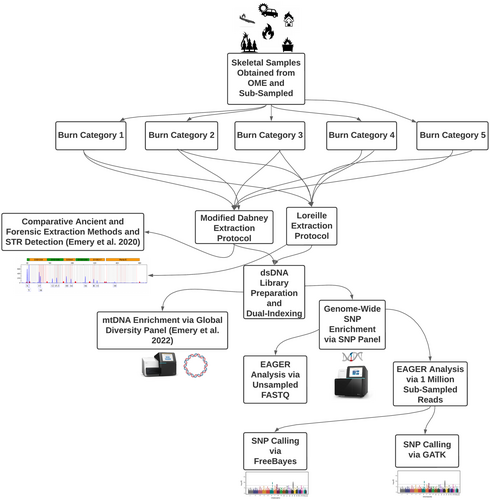

The Maricopa County Office of the Medical Examiner (MCOME) in Phoenix, Arizona, collected and documented skeletal remains from 27 fire victims from incidents including house fires, airplane crashes, vehicle fires, and motor vehicle accidents (Figure 1). Various skeletal elements, including radii, phalanges, metatarsals, tibiae, femur, parietal and temporal fragments, and premolars, were sampled at the MCOME. These 27 samples were sorted into five burn categories (1–5) according to the bone discoloration patterns described by Bonucci and Graziani [88] and Schwark et al. [35], yielding a total of n = 60 sub-samples for downstream processing. Bone and tooth samples were then transferred to the Laboratory of Molecular Anthropology at Arizona State University (ASU) for further processing [35, 88] (Table 1). Samples in burn categories 1–3 were processed in a laboratory dedicated to DNA extraction of modern samples with low amounts of DNA, while burn category 4 and 5 samples were processed in an ancient DNA laboratory equipped with UV irradiation and class 10,000 HEPA filtration.

| Burn category | Color | Temperature, °C (°F) | Total N samples per burn category |
|---|---|---|---|
| 1 | White; Yellow | <200 (<392) | 21 |
| 2 | Yellow; Brown | 200–300 (392–572) | 30 |
| 3 | Carbonized; Black | 300–350 (572–662) | 28 |
| 4 | Gray; Blue | 550–600 (1022–1112) | 10 |
| 5 | Calcined; Cremated white ash | >650 (>1202) | 18 |
| Extraction/Library negative controls | – | – | 10 |

The skeletal elements were sub-sectioned using a diamond cutting wheel and a Dremel collar, and crushed with a hammer. To prevent contamination, sampling tools were decontaminated by washing with 6% NaClO and ultrapure H2O, followed by UV irradiation and drying. This process was repeated for each sample, and the resulting bone powder was divided between two separate polypropylene tubes for DNA extraction. Each sub-sample was extracted in duplicate: one aliquot using the modified Dabney technique and the other using the Loreille extraction method. Duplicate extraction resulted in a total of n = 107 sub-samples (n = 58 Dabney-extracted; n = 49 Loreille-extracted) for double-stranded DNA (dsDNA) library preparation.

2.2 DNA extraction and double-stranded DNA library preparation

Two DNA extraction methods were employed: one commonly used in ancient DNA research, developed by Dabney et al. [14], and one routinely used in forensic casework for the total demineralization of bone, developed by Loreille et al. [14, 68]. These are referred to hereafter as the Dabney and Loreille protocols, respectively. Bone powder input for the Dabney protocol varied (21–500 mg), whereas input for the total demineralization protocol (Loreille et al. [68]) was standardized to 200 mg. Specifically, DNA was extracted from bone and tooth samples according to the methods outlined in Emery et al. [22, 89]. DNA extracts, as well as extraction and library blanks, were converted to dsDNA libraries and dual-indexed following the protocol described in Emery et al. [89]. Further details of the adjustments made to the extraction and library preparation protocols are provided in Appendix S1.

2.3 SNP panel and whole-genome SNP enrichment

To evaluate the performance of samples exposed to varying degrees of burning, we used a genome-wide SNP panel designed by Dr. Odile Loreille and synthesized by Daicel Arbor Biosciences. The panel (20,000 baits; 52 nt in length) comprises forensically relevant SNPs, including individual identity SNPs (IISNPs; n = 104), microhaplotype SNPs (n = 37), phenotype informative SNPs (PISNPs; n = 48), ancestry informative SNPs (AISNPs; n = 164), and SNPs associated with blood type (ABOSNPs; n = 12), with SNPs located on the autosomes and on the X (n = 1592) and Y (n = 1940) chromosomes. The RNA baits were designed using the hg38 human genome assembly and Y-chromosome SNPs from the International Society of Genetic Genealogy (ISOGG). Chromosome positions and REF/ALT alleles for all 3897 SNPs were retrieved by querying NCBI's dbSNP database and ISOGG. These data were used to build three reference files (VCF, BED, and EIGENSTRAT) for filtering SNP-enriched libraries with the EAGER pipeline and bcftools.
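Before any on-target filtering, the panel coordinates described above have to be turned into interval files. The sketch below shows one way to do that, assuming the SNP table holds 1-based, dbSNP-style positions: each SNP becomes a 0-based, half-open BED interval, optionally padded by a flank. The SNP names and positions in the example are placeholders, not panel content, and the function name is hypothetical. The sketch also checks that the category counts listed above add up to the 3897-SNP total, on the assumption that the categories do not overlap.

```python
# Category counts as listed for the panel (assumed mutually exclusive).
PANEL_COUNTS = {
    "IISNP": 104,
    "microhaplotype": 37,
    "PISNP": 48,
    "AISNP": 164,
    "ABOSNP": 12,
    "X-chromosome": 1592,
    "Y-chromosome": 1940,
}
assert sum(PANEL_COUNTS.values()) == 3897


def snps_to_bed(snps, flank=0):
    """Convert (chrom, 1-based pos, name) tuples into BED lines.

    BED is 0-based and half-open, so a single base at 1-based position p
    is written as start = p - 1, end = p; `flank` pads both sides.
    Lines are sorted lexicographically by chromosome name, then position.
    """
    lines = []
    for chrom, pos, name in sorted(snps, key=lambda s: (s[0], s[1])):
        start = max(0, pos - 1 - flank)
        end = pos + flank
        lines.append(f"{chrom}\t{start}\t{end}\t{name}")
    return lines


# Hypothetical SNPs (placeholder names and positions).
example = [("chr2", 5000, "snp_b"), ("chr1", 1000, "snp_a")]
for line in snps_to_bed(example):
    print(line)
```

The off-by-one between 1-based VCF/dbSNP positions and 0-based BED starts is the most common source of silently empty intersections, which is why the conversion is isolated in one function.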

Targeted enrichment was carried out following the myBaits Manual v.4.01 guidelines provided by Daicel Arbor Biosciences (MI, USA), with minor adjustments to bait concentration (50 ng per reaction), hybridization temperature (55°C), and hybridization time (24 h). After hybridization, the enriched libraries were re-amplified using 18.8 μL of template in a 40 μL reaction containing KAPA SYBR® FAST qPCR Master Mix (2X), the primers IS5_long_amp.P5 (5′-AATGATACGGCGACCACCGA-3′) and IS6_long_amp.P7 (5′-CAAGCAGAAGACGGCATACGA-3′) at 150 nM each, and ultrapure H2O. Cycling consisted of an initial incubation at 95°C for 5 min, followed by 12 cycles of 95°C for 30 s and 60°C for 45 s, and a final incubation at 60°C for 3 min. Amplified captured libraries were purified using MinElute™ PCR Purification Kit columns and eluted in 10.75 μL of buffer EB for a second round of whole-genome SNP enrichment, which repeated the process described above. Burn category 1–3 libraries were diluted 1:10,000, while burn category 4–5 libraries were diluted 1:1000. The diluted libraries were quantified by qPCR against the PhiX Sequencing Control V3 (Illumina) standard for the 425–525 bp range, using a dilution series from 100 pM to 0.0625 pM to calibrate the assay. Enriched libraries were quantified with the primer pair IS5_long_amp.P5 and IS6_long_amp.P7. The qPCR master mix consisted of 150 nM of each primer, KAPA SYBR® FAST qPCR Master Mix (2X), and diluted library (1:10,000 or 1:1000); PhiX serial dilutions (4 μL) and a pair of ultrapure H2O blanks were run alongside. The qPCR cycling parameters replicated the re-amplification conditions described above. After quantification, the libraries were pooled at equimolar concentrations for sequencing.
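The quantification and pooling steps above rest on two small calculations: reading library concentrations off a standard curve and converting them into pooling volumes. The sketch below illustrates both under stated assumptions. It fits Cq against log10 concentration for the standards by least squares, interpolates each diluted library, scales the result back by its dilution factor (10,000 or 1000 here), and computes the volume that contributes an equal molar amount, using 1 pM × 1 μL = 1 amol. The standard and library Cq values are synthetic, no fragment-length correction is applied, and all function names are hypothetical.

```python
import math


def fit_standard_curve(conc_pm, cq):
    """Least-squares fit of Cq = slope * log10(concentration) + intercept."""
    xs = [math.log10(c) for c in conc_pm]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(cq) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, cq))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def amplification_efficiency(slope):
    """1.0 means perfect doubling per cycle (slope of about -3.32)."""
    return 10 ** (-1 / slope) - 1


def library_conc_pm(cq, slope, intercept, dilution_factor):
    """Interpolate a diluted library on the curve, then undo the dilution."""
    return 10 ** ((cq - intercept) / slope) * dilution_factor


def equimolar_volumes(conc_pm, amol_per_library):
    """Volume (uL) of each library that contributes the same molar amount.

    1 pM equals 1 amol per uL, so volume = amount (amol) / concentration (pM).
    """
    return {name: amol_per_library / c for name, c in conc_pm.items()}


# Synthetic standards following an ideal curve (100% efficiency, intercept 20).
ideal_slope = -1 / math.log10(2)
standards = [100, 10, 1, 0.1]
std_cq = [20 + ideal_slope * math.log10(c) for c in standards]
slope, intercept = fit_standard_curve(standards, std_cq)

# A library diluted 1:10,000 whose Cq corresponds to 0.5 pM on the curve.
lib_cq = 20 + ideal_slope * math.log10(0.5)
conc = library_conc_pm(lib_cq, slope, intercept, dilution_factor=10_000)
print(round(conc))  # 5000
print(equimolar_volumes({"libA": 5000.0, "libB": 2500.0}, amol_per_library=10_000))
```

Reporting the efficiency alongside the fitted slope is a quick sanity check that the standard series behaved before trusting interpolated values.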

2.4 Illumina sequencing

SNP-enriched libraries were sequenced on an Illumina NextSeq 550 System at ASU's Genomics Core facility using two high-output kits with a 2 × 150 bp read chemistry.

2.5 Post-sequencing read alignment and filtering with EAGER

The NGS reads were initially demultiplexed using Illumina's bcl2fastq2 software for downstream analysis. Because the library pool was split across two Illumina NextSeq 550 runs, multiple R1 and R2 FASTQ files were created for each library. We therefore merged these files using a custom Bash script (see Appendix S1 for details), assessed the quality of the merged raw R1 and R2 FASTQ files using FastQC, and compiled the FastQC reports into a single MultiQC report [90]. The merged FASTQ files were processed, aligned, and filtered using the nf-core pipeline EAGER v.2.4.5/6 (Efficient Ancient Genome Reconstruction) [57]; a modified list of the custom parameters used in these EAGER runs is provided in Appendix S1. In brief, the paired-end FASTQ files were trimmed and merged (retaining singletons) using AdapterRemoval2. Trimmed and merged reads were then mapped to the human reference genome (GRCh38; GCA_000001405.15; UCSC hg38) using BWA-MEM. A BED file containing the flanking positions of the 3897 SNPs was supplied via EAGER's --snpcapture_bed flag to generate SNP capture statistics. Aligned SAM files were converted to BAM format and filtered to a minimum read length of 35 bp and a minimum mapping quality of 30 using Samtools. Finally, duplicate reads were identified and removed using Picard's MarkDuplicates. To normalize the number of reads entering the pipeline, a separate EAGER run was performed on raw paired-end FASTQ files sub-sampled to 1,000,000 reads (1 M) using seqtk, as detailed below. Additionally, the unsheared burn category 4 and 5 libraries were tested for terminal deamination using mapDamage2.0 and DamageProfiler [55, 56].
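Two steps above lend themselves to a compact illustration: drawing a fixed number of read pairs at random (the role seqtk plays here) and applying the length and mapping-quality thresholds. The sketch below is neither seqtk's nor Samtools' code. It uses reservoir sampling (Algorithm R) so that each pair is kept with equal probability in one pass and mates are never separated, and it reads the standard SAM columns (FLAG, MAPQ, SEQ) from tab-separated text. The default thresholds of 35 bp and MAPQ 30 follow the text; the function names and seed are assumptions.

```python
import random


def reservoir_sample_pairs(pairs, k, seed=11):
    """Keep k (R1, R2) pairs uniformly at random in a single pass (Algorithm R).

    Sampling whole pairs keeps mates together; a fixed seed makes runs repeatable.
    """
    rng = random.Random(seed)
    reservoir = []
    for i, pair in enumerate(pairs):
        if i < k:
            reservoir.append(pair)
        else:
            j = rng.randint(0, i)  # inclusive bounds: item i survives with prob k/(i+1)
            if j < k:
                reservoir[j] = pair
    return reservoir


def passes_filters(sam_line, min_len=35, min_mapq=30):
    """Apply mapped/length/MAPQ thresholds to one SAM alignment line."""
    fields = sam_line.rstrip("\n").split("\t")
    flag, mapq, seq = int(fields[1]), int(fields[4]), fields[9]
    is_unmapped = bool(flag & 0x4)
    return (not is_unmapped) and mapq >= min_mapq and len(seq) >= min_len


# Hypothetical usage: 1000 synthetic pairs down-sampled to 100.
pairs = [(f"read{i}/1", f"read{i}/2") for i in range(1000)]
subset = reservoir_sample_pairs(pairs, k=100)
print(len(subset))  # 100

record = "\t".join(["r1", "0", "chr1", "100", "37", "40M", "*", "0", "0", "A" * 40, "I" * 40])
print(passes_filters(record))  # True
```

Fixing the seed matters for paired-end data processed as separate R1 and R2 files: the same seed must drive both so that mates stay matched, which the pair-level sampling here sidesteps.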

2.6 SNP calling and filtering: FreeBayes and GATK

The FreeBayes and GATK (v.3.8) outputs from the EAGER runs were compared to investigate differences between these two common variant callers. FreeBayes SNP calling was performed as part of the first EAGER run on the 1 M sub-sampled paired-end FASTQ files, whereas GATK SNP calling was performed with a modified EAGER script on the deduplicated BAM files from that run. Although further filtering was applied to the resulting VCF files (described below), the initial EAGER parameters for FreeBayes were modified to require at least three observations supporting an alternate allele before a position is evaluated (--freebayes_C 3). Sample ploidy was set to 2 (diploid), and GATK's HaplotypeCaller was used for variant detection. Because GATK's variant calls were written in GVCF format, GATK was used to convert the GVCF files to VCF. The VCF files were compressed in BGZF format, indexed, and filtered to a minimum depth of coverage of 3X and a minimum genotype quality (GQ) score of 30 using bcftools. A VCF file containing all SNPs in the enrichment panel was likewise BGZF-compressed and indexed, and queried for SNP sites by chromosome and position (hg38 assembly). Panel variants were then extracted from each library VCF file using the resulting SNP sites file and bcftools region filtering (see https://github.com/ACStoneLab/extract_snp). VCF files were also merged by extraction type and burn category using the bcftools merge command for comparative analysis. EAGER parameters and command-line arguments for variant processing, filtering, and extraction are provided in Appendix S1.
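The depth, genotype-quality, and panel-site filters described above amount to a per-record test. The following is a minimal, hypothetical sketch of that logic on single-sample VCF text, not a replacement for bcftools. It assumes DP and GQ are present as per-sample FORMAT fields (records where either is absent or recorded as "." are dropped) and that panel sites are given as (chromosome, position) pairs on hg38.

```python
def sample_fields(cols):
    """Map FORMAT keys to the first sample's values for one VCF data line."""
    return dict(zip(cols[8].split(":"), cols[9].split(":")))


def keep_record(line, panel_sites, min_dp=3, min_gq=30):
    """True if a VCF data line is a panel site with DP >= min_dp and GQ >= min_gq."""
    if line.startswith("#"):
        return False  # header lines
    cols = line.rstrip("\n").split("\t")
    if (cols[0], int(cols[1])) not in panel_sites:
        return False
    fmt = sample_fields(cols)
    try:
        dp = int(fmt["DP"])
        gq = float(fmt["GQ"])
    except (KeyError, ValueError):  # absent field or missing value "."
        return False
    return dp >= min_dp and gq >= min_gq


def filter_vcf_lines(lines, panel_sites, **thresholds):
    """Yield the data lines that pass keep_record."""
    for line in lines:
        if keep_record(line, panel_sites, **thresholds):
            yield line


panel = {("chr1", 1000), ("chrY", 2000)}  # hypothetical panel sites
vcf = [
    "##fileformat=VCFv4.2",
    "chr1\t1000\t.\tA\tG\t50\tPASS\t.\tGT:DP:GQ\t0/1:5:45",
    "chr1\t1500\t.\tC\tT\t50\tPASS\t.\tGT:DP:GQ\t1/1:8:60",
    "chrY\t2000\t.\tG\tA\t50\tPASS\t.\tGT:DP:GQ\t1:2:40",
]
print(len(list(filter_vcf_lines(vcf, panel))))  # 1
```

Here the second record is dropped because it is off-panel and the third because its depth (2) falls below the 3X threshold.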

2.7 DNA authentication and contamination control

Contamination was monitored using extraction and library negative controls, pre-sequencing QC checks (negative qPCR controls during library amplification), and post-sequencing QC based on mtDNA heteroplasmy quantified from the same dsDNA libraries, previously enriched for mtDNA and published elsewhere [89]. Because the 27 individuals are represented by multiple replicates (i.e., sub-sampled across extraction methods and burn categories), consistency of mtDNA and Y-chromosome haplogroup calls among replicates was used to monitor for potential cross-contamination.
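The replicate-consistency check above can be expressed as grouping haplogroup calls by individual and marker system and flagging any individual with more than one distinct call. This is a simplified, hypothetical sketch: identifiers and haplogroup labels are invented, and any two different labels count as a conflict, whereas a real check would need to allow for nested calls at different resolution (e.g., a low-coverage library assigned a less derived branch of the same lineage).

```python
from collections import defaultdict


def inconsistent_individuals(calls):
    """Return individuals whose replicate libraries disagree.

    calls: iterable of (individual, library, marker, haplogroup), where marker is
    e.g. "mtDNA" or "Y" and haplogroup is None for libraries without a call.
    """
    seen = defaultdict(set)
    for individual, _library, marker, haplogroup in calls:
        if haplogroup:
            seen[(individual, marker)].add(haplogroup)
    return sorted({ind for (ind, _marker), groups in seen.items() if len(groups) > 1})


calls = [
    ("ind01", "01D1", "mtDNA", "H1"),
    ("ind01", "01L1", "mtDNA", "H1"),
    ("ind02", "02D1", "Y", "R1b"),
    ("ind02", "02L1", "Y", "I2"),
    ("ind03", "03D1", "Y", None),
]
print(inconsistent_individuals(calls))  # ['ind02']
```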

2.8 Haplotype assessment

We identified haplotypes from the filtered and deduplicated BAM files using CLC Genomics Workbench v.12.0.3 (CLC/Qiagen) and the Forensic Reference/Resource on Genetics Knowledge Base (FROG-kb). We omitted libraries that failed to produce VCF files (i.e., low-coverage libraries with ≤1000 filtered and deduplicated reads in total) or that failed to produce consistent haplotypes across extraction and/or burn category sub-samples, leaving 86 of 107 libraries for Y-chromosome and AISNP haplotype determination and analysis. Y-chromosome and AISNP haplotypes were called and classified at SNP positions with ≥4X depth of coverage. Ancestry informative SNPs were compared against both the Seldin (128 AISNPs) and the Kidd (55 AISNPs) panels, which include 131 and 161 populations, respectively (see Appendix S2, Table S9, for AISNP data for the Seldin and Kidd panels) [91-94].

3 RESULTS

3.1 Contamination, sample omission, and DNA authentication

Prior mitochondrial DNA (mtDNA) analysis identified possible cross-contamination in six libraries, four of which were analyzed in this study. These SNP-enriched libraries (i.e., 26D3, 26D4, 26L4, 11L3, and those enriched from the same indexed dsDNA library) were therefore excluded from further analysis. Although the negative controls detected minor contamination, they provided no evidence of cross-contamination between samples or of contamination from human sources within the laboratory. Negative extraction and library preparation controls did not produce SNP data. These data suggest that the spurious reads in our libraries most likely reflect minor exogenous environmental contamination rather than significant human contamination (Appendix S2, Table S6). Additional qPCR controls (negative controls run in duplicate) showed no amplifiable DNA, and all mtDNA and Y-chromosome haplogroups were consistent across sub-sampled libraries for each individual, based on previous mtDNA enrichment results from the same dsDNA libraries (Emery et al. [89]) and on the Y-chromosome haplogroups reported below. In addition, no deamination was detected in either the mtDNA- or the SNP-enriched unsheared burn category 4 and 5 libraries (Appendix S1, Figure S1). Results from the sub-sampled data (1 M reads; because singletons were retained, most libraries contained more than 1 M reads) are reported below, including mapped and filtered read counts, alongside the data generated from the raw, unsampled FASTQ files.
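Terminal deamination, the signal that mapDamage2.0 and DamageProfiler look for, appears as an excess of C→T mismatches at the 5′ ends of reads. The hypothetical sketch below computes that per-position frequency from pre-aligned (reference, read) pairs. It assumes gap-free alignments already oriented 5′→3′ on the read strand (reverse-strand reads reverse-complemented together with their reference segments) and ignores base qualities and the complementary G→A signal at 3′ ends, so it illustrates the metric rather than reproducing either tool.

```python
def ct_by_position(alignments, n_positions=5):
    """C->T substitution frequency at each of the first n read positions.

    alignments: iterable of (reference, read) strings of equal length,
    both written 5'->3' relative to the sequenced read.
    Entry i is (#C->T) / (#reference C) at position i, or None if no
    reference C was observed at that position.
    """
    c_total = [0] * n_positions
    c_to_t = [0] * n_positions
    for ref, read in alignments:
        for i in range(min(n_positions, len(ref), len(read))):
            if ref[i] == "C":
                c_total[i] += 1
                if read[i] == "T":
                    c_to_t[i] += 1
    return [t / c if c else None for t, c in zip(c_to_t, c_total)]


example = [("CAGT", "TAGT"), ("CTTA", "CTTA"), ("ACGG", "ACGG")]
print(ct_by_position(example, n_positions=2))  # [0.5, 0.0]
```

In a damaged library the first entry stands well above the interior positions; a low, flat profile is what one expects when no deamination is detected.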

3.2 Normalized non-parametric statistical assessment

Despite normalization of the genomic data (per mg of bone; see Appendix S2, Tables S3–S5), several variables were not normally distributed according to Shapiro–Wilk tests, including the normalized filtered and deduplicated mapped reads, the number of reads aligned to the SNP panel BED file, and the number of variants identified by FreeBayes and GATK (p < 2.2e-16 for all variables; see Appendix S1, Figure S2, for data dispersion against normal and log-normal distributions). Non-parametric assessment of the normalized genomic data therefore used a Kruskal–Wallis test for two-sample comparisons (i.e., extraction type) and pairwise Wilcoxon rank-sum tests for grouped data. A Spearman correlation matrix shows high correlations (0.91–1) among the number of reads aligning to the BED file for Dabney- and Loreille-extracted libraries and the variants called by GATK and FreeBayes (Appendix S1, Figure S3). Linear modeling further revealed a strong fit between the number of filtered and deduplicated mapped reads and the number of reads aligning to the BED file (R² = 0.83), and between the filtered and the deduplicated mapped reads (R² = 0.86). A strong relationship was also found between the reads aligned to the SNP capture BED file and the number of SNPs called by GATK and FreeBayes (R² = 0.86; Figure S4).
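For readers less familiar with the two summary statistics used above: Spearman's coefficient is the Pearson correlation of the ranks (tied values receive the average of the ranks they span), and for a simple linear regression with an intercept, R² equals the squared Pearson correlation. The pure-Python sketch below is illustrative only; in practice standard statistical software (e.g., R's cor(x, y, method = "spearman") and lm()) would be used, and the data here are invented.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def r_squared_simple(x, y):
    """R^2 of the least-squares line y = a + b*x (equal to pearson(x, y) ** 2)."""
    return pearson(x, y) ** 2


# Invented example: a monotonic but non-linear relationship.
reads = [120, 450, 900, 2000, 5200]
snps = [1, 3, 4, 9, 30]
print(spearman(reads, snps))  # 1.0 (perfectly monotonic)
print(round(r_squared_simple(reads, snps), 3))
```

The example shows why both statistics are worth reporting: a perfectly monotonic relationship gives a Spearman coefficient of 1 even when a straight line fits imperfectly.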

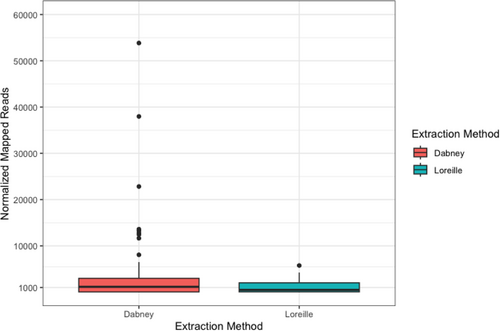

3.3 Sequence variation and variant detection by extraction method

Dabney- and Loreille-extracted libraries yielded normalized averages of 4229 and 1179.78 filtered and deduplicated reads per mg of bone, respectively (Appendix S1, Table S3). Although Dabney-extracted libraries had more aligned reads on average, the difference is not statistically significant (p-value = 0.3201) (Figure 2). However, eight Dabney-extracted libraries with low sample input (<100 mg) produced substantially more mapped reads than libraries with higher sample input (>100 mg) from either extraction method, a pattern potentially linked to reduced co-extraction of inhibitors.
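The per-mg values above come from dividing each library's count by the mass of bone powder extracted. The hypothetical sketch below shows that normalization and a grouped mean; names and numbers are illustrative, not study data. Because the count is divided by input mass, the same raw count gives a larger per-mg value for a smaller input (50,000 reads is about 2381 reads/mg from 21 mg but 250 reads/mg from 200 mg), which is worth keeping in mind when inputs range from 21 to 500 mg.

```python
from collections import defaultdict
from statistics import mean


def per_mg(count, input_mg):
    """Normalize a count by the mass of bone or tooth powder extracted."""
    if input_mg <= 0:
        raise ValueError("input mass must be positive")
    return count / input_mg


def grouped_mean_per_mg(records):
    """records: iterable of (group, raw_count, input_mg); mean per-mg value per group."""
    per_group = defaultdict(list)
    for group, count, input_mg in records:
        per_group[group].append(per_mg(count, input_mg))
    return {group: mean(values) for group, values in per_group.items()}


# Illustrative records only.
records = [
    ("Dabney", 1000, 50),
    ("Dabney", 2000, 100),
    ("Loreille", 400, 200),
]
print(grouped_mean_per_mg(records))  # {'Dabney': 20.0, 'Loreille': 2.0}
```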

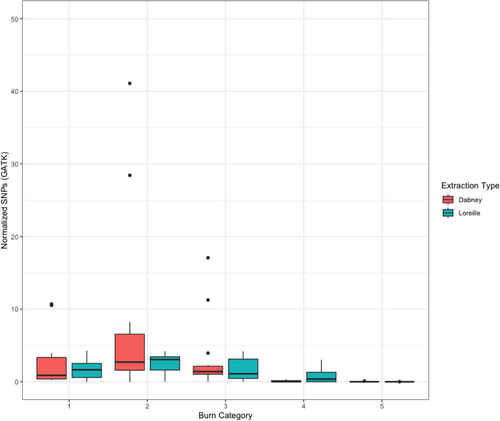

A similar pattern was observed for the number of reads aligning to the BED file (Dabney-extracted libraries = 147.06; Loreille-extracted libraries = 58.1; p-value = 0.5651) and for SNPs called by FreeBayes and GATK in Dabney-extracted (FreeBayes = 3.34; GATK = 3.37) and Loreille-extracted (FreeBayes = 1.57; GATK = 1.57) libraries; neither caller shows a significant difference between extraction methods (FreeBayes, p-value = 0.9198; GATK, p-value = 0.88) (Figure 3). The total number of SNPs called by FreeBayes and GATK (i.e., across both extraction types and all five burn categories) also did not differ significantly (Wilcoxon rank-sum test, p-value = 0.9504). Because the variant metrics are almost identical for both SNP callers, and GATK's pipeline is highly scalable and well documented, the remaining burn category comparisons are reported using GATK's variant output metrics (Figure 3; see Appendix S2, Tables S3 and S8). The normalized mean depth of coverage was calculated for each GATK-derived VCF file (i.e., across all SNPs in the file) by extraction type, and no significant difference was found between the two methods (p-value = 0.5822) (see Appendix S1, Figure S4, for SNP density by depth of coverage for Dabney- and Loreille-extracted libraries).

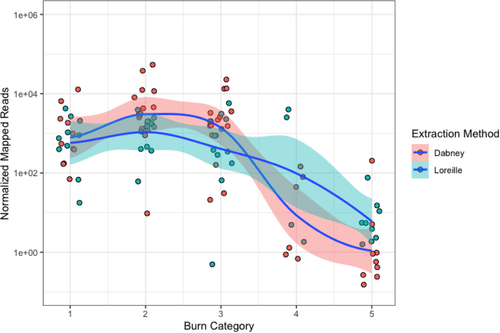

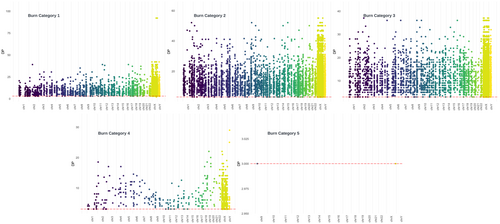

3.4 Sequence variation and variant detection by burn category

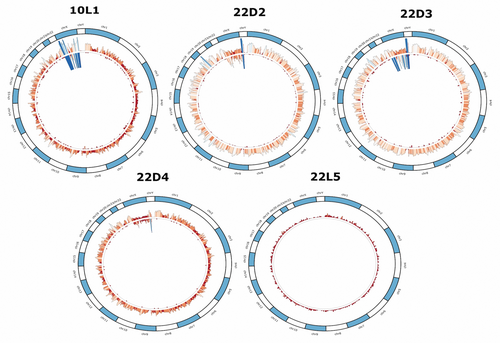

Sequencing metrics obtained from the sub-sampled burnt remains differ markedly across burn categories. The normalized number of filtered and deduplicated mapped reads is comparable for burn categories 1 through 3, although it rises substantially from burn category 1 (1852.2) to burn category 2 (5677.8) before falling for burn category 3 libraries (3096.03). The normalized read totals for burn categories 4 and 5 were 680.76 (normalized SNPs = 0.43) and 18.6 (normalized SNPs = 0.005), respectively (Figure 4; Appendix S2, Table S4). No significant differences in the number of mapped reads were detected among burn categories 1 through 3, or between burn categories 4 and 5. However, a significant difference was found between burn categories 3 and 4 (p-value = 0.0399; temperature range 350–550°C) (Table 2), a result consistent with previous STR and mtDNA results obtained from the same samples [22, 89]. The normalized number of total SNPs by burn category and extraction method is shown in Figure 5 (Appendix S1, Figures S5–S8). As noted above, eight Dabney-extracted libraries inflate the variance for burn categories 1–3. In contrast to the mapped reads, for which the key significant difference lies between burn categories 3 and 4, a pairwise Wilcoxon rank-sum analysis of the SNP data indicates significant differences between every pair of burn categories except categories 1 and 3 (Table 3). The normalized mean depth of coverage for Dabney- and Loreille-extracted libraries was examined to determine whether depth of coverage at the target SNP regions drops significantly across the data set (Figure 6; see Appendix S1, Figures S5–S7, for SNP density and depth of coverage across burn categories per library).
While no significant differences were measured among burn categories 1–3, a significant difference was found between burn categories 3 and 4 (p-value = 0.0135; Table 4), suggesting that, together with the normalized filtered and deduplicated mapped reads, read coverage drops significantly between 350 and 550°C. Figure 7 presents a Circos plot of SNP density and depth of coverage across the nuclear genome. Although no single bone or tooth sample spanned all five burn categories, library 22 yielded four sub-samples (from the first premolar and a tibial shaft section), and library 10L1, a typical burn category 1 library, is included for comparison across burn categories. Consistent with the statistical trends for the normalized data discussed above, SNP densities and coverage were relatively consistent for burn categories 1–3 and substantially lower for burn category 4 and 5 libraries.
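The pairwise comparisons summarized in Tables 2–4 are Wilcoxon rank-sum tests between every pair of burn categories. As a hypothetical illustration of how such a lower-triangular p-value table is produced, the sketch below implements the two-sided test with the normal approximation, including the tie and continuity corrections used by R's wilcox.test(exact = FALSE). It is not the authors' code: R would use an exact test for small tie-free samples, and R's pairwise.wilcox.test applies Holm adjustment by default, neither of which is reproduced here. Group values are invented.

```python
import math
from itertools import combinations


def average_ranks(values):
    """1-based ranks with ties assigned their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_p(x, y):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation,
    tie-corrected variance, continuity correction)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one value")
    pooled = list(x) + list(y)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # Mann-Whitney U for x
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values()) / (n * (n - 1))
    variance = n1 * n2 / 12 * ((n + 1) - tie_term)
    diff = u - n1 * n2 / 2
    if variance == 0 or diff == 0:
        return 1.0
    z = (diff - math.copysign(0.5, diff)) / math.sqrt(variance)
    return min(1.0, math.erfc(abs(z) / math.sqrt(2)))


def pairwise_rank_sum(groups):
    """Unadjusted p-values for every unordered pair of groups, keyed (a, b)."""
    return {
        (a, b): rank_sum_p(groups[a], groups[b])
        for a, b in combinations(sorted(groups), 2)
    }


# Invented per-library values for three burn categories.
groups = {
    1: [3.1, 2.4, 5.0, 4.2, 3.8],
    3: [2.9, 3.5, 4.4, 2.2, 3.0],
    5: [0.0, 0.01, 0.0, 0.02, 0.0],
}
for (a, b), p in pairwise_rank_sum(groups).items():
    print(f"category {a} vs {b}: p = {p:.4f}")
```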

Table 2. Pairwise Wilcoxon rank-sum p-values between burn categories for normalized filtered and deduplicated mapped reads.

| Burn category | Burn category 1 | Burn category 2 | Burn category 3 | Burn category 4 |
|---|---|---|---|---|
| Burn category 2 | 0.0895 | – | – | – |
| Burn category 3 | 0.4644 | 0.4017 | – | – |
| Burn category 4 | 0.0399 | 0.0053 | 0.0399 | – |
| Burn category 5 | 1.9e-08 | 8.2e-11 | 2.6e-08 | 0.3982 |

Table 3. Pairwise Wilcoxon rank-sum p-values between burn categories for normalized SNPs (GATK).

| Burn category | Burn category 1 | Burn category 2 | Burn category 3 | Burn category 4 |
|---|---|---|---|---|
| Burn category 2 | 0.0478 | – | – | – |
| Burn category 3 | 0.9758 | 0.0366 | – | – |
| Burn category 4 | 0.0156 | 0.0011 | 0.0156 | – |
| Burn category 5 | 1.4e-06 | 1.8e-07 | 9.0e-07 | 0.0457 |

Table 4. Pairwise Wilcoxon rank-sum p-values between burn categories for normalized mean depth of coverage at target SNPs.

| Burn category | Burn category 1 | Burn category 2 | Burn category 3 | Burn category 4 |
|---|---|---|---|---|
| Burn category 2 | 0.1770 | – | – | – |
| Burn category 3 | 0.9517 | 0.1669 | – | – |
| Burn category 4 | 0.0197 | 0.0012 | 0.0135 | – |
| Burn category 5 | 3.3e-05 | 4.3e-06 | 8.3e-06 | 0.1620 |

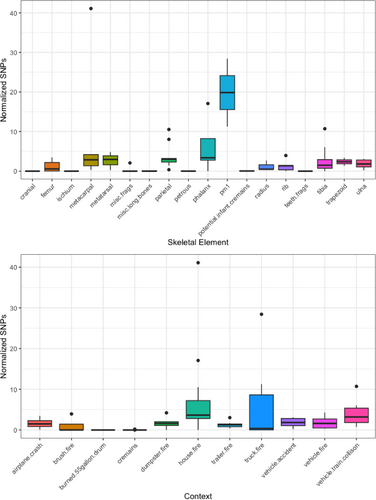

3.5 Sequence variation and variant detection by context and skeletal element

Bone and tooth sub-samples derive from a variety of skeletal elements and forensic contexts, and both variables contribute variation in the number of normalized filtered and deduplicated mapped reads and of variants called using GATK. The highest numbers of SNPs per mg of bone were called from phalanx and first premolar sub-samples (Figure 8, top) and from skeletal remains recovered from house and vehicle (i.e., truck) fires, while the lowest numbers were obtained from cranial fragments, the ischium (pelvis), unidentified and miscellaneous bone fragments, miscellaneous long bone fragments, segments of petrous bone, infant cremains, and tooth fragments. By context, the lowest normalized SNP counts came from burned remains recovered from a 55-gallon (208.2 L) drum, cremations, a dumpster fire, and a trailer fire (Figure 8). The overwhelming majority of "miscellaneous" and fragmented remains derive from the "cremains" group, so it is not surprising that these skeletal elements could not be identified, nor that they generated the least enriched genomic data (see Appendix S1, Code Blocks 1 and 2, for the grouped mean normalized SNP counts by skeletal element and context). SNPs called from the cremains "miscellaneous fragments" group approach significance when compared with those obtained from the tibia (p-value = 0.06), differ significantly from the normalized SNP data obtained from parietal sections (p-value = 0.04), and reach the threshold of significance when compared with sections of the metacarpals (p-value = 0.05) (Appendix S1, Figure S10). Likewise, the SNP data by context show significant pairwise differences between the remains from the 55-gallon drum and the cremains and all other context categories, except the truck fire remains, for which we recorded the second-highest mean number of SNPs per mg of bone (6.74) (see Appendix S1, Code Block 2; Figure S11).

3.6 Mixed-effects modeling

Mixed-effects models are statistical models that include both fixed and random effects. They are useful in a variety of contexts, particularly when data are collected from hierarchical or grouped structures. Normalized SNP counts obtained via GATK were modeled with a generalized linear mixed-effects model using the lme4 package version 1.1 [95] in R version 4.3.0. The data were fitted with a negative binomial distribution, to capture the right-skewed distribution of the counts, using the glmer.nb() function from lme4. This function uses the theta.ml function from the MASS package version 7.3 [96] to estimate the overdispersion parameter of the distribution (theta) by maximum likelihood. The negative binomial can be thought of as an extension of the Poisson distribution for modeling counts when the data are overdispersed (i.e., the variance is greater than the mean). The main predictors in the model were burn category (categorical, 1–5) and extraction protocol (binary, coded as Dabney or Loreille). Random intercepts were fit to capture variability in normalized SNP counts that is potentially due to inherent differences in DNA preservation among types of skeletal elements. To avoid overestimating variability in the outcome due to element type, skeletal elements were first consolidated into the following categories: long bones (all complete and fragmentary long bones), hand/foot tubular bones (e.g., metacarpals, metatarsals, and phalanges, but not carpals/tarsals), dental (complete and fragmentary teeth), dense bones (e.g., ischium, carpals, petrous portions), ribs, cranium, cremains, and miscellaneous other fragments. Relative to a fixed-effects-only model, SNP values predicted from the mixed-effects model reflect some of the variability in SNP values that is due to factors other than burn category.
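To make the overdispersion argument concrete, the sketch below assumes the NB2 parameterization used by MASS, in which a count with mean μ has variance μ + μ²/θ, so that a smaller θ means stronger overdispersion and θ → ∞ recovers the Poisson. Note that it swaps in a simple method-of-moments estimate of θ in place of the maximum-likelihood estimate that glmer.nb() obtains via theta.ml; the counts are invented placeholders.

```python
from statistics import mean, variance


def nb2_variance(mu: float, theta: float) -> float:
    """Variance of a negative binomial (NB2) count with mean mu and dispersion theta."""
    return mu + mu * mu / theta


def moment_theta(counts) -> float:
    """Method-of-moments estimate of theta: mean^2 / (variance - mean).

    A rough stand-in for the maximum-likelihood estimate; it is only defined
    when the sample variance exceeds the mean (i.e., the counts are overdispersed).
    """
    mu = mean(counts)
    s2 = variance(counts)  # sample variance, n - 1 denominator
    if s2 <= mu:
        raise ValueError("variance <= mean: no overdispersion, a Poisson model may suffice")
    return mu * mu / (s2 - mu)


# Hypothetical, right-skewed SNP counts (placeholders, not study data)
counts = [0, 1, 2, 3, 5, 8, 13, 21, 40, 90]
mu, s2 = mean(counts), variance(counts)
theta = moment_theta(counts)
print(f"mean={mu:.1f} variance={s2:.1f} theta~{theta:.3f}")
print(f"Poisson variance would be {mu:.1f}; NB2 variance is {nb2_variance(mu, theta):.1f}")
```

With these placeholder counts the sample variance is roughly 43 times the mean, the kind of overdispersion that makes a Poisson model inappropriate and motivates the negative binomial.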

The resulting model (Table 5) found significant differences between the baseline (burn category 1) and burn categories 4 and 5. Burn category 2 showed a non-significant increase in normalized SNPs (incidence rate ratio [IRR]: 1.68, 95% CI: 0.94–3.01), while burn categories 3–5 showed decreased normalized SNP counts relative to baseline (IRRs: 0.68 [0.37–1.27], 0.19 [0.06–0.60], and 0.00 [0.00–0.97], respectively), although the decrease was not significant for burn category 3. The model predicted a mean decrease in SNP recovery associated with the Loreille extraction protocol relative to the Dabney protocol, although the relationship was not statistically significant (IRR: 0.69, 95% CI: 0.44–1.06). These results suggest that, on average, bones burned to temperatures consistent with category 2 showed a 68% (non-significant) increase in normalized SNP counts relative to those burned to temperatures consistent with category 1, whereas bones burned to temperatures consistent with categories 3, 4, and 5 showed average reductions of 32%, 81%, and more than 99%, respectively. The intraclass correlation coefficient (ICC), calculated as the variance associated with the random intercept (τ00 in Table 5) divided by the sum of the random-intercept and residual variances (τ00 + σ² in Table 5), is 0.51 (0.79/1.56), or 51%. This suggests that while burn category and extraction protocol explain part of the variance in SNP counts, other factors not captured by the fixed effects are also important.
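Because glmer.nb() uses a log link by default, each exponentiated coefficient is an incidence rate ratio, and (IRR − 1) × 100 gives the implied percent change in the expected count. The short Python check below applies that conversion to the rounded point estimates reported in Table 5 and recomputes the ICC from τ00 and σ². It is an arithmetic illustration, not part of the original analysis; burn category 5 is omitted because its IRR rounds to 0.00.

```python
def percent_change(irr: float) -> float:
    """Percent change in the expected count implied by an incidence rate ratio."""
    return (irr - 1.0) * 100.0


def adjusted_icc(tau00: float, sigma2: float) -> float:
    """Random-intercept variance divided by random-intercept plus residual variance."""
    return tau00 / (tau00 + sigma2)


# Point estimates (rounded to two decimals) and variance components from Table 5
irrs = {
    "Burn category 2": 1.68,
    "Burn category 3": 0.68,
    "Burn category 4": 0.19,
    "Loreille extraction": 0.69,
}
for term, irr in irrs.items():
    print(f"{term}: {percent_change(irr):+.0f}%")
print(f"ICC = {adjusted_icc(tau00=0.79, sigma2=0.77):.2f}")
```

Because the IRRs are rounded, the percentages are approximate; the 0.68 and 0.19 ratios correspond to reductions of about 32% and 81%.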

| Predictors | Incidence rate ratio | 95% CI | p |
|---|---|---|---|
| Intercept | 2.90 | 1.20–7.01 | 0.018 |
| Burn category 2 | 1.68 | 0.94–3.01 | 0.079 |
| Burn category 3 | 0.68 | 0.37–1.27 | 0.228 |
| Burn category 4 | 0.19 | 0.06–0.60 | 0.005 |
| Burn category 5 | 0.00 | 0.00–0.97 | 0.049 |
| Loreille extraction | 0.69 | 0.44–1.06 | 0.090 |
| Random effects | | | |
| σ² | 0.77 | | |
| τ00 (skeletal element) | 0.79 | | |
| ICC | 0.51 | | |
| N (skeletal element) | 8 | | |
| Observations | 107 | | |
| Marginal R²/Conditional R² | 0.793/0.898 | | |

The Loreille extraction method shows a lower incidence rate ratio (0.69) relative to the Dabney baseline and is associated with fewer SNPs than Dabney-extracted libraries, although this difference is not statistically significant (p-value = 0.090). The ICC of 0.51 suggests that about 51% of the variability in normalized SNP counts that is not explained by the fixed effects is due to differences between types of skeletal elements; together with the total normalized SNPs per skeletal element, this suggests that long bones, hand and foot bones, and teeth are better reservoirs of genomic DNA. Finally, the marginal R² of 0.793 indicates that around 79.3% of the variance in the outcome can be explained by the fixed effects in the model, and the conditional R² of 0.898 indicates that, when both fixed and random effects are considered, the model explains around 89.8% of the variance in the outcome (Table 5).
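Assuming the marginal and conditional R² values follow the Nakagawa and Schielzeth definitions (an assumption; the software used to compute them is not named here), R²m = σf²/V and R²c = (σf² + τ00)/V, where V = σf² + τ00 + σ². The Python sketch below backs out the variance components implied by the rounded values in Table 5. Under these assumptions the implied residual variance (about 0.77) matches the reported σ², the skeletal-element intercept accounts for roughly 10.5% of the total variance (R²c − R²m), and the 51% ICC describes its share of the variance that the fixed effects leave unexplained.

```python
def implied_components(r2_marginal: float, r2_conditional: float, tau00: float):
    """Back out variance components implied by Nakagawa-style R^2 values.

    Assumes R2m = vf / V and R2c = (vf + tau00) / V, where V = vf + tau00 + residual,
    so that R2c - R2m = tau00 / V.
    """
    total = tau00 / (r2_conditional - r2_marginal)
    fixed = r2_marginal * total           # variance attributed to fixed effects
    residual = total - fixed - tau00      # implied distribution-specific variance
    return total, fixed, residual


total, fixed, residual = implied_components(0.793, 0.898, tau00=0.79)
print(f"total={total:.2f} fixed={fixed:.2f} residual={residual:.2f}")
print(f"skeletal-element share of total variance: {0.79 / total:.3f}")
print(f"skeletal-element share of non-fixed variance: {0.79 / (0.79 + residual):.2f}")
```

Since all inputs are rounded to two or three decimals, the recovered components are approximate rather than exact.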

3.7 Y-chromosome haplogroup and sex estimation

To examine the performance of SNP recovery with the panel, we analyzed Y-chromosome SNPs and determined haplogroups for 53 of 86 sub-sampled libraries (i.e., across burn categories 1–5) and across both extraction types (i.e., 29 Dabney- and 24 Loreille-extracted libraries) (Appendix S2, Table S9; Appendix S3). From these data, we estimated that 12 individuals were male (M), 6 were female (F), and 6 were undetermined (U), while 3 individuals failed to produce data for ancestry and sex estimation (Table 6). Ancestry-informative SNPs (AISNPs) were obtained using the CLC Genomics Workbench v12.0.3 (Qiagen) and both the Seldin (128 AISNPs) and Kidd (55 AISNPs) panels, which surveyed 131 and 161 populations, respectively (see Appendix S2, Table S9, for AISNP data for the Seldin and Kidd panels) [91-94]. Table 6 summarizes the Y-chromosome haplogroups inferred for each individual (with concordant haplogroups across sub-sampled replicates), as well as the mtDNA haplogroups obtained from the same libraries (where possible) and published in Emery et al. [89].

| Sample ID | mtDNA haplogroup (Emery et al. [89]) | Y haplogroup | Sex estimation |
|---|---|---|---|
| 01 | H1ak | N/A | N/A |
| 02 | A2c | Q1b1a1a | M |
| 03 | H1ak | R1a1a1b1a2b3a4 | M |
| 04 | N/A | N/A | U |
| 05 | N/A | N/A | U |
| 06 | K2a8 | N/A | F |
| 07 | H1b1 | R1b1a1b1a1a2 | M |
| 08 | H5a1 | N/A | F |
| 09 | H5a1 | R1b1a1b1a1a2b1a1 | M |
| 10 | T2c1d1 | N/A | F |
| 11 | L2a1+143+16189 (16192)+@16309 | I2a1b2a | M |
| 12 | K2a8 | N/A | F |
| 13 | T2b13 | N/A | F |
| 14 | N/A | N/A | U |
| 15 | N/A | N/A | N/A |
| 16 | N/A | N/A | M |
| 17 | N/A | N/A | U |
| 18 | N/A | N/A | U |
| 19 | J1c3 | R1b1a1b1a1a2a1b2 | M |
| 20 | T2b13 | N/A | U |
| 21 | C4 | I1a1b1a1b3a | M |
| 22 | A2 | E1b1a1a1a1c1a1 | M |
| 23 | H5b1 | I1a1a1a1a3 | M |
| 24 | U5a1b + 16362 | R1b1a1b1a1a2 | M |
| 25 | A2w1 | N/A | F |
| 27 | V3 | R1b1a1b1a1a2c1a4a1 | M |

- Note: Individuals identified as male, female, and undetermined are denoted as M, F, and U, respectively. N/A denotes missing data.

Haplogroup calling determined Y lineages for 11 of the 12 males identified in our sample (Table 6), including major haplogroups associated with paternal origins in western Europe (R1b), eastern Europe and South Asia (R1a), Central Asia and the Americas (Q1b), southeastern Europe and the Balkans (I2a), northern Europe (I1a), and Africa and Asia (E1b) (see Appendix S3 for the refined Y haplogroup subclusters). No significant difference in the number of GATK-called SNPs was observed between extraction types (p-value = 0.88), and both extraction methods determined Y haplogroups in burn categories 1 (Dabney = 8; Loreille = 8), 2 (Dabney = 10; Loreille = 8), 3 (Dabney = 9; Loreille = 7), and 4 (Dabney = 1; Loreille = 2) using SNPs identified at a minimum depth of 4X. No Y haplogroups were determined for burn category 5 libraries (see Appendix S2, Figure S12).
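The haplogroup-calling software is not described in this section. Purely as a hypothetical illustration of how a 4X minimum depth can enter haplogroup assignment, the Python sketch below walks a toy phylogeny and returns the deepest node supported by a derived call at ≥4X with no confidently ancestral call on its path to the root; low-depth calls are ignored rather than treated as evidence. The tree, marker names, and call format are invented, and real Y-SNP trees are far larger.

```python
# Hypothetical toy phylogeny (child -> parent). Labels echo Table 6 prefixes,
# but the tree is heavily simplified and the marker names are invented.
PARENT = {"R1": "R", "R1a": "R1", "R1b": "R1", "I1": "I", "I2": "I"}
MARKER_TO_NODE = {
    "mkR": "R", "mkR1": "R1", "mkR1a": "R1a", "mkR1b": "R1b",
    "mkI": "I", "mkI1": "I1", "mkI2": "I2",
}
MIN_DEPTH = 4  # minimum read depth for a call to count (4X, as in the text)


def lineage(node):
    """Return the path from a node up to its root, node first."""
    path = [node]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def nodes_with_state(calls, state):
    """Nodes whose defining marker was called with `state` at >= MIN_DEPTH reads."""
    return {
        MARKER_TO_NODE[marker]
        for marker, (allele, depth) in calls.items()
        if marker in MARKER_TO_NODE and allele == state and depth >= MIN_DEPTH
    }


def assign_haplogroup(calls):
    """Deepest derived node with no confident ancestral call on its root path."""
    derived = nodes_with_state(calls, "derived")
    ancestral = nodes_with_state(calls, "ancestral")
    candidates = [n for n in derived if not ancestral.intersection(lineage(n))]
    # sorted() makes ties deterministic; max() keeps the first deepest node
    return max(sorted(candidates), key=lambda n: len(lineage(n)), default=None)


calls = {
    "mkR": ("derived", 6),
    "mkR1": ("derived", 2),     # below 4X: ignored, not treated as ancestral
    "mkR1b": ("derived", 5),
    "mkR1a": ("ancestral", 7),
    "mkI": ("ancestral", 9),
}
print(assign_haplogroup(calls))  # → R1b
```

Ignoring sub-threshold calls, instead of counting them against a lineage, tolerates the patchy coverage expected from degraded samples, while a confident ancestral call still vetoes a downstream assignment.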

4 DISCUSSION AND CONCLUSION

The identification of disaster victims, whether the disaster is caused by natural events, war, or terrorism, is often hindered by the effects of burning. In this study, we examined the impact of burning on the recovery of genome-wide SNPs. Our results suggest a high degree of variability driven predominantly by burn category and skeletal element, and not by extraction type or forensic context, although extreme environmental contexts, such as those found in crematoria, significantly lowered the probability of obtaining genomic DNA (Figure S10). Comparative analysis of the normalized genomic metrics suggests little overall difference between extraction methods. However, mixed-effects modeling provided additional information about DNA yield and preservation across burn categories. Specifically, we observed a significant difference in normalized SNP counts between burn categories 4 and 5 and the reference category (burn category 1), but not between burn category 1 and burn categories 2 and 3, which suggests that DNA yields decrease significantly between burn categories 3 and 4 and between 4 and 5. This finding is corroborated by the pairwise nonparametric Wilcoxon rank-sum analysis (see Table 4), the substantial drop in complete STR profiles documented in a previous study (Emery et al. [22]), and the significant decreases in normalized mapped reads and depth of coverage for mtDNA genomes (Emery et al. [89]). The degree of burning influenced the number of SNPs recovered; however, the model (Table 5) suggests that a substantial amount of variability remains unexplained, which might be due to unmeasured factors or inherent randomness. Some tissue types or specific elements may retain genomic DNA better after burning, but the low number of replicates per category in this study prevents further exploration. Future studies should explore a larger number of skeletal elements, with larger sample sizes per group, to determine the best candidates for genomic DNA retrieval.
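The pairwise nonparametric Wilcoxon rank-sum analysis cited above (Table 4) is not shown in this section. The Python sketch below illustrates one common form of the test: the normal approximation with average ranks for ties, a tie-corrected variance, and no continuity correction. The authors' software and settings are not stated here; for small samples without ties, R's wilcox.test() computes exact p-values by default, so its results can differ from this approximation. The input values are hypothetical placeholders.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum (Mann-Whitney U) test via the normal approximation.

    Tied values receive average ranks and the variance is tie-corrected; no
    continuity correction is applied. Returns (U for x, z, two-sided p).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    values = sorted(list(x) + list(y))
    rank_of, tie_term, i = {}, 0, 0
    while i < n:
        j = i
        while j + 1 < n and values[j + 1] == values[i]:
            j += 1
        rank_of[values[i]] = (i + j) / 2 + 1  # average of 1-based ranks i+1..j+1
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    u1 = sum(rank_of[v] for v in x) - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0:
        raise ValueError("all values are tied; the test is undefined")
    z = (u1 - mu) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, z, p


# Hypothetical normalized SNP counts (SNPs/mg) for two burn categories; placeholders only
cat3 = [4.2, 3.1, 5.0, 2.8, 3.9, 4.4]
cat4 = [0.6, 1.2, 0.3, 2.9, 0.8]
u, z, p = rank_sum_test(cat3, cat4)
print(f"U={u:.1f} z={z:.2f} p={p:.4f}")
```

A rank-based test makes no distributional assumption about the skewed SNP counts, which is why it pairs naturally with the count model above; with several pairwise comparisons, some form of multiple-testing adjustment would normally also be considered.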

Diagenetic analyses using Fourier-transform infrared spectroscopy, X-ray diffraction, and/or the histomorphology of mineral hydroxyapatite (HA) have identified four heat-induced alteration stages in bone: (1) dehydration through the loss of water and the breakdown of hydroxyl bonds, (2) organic decomposition through pyrolytic degradation, (3) inversion, or the removal of bone carbonate, and (4) fusion and ultimate melting of the HA crystalline structure [97-99]. These stages are likely linked to the loss of DNA at higher temperatures. For example, Carrol and Squires [97] note the substantial removal of collagen and carbon at temperatures >500°C and the complete loss and pyrolysis of organic material at 700°C. A similar pattern of degeneration was found in our SNP results: significant differences in the number of normalized mapped reads (p-value = 0.03), SNP counts (p-value = 0.015), and SNP depth of coverage (p-value = 0.013) were observed between burn categories 3 (300–350°C) and 4 (550–600°C), a trend consistent with stages 2 and 3 of heat-induced HA alteration (Tables 1–4). These results also align with our previous research [22, 89], in which we found a significant decrease in DNA yields, STR profiles, and the number of mapped reads and coverage of the mtDNA genome between burn categories 3 and 4 (that is, within the temperature range of 350–550°C). These patterns also corroborate the results of earlier experiments suggesting that DNA becomes irretrievable at temperatures exceeding 600°C for short periods of time or at 400°C if exposed for longer than 15 min [24, 40, 100]. However, the exposure time needed to degrade DNA to undetectable levels within this temperature range has not been established. A systematic, controlled incineration of cadaver tissues, followed by careful accessioning, sub-sampling, and NGS analyses, might provide a more accurate evaluation of these temperature ranges and determine the pyrolytic effects occurring between 400 and 550°C.

Y haplogroup classifications and sex estimations for 53 out of 86 sub-samples (from 27 individuals) were successful despite the challenges of obtaining high-quality DNA yields from thermally altered skeletal material. Three libraries in burn category 4 produced relatively high-coverage Y SNPs for haplogroup assignment: one extracted using the Dabney method (19D4), and two using the Loreille method (19L4 and 22L4). However, due to the small sample sizes in the higher burn categories, it is unknown whether the Dabney or Loreille extraction protocol is more effective at obtaining DNA for whole-genome SNP analysis at these higher temperatures.

Despite the rapid pace of innovation in real-time and rapid STR genotyping, such as the rapid in situ genotyping of disaster victims recovered from the California wildfires (circa 2018) and the recent wildfires on Maui, Hawaii [101, 102], a number of challenges for DNA analysis in burned contexts remain. The first is understanding the rate of DNA decomposition in both soft and hard tissues exposed to varying temperatures over different periods of time, as noted above. The second is standardizing burn category ranges and bone discoloration patterns so that they are informative about DNA preservation. Correlating these measures is important to forensic scientists and anthropologists who rely on these methods to make informed decisions about how to allocate limited financial and/or laboratory resources in disaster scenarios and forensic casework. Ideally, such a scheme could also integrate data derived from other techniques (such as Fourier-transform infrared spectroscopy, X-ray diffraction, or histomorphology) used to evaluate changes in the water, organic, and inorganic components of bone. Establishing a standardized burn category nomenclature and scheme would require a collaborative effort among bone biochemists, forensic anthropologists, and forensic DNA analysts. Such a standardized categorization would also require rigorous validation across a range of contexts and populations to ensure its robustness and reproducibility.

The third challenge is the availability of skeletal material for DNA extraction. One significant advantage of the Dabney extraction protocol over other bone DNA extraction protocols is the relatively low sample input it requires (i.e., 50–100 mg of pulverized bone). This key methodological difference is likely to improve DNA yields from lower burn categories (i.e., burn categories 1 and 2), where co-extracted undigested organic material and other inhibitors could hinder the release of DNA from the silica membrane of the spin column and reduce the efficiency of downstream library preparation and PCR [69, 103, 104]. This pattern was observed here with respect to DNA yield and the subsequent number of normalized mapped reads for eight mtDNA- and SNP-enriched libraries prepared from <100 mg of pulverized bone powder versus samples with >100 mg of bone powder [22, 89]. While this might be true for lower burn categories, it is unknown whether the amount of bone powder affects DNA yields at higher burn categories, where bone is dehydrated and shows significant organic degeneration through pyrolysis. In these circumstances (burn categories 4 and 5), larger amounts of bone powder may improve DNA yields only marginally. Further testing is required to determine the amount of bone needed to optimize DNA yield for samples subjected to temperatures >350°C. Additionally, the inversion stage (stage 3) of hydroxyapatite alteration, which loosely correlates with burn categories 3 and 4, is characterized by the loss of bone carbonate (CO3) and corresponds to the observed carbonized black and gray/blue discoloration of bone. Consequently, the initial demineralization and digestion step prior to DNA extraction typically yields dark, carbonized supernatants, which ultimately lead to discolored and potentially inhibited extracts. Implementing a modified Dabney extraction with an additional cold spin step, similar to that used in the sedimentary DNA cold spin extraction method developed by Murchie et al. [48, 105], may remove inhibitory pyrolyzed carbonates and thereby improve PCR amplification efficiency for samples burned at these higher temperatures.

Lastly, another challenge for DNA analysis is identifying the best targeted enrichment genomic SNP panels for forensic identification in degraded contexts. For instance, Arbor's community panel FORCE v.2 (FORensic Capture Enrichment), which comprises 5402 whole-genome SNPs, is designed specifically for use in forensic DNA identification [49]. These SNPs are linked to informative biomarkers useful for individual identification, ancestry, phenotypic expression, and blood type. Validated SNP panels and workflows are required in forensic DNA research; however, the FORCE v.2 panel was not used in this study since our research began prior to its publication [49]. Instead, a previously unpublished but forensically relevant SNP panel was tested. Future attempts to investigate the whole-genome SNP data obtained from burnt human remains should implement commercially available and validated panels to assess their performance for this context.

Integration of forensic DNA protocols with methods from related fields (e.g., ancient DNA [aDNA] research) may improve DNA quality and quantity for downstream PCR- and NGS-based analyses. Our research suggests that the choice of a suitable extraction method should be contextual. Access to resources (such as funding, sampling materials, instrumentation, and time), the amount of bone material recovered, the state of preservation prior to thermal alteration, and the degree of pyrolytic damage must be considered before experimentation begins. For example, where highly degraded skeletal material with low sample representation and/or little representative bone powder is available for processing, the Dabney extraction method may be more appropriate. However, where bone samples can provide ≥200 mg of pulverized material, are relatively well preserved, and STR markers are the intended target, the total demineralization protocol may provide higher yields (on average) at higher burn categories (e.g., Figure 4). Xavier et al. [103] rightly note that forensic laboratories will continue to use PCR- and capillary electrophoresis (CE)-based STR genotyping for identification for the foreseeable future, necessitating an extraction protocol that preserves longer DNA fragments for rapid STR-based CODIS analyses, such as the Loreille protocol [68, 103]. However, in forensic DNA cases where molecular fragments are known to be shorter, for example because of severe environmental damage (e.g., DNA fragmentation resulting from depurination), the Dabney protocol could be used to obtain these shorter targets, including mini-STRs, mtDNA, and genome-wide SNP data, to generate leads for identification. Additional testing of NGS-based library preparation methods and enrichment panels is needed to determine which methods work best for DNA analysis of burned remains. Future studies should also use larger sample sizes, particularly for higher burn categories, and increase the number of bone/tooth sub-samples per skeletal element to allow accurate statistical comparison.

Overall, the SNP data generated in this study confirm the findings about the effects of burning from previous mtDNA and STR analyses of these samples and complement genetic results from other studies investigating the preservation of bone biochemistry under extreme thermal conditions. While the probability of recovering adequate DNA yields at temperatures >550°C is low, obtaining genomic DNA and mtDNA from individuals exposed to lower temperatures over short periods of time is possible using current forensic and ancient DNA laboratory protocols. Continued study of the pyrolytic processes that lead to the total loss of DNA from human remains will provide further insight into how high temperatures affect DNA integrity.

ACKNOWLEDGMENTS

We would like to thank Dr. Abagail Breidenstein for their manuscript feedback and project consultation. The authors thank the dedicated scientists at the Maricopa Office of the Medical Examiner in Phoenix, Arizona, Daicel Arbor Biosciences (Ann Arbor, Michigan), and ASU's Genomics Core facilities.

FUNDING INFORMATION

This project was supported by Award No. 2016-DN-BX-0158, awarded by the National Institute of Justice, Office of Justice Programs, U.S. Department of Justice. The opinions, findings, and conclusions or recommendations expressed in this publication/program/exhibition are those of the author(s) and do not necessarily reflect those of the Department of Justice.

CONFLICT OF INTEREST STATEMENT

The authors have no conflicts of interest to declare.

DISCLAIMER

This publication is number 23.28 of the Laboratory Division of the Federal Bureau of Investigation. The names of commercial manufacturers are provided for identification purposes only, and inclusion does not imply endorsement by the FBI. The views expressed in this article are those of the authors and do not necessarily reflect the official policy or position of the FBI or US Government. One or more of the authors is a US Government employee and prepared this work as part of that person's official duties. Title 17 United States Code (USC) Section 105 provides that Copyright protection under this title is not available for any work of the United States Government. Title 17 USC Section 101 defines a US Government work as a work prepared by an employee of the United States Government as part of that person's official duties.