IFF: Identifying key residues in intrinsically disordered regions of proteins using machine learning

Abstract

Conserved residues in protein homolog sequence alignments are structurally or functionally important. For intrinsically disordered proteins or proteins with intrinsically disordered regions (IDRs), however, alignment often fails because they lack a steric structure to constrain evolution. Although sequences vary, the physicochemical features of IDRs may be preserved to maintain function. Therefore, a method to retrieve common IDR features may help identify functionally important residues. We applied unsupervised contrastive learning to train a model with self-attention neural networks on human IDR orthologs. Parameters in the model were trained to match sequences in ortholog pairs but not in other IDRs. The trained model successfully identifies previously reported critical residues from experimental studies, especially those with an overall pattern (e.g., multiple aromatic residues or charged blocks) rather than short motifs. This predictive model can be used to identify potentially important residues in other proteins, improving our understanding of their functions. The trained model can be run directly from the Jupyter Notebook in the GitHub repository using Binder (mybinder.org). The only required input is the primary sequence. The training scripts are available on GitHub (https://github.com/allmwh/IFF). The training datasets have been deposited in an Open Science Framework repository (https://osf.io/jk29b).

1 INTRODUCTION

The evolutionary history of DNA/RNA sequences and the proteins they encode can be revealed through multiple sequence alignment methods, enabling the identification of phylogenetic relationships. These methods have been used to identify our extinct Neanderthal and Denisovan cousins through DNA extracted from ancient bones (Green et al., 2010; Meyer et al., 2012), to discover the Archaea domain through prokaryotic ribosomal 16S RNA sequences (Woese and Fox, 1977), and to trace myoglobin and hemoglobin protein sequences back to their globin origins (Hardison, 2012; Suzuki and Imai, 1998). Protein structures also provide insights into protein evolution, as they can be conserved despite changes in the primary sequence. For example, the structural similarity between the motor domains of kinesin and myosin suggests that they have a common ancestor despite low sequence identity (Kull et al., 1996). The shape of a protein also influences its evolution and the conservation of functionally important residues. When conservation levels are mapped onto 3D structures, the most conserved residues are often found in key locations such as the folding core (Echave et al., 2016) or catalytic sites (Craik et al., 1987).

However, intrinsically disordered proteins (IDPs) or proteins with intrinsically disordered regions (IDRs), which are estimated to comprise approximately half of the eukaryotic proteome (Dunker et al., 2000), are not subject to the same structural constraints during evolution. As a result, the sequences of IDPs or IDRs vary more widely than those of their folded counterparts (see Figure S1 for an example). Although some structural evolutionary restraints still apply to some IDRs, especially those that undergo folding-upon-binding (Jemth et al., 2018; Karlsson et al., 2022), the evolution of IDRs is mainly constrained by function. One recently recognized function of IDRs is their ability to undergo liquid–liquid phase separation (LLPS) (Alberti et al., 2019; Alberti and Hyman, 2021). This mechanism contributes to the formation of membraneless organelles and explains the spatiotemporal control of many biochemical reactions within a cell (Banani et al., 2017; Shin and Brangwynne, 2017). The proteins within these condensates do not adopt specific conformations (i.e., they still behave like random coils) (Brady et al., 2017; Burke et al., 2015) and thus evolve without structural restraints. Although multiple sequence alignment may work in some instances (e.g., the aromatic residues in the IDRs of TDP-43 and FUS are conserved, highlighting their potential importance for LLPS [Ho and Huang, 2022]), most IDRs cannot be aligned, especially when there are sequence gaps between orthologs (Light et al., 2013).

The functionally important physicochemical properties of IDPs/IDRs encoded in their primary sequence may be retained during evolution. Aromatic residue patterns (Martin et al., 2020), prion-like amino acids (Patel et al., 2015), charged-residue blocks (Greig et al., 2020), and coiled-coil content (Fang et al., 2019) all contribute to LLPS, but these features cannot be revealed by sequence alignment. Multiple sequence alignment methods are, therefore, of limited use in identifying critical residues in IDRs. To overcome this challenge, we propose an unsupervised contrastive machine learning model trained using self-attention neural networks on human IDR orthologs. Our results show that the trained model “pays attention” to crucial residues or features within IDRs. We also provide online access to our model, which uses primary sequences as input.

2 METHODS

2.1 Training dataset preprocessing

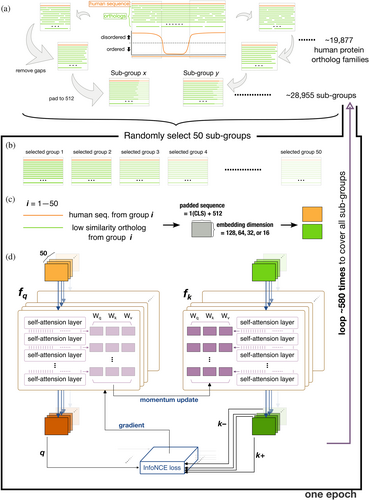

Human protein sequences were retrieved from UniProt (UniProt, 2019) and the corresponding orthologs were obtained from the Orthologous Matrix (OMA) database (Altenhoff et al., 2021). Chordate orthologs were aligned using Clustal Omega (Sievers et al., 2011). The PONDR (Romero et al., 1997) VSL2 algorithm was used to predict the IDRs of the human proteins and to define the boundaries of the aligned sequences (Figure 1a). Aligned regions were defined as subgroups. N-terminal methionines were removed to assist learning (methionine is encoded by the start codon in protein synthesis). After removing gaps within the aligned sequences, all sequences were padded to a length of 512 amino acids (repeating from the N-terminus; Figure 1a). The few sequences longer than 512 amino acids (56,086 out of 2,402,346, 2.3%) were truncated from the C-terminus. Padding or truncating every sequence to 512 residues gives the training dataset a uniform dimension that matches the model input size. Padding by repetition preserves the relative ordering of the original sequence, which can be beneficial for sequence classification tasks in which order is important. The training dataset thus consisted of 28,955 ortholog subgroups from 13,476 human protein families with IDRs longer than 40 amino acids.
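The padding and truncation rule described above can be illustrated with a minimal Python sketch. The function name `preprocess` is hypothetical and is not taken from the IFF repository. The sketch assumes that gaps are written as `-` and that the region passed in starts at the protein N-terminus, so that a leading methionine is the start-codon residue.

```python
MAX_LEN = 512  # fixed model input length stated in the text


def preprocess(aligned_region: str, length: int = MAX_LEN) -> str:
    """Return a gap-free sequence of exactly `length` residues."""
    seq = aligned_region.replace("-", "")  # drop alignment gaps
    if seq.startswith("M"):  # assumption: region begins at the protein N-terminus
        seq = seq[1:]
    if not seq:
        raise ValueError("no residues left after preprocessing")
    if len(seq) >= length:
        return seq[:length]  # truncate from the C-terminus
    repeats = -(-length // len(seq))  # ceiling division
    return (seq * repeats)[:length]  # pad by repeating from the N-terminus


if __name__ == "__main__":
    print(preprocess("MSG-YK", length=10))
```

With a target length of 10, the toy input `MSG-YK` becomes `SGYKSGYKSG`: the gap and the initial methionine are removed, and the remaining four residues are repeated in their original order until the target length is reached.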

Each training batch consisted of 50 randomly selected subgroups (Figure 1b). The human sequence from each subgroup was paired with one of its orthologs (one of the nonhuman sequences in the same subgroup, Figure 1c). The selection probability was weighted by the Levenshtein distance (Levenshtein, 1966) from the human sequence to favor low-similarity pairings. Figure S2 shows how different the sequences typically were in these ortholog subgroups, along with the corresponding selection probabilities. The most dissimilar sequences (high probability of being selected for training) in each ortholog group were also deposited in Open Science Framework. A classifier token (CLS) was added to the start of the selected sequences, and these were mapped to a matrix with an embedding dimension of 128 (embed_dim; Figure 1c). Each residue in the protein sequence was embedded as a numerical vector, adding a second dimension alongside the padded sequence length (512). We tested embedding sizes of 16, 32, 64, and 128; an embedding size of 128 was sufficient for training to converge.
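The ortholog selection step can be sketched as follows. The text states only that the selection probability is weighted by the Levenshtein distance. The sketch therefore assumes the simplest weighting, a probability directly proportional to the distance, which may differ from the scheme shown in Figure S2. The function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def levenshtein(a: str, b: str) -> int:
    """Edit distance computed by dynamic programming, one row at a time."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def selection_weights(human: str, orthologs: Sequence[str]) -> List[float]:
    """Assumed weighting: probability proportional to the edit distance."""
    distances = [levenshtein(human, o) for o in orthologs]
    total = sum(distances)
    if total == 0:  # every ortholog is identical to the human sequence
        return [1.0 / len(orthologs)] * len(orthologs)
    return [d / total for d in distances]


def pick_ortholog(human: str, orthologs: Sequence[str],
                  rng: Optional[random.Random] = None) -> str:
    """Draw one ortholog, favoring those least similar to the human sequence."""
    rng = rng or random.Random()
    weights = selection_weights(human, orthologs)
    return rng.choices(list(orthologs), weights=weights, k=1)[0]
```

Under this assumption, an ortholog identical to the human sequence is never drawn when more divergent orthologs are available, so each positive pair carries some sequence variation for the model to learn from.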

2.2 Training architecture

This scheme (Figure 1b–d) was repeated ~580 times to include all 28,955 subgroups in each training epoch. Training ran for 400 epochs, by which point the InfoNCE loss had sufficiently converged (Figure S3). After training, the model predicts an attention score for each residue in an input sequence. The attention score measures the importance or relevance that the model assigns to each amino acid position within a protein sequence, that is, how much the model focuses on that position when making predictions. A higher attention score suggests that the model considers that position to be more influential within the protein. The attention score represents the model's internal weighting and should be interpreted in terms of its impact on the model's predictions rather than linked directly to specific physical properties of the amino acids.
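For readers unfamiliar with the InfoNCE loss, the following minimal sketch shows its general form in plain Python. It is not the training code: the embeddings are toy vectors, the temperature value is a hypothetical choice, and the sketch computes the loss in one direction only, whereas MoCo-style training typically also uses a momentum encoder and a symmetrized loss.

```python
import math

# 28,955 subgroups in batches of 50 gives the ~580 iterations per epoch
BATCHES_PER_EPOCH = math.ceil(28_955 / 50)


def _dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def _normalize(v):
    norm = math.sqrt(_dot(v, v))
    return [x / norm for x in v]


def info_nce(queries, keys, temperature=0.2):
    """Mean InfoNCE loss; queries[i] and keys[i] form the positive pair.

    All other keys in the batch act as negatives for queries[i].
    The temperature is an assumed value, not one reported in the text.
    """
    q = [_normalize(v) for v in queries]
    k = [_normalize(v) for v in keys]
    losses = []
    for i, qi in enumerate(q):
        logits = [_dot(qi, kj) / temperature for kj in k]
        top = max(logits)  # subtract the maximum for numerical stability
        log_sum_exp = top + math.log(sum(math.exp(x - top) for x in logits))
        losses.append(log_sum_exp - logits[i])  # negative log-softmax of the positive
    return sum(losses) / len(losses)
```

In the setting described here, `queries[i]` would correspond to the embedding of a human IDR and `keys[i]` to that of its selected ortholog, with the other 49 orthologs in the batch serving as negatives. The loss is small when each human sequence is closer to its own ortholog than to the others.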

The model was built with PyTorch, and training was performed on an NVIDIA Tesla P100 GPU with 16 GB of memory.

3 RESULTS

3.1 The trained model attributes a high attention score to experimentally confirmed critical residues

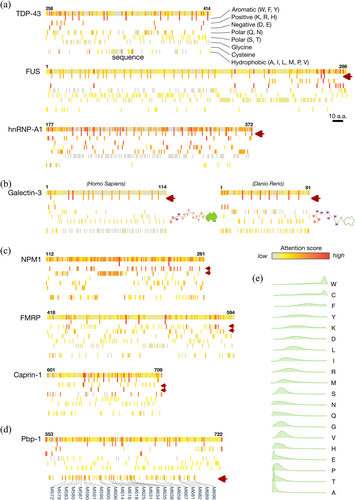

Studies have shown that the aromatic residues (phenylalanine, tyrosine, and tryptophan) in the IDRs of TDP-43 (Li et al., 2018b), FUS (Lin et al., 2017a) and hnRNP-A1 (Molliex et al., 2015) are critical for LLPS-related functions. These residues obtain a high attention score in our model (Figure 2a). The aromatic residues (two tryptophans and 10 tyrosines) in galectin-3 (Lin et al., 2017b) also score highly (Figure 2b, left panel). Interestingly, although a zebrafish galectin that has an IDR differs substantially in primary sequence from human galectin-3 (Supplementary Figure S4), its aromatic residues (mostly tryptophan instead of tyrosine) also have high attention scores (Figure 2b, right panel). Note that this zebrafish galectin was not in the OMA ortholog database used for training (OMA number: 854142). Charged residues (purple arrows in Figure 2c) reported to be associated with condensation in NPM1 (Mitrea et al., 2018), FMRP (Tsang et al., 2019), and Caprin1 (Wong et al., 2020) also obtain high attention scores (Figure 2c). Our model also assigns high attention scores to the methionines in Pbp-1 (labeled in Figure 2d; Pbp-1 is the yeast ortholog of human Ataxin-2), which have been shown to be critical for redox-sensitive regulation (Kato et al., 2019). Altogether, these results indicate that the trained model correctly identifies known key IDR residues.

3.2 Most amino acids have broadly distributed attention scores except tryptophan and cysteine, whose presence in IDRs hints at potential importance

Figure 2e compares the attention score distributions of the amino acids in human IDRs. The differences are striking, but the attention scores are not correlated with other physical properties, such as disorder/order propensity (Radivojac et al., 2007; Vihinen et al., 1994), prion-likeness (Lancaster et al., 2014), or prevalence in human IDRs (Figure S5). The attention scores of alanine are always low. Although poly-alanine promotes α-helix formation (Polling et al., 2015), which is known to contribute to IDR functions (Chiu et al., 2022; Conicella et al., 2020; Li et al., 2018a), our model ignores this amino acid. This is probably because α-helices are also promoted by other amino-acid types, such as leucine or methionine (Levitt, 1978; Pace and Scholtz, 1998), in different combinations not involving alanine. The training process did not include structure information, and thus structure-related sequence motifs could not be learned by our model. At the other end of the distribution, tryptophan and cysteine systematically obtain high attention scores. These structure-promoting amino acids rarely appear in unstructured regions (Radivojac et al., 2007; Uversky and Dunker, 2010; Vihinen et al., 1994); therefore, their appearance in IDRs hints at their potential importance. Although little is known about the role of cysteine in IDRs, its involvement in tuning structural flexibility and stability has been recently discussed (Bhopatkar et al., 2020), and our results may point to its currently overlooked importance in IDRs. Tryptophan residues, in contrast, are well known to act as LLPS-driving “stickers” in IDRs (Li et al., 2018b; Sheu-Gruttadauria and MacRae, 2018; Wang et al., 2018), and bioinformatic analysis shows that they may have evolved in the IDRs of specific proteins to assist LLPS (Ho and Huang, 2022).
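A per-residue-type comparison of this kind can be reproduced from any list of per-position scores. The sketch below is illustrative only: the function names are hypothetical, and the scores in the usage example are made-up numbers rather than model output.

```python
from collections import defaultdict
from statistics import median


def scores_by_residue(sequence, scores):
    """Group per-position attention scores by amino-acid type."""
    if len(sequence) != len(scores):
        raise ValueError("sequence and scores must have the same length")
    grouped = defaultdict(list)
    for residue, score in zip(sequence, scores):
        grouped[residue].append(score)
    return dict(grouped)


def summarize(grouped):
    """Return (residue, count, min, median, max) rows, highest median first."""
    rows = [(aa, len(v), min(v), median(v), max(v)) for aa, v in grouped.items()]
    return sorted(rows, key=lambda row: row[3], reverse=True)


if __name__ == "__main__":
    toy_scores = [0.9, 0.1, 0.3, 0.7, 0.2]  # invented values for illustration
    for row in summarize(scores_by_residue("WAGWA", toy_scores)):
        print(row)
```

Reporting the minimum, median, and maximum for each residue type, rather than the mean alone, makes it possible to see whether a residue type has a broad or narrow score distribution.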

The fact that most amino acids, including those highlighted in Figure 2a–d, have broad attention score distributions (Figure 2e) excludes the possibility that our model is biased toward particular amino-acid types rather than sequence content as a whole. Moreover, in the machine learning procedure, the protein sequences were embedded into higher-dimensional matrices (as sequences of digits; Figure 1c), and amino-acid type information was lost when the matrices were transformed into tensors along with the self-attention layers (Figure 1d). These results support the predictive ability of the trained model.

4 DISCUSSION

Genetic information, in the form of a linear sequence of nucleotides or amino acids, becomes more diverse over time. Comparing levels of diversity between different species reveals how closely related they are. In terms of amino acids, multiple sequence alignment not only highlights phylogenetic relationships between proteins but also facilitates homology modeling for structure prediction (Balakrishnan et al., 2011; Morcos et al., 2011; Weigt et al., 2009). Machine learning approaches have recently been used to incorporate information from evolution to train structure prediction models (AlQuraishi, 2019; Xu, 2019), and the highly accurate predictions from AlphaFold (Jumper et al., 2021) and RoseTTAFold (Baek et al., 2021) have revolutionized structural biology. In contrast, the structural conformations of IDRs lack a one-to-one correspondence with the primary sequence, and multiple sequence alignment often fails (Ho and Huang, 2022; Lindorff-Larsen and Kragelund, 2021). These limitations make IDR structural ensembles challenging to predict. A few attempts have been reported, such as using generative autoencoders to learn from short molecular dynamics simulations (Bhopatkar et al., 2020). The potential and challenges of machine learning in IDR ensemble prediction have also been discussed (Lindorff-Larsen and Kragelund, 2021).

Sequence pattern prediction faces similar challenges, including the lack of a sufficient stock of “ground-truth” data for training and validating models, comparable to image databases or the Protein Data Bank. Nevertheless, unsupervised learning architectures have been developed to train models without labeled datasets (Hinton and Sejnowski, 1999), and this type of approach is particularly well-suited for IDRs. For instance, Saar et al. (2021) used a language-model-based classifier to predict whether IDRs undergo LLPS. Moses and coworkers pioneered the use of unsupervised contrastive learning, using protein orthologs as augmentation (Lu et al., 2020), to train their model to identify IDR characteristics (Lu et al., 2022). While we also used ortholog sequences as training data, our approach differs in several key ways. We used self-attention networks, rather than convolutional neural networks, to capture the distal features in the entire protein sequence. Additionally, we trained our model using the latest contrastive learning architecture (MoCo v3), which greatly reduces memory usage for larger batches and enhances efficiency. In contrast to other masked language models (Brandes et al., 2022; Elnaggar et al., 2022; Rives et al., 2021), our approach is the first, to the best of our knowledge, to combine contrastive learning and self-attention in extracting features using natural language processing for protein sequence analysis. Furthermore, our trained model directly “pays attention” to potentially critical residues in the entire sequence, rather than mapping the primary sequence to learned motifs (Lu et al., 2022).

Our research investigates the viability and potential of using contrastive learning and self-attention networks to identify features within proteins' IDRs. Our tool offers a convenient resource for biochemists and cell biologists to identify overall features in an IDR sequence, such as a predominance of aromatic residues or blocks of charged residues (Figure 2). Moreover, our model provides intuitive results highlighting potentially important residues for researchers to target in mutagenesis or truncation experiments. Nevertheless, we are aware that the predictive capacity of our approach could be enhanced through the use of larger training datasets, including nonhuman orthologs, or by using increased computational resources, such as additional GPUs, to enable training with larger batch sizes.

We have created online access to our model, IFF (IDP Feature Finder), which only requires a primary sequence or UniProt ID as input. We expect our program to be useful in various research fields, notably cell biology, to efficiently identify critical residues in proteins with IDRs, such as those that undergo LLPS.

ACKNOWLEDGMENTS

The authors thank the IT Service Center at NYCU for GPU access. This work was supported by the National Science and Technology Council of Taiwan (110-2113-M-A49A-504-MY3). The authors are also grateful to Prof. Wen-Shyong Tzou (National Taiwan Ocean University) for his help.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.