Validation of the LEOSound® monitor for standardized detection of wheezing and cough in children

Abstract

Background

A hallmark of many respiratory conditions is the presence of nocturnal symptoms. Nevertheless, there is still a considerable diagnostic gap in detecting nighttime symptoms, especially in children, which leads to an underestimation of their frequency in clinical practice.

Methods

We evaluated the clinical applicability and determined the formal test characteristics of the LEOSound® system, a device for digital long-term recording and automated detection of acoustic airway events. Airway sounds were recorded overnight in 115 children and adolescents (1–17 years) with and without respiratory conditions. The automated classification of “cough” and “wheezing” was subsequently validated against manual acoustic reassessment by an expert physician.

Results

The general acceptance was good across all age groups, and a technically successful recording was obtained in 98 children, corresponding to 92,976 sound epochs (30 s each) or a total of 774 h of lung sounds. We found a sensitivity of 89% and a specificity of 99% for the automated detection of cough. For detection of wheezing, sensitivity and specificity were both 98%. The cough index and the wheeze index (positive epochs per hour) of individual patients showed a strong positive correlation between the two assessment methods (cough: rS = 0.85, wheeze: rS = 0.95) and sufficient agreement in the Bland–Altman analysis.

Conclusion

Our data show that the LEOSound® is a suitable device for standardized detection of cough and wheezing and hence a promising diagnostic tool to detect nocturnal respiratory symptoms, especially in children. However, a validation process to reduce false positive classifications is essential in clinical routine use.

1 INTRODUCTION

Asthma, one of the most frequent chronic diseases in childhood,1 displays circadian variation, with worsening during the night.2 Chronobiologically determined variations in airway physiology, controlled by central zeitgebers, contribute to the nocturnal character of asthma and lead to a nadir of bronchial caliber in the early morning hours.3 In addition, circadian oscillation in the expression of multiple genes that influence lung physiology (e.g., the β2-adrenergic receptor)4 and variations in the local inflammatory response contribute to the nocturnal aggravation of asthma activity (reviewed in5).

The frequency of nocturnal asthma symptoms is usually assessed by interview and parental questionnaires. However, self- and parental reports tend to underestimate children's nocturnal cough,6, 7 not least because cough peaks in the second half of the night, when parents are also asleep. Moreover, wheezing, the second cardinal acoustic symptom of asthma, completely evades self- and proxy assessment by questionnaire. Nevertheless, in most national and international asthma guidelines, the absence of nighttime symptoms in the patient's history is a pivotal criterion for classifying asthma as “controlled” and serves as important guidance for asthma therapy and modification of pharmacological treatment (Global Initiative for Asthma [GINA]: Global strategy for asthma management and prevention [2008]. http://www.ginasthma.com).8 However, there is currently a considerable diagnostic gap in the detection of nocturnal asthma symptoms, leading to a presumed underestimation of their frequency. This gap exists not only for asthma but also for a wide variety of respiratory conditions in which nocturnal symptoms play a relevant role. Therefore, numerous attempts have been made to develop technical devices for the objective detection and quantification of acoustic airway events (i.e., coughing and wheezing).9-13

The LEOSound®, a mobile device for detection, automated analysis, and software-based classification of acoustic airway events, is commercially available and licensed for use in children older than 24 months.14 While the first clinical assessments of the device were performed in adults,15-17 initial reports from our group and others describe the application of the LEOSound® in pediatric respiratory conditions.18-20 However, the formal test characteristics of the recording device and the automated classification software have not yet been systematically evaluated. The aim of the present study was to evaluate the applicability of the LEOSound® system for clinical use in children and to assess and validate the software algorithms for automated classification of coughing and wheezing.

2 MATERIALS AND METHODS

2.1 Patients

We included 115 children and adolescents (1–17 years) in the study. In all cases, the parents or caregivers consented to their child's participation. Patients were admitted to the inpatient ward of the Department of Pediatric Pulmonology at the University Children's Hospital Regensburg or to the Alpenklinik Santa Maria in Oberjoch. The recruitment strategy for the technical validation followed two aims: (i) We aimed to cover a broad age spectrum to assess the clinical applicability of the measurement device. Emphasis was placed on infants and preschoolers, since acceptance of the device was expected to be most critical in children under 5 years of age. (ii) Positive findings of varying intensity as well as negative (i.e., normal) examples were needed for the technical validation of the automated detection and analysis of lung sounds, because the software-based classification is best validated across the broadest possible spectrum of acoustic airway events (ranging from none to maximal). Therefore, we included children with asthma and obstructive bronchitis of all severity levels, patients with other respiratory diagnoses, and children with no airway symptoms at all. The patients’ characteristics are summarized in Table 1.

Table 1. Patient characteristics

| Characteristic | Category | n |
|---|---|---|
| Age | 1–3 years | 24 |
| | 4–6 years | 36 |
| | 7–11 years | 22 |
| | 12–17 years | 16 |
| Gender | Female | 39 |
| | Male | 59 |
| Diagnosis | Asthma | 50 |
| | Bronchitis | 16 |
| | Pneumonia | 12 |
| | Other respiratory | 8 |
| | Nonrespiratory | 12 |

The study was approved by the Ethics Committee of the Philipps-University Marburg, Germany and the Ethics Committee of the University Regensburg, Germany.

2.2 LEOSound® monitor and application for recording of airway sounds

The LEOSound® monitor (Löwenstein Medical) works as a digital long-term stethoscope. Three bioacoustic microphones were attached to the patient's chest with self-adhesive annular patches and connected to a small handheld device, as previously described.14 During the measurement, lung sounds were recorded in epochs of 30 s each and saved to internal storage.

In the present validation study, lung sounds were recorded during the night, usually from 11 p.m. to 7 a.m., with minor variation depending on each child's individual bedtime. The LEOSound® device was prepared and attached to the patient by the same examiner (C.U.) throughout the study.

2.3 Automated analysis of acoustic airway events

After completion of the measurement, data were transferred to a personal computer and analyzed using the LEOSound Analyzer® software, version 2.0 patch 5 (as previously described14). The following settings were applied: in analogy to the common representation in polysomnography, each epoch consisted of a 30-s recording. An epoch was classified as a wheezing epoch if wheezing sounds were present during this 30-s time frame. Correspondingly, an epoch was classified as a cough epoch when at least one cough was detected. As the two can occur simultaneously, an epoch could be classified as both a wheezing and a cough epoch.
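The epoch rule can be illustrated with a short Python sketch. It assumes, hypothetically, that detected events are available as a list in which each event is reduced to its onset time (seconds from recording start) and its type; the `Event` class and `label_epochs` function are illustrative names and not part of the LEOSound Analyzer®. Reducing a wheeze to its onset is a simplification: a wheeze spanning two epochs would only flag the first. Because the cough and wheeze flags are set independently, one epoch can carry both labels.

```python
from dataclasses import dataclass

EPOCH_S = 30  # epoch length in seconds, as used in the study


@dataclass
class Event:
    """Hypothetical detected event: onset in seconds from recording start, and its type."""
    onset_s: float
    kind: str  # "cough" or "wheeze"


def label_epochs(events, duration_s):
    """Return one (cough, wheeze) flag pair per complete 30-s epoch.

    An epoch is cough-positive if at least one cough starts within it and
    wheeze-positive if any wheeze starts within it; both flags may be True.
    """
    n_epochs = int(duration_s // EPOCH_S)
    cough = [False] * n_epochs
    wheeze = [False] * n_epochs
    for ev in events:
        idx = int(ev.onset_s // EPOCH_S)
        if not 0 <= idx < n_epochs:
            continue  # onset outside the complete epochs: ignored
        if ev.kind == "cough":
            cough[idx] = True
        elif ev.kind == "wheeze":
            wheeze[idx] = True
    return list(zip(cough, wheeze))


# A cough and a wheeze in the first epoch, nothing in the second, a cough in the third
print(label_epochs([Event(5, "cough"), Event(12, "wheeze"), Event(65, "cough")], 90))
# [(True, True), (False, False), (True, False)]
```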

2.4 Expert reassessment and validation of automated classification

To validate the automated analysis and computerized classification, recordings were completely reassessed by an experienced pediatric pulmonologist who listened to all recorded acoustic events using high-fidelity headphones (Sennheiser). The possible validation results were defined as follows. True positive: acoustic event (wheezing or cough) correctly classified. True negative: absence of cough or wheezing correctly assigned. False positive: automated analysis detected cough or wheezing that the expert did not. False negative: automated analysis missed an acoustic event that the expert identified. The expert's classification was considered the gold standard against which the automated analysis was validated.
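The four validation outcomes amount to comparing two binary labels per epoch. A minimal Python sketch, assuming one boolean label per epoch from the software and one from the expert (the function names `outcome` and `tally` are hypothetical):

```python
from collections import Counter


def outcome(automated: bool, expert: bool) -> str:
    """Classify one epoch against the expert label (gold standard)."""
    if automated and expert:
        return "TP"  # event correctly classified
    if not automated and not expert:
        return "TN"  # absence correctly assigned
    if automated:
        return "FP"  # software detected an event the expert did not
    return "FN"      # software missed an event the expert identified


def tally(automated_labels, expert_labels):
    """Count TP/TN/FP/FN over paired per-epoch labels."""
    if len(automated_labels) != len(expert_labels):
        raise ValueError("label sequences must cover the same epochs")
    counts = Counter(outcome(a, e) for a, e in zip(automated_labels, expert_labels))
    return {k: counts.get(k, 0) for k in ("TP", "TN", "FP", "FN")}


print(tally([True, True, False, False], [True, False, True, False]))
```

Running the tally once for the cough labels and once for the wheeze labels yields the two confusion matrices.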

2.5 Statistical analysis

Statistical analyses were performed using GraphPad Prism 6.07. Normality of distribution was assessed with the D'Agostino–Pearson omnibus normality test. The cough index (defined as cough epochs per hour) and the wheeze index (defined as wheeze epochs per hour) were calculated for each patient. The correlation between automated classification and expert assessment of acoustic events was quantified with Spearman's rank correlation coefficient. A least-squares model was used to fit the data in the nonlinear regression analysis. To assess the extent of agreement between the two classification methods (automated software and expert validation), a Bland–Altman analysis was performed,21 plotting the difference between the two methods against their mean. The performance of the automated classification was calculated from a conventional confusion matrix for binary classifiers, with the expert rating defining the true class: Sensitivity = n (true positive)/n (all expert-positive epochs). Specificity = n (true negative)/n (all expert-negative epochs). Accuracy = [n (true positive) + n (true negative)]/n (all epochs). False discovery rate = n (false positive)/n (all epochs classified positive by the software). Miss rate = n (false negative)/n (all expert-positive epochs) = 1 − sensitivity.
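The metric definitions can be checked against the counts in Table 2. The sketch below is illustrative (`binary_metrics` is a hypothetical name); note that the miss rate is computed over all expert-positive epochs, which is the denominator that reproduces the reported cough value of 0.103.

```python
def binary_metrics(tp, fp, fn, tn):
    """Confusion-matrix metrics; the expert rating defines the true class."""
    return {
        "sensitivity": tp / (tp + fn),           # TP / all expert-positive epochs
        "specificity": tn / (tn + fp),           # TN / all expert-negative epochs
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "false_discovery_rate": fp / (tp + fp),  # FP / all software-positive epochs
        "miss_rate": fn / (tp + fn),             # FN / all expert-positive = 1 - sensitivity
    }


# Cough counts from Table 2
cough = binary_metrics(tp=1147, fp=297, fn=131, tn=91401)
print({k: round(v, 3) for k, v in cough.items()})
# {'sensitivity': 0.897, 'specificity': 0.997, 'accuracy': 0.995, 'false_discovery_rate': 0.206, 'miss_rate': 0.103}
```

Passing the wheeze counts from Table 3 (4534, 1849, 111, 86,482) likewise reproduces the published wheeze values.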

3 RESULTS

3.1 Clinical applicability and acceptance

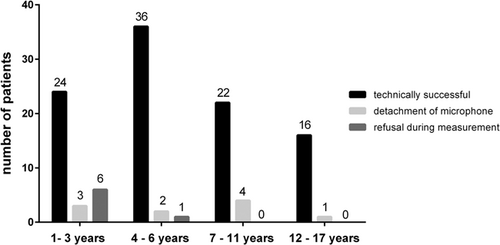

A technically correct measurement was completed in 98 of the 115 recruited children (Figure 1). In 10 cases, at least one of the bioacoustic microphones detached during the recording period. Seven parents withdrew their consent after the recording had begun, mainly because the child refused the microphone probes. This reason for drop-out was age-dependent, with a maximum in the infants’ group.

Only the data from the 98 technically correct recordings were included in the following validation analysis. We analyzed, reassessed, and validated a total of 92,976 epochs (30 s each), corresponding to 774 h of recorded lung sounds.
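The epoch count and the recording time are linked by simple arithmetic, which also gives the average recording length per child, consistent with the usual 11 p.m. to 7 a.m. recording window:

$$
92{,}976 \times 30\,\mathrm{s} = 2{,}789{,}280\,\mathrm{s} = \frac{2{,}789{,}280}{3600}\,\mathrm{h} = 774.8\,\mathrm{h}, \qquad \frac{774.8\,\mathrm{h}}{98} \approx 7.9\,\mathrm{h}\ \text{per recording}
$$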

3.2 Test characteristics of automated cough detection

To determine the test characteristics of the automated classification, we assessed its performance at the level of single sound epochs (n = 92,976). Each epoch served as the unit of binary classification and was labeled either cough positive or cough negative.

Out of the 92,976 analyzed lung sound epochs, the automated detection software classified 1444 as cough positive epochs (see confusion matrix in Table 2). In the manual reassessment by the expert physician, 297 of these epochs were revised and considered false positive, resulting in a false discovery rate of 20.6%. On the other hand, 131 cough epochs were missed by the automated detection (miss rate 10.3%). On the level of single epochs, the sensitivity for cough detection was 89.7% and the specificity 99.7%. With 1147 true positive and 91,401 true negative epochs, the accuracy was 99.5%.

Table 2. Confusion matrix for automated cough detection versus expert reassessment (number of sound epochs)

| Expert (rows) / Automated (columns) | Cough | No cough | Total |
|---|---|---|---|
| Cough | 1147 | 131 | 1278 |
| No cough | 297 | 91,401 | 91,698 |
| Total | 1444 | 91,532 | 92,976 |

| Metric | Value | Metric | Value |
|---|---|---|---|
| Sensitivity | 0.897 | False discovery rate | 0.206 |
| Specificity | 0.997 | Miss rate | 0.103 |
| Accuracy | 0.995 | | |

3.3 Test characteristics of automated wheeze detection

Analogously to cough detection, the test characteristics of the automated wheeze classification were determined at the level of single epochs (n = 92,976), each epoch being classified as either wheeze positive or wheeze negative. The automated detection software classified 6383 epochs (out of 92,976) as wheeze positive (see confusion matrix in Table 3). In the manual reassessment by the expert physician, 1849 of these epochs were revised and rated false positive, resulting in a false discovery rate of 29.0%. The main reasons for this strikingly high rate of false positive classifications are outlined below in the context of individual patients. With 111 wheeze epochs overlooked by the automated detection, the miss rate was 2.4%. At the level of single epochs, the sensitivity for wheeze detection was 97.6% and the specificity 97.9%. With 4534 true positive and 86,482 true negative epochs, the accuracy was also 97.9%.

Table 3. Confusion matrix for automated wheeze detection versus expert reassessment (number of sound epochs)

| Expert (rows) / Automated (columns) | Wheeze | No wheeze | Total |
|---|---|---|---|
| Wheeze | 4534 | 111 | 4645 |
| No wheeze | 1849 | 86,482 | 88,331 |
| Total | 6383 | 86,593 | 92,976 |

| Metric | Value | Metric | Value |
|---|---|---|---|
| Sensitivity | 0.976 | False discovery rate | 0.290 |
| Specificity | 0.979 | Miss rate | 0.024 |
| Accuracy | 0.979 | | |

3.4 Correlation between automated cough detection and manual reassessment on the level of individual patients

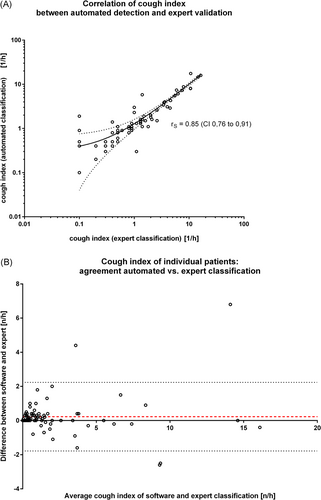

Whereas the pure test metrics of the automated classification software are best determined using the single sound epoch as the unit of binary classification, the clinician's focus is the individual patient. Therefore, we also analyzed the correlation between automated cough detection and manual reassessment at the level of the individual child (n = 98). Unlike the binary (0/1) classification of single epochs, this approach requires a continuous measure. Therefore, the cough index (defined as cough epochs per hour) was calculated for each patient.
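A minimal sketch of the index calculation in Python. It assumes that the denominator is the analysed recording time (number of epochs × 30 s); the text defines the index only as positive epochs per hour, so this choice of denominator is an assumption, as is the function name `event_index`. The same function serves for the cough and the wheeze index.

```python
EPOCH_S = 30  # seconds per epoch


def event_index(positive_flags):
    """Positive epochs per hour of analysed recording (cough or wheeze index).

    Assumption: the denominator is the analysed recording time,
    i.e. number of epochs x 30 s.
    """
    if not positive_flags:
        raise ValueError("no epochs to analyse")
    hours = len(positive_flags) * EPOCH_S / 3600
    return sum(positive_flags) / hours


# 8 h of recording (960 epochs) with 8 cough-positive epochs
print(event_index([True] * 8 + [False] * 952))  # 1.0
```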

While 30 of the 98 examined children did not cough at all during the recording (cough index = 0.00/h, expert classification), the cough index in the remaining 68 children ranged from 0.10/h to 16/h, with a median of 0.95/h (95% CI: 0.60–1.4, expert classification). The comparison between automated determination and expert validation showed a positive correlation of rS = 0.85 (95% CI: 0.76–0.91, Spearman rank correlation) (Figure 2A). However, a “good” correlation between two methods designed to determine the same parameter does not necessarily imply good agreement between them. Therefore, to further assess the extent of agreement between the two classification methods (automated software and expert validation), a Bland–Altman analysis was performed (Figure 2B). For the cough index, the mean bias of the automated classification against the gold standard (expert physician) was +0.226/h (95% limits of agreement −1.781 to 2.234).
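For readers who want to reproduce an agreement analysis of this kind outside GraphPad Prism, a sketch using Python's standard `statistics` module is shown below. It assumes the conventional 95% limits of agreement (bias ± 1.96 × SD of the paired differences) and uses a hypothetical function name; Spearman's rS, by contrast, is available in SciPy as `scipy.stats.spearmanr`.

```python
from statistics import mean, stdev


def bland_altman(automated, expert):
    """Bias and 95% limits of agreement for paired per-patient indices.

    Differences are automated minus expert, so a positive bias means the
    software reports higher indices than the expert.
    """
    if len(automated) != len(expert) or len(automated) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - e for a, e in zip(automated, expert)]
    averages = [(a + e) / 2 for a, e in zip(automated, expert)]  # x-axis of the plot
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return {"bias": bias,
            "limits": (bias - 1.96 * sd, bias + 1.96 * sd),
            "averages": averages,
            "differences": diffs}  # y-axis of the plot
```

Because the limits are symmetric around the bias, the bias always lies at their midpoint, which is a quick plausibility check for reported values.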

Without any threshold, the automated classification would have erroneously labeled 18 children as coughing at some time during the recording, whereas the expert reclassified them as not coughing at all, resulting in a calculated patient-level specificity of 40% and a sensitivity of 100%. As outlined in Section 4, determining a reasonable threshold above which the cough index is considered significant has a decisive clinical dimension and was beyond the scope of the present technical evaluation study. In terms of correct/incorrect classification by the automated software, introducing a threshold of ≥0.5/h (meaning that a child with a cough index ≥0.5/h was considered coughing) would have provided a specificity of 73% and a sensitivity of 98%, and a threshold of ≥1.0/h a specificity of 84% and a sensitivity of 97%.
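The effect of a threshold on patient-level classification can be sketched in Python as follows. This is one possible reading, labeled as an assumption: a child counts as expert-positive if the expert index is above zero, and the threshold is applied only to the automated index; the source does not spell out this detail. The function name and the index values in the example are hypothetical and merely shaped to match the reported no-threshold counts (68 coughing children all detected, 18 of 30 non-coughing children flagged); they are not study data, so the threshold result differs from the reported 73%.

```python
def patient_level(auto_idx, expert_idx, threshold=None):
    """Patient-level sensitivity and specificity of the automated index.

    Assumptions: a child is expert-positive if the expert index is > 0;
    the software calls a child positive if its index is > 0 (threshold=None)
    or >= threshold.
    """
    tp = fp = fn = tn = 0
    for a, e in zip(auto_idx, expert_idx):
        auto_pos = a > 0 if threshold is None else a >= threshold
        expert_pos = e > 0
        if auto_pos and expert_pos:
            tp += 1
        elif auto_pos:
            fp += 1
        elif expert_pos:
            fn += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need both expert-positive and expert-negative children")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical index values (per hour), not study data
auto = [2.0] * 68 + [0.3] * 18 + [0.0] * 12
expert = [2.0] * 68 + [0.0] * 30
print(patient_level(auto, expert))                 # (1.0, 0.4)
print(patient_level(auto, expert, threshold=0.5))  # (1.0, 1.0)
```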

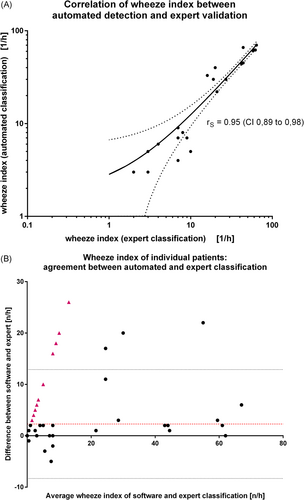

3.5 Correlation between automated wheeze detection and manual reassessment on the level of individual patients

The wheeze index (defined as wheeze epochs per hour) was calculated for each patient for a correlation analysis on the individual level. Wheezing was detected by the expert analysis in 26 of the 98 examined children, whereas 72 children did not wheeze at all during the recording. The wheeze index of the 26 children with wheezing ranged from 1.0/h to 64/h with a median of 13/h (95% CI: 7.0–42, expert classification). The comparison between automated determination and expert validation for the children with wheezing showed a positive correlation of rS = 0.95 (95% CI: 0.89–0.98, Spearman rank correlation) (Figure 3A). For the automated detection of wheezing, the Bland–Altman analysis of agreement between the two classification methods (expert validation as gold standard) showed a mean bias of +2.286/h (95% limits of agreement −8.320 to 12.89) (Figure 3B).

Analysis of the full audio raw data by the automated software without a threshold (i.e., any child with a wheeze index > 0 counted as wheezing), without time restriction, and without validation resulted in 25 children being labeled as wheezing who were classified as non-wheezing in the manual assessment. This corresponded to a false discovery rate of 51% and a specificity of 65%. The automated detection overlooked two of the 26 wheezing children, which led to a sensitivity of 92%. The retrospective validation identified two main groups of reasons for false positive classification. The first group comprised sounds of biological origin produced by the child, mainly speech and snoring. Talking before falling asleep, while the sound recording was already running, was especially often misinterpreted as wheezing owing to its musical sound character and contributed substantially to the false positive classifications. The second source of false positive ratings was external acoustic artifacts from technical devices, especially medical monitors or a television in the room.
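The patient-level wheeze figures follow directly from the counts reported above: 26 expert-positive children, 2 of them missed (24 true positives), and 72 expert-negative children, 25 of them flagged (47 true negatives):

$$
\text{Sensitivity} = \frac{24}{24 + 2} \approx 0.92, \qquad \text{Specificity} = \frac{47}{47 + 25} \approx 0.65, \qquad \text{FDR} = \frac{25}{24 + 25} \approx 0.51
$$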

4 DISCUSSION

The principal objective of our study was to evaluate the clinical applicability of the LEOSound®14 system for use in children and to validate its test characteristics regarding the detection and automated analysis of pathologic airway sounds. A total of 98 overnight recordings of children with and without respiratory conditions were analyzed and validated against the gold standard (manual acoustic reassessment by an expert physician), corresponding to 92,976 sound epochs (30 s each) or a total of 774 h of lung sounds. A limitation of our study was that measurements were performed under inpatient conditions, which possibly contributed additional ambient noise. We cannot exclude that the overall performance of the validated system would have been different, or presumably better, under quieter conditions at home.

The general acceptance of the bioacoustic microphone probes was good. Unsurprisingly, acceptance was age-dependent, being best in school children and teenagers (no refusals at all) and lower in preschoolers (refusal rate 3%) and infants (18%). Nevertheless, 73% of the youngest age group (1–3 years) accepted an overnight recording. We did not collect data on preferred or avoided sleep positions during the recording. However, as far as we know, no child complained of discomfort in any particular sleep position.

Regarding hardware aspects, we found that detachment of the microphones was mainly caused by a taut cable of the tracheal probe, which occurred when the child moved or turned during sleep. We therefore suggested modifying the cabling of the device by adding an extension cord for the tracheal probe. The manufacturer has since responded to this suggestion and now includes a 25-cm extension cable, which provides strain relief.

The automated sound analysis was evaluated manually to assess the test characteristics of the LEOSound® system. The fundamental unit of classification was a 30-s epoch, classified as either pathologic or non-pathologic. Our reassessment and validation of more than 90,000 epochs yielded a sensitivity of 89% and a specificity of 99% for automated cough detection. For wheezing, both sensitivity and specificity were 98%. The high specificities largely reflect the overwhelming proportion of true negative classifications (which is nevertheless also an important quality criterion for a diagnostic test). The false discovery rates of 21% for cough and 29% for wheezing point to a challenge in the routine clinical use of the automated device: our data show that applying it to a “real” patient and basing a decision on the results requires at least a basic validation process.
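The cough counts from Table 2 make this contrast concrete: the same 297 false positive epochs barely affect specificity, because they are divided by more than 91,000 expert-negative epochs, yet they make up about a fifth of all software-positive epochs:

$$
\text{Specificity}_{\text{cough}} = \frac{91{,}401}{91{,}401 + 297} \approx 0.997, \qquad \text{FDR}_{\text{cough}} = \frac{297}{1147 + 297} \approx 0.206
$$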

While considering single epochs is a sound approach for determining the test characteristics, the physician's focus is the individual patient. Unlike an epoch, which can be handled as a binary classifier, classifying a child as either “non-coughing” or “coughing” (irrespective of quantity) contradicts our clinical perception and does not help medical decision-making. Therefore, we defined a cough index as “cough epochs per hour”, calculated for each patient. For the 68 children who coughed during the night, the cough index showed large interindividual variation, ranging from 0.10/h to 16/h. The corresponding analysis showed a strong positive correlation of rS = 0.85 (95% CI: 0.76–0.91, Spearman rank) as well as good agreement of the two assessment methods in the Bland–Altman analysis. Analogously, a wheeze index (wheezing epochs per hour) was calculated for each patient; the corresponding correlation analysis showed an rS of 0.95 (95% CI: 0.89–0.98, Spearman rank) and good agreement in the Bland–Altman analysis. We conclude that the automated classification of cough and wheezing by the LEOSound® corresponds sufficiently to the expert validation.

Without a threshold, as in our technical evaluation study, the automated classification would have erroneously labeled some children as coughing at some time, although the expert reclassified the recording as not coughing at all. The cough index of these children was almost exclusively between 0.1 and 1.0/h, that is, in a very low range. For routine clinical use of the LEOSound® system, we suggest applying a threshold value above which the cough index or wheeze index is considered significant, which would markedly reduce false positive classifications. Determining such a threshold raises the question “How much coughing is normal?” and should be addressed in a future study with healthy children.

We consider that the relatively high false positive rates require at least a basic manual validation, and we suggest a two-step process. First, the automated analysis in the LEOSound Analyzer® should be limited to the child's actual sleeping time. As the measurement starts automatically at the pre-set time, a substantial period before sleep is often recorded, which is usually rich in speech and other external sound events (e.g., TV or MP3 player). Excluding the period before the individual “lights off”, as documented by the parents on a simple paper log, can easily be done in the LEOSound Analyzer® software. This reduces the false discovery rate and hence markedly improves the positive predictive value. In addition, parents should be advised to minimize external sound sources during the recording. The second step is to focus the validation on the remaining events classified as pathologic: each tagged audio event should be either confirmed or rejected by briefly listening to the recording. Our data show that in a routine clinical setting it is not necessary to search for overlooked events (as we did in this validation study). In our experience, the described validation process takes only a few minutes. A study by Lindenhofer et al. reported a mean duration of 14 min for audio validation of LEOSound® recordings.20
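The first validation step, restricting the analysis to the sleep period, can be sketched as a simple filter on per-epoch flags. This is a hypothetical preprocessing illustration, not the LEOSound Analyzer® function (which performs the exclusion through its own interface); the function name and the example times are assumptions. The kept flags could then be passed to an index calculation as usual.

```python
from datetime import datetime, timedelta

EPOCH = timedelta(seconds=30)


def restrict_to_sleep(flags, recording_start, lights_off, wake_up=None):
    """Keep only epochs starting at or after lights-off (and before wake-up, if given)."""
    kept = []
    for i, flag in enumerate(flags):
        epoch_start = recording_start + i * EPOCH
        if epoch_start < lights_off:
            continue  # pre-sleep period: speech, TV and other artifacts
        if wake_up is not None and epoch_start >= wake_up:
            break
        kept.append(flag)
    return kept


start = datetime(2020, 1, 1, 23, 0, 0)       # illustrative pre-set start time
lights_off = datetime(2020, 1, 1, 23, 1, 0)  # as noted on the parents' paper log
flags = [True, True, False, True, False]     # wheeze flags per 30-s epoch
print(restrict_to_sleep(flags, start, lights_off))  # [False, True, False]
```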

In summary, the data of our evaluation study show that the clinical application of the LEOSound® recorder is technically easy and that the general acceptance is good across all age groups from infants to teenagers. The analysis of the automated classification of cough and wheezing showed a strong correlation with the assessment by an expert and displayed sufficient test characteristics on the level of single sound events. To reduce false positive classifications of individual patients, a simple validation process for clinical routine is indispensable. The LEOSound® device is a promising tool for closing the diagnostic gap in detecting nocturnal respiratory symptoms in children.

CONFLICT OF INTERESTS

CU, AK, and MK declared no conflicts of interest. RC has received scientific advisory fees from Spiromedical Norway and GDS Medtech UK and accepted consultancy fees from Heinen und Löwenstein. CL and SK received lecture fees from Novartis GmbH, Heinen und Löwenstein, and Sanofi-Aventis Deutschland GmbH. CL has participated in advisory boards of Sanofi-Aventis Deutschland GmbH. AZ accepted consultancy fees from Novartis, Vertex Pharmaceuticals, Chiesi and Teva, and has been a speaker in a scientific symposium sponsored by Heinen und Löwenstein. Regarding the assessed device in the current paper, the authors have no conflict of interest.

AUTHOR CONTRIBUTIONS

Christof Urban: conceptualization (equal); formal analysis (equal); investigation (lead); validation (equal); writing review & editing (supporting). Alexander Kiefer: supervision (equal); writing review & editing (supporting). Regina Conradt: writing original draft (equal); writing review & editing (equal). Michael Kabesch: resources (equal); writing original draft (supporting); writing review & editing (supporting). Christiane Lex: methodology (supporting); writing original draft (supporting); writing review & editing (supporting). Angela Zacharasiewicz: methodology (equal); writing original draft (supporting); writing review & editing (supporting). Sebastian Kerzel: conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); project administration (equal); supervision (lead); validation (equal); writing original draft (lead); writing review & editing (lead).