Relaxation approach for optimization of free boundary problems

Abstract

We consider an optimal control problem (OCP) constrained by a free boundary problem (FBP). FBPs have various applications, for example in fluid dynamics, flow in porous media, or finance. In this work we study a model FBP given by a Poisson equation in the bulk and a Young-Laplace equation accounting for surface tension on the free boundary. Transforming this coupled system to a reference domain allows us to avoid dealing with shape derivatives. However, this results in highly nonlinear partial differential equation (PDE) coefficients, which make the OCP rather difficult to handle. Therefore, we present a new relaxation approach that introduces the free boundary as a new control variable and thereby transforms the original problem into a sequence of simpler optimization problems without a free boundary. In this paper, we formally derive the adjoint systems and show numerically that a solution of the original problem can indeed be approximated asymptotically in this way.

1 INTRODUCTION

Free boundary problems (FBPs) occur in various fields of application. These include, for example, solidification, technical textiles, or thin film manufacturing processes [1-3]. Phase separation and optimal control problems (OCPs) constrained by the Stefan problem have been widely studied [2, 4-6]. Many of these problems have in common that there is a relation between the surface tension and the curvature of the boundary [7, 8].

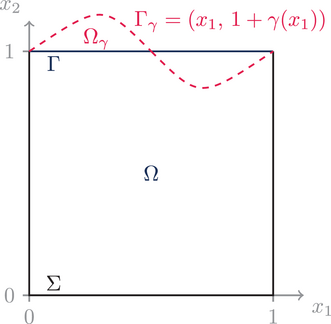

In this paper, we study a model FBP that is very similar to the one considered by Saavedra and Scott [9]. Analytical results such as existence and uniqueness of solutions, existence of a minimizer, and first and second order optimality conditions are shown by Antil et al. [10, 11]. Throughout this work we assume that the free boundary (dashed line) is represented as the graph of a function γ, cf. Figure 1. The model consists of two equations: a Poisson equation, which describes the quantity in the interior of the domain, and a Young-Laplace equation, which connects the surface tension to the curvature of the free boundary.

Free boundaries make the optimization highly complex and costly, particularly the derivation of the necessary optimality conditions and efficient numerical handling. When solving OCPs with FBPs, besides the state variables, the domain is also an unknown. To avoid having to deal with shape derivatives, we transform the FBP to a fixed domain. This is done at the expense of highly nonlinear PDE coefficients.

In this paper, we propose a relaxation-based approach that allows solving a sequence of simpler optimization problems instead of the optimization problem with the coupled constraint. Thereby we use the free boundary as a control, which is an idea already presented by Volkov [3, 12, 13].

This paper is organized as follows. In the remainder of this section we formulate the model FBP on a reference domain so that we do not have to deal with shape derivatives. We also state the nonlinear PDE constrained OCP and the relaxed OCP. Section 2 is devoted to the analysis of the well-posedness of the relaxed OCP and the formal derivation of the adjoint systems. The numerical solution of the OCPs based on a Broyden-Fletcher-Goldfarb-Shanno (BFGS) method is covered in Section 3. Here, we also show that the sequence of minimizers converges towards a minimizer of the original optimization problem as desired. We conclude in Section 4 with a summary and outlook.

1.1 Coupled state problem

We consider a model FBP on a domain similar to the problem analyzed by Antil et al. [10]. The difference is that we use distributed control, whereas their control variable u acts on the boundary. The top boundary of the physical domain is free and parametrized by γ, compare Figure 1. Throughout this work we require that γ is Lipschitz continuous with constant 1, that is, , which ensures that . The governing equations are given by a Poisson equation in the bulk and a Young-Laplace equation accounting for surface tension on the free boundary. In order not to deal with changes in the domain when we consider OCPs for the FBP, we transform to and to , compare ref. [10]. Consequently, the coefficients in our PDE are highly nonlinear. Further, we identify the function spaces on Γ and . Since we are only interested in small perturbations of the flat case , we assume, as in ref. [10], that the curvature can be linearized and that our control is scaled accordingly.
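The linearization of the curvature mentioned above can be made explicit with a standard identity. The following is a sketch under the assumption that γ is a twice differentiable function of a single variable; the symbol κ for the curvature is introduced here for illustration only and is not notation taken from the model equations.

```latex
\kappa(\gamma)
  = \left( \frac{\gamma'}{\sqrt{1 + (\gamma')^{2}}} \right)'
  = \frac{\gamma''}{\bigl(1 + (\gamma')^{2}\bigr)^{3/2}}
  \approx \gamma''
  \qquad \text{for } |\gamma'| \ll 1 .
```

For small perturbations of a flat boundary the slope γ' is small, so the denominator is close to one and the curvature reduces to the second derivative of γ.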

1.2 Optimal control problems

We want to optimize in a Hilbert space setting, so we consider the following spaces. Let , and . The reason for those high regularities is discussed later.

Graph as state variable

Graph as control variable

2 ANALYSIS

- (A1) the set of admissible controls or , respectively, is a weakly closed and convex subset of the control space,

- (A2) the desired potential is in , and the desired boundary in .

2.1 Well-posedness of ()

Due to the highly nonlinear coefficients in the PDE constraint of (), the following theorem provides the necessary existence and uniqueness of a solution y.

Theorem 2.1. (Existence and Uniqueness of forward problem) For all with and right-hand sides the PDE (1a)–(1b) has a unique solution .

Proof. The PDE (1a)–(1b) is uniformly elliptic, since is positive definite; compare ref. [9]. Clearly, is a convex, bounded, and open subset of and the right-hand side of the PDE is in by assumption. By Sobolev's embedding theorem [14, Thm. 5.4], we have

Remark 2.2. The high regularity of γ implies . Hence, the co-normal derivative along the boundary Γ is well-defined in ; see, for example, [16].

Theorem 2.3. (Existence of Minimizer) Let be fixed and assumptions (A1) and (A2) hold. Assume further that . With these assumptions there exists such that

The proof follows standard arguments; see, for example [17].

2.2 Formal Lagrange principle

Due to limited space, we only present the formal adjoint equations to (P0) and (). Assuming enough regularity to ensure Fréchet differentiability, we apply the Lagrangian multiplier technique, where r denotes the Lagrange multiplier for the equation constraint and s is the corresponding one for the additional boundary condition; see, for example [18] or [17].

3 NUMERICAL SOLUTION OF THE OCPs

Setting

The PDE constraints are solved with a finite element method (FEM) in the framework of FEniCS [19, 20]. For this purpose, the function spaces Y and U are discretized with continuous Galerkin (CG) elements of first order. The function space G is discretized with CG elements of second order [20].
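To illustrate what a first-order CG discretization amounts to, the following minimal sketch solves a one-dimensional Poisson problem with piecewise linear elements. It is a dependency-free toy and not the FEniCS setup used for the computations; the function name `solve_poisson_p1`, the unit interval, and the homogeneous Dirichlet conditions are assumptions made for the example.

```python
def solve_poisson_p1(n_cells, f=lambda x: 1.0):
    """Solve -u'' = f on (0, 1) with u(0) = u(1) = 0 using P1 (CG1) elements.

    Toy illustration only: uniform mesh, nodal quadrature for the load.
    Requires n_cells >= 2. Returns the nodal values including the boundary.
    """
    h = 1.0 / n_cells
    n = n_cells - 1  # number of interior nodes

    # P1 stiffness matrix on a uniform mesh: tridiagonal with
    # 2/h on the diagonal and -1/h on both off-diagonals.
    diag = [2.0 / h] * n
    off = [-1.0 / h] * (n - 1)

    # Load vector via nodal quadrature (exact for constant f).
    rhs = [h * f((i + 1) * h) for i in range(n)]

    # Thomas algorithm: forward elimination ...
    for i in range(1, n):
        w = off[i - 1] / diag[i - 1]
        diag[i] -= w * off[i - 1]
        rhs[i] -= w * rhs[i - 1]

    # ... followed by back substitution.
    u = [0.0] * n
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (rhs[i] - off[i] * u[i + 1]) / diag[i]

    return [0.0] + u + [0.0]


u_h = solve_poisson_p1(8)
```

For the constant right-hand side f = 1 the exact solution is u(x) = x(1 − x)/2, and in this one-dimensional setting the P1 nodal values coincide with it, which makes the sketch easy to check.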

The optimization procedure is initialized with and and stopped as soon as one of two termination conditions is met: either the relative gradient norm falls below a given tolerance, or the relative change of the controls between two iterations falls below a given tolerance. Numerical experiments showed the second criterion to be reasonable. The tolerances for both criteria are chosen as .
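The two-part termination test can be sketched as follows. This is a hypothetical helper, not the code used for the experiments: the names `l2_norm` and `should_stop`, the use of the Euclidean norm on coefficient vectors, and the tolerance passed in the usage line are assumptions.

```python
import math


def l2_norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def should_stop(grad, grad0, u_new, u_old, tol):
    """Return True if either termination condition holds.

    grad, grad0  -- current and initial gradient (coefficient vectors)
    u_new, u_old -- control after and before the current iteration
    tol          -- tolerance used for both conditions (assumed value)
    """
    tiny = 1e-300  # guards against division by zero, e.g. for a zero initial control

    # Condition 1: relative gradient norm below the tolerance.
    rel_grad = l2_norm(grad) / max(l2_norm(grad0), tiny)

    # Condition 2: relative change of the control below the tolerance.
    diff = [a - b for a, b in zip(u_new, u_old)]
    rel_change = l2_norm(diff) / max(l2_norm(u_old), tiny)

    return rel_grad < tol or rel_change < tol


# Usage with an illustrative tolerance of 1e-6:
stop_now = should_stop([1e-9], [1.0], [1.0], [0.0], 1e-6)
```

Combining the conditions with a logical "or" means the iteration also ends when the gradient is still moderately large but the controls have stagnated.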

Results

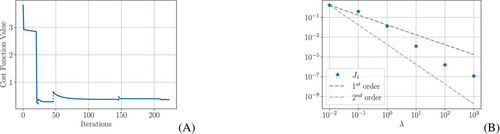

Repeatedly solving problem () for increasing values of λ eventually leads to a critical point at which the second equation of the coupled PDE problem (1) is also satisfied in the weak sense. The evolution of the cost functional can be seen in Figure 2. The jumps result from the term in the cost functional when λ is enlarged. Between two jumps we see the evolution of the cost functional while a critical point of () is computed for fixed λ. Next to the composite cost functional, one sees the decrease in the term J1, which essentially measures the square of the residual of the second equation of the coupled PDE. The relation between the increase in λ and the decrease in J1 is somewhat better than the linear rate we would expect. Note, however, that in ref. [22] it is shown for a different problem that the order of convergence can be better for solutions with higher regularity, which we might observe here as well. In our setting λ was increased from $10^{-2}$ to $10^{3}$.
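The continuation in λ can be illustrated on a scalar toy problem. The sketch below is not the PDE-constrained problem from this paper: the objective, the constraint y = g, and the names `solve_relaxed` and `continuation` are assumptions chosen so that every relaxed problem has a closed-form minimizer, which replaces the BFGS iteration used in the actual computations. The decay rate of the penalized residual in this toy is specific to the toy and says nothing about the rate observed in Figure 2.

```python
def solve_relaxed(lam):
    """Minimize 0.5*(y - 2)**2 + 0.5*g**2 + 0.5*lam*(y - g)**2 exactly.

    Stationarity gives the linear system
        (1 + lam) * y -       lam * g = 2
             -lam * y + (1 + lam) * g = 0
    which is solved here with Cramer's rule.
    """
    a, b = 1.0 + lam, -lam
    det = a * a - b * b  # equals 1 + 2*lam
    y = 2.0 * a / det
    g = -2.0 * b / det
    return y, g


def continuation(exponents=range(-2, 4)):
    """Solve the relaxed toy problem for lam = 10**-2, ..., 10**3.

    Returns a list of tuples (lam, y, g, j1), where j1 = (y - g)**2
    is the squared residual of the relaxed constraint y = g.
    """
    history = []
    for k in exponents:
        lam = 10.0 ** k
        y, g = solve_relaxed(lam)
        history.append((lam, y, g, (y - g) ** 2))
    return history


history = continuation()
```

The exactly constrained toy problem (y = g) has the minimizer y = g = 1. As λ grows, the relaxed minimizers approach this point and the squared residual decreases monotonically, which mirrors the qualitative behavior described above.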

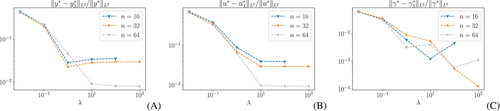

It remains to illustrate that the final minimizer of () converges towards the minimizer of (P0) for finer discretizations. For this purpose, the differences of the minimizers are calculated for and γ and plotted against the parameter λ for different grid sizes in Figure 3.

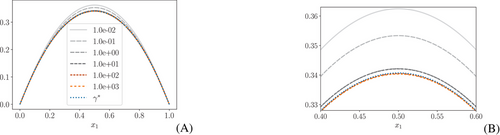

In Figure 4, different (interim) solutions for γ are shown, including the final critical point of () and various intermediate solutions for λ ranging from $10^{-2}$ to $10^{3}$. Next to it, a zoom into the interesting part at the center of the interval is given. In both plots the critical point of (P0) is shown as well.

4 CONCLUSION

We presented a highly nonlinear optimization problem for an FBP model that was transformed onto a reference domain, and we used a penalty method to relax the condition for the free boundary. First, the free boundary was treated as a state variable, while in the second approach it acts as a control variable. We stated results concerning the existence and uniqueness of solutions with regularity to the state equation in the relaxed case. Further, we discussed the well-posedness of the OCP for the relaxed problem, which required a careful regularity analysis of the co-normal derivative involving the highly nonlinear Nemytskii operator . For the numerical simulations, we formally calculated derivatives using the Lagrangian approach. Finally, we examined numerically the asymptotic behavior of the two OCPs for a reachable setting, underlining the feasibility of our approach.

ACKNOWLEDGMENTS

Open access funding enabled and organized by Projekt DEAL.