Cherry Picking with Synthetic Controls

Abstract

We evaluate whether a lack of guidance on how to choose the matching variables used in the Synthetic Control (SC) estimator creates specification-searching opportunities. We provide theoretical results showing that specification-searching opportunities are asymptotically irrelevant if we restrict to a subset of SC specifications. However, based on Monte Carlo simulations and simulations with real datasets, we show significant room for specification searching when the number of pre-treatment periods is in line with common SC applications, and when alternative specifications commonly used in SC applications are also considered. This suggests that such lack of guidance generates a substantial level of discretion in the choice of the comparison units in SC applications, undermining one of the advantages of the method. We provide recommendations to limit the possibilities for specification searching in the SC method. Finally, we analyze the possibilities for specification searching and provide our recommendations in a series of empirical applications.

INTRODUCTION

The synthetic control (SC) method has been recently proposed in a series of seminal papers by Abadie and Gardeazabal (2003) and Abadie, Diamond, and Hainmueller (2010, 2015) as an alternative method to estimate treatment effects in comparative case studies. Despite being relatively new, this method has been used in a wide range of applications in Political Science, Economics, and other Social Sciences.1 Athey and Imbens (2017) describe the SC method as arguably the most important innovation in the policy evaluation literature in the last fifteen years.

Abadie, Diamond, and Hainmueller (2010, 2015) describe many advantages of the SC estimator over techniques traditionally used in comparative studies. Among them, one important feature of the SC method is that it provides a transparent way to choose comparison units. In the SC method, a data-driven process is used to choose the weights that build the weighted-average of the controls’ outcomes that estimates the counterfactual for the treated unit. Also, since the estimation of the SC weights does not require access to post-intervention outcomes, researchers could decide on the study design without knowing how those decisions would affect the conclusions of their studies. Taken together, these features potentially make the SC method less susceptible to specification searching relative to alternative methods for comparative case studies. This could be an important advantage of the SC method, given the growing debate about transparency in social science research (e.g., Miguel et al., 2014).2

An important limitation of the SC method, however, is that there is no consensus on the choice of predictor variables and covariates that should be used to estimate the SC weights.3 Although Abadie, Diamond, and Hainmueller (2010) define vectors of linear combinations of pre-intervention outcomes that could be used as predictors, there is no specific recommendation about which variables should be used. Such lack of guidance on how to choose the predictors when implementing the synthetic control method translates into a wide variety of different specifications in empirical applications. If different specifications result in widely different choices of the SC unit, then a researcher would have relevant opportunities to select “statistically significant” specifications even when there is no effect. This flexibility may undermine one of the potential advantages of the SC method, as it essentially implies some discretionary power for the researcher to construct the counterfactual for the treated unit—and, therefore, the estimated treatment effects—by choosing which predictors to include, rather than having a purely data-driven process.4

In this paper, we investigate these opportunities for specification searching by considering only one particular step of the method: the choice of pre-treatment outcome lags used in the estimation of the SC weights.5 In the following section, we first provide conditions under which different SC specifications lead to asymptotically equivalent estimators when the number of pre-treatment periods (T0) goes to infinity and we restrict attention to specifications whose number of pre-treatment outcome lags used as predictors goes to infinity with T0. This equivalence result holds whether or not covariates are included as predictors. Under these conditions, we also show that the placebo test suggested by Abadie, Diamond, and Hainmueller (2010) asymptotically leads to the same conclusion regardless of the chosen specification. On the one hand, these results show that the SC method is robust to specification searching, provided we have a large number of pre-treatment periods and we restrict attention to a specific subset of specifications. This is an important feature of the SC estimator that is not generally shared by other methods. On the other hand, these results point out exactly when specification searching should be a problem in SC applications. First, many SC applications do not have a large enough number of pre-treatment periods to justify large-T0 asymptotics, as argued by Doudchenko and Imbens (2016), possibly leaving room for specification searching even if we restrict attention to this specific class of SC specifications. Moreover, there are common SC specifications whose number of included pre-treatment periods does not go to infinity, possibly leading to specification-searching opportunities even when the number of pre-treatment periods is large.

Guided by our theoretical results, we then measure the specification-searching opportunities in SC applications using Monte Carlo (MC) simulations in the third section, and placebo simulations with the Current Population Survey (CPS) in Appendix E.6 We calculate the probability that a researcher could find at least one specification such that she would reject the null using the test procedure proposed by Abadie, Diamond, and Hainmueller (2010), when the actual effect of the intervention is zero. If different SC specifications lead to similar SC estimators, then this probability would be close to 5 percent for a 5 percent significance level test, while it may be much higher than 5 percent if different SC specifications lead to wildly different estimates, implying that there is room for specification searching. We consider seven different specifications commonly used in SC applications.7

We find that the probability of detecting a false positive in at least one specification for a 5 percent significance test can be as high as 14 percent when there are 12 pre-treatment periods. The possibilities for specification searching remain high even when the number of pre-treatment periods is large. For example, with 400 pre-treatment periods—which is much longer than the usual SC application—we still find a probability of around 13 percent that at least one specification is significant at 5 percent. These results suggest that, even with a large number of pre-treatment periods, different specifications that are commonly used in SC applications can still lead to significantly different synthetic control units, generating substantial opportunities for specification searching. Given our theoretical results, it is expected that the significant specification-searching possibilities with a large T0 are driven by specifications that do not increase the number of pre-treatment lags used as predictors when the number of pre-treatment periods goes to infinity. Indeed, we find that excluding those specifications from the set of options strongly attenuates the specification-searching problem when T0 is large. However, we still find significant possibilities for specification searching for values of T0 commonly considered in SC applications, suggesting that reliable asymptotic approximations may require unrealistically long time series. We also show that specification searching may remain a problem even when we restrict the set of options to specifications with a good pre-treatment fit.

Since transparency in the choice of comparison units is one of the often-advocated advantages of the method (Abadie, Diamond, & Hainmueller, 2010, p. 494), our main conclusion is that such an advantage is weakened by a lack of consensus on which variables should be chosen as predictors to estimate the SC weights. If there were a consensus on how the SC specification should be selected, then the risk of p-hacking (at least in this dimension) would be limited. For this reason, we specifically recommend focusing on the specification that uses all the pre-treatment outcome lags as matching variables, unless there is a strong prior belief that it is crucial to balance on a specific set of covariates. We discuss this and other recommendations in the fourth section.

Finally, we also consider, in the fifth section, the possibilities for specification searching and the implementability of the above recommendations in two empirical applications based on Abadie, Diamond, and Hainmueller (2015) and Bartel et al. (2018). We find that different specifications can reach either significant or non-significant results, showing the potential for specification searching with synthetic controls. In Appendix F, we consider three more examples, two based on Smith (2015) and one based on Abadie, Diamond, and Hainmueller (2010).8 In the first example, the conclusions are robust to specification searching; in the second example, most specifications show insignificant effects, but it would be possible to find a few “statistically significant” specifications; and, in the third example, all results are significant, but at different significance levels. While in some of these empirical applications conclusions vary depending on the SC specification, we show that applying our recommendations from the fourth section to these empirical applications provides clear conclusions about the significance of these estimates.

Appendix A presents the formal theoretical results and proofs that guide our investigation about specification-searching opportunities. The code for all our simulations and empirical examples was made available by Ferman, Pinto, and Possebom (2020).

SYNTHETIC CONTROLS AND SPECIFICATION SEARCHING

Abadie and Gardeazabal (2003) and Abadie, Diamond, and Hainmueller (2010, 2015) have developed the Synthetic Control Method (SCM) in order to address counterfactual questions involving only one treated unit. This method uses a weighted average of control units and flexibly estimates treatment effects for each post-treatment period. Below, we explain the SCM following Abadie, Diamond, and Hainmueller (2010).

Suppose we observe data for  units during

units during  time periods and a treatment that affects only unit 1 from period

time periods and a treatment that affects only unit 1 from period  to period T uninterruptedly. Let

to period T uninterruptedly. Let  be the potential outcome that would be observed for unit j in period t if there were no treatment for

be the potential outcome that would be observed for unit j in period t if there were no treatment for  and

and  . Let

. Let  be the potential outcome under treatment. Define

be the potential outcome under treatment. Define  as the treatment effect and

as the treatment effect and  as the observed outcome.

as the observed outcome.

We aim to identify  . Since

. Since  is observable for

is observable for  , we only need to estimate the counterfactual

, we only need to estimate the counterfactual  to accomplish this goal.

to accomplish this goal.

Let  be the vector of observed outcomes for unit

be the vector of observed outcomes for unit  in the pre-treatment period and

in the pre-treatment period and  be a

be a  -vector of predictors of

-vector of predictors of  . Those predictors can be not only covariates that explain the outcome variable, but also linear combinations of the variables in

. Those predictors can be not only covariates that explain the outcome variable, but also linear combinations of the variables in  . Let also

. Let also  be a

be a  -matrix and

-matrix and  be a

be a  -matrix.

-matrix.

Given the choice of predictors in matrix  , the idea of the SC method is to construct the counterfactual for the treated unit using a weighted average of the control units,

, the idea of the SC method is to construct the counterfactual for the treated unit using a weighted average of the control units,  .

.

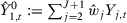

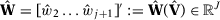

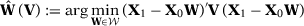

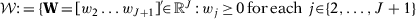

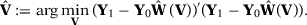

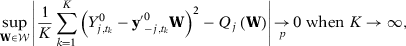

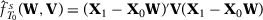

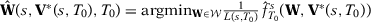

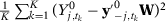

are given by the solution to a nested minimization problem:

are given by the solution to a nested minimization problem:

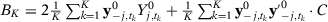

()

() and

and  and V is a diagonal positive semidefinite matrix of dimension

and V is a diagonal positive semidefinite matrix of dimension  . Moreover,

. Moreover,

()

()Intuitively,  is a weighting vector that measures the relative importance of each unit in the synthetic control of unit 1, while

is a weighting vector that measures the relative importance of each unit in the synthetic control of unit 1, while  measures the relative importance of each one of the F predictors. The relative importance of each predictor is estimated in a data-driven optimization problem presented in equation (2). We define the Synthetic Control Estimator of

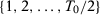

measures the relative importance of each one of the F predictors. The relative importance of each predictor is estimated in a data-driven optimization problem presented in equation (2). We define the Synthetic Control Estimator of  (or the estimated gap) as

(or the estimated gap) as  for each

for each  , where

, where  is constructed using weights

is constructed using weights  .

.
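To make the inner step of the nested problem concrete, the following minimal Python sketch computes SC weights for a fixed diagonal V by projected gradient descent on the unit simplex. It is not the implementation used in this paper: the function names `project_to_simplex` and `sc_weights`, the choice of solver, and the toy numbers are assumptions made purely for illustration, and the outer search over V in equation (2) is omitted.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_j >= 0, sum_j w_j = 1}."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        candidate = (cumulative - 1.0) / i
        if u_i - candidate > 0:
            theta = candidate  # keep the threshold of the last admissible index
    return [max(x - theta, 0.0) for x in v]


def sc_weights(x1, x0, v_diag, steps=2000):
    """Minimize (x1 - x0 w)' V (x1 - x0 w) over the simplex for a fixed diagonal V.

    x1: F predictors of the treated unit; x0: F rows by J control columns;
    v_diag: the F diagonal entries of V. Hypothetical helper, illustration only.
    """
    n_pred, n_ctrl = len(x1), len(x0[0])
    w = [1.0 / n_ctrl] * n_ctrl
    # The trace of the Hessian 2 * x0' V x0 bounds its largest eigenvalue,
    # so 1 / trace is a safe (conservative) step size.
    lipschitz = 2.0 * sum(v_diag[f] * sum(x * x for x in x0[f]) for f in range(n_pred))
    step = 1.0 / lipschitz if lipschitz > 0 else 1.0
    for _ in range(steps):
        resid = [x1[f] - sum(x0[f][j] * w[j] for j in range(n_ctrl)) for f in range(n_pred)]
        grad = [-2.0 * sum(v_diag[f] * x0[f][j] * resid[f] for f in range(n_pred))
                for j in range(n_ctrl)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(n_ctrl)])
    return w


# Toy data (assumed): two predictors, two control units.
x1 = [0.5, 0.5]
x0 = [[0.0, 2.0], [0.0, 2.0]]
weights = sc_weights(x1, x0, v_diag=[0.5, 0.5])
print([round(w, 4) for w in weights])  # approximately [0.75, 0.25]
```

In the toy call, the treated unit's predictors lie one quarter of the way from the first control unit to the second, so the weights approach 0.75 and 0.25. Changing which rows enter `x1` and `x0` is exactly the specification choice discussed in the text.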

Even though a crucial part in the implementation of the SC method is the choice of predictors, there is no consensus on which variables to include in matrix $X_j$. This lack of guidance can create an opportunity for the researcher to look for specifications that yield “better” results by including or excluding some pre-treatment outcome values from the specification. This risk is even greater when we consider that there is no consensus about which functions of the outcome values should be included in $X_j$.

To illustrate this lack of consensus, we present in Table 1 a list with all papers that use the SC method published in the American Economic Review, American Economic Journal–Economic Policy, American Economic Journal–Applied Economics, Quarterly Journal of Economics, Review of Economic Studies, Review of Economics and Statistics, Journal of Development Economics, Journal of Labor Economics, and Journal of Policy Analysis and Management, including information on the specifications used in the implementation of the method. Abadie and Gardeazabal (2003), Abadie, Diamond, and Hainmueller (2015), Kleven, Landais, and Saez (2013), Baccini, Li, and Mirkina (2014), and DeAngelo and Hansen (2014) use the mean of all pre-treatment outcome values and additional covariates; Cunningham and Shah (2018) pick $Y_{j,T_0}$, $Y_{j,T_0-1}$, $Y_{j,T_0-2}$, $Y_{j,T_0-7}$, $Y_{j,T_0-8}$, $Y_{j,T_0-9}$, $Y_{j,T_0-11}$, $Y_{j,T_0-14}$, $Y_{j,T_0-15}$, $Y_{j,T_0-16}$, and additional covariates; Smith (2015) uses $Y_{j,T_0}$, $Y_{j,T_0-2}$, $Y_{j,T_0-4}$, $Y_{j,T_0-6}$, and additional covariates; Abadie, Diamond, and Hainmueller (2010) pick $Y_{j,T_0}$, $Y_{j,T_0-8}$, $Y_{j,T_0-13}$, and additional covariates; Lindo and Packham (2017) pick $Y_{j,T_0-1}$, $Y_{j,T_0-3}$, $Y_{j,T_0-5}$; Billmeier and Nannicini (2013), Bohn et al. (2014), Gobillon and Magnac (2016), Hinrichs (2012), Dustmann, Schonberg, and Stuhler (2017), Zou (2018), and Bartel et al. (2018) use all pre-treatment outcome values; Cavallo et al. (2013) use the first half of the pre-treatment outcome values and additional covariates; Eren and Ozbeklik (2016) use the even-numbered pre-treatment lags and additional covariates; and Montalvo (2011) uses only the last two pre-treatment outcome values and additional covariates.9

| Authors | Journal | Pre-treatment Periods | Post-treatment Periods | Number of Covariates (a) | Outcome Lags (b) | Number of Control Units |
|---|---|---|---|---|---|---|
| Abadie and Gardeazabal (2003) | AER | 10 | 30 | 11 | Mean | 16 |
| Kleven et al. (2013) | AER | 11 | 5 | 3 | Mean | 14 |
| DeAngelo and Hansen (2014) | AEJ:EP | 37 | 35 | 14 | Mean | 46 |
| Lindo and Packham (2017) | AEJ:EP | 6 | 5 | 0 | −1, −3, −5 | 38 |
| Dustmann et al. (2017) | QJE | 6 | 5 | 5 | All | 85 |
| Cunningham and Shah (2018) | RESTUD | 18 | 6 | 5 | 0, −1, −2, −7, −8, −9, −11, −14, −15, −16 | 50 |
| Montalvo (2011) | RESTAT | 4 | 1 | 2 | 0, −1 | 32 |
| Hinrichs (2012) | RESTAT | 9 | 6 | 0 | All | 3-7 |
| Billmeier and Nannicini (2013) | RESTAT | 2-32 | 10 | 5 | All | 4-62 |
| Cavallo et al. (2013) | RESTAT | 11 | 10 | 7 | First Half | 53 |
| Bohn et al. (2014) | RESTAT | 9 | 3 | 42 | All | 45 |
| Gobillon and Magnac (2016) | RESTAT | 8 | 13 | 0 | All | 135 |
| Smith (2015) | JDE | 10-43 | 16-49 | 2 | 0, −2, −4, −6 | 7-32 |
| Zou (2018) | JLE | 2 | 1 | 6 | All | 2429 |
| Baccini et al. (2014) | JPAM | 7 | 5 | 0 | Mean | 36 |
| Eren and Ozbeklik (2016) | JPAM | 19 | 6 | 7 | Even Lags | 28 |
| Bartel et al. (2018) | JPAM | 5 | 9 | 11 | All, Mean | 49 |

- (a) Number of covariates included in matrix $X_j$ besides the ones related to the outcome variable.

- (b) Outcome lags included in matrix $X_j$. The last pre-treatment period ($T_0$) is denoted by the number 0.

- Notes: List of articles using the SC method published in the American Economic Review, American Economic Journal–Economic Policy, American Economic Journal–Applied Economics, Quarterly Journal of Economics, Review of Economic Studies, Review of Economics and Statistics, Journal of Development Economics, Journal of Labor Economics, and Journal of Policy Analysis and Management. We did not find any articles using the SC method published in Econometrica or the Journal of Political Economy.

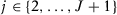

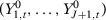

A key question, therefore, is whether different specifications may lead to substantially different SC estimators. We consider the asymptotic behavior of different SC specifications when  . We define a specification s by the set of predictors

. We define a specification s by the set of predictors  that are used when there are T0 pre-treatment periods, which may include pre-treatment outcome lags, functions of pre-treatment outcome lags, or other observed covariates. Let

that are used when there are T0 pre-treatment periods, which may include pre-treatment outcome lags, functions of pre-treatment outcome lags, or other observed covariates. Let  be the number of pre-treatment periods t such that

be the number of pre-treatment periods t such that  is included as a predictor when there are T0 pre-treatment periods. For example, consider a specification s to be such that R covariates and the first half of the pre-treatment outcome lags

is included as a predictor when there are T0 pre-treatment periods. For example, consider a specification s to be such that R covariates and the first half of the pre-treatment outcome lags  are used as predictors. Then,

are used as predictors. Then,  . Note that, in this case, the dimension of

. Note that, in this case, the dimension of  would be

would be  .

.
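The count of included outcome lags can be illustrated for the kinds of specifications that appear in Table 1. The sketch below is only an illustration: the function name `included_lags`, the specification labels, and the reading of "even lags" as even-numbered periods are assumptions, and a mean-only specification is treated as including no individual lag, in line with the definition above.

```python
def included_lags(spec, t0):
    """Return the pre-treatment periods (1..t0) whose outcome enters as a predictor.

    The labels below are hypothetical names for specification types in Table 1.
    """
    periods = list(range(1, t0 + 1))
    if spec == "all":
        return periods
    if spec == "first_half":
        return periods[: t0 // 2]
    if spec == "even":
        return [t for t in periods if t % 2 == 0]
    if spec == "last_two":
        return periods[-2:]
    if spec == "mean":
        # The pre-treatment mean is one function of the lags, not an individual lag.
        return []
    raise ValueError(f"unknown specification: {spec}")


for t0 in (12, 400):
    counts = {s: len(included_lags(s, t0))
              for s in ("all", "first_half", "even", "last_two", "mean")}
    print(t0, counts)
```

Under these assumptions, the counts for the all-lags, first-half, and even-lags specifications grow with the number of pre-treatment periods, while the last-two and mean-only specifications keep a fixed count no matter how long the pre-treatment window is.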

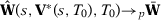

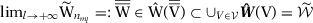

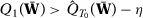

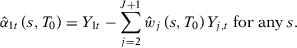

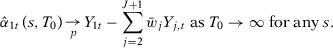

Let  be the SC weights using specification s when there are T0 pre-intervention periods. We want to understand under which conditions

be the SC weights using specification s when there are T0 pre-intervention periods. We want to understand under which conditions  converges in probability to the same

converges in probability to the same  for any specification s when

for any specification s when  . We show in Proposition 2 (see Appendix A) that this is the case when we consider specifications such that the number of pre-treatment outcomes used as predictors increases with T0 (i.e.,

. We show in Proposition 2 (see Appendix A) that this is the case when we consider specifications such that the number of pre-treatment outcomes used as predictors increases with T0 (i.e.,  when

when  . The only assumption we need is that pre-treatment averages for subsequences of

. The only assumption we need is that pre-treatment averages for subsequences of  converge to the same value.10 Given that, the difference between two SC estimators using specifications s and

converge to the same value.10 Given that, the difference between two SC estimators using specifications s and  converge in probability to zero if

converge in probability to zero if  and

and  when

when  (see Corollary 3 in Appendix A).

(see Corollary 3 in Appendix A).

The intuition for these results is that, when $T_0 \to \infty$, the minimization problem (2) that chooses the matrix $\hat{V}$ will only assign positive weights to the pre-treatment outcome lags if the number of included lags goes to infinity, $k_s(T_0) \to \infty$, even when other covariates are included. Therefore, asymptotically, all such specifications will choose the SC weights by minimizing an average of a function of the pre-treatment outcomes that are included as predictors. A formal proof is presented in the Appendix.

While different SC specifications may generate different SC estimates, our theoretical results show that, under some conditions, different specifications will lead to asymptotically equivalent SC estimators, as long as the number of pre-treatment lags used as predictors goes to infinity with $T_0$. However, our results do not guarantee that different SC specifications would lead to similar SC estimates when $T_0$ is finite, nor determine a value of $T_0$ that is large enough to ensure that the asymptotic approximation is reliable. Moreover, there are common specifications used in SC applications that do not satisfy the condition that the number of included lags goes to infinity when $T_0 \to \infty$. For example, in roughly a third of the published papers that use the SC method in Table 1, the authors consider the use of the mean of all pre-treatment outcome values in addition to other covariates as predictors. These alternative specifications would generally lead to SC weights that will not converge to the common limit $\bar{W}$, so there may still be significant variation in the SC estimates even when $T_0$ is large.

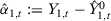

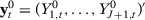

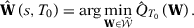

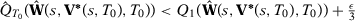

and

and  ,

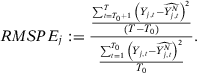

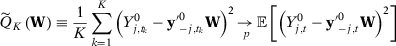

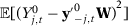

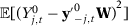

,  as described above. Then, they compute the ratio of the mean squared prediction errors as a test statistic:

as described above. Then, they compute the ratio of the mean squared prediction errors as a test statistic:

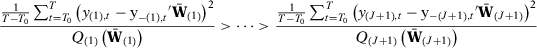

()

()Moreover, they propose to calculate a p-value,  and reject the null hypothesis of no effect if p is less than some pre-specified significance level. Abadie, Diamond, and Hainmueller (2010) recognize that the randomization inference assumptions are very restrictive for the SC set-up, as treatment is not, in general, randomly assigned.11 In the absence of random assignment, they interpret the p-value as the probability of obtaining an estimate value for the test statistics at least as large as the value obtained using the treated case as if the intervention were randomly assigned among the data. Although the p-value from this placebo test lacks a clear statistical interpretation, this test is commonly used in SC application. Therefore, our simulation exercises can be seen as the probability that a researcher applying the SC method would find a test statistic that is in the top 5 percent of the distribution of test statistics in the placebo runs, which is how researchers applying the SC method usually assess whether their estimates are significant. Moreover, note that, in our simulations, the placebo test considering a single SC specification would have a rejection rate under the null of 5 percent by construction. In Appendix G, we also consider as a robustness check an infeasible test based on the actual distribution of the test statistic in our MC simulations to assess the statistical significance of the results.12

and reject the null hypothesis of no effect if p is less than some pre-specified significance level. Abadie, Diamond, and Hainmueller (2010) recognize that the randomization inference assumptions are very restrictive for the SC set-up, as treatment is not, in general, randomly assigned.11 In the absence of random assignment, they interpret the p-value as the probability of obtaining an estimate value for the test statistics at least as large as the value obtained using the treated case as if the intervention were randomly assigned among the data. Although the p-value from this placebo test lacks a clear statistical interpretation, this test is commonly used in SC application. Therefore, our simulation exercises can be seen as the probability that a researcher applying the SC method would find a test statistic that is in the top 5 percent of the distribution of test statistics in the placebo runs, which is how researchers applying the SC method usually assess whether their estimates are significant. Moreover, note that, in our simulations, the placebo test considering a single SC specification would have a rejection rate under the null of 5 percent by construction. In Appendix G, we also consider as a robustness check an infeasible test based on the actual distribution of the test statistic in our MC simulations to assess the statistical significance of the results.12
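The placebo test described above can be sketched in a few lines of Python. This is a hedged illustration rather than the authors' code: the helper names `rmspe_ratio` and `placebo_p_value` are hypothetical, the statistic is assumed to be the post-treatment mean squared gap divided by the pre-treatment mean squared gap, and the p-value is assumed to be the share of units (treated unit included) whose statistic is at least as large as the treated unit's.

```python
def rmspe_ratio(gaps, t0):
    """Ratio of post- to pre-treatment mean squared prediction error.

    gaps: estimated gaps for periods 1..T, pre-treatment periods first;
    t0: number of pre-treatment periods. Hypothetical helper.
    """
    pre, post = gaps[:t0], gaps[t0:]
    pre_mspe = sum(g * g for g in pre) / len(pre)
    post_mspe = sum(g * g for g in post) / len(post)
    return post_mspe / pre_mspe


def placebo_p_value(ratios):
    """Share of units whose statistic is at least the treated unit's (ratios[0])."""
    treated = ratios[0]
    return sum(1 for r in ratios if r >= treated) / len(ratios)


# Toy gaps (assumed numbers): two pre-treatment and two post-treatment periods.
treated_ratio = rmspe_ratio([1.0, -1.0, 2.0, 2.0], t0=2)
p_value = placebo_p_value([treated_ratio, 1.0, 0.5, 5.0])
print(treated_ratio, p_value)
```

With the toy numbers, the treated unit has a pre-treatment mean squared gap of 1 and a post-treatment mean squared gap of 4, and two of the four units have a statistic at least that large. A researcher searching over specifications would recompute this p-value for every candidate predictor set.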

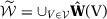

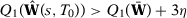

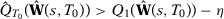

Given our results that the difference between two SC estimators using specifications s and  converge in probability to zero when both

converge in probability to zero when both  and

and  when

when  , we also show that the ranking of

, we also show that the ranking of  will remain asymptotically invariant to changes in the SC specification when

will remain asymptotically invariant to changes in the SC specification when  , whenever we consider only specifications whose number of pre-treatment outcome lags goes to infinity with T0 (see Corollary 4 in Appendix A). As a consequence, the test decision in the placebo test is asymptotically invariant to the specification choice when

, whenever we consider only specifications whose number of pre-treatment outcome lags goes to infinity with T0 (see Corollary 4 in Appendix A). As a consequence, the test decision in the placebo test is asymptotically invariant to the specification choice when  , provided we restrain to such set of SC specifications. Therefore, in this case, the possibilities for specification searching are asymptotically irrelevant. This is a feature of the SC method that is not generally shared by other methods and is valid even when covariates are included.13

, provided we restrain to such set of SC specifications. Therefore, in this case, the possibilities for specification searching are asymptotically irrelevant. This is a feature of the SC method that is not generally shared by other methods and is valid even when covariates are included.13

In addition to showing that the SC estimator is robust to specification searching when T0 is large and when we restrict attention to a subset of specifications, these theoretical results provide guidance on the conditions in which specification searching might be relevant in SC applications: (i) when T0 is not large enough to ensure a reliable asymptotic approximation or (ii) when one considers specifications with few pre-treatment outcomes as predictors.

MONTE CARLO SIMULATIONS

We design an MC simulation guided by our results presented in the previous section. We evaluate whether values of T0 commonly used in SC applications are large enough so that our asymptotic results provide a reliable approximation, and whether alternative specifications commonly used in SC applications, but that do not satisfy the conditions in our theoretical results, can imply significant specification-searching possibilities even when T0 is large.

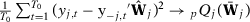

We generate 10,000 datasets and, for each one of them, test the null hypothesis of no effect whatsoever adopting several different specifications. Conditional on a given specification, in our simulations, this placebo test should provide a rejection rate of α percent under the null for a α percent significance test by construction. We are interested, however, in the probability of rejecting the null hypothesis at the α-percent-significance level for at least one specification. If different specifications result in wildly different SC estimators, then the probability of finding one specification that rejects the null at α percent can be significantly higher than α percent. In the extreme case, in which we have S different specifications and these specifications lead to independent estimators, this probability would be given by  .14 In this case, lack of guidance about specification choice could generate substantial opportunities for specification searching. In contrast, if different SC specifications lead to similar SC weights, then this rejection rate will be close to α percent and the risk of specification searching would be very low. We consider two data-generating processes (DGP).

.14 In this case, lack of guidance about specification choice could generate substantial opportunities for specification searching. In contrast, if different SC specifications lead to similar SC weights, then this rejection rate will be close to α percent and the risk of specification searching would be very low. We consider two data-generating processes (DGP).
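The extreme case of independent specifications gives a simple upper benchmark. The short Python sketch below evaluates the standard formula for the probability of at least one rejection among S independent tests; the function name `prob_at_least_one_rejection` is hypothetical, and independence across specifications is the assumption stated in the text, not a property of actual SC estimates.

```python
def prob_at_least_one_rejection(alpha, n_specs):
    """Probability that at least one of n_specs independent tests rejects at level alpha."""
    return 1.0 - (1.0 - alpha) ** n_specs


# Seven specifications at the 5 percent level, as considered in this paper.
benchmark = prob_at_least_one_rejection(0.05, 7)
print(round(benchmark, 4))  # 0.3017
```

With seven independent specifications and a 5 percent test, the benchmark is about 30 percent. Rejection rates between 5 percent and this figure indicate estimators that are correlated across specifications but still far from identical.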

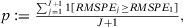

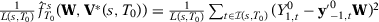

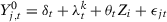

The first DGP is the stationary model (4), in which pairs of units share a common trend: units 1 and 2 follow one trend, units 3 and 4 follow another, and so on. We consider that each trend is normally distributed following an AR(1) process with a serial correlation parameter of 0.5.

The second DGP is a non-stationary model, which adds a component that follows a random walk. In this model, some control units follow the same non-stationary path as the treated unit, although only one of them also follows the same stationary path as the treated unit.

We fix the number of post-treatment periods and vary the number of pre-intervention periods in the DGPs, T0 ∈ {12, 32, 100, 400}. Note that seven papers in Table 1 use a number of pre-treatment periods around 12 (i.e., between eight and 16). Moreover, the longest pre-treatment period is 43. Therefore, setting T0 = 400 in our Monte Carlo is useful to test the reliability of the asymptotic approximations described in the previous section, but we should bear in mind that this is an extreme setting that is unlikely to hold in common SC applications. In both models, we impose that there is no treatment effect in any time period.
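To make the structure of the stationary design concrete, the sketch below simulates outcomes in which consecutive pairs of units share an AR(1) trend with serial correlation 0.5. It is only an illustration: the purely additive form (trend plus idiosyncratic noise), the unit innovation variance, the `noise_sd` parameter, and the function name are assumptions that are not taken from the text.

```python
import numpy as np

def simulate_paired_trends(n_periods, n_units=20, rho=0.5, noise_sd=1.0, seed=0):
    """Simulate outcomes where units (1, 2), (3, 4), ... share a common AR(1) trend.

    Assumptions (hypothetical, for illustration only): outcomes are the sum of
    the shared trend and i.i.d. normal noise; n_units is even.
    """
    rng = np.random.default_rng(seed)
    n_trends = n_units // 2
    trends = np.empty((n_periods, n_trends))
    # Draw the first period from the stationary distribution of the AR(1) process.
    trends[0] = rng.normal(scale=1.0 / np.sqrt(1.0 - rho**2), size=n_trends)
    for t in range(1, n_periods):
        trends[t] = rho * trends[t - 1] + rng.normal(size=n_trends)
    # Map each unit to the trend of its pair: units 0 and 1 -> trend 0, and so on.
    trend_of_unit = np.arange(n_units) // 2
    noise = rng.normal(scale=noise_sd, size=(n_periods, n_units))
    return trends[:, trend_of_unit] + noise

# Example: 12 pre-treatment periods plus some post-treatment periods.
y = simulate_paired_trends(n_periods=22)
```

Column 0 can be treated as the treated unit; under this sketch, only column 1 shares its trend.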

In Appendix G, we consider variations in our stationary model (4) by setting (i)  , and (ii)

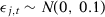

, and (ii)  . In Appendix B, we consider a DGP with time-invariant covariates. Moreover, in Appendix E, we consider placebo simulations with the CPS.15 In all cases, we find similar results as the ones presented in the main text, showing that our conclusions are not restricted to the particular DGP we present in this section.

. In Appendix B, we consider a DGP with time-invariant covariates. Moreover, in Appendix E, we consider placebo simulations with the CPS.15 In all cases, we find similar results as the ones presented in the main text, showing that our conclusions are not restricted to the particular DGP we present in this section.

We consider the following seven specifications:

- (1) All pre-treatment outcome values
- (2) The first three-fourths of the pre-treatment outcome values
- (3) The first half of the pre-treatment outcome values
- (4) Odd pre-treatment outcome values
- (5) Even pre-treatment outcome values
- (6) Pre-treatment outcome mean
- (7) Three outcome values (the first one, the middle one, and the last one)
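As an illustration of how these seven predictor sets could be built from one unit's pre-treatment outcomes, consider the sketch below. The function name is hypothetical, and the rounding rules (integer division for the three-fourths and half cutoffs, and for the "middle" period) are assumptions, since the text does not specify them.

```python
import numpy as np

def specification_predictors(y_pre):
    """Return the predictor vector of each specification for one unit.

    y_pre: pre-treatment outcomes ordered in time (length T0).
    Periods are counted from 1, so "odd" periods are positions 0, 2, 4, ...
    """
    y_pre = np.asarray(y_pre, dtype=float)
    T0 = y_pre.size
    return {
        1: y_pre,                        # all pre-treatment outcome values
        2: y_pre[: (3 * T0) // 4],       # first three-fourths (assumed rounding)
        3: y_pre[: T0 // 2],             # first half (assumed rounding)
        4: y_pre[0::2],                  # odd periods: 1, 3, 5, ...
        5: y_pre[1::2],                  # even periods: 2, 4, 6, ...
        6: np.array([y_pre.mean()]),     # pre-treatment outcome mean
        7: y_pre[[0, T0 // 2, T0 - 1]],  # first, middle (assumed), and last
    }

predictors = specification_predictors(np.arange(1, 13))  # T0 = 12
```

Note that the number of predictors grows with T0 in specifications 1 through 5, but stays fixed at one and three in specifications 6 and 7.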

Observe that specifications 1 through 5 satisfy the conditions for the asymptotic equivalence results we present in the previous section, while specifications 6 and 7 do not. In order to simplify the presentation of our results, we do not consider in our MC simulations the use of time-invariant covariates, as is commonly used in specifications that rely on the pre-treatment outcome mean. In Appendix B, we show that our results remain valid if we consider specifications that use time-invariant covariates as predictors in addition to functions of the pre-treatment outcomes.17

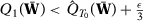

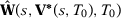

For each specification, we run a placebo test using the root mean squared prediction error (RMSPE) test statistic proposed in Abadie, Diamond, and Hainmueller (2010) and reject the null at the 5 percent significance level if the treated unit has the largest RMSPE among the 20 units. We are interested in the probability that we would reject the null at the 5 percent significance level in at least one specification. This is the probability that a researcher would be able to report a significant result even when there is no effect if she were to engage in specification searching. If all different specifications result in the same synthetic control unit, then the probability of rejecting the null in at least one specification would also be equal to 5 percent. However, this probability may be higher if the SC estimator depends on specification choices, which may be the case in finite samples or for specifications 6 and 7.
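The placebo test can be sketched as follows. This is a minimal illustration that assumes the statistic is the ratio of post-treatment to pre-treatment RMSPE and that the gaps (observed outcome minus synthetic control) have already been computed for the treated unit and for every placebo unit. The function names and the array layout are hypothetical.

```python
import numpy as np

def rmspe_ratio(gaps, T0):
    """Ratio of post- to pre-treatment RMSPE for each unit.

    gaps: array of shape (T, n_units); the first T0 rows are pre-treatment.
    """
    gaps = np.asarray(gaps, dtype=float)
    pre = np.sqrt(np.mean(gaps[:T0] ** 2, axis=0))
    post = np.sqrt(np.mean(gaps[T0:] ** 2, axis=0))
    return post / pre

def placebo_p_value(stat, treated=0):
    """Share of units whose statistic is at least as large as the treated unit's."""
    stat = np.asarray(stat, dtype=float)
    return float(np.mean(stat >= stat[treated]))
```

With 20 units, the smallest attainable p-value is 1/20, which is why a 5 percent test rejects only when the treated unit ranks first.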

We present in columns 1 and 2 of Table 2, panel A, the probability of rejecting the null at 5 percent and at 10 percent significance levels in at least one of our seven specifications for the stationary model. Columns 3 and 4 present the same results for the non-stationary model.18 With T0 = 12, a researcher considering these seven different specifications would be able to report a specification with statistically significant results at the 5 percent (10 percent) level with probability 14.3 percent (25.0 percent) for the stationary model and 14.2 percent (25.4 percent) for the non-stationary model.19 Therefore, with few pre-treatment periods, a researcher would have substantial opportunities to select statistically significant specifications even when the null hypothesis is true. Importantly, Table 1 shows that SC applications with around 12 pre-treatment periods are common.

| | Stationary model | | Non-stationary model | |
|---|---|---|---|---|
| | 5% test | 10% test | 5% test | 10% test |
| | (1) | (2) | (3) | (4) |
| Panel A: specifications 1 to 7 | | | | |
| T0 = 12 | 0.143 | 0.250 | 0.142 | 0.254 |
| | (0.003) | (0.004) | (0.004) | (0.004) |
| T0 = 32 | 0.146 | 0.255 | 0.158 | 0.275 |
| | (0.003) | (0.004) | (0.004) | (0.005) |
| T0 = 100 | 0.143 | 0.254 | 0.152 | 0.264 |
| | (0.003) | (0.004) | (0.004) | (0.004) |
| T0 = 400 | 0.134 | 0.241 | 0.145 | 0.255 |
| | (0.003) | (0.004) | (0.004) | (0.005) |
| Panel B: specifications 1 to 5 | | | | |
| T0 = 12 | 0.106 | 0.190 | 0.110 | 0.198 |
| | (0.003) | (0.004) | (0.003) | (0.004) |
| T0 = 32 | 0.100 | 0.179 | 0.109 | 0.191 |
| | (0.003) | (0.004) | (0.004) | (0.005) |
| T0 = 100 | 0.090 | 0.157 | 0.094 | 0.162 |
| | (0.003) | (0.004) | (0.003) | (0.004) |
| T0 = 400 | 0.077 | 0.138 | 0.081 | 0.142 |
| | (0.003) | (0.004) | (0.004) | (0.005) |

- Notes: Rejection rates are estimated based on 10,000 observations and on seven specifications: (1) all pre-treatment outcome values, (2) the first three-quarters of the pre-treatment outcome values, (3) the first half of the pre-treatment outcome values, (4) odd pre-treatment outcome values, (5) even pre-treatment outcome values, (6) the mean of all pre-treatment outcome values, and (7) three outcome values. z% test indicates that the nominal size of the analyzed test is z percent and T0 is the number of pre-treatment periods.
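As a point of comparison for the rates in Table 2, the snippet below computes the benchmark that would apply if the specifications led to independent tests, namely $1 - (1 - \alpha)^{S}$. The function name is hypothetical; the formula is the standard probability of at least one rejection among S independent tests of size α.

```python
def prob_at_least_one_rejection(alpha, n_specs):
    """P(at least one of n_specs independent size-alpha tests rejects under the null)."""
    return 1.0 - (1.0 - alpha) ** n_specs

# Benchmarks for the seven specifications (panel A) and the first five (panel B).
for n_specs in (7, 5):
    for alpha in (0.05, 0.10):
        rate = prob_at_least_one_rejection(alpha, n_specs)
        print(f"S={n_specs}, alpha={alpha:.2f}: {rate:.3f}")
```

For seven specifications and a 5 percent test the independent benchmark is about 0.302, and for five specifications it is about 0.226. The simulated rates in Table 2 therefore lie between the nominal size and the independent benchmark, as expected when the specifications produce correlated but not identical estimators.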

If the variation in the SC weights across different specifications vanishes when the number of pre-treatment periods goes to infinity, then we would expect the rejection rate to get closer to 5 percent once the number of pre-treatment periods gets large. In this case, all different specifications would provide roughly the same SC unit and, therefore, the same treatment effect estimate. The results in Table 2 show that the probabilities of rejecting the null are still significantly higher than the test size even when the number of pre-intervention periods is large. In a scenario with 400 pre-intervention periods in the non-stationary model, it would be possible to reject the null in at least one specification 14.5 percent (25.5 percent) of the time for a 5 percent (10 percent) significance test.20

These results suggest that, when we include specifications that violate the conditions for the asymptotic equivalence results from the previous section, specification searching remains a problem for the SC method, even when the number of pre-intervention periods is remarkably large for empirical applications. Therefore, we present in panel B of Table 2 the same results excluding specifications 6 and 7. As expected, based on our theoretical results presented in the previous section, excluding specifications 6 and 7 significantly attenuates the specification-searching problem, especially when the number of pre-treatment periods is large.21 However, it does not completely solve the problem even when T0 is relatively large in comparison to usual dataset sizes in SC applications. Given that our theoretical results suggest that specification-searching possibilities within a well-defined class of specifications should be very small asymptotically, this result suggests that asymptotic results may not provide reliable approximations in most SC applications.

The results in Table 2 are driven by the fact that the weights of specifications 1 through 5 converge to the same set of weights when T0 → ∞, while weights of specifications 6 and 7 may converge to different points according to the theoretical discussion presented in the previous section. Moreover, for the DGP we consider in our simulation exercise, we can evaluate the proportion of weights that are misallocated to control units that do not follow the same trends as the treated unit. The proportion of misallocated weights is much larger for specifications 6 and 7, and it does not decrease with T0. In contrast, for specifications 1 to 5, the proportion of misallocated weights is much smaller and decreasing with the number of pre-treatment periods. We present these results in detail in Appendix C.22

Finally, one important feature of the SC method emphasized by Abadie, Diamond, and Hainmueller (2010, 2015) is that the method should only be used in situations with good pre-treatment fit. Therefore, if the specification-searching problem documented in Table 2 came from specifications with a particularly poor pre-treatment fit, then this phenomenon would not be a crucial problem for the method, as those specifications should not be chosen by applied researchers. However, in Appendix D, we show that the probability of rejecting the null in at least one SC specification remains substantially higher than the significance level of the test even when we restrict to specifications that have a good fit. Therefore, our main conclusion remains valid even when we restrict to specifications with a good pre-treatment fit: there can be substantial opportunities for specification searching in the SC method, either because commonly used specifications do not satisfy the conditions for the asymptotic equivalence results in the previous section or because T0 is usually not large enough to provide reliable asymptotic approximations. As detailed in Appendix D, this phenomenon is explained by the impact of conditioning on a good pre-treatment fit on the number of “acceptable” specifications and on the denominator of the test statistic.23 On the one hand, if conditioning on a good fit does not actually restrict the set of options a researcher has, then we have the same results as in the unconditional case. This is generally what happens when the data are non-stationary. On the other hand, if conditioning severely restricts the set of options, then we have over-rejection because the test statistic for the treated unit is conditional on a denominator that is close to zero, while the test statistics for the placebo units are unconditional (Ferman & Pinto, 2017).

Recommendations

The specification-searching problem we identify arises from a lack of consensus about which specifications should be used in SC applications. If there are no covariates, the specification including all pre-treatment periods should be used. This specification is the one that minimizes the RMSE in the pre-treatment period, and it is not subject to arbitrary decisions regarding which pre-treatment outcome lags are included as predictors.
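For the specification that uses all pre-treatment outcomes, the SC weights solve a least-squares problem over the simplex (non-negative weights that sum to one). The sketch below is one way to compute them with NumPy only, using projected gradient descent. It assumes all lags are weighted equally and no covariates are used; the function names, the iteration count, and the choice of solver are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1}."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]
    theta = css[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)

def sc_weights(y1_pre, Y0_pre, n_iter=5000):
    """Minimize ||y1_pre - Y0_pre @ w||^2 over the simplex.

    y1_pre: (T0,) pre-treatment outcomes of the treated unit.
    Y0_pre: (T0, J) pre-treatment outcomes of the J control units.
    """
    J = Y0_pre.shape[1]
    w = np.full(J, 1.0 / J)
    # Step size 1/L, where L is the Lipschitz constant of the gradient.
    L = 2.0 * np.linalg.norm(Y0_pre, 2) ** 2
    for _ in range(n_iter):
        grad = 2.0 * Y0_pre.T @ (Y0_pre @ w - y1_pre)
        w = project_to_simplex(w - grad / L)
    return w
```

Because every pre-treatment period enters the objective, there is no discretion over which lags to include, which is the property the recommendation relies on.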

The only reason not to use all pre-treatment periods is when the researcher believes that the SC unit must also balance a specific set of covariates. In this case, the researcher would have to use a specification that does not include all pre-treatment lags, otherwise all covariates would be rendered irrelevant in the estimation of weights, as documented by Kaul et al. (2018). In those situations, we first recommend considering only specifications that satisfy the conditions given earlier in the second section. Both our theoretical and simulation results show that the specification-searching problem is attenuated by focusing only on the specifications with those properties. This is especially true when we have a large number of pre-treatment periods, even though it does not solve the problem completely when we consider T0 in line with common SC applications.

Since there is more than one possible specification that satisfies the conditions above, we recommend presenting results for many different specifications. In particular, we recommend that specification 1 always be included as a benchmark. However, even if we present results for all possible SC specifications with a hypothesis test for each specification, this would not provide a valid hypothesis test. If the decision rule is to reject the null if the test rejects in all specifications, then we could end up with a very conservative test (Romano & Wolf, 2015).24 If the decision rule is to reject the null if the test rejects in at least one specification, then we would be back in the situation where we over-reject the null.

One possible solution is to base the inference procedure on a new test statistic that combines the test statistics for the individual specifications (Imbens & Rubin, 2015). The drawback of this solution is that it does not provide an obvious point-estimator. There are two possible ways to handle this disadvantage. First, if the test function is simply a weighted average of the test statistics for individual specifications, then Christensen and Miguel (2018) and Cohen-Cole et al. (2009) suggest using the same weights to compute a weighted average of the point-estimator of each specification, generating an estimate that incorporates model uncertainty. As another alternative, we can focus on set identification, as suggested by Firpo and Possebom (2018). In this case, we would invert this combination of test statistics to compute a confidence set that contains all treatment effect functions within a pre-specified class that is not rejected by the inference procedure.
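A minimal sketch of the combined test follows, assuming an equally weighted average of the per-specification statistics and the same placebo ranking used for a single specification. The function names and array layout are hypothetical, and the per-specification statistics and effect paths are taken as already computed.

```python
import numpy as np

def combined_p_value(stats, treated=0):
    """Placebo p-value based on the average statistic across specifications.

    stats: array of shape (n_specs, n_units) with the test statistic of every
    unit (treated and placebos) under every specification.
    """
    combined = np.asarray(stats, dtype=float).mean(axis=0)
    return float(np.mean(combined >= combined[treated]))

def average_effect(effects):
    """Equal-weight average of the estimated effect paths.

    effects: array of shape (n_specs, n_post_periods) for the treated unit.
    """
    return np.asarray(effects, dtype=float).mean(axis=0)
```

Using the same (equal) weights for the statistic and for the point estimate keeps the reported effect path tied to the test that is actually performed.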

Another possibility is to consider a criterion for choosing among all possible specifications. If one restricts attention to only one specification that is chosen based on an objective criterion, without the need of subjective decisions by the researcher, then the possibility for specification searching would be limited, at least in this dimension. For example, Donohue, Aneja, and Weber (2018) report that they considered different specifications, and eventually chose the one that minimized the mean squared prediction error (MSPE) during the validation period. While this is a reasonable and interesting idea, it potentially allows for specification searching in other dimensions, such as the decision on how to split the pre-treatment periods into training and validation periods. Dube and Zipperer (2015) provide a similar idea but they consider the specification that minimizes the MSPE in the post-intervention periods for the placebo estimates.
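The validation-period criterion can be illustrated with the short sketch below. It assumes that each candidate specification has already been fitted on the training period and that its synthetic control predictions for the validation period are available; the function name and the dictionary layout are hypothetical.

```python
import numpy as np

def select_by_validation_mspe(y_valid, predictions):
    """Pick the specification with the smallest validation-period MSPE.

    y_valid: observed outcomes of the treated unit in the validation period.
    predictions: dict mapping a specification label to its synthetic control
    predictions over the same periods.
    """
    y_valid = np.asarray(y_valid, dtype=float)
    mspe = {
        name: float(np.mean((y_valid - np.asarray(pred, dtype=float)) ** 2))
        for name, pred in predictions.items()
    }
    best = min(mspe, key=mspe.get)
    return best, mspe
```

The rule itself is mechanical, but the split into training and validation periods is an input to it, which is where the remaining discretion noted above enters.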

Empirical Application

Example 1: German Reunification (Abadie, Diamond, & Hainmueller, 2015)

Abadie, Diamond, and Hainmueller (2015) evaluate the impact of the German Reunification in 1990 on GDP per capita.25 The pre- and post-treatment periods are 1960 through 1990 and 1991 through 2003, respectively, with a training period of 1971 through 1980 and a validation period of 1981 through 1990. The donor pool consists of 16 Organisation for Economic Co-operation and Development (OECD) countries.

We reestimate the impact of the German reunification on GDP per capita using the synthetic control method with 14 different specifications. Specifically, we test the same seven specifications from the third section of the paper and, for each one of them, we either include five covariates or not.26, 27 Specifications ending with a do not include covariates, while those ending with b include them. Specification 6b is the original one in Abadie, Diamond, and Hainmueller (2015).

Table 3 shows the p-value for each specification. The results show that the researcher could try different specifications and pick one whose result is significant.28 In particular, nine of the 14 specifications are significant at the 10 percent significance level, while five of them are not, implying that different specifications could lead to different conclusions.

| Specification | (1a) | (1b) | (2a) | (2b) | (3a) | (3b) | (4a) | (4b) |
|---|---|---|---|---|---|---|---|---|
| p-value | 0.059 | 0.059 | 0.059 | 0.118 | 0.118 | 0.059 | 0.059 | 0.059 |

| Specification | (5a) | (5b) | (6a) | (6b) | (7a) | (7b) |
|---|---|---|---|---|---|---|
| p-value | 0.118 | 0.059 | 0.588 | 0.059 | 0.353 | 0.059 |

- Notes: We analyze 14 different specifications. The number of the specifications refers to: (1) all pre-treatment outcome values, (2) the first three-fourths of the pre-treatment outcome values, (3) the first half of the pre-treatment outcome values, (4) odd pre-treatment outcome values, (5) even pre-treatment outcome values, (6) pre-treatment outcome mean (original specification by Abadie, Diamond, & Hainmueller, 2015), and (7) three outcome values. Specifications that end with an a do not include covariates, while specifications that end with a b include the covariates trade openness, inflation rate, industry share, schooling levels, and investment rate.
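The p-values in Table 3 can be read as ranks. The sketch below assumes that 17 units enter each placebo test (the 16 donor countries plus West Germany), which is our reading of the donor pool rather than something stated with the table. Under that assumption, each reported p-value corresponds to the rank of the treated unit divided by 17.

```python
n_units = 17  # assumption: 16 donor countries plus the treated unit

p_values = {
    "1a": 0.059, "1b": 0.059, "2a": 0.059, "2b": 0.118, "3a": 0.118,
    "3b": 0.059, "4a": 0.059, "4b": 0.059, "5a": 0.118, "5b": 0.059,
    "6a": 0.588, "6b": 0.059, "7a": 0.353, "7b": 0.059,
}

# Rank of the treated unit implied by each p-value (1 = largest statistic).
ranks = {spec: round(p * n_units) for spec, p in p_values.items()}

# Check that rank / n_units reproduces the reported p-value to three decimals.
consistent = all(
    abs(round(ranks[spec] / n_units, 3) - p) < 1e-9 for spec, p in p_values.items()
)
```

A p-value of 0.059 therefore means that the treated unit ranks first, and 0.118 means that it ranks second, so the difference between rejecting and not rejecting at the 10 percent level comes down to a single placebo unit.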

If we believe that covariates are not relevant to explain the German GDP per capita, the recommended specification uses all pre-treatment outcome lags. Note that specification 1a indicates that the treated unit has the largest RMSPE, suggesting that our treatment has a statistically significant effect.

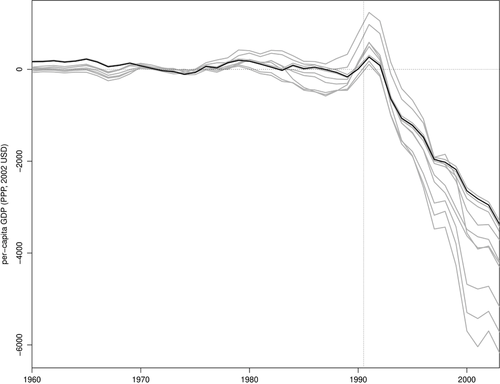

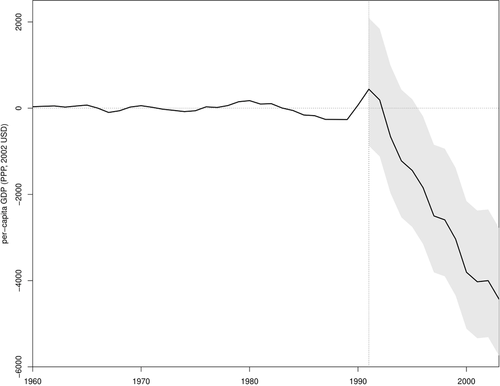

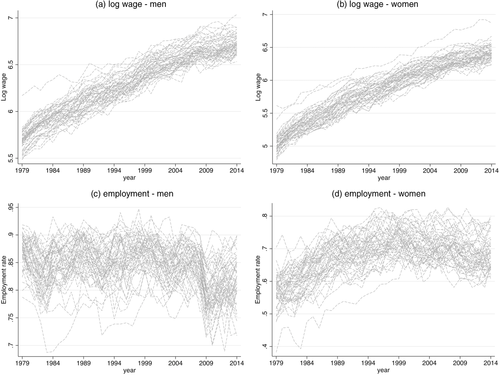

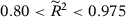

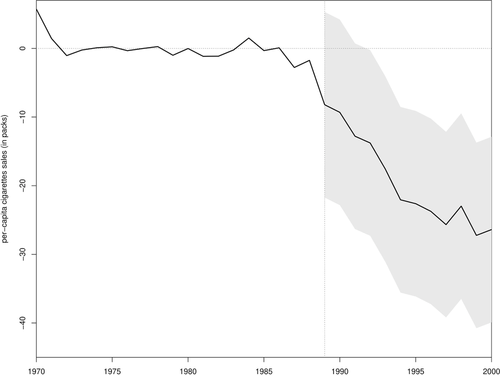

However, if we believe that the SC unit should also match the covariates, then we should focus only on the specifications that satisfy the conditions outlined in the second section by dropping specifications 6 and 7. By looking at Table 3, we note that the significance of the treatment effect is not straightforward. By looking at Figure 1, we find that specifications 1 through 5 point to a treatment effect that is negative in the long run. However, the magnitude of this effect varies across specifications. The next step is to test the null hypothesis using a test statistic that combines the test statistics of specifications 1 through 5. We find that the p-value of a test that uses the mean of the RMSPE statistic across specifications (Imbens & Rubin, 2015) is equal to 0.059, suggesting that the German Reunification had a statistically significant impact on West Germany's per-capita GDP. In order to present point-estimates associated with this test, we follow Christensen and Miguel (2018) and Cohen-Cole et al. (2009) and show, in Figure 2, the average treatment effects across specifications 1 through 5 as the black line. This average treatment effect suggests a strongly negative effect in the long run. We also follow Firpo and Possebom (2018) to compute a confidence set (Figure 2) that includes all treatment effect functions that we fail to reject using this combined test statistic, considering functions that are deviations from the average treatment effect across specifications by an additive and constant factor. We find that, although we cannot reject treatment effect functions that are initially positive, all treatment effect functions in our confidence set are negative in the long run. Finally, we apply the choice criteria suggested by Dube and Zipperer (2015) and Donohue et al. (2018), restricting ourselves to specifications 1 through 5. The first criterion picks specification 1a (in this case, we would reject the null with a p-value of 0.059), while the second one picks specification 2b (in this case, we would marginally fail to reject the null at the 10 percent level, with a p-value of 0.118).

Figure 1. Treatment Effects for Specifications 1 through 5 and the Original Specification (database from Abadie, Diamond, and Hainmueller, 2015).

Notes: The solid black line is the original specification by Abadie, Diamond, and Hainmueller (2015) and gray lines are specifications 1 through 5. The vertical line denotes the beginning of the post-treatment period.

Figure 2. Ninety Percent Confidence Sets Around the Average Across Specifications 1 through 5 (database from Abadie, Diamond, and Hainmueller, 2015).

Notes: We compute confidence sets by inverting the average test statistic across specifications. Our confidence sets include all treatment effect functions that we fail to reject using this combined test statistic, considering functions that are deviations from the average treatment effect across specifications by an additive and constant factor. The black line is the average treatment effect of West Germany and the gray area is the confidence set. The vertical lines denote the beginning of the post-treatment period.

After this analysis, a reasonable conclusion would be that there is a significant and negative treatment effect in the long run.

Example 2: Paid Family Leave (Bartel et al., 2018)

Bartel et al. (2018) evaluate the impact of California's Paid Family Leave (CA-PFL) program on fathers’ leave-taking, using data from the American Community Survey (ACS). The pre- and post-treatment periods are 2000 through 2004 and 2005 through 2013, respectively. The donor pool consists of the District of Columbia and all American states except New Jersey, which is excluded because it also implemented a similar program in 2008.

We reestimate the impact of the CA-PFL program on fathers’ leave-taking using the synthetic control method with 14 specifications. Specifically, we test the same seven specifications from the third section of the paper and, for each one of them, we either include 11 covariates or not.29 Specifications ending with a do not include covariates, while those ending with b include them. Similarly to our recommendations, Bartel et al. (2018) analyze and report results for many different specifications: our specifications 1b and 6b are their specifications 7 and 6, respectively (Bartel et al., 2018, Table 6).

Table 4 shows the p-value for the specifications with a good pre-treatment fit.30 The results show that the researcher could try different specifications and pick one whose result is significant: specifications 1a, 3b, 5b, 6b, and 7b are significant at the 5 percent level; specification 2b is significant at the 10 percent level; and specifications 1b and 4b are not significant. As a consequence, different specifications could lead to different conclusions.

| Specification | (1a) | (1b) | (2b) | (3b) | (4b) | (5b) | (6b) | (7b) |
|---|---|---|---|---|---|---|---|---|
| p-value | 0.02 | 0.12 | 0.06 | 0.02 | 0.125 | 0.04 | 0.021 | 0.021 |

- Notes: We analyze 14 different specifications and only report the ones with good pre-treatment fit according to the measure proposed in Appendix D. The number of the specifications refers to: (1) all pre-treatment outcome values (specification 7 by Bartel et al., 2018), (2) the first three-fourths of the pre-treatment outcome values, (3) the first half of the pre-treatment outcome values, (4) odd pre-treatment outcome values, (5) even pre-treatment outcome values, (6) pre-treatment outcome mean (specification 6 by Bartel et al., 2018), and (7) three outcome values. Specifications that end with an a do not include covariates, while specifications that end with a b include the covariates related to racial composition, educational attainment, employment, and labor force participation.

If we believe that covariates are not relevant to explain fathers’ leave-taking, the recommended specification uses all pre-treatment outcome lags. Note that specification 1a indicates that the treated unit has the largest RMSPE, suggesting that our treatment has a statistically significant effect.

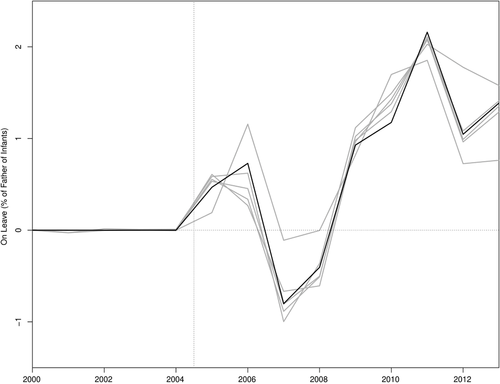

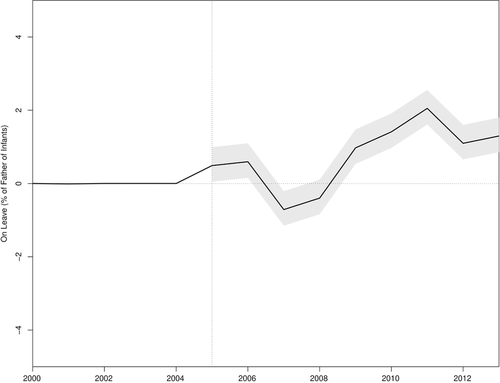

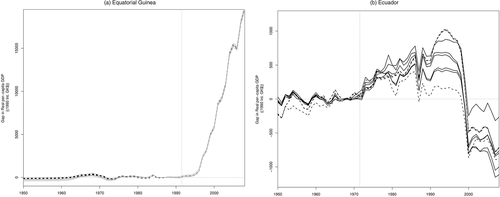

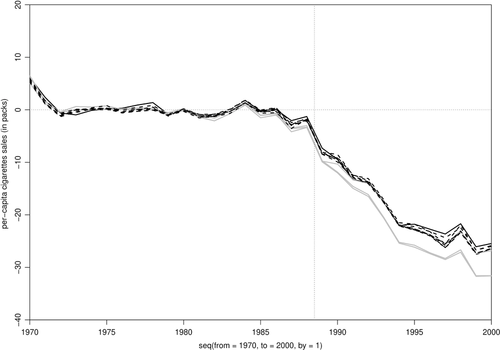

However, if we believe that the SC unit should also directly match the covariates, then we should focus only on the specifications that satisfy the conditions outlined in the second section by dropping specifications 6 and 7. By looking at Table 4, we note that the significance of the treatment effect is not straightforward.31 By looking at Figure 3, we find that specifications 1 through 5 point to a treatment effect of similar magnitude and positive in the long run. The next step is to test the null hypothesis using a test statistic that combines the test statistics of specifications 1 through 5. We find that the p-value of a test that uses the mean of the RMSPE statistic across specifications (Imbens & Rubin, 2015) is equal to 0.021, suggesting that the CA-PFL program had an impact on fathers’ leave-taking behavior. In order to present point-estimates associated with this test, we follow Christensen and Miguel (2018) and Cohen-Cole et al. (2009), and show, in Figure 4, the average treatment effects across specifications 1 through 5 as a black line, suggesting a positive effect in the long run. We also follow Firpo and Possebom (2018) to compute the confidence set (Figure 4) that includes all treatment effect functions that we fail to reject using this combined test statistic, considering functions that are deviations from the average treatment effect across specifications by an additive and constant factor. We find that, although we cannot reject treatment effect functions that are initially negative, all treatment effect functions in our confidence set are positive in the long run. Finally, we apply the choice criterion suggested by Dube and Zipperer (2015), restricting ourselves to specifications 1 through 5. The choice criterion picks specification 5b (in this case, we would reject the null with a p-value of 0.040).

Figure 3. Treatment Effects for Specifications 1 through 5 (database from Bartel et al., 2018).

Notes: The solid black line is specification 7 of Bartel et al. (2018); gray lines are the other specifications in Table 4 that satisfy the conditions outlined in the second section. The vertical line denotes the beginning of the post-treatment period.

Figure 4. Ninety Percent Confidence Sets Around the Average Across Specifications 1 through 5 (database from Bartel et al., 2018).

Notes: We compute confidence sets by inverting the average test statistic across specifications. Our confidence set includes all treatment effect functions that we fail to reject using this combined test statistic, considering functions that are deviations from the average treatment effect across specifications by an additive and constant factor. The black line is the average treatment effect of CA-PFL and the gray area is the confidence set. The vertical lines denote the beginning of the post-treatment period.

After this analysis, a reasonable conclusion would be that there is a significant and positive treatment effect in the long run.

In Appendix F, we consider other empirical applications. In particular, we present an empirical application based on Smith (2015) in which we can find a few “statistically significant” specifications although most specifications show insignificant effects, illustrating the potential for specification searching in SC applications.32 Following our recommendations, we provide clear evidence that the effects are not significant in this application.33

CONCLUSION

We analyze whether a lack of guidance on how to choose among different SC specifications creates the potential for specification searching with synthetic controls. We first provide theoretical results showing that the possibility for specification searching becomes asymptotically irrelevant if the number of pre-treatment outcome lags used as predictors goes to infinity when the number of pre-treatment periods goes to infinity. However, guided by our theoretical results, we provide evidence from simulations that specification searching may be a relevant problem in real SC applications for at least two reasons. First, many SC applications do not have a large number of pre-treatment periods to guarantee that our asymptotic results are approximately valid. Second, many SC applications rely on specifications that do not satisfy the conditions in our theoretical results. We provide a series of recommendations to limit the scope for specification searching in SC applications.

ACKNOWLEDGMENTS

We would like to thank Juan Camilo Castillo, Sergio Firpo, Ricardo Masini, Masayuki Sawada, and participants at the Sao Paulo School of Economics seminar, Yale Econometrics Lunch, African Meeting of the Econometric Society, the 2016 Meeting of the Brazilian Econometric Society, and the Young Economists Symposium 2018 for the excellent comments and suggestions. Deivis Angeli and Murilo S. Cardoso provided outstanding research assistance. Bruno Ferman gratefully acknowledges financial support from CNPq.

APPENDIX A: THEORETICAL RESULTS

Main Theoretical Results

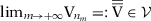

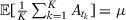

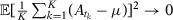

Here, we formalize the theoretical results presented in the second section of the main paper. We consider a sufficient assumption to guarantee that a broad set of SC specifications will be asymptotically equivalent when T0 → ∞.

Assumption 1. For any sequence of integers […] with […], and for any […], we have that […] is a continuous and strictly convex function.

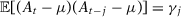

Assumption 1 implies that pre-treatment averages of the second moments of every subsequence of […] converge to the same value. We show below that this assumption is satisfied if, for example, we assume that […] is weakly stationary, each element of […] has absolutely summable covariances, and […] is non-singular, where […].

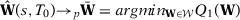

Define  as the estimated gap when specification s is used, and consider the definitions of  and  given in the second section of the main paper. Then, we have the following results (see Proposition 2 below).

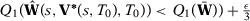

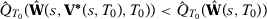

Proposition 2. Let  be the SC weights using specification s when there are T0 pre-intervention periods. If  when $T_0 \to \infty$, then, under Assumption 1,  . (See details below for Proof of Proposition 2.)

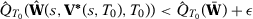

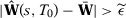

Corollary 3.Let  and

and  be two SC estimators for the treatment effect at time

be two SC estimators for the treatment effect at time  using specifications s and

using specifications s and  such that

such that  and

and  when

when  . Then, under Assumption 1,

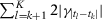

. Then, under Assumption 1,  (1). (See details below for Proof of Corollary 3.)

(1). (See details below for Proof of Corollary 3.)

Therefore, while different SC specifications may generate different SC estimates, our results in Proposition 2 and Corollary 3 show that, under some conditions, different specifications will lead to asymptotically equivalent SC estimators, as long as the number of pre-treatment lags used as predictors goes to infinity with T0.
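The intuition behind this equivalence can be illustrated with a minimal sketch, which is not the estimator used in the paper. It assumes a simplified SC problem with no covariates and equal importance for every included lag, so the nested choice of predictor weights is skipped, and it solves the simplex-constrained least squares problem by projected gradient descent. The toy data-generating process and the names `project_simplex`, `sc_weights`, and `simulate` are hypothetical.

```python
import random


def project_simplex(v):
    """Euclidean projection of v onto {w : w_j >= 0, sum_j w_j = 1}."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        candidate = (cumulative - 1.0) / i
        if u_i - candidate > 0:
            theta = candidate
    return [max(x - theta, 0.0) for x in v]


def sc_weights(y1, Y0, lags, iters=1000):
    """Simplex-constrained least squares on the chosen pre-treatment lags.

    y1: treated-unit outcomes; Y0[j]: outcomes of control j; lags: period indices.
    Simplification (assumption): equal importance for all lags, no covariates.
    """
    n_controls, n_lags = len(Y0), len(lags)
    # Trace of the Hessian: an upper bound on its largest eigenvalue (1/step size).
    lipschitz = 2.0 / n_lags * sum(Y0[j][t] ** 2 for j in range(n_controls) for t in lags)
    w = [1.0 / n_controls] * n_controls
    for _ in range(iters):
        resid = [y1[t] - sum(w[j] * Y0[j][t] for j in range(n_controls)) for t in lags]
        grad = [-2.0 / n_lags * sum(Y0[j][t] * r for t, r in zip(lags, resid))
                for j in range(n_controls)]
        w = project_simplex([w[j] - grad[j] / lipschitz for j in range(n_controls)])
    return w


def simulate(T0, noise, seed):
    """Toy data: the treated unit is a 50/50 mix of controls 0 and 1 plus noise."""
    rng = random.Random(seed)
    Y0 = [[rng.gauss(0.0, 1.0) for _ in range(T0)] for _ in range(3)]
    y1 = [0.5 * Y0[0][t] + 0.5 * Y0[1][t] + noise * rng.gauss(0.0, 1.0) for t in range(T0)]
    return y1, Y0


if __name__ == "__main__":
    for T0 in (10, 40, 200):
        y1, Y0 = simulate(T0, noise=0.5, seed=0)
        w_all = sc_weights(y1, Y0, list(range(T0)))        # all pre-treatment lags
        w_half = sc_weights(y1, Y0, list(range(0, T0, 2)))  # every other lag
        gap = max(abs(a - b) for a, b in zip(w_all, w_half))
        print(T0, [round(x, 3) for x in w_all], [round(x, 3) for x in w_half], round(gap, 3))
```

Both specifications use a number of lags that grows with T0, so in this toy setting the printed gap between the two weight vectors would be expected to shrink as T0 increases.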

Our results are valid irrespective of whether the SC estimator is unbiased, as we are only comparing the asymptotic behavior of the SC estimator under different specifications. For a thorough analysis of the asymptotic bias of the SC estimator when $T_0 \to \infty$, see Ferman and Pinto (2019). In our Monte Carlo simulations in the third section of the paper and in Appendix E, the conditions under which the SC estimator is unbiased are satisfied. Also, our results are related to the results from Kaul et al. (2018), who show that covariates would become irrelevant in the minimization problem (1) if all pre-treatment outcome lags are included as predictors. Since our theoretical results hold whether or not other covariates are included as predictors, this implies that covariates would also become asymptotically irrelevant in the minimization problem (1) whenever we consider specifications such that  when $T_0 \to \infty$, even if we do not include all pre-treatment outcome lags. This, however, does not necessarily imply that the SC weights will not attempt to match the covariates of the treated unit, nor that the SC estimator will be asymptotically biased, as explained by Botosaru and Ferman (2019).

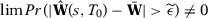

As a corollary from both results, we show that the ranking of  (defined in equation 3) will remain asymptotically invariant to changes in the SC specification when $T_0 \to \infty$ whenever we consider only specifications whose number of pre-treatment outcome lags goes to infinity with T0.

Corollary 4. Under Assumption 1 and assuming that  is continuous, with probability approaching one when $T_0 \to \infty$ and  is fixed, the ordering of  is invariant to SC specifications such that  when $T_0 \to \infty$. (See details below for Proof of Corollary 4.)

As a consequence of Corollary 4, the test decision in the placebo test is asymptotically invariant to the specification choice when $T_0 \to \infty$, provided that we restrict attention to SC specifications whose number of pre-treatment outcome lags goes to infinity with T0.
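To see why an invariant ordering implies an invariant test decision, the following minimal sketch computes a placebo p-value as the share of units whose test statistic is at least as large as that of the treated unit. The statistics below are hypothetical numbers chosen for illustration, and the function names are not from the paper.

```python
def placebo_p_value(stats, treated=0):
    """Share of units whose test statistic is at least as large as the treated unit's."""
    return sum(1 for s in stats if s >= stats[treated]) / len(stats)


def ordering(stats):
    """Unit indices sorted from the smallest to the largest test statistic."""
    return sorted(range(len(stats)), key=lambda i: stats[i])


# Hypothetical statistics for 1 treated unit (index 0) and 4 placebo units.
spec_a = [9.1, 2.0, 3.5, 1.2, 4.8]
spec_b = [7.4, 2.6, 3.1, 1.9, 5.0]  # different values, same ranking of units

print(placebo_p_value(spec_a), placebo_p_value(spec_b))  # 0.2 0.2
print(ordering(spec_a) == ordering(spec_b))              # True
```

Because the p-value depends on the statistics only through their ranking, two specifications that order the units identically lead to the same decision at any significance level.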

Proof of Proposition 2. Let  for some  , and  . Also, let  , where  includes the predictors used in specification s when there are T0 pre-treatment periods.

We want to show that  . First, let  be a diagonal matrix with diagonal entries equal to one for pre-treatment outcome lags and zero for other predictors when we consider the predictors used in specification s with T0 pre-treatment periods. Then we have that  . By Assumption 1 and by the fact that  when $T_0 \to \infty$,  converges uniformly in probability to  , which is uniquely minimized at  . Let  . Since  is compact, we have that  when $T_0 \to \infty$ (Theorem 2.1 of Newey & McFadden, 1994).

We now show that the solution to the nested problem proposed in Abadie, Diamond, and Hainmueller (2010) will also converge in probability to  . First, note that  always exists. According to Berge's Maximum Theorem (Ok, 2007, p. 306),  is a compact-valued, upper hemicontinuous, and closed correspondence. As a consequence,  is a compact set. To see that, take any sequence  such that  for any  . Since  by its definition, there exists  for each  such that  . We also know that there exists a convergent subsequence  such that  because  is a compact set. By the definition of upper hemicontinuity (Stokey & Lucas, 1989, p. 56), there exists a convergent subsequence  such that  , proving that  is a compact set. Consequently, Weierstrass’ Extreme Value Theorem guarantees that  exists.

From Assumption 1, we have that  converges uniformly to  over  . Therefore, for any  , (i) uniform convergence of  implies that  and  with probability approaching one (w.p.a.1), and (ii) convergence in probability of  and continuity of  imply that  w.p.a.1. Therefore,  w.p.a.1.

Suppose now that  does not converge in probability to  . Then  such that  when  . Since  is compact and  is uniquely minimized at  , then  implies that  such that  . Uniform convergence of  implies that  and  w.p.a.1. Therefore,  w.p.a.1.

However, if we set  , then we have  w.p.a.1, which contradicts the fact that for all  we can always find  such that  with  minimizes  with positive probability. Therefore, it must be that  converges in probability to  .

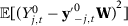

Proof of Corollary 3. Notice that, for specifications such that  and  when $T_0 \to \infty$, we can write each estimator as:

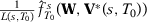

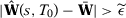

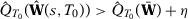

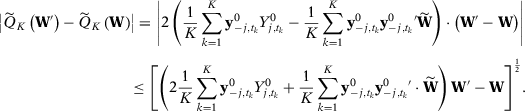

Proof of Corollary 4. Let  be the vector of outcomes at time t excluding unit j,  be the SC weights when unit j is used as treated, and  .  , we can define  such that, with probability one:34

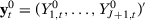

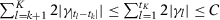

(A.1)

From Proposition 2, we know that  and  . Therefore, the inequalities in equation (A.1) will remain valid w.p.a.1 when we consider the test statistics for the placebo runs.

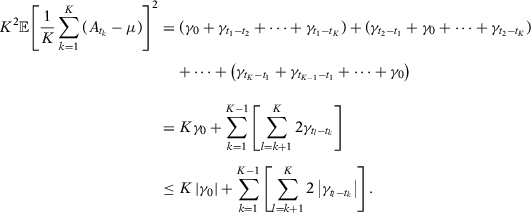

Sufficient Conditions for Assumption 1

Let  . We show that the following assumption is sufficient for Assumption 1.

Assumption 5.  has weak stationarity, each element of  has absolutely summable covariances, and  is non-singular.

Let  be one element of  . Under Assumption 5, we can define  and  , where  . Consider a subsequence  with  . Note that  . We want to show that  when  . Note that:

Now note that, for each k,  is the sum of a subsequence of  . Therefore, for any k, we have that  . Therefore:

when  . Therefore, we have that all elements of the pre-treatment averages of  for any subsequence  converge in probability to their corresponding expected values.

 is a linear combination of pre-treatment averages of elements of  for a given subsequence  , for any  , we have that:

 is continuous and strictly convex.  , using the mean value theorem, we can find  such that:

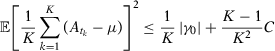

Define  . Since  is compact,  is bounded, so we can find a constant C such that  . From Assumption 5,  converges in probability to a positive constant, so  . Note also that  is uniformly continuous on  . Therefore, from Corollary 2.2 of Newey (1991), we have that  converges uniformly in probability to  for any subsequence  .

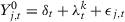
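The key step of this argument, that averages taken along any subsequence converge under weak stationarity and absolutely summable covariances, can be written out as follows. The notation is assumed for illustration and may differ from the original: $a_t$ denotes one element of the second-moment matrix, $\gamma_h$ its autocovariance at lag $h$, and $t_1 < t_2 < \dots < t_K$ a strictly increasing subsequence of periods.

```latex
\begin{align*}
\operatorname{Var}\!\left(\frac{1}{K}\sum_{k=1}^{K} a_{t_k}\right)
  &= \frac{1}{K^2}\sum_{k=1}^{K}\sum_{l=1}^{K} \gamma_{t_k - t_l} \\
  &\le \frac{1}{K^2}\sum_{k=1}^{K}\sum_{h=-\infty}^{\infty} \lvert \gamma_h \rvert
   = \frac{1}{K}\sum_{h=-\infty}^{\infty} \lvert \gamma_h \rvert
   \;\longrightarrow\; 0 \quad \text{as } K \to \infty .
\end{align*}
```

The inequality holds because, for each fixed $k$, the lags $t_k - t_l$ are distinct across $l$, so the inner sum runs over a subsequence of the full sequence of autocovariances. Since the mean of the average equals $E[a_t]$ by stationarity, Chebyshev's inequality then gives convergence in probability to $E[a_t]$.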

APPENDIX B: MODEL WITH TIME-INVARIANT COVARIATES

In the paper's third section, we provide evidence that specifications 6 (pre-treatment outcome mean as economic predictor) and 7 (initial, middle, and final years of the pre-intervention period as economic predictors) fail to take into account the time-series dynamics of the data, which implies that the SC estimator using these specifications does not converge to the SC estimators using the other specifications, which satisfy the conditions outlined in the second section. As a consequence, the possibilities for specification searching do not vanish even when the number of pre-treatment periods is large, in contrast to the behavior of the specifications within the scope of our theoretical results. However, in most applications that use specifications 6 and 7, other time-invariant covariates are also considered as economic predictors. Here, we consider an alternative MC simulation in which we include time-invariant covariates, and we show that the same pattern observed in the third section can arise even when we consider specifications that also include time-invariant covariates as economic predictors.

 for  and  for  . As in our DGP from the main paper, we consider  .35 We consider that  is normally distributed following an AR(1) process with 0.5 serial correlation parameter,  ,  , and  . We consider the same seven specifications as in the third section of the main paper, except that we also include  as an economic predictor.
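As a minimal sketch of the AR(1) component mentioned above (not the full DGP, whose remaining terms are not reproduced here), a stationary Gaussian AR(1) path with serial correlation 0.5 can be simulated as follows. The innovation standard deviation `sigma`, the seed, and the function names are assumptions made for illustration.

```python
import random


def ar1_path(n_periods, rho=0.5, sigma=1.0, seed=0):
    """Stationary Gaussian AR(1): x_t = rho * x_{t-1} + e_t, with e_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    # Start from the stationary distribution N(0, sigma^2 / (1 - rho^2)).
    x = rng.gauss(0.0, sigma / (1.0 - rho ** 2) ** 0.5)
    path = [x]
    for _ in range(n_periods - 1):
        x = rho * x + rng.gauss(0.0, sigma)
        path.append(x)
    return path


def lag1_autocorrelation(path):
    """Sample first-order autocorrelation of a path."""
    mean = sum(path) / len(path)
    num = sum((a - mean) * (b - mean) for a, b in zip(path[1:], path[:-1]))
    den = sum((a - mean) ** 2 for a in path)
    return num / den


# For a long path the sample autocorrelation should be close to rho.
print(round(lag1_autocorrelation(ar1_path(20000)), 2))
```

Drawing the first value from the stationary distribution avoids a burn-in period, which matters when the number of pre-treatment periods is as small as T0 = 12.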

In columns (1) and (2) of Table B1, we present the probability of rejecting the null in at least one of our seven specifications at, respectively, 5 percent and 10 percent significance levels. The possibilities for specification searching remain high for large T0 because specifications 6 and 7 remain poorly behaved in comparison to the other specifications. This result is similar to our findings in the main paper.

| | All Specifications | | Excluding 6 and 7 | |
|---|---|---|---|---|
| | 5% test | 10% test | 5% test | 10% test |
| | (1) | (2) | (3) | (4) |
| T0 = 12 | 0.142 | 0.232 | 0.107 | 0.196 |
| | (0.003) | (0.004) | (0.004) | (0.005) |
| T0 = 32 | 0.141 | 0.224 | 0.101 | 0.175 |
| | (0.003) | (0.004) | (0.004) | (0.005) |
| T0 = 100 | 0.136 | 0.215 | 0.089 | 0.158 |
| | (0.003) | (0.004) | (0.003) | (0.004) |
| T0 = 400 | 0.125 | 0.200 | 0.078 | 0.138 |
| | (0.003) | (0.004) | (0.003) | (0.004) |
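The quantity reported in the table, the probability of rejecting the null in at least one specification, can be computed from simulated p-values along the following lines. This is a hedged sketch: the rejection rule `p <= alpha`, the toy p-values, and the function names are assumptions, and the independence benchmark is only a rough reference point, since tests based on different specifications of the same data are not independent.

```python
def any_rejection_rate(p_values, alpha):
    """Share of simulated datasets in which at least one specification rejects.

    p_values[r][s] is the placebo p-value in replication r under specification s.
    """
    hits = sum(1 for row in p_values if min(row) <= alpha)
    return hits / len(p_values)


def independent_benchmark(alpha, n_specs):
    """Rejection rate if the n_specs tests were independent, each with size alpha."""
    return 1.0 - (1.0 - alpha) ** n_specs


# Hypothetical p-values: 4 replications x 2 specifications.
toy = [[0.01, 0.50], [0.20, 0.30], [0.50, 0.04], [0.90, 0.80]]
print(any_rejection_rate(toy, 0.05))             # 0.5
print(round(independent_benchmark(0.05, 7), 3))  # 0.302
```

Under this benchmark, seven independent 5 percent tests would reject at least once about 30 percent of the time, while a single test rejects 5 percent of the time. The 5 percent entries in column (1) fall between these two values, which is what one would expect when the specifications are positively correlated but not asymptotically equivalent.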