Validating a novel measure for assessing patient openness and concerns about using artificial intelligence in healthcare

Abstract

Objectives

Patient engagement is critical for the effective development and use of artificial intelligence (AI)-enabled tools in learning health systems (LHSs). We adapted a previously validated measure from pediatrics to assess adults' openness to and concerns about the use of AI in their healthcare.

Study Design

Cross-sectional survey.

Methods

We adapted the “Attitudes toward Artificial Intelligence in Healthcare for Parents” measure (AAIH-P) for administration to adults in the general US population (AAIH-A) and recruited participants through Amazon's Mechanical Turk (MTurk) crowdsourcing platform. The AAIH-A includes a scale assessing openness to AI-driven technologies and 7 subscales (33 items) assessing concerns about these technologies. The openness scale includes examples of AI-driven tools for diagnosis, risk prediction, treatment selection, and medical guidance. The concern subscales assess privacy, social justice, quality, the human element of care, cost, shared decision-making, and convenience. We co-administered previously validated measures hypothesized to correlate with openness. We conducted a confirmatory factor analysis and assessed reliability and construct validity. We fit exploratory multivariable regression models to identify predictors of openness.

Results

A total of 379 participants were included in the analysis. Confirmatory factor analysis confirmed the seven concern dimensions, the scales had acceptable internal consistency reliability, and openness correlated as hypothesized with existing measures of trust and faith in technology. Multivariable models indicated that trust in technology, faith in technology, and concerns about quality and convenience were significantly associated with openness.

Conclusions

The AAIH-A is a brief measure that can be used to assess adults' perspectives about AI-driven technologies in healthcare and LHSs. The use of AAIH-A can inform future development and implementation of AI-enabled tools for patient care in the LHS context that engage patients as key stakeholders.

1 INTRODUCTION

Developing a learning health system (LHS) is an aspirational goal that the National Academy of Medicine defines as a system where “science, informatics, incentives, and culture are aligned for continuous improvement and innovation, with best practices seamlessly embedded in the care process, patients and families active participants in all elements, and new knowledge captured as an integral by-product of the care experience.”1 Artificial intelligence (AI) and related methodologies will be necessary components of an LHS to support dynamic medical care that is responsive to emerging evidence.1-3 AI can support the analysis of enormous amounts of data from electronic health records and could support real-time decision-making in the future.

AI-enabled interventions continue to gain attention, investment, and use among healthcare systems,4, 5 and AI is integral to the further development of LHS.6 As the use of AI-enabled technologies expands across the country, so do potential effects on patient care, access to care management programs, provision of specific treatments, and healthcare spending.7 Understanding patients' perceptions, openness, and priorities related to AI in healthcare is critically important because of the implications for patient trust, which will dictate the acceptability and subsequent development and use of AI-enabled tools for patient care. Trust and engagement with patients are especially integral to the development of an LHS, further emphasizing the need to understand patients' perspectives on the use of AI in their healthcare.1, 8, 9 As described by Grob and colleagues, “LHSs cannot fully accomplish their missions without a strategy to robustly engage patients and families.”4 Prior work has also found that when data are collected and used without patients' knowledge or consent, it can damage their trust in the healthcare system and their providers.10, 11

Patient engagement is essential for effective learning and for incorporating AI-enabled tools into healthcare. Engaging patients effectively in LHS, AI development, and implementation requires empirical evidence of how patients understand and perceive AI-enabled tools.12 It is currently unclear from the academic literature how patients weigh the potential benefits and risks of AI-enabled tools in healthcare, or how open they are to these innovations. While qualitative work has identified some patient concerns about AI in healthcare settings,13 there is a dearth of simple, quick, validated instruments to assess openness to and concerns about the use of AI in healthcare among adults in the United States. A brief, validated survey would facilitate the incorporation of patient perspectives into the continuous learning cycles of LHSs across the country.

1.1 Research interests

To address this critical gap in understanding patients' openness to and concerns about AI-enabled tools in healthcare, we adapted an existing validated measure (AAIH-P) to assess adult perceptions of AI use in healthcare (AAIH-A). We assessed the validity of the measure and compared it with other validated measures related to trust in health information systems and technology, patient characteristics, and health-related quality of life. We hypothesized that openness to using AI in healthcare would be correlated with (1) concerns about using AI in healthcare, (2) trust in health information systems and technology, and (3) patient characteristics that prior literature has found to be correlated with greater trust in government and healthcare, as well as health-related quality of life.

2 METHODS

2.1 Design

We adapted a previously validated measure, the Attitudes toward Artificial Intelligence in Healthcare in Pediatrics (AAIH-P), to assess adults' attitudes about the use of AI in their own healthcare.14 We collected responses to the adapted measure, called Attitudes toward Artificial Intelligence in Healthcare-Adult Healthcare (AAIH-A), along with existing attitude and personality scales assessing constructs such as trust in technology, trust in the healthcare system, and trait-based openness. Analyses focused on establishing the construct validity of the AAIH-A concerns subscales. We also examined associations of the AAIH-A openness scale with concerns, attitudes, and personality traits to provide evidence of construct validity. Next, we examined which variables were associated with openness to AI-driven technologies in healthcare. Finally, exploratory analyses assessed how quality of life and sociodemographics were associated with the AAIH-A concerns subscales.

2.2 Adapting the AAIH-A measure

The original AAIH-P measure assessed parental views of the use of AI-driven technologies in their child's healthcare.14 To establish content and face validity for the AAIH-P, we conducted an extensive initial literature review to identify potential risks and benefits of AI in healthcare and engaged multiple experts and parents to refine the survey items. We conducted an initial exploratory factor analysis in one sample (n = 418) and a confirmatory factor analysis in a second, independent sample (n = 386). The AAIH-P measure included a 12-item scale assessing general openness to AI-driven technologies in healthcare for diagnosis, risk prediction, treatment selection, and medical guidance. This openness scale asked participants, “How open are you to allowing new healthcare devices to do the following things?” This question was followed by a list of 12 items for which respondents indicated their openness (Appendix S1).

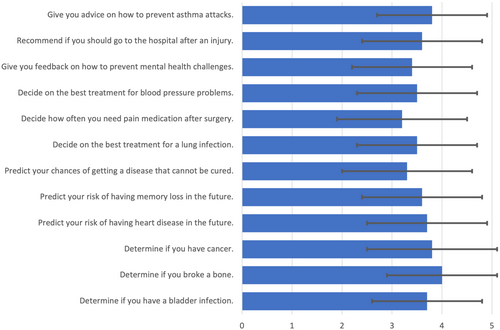

We edited the items in the AAIH-A openness scale to address health issues relevant to adults. For example, we removed “ear infection” from the scale and added “bladder infection” and “heart disease” to make the items more pertinent to adult healthcare. Respondents rated openness on a 5-point Likert scale ranging from Not at all open (1) to Extremely open (5). An example item from the AAIH-A openness scale is, “Predict your risk of developing heart disease in the future” (see Figure 1 for all openness items). The openness score is the mean of the 12 items. In the pediatric setting, the AAIH-P demonstrated good internal consistency and strong evidence of construct validity.14

The AAIH-P also included 7 subscales that assessed which factors individuals found important when considering the use of these AI-driven technologies in medical care (Table 1). We adapted the AAIH-P concerns subscales for use in adults by changing “your child” to “you.” The 7 concerns subscales included items addressing (1) quality and accuracy, (2) privacy, (3) cost, (4) convenience, (5) social justice, (6) shared decision-making, and (7) preserving the human element of care. For example, an AAIH-P item reflecting quality is, “Whether these devices reduce medical errors.” Participants rated the importance of each item on a 5-point Likert scale ranging from Not important (1) to Extremely important (5). In the parental study, each subscale demonstrated good internal consistency, and exploratory and confirmatory factor analyses supported a 7-factor solution with good fit. We did not otherwise modify the content of the concerns items.

| Concern | Conceptual definition |
|---|---|
| Quality and accuracy | Concerns about the effectiveness and fidelity of these technologies. |
| Privacy | Concerns about loss of control over one's personal information, who has access to this information, and how this information might be used. |
| Cost | Concerns about whether these technologies will affect individual or societal costs of healthcare. |
| Convenience | Concerns about the ease with which an individual can access and utilize these technologies. |
| Social justice | Concerns about how these new technologies might affect the distribution of the benefits and burdens related to AI use in healthcare. |
| Shared decision making | Concerns about patient involvement and authority in deciding whether and how these technologies are utilized in their care. |
| Human element of care | Concerns about the effect of these technologies on the interaction or relationship between clinicians and patients. |

Before administering the survey, we conducted 3 cognitive interviews with adult participants to verify the clarity of the newly adapted items.15 Based on these interviews, we referred to the technologies in the prompt as “new healthcare devices” rather than “computer programs,” the phrase used in the AAIH-P measure. As in the AAIH-P study, we avoided the terms “artificial intelligence” and “machine learning” because of the misconceptions and complexity associated with them. By describing technologies that are enabled by AI and presenting ethical concerns raised by these technologies, the measure assesses participants' views of AI-driven technologies in a way that is broadly understandable to the general population.

2.3 Co-administered measures for construct validity

In addition to the AAIH-A measure, we assessed construct validity by co-administering previously validated measures that we hypothesized would correlate with AAIH-A openness. Measures of attitudes included the Platt Trust in Health Information Systems scale, which comprises four subscales: Fidelity, Competency, Trustworthiness, and Integrity.16 We also included a measure of faith and trust in general technology.17 We hypothesized positive correlations between the trust measures and AAIH openness. Personality was measured with the Ten Item Personality Inventory (TIPI), which assesses openness to new experiences, conscientiousness, extraversion, agreeableness, and emotional stability.18 We hypothesized a positive correlation between TIPI openness and AAIH openness.

Sociodemographic variables included age, sex, race and ethnicity, level of education, employment status, experience working in the healthcare field, urban/suburban/rural residence, type of insurance, and healthcare system utilization patterns. We assessed political views by asking, “As of today, do you consider yourself a Republican, a Democrat, or an Independent?” Respondents who answered Independent or other were asked a follow-up question: “As of today, do you lean more to the Democratic Party or the Republican Party?” We then dichotomized results as either Democrat/Lean Democrat or Republican/Lean Republican, similar to prior publications.19
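The two-step party question can be collapsed into the two analysis groups with a simple coding rule. The sketch below is a minimal illustration, assuming hypothetical response labels (`"Democrat"`, `"Republican"`, `"Independent"`, `"Other"`, and the two follow-up options). The text does not say how respondents who declined to lean toward either party were handled, so this sketch leaves them uncoded (`None`); that choice is an assumption.

```python
from typing import Optional

DEM = "Democrat/Lean Democrat"
REP = "Republican/Lean Republican"


def code_party(party: str, lean: Optional[str] = None) -> Optional[str]:
    """Dichotomize party identification using the leaner follow-up.

    `party` answers the first question; `lean` answers the follow-up, which
    is asked only of Independent/Other respondents. Labels are hypothetical.
    Returns None when no lean is available (an assumption of this sketch).
    """
    first = party.strip().lower()
    if first == "democrat":
        return DEM
    if first == "republican":
        return REP
    if first in {"independent", "other"} and lean is not None:
        follow_up = lean.strip().lower()
        # "Democratic Party" / "Republican Party" are matched by prefix.
        if follow_up.startswith("democrat"):
            return DEM
        if follow_up.startswith("republican"):
            return REP
    return None


if __name__ == "__main__":
    print(code_party("Independent", "Democratic Party"))
```

Folding leaners into the party they lean toward mirrors the dichotomization described above; keeping non-leaners as missing rather than forcing them into a group is one defensible option among several.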

We assessed health-related quality of life using the RAND Corporation's 36-Item Short Form Health Survey (SF-36),20 which includes scales for physical functioning, role limitations due to physical health, role limitations due to emotional problems, energy/fatigue, emotional well-being, social functioning, bodily pain, and general health perceptions. Higher scores on SF-36 subscales indicate a more favorable health state. We included the SF-36 to explore how health status was associated with AAIH concerns, because a prior study found a modest relationship between lower health status and greater general concerns about AI in healthcare.21

2.4 Survey participants

We employed criterion-based sampling through MTurk, requiring that participants were 18 years or older, resided in the United States, and had a 98% MTurk approval rating on at least 100 prior tasks to qualify for this survey. We also applied custom qualifications to prevent multiple entries from the same individual. MTurk provides samples from across the country that are low cost and can provide evidence of validity for novel measures targeted to the general population.18 We administered the survey through a web link to Qualtrics survey software. We provided $3.65 for completing this 20–30-min task, which is a standard pay rate for MTurk tasks. This study was approved by the Institutional Review Board at Washington University, which deemed the study exempt (IRB# 202004198). All subjects consented to participate by reviewing a brief information sheet and indicating their agreement to participate before proceeding to the survey. Surveys were administered in July 2020. We required responses to all items to ensure the completion of surveys. Respondents were not able to review or change answers from prior screens. The completion rate was 95% (407/427). For quality control, we excluded participants who completed the survey in fewer than 6 min 30 s, anticipating that each question should take a minimum of 4–5 s to complete meaningfully based on the prior validation study for AAIH-P. For this reason, we excluded 28 participants. After exclusions, 379 participants were included in this analysis, which is considered an appropriate sample size for factor analysis.22
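The quality-control rule and the resulting sample counts can be checked in a few lines. This is a minimal sketch, assuming each completed response carries a total completion time in seconds; the `Response` record and its field names are hypothetical and do not reflect the Qualtrics export format. Only the 6 min 30 s cutoff and the counts (407/427 completed, 28 excluded) come from the text.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Cutoff from the text: responses completed in under 6 min 30 s were excluded.
MIN_SECONDS = 6 * 60 + 30  # 390 s


@dataclass
class Response:
    respondent_id: str       # hypothetical identifier
    duration_seconds: float  # hypothetical total completion time


def exclude_speeders(
    responses: List[Response], min_seconds: float = MIN_SECONDS
) -> Tuple[List[Response], List[Response]]:
    """Return (kept, excluded); a response is excluded if it took < min_seconds."""
    kept = [r for r in responses if r.duration_seconds >= min_seconds]
    excluded = [r for r in responses if r.duration_seconds < min_seconds]
    return kept, excluded


# Counts reported in the text.
COMPLETION_DENOMINATOR, COMPLETED, SPEEDERS = 427, 407, 28
completion_rate = COMPLETED / COMPLETION_DENOMINATOR  # about 0.953, reported as 95%
analytic_n = COMPLETED - SPEEDERS                     # 379 participants analyzed

if __name__ == "__main__":
    print(f"completion rate = {completion_rate:.1%}, analytic N = {analytic_n}")
```

A response of exactly 390 s is retained here, matching the wording "fewer than 6 min 30 s" for exclusion.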

2.5 Validity and psychometric properties of AAIH measure

For the 12-item AAIH openness scale, we calculated the mean response to create a composite score that could range from 1 to 5. We assessed internal consistency reliability by calculating Cronbach's alpha for the full 12-item scale and for the items related to each of four AI-driven healthcare functions: diagnosis, risk prediction, treatment selection, and medical guidance. For the 33 items of the AAIH concerns measure, we performed a confirmatory factor analysis (CFA) of a seven-factor model using maximum likelihood estimation in the IBM SPSS statistical package v26.0.0.0 with the Amos structural equation modeling plug-in. We allowed all factors to correlate with each other.
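Cronbach's alpha compares the sum of the item variances with the variance of the total score: alpha = k/(k − 1) × (1 − sum of item variances / variance of the total score), where k is the number of items. The sketch below computes the composite mean and alpha with NumPy; it illustrates the formula and is not the SPSS procedure used in the study. The simulated 12-item data (one latent trait plus noise, rounded and clipped to the 1–5 range) are hypothetical.

```python
import numpy as np


def composite_mean(items: np.ndarray) -> np.ndarray:
    """Per-respondent mean across items (rows = respondents, columns = items)."""
    return np.asarray(items, dtype=float).mean(axis=1)


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents x k_items) score matrix."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_variances = x.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = x.sum(axis=1).var(ddof=1)  # sample variance of the sum score
    return float(k / (k - 1) * (1.0 - item_variances.sum() / total_variance))


if __name__ == "__main__":
    # Hypothetical data: 379 respondents x 12 Likert items driven by one latent trait.
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(379, 1))
    raw = 3.0 + latent + rng.normal(scale=0.8, size=(379, 12))
    likert = np.clip(np.rint(raw), 1, 5)
    scores = composite_mean(likert)
    print(f"composite range: {scores.min():.2f}-{scores.max():.2f}")
    print(f"alpha (all 12 items): {cronbach_alpha(likert):.2f}")
```

Alpha for one AI function is obtained by passing only that function's columns, for example `cronbach_alpha(likert[:, cols])`, where `cols` is a hypothetical list of item indices; the text does not specify which items belong to each function.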

2.6 Data analysis

We examined correlations between AAIH openness and co-administered scales using Pearson (continuous variables) or Spearman (nominal and ordinal variables) correlations. We further explored relationships between openness and these variables through multiple linear regression with stepwise entry, excluding non-significant variables (p > 0.05). In initial models, we included scores of all AAIH concerns subscales, race, age, and all variables that demonstrated a significant bivariable correlation (p < 0.05) with openness. Given the number of included variables, we assessed for multicollinearity through iterative calculations of the variance inflation factor (VIF). All VIFs were <2, indicating a low likelihood of multicollinearity. In a final set of exploratory analyses, we examined associations of the SF-36 health-related quality of life survey scales and socio-demographics with AAIH concerns. All analyses were performed in the IBM SPSS statistical package v26.0.0.0.
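The multicollinearity check can be illustrated by computing each predictor's variance inflation factor, VIF_j = 1/(1 − R²_j), where R²_j comes from regressing predictor j on all other predictors. The sketch below also shows Spearman's correlation as the Pearson correlation of average ranks. It is a NumPy illustration that assumes a complete-case numeric predictor matrix, not the SPSS routines used in the study, and it does not reproduce the stepwise selection; the simulated predictors are hypothetical.

```python
import numpy as np


def average_ranks(x) -> np.ndarray:
    """Ranks starting at 1, with tied values sharing their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # mean of ranks i+1..j+1
        i = j + 1
    return ranks


def pearson(x, y) -> float:
    return float(np.corrcoef(np.asarray(x, float), np.asarray(y, float))[0, 1])


def spearman(x, y) -> float:
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def vif(X) -> np.ndarray:
    """VIF for each column of an (n x p) predictor matrix, regressions with intercept."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean()))
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical predictors: two correlated scales and one independent one.
    a = rng.normal(size=379)
    b = 0.5 * a + rng.normal(size=379)
    c = rng.normal(size=379)
    print("VIFs:", np.round(vif(np.column_stack([a, b, c])), 2))
    print("Spearman rho(a, b):", round(spearman(a, b), 2))
```

A VIF near 1 means a predictor is nearly uncorrelated with the others; the threshold of 2 reported above corresponds to R²_j = 0.5.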

3 RESULTS

3.1 Participant characteristics

The majority of participants were male (61%, 232/379), and the mean age was 37 years (range, 19–73 years). Most participants were White (77%, 290/379) and non-Hispanic (84%, 319/379). Most had completed a bachelor's or graduate degree (62%, 235/379), and the most common household income range was $45 001–$75 000 (34%, 130/379) (Table 2).

| Participant characteristics (N = 379) | n (%), except where specified |
|---|---|
| Age, mean (SD) | 37 years (10.9) |
| Female sex | 144 (38%) |
| Race | |
| White | 290 (77%) |
| Black or African American | 63 (17%) |
| Asian | 29 (8%) |
| American Indian or Alaska Native | 8 (2%) |
| Native Hawaiian or Pacific Islander | 2 (<1%) |
| Hispanic ethnicity | 52 (14%) |
| Employment status | |
| Full-time | 250 (66%) |
| Part-time (not a full-time student) | 33 (9%) |
| Full-time student | 11 (3%) |
| Self-employed | 50 (13%) |
| Caregiver or homemaker | 11 (3%) |
| Retired | 9 (2%) |
| Unemployed | 13 (3%) |
| Household income | |
| Less than $23 000 | 44 (12%) |
| $23 001–$45 000 | 105 (28%) |
| $45 001–$75 000 | 130 (34%) |
| $75 001–$112 000 | 57 (15%) |
| $112 001 or more | 37 (10%) |
| Level of education | |
| Some high school | 2 (1%) |
| High school graduate | 47 (12%) |
| Some college | 60 (16%) |
| Associate's degree | 34 (9%) |
| Bachelor's degree | 188 (50%) |
| Master's or doctoral degree | 47 (12%) |
| Place of residence | |
| Urban | 142 (37%) |
| Suburban | 174 (46%) |
| Rural | 63 (17%) |
| Health insurance status | |
| Private | 187 (49%) |
| Medicaid | 71 (19%) |
| Medicare/Medicare Advantage | 84 (22%) |
| No health insurance | 56 (15%) |
| Other | 7 (2%) |
| Visited doctor in past 12 months | 232 (61%) |
| Number of doctor visits, mean (SD) | 7.0 (5.0) |
| Hospitalized in the past 12 months | 40 (11%) |
| Number of hospitalizations, mean (SD) | 3.4 (2.5) |
| Have a primary care doctor | 285 (75%) |
| Satisfaction with quality of healthcare in last 12 monthsᵃ | |
| Very satisfied | 121 (40%) |
| Somewhat satisfied | 154 (52%) |
| Somewhat dissatisfied | 16 (5%) |
| Very dissatisfied | 8 (3%) |
| Experience working in the healthcare field | 77 (20%) |

- Note: The following numbers of participants preferred not to answer specific demographic questions: race (n = 5), ethnicity (n = 8), sex (n = 2), and income (n = 6).

- ᵃ Eighty participants had not seen a doctor in the prior 12 months, so the denominator for satisfaction is 299.

3.2 Openness to AI-driven healthcare technologies and participant concerns

The mean openness score on the AAIH was 3.6 (SD 0.9) on a 5-point scale. Mean openness for the four AI-driven healthcare functions was as follows: diagnosis, 3.9 (SD 1.0); risk prediction, 3.5 (SD 1.1); treatment selection, 3.4 (SD 1.1); and medical guidance, 3.6 (SD 1.0) (Figure 1). CFA confirmed a seven-factor model of the concerns that participants considered important when evaluating the use of AI-driven healthcare technologies in their medical care, with acceptable fit between the model and observed data: χ² p < 0.001, CFI = 0.90, RMR = 0.077, RMSEA = 0.059. Quality (mean = 4.2, SD = 0.6), cost (mean = 4.0, SD = 0.8), shared decision-making (mean = 3.9, SD = 0.9), and privacy (mean = 3.9, SD = 0.9) were rated most highly; convenience (mean = 3.7, SD = 0.9), social justice (mean = 3.6, SD = 1.0), and maintaining the human element (mean = 3.2, SD = 0.9) received lower ratings.
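For readers less familiar with the reported fit indices, RMSEA and CFI can be computed from the model and baseline (independence model) χ² statistics with their standard formulas. The sketch below is illustrative only: the text does not report the χ² value, the degrees of freedom, or the baseline model, so the example inputs are hypothetical. The degrees-of-freedom helper assumes a simple-structure model in which each item loads on one factor, one loading per factor is fixed for scaling, all factor covariances are free, and no residuals are correlated. Under those assumptions the 33-item, seven-factor model has 561 observed moments and 87 free parameters, giving 474 degrees of freedom. Some software uses N rather than N − 1 in the RMSEA denominator.

```python
import math


def cfa_df(n_items: int, n_factors: int) -> int:
    """Degrees of freedom for a simple-structure CFA with correlated factors.

    Assumes one loading per factor fixed to 1, free error variances, free
    factor variances and covariances, and no correlated residuals.
    """
    moments = n_items * (n_items + 1) // 2
    params = (
        (n_items - n_factors)               # free loadings
        + n_items                           # error variances
        + n_factors                         # factor variances
        + n_factors * (n_factors - 1) // 2  # factor covariances
    )
    return moments - params


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int, chi2_base: float, df_base: int) -> float:
    """Comparative fit index relative to a baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


if __name__ == "__main__":
    df = cfa_df(33, 7)  # 474 under the assumptions above
    # Hypothetical chi-square values, for illustration only.
    print(df, round(rmsea(chi2=900.0, df=df, n=379), 3))
    print(round(cfi(chi2_model=900.0, df_model=df, chi2_base=5000.0, df_base=528), 3))
```

The baseline degrees of freedom of 528 in the usage example follow from the independence model for 33 items (561 moments minus 33 variances).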

3.3 Relationships between openness on AAIH and participant concerns and characteristics

Consistent with our first hypothesis, six of the seven concerns confirmed by factor analysis correlated significantly and positively with the AAIH openness score; the correlation with shared decision-making was positive but not significant (r = 0.09, p = 0.095). Consistent with our second hypothesis, openness scores were positively correlated with measures of trust in health systems and technology: Platt competency, trustworthiness, and integrity (but not fidelity), faith in technology, and trust in technology. The personality traits of openness and agreeableness also had significant positive correlations with AAIH openness (Table 3). Contrary to our third hypothesis, sociodemographic variables and quality of life did not correlate with general openness on the AAIH. The SF-36 scales had near-zero correlations with openness (r = 0.001–0.037), and the sociodemographic variables had very small correlations with openness (r = 0.013–0.097).

| Variable (Cronbach's alpha) | Pearson correlation with AAIH openness (p-value) |
|---|---|
| Concerns | |
| Social justice (0.895) | **0.26 (<0.001)** |
| Human element (0.764) | **0.12 (0.014)** |
| Cost (0.745) | **0.31 (<0.001)** |
| Convenience (0.742) | **0.36 (<0.001)** |
| Shared decision-making (0.829) | 0.09 (0.095) |
| Privacy (0.878) | **0.12 (0.020)** |
| Quality (0.747) | **0.42 (<0.001)** |
| Attitudes and personality scales | |
| Health system fidelity (0.685) | 0.06 (0.221) |
| Health system competency (0.735) | **0.16 (0.002)** |
| Health system trustworthiness (0.934) | **0.16 (0.001)** |
| Health system integrity (0.771) | **0.15 (0.005)** |
| Extraversion (0.728) | 0.01 (0.787) |
| Openness (0.522) | **0.18 (<0.001)** |
| Agreeableness (0.517) | **0.12 (0.020)** |
| Conscientiousness (0.550) | 0.05 (0.331) |
| Emotional stability (0.732) | 0.01 (0.892) |
| Faith in technology (0.851) | **0.40 (<0.001)** |
| Trust in technology (0.876) | **0.31 (<0.001)** |

- Note: Bolded values indicate p < 0.05.

In multivariable linear regression, four variables were significantly associated with openness to AI-driven technologies in participants' own healthcare. Among the concerns, quality (beta = 0.33, 95% confidence interval [CI] 0.17–0.49) and convenience (beta = 0.17, 95% CI 0.07–0.27) were positively associated with openness. Trust in technology (beta = 0.11, 95% CI 0.04–0.17) and faith in technology (beta = 0.20, 95% CI 0.08–0.32) were also positively associated with openness (Table 4).

| Variable | Beta coefficient (95% CI) | p value |
|---|---|---|
| Concerns: quality | **0.33 (0.17–0.49)** | **<0.001** |
| Concerns: convenience | **0.17 (0.07–0.27)** | **<0.001** |
| Trust in technology | **0.11 (0.04–0.17)** | **0.037** |
| Faith in technology | **0.20 (0.08–0.32)** | **0.002** |

- Note: Bolded values indicate p < 0.05.

Female participants had greater concern for shared decision-making (SDM) and privacy. Non-White participants had more concern about the human element, cost, convenience, and privacy. People with experience working in the healthcare field were less concerned about the human element and more concerned about quality. Higher income, college education, and private insurance were associated with less concern for social justice, whereas Democrat or Lean Democrat political alignment was associated with more concern for social justice. See Table 5 for full results.

| Variable (Cronbach's alpha) | Social justice | Human element | Cost | Convenience | SDM | Privacy | Quality |
|---|---|---|---|---|---|---|---|
| | r (p-value) | r (p-value) | r (p-value) | r (p-value) | r (p-value) | r (p-value) | r (p-value) |
| Quality of life scales | | | | | | | |
| Physical functioning (0.952) | −0.02 (0.687) | **−0.19 (<0.001)** | **0.13 (0.009)** | 0.02 (0.750) | 0.07 (0.182) | **0.13 (0.010)** | **0.27 (<0.001)** |
| Limitations due to physical health (0.821) | −0.06 (0.217) | **−0.24 (<0.001)** | **0.13 (0.011)** | −0.02 (0.636) | 0.07 (0.183) | 0.10 (0.055) | **0.27 (<0.001)** |
| Limitations due to emotional problems (0.815) | **−0.14 (0.007)** | **−0.25 (<0.001)** | −0.04 (0.474) | **−0.10 (0.044)** | 0.02 (0.703) | −0.00 (0.983) | **0.14 (0.009)** |
| Energy/fatigue (0.805) | −0.06 (0.219) | −0.09 (0.094) | −0.00 (0.939) | −0.03 (0.631) | −0.05 (0.372) | −0.02 (0.700) | 0.03 (0.582) |
| Emotional well-being (0.879) | −0.02 (0.669) | **−0.16 (0.002)** | 0.08 (0.126) | 0.04 (0.460) | 0.05 (0.299) | 0.06 (0.272) | **0.17 (0.001)** |
| Social functioning (0.788) | **−0.16 (0.002)** | **−0.26 (<0.001)** | 0.06 (0.256) | −0.04 (0.406) | 0.06 (0.227) | 0.04 (0.398) | **0.25 (<0.001)** |
| Bodily pain (0.857) | −0.05 (0.351) | **−0.19 (<0.001)** | **0.10 (0.047)** | 0.01 (0.888) | 0.04 (0.414) | **0.12 (0.023)** | **0.27 (<0.001)** |
| General health perceptions (0.817) | 0.07 (0.209) | −0.03 (0.613) | 0.10 (0.057) | 0.04 (0.404) | 0.05 (0.328) | **0.14 (0.009)** | **0.15 (0.003)** |
| Socio-demographic variables | | | | | | | |
| Female sex | −0.07 (0.181) | 0.03 (0.629) | −0.06 (0.224) | 0.03 (0.629) | **−0.14 (0.006)** | **−0.11 (0.038)** | −0.01 (0.864) |
| Age | −0.05 (0.359) | −0.03 (0.560) | 0.05 (0.309) | −0.05 (0.328) | 0.06 (0.274) | −0.07 (0.170) | 0.05 (0.300) |
| Race (White vs. non-White) | −0.10 (0.063) | **−0.18 (<0.001)** | **−0.11 (0.038)** | **−0.17 (0.001)** | −0.05 (0.290) | **−0.13 (0.011)** | −0.05 (0.314) |
| Ethnicity (Hispanic vs. non-Hispanic) | 0.02 (0.640) | 0.05 (0.346) | **−0.11 (0.035)** | −0.03 (0.572) | −0.02 (0.701) | −0.09 (0.068) | **−0.14 (0.007)** |
| Worked in healthcare field | −0.05 (0.321) | **−0.19 (<0.001)** | 0.07 (0.167) | −0.02 (0.755) | −0.04 (0.474) | 0.04 (0.476) | **0.21 (<0.001)** |
| Income | **−0.21 (<0.001)** | −0.07 (0.156) | −0.01 (0.825) | 0.02 (0.675) | −0.05 (0.377) | −0.03 (0.633) | 0.02 (0.759) |
| College graduate | **−0.13 (0.009)** | 0.07 (0.210) | **−0.15 (0.004)** | −0.06 (0.243) | −0.07 (0.171) | −0.04 (0.496) | **−0.10 (0.043)** |
| Democrat/lean Democrat political alignment | **0.15 (0.004)** | −0.05 (0.386) | 0.10 (0.060) | −0.02 (0.752) | 0.09 (0.078) | 0.02 (0.723) | 0.05 (0.308) |
| Private insurance (vs. other) | **−0.16 (0.002)** | **−0.13 (0.015)** | 0.09 (0.093) | 0.08 (0.136) | 0.05 (0.378) | 0.06 (0.220) | 0.10 (0.066) |
| Visited doctor past 12 months | 0.07 (0.191) | **0.13 (0.011)** | 0.02 (0.731) | 0.05 (0.353) | 0.01 (0.810) | 0.00 (0.959) | −0.01 (0.811) |
| Hospitalization past 12 months | −0.00 (0.983) | **0.18 (<0.001)** | **−0.13 (0.010)** | 0.02 (0.762) | −0.04 (0.452) | **−0.11 (0.026)** | **−0.18 (<0.001)** |
| Have a primary doctor | −0.04 (0.494) | **0.14 (0.009)** | −0.04 (0.401) | **0.10 (0.046)** | −0.00 (0.921) | −0.08 (0.117) | −0.02 (0.685) |

- Note: Bolded values indicate p < 0.05.

- Abbreviation: SDM, shared decision-making.

4 DISCUSSION

We have adapted and validated an existing measure to assess openness to AI-driven healthcare technologies in a population of US adults. The measure also captures how participants view seven potential concerns associated with implementing AI-enabled devices in healthcare. We adapted this measure from previous work measuring parents' attitudes toward the use of AI in their children's healthcare, which included an extensive literature review, cognitive interviews with parents to refine items, and exploratory and confirmatory factor analyses in two independent samples.14 In this study, we adapted the survey items to focus on adults' own healthcare, conducted cognitive interviews to refine items, and established initial evidence of the measure's validity in a sample of adults.

This novel tool can be used to study dimensions of AI-driven healthcare technologies that might influence the acceptability of implementing AI in healthcare, with relevance for LHSs as they design, implement, and scale these tools. The AAIH-A asks about new healthcare devices that diagnose, predict risk, select treatment, or offer medical guidance. The concern scales, which capture the importance people assign to different risks and benefits of potential uses of AI in healthcare, could help inform the design and deployment of such tools, including the tradeoffs people might be willing to accept. Although the AAIH-A covers a number of factors, it is relatively brief, which contributes to its practicality. These measures offer investigators and AI developers a way to systematically and routinely evaluate patient perspectives as they develop and implement AI-driven tools. These insights can inform the development and implementation of LHSs by identifying the factors that influence patients' openness to AI-enabled technologies in their healthcare. Given the essential role of patient engagement in LHSs, understanding these factors could also inform strategies to educate patients and families about the role of AI in LHSs.

The measure's openness and concerns subscales had acceptable to excellent reliability, with Cronbach's alpha values ranging from 0.74 to 0.92. Confirmatory factor analysis supported the expected factor structure of the concern scales with acceptable model fit. Positive associations of openness with scales measuring trust in the healthcare system and trust in technology provided evidence of construct validity. As expected, trait-based openness measured by the TIPI was also correlated with openness to new AI-driven devices. However, multivariable models suggested that the AAIH openness score was not simply explained by trait-based openness, as no personality scale predicted openness once multiple variables were included.

In this sample, concerns about quality and convenience predicted openness, along with faith in technology and trust in technology. Overall, participants were moderately open (3.6/5) to AI technologies in their own healthcare, a level comparable to what we found among parents rating their openness (3.4/5) to AI in their children's healthcare.9 In previous work validating the AAIH-P among parents, cost and shared decision-making, in addition to quality and convenience, predicted openness, suggesting that the concerns shaping the acceptability of AI in pediatric healthcare might differ from those in adult care. In the current study of the AAIH-A, trust in the health system did not predict openness, whereas it did predict openness among parents.

Finally, our data provide preliminary evidence that, although quality of life and sociodemographics were not related to general openness, they had statistically significant, if modest, correlations with specific concerns. These findings warrant further investigation, because capturing concerns about novel health technologies across different groups is important for achieving acceptability and equity in implementing such technologies.23 Significant gaps have been observed between stated interest in some technologies and actual engagement with them; we suspect that concerns play a major role in creating such gaps in healthcare engagement.24

These findings should be considered in light of this study's limitations. We did not examine the ecological validity of the measure, for example, by testing the association of openness with actual behaviors and healthcare choices. Variables in our study had modest to moderate correlations; future work can establish the consistency of these findings and explore additional variables that might explain variance in openness and concerns. The MTurk sample was predominantly White and male, and participants were relatively young, healthy, and educated, so our findings should not be assumed to generalize to all populations. Future work validating these measures should use nationally representative samples of adults in varying states of sickness and health.

5 CONCLUSION

The development of AI-driven technologies in healthcare, which are meant to improve healthcare and facilitate learning, should incorporate the views of the public. Empirical data are needed to inform these innovations. We adapted and validated a survey that allows researchers to capture adults' attitudes toward AI in healthcare. The openness of patients to AI-enabled healthcare will be essential to the continued development and advancement of an LHS.

ACKNOWLEDGMENTS

We thank Sara Burrous for her input into survey design and assistance in collecting data for this study.

FUNDING INFORMATION

Research reported in this publication was supported by the Washington University Institute of Clinical and Translational Sciences grant UL1TR002345 from the National Center for Advancing Translational Sciences (NCATS).

CONFLICT OF INTEREST STATEMENT

All authors have read and approved the final manuscript and do not declare any conflicts of interest.