Using Convolutional Neural Networks for the Classification of Suboptimal Chest Radiographs

ABSTRACT

Introduction

Chest X-rays (CXRs) rank among the most frequently performed X-ray examinations. They often require repeat imaging due to inadequate quality, leading to increased radiation exposure and delays in patient care and diagnosis. This research assesses the efficacy of the DenseNet121 and YOLOv8 neural networks in detecting suboptimal CXRs, which may minimise delays and enhance patient outcomes.

Method

The study included 3587 patients with a median age of 67 (0–102). It utilised an initial dataset comprising 10,000 CXRs randomly divided into a training subset (4000 optimal and 4000 suboptimal) and a validation subset (400 optimal and 400 suboptimal). The test subset (25 optimal and 25 suboptimal) was curated from the remaining images to provide adequate variation. Neural networks DenseNet121 and YOLOv8 were chosen due to their capabilities in image classification. DenseNet121 is a robust, well-tested model in the medical industry with high accuracy in object recognition. YOLOv8 is a cutting-edge commercial model targeted at all industries. Their performance was assessed via the area under the receiver operating characteristic curve (AUROC) and compared to radiologist classification, utilising the chi-squared test.

Results

DenseNet121 attained an AUROC of 0.97, while YOLOv8 recorded a score of 0.95, indicating a strong capability in differentiating between optimal and suboptimal CXRs. The alignment between radiologists and models exhibited variability, partly due to the lack of clinical indications. However, the difference in performance between the models and the radiologists was not statistically significant.

Conclusion

Both AI models effectively classified chest X-ray quality, demonstrating the potential for providing radiographers with feedback to improve image quality. Notably, this was the first study to include both PA and lateral CXRs as well as paediatric cases and the first to evaluate YOLOv8 for this application.

1 Introduction

Artificial intelligence (AI) refers to training machines to emulate human intelligence so that they can perform decision-making tasks without manual intervention [1]. Integrating visual intelligence into AI in medical imaging allows models to classify images based on relevant characteristics [1]. Deep learning algorithms enhance diagnostic accuracy in medical imaging by identifying abnormalities, segmenting images, and automating bone measurements [2, 3]. Additionally, certain AI models can detect inaccuracies in substandard radiographs [3].

Reject analysis plays a pivotal role in quality assurance within radiology by identifying suboptimal images and reducing the necessity for repeat examinations [4]. Chest X-rays (CXRs) are among the most performed imaging studies in clinical practice and tend to have high reject rates [5]. Research indicates that high rejection rates of chest radiographs may lead to delays in patient care, increased costs, radiation exposure, and errors in radiographic interpretation [5]. The primary reasons for rejecting CXRs include issues with collimation and positioning, such as the scapula obscuring the lung fields, which may result from the patient's critical condition or insufficient attention from radiographers [6, 7]. Furthermore, discrepancies in quality perception between radiographers and radiologists—where radiographers base their judgements on technical criteria, potentially rejecting images that radiologists might find acceptable—contribute to image rejection rates [6, 8, 9]. Saade et al. indicate that the rejection rates among radiographers are considerably higher than those of radiologists [9]. Consequently, reject analysis is essential for identifying the causes and frequency of rejected images and developing strategies to mitigate these issues [4].

A comprehensive literature review highlights a gap in models capable of effectively assessing technical errors in suboptimal radiographs, with few solutions addressing this challenge [10, 11]. This study employs AI-driven neural networks, specifically DenseNet121 and YOLOv8, to analyse CXRs, encompassing high-quality and substandard images. A study by Sriporn et al. employed convolutional neural network models, specifically MobileNet, DenseNet121, and ResNet-50, to investigate lung diseases by detecting lung lesions [12]. Notably, DenseNet121 demonstrated superior performance in image classification compared to the other models [12]. Other research also indicates DenseNet121's exceptional performance in image recognition within healthcare and various other fields, highlighting the adaptability of this model across multiple domains [13-15]. Hence, DenseNet121 is widely used in medical imaging due to its dense connections that enhance feature learning, making it highly suitable for complex image classification tasks [16]. YOLOv8, a state-of-the-art model, excels in feature extraction and integration, enabling rapid and precise classification [17]. Elmessery et al. evaluated different iterations of the YOLO-based algorithm aimed at the automatic detection of pathological conditions in broilers, underscoring the significant capabilities of YOLOv8 in image classification relevant to the healthcare domain [18]. Additional studies have demonstrated the high accuracy and efficiency of YOLOv8 in image classification applications within the healthcare sector [19]. The selection of these models is justified by their exceptional performance in image classification tasks [10-12, 17].

Integrating AI into radiology Quality Control (QC) has meaningful clinical advantages, such as ensuring consistent reporting, easing observer workload, and broadening quality checks across all radiographs [10]. Efficiently detecting rejects using AI enables auditing large volumes of images and providing feedback and education, thereby potentially reducing reject rates. This, in turn, can decrease radiation exposure, streamline workflow, expedite patient care and diagnosis, and enhance the overall patient experience [7]. Moreover, improving the quality of CXRs is crucial for accurately assessing critical findings such as pneumothorax, pneumonia, and lesions, thereby directly impacting patient outcomes [5].

This study assesses whether the DenseNet121 and YOLOv8 convolutional neural networks can effectively classify CXRs as optimal or suboptimal. Notably, it is the first study to include both PA and lateral CXRs, as well as paediatric cases, and the first to evaluate YOLOv8 in this context.

2 Methodology

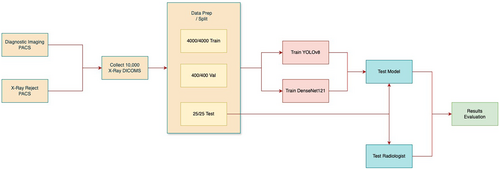

This study utilised a quantitative, retrospective, and experimental approach to evaluate the effectiveness of AI models in classifying optimal versus suboptimal CXRs. Data were sourced from a large tertiary health network covering a range of specialties, including a dedicated children's hospital. A substantial dataset of CXRs was collected and divided into training, validation, and test sets, with no overlap between the sets, to evaluate the models' performance relative to that of the radiologists (Figure 1).

2.1 Data Preparation

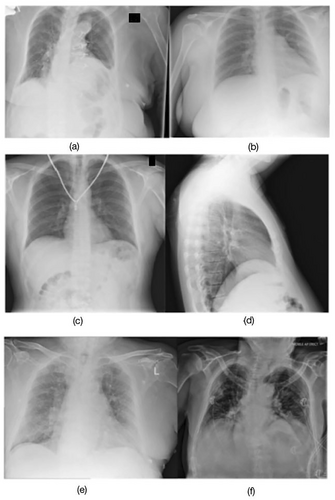

Initially, 10,000 CXRs were retrieved from Picture Archiving and Communication System (PACS) servers using a Python script and the Digital Imaging and Communications in Medicine (DICOM) protocol. DICOM is a standard for the storage and dissemination of medical images as it encompasses both the image data and the details regarding the method of capture [20]. Suboptimal radiographs from Reject PACS included various technical deficiencies such as the exclusion of anatomy, patient motion, artefacts, inadequate exposure, and patient rotation (Figure 2a to d). Optimal radiographs from the accepted pile on PACS were deemed to have no significant technical deficiencies, and their quality was sufficient for diagnoses to be made by the radiologists (Figure 2e,f). The whole image dataset included 28.4% PA, 28.6% AP, and 43% lateral projections. A randomised subset was then selected for training and validation. Training datasets are used to develop models, validation datasets are employed to fine-tune and optimise models, and testing datasets are used to assess model performance [21].

For model training, 4000 suboptimal images were obtained from the Reject PACS, and 4000 optimal images were obtained from the Diagnostic Imaging PACS. For validation, 400 optimal images and 400 suboptimal images were used (Figure 1). The test set comprised 25 optimal and 25 suboptimal CXRs with a distribution of 36.1% PA, 19.4% AP, and 44.5% lateral. The suboptimal test images covered a mix of technical deficiencies: 6 related to positioning, 6 to image quality, 9 with clipped anatomy, and 4 showing patient motion, with some overlap across categories. To mitigate biases and ensure a diverse dataset, the manual selection was performed by 2 radiographers (1 experienced and 1 junior) based on the standard chest radiograph criteria (Table S1). Prior studies showed that test sets for different feature categories were roughly equivalent despite notable discrepancies in the volume of training data [11]. Consequently, this study applied a comparable method, using equivalent amounts of optimal and suboptimal test images.
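The random division into training and validation subsets described above can be illustrated with a minimal sketch. It assumes a pool of 5000 image identifiers per class, which is an assumption because the study reports only the 10,000 total. The function name `split_balanced`, the identifier format, and the fixed seed are hypothetical, and the study's test set was curated manually from the remaining images rather than drawn at random.

```python
import random


def split_balanced(optimal_ids, suboptimal_ids, n_train=4000, n_val=400, seed=0):
    """Randomly split each class into training, validation, and leftover pools."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "remaining": []}
    for label, ids in (("optimal", optimal_ids), ("suboptimal", suboptimal_ids)):
        if len(ids) < n_train + n_val:
            raise ValueError(f"not enough {label} images for the requested split")
        shuffled = list(ids)
        rng.shuffle(shuffled)
        # Consecutive slices of one shuffled list cannot overlap.
        splits["train"] += [(i, label) for i in shuffled[:n_train]]
        splits["val"] += [(i, label) for i in shuffled[n_train:n_train + n_val]]
        splits["remaining"] += [(i, label) for i in shuffled[n_train + n_val:]]
    return splits


# Hypothetical identifiers; 5000 per class is an assumption, not a study figure.
optimal = [f"opt_{i}" for i in range(5000)]
suboptimal = [f"sub_{i}" for i in range(5000)]
splits = split_balanced(optimal, suboptimal)
print({name: len(items) for name, items in splits.items()})
```

Under these assumptions, the `remaining` pool is the set from which a curated test set could then be hand-selected.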

The performance of AI models was evaluated in comparison to radiologists, who serve as the clinical gold standard and exhibit a lower image rejection rate than radiographers. Training and testing models in alignment with the standards set by radiologists may have the potential to decrease radiation exposure for patients over time, as AI models could better guide radiographers to develop a perception akin to that of radiologists. This test set was limited to 50 images to facilitate a controlled comparison between model performance and radiologist assessment, as the same images were independently reviewed in the radiologist survey. This design ensured sufficient variance in patient demographics, rejection reasons, and borderline image quality (Figure 2).

The data retrieval method utilised convenience sampling without accounting for variables such as patient age, race, sex, radiographic equipment, or projection type. All images were resized to a resolution of 256 × 256 for optimal training on constrained computing resources. All study identifiers and corresponding images underwent anonymisation during the data extraction process, with no metadata preserved. The anonymised images were securely stored within a secure research computing environment for subsequent processing.

2.2 Model Training

The models underwent training for 200 epochs using PyTorch version 2.4 with Python 3.10. Training was performed on an NVIDIA P40 GPU with 24 GB of VRAM, using CUDA version 12.1. Hyperparameters such as batch size and learning rate were systematically adjusted to optimise training and validation outcomes (Appendices S1 and S2, found in the supporting information). Weights & Biases (WandB) was used to visualise the training progression, and loss graphs were generated to assess model stability.

2.3 Data Analysis

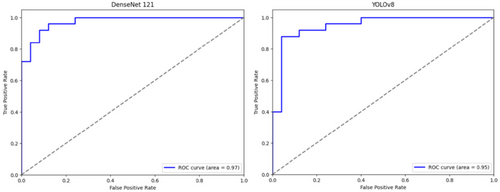

The study utilised AUROC curves to assess the accuracy of AI models in image quality classification. The neural network was assumed to have better performance as the AUROC approached 1.0 [22]. An AUROC above 0.5 indicated discrimination better than chance, and a value of 0.8 or higher indicated that the classification was acceptable [23].
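To make the AUROC interpretation concrete, the sketch below computes it directly from its pairwise definition: the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one, with ties counted as one half. Treating "suboptimal" as the positive class (label 1) is an assumption, and the labels and scores are illustrative values rather than study data. The function name `auroc` is hypothetical and is not the routine used by the authors.

```python
def auroc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Illustrative values only: 1 = suboptimal (assumed positive class), 0 = optimal.
example_labels = [1, 1, 0, 0]
example_scores = [0.9, 0.4, 0.6, 0.1]
print(auroc(example_labels, example_scores))
```

A model that scores every image identically yields 0.5 under this definition, which is why 0.5 serves as the chance-level reference point.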

Three radiologists, each possessing 5–10 years of expertise in the field, conducted a blind classification of the supplied images, designating them as either “No major technical deficiency” (optimal) or “Technical deficiency present” (suboptimal). The images were delivered as 256 × 256 JPEG files and presented to the radiologists through a Google Forms survey (see Figure S1 under supporting information).

The classifications were gathered and analysed to evaluate the results of the two models and the radiologists. A chi-squared test was used to determine whether the observed differences between the model classifications and those of the radiologists were statistically significant, indicating a genuine relationship rather than chance [24]. The goal was to assess whether the models' classifications were comparable to those of the radiologists. Statistical analysis was conducted using the Python scikit-learn package.
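As an illustration of the chi-squared comparison, the sketch below tests a 2 × 2 table of correct versus incorrect classifications for one model against the pooled radiologist ratings. The DenseNet121 row (44 correct, 6 incorrect) follows from the per-class results reported in Section 3, whereas the radiologist row is a hypothetical placeholder. The table layout, the omission of a continuity correction, and the function name `chi_squared_2x2` are all assumptions. This standard-library version does not reproduce the authors' own analysis.

```python
import math


def chi_squared_2x2(table):
    """Pearson chi-squared statistic and p-value (1 degree of freedom) for a 2x2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


model_row = [44, 6]          # DenseNet121: 20 + 24 correct out of 50 test images
radiologist_row = [126, 24]  # HYPOTHETICAL pooled counts for 3 readers x 50 images
chi2, p_value = chi_squared_2x2([model_row, radiologist_row])
print(f"chi2={chi2:.3f}, p={p_value:.3f}")
```

A p-value above the conventional 0.05 threshold would be read as no statistically significant difference between the two sets of classifications.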

2.4 Ethics

The study was classified as a quality improvement project by the Monash Health Ethics Review Board, which granted an exemption from full ethical review. As the research was conducted retrospectively using anonymised data, informed consent was not required. The project was approved under the reference number RES-23-0000-805Q.

3 Results

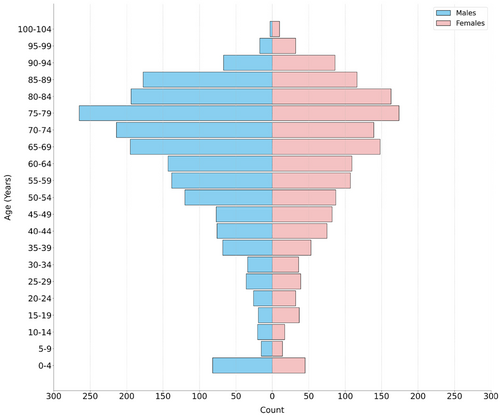

The patient cohort comprised 3587 individuals, including both adult and paediatric patients, with a median age of 67 years and a range of 0 to 102 years (Figure 3). The sample comprised 1986 males and 1601 females, providing a varied representation for model training and testing.

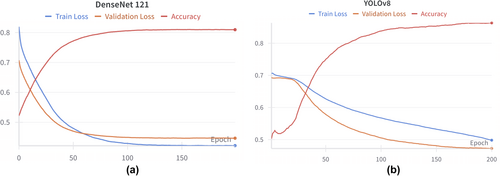

There is a positive trend in the accuracy of YOLOv8 and DenseNet121, along with a decrease in training and validation loss that eventually stabilises (Figure 4). The YOLOv8 loss curves suggest the potential for further improvement if left to run for longer; however, they were kept at 200 epochs for consistency (Figure 4b).

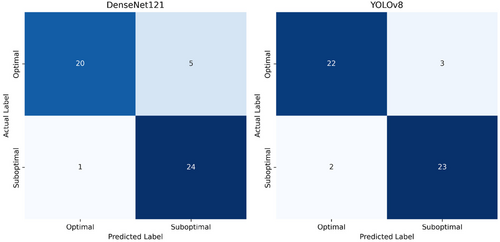

The DenseNet121 network correctly predicted 20 (80%) of optimal and 24 (96%) of suboptimal images. The ROC curve of DenseNet121's performance was plotted with an AUC of 0.97 (Figures 5 and 6). YOLOv8 correctly predicted 92% of optimal images and 88% of suboptimal images with an AUC of 0.95 (Figures 5 and 6).

A precision-recall graph was created to compare the models and radiologists in classifying optimal and suboptimal images (Figure 7). Precision refers to the proportion of images classified as optimal that were truly optimal. Recall indicates the proportion of optimal images successfully identified by the models. DenseNet121 showed the highest precision at 0.95, indicating that few suboptimal images were misclassified as optimal, while its recall was slightly lower at 0.8, reflecting some missed optimal images. YOLOv8 demonstrated a balanced precision of 0.9 and a higher recall of 0.85, suggesting better identification of optimal images with slightly reduced precision. The radiologists had varying precision and recall scores, indicating differing levels of accuracy and consistency, with Radiologist 3 having the highest recall among them (Figure 7). Although there was some variability in the radiologists' performance, the chi-squared test showed no significant difference between either model and the combined confusion matrices of the radiologists, implying similar levels of effectiveness (see Figure S2 under supporting information).
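The DenseNet121 precision and recall can be recovered from the per-class counts reported above, as the short worked example below shows. It treats "optimal" as the positive class, which matches the definition of recall given in the text. The function name `precision_recall` is hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# DenseNet121 on the 50-image test set, with "optimal" as the positive class.
tp = 20       # optimal images correctly classified as optimal (20 of 25)
fn = 25 - 20  # optimal images classified as suboptimal
fp = 25 - 24  # suboptimal images classified as optimal (24 of 25 were correct)
precision, recall = precision_recall(tp, fp, fn)
print(f"precision={precision:.2f}, recall={recall:.2f}")  # precision=0.95, recall=0.80
```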

4 Discussion

This study's results indicated that CNNs could be trained to detect suboptimal CXRs. Both models' ROC curves showed outstanding performance, with AUC measuring 0.97 for DenseNet121 and 0.95 for YOLOv8, suggesting strong classification ability while minimising false positives. DenseNet121 slightly outperformed YOLOv8, yet both models demonstrated potential for clinical application with opportunities for further refinement. Notably, this was the first study to include both PA and lateral chest X-rays, as well as paediatric cases, and to test YOLOv8 in this context. The consistent performance of the CNNs across diverse patient age groups, sexes, and radiographic projections highlights their robustness in image quality classification.

Research in related domains has similarly demonstrated the efficacy of DenseNet121 in identifying suboptimal X-rays with an AUC of 0.937. In a separate study, the performance of AI models was divided into subcategories based on technical deficiencies in images. The models obtained 0.87 AUC for missing anatomy, 0.91 for inadequate exposure, 0.94 for low lung volume, and 0.94 for patient rotation [8]. A direct comparison with the second study was not feasible because our research did not include subclasses, which resource constraints precluded at the time. While our model demonstrated superior performance on suboptimal images compared to findings from Kashyap et al. [10], this discrepancy might be attributed to differences in the training and test datasets. Literature has shown the promising performance of YOLOv8 in detecting tumours, classifying diseases, and organ segmentation [17]. However, no other studies have investigated YOLOv8's accuracy in identifying suboptimal-quality radiographs.

Early identification of suboptimal images offers significant benefits, such as reducing the need for repeat imaging, which in turn minimises radiation exposure to patients. Additionally, fewer imaging attempts reduce repositioning time and alleviate patient discomfort. However, integrating deep learning models into healthcare systems presents several challenges, including issues with reproducibility, limited explainability of model outputs, potential unintended biases, privacy concerns, compliance requirements, and increased costs. Therefore, thorough validation is crucial to prevent unexpected model failures [22]. A more practical implementation could involve auditing all CXRs sent to PACS, where the models would flag suboptimal images and provide a feedback loop for training and quality improvement.

4.1 Future Work

Future research may benefit from integrating clinical details before model training, which could enrich the clinical context and enhance both model accuracy and radiologists' image quality assessment. Suboptimal X-rays could also be classified into subclasses during training, enabling the model to recognise rejection reasons, such as anatomical exclusion, improper exposure, poor inspiration, mispositioning, and overlying anatomy [7]. Additionally, involving radiologists in grading the dataset before training could yield a more dependable ground truth.

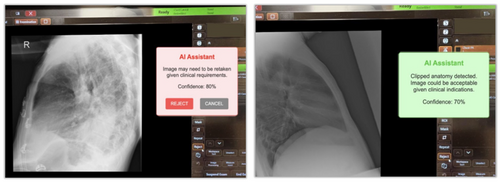

4.2 Applications

Figure 8 presents a mock-up of a flagging tool designed to enhance the application of AI in radiographic practice. Such flagging tools could significantly improve educational practices and facilitate quality audits. Integrating a model capable of flagging technical deficiencies directly at the imaging console offers the potential for immediate feedback to radiographers following X-ray acquisition (Figure 8). Such feedback could be particularly beneficial for students, medical imaging interns, and junior radiographers, who often face challenges in deciding whether to accept or reject suboptimal X-rays. In this context, AI models could provide real-time feedback, enhancing junior staff's decision-making skills. Importantly, the patient's clinical indications should inform the decision to accept or repeat an X-ray.

Lastly, these models can be an effective auditing tool for large image datasets. Through retrospective audits, they may help identify X-rays that require quality improvements, thereby uncovering opportunities for additional training or enhancing adherence to protocols. This approach eliminates the need to manually curate rejected data, which is time-consuming and laborious.
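A retrospective audit of this kind could be sketched as a simple thresholding step over model outputs. The example assumes each image has a predicted probability of being suboptimal. The function name `flag_suboptimal`, the 0.5 threshold, and the image identifiers and scores are all hypothetical and would need to be chosen and validated locally.

```python
def flag_suboptimal(scores, threshold=0.5):
    """Return (image_id, probability) pairs at or above the threshold, highest first."""
    flagged = [(image_id, p) for image_id, p in scores.items() if p >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical model outputs: predicted probability that each image is suboptimal.
audit_scores = {"cxr_001": 0.12, "cxr_002": 0.91, "cxr_003": 0.58, "cxr_004": 0.33}
for image_id, probability in flag_suboptimal(audit_scores):
    print(f"{image_id}: flagged for review (p={probability:.2f})")
```

Sorting by probability lets reviewers start with the images the model considers most likely to be suboptimal.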

4.3 Limitations

This research presents some limitations. Firstly, images were categorised into optimal and suboptimal based on where they were delivered. All images transmitted to Diagnostic Imaging PACS were considered optimal, whereas those directed to Reject PACS were classified as suboptimal. The classification relied exclusively on the radiographers' judgement, without any prior classification by radiologists, who are regarded as the benchmark for evaluating image quality. The dependence on the judgement of radiographers may have influenced the precision of the reference standard employed in this study.

Furthermore, the absence of clinical indications within the dataset led to inconsistencies in the radiologists' image quality assessments. Without comprehensive clinical information, the models and radiologists encountered difficulties in consistently evaluating the quality of the CXRs. This limitation reduced inter-observer agreement among radiologists, thereby impacting the overall reliability of the assessments.

In addition, the manually curated test set (n = 50) may introduce the potential for selection bias. While this design facilitated a controlled comparison between radiologist and model performance as a proof of concept, this limited sample size may not adequately reflect the full spectrum of technical deficiencies observed in routine clinical practice, thereby constraining the generalisability of the findings.

Lastly, the dataset encompassed inpatient and outpatient cases, introducing variability in the necessary image quality standards. Inpatient imaging frequently accommodates lower quality standards due to patients' critical conditions and clinical requirements, while outpatient imaging necessitates higher quality to facilitate precise diagnoses. The differences in image quality standards between inpatients and outpatients presented a challenge for model training, complicating the model's ability to generalise across various clinical settings. It is essential to consider these limitations when analysing the study's findings and assessing the broader relevance of the model.

5 Conclusion

This research demonstrated that DenseNet121 and YOLOv8 effectively categorise CXRs into optimal and suboptimal classes, achieving AUROC scores of 0.97 and 0.95, respectively. These models show significant potential for auditing radiographic quality, which may reduce the need for repeat imaging, lower patient radiation exposure, and improve workflow efficiency in clinical practice.

Future research should focus on refining these models to identify specific technical errors in radiographs, including rotation, clipped anatomy, or poor exposure. Clinical details could be incorporated for increased contextual precision by the model.

AI-assisted feedback tools show promise for providing real-time support to radiographers, while automated quality control systems could standardise imaging practices and improve patient outcomes. These findings highlight the potential of AI to enhance radiographic quality and diagnostic accuracy.

Acknowledgements

Open access publishing facilitated by Monash University, as part of the Wiley - Monash University agreement via the Council of Australian University Librarians.

Ethics Statement

The project was approved by the Monash Health Ethics Review Board under the reference number RES-23-0000-805Q.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

All data produced in the present study are available upon reasonable request to the authors.