Identifying epilepsy surgery referral candidates with natural language processing in an Australian context

Abstract

Objective

Epilepsy surgery is known to be underutilized. Machine learning-natural language processing (ML-NLP) may be able to assist with identifying patients suitable for referral for epilepsy surgery evaluation.

Methods

Data were collected from two tertiary hospitals for patients seen in neurology outpatients for whom the diagnosis of “epilepsy” was mentioned. Individual case note review was undertaken to characterize the nature of the diagnoses discussed in these notes, and whether those with epilepsy fulfilled prespecified criteria for epilepsy surgery workup (namely focal drug refractory epilepsy without contraindications). ML-NLP algorithms were then developed using fivefold cross-validation on the first free-text clinic note for each patient to identify these criteria.

Results

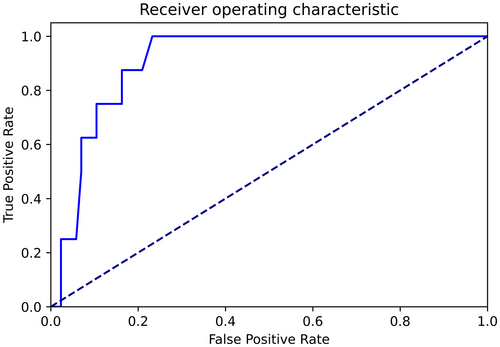

There were 457 notes included in the study, of which 250 patients had epilepsy. There were 37 (14.8%) individuals who fulfilled the prespecified criteria for epilepsy surgery referral without described contraindications, 32 (12.8%) of whom were not referred for epilepsy surgical evaluation in the given clinic visit. In the prediction of suitability for epilepsy surgery workup using the prespecified criteria, the tested models performed similarly. For example, the random forest model returned an area under the receiver operator characteristic curve of 0.97 (95% confidence interval 0.93–1.0) for this task, sensitivity of 1.0, and specificity of 0.93.

Significance

This study has shown that there are patients in tertiary hospitals in South Australia who fulfill prespecified criteria for epilepsy surgery evaluation who may not have been referred for such evaluation. ML-NLP may assist with the identification of patients suitable for such referral.

Plain Language Summary

Epilepsy surgery is a beneficial treatment for selected individuals with drug-resistant epilepsy. However, it is vastly underutilized. One reason for this underutilization is a lack of prompt referral of possible epilepsy surgery candidates to comprehensive epilepsy centers. Natural language processing, coupled with machine learning, may be able to identify possible epilepsy surgery candidates through the analysis of unstructured clinic notes. This study, conducted in two tertiary hospitals in South Australia, demonstrated that there are individuals who fulfill criteria for epilepsy surgery evaluation referral but have not yet been referred. Machine learning-natural language processing demonstrates promising results in assisting with the identification of such suitable candidates in Australia.

Key points

- In Australia, there are patients who may be eligible for epilepsy surgery but have not been referred for such evaluation.

- Machine learning, in particular natural language processing, may assist in identifying epilepsy surgery candidates.

- The random forest model returned an area under the receiver operator characteristic curve of 0.97 for this task.

1 INTRODUCTION

An estimated 250 000 Australians have epilepsy.1 Approximately one-third of these patients will have ongoing seizures despite treatment with two appropriate antiseizure medications (ASM) and be characterized as having drug refractory epilepsy.2 For this patient group, epilepsy surgery to resect or ablate the source of seizures offers a meaningful chance of seizure freedom, which in turn leads to enduring improvements in quality of life.3 Unfortunately, epilepsy surgery is vastly underutilized.

Various reasons contribute to this underutilization, one of which is a lack of identification of candidate patients and prompt referral to comprehensive epilepsy centers. Additional strategies to facilitate the referral of patients for evaluation for epilepsy surgery may be beneficial. Machine learning, in particular natural language processing (NLP), may be able to assist with the identification of patients suitable for epilepsy surgery workup. Studies have demonstrated that NLP techniques can achieve moderate to high performance in this task, with some models able to identify these patients 1–2 years prior to clinicians instigating referral.4 Briefly, NLP involves the use of computers to understand, analyze, and interpret human language.5 Machine learning approaches can be applied to natural language processing tasks to gain insight into various data types, such as unstructured text.

Natural language processing has previously been employed with the aim of identifying individuals who may be suitable for epilepsy surgery. These studies have typically analyzed medical free-text notes, and used algorithms including support vector machines, random forest models, and logistic regression models.6-10 A high level of performance has been described; however, the external generalizability of such approaches is uncertain. The majority of these studies have been conducted in the United States, and the extent to which these strategies translate to other centers is unknown. Since NLP is contingent upon lexical patterns, which may vary between different jurisdictions, evaluation of these strategies is necessary in diverse centers.

The aims of this study were to (1) determine the proportion of patients seen in two tertiary Australian hospitals who may be suitable for epilepsy surgery evaluation and (2) evaluate the performance of a pilot machine learning natural language processing analysis in the identification of patient characteristics relevant to epilepsy surgery referral.

2 MATERIALS AND METHODS

2.1 Study setting and data collection

The two participating hospitals (the Royal Adelaide Hospital and Flinders Medical Centre) are tertiary care centers with multiple specialized and general neurology outpatient departments, including epilepsy clinics. These outpatient departments are typically staffed by consultants and neurology advanced trainees. In Australia, advanced trainees are usually postgraduate year 5 or higher specialty trainees. These participating hospitals do not currently have the capacity to perform stereoelectroencephalography. When patients undergo evaluation for epilepsy surgery candidacy, such as with stereoelectroencephalography, they are typically referred for evaluation at other centers. Data were collected from the inception of the implementation of the institutional electronic medical record at each participating center to July 2022. Therefore, the inclusion periods for the two centers began in March 2020 and July 2021, respectively, and both ended in July 2022.

Notes entered in outpatient neurology visits in which the word “epilepsy” was used were collected. For each patient, the first recorded note from the time of inception of the electronic medical record was included. The reason for this selection criterion was that, for existing patients, in the first visit after transfer to the electronic medical record, previous documentation was frequently transcribed from paper notes into the electronic medical record to provide a site of reference moving forwards. Additionally, for patients who were seen for the first time after the introduction of the electronic medical record, a first visit typically includes a full history with associated comprehensive documentation. When the first note was not a clinic note (a medical note written by a doctor following in-person or phone review of a patient, as opposed to administrative and other notes), it was still included in the study, since the algorithms' ability to correctly identify clinic notes is also a significant factor that will influence subsequent applicability.

Manual review of the included notes was conducted by investigators to extract the following variables: whether an included note was in fact a clinic review note; whether a patient had a diagnosis of epilepsy (this was based on either a diagnosis mentioned by the treating doctor or a description of the patient having two or more separate occasions of unprovoked seizures); age; gender; the presence of focal onset seizures; the presence of generalized onset seizures; whether a lesion was described as being present on magnetic resonance imaging (MRI); whether a focal abnormality was described as being present on positron emission tomography (PET); the number of antiseizure medications previously trialed; whether the patient was seizure-free at the time of review (either by explicit use of this term or having been described not to have had seizures since a preceding appointment or intervention); whether they had previous epilepsy surgery; whether they were referred for epilepsy surgery as a result of the clinic encounter; whether they had any absolute or relative contraindications to epilepsy surgery; and whether they met prespecified criteria for referral for epilepsy surgery workup. The presence of absolute and relative contraindications was considered to include expressed opposition to surgical management, progressive incurable imminently life-limiting illness, and significant cognitive impairment.11 The criteria for referral for epilepsy surgery workup were focal epilepsy, failure of at least two described appropriate antiseizure medication trials, age ≤70, and the absence of described contraindications. For patients who were considered epilepsy surgery workup candidates based on the prespecified criteria, but who were not referred in the included clinic note, subsequent case note review was undertaken in September 2023 to enable the determination of outcome for these individuals with at least 12-month follow-up.
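
The prespecified referral criteria can be read as a simple conjunction of the manually extracted variables. The following is a minimal sketch of that rule, not the investigators' actual tooling; the `NoteReview` class, its field names, and the function name are hypothetical, and the sketch assumes that information missing from a note is treated as the criterion not being met, in keeping with the note-wise gold standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NoteReview:
    """Variables abstracted from one clinic note (hypothetical field names)."""
    has_epilepsy: bool
    focal_onset: Optional[bool]   # None when seizure onset is not described in the note
    asm_failed: Optional[int]     # described failed appropriate ASM trials; None if not stated
    age: Optional[int]
    contraindication: bool        # any described absolute or relative contraindication


def meets_referral_criteria(review: NoteReview) -> bool:
    """Apply the prespecified criteria; missing information counts as 'criteria not met'."""
    return (
        review.has_epilepsy
        and review.focal_onset is True
        and review.asm_failed is not None
        and review.asm_failed >= 2
        and review.age is not None
        and review.age <= 70
        and not review.contraindication
    )


# Illustrative records only; these are not study data.
candidate = NoteReview(True, True, 3, 42, False)
unclear_onset = NoteReview(True, None, 3, 42, False)
print(meets_referral_criteria(candidate))      # True
print(meets_referral_criteria(unclear_onset))  # False
```

Alternative criteria, such as a different age threshold, could be expressed by changing the corresponding condition.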

It is acknowledged that certain criteria described here may be debated, particularly regarding aspects of relative and absolute contraindications.12 The described, relatively narrow criteria were selected to provide a number of variables for the machine learning analysis on which its performance could be evaluated. Similar methods could be employed for alternative criteria.

In the collection of these variables, only the included clinic note was used for reference. In other words, when there was insufficient information in a given note to ascertain a piece of data (such as the number of antiseizure medications previously trialed), other components of the electronic medical record were not reviewed to determine this information. The reason for this approach was that it was acknowledged that a certain proportion of patients would have insufficient information available in a clinic note to make an evaluation as to whether they may be a candidate for epilepsy surgery. Since the machine learning analysis was intended to be performed on a note-wise basis, the gold standard that was required for comparison against the machine learning was the best conclusion that was able to be drawn from the individual note in question (see Section 4).

2.2 Machine learning analysis

The free-text document for each patient was the input for the machine learning analyses. Data preprocessing involved negation detection, punctuation removal, stop-word removal, and Porter stemming. Gendered pronouns were removed during the preprocessing stage. The Natural Language Toolkit (NLTK) library was used to perform negation detection, which involves appending the suffix “_NEG” to any terms that follow a negating term (such as “not”) in a sentence.13 Text was then transformed into a term frequency inverse document frequency (TF-IDF) array using n-grams of one to three word stems in length. Data were then randomly split into training and testing datasets in an 80%:20% ratio.
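
To illustrate the preprocessing order described above, the following is a minimal, standard-library sketch of negation marking followed by punctuation removal, stop-word removal, and n-gram generation. It is a simplified stand-in for the NLTK negation utility rather than the code used in this study: the negator list, stop-word list, and the rule that negation scope ends at sentence-ending punctuation are assumptions made for illustration, and Porter stemming and TF-IDF weighting are omitted for brevity.

```python
import re

# Illustrative subsets only (assumptions); real negator and stop-word lists are longer.
NEGATORS = {"not", "no", "never", "without"}
STOP_WORDS = {"the", "a", "an", "of", "has", "had", "is", "was", "and"}
SENTENCE_END = {".", "!", "?"}
PUNCTUATION = {".", ",", ";", ":", "!", "?"}


def tokenize(text):
    """Lower-case the text and split it into word and punctuation tokens."""
    return re.findall(r"[a-z]+|[.,;:!?]", text.lower())


def mark_negation(tokens):
    """Append '_NEG' to every word after a negator, until the sentence ends."""
    marked, negated = [], False
    for tok in tokens:
        if tok in SENTENCE_END:
            negated = False
            marked.append(tok)
        elif tok in PUNCTUATION:
            marked.append(tok)
        elif negated:
            marked.append(tok + "_NEG")
        else:
            marked.append(tok)
            if tok in NEGATORS:
                negated = True
    return marked


def clean(tokens):
    """Drop punctuation and stop-words (applied after negation marking)."""
    return [t for t in tokens if t not in PUNCTUATION and t not in STOP_WORDS]


def ngrams(tokens, n_min=1, n_max=3):
    """Return all n-grams of n_min to n_max tokens, joined by spaces."""
    return [
        " ".join(tokens[i:i + n])
        for n in range(n_min, n_max + 1)
        for i in range(len(tokens) - n + 1)
    ]


note = "No further seizures. Focal onset noted."
marked = mark_negation(tokenize(note))
features = ngrams(clean(marked))
print(marked)
print(features)
```

In this toy sentence, “seizures” becomes “seizures_NEG” while “focal” is left unmarked, so a downstream model can distinguish a negated mention from an affirmed one.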

XGBoost, logistic regression, and random forest models were then developed on the training data set using fivefold cross-validation. Hyperparameter tuning and architecture experimentation were conducted at this stage. Cutoff scores were derived with Youden's index.14 After each model had been optimized on the training data set, performance was evaluated on the holdout test data. Area under the receiver operator characteristic curve (AUROC) values were generated with 95% confidence intervals (95% CI) calculated via bootstrapping. The primary outcome of the study was the AUROC for the best performing model in the classification of individuals meeting criteria for consideration of epilepsy surgery referral. Calibration graphs were produced with four bins. The application of cutoff scores enabled the calculation of metrics including sensitivity, specificity, and F1 scores, which were calculated as standard. Misclassifications of the best performing model, and word stems with the highest/lowest regression coefficients, were collected for epilepsy surgery workup suitability classifications. Analyses were undertaken using open-source Python and R libraries, including SciKit-Learn and XGBoost.15, 16
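
The cutoff selection and confidence interval steps can be sketched as follows. This is an illustrative, standard-library implementation under stated assumptions, not the study code: the function names are hypothetical, the toy labels and scores are invented, and a percentile bootstrap is assumed because the exact bootstrap variant is not specified. Youden's index is $J = \text{sensitivity} + \text{specificity} - 1$, and the cutoff is the threshold that maximizes $J$.

```python
import random


def auroc(y_true, scores):
    """AUROC as the probability that a positive outranks a negative (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_cutoff(y_true, scores):
    """Return (threshold, J) maximizing J = sensitivity + specificity - 1."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        tn = sum(1 for y, s in zip(y_true, scores) if y == 0 and s < t)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j


def bootstrap_auroc_ci(y_true, scores, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUROC (resamples lacking a class are redrawn)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [y_true[i] for i in idx]
        if 0 < sum(ys) < n:
            stats.append(auroc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy labels and model outputs, for illustration only.
y = [0, 0, 0, 0, 1, 0, 1, 1]
p = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.9]
cutoff, j = youden_cutoff(y, p)
print(round(auroc(y, p), 3), cutoff, round(j, 2))
print(bootstrap_auroc_ci(y, p))
```

Because Youden's index weights sensitivity and specificity equally, the resulting cutoff can yield many false positives when the positive class is rare; a different operating point could be chosen if false positives were more costly.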

2.3 Ethical approval

This study was conducted with institutional review board approval from the Central Adelaide Local Health Network and Southern Adelaide Local Health Network Research Ethics Committees (reference numbers 14750 and 35.22).

3 RESULTS

3.1 Patient characteristics

There were 457 notes included in the study. Of these notes, 307 were clinic notes, and 250 of the 307 clinic notes were for patients with epilepsy. Of the patients with epilepsy, the mean age was 45.3 (SD 17.8) and 135 (54%) were female. There were 117 (46.8%) patients with focal seizures and 49 (19.6%) with generalized seizures. Two of these patients were described to have both focal and generalized seizures. There were 86 (34.4%) individuals in whom the type of seizure was not specified in the included note. The number of patients with a described MRI was 72 (28.8%) and the number with a PET was 5 (2%). The number of patients who had a focal MRI lesion described as being present was 40 (16%). There were no patients who were described to have a focal PET abnormality. Accordingly, 32 and 5 patients had a non-lesional MRI and PET, respectively. Patient characteristics are displayed in Table 1.

| Characteristics | Epilepsy patients (n = 250) |
|---|---|
| Mean age (SD) | 45.3 (17.8) |
| Female gender (%) | 135 (54) |
| Focal seizures (%) | 117 (46.8) |
| Generalized seizures (%) | 49 (19.6) |
| Unidentified seizure type (%) | 86 (34.4) |
| Focal MRI lesion (%) | 40 (16) |
| Focal PET abnormality | 0 |

3.2 Epilepsy treatment

The median number of ASM that had been described to be trialed previously was 2 (IQR 1–3, minimum 0, maximum 6). There were 129 (51.6%) patients who had trialed ≥2 ASM. There were 130 (52%) patients who were seizure-free at the time of review. There were 31 individuals with either a relative or absolute contraindication to epilepsy surgery. The number of patients referred for epilepsy surgery as a result of the clinic visit was 5 (2%), and 4 (1.6%) had previously undergone epilepsy surgery. There were 37 (14.8%) individuals who fulfilled the prespecified criteria for epilepsy surgery referral without described contraindications, 32 (12.8%) of whom were not referred for epilepsy surgery in the given clinic visit. There was no described reason for non-referral in any of these cases.

3.3 Follow-up outcomes

In the 32 identified clinic notes in which there was no referral but individuals fulfilled the prespecified criteria for referral, all patients had a follow-up period of at least 12 months prior to outcome determination. In this group, four (12.5%) patients were subsequently referred for epilepsy surgery evaluation. Eleven (34.4%) patients were described to have been content with their seizure frequency and did not pursue epilepsy surgery evaluation as a result. Seven (21.9%) patients achieved seizure freedom with medications. There was one (3.1%) patient in whom lack of medical engagement and medication non-adherence was considered to preclude epilepsy surgery evaluation. There was one (3.1%) patient for whom epilepsy surgery was considered too high risk. There were four (12.5%) cases in which there was no described reason for not referring for epilepsy surgery evaluation. One (3.1%) patient moved interstate, and three (9.4%) patients died.

3.4 Machine learning analysis

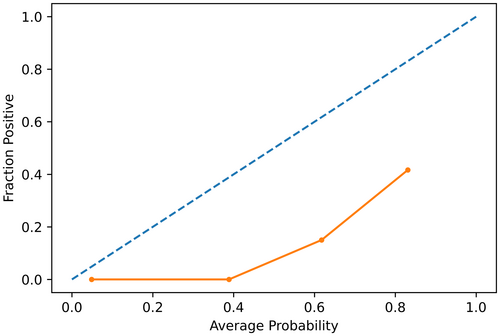

The machine learning natural language processing performance characteristics are summarized in Table 2. With respect to the primary outcome of the study, the tested models performed similarly. Given the prespecified analysis plan to examine misclassifications of the best performing model, the random forest model was selected as it provided the numerically highest AUROC. This model returned an AUROC of 0.97 (95% CI 0.93–1.0) for this task (see Figure 1). When results were dichotomized, this model provided a sensitivity of 1.0 and specificity of 0.93 for the classification of those meeting the criteria for epilepsy surgery workup referral. However, the examination of the calibration graph revealed that the model was not well calibrated (see Figure 2), which is consistent with the predominance of false positives (and absence of false negatives) returned by the model.

| Classification task | Model | AUROC (95% CI) | AP score | TP | FN | TN | FP | Sensitivity | Specificity | PPV | NPV | F1 score | Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Identify clinic note | LR | 1.0 (0.99–1.0) | 0.998 | 58 | 1 | 34 | 1 | 0.98 | 0.97 | 0.98 | 0.97 | 0.98 | 0.98 |
| | RF | 1.0 (0.99–1.0) | 0.999 | 57 | 2 | 35 | 0 | 0.97 | 1.00 | 1.00 | 0.95 | 0.98 | 0.98 |
| | XGB | 0.99 (0.97–1.0) | 0.995 | 58 | 1 | 33 | 2 | 0.98 | 0.94 | 0.97 | 0.97 | 0.97 | 0.97 |
| Epilepsy diagnosis | LR | 0.92 (0.84–0.98) | 0.900 | 51 | 1 | 34 | 8 | 0.98 | 0.81 | 0.86 | 0.97 | 0.92 | 0.90 |
| | RF | 0.92 (0.84–0.97) | 0.889 | 49 | 3 | 35 | 7 | 0.94 | 0.83 | 0.88 | 0.92 | 0.91 | 0.89 |
| | XGB | 0.93 (0.87–0.97) | 0.936 | 50 | 2 | 34 | 8 | 0.96 | 0.81 | 0.86 | 0.94 | 0.91 | 0.89 |
| Focal onset seizures | LR | 0.88 (0.79–0.95) | 0.692 | 17 | 3 | 59 | 15 | 0.85 | 0.80 | 0.53 | 0.95 | 0.65 | 0.81 |
| | RF | 0.94 (0.87–0.99) | 0.834 | 18 | 2 | 65 | 9 | 0.90 | 0.88 | 0.67 | 0.97 | 0.77 | 0.88 |
| | XGB | 0.96 (0.91–0.99) | 0.898 | 19 | 1 | 66 | 8 | 0.95 | 0.89 | 0.70 | 0.99 | 0.81 | 0.90 |
| Generalized onset seizures | LR | 0.8 (0.68–0.9) | 0.293 | 9 | 1 | 55 | 29 | 0.90 | 0.65 | 0.24 | 0.98 | 0.38 | 0.68 |
| | RF | 0.83 (0.68–0.93) | 0.434 | 9 | 1 | 56 | 28 | 0.90 | 0.67 | 0.24 | 0.98 | 0.38 | 0.69 |
| | XGB | 0.86 (0.75–0.95) | 0.378 | 8 | 2 | 69 | 15 | 0.80 | 0.82 | 0.35 | 0.97 | 0.48 | 0.82 |
| MRI with focal lesion | LR | 0.83 (0.69–0.94) | 0.220 | 6 | 0 | 56 | 32 | 1.00 | 0.64 | 0.16 | 1.00 | 0.27 | 0.66 |
| | RF | 0.88 (0.72–0.99) | 0.498 | 4 | 2 | 85 | 3 | 0.67 | 0.97 | 0.57 | 0.98 | 0.62 | 0.95 |
| | XGB | 0.89 (0.77–0.98) | 0.448 | 6 | 0 | 65 | 23 | 1.00 | 0.74 | 0.21 | 1.00 | 0.34 | 0.76 |
| Trialed ≥2 antiseizure medications | LR | 0.88 (0.8–0.94) | 0.676 | 22 | 3 | 52 | 17 | 0.88 | 0.75 | 0.56 | 0.95 | 0.69 | 0.79 |
| | RF | 0.86 (0.78–0.93) | 0.663 | 21 | 4 | 56 | 13 | 0.84 | 0.81 | 0.62 | 0.93 | 0.71 | 0.82 |
| | XGB | 0.86 (0.78–0.93) | 0.636 | 21 | 4 | 52 | 17 | 0.84 | 0.75 | 0.55 | 0.93 | 0.67 | 0.78 |
| Seizure-free currently | LR | 0.77 (0.67–0.86) | 0.508 | 27 | 2 | 37 | 28 | 0.93 | 0.57 | 0.49 | 0.95 | 0.64 | 0.68 |
| | RF | 0.81 (0.72–0.89) | 0.623 | 24 | 5 | 46 | 19 | 0.83 | 0.71 | 0.56 | 0.90 | 0.67 | 0.74 |
| | XGB | 0.86 (0.78–0.93) | 0.720 | 27 | 2 | 45 | 20 | 0.93 | 0.69 | 0.57 | 0.96 | 0.71 | 0.77 |
| Contraindication to epilepsy surgery | LR | 0.82 (0.61–0.95) | 0.126 | 3 | 0 | 59 | 32 | 1.00 | 0.65 | 0.09 | 1.00 | 0.16 | 0.66 |
| | RF | 0.84 (0.54–1.0) | 0.302 | 2 | 1 | 87 | 4 | 0.67 | 0.96 | 0.33 | 0.99 | 0.44 | 0.95 |
| | XGB | 0.85 (0.53–1.0) | 0.689 | 2 | 1 | 91 | 0 | 0.67 | 1.00 | 1.00 | 0.99 | 0.80 | 0.99 |
| Meets criteria for epilepsy surgery referral | LR | 0.95 (0.91–0.99) | 0.622 | 8 | 0 | 78 | 8 | 1.00 | 0.91 | 0.50 | 1.00 | 0.67 | 0.91 |
| | RF | 0.92 (0.86–0.97) | 0.407 | 8 | 0 | 72 | 14 | 1.00 | 0.84 | 0.36 | 1.00 | 0.53 | 0.85 |
| | XGB | 0.92 (0.84–0.99) | 0.515 | 7 | 1 | 73 | 13 | 0.88 | 0.85 | 0.35 | 0.99 | 0.50 | 0.85 |

- Abbreviations: AP, average precision; AUROC, area under the receiver operator characteristic curve; FN, false negative; FP, false positive; LR, logistic regression; NPV, negative predictive value; PPV, positive predictive value; RF, random forest; TN, true negative; TP, true positive; XGB, XGBoost.
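
The dichotomized metrics in Table 2 follow from the confusion matrix counts using the standard definitions. As a minimal sketch (the function name is hypothetical), the counts reported for the logistic regression model on the clinic note identification task can be used to reproduce that row:

```python
def confusion_metrics(tp, fn, tn, fp):
    """Standard dichotomous performance metrics from confusion matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


# Counts from Table 2: identify clinic note, logistic regression.
row = confusion_metrics(tp=58, fn=1, tn=34, fp=1)
print({name: round(value, 2) for name, value in row.items()})
# {'sensitivity': 0.98, 'specificity': 0.97, 'ppv': 0.98, 'npv': 0.97, 'accuracy': 0.98, 'f1': 0.98}
```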

With respect to misclassifications, the random forest model had no false-negative results on the holdout test dataset in the identification of individuals suitable for epilepsy surgery workup referral. In other words, the random forest model correctly identified all patients who were indeed referred for epilepsy surgery as a result of their clinic visit that were in the test dataset. Therefore, the only examples of misclassifications for this model were false positives. One example of a false positive was a telehealth consultation regarding a patient with epilepsy on oxcarbazepine with adequately controlled seizures but significant drowsiness on the medication, who was planned for oxcarbazepine levels, a sleep deprived electroencephalogram and a brain magnetic resonance imaging scan with an “epilepsy” sequence. This patient was not classified as fulfilling the criteria for referral for multiple reasons, including lack of information regarding their seizure onset (it was unclear from the note whether they had focal onset or generalized onset seizures) and insufficient evidence of ≥2 appropriate antiseizure medication trials. However, it could be suggested that it is most likely that the patient has focal seizures (given the choice of oxcarbazepine) and that they most likely have previously failed other antiseizure medications (since to access oxcarbazepine on the Australian Pharmaceutical Benefits Scheme, one must previously have had seizures that were unable to be controlled by other agents).

When examining the word stems with the most extreme regression coefficients in the prediction of epilepsy surgery referral candidature, patterns relating to medical treatment and note types were observed. In particular, word stems with strong positive regression coefficients typically related to focal epilepsy, and to second- or third-line antiseizure medications (e.g., “lacosamid,” “seizur,” “tempor,” “focal,” “perampanel,” and “oxcarbazepin”). There were also several words that alone may indicate a degree of overfitting, although in context may relate to lack of seizure freedom, namely “month” and “time.” There were also word stems that related to note types that were not clinic notes (e.g., inclusion of fax numbers in administrative notes).

4 DISCUSSION

These results have demonstrated that, in an Australian context, there are a significant number of patients with epilepsy who may be suitable for referral for epilepsy surgery workup who have not been referred. Machine learning natural language processing can accurately characterize aspects of the medical history of patients with epilepsy, including having a described focal onset to their seizures. This technique can also reasonably accurately identify patients who fulfill prespecified criteria for being candidates for referral for epilepsy surgery workup.

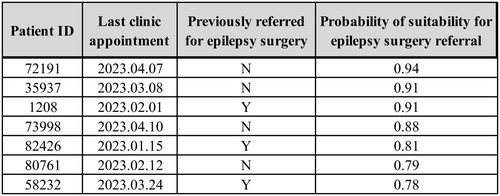

While the performance of the algorithms developed in this study has been presented with dichotomous performance metrics, the potential utility of the algorithms may also be served with a continuous output. The continuous output of a machine learning algorithm could be made an indicator of the probability of a patient fulfilling the criteria for epilepsy surgery referral. Therefore, rather than making a categorical classification, patients, or notes, could be ranked in order of likelihood of fulfilling the criteria. This ranking could then be used to facilitate audit and quality improvement activities that aim to ensure that all patients who may benefit from epilepsy surgery workup have been actively considered for this process (see Figure 3). The impact of such an application would then need to be examined regarding total referrals and patient-centered outcomes, such as quality of life and seizure freedom, to demonstrate benefit. Such applications may evaluate the impact of different styles of output, and which style clinicians find most useful.
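
As a minimal sketch of the ranking approach described above, continuous model outputs could be sorted so that the notes most likely to fulfill the criteria are reviewed first. The note identifiers, scores, and function name below are hypothetical and are not study data.

```python
def rank_notes(scores):
    """Sort note identifiers from highest to lowest model output."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical note identifiers and model outputs, for illustration only.
model_output = {"note_A": 0.12, "note_B": 0.91, "note_C": 0.47}
review_order = rank_notes(model_output)
print(review_order)  # ['note_B', 'note_C', 'note_A']
```

Given the poor calibration noted in the results, such outputs are better interpreted as a relative ordering for audit than as literal probabilities.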

This study has focused on the analysis of medical free text in clinical notes. It should also be noted that, while such reports are at times copied into clinical notes, MRI reports and electroencephalogram reports may also provide useful medical text for future analyses. Furthermore, multiparametric machine learning models may incorporate multiple datatypes, including free text, imaging, and discrete data fields for this purpose. There has been significant research utilizing MRI and PET with machine learning approaches to predict the outcomes of epilepsy surgery.17, 18 Additionally, models that integrate clinical and imaging features to aid in epileptogenic zone localization have been developed.19 It seems likely that transfer learning, utilizing models developed for these tasks, to a task of identifying those suitable for epilepsy surgery workup referral, would be effective.

There are important limitations of this study that require consideration. In particular, the entire analysis is based on individual clinic notes. Therefore, data that were not present in these clinic notes were not included in the analysis. These additional data may have included electroencephalogram or imaging reports, as well as clinical progress. For example, while a high proportion of patients appear not to have been referred for epilepsy surgery, this result is on the basis of a single appointment. The duration of time before subsequent referral was beyond the scope of this project. Although conducted across two centers, and supporting previous findings from the United States of America,6-10 the utility of the models of this study is still contingent upon local documentation practices. It is possible that in other settings in which there are different practices with respect to medical documentation, the performance of such models may be lower. Additionally, as discussed above, the indications and contraindications for referral for epilepsy surgery may be debated and vary between centers.

Future research in this area may seek to further develop the algorithms in this study, through further calibration and external validation at other centers. Audit-based studies may seek to examine whether the implementation of such algorithms improves patient or system-oriented outcomes. These studies may also seek to examine health economic outcomes pertaining to subsequent lost years of productivity. Further algorithms aiming to identify patients suitable for epilepsy surgery may assess multiple serial patient notes, as well as integration of multiple datatypes, such as imaging results.

5 CONCLUSION

In Australia, there remain patients who may benefit from epilepsy surgery workup but who are not referred. Machine learning natural language processing can accurately identify patients who may be suitable for referral. Further research aiming to leverage such technology to improve patient outcomes is warranted.

AUTHOR CONTRIBUTIONS

Sheryn Tan and Stephen Bacchi were involved in conceptualization and design of work, acquisition of data, analysis and interpretation of data, drafting the article, and revising the article critically for important intellectual content. Rudy Goh, Jeng Swen Ng, Charis Tang, Cleo Ng, Joshua Kovoor, Brandon Stretton, Aashray Gupta, and Christopher Ovenden were involved in conceptualization and design of work, drafting the article, and revising the article critically for important intellectual content. Merran Courtney, Andrew Neal, Emma Whitham, Joseph Frasca, Michelle Kiley, and Amal Abou-Hamden were involved in conceptualization and design of work and revising the article critically for important intellectual content. All authors gave final approval for the version to be published and agreed to be accountable for all aspects of the work.

FUNDING INFORMATION

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

ACKNOWLEDGMENTS

Open access publishing facilitated by The University of Adelaide, as part of the Wiley - The University of Adelaide agreement via the Council of Australian University Librarians.

CONFLICT OF INTEREST STATEMENT

None of the authors has any conflict of interest to disclose.

ETHICS STATEMENT

We confirm that we have read the Journal's position on issues involved in ethical publication and affirm that this report is consistent with those guidelines.

Open Research

DATA AVAILABILITY STATEMENT

Research data are not shared due to institutional ethics approval.