QuadWindow: A Perspective-Aware Framework for Geometric Window Extraction From Street-View Imagery

Funding: This work was supported by the National Key Research and Development Program of China (2018YFD1100405).

ABSTRACT

Rapid and reliable assessment of building damage is essential for post-disaster response and recovery. Because windows often reflect critical structural changes, extracting them automatically from street-view images provides valuable information for emergency assessment, urban risk modeling, and disaster database updates. Existing methods struggle to exploit the quadrilateral prior of windows for two main reasons: they handle perspective distortion poorly, and they lack robust loss functions when precise vector annotations are unavailable. To overcome these challenges, we introduce QuadWindow, a framework that handles perspective distortion through a perspective transformation subnetwork. This subnetwork predicts transformations from street-view images to frontal views, which simplifies window extraction without manual correction. Additionally, we propose a differentiable rendering loss that directly aligns predicted quadrangles with raster-based ground truth, removing the need for explicit corner-point annotations. Experiments on five façade datasets show that QuadWindow outperforms state-of-the-art methods, achieving an average F1-score of 87.6% and an average Intersection over Union (IoU) of 78.03%, improvements of 1.47 and 5.2 percentage points, respectively.

1 Introduction

Automatic extraction of windows from building façade images is critical for geospatial applications such as urban energy modeling [1-3], daylight simulation [4-6], disaster assessment [7-9], 3D building modeling [10, 11], and building digital documentation [12]. Previous studies [13-20] employed heuristic methods, relying on hand-crafted pattern templates or grammatical rules to match the repetitive and symmetrical features of building façades. However, these methods have limited feature representation capacity and rely heavily on manual priors, which makes it difficult for them to adapt to diverse façade styles.

Because window shapes vary and street-view images exhibit perspective distortion, accurately extracting window instances remains challenging. The task requires not only careful algorithm design but also substantial human effort for precise annotation. Accordingly, most studies recast window parsing as quadrangle extraction, since the quadrangle is the dominant window shape in urban façades [21, 22]. On rectified façade images, this simplification reduces to extracting rectangular windows [16, 23]. Although this reformulation sacrifices some accuracy, it is justified for two reasons: (1) it simplifies the task assumptions and reduces the complexity of the output space, which facilitates the development of high-performance models; and (2) in applications such as seismic simulation and daylight analysis, the accuracy provided by quadrangles is often sufficient for practical needs.

Building on these considerations, this study formulates window extraction as a quadrangle prediction problem. However, training a high-performance deep learning model under this simplified formulation remains challenging, particularly when it comes to exploiting spatial priors on window arrangement. Such priors are essential for improving network performance [24-26], especially under occlusion, where they help recover occluded windows. Furthermore, spatial relationships such as the alignment and size consistency of windows in the same row or column provide structural information that can improve predictions. While previous studies have successfully integrated spatial priors [27, 28], their methods often rely on façade images manually corrected to a frontal view. In street-view images, however, perspective distortion disrupts the spatial arrangement of windows and reduces the reliability of these methods. Although manual rectification can partially mitigate the distortion, it adds complexity and restricts the practical applicability of these methods to large-scale urban analysis.

Another challenge lies in exploiting the quadrangle assumption to obtain robust and accurate predictions. Enforcing a quadrangle constraint significantly simplifies window extraction and yields more refined shapes for quadrangular windows. Wang et al. [29] proposed an approach based on a generalized bounding box, while Li et al. [21] introduced a key point prediction method; both achieved promising results. However, these methods face a critical issue in constructing loss functions, because they require explicit ground-truth annotations of window corner points. This is problematic because many annotations in existing datasets are raster masks, and a significant portion of them do not conform to a quadrangle. Forcibly converting such annotations into bounding boxes or corner points often causes substantial accuracy loss, as illustrated in Figure 1. This raises an important question: can we avoid reprocessing arbitrarily shaped window annotations and instead establish a loss directly between vectorized predictions and raster ground-truth annotations? Such a loss would enable the model to predict more regular window shapes without additional re-annotation effort.

To address these challenges, we propose an improved deep learning framework for quadrilateral-based window extraction from street-view images. To exploit the quadrangle prior, and following methods that represent objects as sets of points [31], our approach adopts a multi-branch architecture that predicts quadrangles directly, ensuring geometric consistency despite perspective distortion. First, we integrate a perspective transformation estimation subnetwork that predicts transformations from street-view façade images to frontal views, adaptively eliminating perspective distortion. To supervise the estimation of the perspective matrices, we design two unsupervised loss functions that exploit the inherent structural regularity of window layouts, providing effective supervision without additional annotations. Additionally, we introduce a differentiable rendering loss that directly aligns quadrangle predictions with raster-based ground truth, removing the need for manual simplification or re-annotation. Experimental results demonstrate that our method outperforms state-of-the-art methods on five datasets, with an average F1-score of 87.6% and an average IoU of 78.03%, improvements of 1.47 and 5.2 percentage points, respectively. The main contributions of this paper are as follows:

- We propose a subnetwork that predicts transformations from street-view images to frontal views, eliminating the need for manual correction and enabling robust integration of spatial priors through attention mechanisms. We also introduce two unsupervised loss functions that supervise these transformations without additional annotations.

- A differentiable rendering loss is proposed to directly align quadrangle predictions with raster-based ground truth annotations, bypassing the need for manual re-annotation or contour simplification and enabling regularized quadrangle representations.

- We develop a multi-branch network for quadrilateral-based window extraction that leverages spatial priors and attention mechanisms. This framework generates geometrically consistent vectorized window instances in an end-to-end manner.

The remainder of this paper is organized as follows: Section 2 reviews related work and outlines our motivations, followed by Section 3 detailing the proposed method. Sections 4 and 5 present experimental results and ablation studies, respectively, while Section 6 concludes the paper.

2 Related Work

2.1 Window Extraction With Architectural Prior

Windows are typically quadrilaterals arranged in symmetrical patterns, and this regularity constitutes one of the most significant forms of prior knowledge for extracting windows from building façades. This prior constrains both the extraction process and its results, helping to address challenges such as occlusion and false extractions. Its value has been demonstrated in both grammar-based methods [14, 17, 32, 33] and deep learning-based approaches [26, 27, 34-36].

In deep learning, architectural priors have been integrated through post-processing, loss functions, or attention mechanisms. For example, Ma and Ma [34] proposed a post-optimization method that clusters and votes for window bounding boxes along the horizontal and vertical directions, refining candidate boxes whose confidence scores fall below a predefined threshold. Similarly, Liu et al. [27] and Zhang et al. [36] cluster windows in the same row and column and constrain the segmentation results with a variance loss. Meanwhile, Sun et al. [35] and Zhuo et al. [28] employed attention mechanisms to model long-range dependencies, implicitly encoding spatial relationships between windows. Tao et al. [22] demonstrated that sparse attention mechanisms can significantly enhance network representation capability when applied to corrected images.

While previous studies have successfully integrated spatial priors, their methods often rely on façade images manually corrected to a frontal view. Street-view images, by contrast, suffer from perspective distortion, which diminishes the reliability of these approaches. Although manual rectification can mitigate this issue [6, 37], it does not scale to large datasets, limiting the practicality of such methods in real-world applications. Our method addresses this limitation directly by learning transformation matrices from raw, distorted images, thereby improving scalability and practicality.

2.2 Vectorized Window Extraction

To leverage the quadrilateral assumption of windows, traditional grammar-based methods often assume that windows are regular rectangles in rectified images and parse façades using appropriate grammar rules to balance representation accuracy and computational efficiency [13, 16, 26].

In deep learning, researchers have also exploited the quadrilateral assumption to improve extraction accuracy. For instance, DeepFacade [27] and DAN-PSPNet [36] impose self-regulating rectangle constraints that penalize non-rectangular window predictions by comparing them with their rectangular hulls and bounding boxes. DeepWindows [35] implicitly encodes window shape by embedding window widths and heights into high-dimensional features and concatenating them with semantic features. Although these methods improve overall segmentation accuracy, they still suffer from over-smoothing because they rely on raster grid representations, which limit boundary sharpness [28, 38]. To obtain sharper window representations, some researchers have adopted more concise vectorized representations. For example, Wang et al. [29] proposed using two bounding boxes to represent the four corners of a window, enabling sharp representations without resolution constraints. Li et al. [21] introduced a bottom-up approach that first detects window corners in the image and then groups them into window instances based on pairing relationships, achieving higher computational efficiency.

Vectorizing windows not only reduces computational costs [11, 29] but also accurately captures the geometric shapes of the most common window types. However, these methods typically require explicit ground-truth annotations of window corners, whereas most existing datasets provide mask annotations rather than corner points. This reliance on explicit corner annotations poses a significant challenge, often forcing manual re-annotation or lossy simplification [11, 21]. It is therefore essential to develop a method that extracts vectorized windows using raster-based annotations.

In recent years, vectorized representations have gained significant attention in deep learning because they adapt to complex shapes and provide flexible object representations. These methods model objects as sets of key points [39, 40] or contour points [41-43], which allows them to handle irregular and deformable objects better. Among them, RepPoints [31] and its extension Dense RepPoints [40] represent objects with a set of adaptive points that position themselves to circumscribe an object's spatial extent and capture semantically significant local areas, offering a unified object representation across levels of granularity. However, vanilla RepPoints derives a bounding box by converting its points into a rectangle, which cannot fully represent the quadrangular shapes of windows. Conversely, Dense RepPoints uses as many as 729 points per object, which makes it less efficient for window extraction.
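To make the limitation concrete, the sketch below compares the min-max bounding box of a point set (one of the point-to-box conversions described for RepPoints) with the quadrangle formed by the same four points. It is an illustrative sketch only: the trapezoid coordinates are made-up values standing in for a window seen under perspective, and the function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def minmax_box(points: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned box (x_min, y_min, x_max, y_max) enclosing a point set."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(points: List[Point]) -> float:
    """Absolute area of a simple polygon via the shoelace formula."""
    total = 0.0
    for i in range(len(points)):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % len(points)]
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


# A window seen under perspective: a trapezoid rather than a rectangle.
window = [(10.0, 10.0), (50.0, 14.0), (50.0, 46.0), (10.0, 50.0)]

x0, y0, x1, y1 = minmax_box(window)
box_area = (x1 - x0) * (y1 - y0)
quad_area = polygon_area(window)
print(f"box area {box_area:.0f}, quadrangle area {quad_area:.0f}")
# box area 1600, quadrangle area 1440
```

For this trapezoid, 10% of the min-max box is background that a quadrangle built from the same four points would exclude.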

3 Methodology

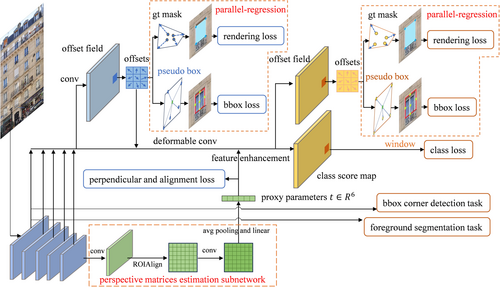

Following the design of RepPoints [31], the proposed QuadWindow framework uses a set of adaptive points to circumscribe a window's spatial extent. To generate quadrangle predictions, we design two parallel regression branches: one, supervised by the proposed differentiable rendering loss, refines the four corner positions; the other retains the original RepPoints settings to maintain compatibility with off-the-shelf detectors. Additionally, we attach the perspective transformation estimation subnetwork to the final feature map produced by the backbone. This subnetwork is supervised by dedicated alignment and perpendicular losses that exploit window shape priors to eliminate distortion. To refine the corrected feature maps, we employ a lightweight attention mechanism that captures long-range dependencies and enhances spatial feature representations. An overview of the proposed method is shown in Figure 2.

3.1 Perspective Transformation Estimation

Because perspective distortion is a high-level semantic feature, we attach the perspective transformation estimation subnetwork to the final feature map output by the backbone. As illustrated in Figure 3, the feature map is first processed by a 3 × 3 convolution and a ReLU activation [47]. A 7 × 7 RoIAlign [48] layer then resamples the feature map to a fixed size. The resampled feature map is passed through a prediction head consisting of a 1 × 1 convolution, an average pooling layer, a LeakyReLU [49] activation, and another 1 × 1 convolution. The head outputs surrogate parameters, which are used to construct the perspective matrix as previously described.
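As a hedged illustration of how eight surrogate parameters can define a 3 × 3 perspective matrix, the sketch below assumes a four-point parameterization, a common choice in homography estimation: the eight numbers are read as (dx, dy) offsets of the corners of a reference square, and the matrix is recovered by solving the direct linear transform (DLT) system with h33 fixed to 1. The reference square `[-1, 1]^2`, the corner order, and all function names are assumptions for illustration, not the paper's definition.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

# Corners of the hypothetical reference square [-1, 1]^2.
REF_CORNERS = [(-1.0, -1.0), (1.0, -1.0), (1.0, 1.0), (-1.0, 1.0)]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography_from_offsets(offsets: Sequence[float]) -> Matrix:
    """Build a 3x3 perspective matrix from 8 surrogate parameters.

    Assumed parameterization: offsets[2i], offsets[2i + 1] displace
    REF_CORNERS[i], and H maps each reference corner to its displaced position.
    """
    a: Matrix = []
    b: List[float] = []
    for i, (x, y) in enumerate(REF_CORNERS):
        u, v = x + offsets[2 * i], y + offsets[2 * i + 1]
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map (x, y) through h using homogeneous coordinates."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Zero offsets give the identity; small offsets give a mild perspective warp.
H = homography_from_offsets([0.1, 0.0, -0.2, 0.05, 0.0, -0.1, 0.15, 0.2])
print(apply_homography(H, 1.0, -1.0))  # approximately (0.8, -0.95)
```

Predicting corner offsets instead of raw matrix entries keeps all eight outputs on a similar scale, which is one common motivation for this kind of surrogate.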

After obtaining the perspective matrix for each image, we resample the backbone's feature maps with this matrix to correct geometric distortion. To enhance the corrected feature maps with window arrangement priors, we employ a simple row- and column-based attention mechanism [50], chosen for its computational efficiency. The enhanced feature maps are then resampled back to their original geometry using the inverse of the perspective matrix. This perspective matrix-assisted attention lets the model exploit frontal-view priors even for non-frontal images, enabling it to capture long-range contextual information and improve overall performance.
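The warp, enhance, and inverse-warp sequence can be illustrated on a single-channel grid. The sketch below uses inverse mapping with nearest-neighbour lookup for readability; a trainable implementation would more likely use bilinear sampling (for example, PyTorch's `torch.nn.functional.grid_sample`) so that gradients reach the perspective matrix. The toy translation matrix, the zero fill value, and the function names are assumptions, and the attention step itself is omitted.

```python
from typing import List

Grid = List[List[float]]
Matrix = List[List[float]]


def invert3x3(m: Matrix) -> Matrix:
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("singular perspective matrix")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def warp_grid(src: Grid, h: Matrix, fill: float = 0.0) -> Grid:
    """Resample src so that output cell p holds src[H^-1 p] (nearest neighbour).

    Inverse mapping avoids holes: every output cell pulls exactly one source
    value. Cells whose preimage falls outside the source are set to `fill`.
    """
    rows, cols = len(src), len(src[0])
    h_inv = invert3x3(h)
    out = [[fill] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            w = h_inv[2][0] * x + h_inv[2][1] * y + h_inv[2][2]
            if abs(w) < 1e-12:
                continue  # point maps to infinity
            sx = (h_inv[0][0] * x + h_inv[0][1] * y + h_inv[0][2]) / w
            sy = (h_inv[1][0] * x + h_inv[1][1] * y + h_inv[1][2]) / w
            ix, iy = round(sx), round(sy)
            if 0 <= ix < cols and 0 <= iy < rows:
                out[y][x] = src[iy][ix]
    return out


features = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
shift_right = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy matrix
rectified = warp_grid(features, shift_right)
# ... attention enhancement would operate on `rectified` here ...
restored = warp_grid(rectified, invert3x3(shift_right))
print(rectified)  # [[0.0, 1.0, 2.0], [0.0, 4.0, 5.0], [0.0, 7.0, 8.0]]
print(restored)   # [[1.0, 2.0, 0.0], [4.0, 5.0, 0.0], [7.0, 8.0, 0.0]]
```

Values pushed outside the frame by the forward warp are not recovered by the inverse warp; the zero-filled column in `restored` shows this border effect.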

3.2 Alignment and Perpendicular Loss

No direct annotations are available to supervise the perspective transformation estimation subnetwork. However, as a façade is rectified toward a frontal view, window shapes become progressively more regular: their edges align with the horizontal or vertical axes, and adjacent edges become perpendicular. Based on this shape prior, we propose two losses, an alignment loss and a perpendicular loss, to guide and supervise the perspective transformation estimation subnetwork.

3.2.1 Alignment Loss

3.2.2 Perpendicular Loss

The point coordinates in both loss functions are obtained by applying the homography defined by the perspective matrix. Before this transformation, we detach the points from the computation graph. This prevents gradients from flowing back into the point coordinates, so that the losses supervise only the perspective matrix.

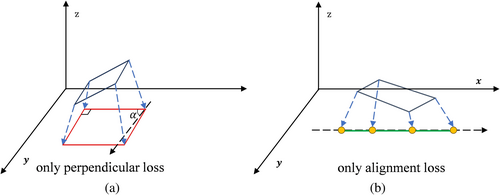

The two losses are complementary. The perpendicular loss encourages adjacent edges to be perpendicular but does not guarantee that the transformed quadrangle is aligned with the coordinate axes, whereas the alignment loss encourages each edge to align with an axis but does not ensure that adjacent edges align with different axes (one with x and the other with y). Figure 4 illustrates the extreme solutions that can arise when only one of the losses is used.
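The complementarity can be checked numerically. The sketch below uses one plausible pair of formulations, assumed here for illustration and not necessarily the definitions used in QuadWindow: the alignment term averages |sin θ cos θ| over the edge angles θ, and the perpendicular term averages the squared cosine between adjacent edges. The test quadrangles and function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def edges(quad: List[Point]) -> List[Point]:
    """Edge vectors p[i+1] - p[i] of a closed quadrangle."""
    return [(quad[(i + 1) % 4][0] - quad[i][0], quad[(i + 1) % 4][1] - quad[i][1])
            for i in range(4)]


def alignment_loss(quad: List[Point], eps: float = 1e-9) -> float:
    """Mean |sin t * cos t| over edge angles t; zero iff every edge is axis-aligned."""
    terms = [abs(ex * ey) / (ex * ex + ey * ey + eps) for ex, ey in edges(quad)]
    return sum(terms) / len(terms)


def perpendicular_loss(quad: List[Point], eps: float = 1e-9) -> float:
    """Mean squared cosine between adjacent edges; zero iff all corners are right angles."""
    e = edges(quad)
    terms = []
    for i in range(4):
        (ax, ay), (bx, by) = e[i], e[(i + 1) % 4]
        dot = ax * bx + ay * by
        terms.append(dot * dot / ((ax * ax + ay * ay) * (bx * bx + by * by) + eps))
    return sum(terms) / len(terms)


# Corner coordinates after the homography. In training they would be detached
# first, so that only the perspective matrix receives gradients.
rectangle = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]  # ideal frontal window
rotated = [(0.0, -1.0), (1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]  # square turned 45 degrees
collapsed = [(0.0, 0.0), (2.0, 0.0), (3.0, 0.0), (1.0, 0.0)]  # all edges horizontal

for name, q in [("rectangle", rectangle), ("rotated", rotated), ("collapsed", collapsed)]:
    print(name, round(alignment_loss(q), 3), round(perpendicular_loss(q), 3))
# Expected output:
# rectangle 0.0 0.0
# rotated 0.5 0.0
# collapsed 0.0 1.0
```

The rotated square satisfies the perpendicular term but not the alignment term, while the collapsed quadrangle satisfies the alignment term with every edge on the x-axis, the degenerate case the perpendicular term rules out; only the rectangle minimizes both.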

3.3 Differentiable Rendering Loss for Quadrangles

The proposed differentiable rendering loss supervises window corner locations using raster mask annotations. Unlike existing methods that rely on explicit corner annotations, it directly aligns quadrangle predictions with raster-based ground truth, eliminating the need for manual re-annotation or contour simplification. Specifically, a closed 2D quadrangle is constructed from the predicted points and rendered into a grid mask, and the discrepancy between the rendered mask and the ground-truth mask is measured with an L2 loss that guides the model to refine its predictions.
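The principle can be illustrated without a graphics pipeline. The sketch below swaps in a simple soft rasterizer rather than the triangle rasterizer of [51]: each pixel's coverage is a sigmoid of its signed distance to the nearest quadrangle edge, so the rendered mask varies smoothly (almost everywhere) with the corner coordinates, and an L2 loss against a raster mask can drive the corners. It assumes a convex quadrangle in normalized [0, 1] coordinates; the sharpness value, grid size, and function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Mask = List[List[float]]


def signed_edge_distance(p: Point, a: Point, b: Point) -> float:
    """Signed distance from p to the line a->b (positive on the left-hand side)."""
    ex, ey = b[0] - a[0], b[1] - a[1]
    return (ex * (p[1] - a[1]) - ey * (p[0] - a[0])) / math.hypot(ex, ey)


def soft_render_quad(quad: List[Point], size: int, sharpness: float = 50.0) -> Mask:
    """Soft coverage mask of a convex quadrangle with corners in [0, 1]^2."""
    area2 = sum(quad[i][0] * quad[(i + 1) % 4][1] - quad[(i + 1) % 4][0] * quad[i][1]
                for i in range(4))
    sign = 1.0 if area2 > 0 else -1.0  # make "inside" positive for either winding
    mask = []
    for r in range(size):
        row = []
        for c in range(size):
            p = ((c + 0.5) / size, (r + 0.5) / size)  # pixel centre
            d = min(sign * signed_edge_distance(p, quad[i], quad[(i + 1) % 4])
                    for i in range(4))
            row.append(1.0 / (1.0 + math.exp(-sharpness * d)))  # sigmoid of distance
        mask.append(row)
    return mask


def l2_mask_loss(pred: Mask, target: Mask) -> float:
    """Mean squared error between two masks of equal size."""
    n = len(pred) * len(pred[0])
    return sum((p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)) / n


size = 16
# Raster ground truth: pixels whose centres fall inside [0.25, 0.75]^2.
target = [[1.0 if 0.25 < (c + 0.5) / size < 0.75 and 0.25 < (r + 0.5) / size < 0.75
           else 0.0 for c in range(size)] for r in range(size)]
good = [(0.25, 0.25), (0.75, 0.25), (0.75, 0.75), (0.25, 0.75)]
shifted = [(x + 0.1, y) for x, y in good]
loss_good = l2_mask_loss(soft_render_quad(good, size), target)
loss_shifted = l2_mask_loss(soft_render_quad(shifted, size), target)
print(loss_good < loss_shifted)  # True
```

The `sharpness` parameter trades gradient reach against boundary fidelity: low values spread gradients further from the edges but blur the rendered mask.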

To ensure stable training, we introduce several enhancements. As shown in Figure 5, the network's first four output points are assigned, in counterclockwise order, to the window corners, forming a closed quadrangle. This quadrangle is then triangulated, and the resulting vertices (normalized point coordinates) and triangle vertex indices are passed to the differentiable rendering pipeline [51] to generate the rendered mask.
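The step before rasterization admits a short sketch. It takes the first four predicted points as the corners in order, rejects self-intersecting or non-convex corner sets (for which a two-triangle fan would be invalid), and returns normalized homogeneous vertices plus triangle indices. The [-1, 1] normalization and the (x, y, 0, 1) layout are assumptions chosen to resemble the clip-space input of rasterizers such as nvdiffrast; the convexity check and the function names are hypothetical additions, not part of the described pipeline.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def corner_turns(quad: Sequence[Point]) -> List[float]:
    """Cross product of consecutive edges at each corner (sign = turn direction)."""
    turns = []
    for i in range(4):
        ax, ay = quad[i]
        bx, by = quad[(i + 1) % 4]
        cx, cy = quad[(i + 2) % 4]
        turns.append((bx - ax) * (cy - by) - (by - ay) * (cx - bx))
    return turns


def quad_to_mesh(points: Sequence[Point], width: float, height: float):
    """Turn the first four predicted points into vertices and triangle indices.

    Vertices are homogeneous (x, y, 0, 1) with x, y normalized to [-1, 1];
    the two triangles form a fan from corner 0, which is valid for convex quads.
    """
    quad = list(points[:4])  # first four outputs, taken as the corners in order
    turns = corner_turns(quad)
    if not (all(t > 0 for t in turns) or all(t < 0 for t in turns)):
        raise ValueError("corners do not form a convex quadrangle")
    verts = [(2.0 * x / width - 1.0, 2.0 * y / height - 1.0, 0.0, 1.0) for x, y in quad]
    tris = [(0, 1, 2), (0, 2, 3)]
    return verts, tris


predicted = [(0, 0), (100, 0), (100, 50), (0, 50), (30, 30)]  # extra points ignored
verts, tris = quad_to_mesh(predicted, width=200, height=100)
print(verts[2], tris)  # (0.0, 0.0, 0.0, 1.0) [(0, 1, 2), (0, 2, 3)]
```

A fan triangulation is the simplest valid split of a convex quadrangle; both triangles share the diagonal from corner 0 to corner 2.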

Although the differentiable rendering loss is effective and empirically converges stably after the initial training epochs, it inevitably introduces additional computational overhead. Nevertheless, this overhead is negligible on contemporary hardware, because modern GPUs evolved from architectures optimized for efficient graphics rendering. Furthermore, recent frameworks such as NVIDIA's differentiable rendering library [51] integrate high-performance graphics pipelines with CUDA-based computation, providing efficient interoperability and end-to-end differentiability without substantially increasing the computational load. Together, these factors mitigate concerns about the efficiency and practicality of the proposed approach.

4 Experiments and Results

4.1 Datasets

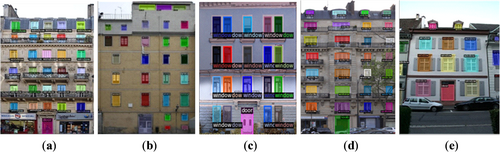

Following a prior study [27], we evaluated our method on five benchmark datasets: ECP [16], CMP [25], Graz50 [23], ArtDeco [26], and eTRIMS [30]. These datasets, designed specifically for façade parsing, cover diverse architectural styles from cities worldwide and thus offer a comprehensive evaluation of the proposed method. Because our research focuses exclusively on window extraction, all other categories in these datasets were ignored. Detailed dataset information is given in Table 1, and annotated examples are shown in Figure 6.

| Datasets | Images | Rectified | Categories |
|---|---|---|---|
| ECP | 104 | Yes | window, wall, balcony, door, shop, sky, roof, chimney |
| CMP | 606 | Yes | wall, molding, cornice, pillar, window, door, sill, blind, balcony, shop, deco, background |
| Graz50 | 50 | Yes | wall, door, window, sky |
| ArtDeco | 79 | Yes | door, shop, balcony, window, wall, sky, roof |
| eTRIMS | 60 | No | window, wall, door, sky, pavement, vegetation, car, road |

4.2 Comparative Methods and Evaluation Metrics

We compared our model with three state-of-the-art methods: DeepFacade [27], DeepWindows [35], and CFBS [28]. For a fair comparison, we evaluated window extraction accuracy with semantic segmentation metrics: the F1-score [52], which is the harmonic mean of precision and recall, and the Jaccard index (IoU) [53]. Pixel accuracy was excluded because it can be misleading under foreground-background imbalance. For comparison, we rasterized our method's vector outputs into binary masks.
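The evaluation protocol (rasterize vector outputs, then score pixels) can be sketched in a few functions. The pixel-centre inclusion rule, pooling counts over all pixels rather than averaging per image, and the function names are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Mask = List[List[int]]


def point_in_polygon(x: float, y: float, poly: Sequence[Point]) -> bool:
    """Even-odd rule: count crossings of a ray cast towards +x."""
    inside = False
    for i in range(len(poly)):
        x0, y0 = poly[i]
        x1, y1 = poly[(i + 1) % len(poly)]
        if (y0 > y) != (y1 > y):  # edge straddles the ray's height
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(quads: Sequence[Sequence[Point]], height: int, width: int) -> Mask:
    """Binary mask: a pixel is 1 if its centre lies inside any quadrangle."""
    return [[int(any(point_in_polygon(c + 0.5, r + 0.5, q) for q in quads))
             for c in range(width)] for r in range(height)]


def f1_and_iou(pred: Mask, gt: Mask) -> Tuple[float, float]:
    """Pixel-level F1-score and IoU (Jaccard index) of the window class.

    F1 = 2TP / (2TP + FP + FN) equals the harmonic mean of precision and
    recall; both scores are set to 0 here when both masks are empty.
    """
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return f1, iou


gt = rasterize([[(2, 2), (8, 2), (8, 8), (2, 8)]], 10, 10)
pred = rasterize([[(3, 2), (9, 2), (9, 8), (3, 8)]], 10, 10)
f1, iou = f1_and_iou(pred, gt)
print(round(f1, 4), round(iou, 4))  # 0.8333 0.7143
print(abs(iou - f1 / (2 - f1)) < 1e-12)  # True
```

Because both scores are computed from the same counts, IoU = F1 / (2 - F1), so the two metrics rank methods identically when they are pooled over the same pixels.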

4.3 Implementation Details

Our method was implemented with the mmdetection framework [54] and trained on a single NVIDIA GeForce RTX 2080 Ti GPU. We adopted ResNet-50 pre-trained on ImageNet as the backbone. Apart from the proposed improvements, all other settings followed RepPoints v2 [55]. The model was trained with a synchronized stochastic gradient descent (SGD) optimizer with two images per minibatch, an initial learning rate of 0.005, a weight decay of 0.0001, and a momentum of 0.9. Training lasted 36 epochs with multi-scale inputs (from 1333 × 480 to 1333 × 960), and the learning rate was decayed at the 24th and 32nd epochs. Random horizontal flipping was applied during training, and non-maximum suppression with an IoU threshold of 0.5 was used for post-processing during inference.
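For readers reproducing the setup, the training schedule can be summarized as an mmdetection-style config fragment. This is a hedged sketch: the key names follow mmdetection 2.x conventions, the authors' actual configuration (built on RepPoints v2) may differ, and values not stated in the text (flip ratio, aspect-ratio handling, range sampling) are marked as assumptions. The variable names `train_resize`, `train_flip`, and `test_nms` are hypothetical; in a full config these dicts would sit inside the data pipeline and the model's test settings.

```python
# Hypothetical mmdetection 2.x-style fragment summarizing the stated schedule.
optimizer = dict(type='SGD', lr=0.005, momentum=0.9, weight_decay=0.0001)
lr_config = dict(policy='step', step=[24, 32])  # decay at epochs 24 and 32
runner = dict(type='EpochBasedRunner', max_epochs=36)
data = dict(samples_per_gpu=2)  # two images per minibatch on a single GPU

train_resize = dict(
    type='Resize',
    img_scale=[(1333, 480), (1333, 960)],
    multiscale_mode='range',  # assumed: sample a scale within the stated range
    keep_ratio=True)          # assumed
train_flip = dict(type='RandomFlip', flip_ratio=0.5)  # flip ratio assumed
test_nms = dict(type='nms', iou_threshold=0.5)
```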

In the differentiable rendering loss, ground-truth masks were resampled to 32 × 32 for supervision. In the attention enhancement, the query and key channels were reduced by a factor of 32 and the value channels by a factor of 16; after the row- and column-based attention, a 1 × 1 convolution restored the original number of channels. All losses are summed to form the final loss.
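The attention step and its channel reductions can be sketched as follows. This is a pure-Python illustration under stated assumptions: it implements a single-pass row/column (criss-cross style) attention with a softmax over dot-product affinities, random weights stand in for the learned 1 × 1 convolutions, and the exact form of [50], any residual connection, and the 64-channel toy input are assumptions. With 64 input channels, the reductions by 32 and 16 give 2-channel queries and keys and 4-channel values.

```python
import math
import random
from typing import List

Vec = List[float]
Grid = List[List[Vec]]  # indexed as [row][col][channel]


def conv1x1(x: Vec, w: List[Vec]) -> Vec:
    """A 1x1 convolution at one position is a matrix-vector product (w: [out][in])."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def random_weights(out_dim: int, in_dim: int, rng: random.Random) -> List[Vec]:
    """Stand-in for learned weights (illustration only)."""
    return [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def row_column_attention(x: Grid, qk_reduction: int = 32, v_reduction: int = 16,
                         seed: int = 0) -> Grid:
    """Each position attends to all positions in its row and column (H + W - 1 keys)."""
    rows, cols, channels = len(x), len(x[0]), len(x[0][0])
    rng = random.Random(seed)
    wq = random_weights(channels // qk_reduction, channels, rng)
    wk = random_weights(channels // qk_reduction, channels, rng)
    wv = random_weights(channels // v_reduction, channels, rng)
    wo = random_weights(channels, channels // v_reduction, rng)  # restores channels
    q = [[conv1x1(x[i][j], wq) for j in range(cols)] for i in range(rows)]
    k = [[conv1x1(x[i][j], wk) for j in range(cols)] for i in range(rows)]
    v = [[conv1x1(x[i][j], wv) for j in range(cols)] for i in range(rows)]
    out: Grid = []
    for i in range(rows):
        out_row = []
        for j in range(cols):
            # Same row plus same column, with (i, j) itself counted once.
            keys = [(i, jj) for jj in range(cols)] + [(ii, j) for ii in range(rows) if ii != i]
            logits = [sum(a * b for a, b in zip(q[i][j], k[ii][jj])) for ii, jj in keys]
            top = max(logits)
            weights = [math.exp(z - top) for z in logits]  # numerically stable softmax
            total = sum(weights)
            attended = [sum(w * v[ii][jj][ch] for w, (ii, jj) in zip(weights, keys)) / total
                        for ch in range(len(wv))]
            out_row.append(conv1x1(attended, wo))
        out.append(out_row)
    return out


rng = random.Random(1)
features = [[[rng.random() for _ in range(64)] for _ in range(4)] for _ in range(3)]
enhanced = row_column_attention(features)
# 64 channels -> 2-channel queries/keys, 4-channel values, back to 64 channels.
print(len(enhanced), len(enhanced[0]), len(enhanced[0][0]))  # 3 4 64
```

Restricting each position to its own row and column costs O(H + W) keys per position instead of O(HW), which is why this form of attention is cheap enough to run on the rectified feature map.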

4.4 Quantitative Evaluation

Table 2 compares our method quantitatively with state-of-the-art approaches across the five datasets, with bold values marking the best result for each metric. Our method achieved the highest F1-score and IoU on most datasets, as well as the highest mean values of both metrics, indicating a better balance between precision and recall and higher segmentation accuracy.

| Datasets | Methods | F1 (%) | IoU (%) |
|---|---|---|---|
| ECP | DeepFacade | - | 80.3 |
| | DeepWindows | 79.6 | 63.8 |
| | CFBS | 90.1 | 82.0 |
| | QuadWindow (Ours) | **90.41** | **82.49** |
| CMP | DeepFacade | 80.0 | - |
| | DeepWindows | 71.3 | 56.7 |
| | CFBS | 82.4 | 64.1 |
| | QuadWindow (Ours) | **91.02** | **83.52** |
| Graz50 | DeepFacade | - | 71.3 |
| | DeepWindows | 70.2 | 59.9 |
| | CFBS | 84.3 | 73.1 |
| | QuadWindow (Ours) | **85.43** | **74.57** |
| ArtDeco | DeepFacade | - | 70.7 |
| | DeepWindows | 74.6 | 60.5 |
| | CFBS | **87.7** | 72.1 |
| | QuadWindow (Ours) | 85.81 | **75.14** |
| eTRIMS | DeepFacade | - | 71.1 |
| | DeepWindows | 85.2 | 74.3 |
| | CFBS | - | - |
| | QuadWindow (Ours) | **85.33** | **74.42** |
| Mean | DeepFacade | 80.0 | 73.35 |
| | DeepWindows | 76.18 | 63.04 |
| | CFBS | 86.13 | 72.83 |
| | QuadWindow (Ours) | **87.6** | **78.03** |

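- Note: For both F1-score and IoU, the best values are shown in bold. "-" indicates that no result is available; means are computed over the datasets with available results for each method.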

On the CMP dataset, our method reached an F1-score of 91.02% and an IoU of 83.52%, outperforming all other approaches. Because CMP is the largest of the five datasets (606 images), this result is particularly strong evidence for our method. We attribute the slightly lower F1-score than that of CFBS on the ArtDeco dataset primarily to our lightweight feature enhancement strategy, which prioritizes computational efficiency and may not adequately handle the complex occlusions in this dataset. The extensive occlusions in ArtDeco can lead to missed detections of fully occluded windows: while our method effectively estimates the position and shape of partially occluded windows from contextual cues, its performance drops for completely occluded windows, which lowers the overall results. Nonetheless, the regular shapes predicted by our method yield competitive performance, including the highest IoU on this dataset.

Results on the ECP and Graz50 datasets further confirm the advantages of our method, which achieves the highest F1-score and IoU on both datasets, highlighting its adaptability to diverse architectural styles. Note that CFBS achieves higher accuracy in its second stage, which combines the segmentation results with the original images to re-regress window bounding boxes as the final segmentation. Because this stage introduces stronger assumptions about the façade images and therefore benefits from manually corrected datasets, we did not include its second-stage results in our comparison.

The eTRIMS dataset was not manually rectified, so the window arrangement priors do not hold in image space. As a result, DeepFacade, which depends on symmetry loss functions, performs poorly. DeepWindows, in contrast, incorporates an instance-based relationship module and is less reliant on strict façade priors, which makes it more resilient to perspective distortion and yields the second-best performance. By combining spatial and shape priors through perspective transformation and the quadrangle representation of masks, our method achieves the best results on both metrics.

4.5 Qualitative Comparison and Analysis

In addition to quantitative analysis, we also conducted visual comparisons to demonstrate the distinctive characteristics of our method.

Figure 7 shows visual comparisons on the ECP dataset. Our method produces more regular results that closely match the ground truth. In contrast, the other methods exhibit overly smooth corners and noisy boundaries, highlighting our method's advantage in predicting regular shapes and avoiding the over-smoothing common in conventional semantic segmentation.

Figure 8 presents the qualitative comparison on the CMP dataset. As on the ECP dataset, our method avoids noisy segmentation and produces more regular outputs than the other methods. The attention enhancement further helps prevent our method from mistakenly extracting irrelevant objects such as doors and decorations, improving the accuracy of the results.

Figure 9 presents the qualitative comparison on the Graz50 dataset. It can be observed that our method closely matches the ground truth, not only detecting all windows but also avoiding the erroneous extraction of doors observed in other methods. Visually, our results are nearly indistinguishable from the ground truth, demonstrating the robustness and accuracy of our approach.

Figure 10 illustrates various occlusion scenarios in the ArtDeco dataset, which pose significant challenges for window extraction. In the first and second columns, which show partial occlusions, our method accurately estimates window positions and shapes, producing regular and precise predictions. In the third column, where a large area is occluded, our method still extracts most windows while maintaining their regular shapes. In contrast, the other methods locate the windows correctly but often predict unsatisfactory shapes.

Figure 11 presents a qualitative comparison of the segmentation results between our method and DeepWindows. It is evident that our method achieves superior boundary regularity for windows. DeepWindows produces jagged and irregular boundaries, whereas our method consistently maintains sharp, clean edges, ensuring more precise window extraction.

5 Discussion

In addition to comparing our method with state-of-the-art approaches, we conducted ablation studies to further verify its effectiveness. Specifically, we compared our method with RepPoints v2 and its extension Dense RepPoints v2 [55], which targets instance segmentation with more points, to examine the impact of domain knowledge on deep learning models and to assess the effectiveness of our improvements. We also visually validated the role of the perspective transformation estimation subnetwork and quantitatively analyzed its contribution to feature enhancement.

5.1 Effectiveness of the Proposed Quadrangle Representation

Table 3 compares the accuracy of our method with that of the baseline methods. Because RepPoints v2 is designed for object detection and cannot be compared directly with the other methods, we generated its segmentation results from its predicted bounding boxes.

| Datasets | Methods | Precision (%) | Recall (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|---|
| ECP | RepPoints v2 | 88.13 | **91.24** | 89.66 | 81.25 |
| | Dense RepPoints v2 | **93.92** | 83.49 | 88.4 | 79.21 |
| | QuadWindow (Ours) | 91.58 | 89.26 | **90.41** | **82.49** |
| CMP | RepPoints v2 | 93.11 | 88.84 | 90.92 | 83.36 |
| | Dense RepPoints v2 | **95.53** | 79.47 | 86.76 | 76.62 |
| | QuadWindow (Ours) | 92.99 | **89.13** | **91.02** | **83.52** |
| Graz50 | RepPoints v2 | 81.59 | **89.31** | 85.28 | 74.33 |
| | Dense RepPoints v2 | **90.17** | 80.18 | 84.88 | 73.74 |
| | QuadWindow (Ours) | 83.45 | 87.51 | **85.43** | **74.57** |
| ArtDeco | RepPoints v2 | 84.57 | **84.95** | 84.19 | 73.26 |
| | Dense RepPoints v2 | 85.54 | 83.52 | 84.52 | 73.18 |
| | QuadWindow (Ours) | **87.62** | 84.07 | **85.81** | **75.14** |
| eTRIMS | RepPoints v2 | 80.74 | **89.06** | 84.7 | 73.46 |
| | Dense RepPoints v2 | **92.39** | 79.07 | 85.21 | 74.23 |
| | QuadWindow (Ours) | 87.85 | 82.96 | **85.33** | **74.42** |

- Note: Bold indicates the highest score for each metric.

Table 3 shows that, compared with the baseline methods, our approach achieves the best balance between precision and recall. Although RepPoints v2 or Dense RepPoints v2 sometimes surpasses our method in precision or recall, our approach achieves the highest F1-score and the highest IoU on all five datasets.

Beyond accuracy, we investigated the computational efficiency of our approach to evaluate its scalability in real-world applications. Compared with Dense RepPoints v2, our method reduces computational cost by 46.83% and the number of parameters by 8.12%. These efficiency gains make our approach suitable for deployment in resource-constrained settings, such as rapid disaster response and large-scale urban analysis. Overall, these results validate the effectiveness of our quadrangle-based window extraction method and demonstrate the advantages of incorporating domain-specific knowledge into deep learning frameworks.

5.2 Effectiveness of Perspective Estimation

To evaluate perspective estimation qualitatively, we rectified the original images with the perspective matrices predicted by our model and compared them visually. As shown in Figure 12, although the images are not perfectly rectified, the perspective distortion is substantially reduced. This demonstrates the effectiveness of the perspective transformation estimation subnetwork and of the perpendicular and alignment losses.

Table 4 quantifies the contribution of the perspective transformation estimation module. Without feature enhancement, performance is suboptimal, likely because the model's limited receptive field restricts its ability to exploit prior knowledge of window spatial arrangements. Adding row- and column-based attention enhancement improves performance, particularly on the occlusion-rich ArtDeco dataset, which suggests that the network learns useful architectural priors even with sparse attention.

| Datasets | Methods | Precision (%) | Recall (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|---|
| ECP | w/o AE | 90.38 | 86.49 | 88.39 | 80.61 |
| | w/o PM | 91.56 | 89.11 | 90.32 | 82.45 |
| | w/PM | **91.58** | **89.26** | **90.41** | **82.49** |
| CMP | w/o AE | 91.12 | 87.61 | 89.33 | 81.72 |
| | w/o PM | 92.66 | 89.03 | 90.81 | 83.38 |
| | w/PM | **92.99** | **89.13** | **91.02** | **83.52** |
| Graz50 | w/o AE | **85.04** | 85.74 | 85.39 | 74.46 |
| | w/o PM | 83.56 | **87.97** | **85.71** | **74.64** |
| | w/PM | 83.45 | 87.51 | 85.43 | 74.57 |
| ArtDeco | w/o AE | 87.21 | 80.37 | 83.65 | 72.57 |
| | w/o PM | 87.55 | **84.08** | 85.78 | 74.98 |
| | w/PM | **87.62** | 84.07 | **85.81** | **75.14** |
| eTRIMS | w/o AE | **88.20** | 80.80 | 84.34 | 72.92 |
| | w/o PM | 87.85 | 81.81 | 84.72 | 73.41 |
| | w/PM | 87.85 | **82.96** | **85.33** | **74.42** |

- Note: w/o AE, no attention enhancement; w/o PM, row- and column-based attention enhancement without the perspective matrix; w/PM, the attention enhancement proposed in this paper, guided by the perspective matrix. The best value for each metric on each dataset is highlighted in bold.

With perspective matrix-assisted feature enhancement (w/PM), our method achieves the best F1-score and IoU on four of the five datasets, although the gains over w/o PM are marginal in some cases. On Graz50, whose façades are highly regular, it leads to a slight performance drop (F1 decreases from 85.71% to 85.43%). Conversely, on eTRIMS, whose images are not rectified, it yields the largest gain, improving recall by 1.15 and IoU by 1.01 percentage points. These results support the effectiveness of the perspective transformation estimation subnetwork and of the feature enhancement strategy, particularly on images that have not been rectified.
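Table 4 reports F1 as the harmonic mean of precision and recall, and the gains discussed above are differences in percentage points between rows. The short check below recomputes F1 for a few rows from their precision and recall, and the w/PM minus w/o PM differences for Graz50 and eTRIMS. The values are copied from Table 4; the helper and variable names are ours. Because precision and recall are themselves rounded, a recomputed F1 can differ from the reported one by up to about 0.01.

```python
def f1_score(precision, recall):
    """F1 as the harmonic mean of precision and recall (both in %)."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) copied from rows of Table 4.
table4_rows = {
    ("ECP", "w/o AE"): (90.38, 86.49, 88.39),
    ("ECP", "w/PM"): (91.58, 89.26, 90.41),
    ("Graz50", "w/PM"): (83.45, 87.51, 85.43),
}
for key, (p, r, reported) in table4_rows.items():
    print(key, f"recomputed F1 = {f1_score(p, r):.2f}, reported = {reported}")

# (F1, IoU) for w/o PM and for w/PM; differences are in percentage points.
pm_rows = {
    "Graz50": ((85.71, 74.64), (85.43, 74.57)),
    "eTRIMS": ((84.72, 73.41), (85.33, 74.42)),
}
deltas = {}
for name, ((f1_wo, iou_wo), (f1_w, iou_w)) in pm_rows.items():
    deltas[name] = (round(f1_w - f1_wo, 2), round(iou_w - iou_wo, 2))
    print(name, "dF1 =", deltas[name][0], "pp, dIoU =", deltas[name][1], "pp")
```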

5.3 Uncertainties and Limitations

Because estimating a perspective transformation is an inherently nonlinear problem, images with extreme perspective distortion can reduce the accuracy of the estimated perspective matrix. Such distortion also causes a substantial loss of semantic information: distant windows are compressed into very few pixels, so their features degrade, which makes precise window extraction more difficult. Consequently, the proposed method is less effective on images with severe perspective distortion.
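The loss of information under extreme distortion can be quantified with the local area scale of the rectifying transformation: a standard property of a 3×3 homography H is that its Jacobian determinant at (x, y) equals det(H) / w³, where w = h₃₁x + h₃₂y + h₃₃. Where this value is large, one source pixel is stretched over many rectified pixels, so the rectified window is interpolated from little real data. The sketch below computes the closed form and cross-checks it with finite differences; the rectifying matrix `H_rect` (a single horizontal perspective term for a 1000-pixel-wide image) and the function names are hypothetical.

```python
import numpy as np

def area_scale(H, x, y):
    """Local area magnification |det J| of the perspective map (x, y) -> H(x, y).
    For a 3x3 homography this is |det H| / |w|**3 with w = h31*x + h32*y + h33."""
    w = H[2, 0] * x + H[2, 1] * y + H[2, 2]
    return abs(np.linalg.det(H)) / abs(w) ** 3

def warp_point(H, x, y):
    """Apply H to a single point, including the perspective divide."""
    p = H @ np.array([x, y, 1.0])
    return p[:2] / p[2]

def area_scale_numeric(H, x, y, eps=1e-4):
    """Central-difference estimate of |det J|, used to cross-check area_scale."""
    d_dx = (warp_point(H, x + eps, y) - warp_point(H, x - eps, y)) / (2 * eps)
    d_dy = (warp_point(H, x, y + eps) - warp_point(H, x, y - eps)) / (2 * eps)
    return abs(np.linalg.det(np.column_stack([d_dx, d_dy])))

# Hypothetical rectifying matrix for a façade that recedes to the right.
H_rect = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [-0.0005, 0.0, 1.0]])

for x in (0, 500, 1000):
    print(f"x = {x:4d} px: rectified area per source pixel = "
          f"{area_scale(H_rect, x, 300):.2f}")
```

In this example, the magnification grows from 1 at the left edge of the image to 8 at the right edge, so a window at the far end is reconstructed from roughly one-eighth of the pixel support it would have in a frontal view; stronger perspective terms make this ratio grow rapidly.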

Another limitation is that our approach primarily targets quadrilateral windows. Although quadrilaterals account for the majority of real-world window geometries, other shapes, such as arches and irregular polygons, also occur and may reduce extraction accuracy. Extending the approach from quadrilaterals to polygons by increasing the number of key points could enable finer-grained boundary representations, but polygonal representations introduce greater complexity: ensuring stable training and reliable polygon triangulation requires further investigation and rigorous testing. Future research should therefore develop methods that handle these more intricate polygonal structures, improving the generalizability and robustness of the framework.
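The triangulation concern can be illustrated with a short sketch. A quadrangle predicted from four corners is normally convex, so a fan of triangles from one corner covers it exactly. For a concave outline, such as the hypothetical dart-shaped polygon below, the same fan produces a flipped triangle that spills outside the polygon, and a general method such as ear clipping is needed. The helpers are illustrative only and are not part of QuadWindow; the ear-clipping routine assumes a simple (non-self-intersecting) polygon.

```python
def orient(a, b, c):
    """Twice the signed area of triangle abc (> 0 for counter-clockwise order)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def polygon_area(poly):
    """Signed shoelace area of a simple polygon given as a list of (x, y)."""
    n = len(poly)
    return 0.5 * sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                     for i in range(n))

def fan_triangulate(poly):
    """Triangles fanned from vertex 0; correct only for convex polygons."""
    return [(0, k, k + 1) for k in range(1, len(poly) - 1)]

def point_in_triangle(p, a, b, c):
    """Inclusive point-in-triangle test for a counter-clockwise triangle abc."""
    return orient(a, b, p) >= 0 and orient(b, c, p) >= 0 and orient(c, a, p) >= 0

def ear_clip(poly):
    """Ear-clipping triangulation of a simple, possibly concave, polygon."""
    idx = list(range(len(poly)))
    if polygon_area(poly) < 0:
        idx.reverse()                      # work in counter-clockwise order
    tris = []
    while len(idx) > 3:
        for pos in range(len(idx)):
            i, j, k = idx[pos - 1], idx[pos], idx[(pos + 1) % len(idx)]
            a, b, c = poly[i], poly[j], poly[k]
            if orient(a, b, c) <= 0:
                continue                   # reflex or degenerate corner: not an ear
            if any(point_in_triangle(poly[m], a, b, c) for m in idx if m not in (i, j, k)):
                continue                   # another vertex lies inside: not an ear
            tris.append((i, j, k))
            idx.pop(pos)                   # clip the ear and rescan
            break
        else:
            raise ValueError("no ear found; polygon may be self-intersecting")
    tris.append(tuple(idx))
    return tris

def covers_exactly(poly, tris):
    """Sanity check: unsigned triangle areas must sum to the polygon area;
    a flipped or spilling triangle breaks this."""
    total = sum(abs(orient(poly[i], poly[j], poly[k])) / 2 for i, j, k in tris)
    return abs(total - abs(polygon_area(poly))) < 1e-9

window = [(0, 0), (2, 0), (2, 3), (0, 3)]          # convex quadrangle
dart = [(0, 0), (4, 0), (4, 4), (2, 1), (0, 4)]    # hypothetical concave outline

print(covers_exactly(window, fan_triangulate(window)))  # True
print(covers_exactly(dart, fan_triangulate(dart)))      # False
print(covers_exactly(dart, ear_clip(dart)))             # True
```

Ear clipping always returns n − 2 triangles for a simple polygon, but it is discrete in which triangles it chooses, which is one reason why stable, differentiable training with general polygons is harder than with quadrangles.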

It should be noted that the current validation was performed on relatively clean façade images. In realistic post-disaster scenarios, images may contain occluded, damaged, or misaligned windows, conditions that are poorly represented in existing benchmarks. Future work should therefore evaluate the robustness of the proposed framework on such challenging, disaster-specific datasets to confirm its applicability in operational disaster management systems.

6 Conclusion

We present QuadWindow, a perspective-aware framework that extracts vectorized windows directly from street-view images without a separate vectorization step. The framework integrates a perspective transformation estimation subnetwork that predicts perspective matrices to rectify distorted façades; these matrices allow the arrangement priors of windows to be exploited, encoding architectural priors into the feature space. Two specialized loss functions, the perpendicular and alignment losses, supervise this module through geometric constraints without requiring additional annotations. Furthermore, we restructure RepPoints into parallel branches and introduce a differentiable rendering loss that aligns predicted quadrangles with raster annotations, enabling end-to-end vectorized prediction without manual re-annotation.
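The differentiable rendering loss is summarized here only at a high level. One common way to make quadrangle rasterization differentiable, sketched below under our own assumptions, is to replace each of the four edge half-planes with a sigmoid of the signed distance from the pixel centre to that edge and multiply the results. This yields a soft mask for convex quadrangles that is smooth in the corner coordinates, and a soft IoU against the raster annotation then serves as the loss. The sigmoid half-plane formulation, the `sharpness` parameter, the soft IoU, and the toy annotation are illustrative choices, not necessarily the renderer or loss used in QuadWindow.

```python
import numpy as np

def sigmoid(x):
    """Overflow-free logistic function."""
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def soft_quad_mask(quad, height, width, sharpness=4.0):
    """Soft occupancy in [0, 1] of a convex quadrangle on a height x width grid.
    quad: (4, 2) array of (x, y) corners ordered so that the shoelace area in
    (x, y) is positive. The product of per-edge sigmoids approximates the
    intersection of the four half-planes."""
    quad = np.asarray(quad, dtype=float)
    ys, xs = np.mgrid[0:height, 0:width] + 0.5        # pixel-centre coordinates
    mask = np.ones((height, width))
    for i in range(4):
        a, b = quad[i], quad[(i + 1) % 4]
        ex, ey = b - a
        # Signed distance to edge a->b, positive on the interior side.
        dist = (ex * (ys - a[1]) - ey * (xs - a[0])) / np.hypot(ex, ey)
        mask *= sigmoid(sharpness * dist)
    return mask

def soft_iou_loss(pred, target, eps=1e-6):
    """1 - soft IoU between a soft mask and a binary raster annotation."""
    inter = np.sum(pred * target)
    union = np.sum(pred + target - pred * target)
    return 1.0 - inter / (union + eps)

# Hypothetical raster annotation: one window covering columns 2-7, rows 2-5.
target = np.zeros((10, 10))
target[2:6, 2:8] = 1.0

def loss_for_shift(dx):
    """Loss of a predicted quadrangle shifted dx pixels to the right."""
    quad = np.array([[2, 2], [8, 2], [8, 6], [2, 6]], dtype=float) + [dx, 0.0]
    return soft_iou_loss(soft_quad_mask(quad, 10, 10, sharpness=10.0), target)

for dx in (0.0, 1.0, 2.0):
    print(f"shift {dx:.0f} px -> loss {loss_for_shift(dx):.3f}")
```

NumPy only evaluates the loss here; written with an automatic differentiation library such as PyTorch or JAX, the same expressions would propagate gradients from the raster mismatch back to the four predicted corners, which is what removes the need for corner-point annotations.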

Extensive quantitative and qualitative experiments on five datasets show that our method outperforms state-of-the-art techniques, achieving an average F1-score of 87.6% and an average IoU of 78.03%. Ablation studies further validate the proposed modules, particularly for handling perspective distortion and enabling automated vectorized window extraction. These contributions address limitations of prior research and enable automated extraction of façade-level features for post-disaster building assessment, rapid risk mapping, and vulnerability analysis. Integrated into natural hazard databases and GIS platforms, QuadWindow can improve both the spatial and temporal granularity of structural monitoring, contributing to more intelligent and responsive disaster management systems.

Author Contributions

Zhuangqun Niu: data curation, formal analysis, methodology, software, validation, writing – original draft, visualization, investigation. Ke Xi: data curation, validation, writing – original draft, visualization, investigation. Yifan Liao: formal analysis, validation, writing – original draft. Pengjie Tao: conceptualization, methodology, resources, supervision, writing – review and editing, funding acquisition. Tao Ke: conceptualization, methodology, project administration, resources, supervision, writing – review and editing, funding acquisition.

Acknowledgments

This research was funded by the National Key Research and Development Program of China, grant number 2018YFD1100405.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.